By Jiang Ling (nicknamed Ling Xin).

How can a data center reach a daily mean CPU usage of 45%? To learn the answer, read on to see what co-location is, and why and how Alibaba has explored it and adopted it as a large-scale solution architecture.

To show how co-location technology has evolved at Alibaba, this article first discusses Alibaba's exploration in co-location, and then looks at Alibaba's co-location solution and technical architecture for online and offline services, in particular how it relates to Alibaba's O&M systems. It then covers some of the core technologies behind co-location, and closes with the future direction of this technology.

Co-location technology was created to balance the demands of a growing business against ever-increasing resource costs. That is, it was developed for those who want to keep resource costs to a minimum while supporting more and more business. Co-location does this by reusing existing resources to meet new and existing business requirements.

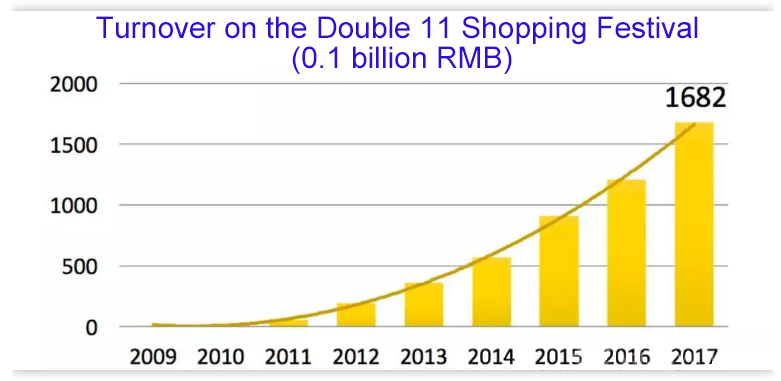

The above figure shows the turnover of Alibaba Group over the years since the first Double 11 Shopping Festival in 2009. This curve is remarkable in the eyes of the business teams at Alibaba Group, but to Alibaba's technical support and O&M teams it represents all sorts of great challenges and huge resource pressure.

As industry peers who also run e-commerce platform services know, the pressure on the technical infrastructure often comes in the first second after a major promotion activity launches.

The traffic peaks seen each year at the midnight launch of the Double 11 Shopping Festival are consistent with the more general curve shown in the figure above. Since 2012, the peak pressure at the midnight launch has more or less doubled each year, which speaks to how much the rapid development of online services has contributed to this promotion event.

In addition to online services, Alibaba also provides large-scale offline computing services. With the emergence of new technologies like AI, these computing services are also on the rise. At the time of writing, Alibaba holds data at the exabyte scale and runs daily tasks at the million scale.

As the scope and scale of the services offered at Alibaba Group continuously increase, a lot of resources have been reserved at the infrastructure layer to meet the requirements of these online and offline services. Because online services use resources quite differently from offline services, two independent data centers were used to provide resources for Alibaba's online and offline services, respectively. By now, this has grown to two sets of more than 10,000 servers.

However, we discovered that, despite the huge amount of resources in the data centers, the usage of some resources was far from efficient. In particular, the daily mean resource usage is only about 10% in the online service data centers.

Different services may use resources in different ways and require different resources. On the one hand, the traffic of different services may peak at different times, and therefore resources can be reused at different times.

On the other hand, different services have different resource response time requirements, and therefore resources are used based on priority. Based on these two aspects, we explore the service co-location technology in different directions.

Co-location technology can be implemented on a server so that two different types of services are both deployed on it. Co-location provides two services by sharing common resources, such as a single server, so that these common resources are equivalent to the resources found on two servers.

The greatest value of co-location is that resources can be fully utilized by means of sharing, realizing "acquisition of resources out of nothing". Co-location is designed to ensure the resources for the service with higher priority when resource competition occurs between two services. Therefore, we hope, with Alibaba's systems, to use the scheduling management and kernel isolation measures to fully share resources and isolate competition.

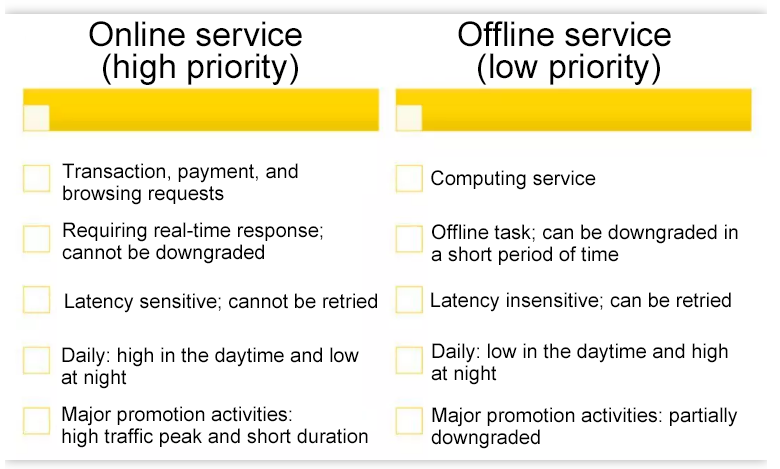

In the context of co-location, online services refer to services such as transactions, payment services, and browsing requests.

Online services are characterized by their rigid requirement for real-time performance. If a user has to wait for a long time (say, more than several seconds' time) while shopping, the user may decide not to purchase the item they were originally going to buy. Similarly, if the user is requested to try again later, the user is unlikely to stay on the same page and wait in most cases.

This traffic trend is clear for online services, especially in e-commerce scenarios. Traffic varies, but, generally speaking, traffic is higher during the day and lower during the night, because most users prefer to shop during daytime.

Another major characteristic of an e-commerce platform is that daily traffic is still much lower than traffic during a major promotion activity. On the very day of a major promotion activity, the number of transactions created in just a few seconds may be ten times or even hundreds of times that of the peak trading volume seen on an ordinary day. Therefore, one thing that is clear is that traffic on an e-commerce platform is highly dependent on time-related factors.

Offline services, such as computing and algorithm services as well as statistical and data processing services, are less sensitive to latency. Tasks and jobs submitted by users to these services often take seconds or minutes to process, but they could also take hours or even days. These tasks can be stopped if necessary. What we are more concerned about is who takes care of retrying these tasks or jobs. Having users initiate retries is unacceptable; the system is expected to perform retries in the background so that users do not perceive them.

Compared to online services, offline services are more time-independent and can therefore run at any time. Sometimes, offline services have time-related characteristics that are the opposite of those of online services, and may well see low traffic in the daytime and high traffic in the early morning.

Such characteristics, of course, are directly connected with user behavior. For example, a user may submit a statistical task to be executed after midnight, and expect to receive the report when arriving at work the next morning.

Based on the preceding discussion of service runtime characteristics, we can see that online and offline services reach their traffic peaks and resource usage peaks alternately.

Online services generally have a higher priority and better resource preemption capabilities, whereas offline services are more tolerant of insufficient resources. All these factors contribute to the feasibility of co-locating online and offline services.

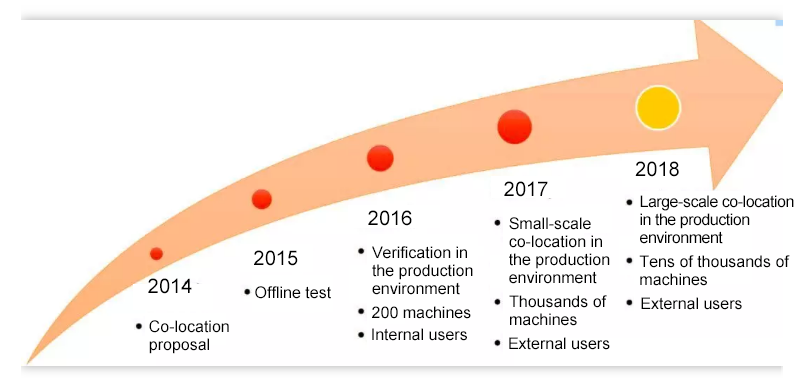

Before elaborating on the co-location technology, we would like to briefly review the course of Alibaba's exploration in co-location.

As a simple convention at Alibaba, a co-location scenario is named by putting first the type of service (online or offline) that provides the machines. Therefore, we have online-offline co-location, in which online services provide the machines, as well as offline-online co-location, in which offline services provide them.

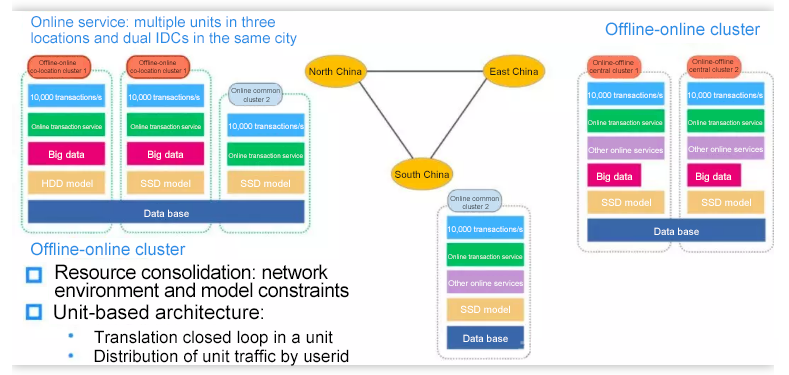

On the Double 11 Shopping Festival in 2017, 375,000 transactions were created per second according to the official announcement. The offline-online co-location cluster created 10,000 transactions per second, and offline resources were used to support the traffic peaks of online services. All of this reduces the resource overhead for the major promotion activity.

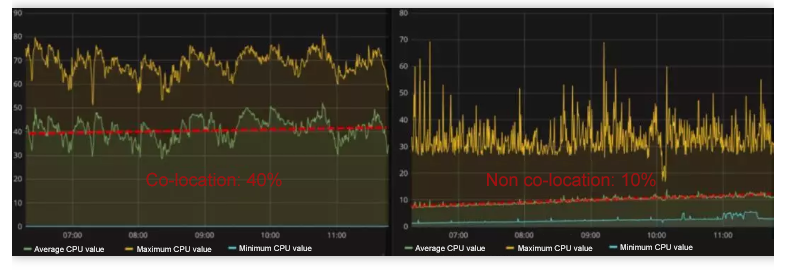

Meanwhile, after the online-offline co-location cluster is deployed, the daily mean resource usage of the native cluster for online services increases from 10% to 40%, and the online services provide the extra daily computing capability for offline services, as shown in the following figure.

The above figures show the real data of the monitoring system. The right figure shows the non co-location scenario, in which the time is from 7 o'clock in the morning to about 11 o'clock in the morning, and the CPU usage of the online service data center is 10%. The left figure shows the co-location scenario, in which the mean usage is about 40% and the jitter is large because the offline services themselves have relatively large fluctuations.

With so many resources saved, is the QoS (quality of service), and more particularly the quality of online services, downgraded?

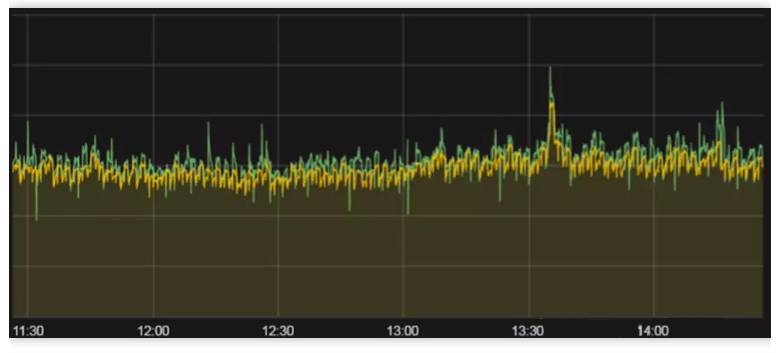

The above figure shows the real-time curves of the online core service that processes transactions. The green curve indicates the real-time performance of the co-location cluster, and the yellow one indicates the real-time performance of the non co-location cluster. The two curves basically coincide, with a difference of less than 5%, meaning that the QoS meets the required level.

The co-location technology is associated with the business system and O&M system of a company. Therefore, this article may briefly mention different technical backgrounds.

This chapter describes the co-location solution, including the overall architecture, service deployment policies, management and distribution of co-location clusters, and the service operation policies.

Co-location is implemented by taking the following measures:

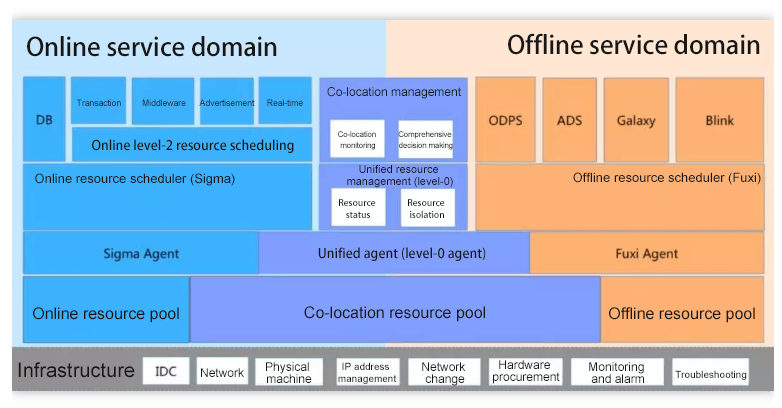

As shown in the following figure, the co-location architecture is hierarchical:

The underlying layer is the infrastructure layer. The data centers of Alibaba Group share the same hardware facilities and supporting devices, such as servers and networks, regardless of the ways that these resources are used for the upper layers.

Above the underlying layer is the resource layer. In this layer, resource pools are integrated for centralized management.

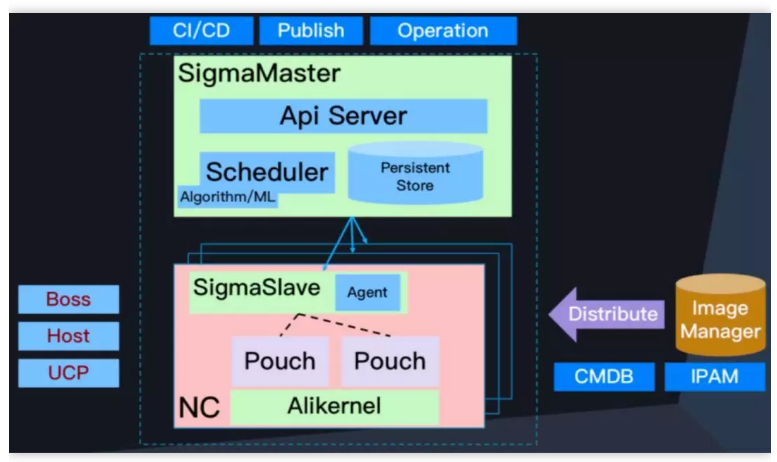

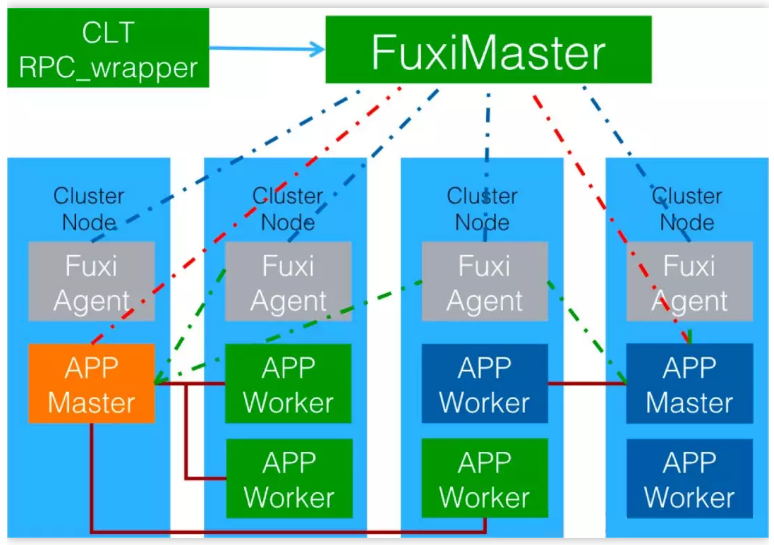

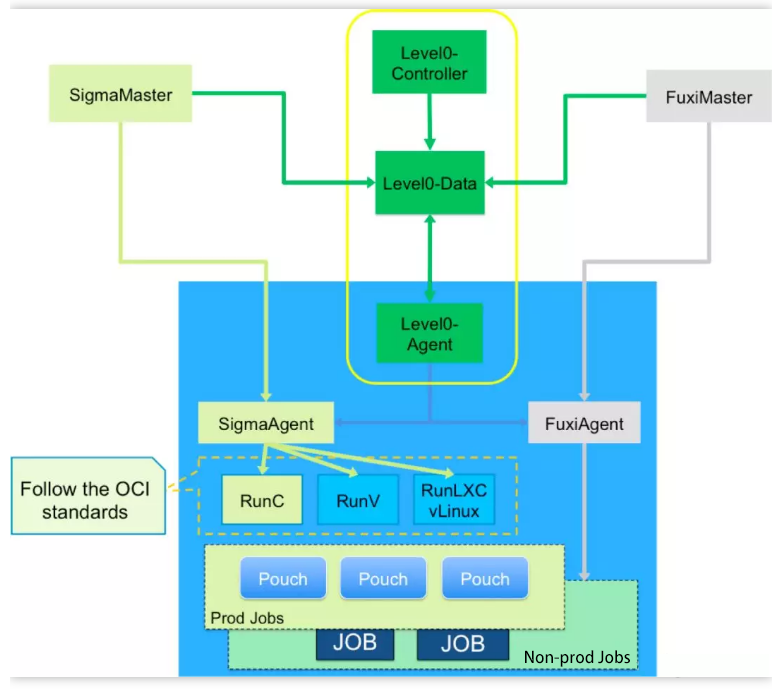

Above the resource layer is the scheduling layer, which is divided into the server end and client end. Sigma is the resource scheduling system for online services, whereas Fuxi is for offline services. Both Sigma and Fuxi are level-1 schedulers serving their own services. In the co-location architecture, a level-0 scheduler is introduced for management and allocation of resources in the two level-1 schedulers. The level-0 scheduler has a dedicated agent.

The uppermost layer is the service-oriented resource scheduling and management layer. Some resources are directly allocated to services by the level-1 schedulers, and some are allocated by level-2 schedulers, such as Hippo Manager.

The co-location architecture also has a special co-location management layer, which orchestrates and implements service operation, manages configuration of physical resources, monitors services, and makes decisions.

The above resource allocation architecture allocates servers and resources to different services. After allocation is complete, how can the service priority and SLA be ensured in the operation process? If online and offline services run on the same physical server, what should the system do if resource competition occurs between services? To solve these problems, kernel isolation technology is used to ensure resources in the operation process. A lot of kernel features are developed to support isolation, switchover, and downgrading of different types of resources. The kernel-related mechanisms will be introduced later in this article.

This section describes how to apply the co-location technology to the online services to provide the transaction creation capability for the e-commerce platform.

The co-location technology is new and involves many technical transformations. To avoid risks, it is first tested on a small scale within a limited and controllable range. Therefore, a service deployment policy is developed based on the unit-based deployment architecture of the Alibaba e-commerce platform (online services) to build an independent transaction unit in the co-location cluster. This not only confines the co-location technology to a local scope so that it does not affect the global system, but also implements closed-loop and independent resource scheduling and management for services within the unit.

In the e-commerce online system, the end-to-end services related to buyers' purchase behaviors are put in a closed-loop service set, which is called a transaction unit. All requests and instructions related to buyers' transaction behavior are completed in this transaction unit in a closed-loop manner. This is a unit-based deployment architecture with multiple remote backups.

Another constraint in implementing the co-location technology comes from hardware resources. Offline and online services have different requirements for hardware resources, and the existing resources of one service may not suit the other. In the co-location implementation process, this adaptation problem is most significant for disks.

Among the native resources of offline services, a lot of low-cost HDDs are available, and offline services use almost all HDDs when running. Therefore, HDDs are unavailable to online services.

To solve the IOPS performance problem of disks, the computing and storage separation technology is introduced, which is another technology that is continuously evolving in Alibaba Group. This technology provides centralized computing and storage services. The compute node is connected to the storage center over a network, which removes the compute node's dependency on local disks.

The storage cluster can provide different storage capabilities. Online services are demanding in terms of storage performance, but their throughput is low. Therefore, through computing and storage separation, online services use a remote storage service with IOPS assurance.

After finishing the overall architecture, let's take a look at resource allocation in the co-location cluster from the resources' point of view, and see how to "create resources out of nothing".

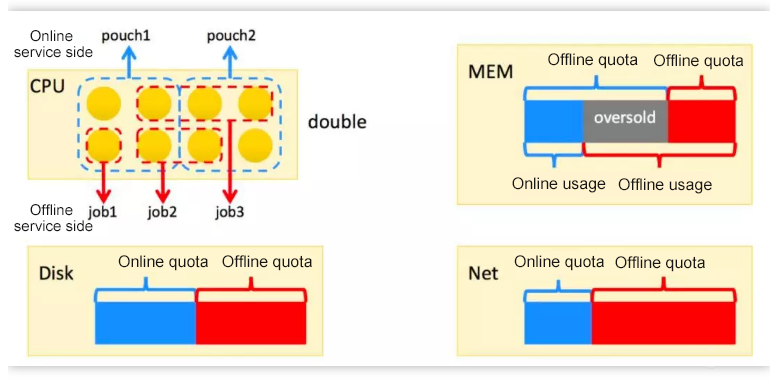

Resources of a single server include the CPU, memory (MEM), disk, and network (Net). The following describes how to obtain extra resources.

In terms of the CPU, the daily CPU usage of a pure online service cluster is about 10%. That is, online services cannot fully utilize the CPU during the daily operations. However, a peak CPU usage will be reached instantly during a major promotion activity.

Offline services are similar to a sponge that absorbs water. They have a huge business volume and consume whatever CPU computing capability is available. Given these resource usage characteristics, the co-location technology is developed to split one CPU into two.

CPU cores are allocated to different processes in time slices in a round-robin manner. One CPU core is allocated to both an online service and an offline service, where the online service takes precedence over the offline service. When the online service is idle, the offline service can use the CPU core. When the online service needs to use the CPU core, it preempts the CPU core from the offline service and the offline service is suspended.
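The precedence rule described above can be illustrated with a toy simulation. This is only a minimal sketch under stated assumptions: the function name `share_core` and the demand pattern are hypothetical, the offline service is assumed to always have work queued, and the real mechanism lives in the kernel scheduler rather than in user-space code like this.

```python
def share_core(online_demand):
    """Simulate one CPU core shared by an online and an offline service.

    online_demand[i] is True when the online service has work in time
    slice i. The offline service is assumed to always have work queued.
    Returns the name of the service that ran in each slice.
    """
    timeline = []
    for online_has_work in online_demand:
        # The online service always wins the slice; the offline service
        # only runs when the online service is idle.
        timeline.append("online" if online_has_work else "offline")
    return timeline


# Hypothetical demand pattern for eight consecutive time slices.
demand = [False, False, True, True, False, True, False, False]
timeline = share_core(demand)
offline_slices = timeline.count("offline")
print(timeline)
print(f"offline ran in {offline_slices} of {len(timeline)} slices")
```

In this model the online service never waits, and the offline service absorbs every slice that would otherwise be idle.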

As described earlier, two resource schedulers are available, including Sigma for online services and Fuxi for offline services. Online services use the pouch containers as their resource units. A pouch container is bound to a certain number of CPU cores, which are used by a certain online service. Sigma assumes that the entire physical server belongs to online services.

Meanwhile, Fuxi assumes that this physical server belongs to offline services, and therefore allocates the CPU resource of the entire server to offline services. In this way, the CPU resource is doubled.

When the same CPU resource is allocated to two services, resource competition arises and the kernel technologies must be used for CPU isolation and scheduling. These technologies will be mentioned later in this article.

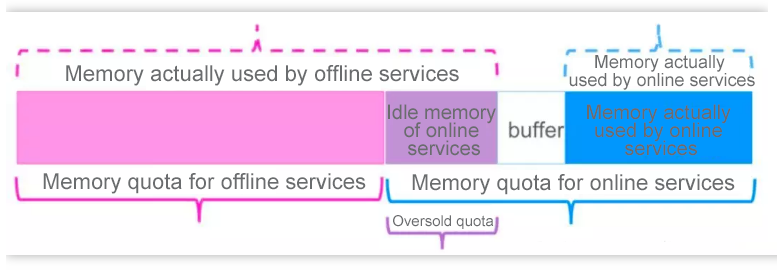

The CPU can be shared by multiple processes based on time slices. However, memory and disks are consumable resources: once allocated to one process, they cannot be used by another process unless the new process preempts them. Therefore, how to reuse the memory resource becomes another priority for research.

The MEM chart shows the memory overselling in a co-location environment. Above the blocks that indicate the memory sizes, the blue bracket indicates the memory quota that has been allocated to the online service, while the red one indicates that allocated to the offline service. Below the blocks, the blue bracket indicates the memory used by the online service, while the red one indicates the memory used by the offline service.

The chart reveals that the offline service uses the memory quota that has been allocated to the online service. This is known as memory overselling.

Why can online memory be oversold? This is because the Alibaba online services are mainly developed in Java. One part of the memory allocated to containers is used as the overhead for the Java stack, and the remaining part of the memory is used as the cache.

As a result, a certain amount of idle memory exists in the online container. With the monitoring of the memory usage and protection technologies, the idle memory in the online container can be allocated to offline services. This part of memory belongs to online services, and cannot always be allocated to offline services. Therefore, these resources are allocated only to low-priority offline services that can be downgraded.

In terms of disks, because the disk capacity is sufficient for both online and offline services, few restrictions are imposed. A series of bandwidth limitation measures are set forth to ensure that the maximum IOPS of an offline service is smaller than a certain threshold, preventing offline services from reducing the online service IOPS and system IOPS.
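To make the idea of an IOPS threshold concrete, here is a minimal sketch that uses a fixed one-second window counter. The class name `IopsCap` and the threshold of 100 are hypothetical, and this user-space model is only an illustration; it is not the kernel-level bandwidth limitation that the article refers to.

```python
class IopsCap:
    """Cap the I/O operations per second of one service (toy model)."""

    def __init__(self, max_iops):
        self.max_iops = max_iops
        self.window = None   # index of the current one-second window
        self.count = 0       # I/Os already issued in that window

    def allow(self, now):
        """Return True if one more I/O may be issued at time `now` (seconds)."""
        window = int(now)
        if window != self.window:
            # A new second has started, so the budget is reset.
            self.window = window
            self.count = 0
        if self.count < self.max_iops:
            self.count += 1
            return True
        return False


# Hypothetical threshold for an offline job on one disk.
limiter = IopsCap(max_iops=100)

# The offline job tries to issue 500 I/Os within the same second.
issued = sum(limiter.allow(now=i / 500) for i in range(500))
print(f"issued {issued} of 500 requested I/Os")
```

However aggressively the offline job submits requests, it never exceeds its budget within a window, which leaves the remaining disk IOPS to online services and the system.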

In terms of the network resource of a single server, the capacity is currently sufficient and not a bottleneck for the time being. Therefore, related descriptions are omitted here.

The previous sections describe how to achieve resource sharing and competition isolation on a single server. This section describes how to migrate resources and maximize the resource usage in the entire resource cluster through overall O&M management. Co-location aims to maximize the resource usage and eliminate waste of resources.

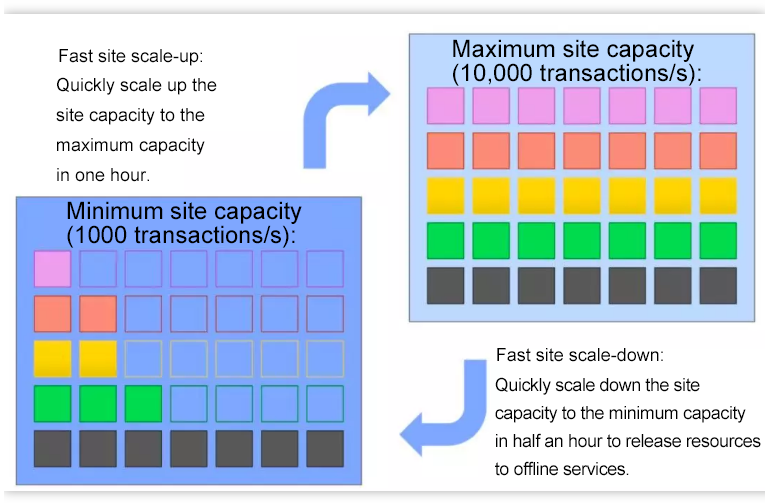

To achieve this objective, the concept of fast site scale-up and scale-down is proposed. This concept is applicable to online services. As described earlier, every co-location cluster is an online transaction unit, which independently supports the transaction behaviors of a small number of users. Therefore, the co-location cluster is treated as a site. The overall capacity of the site can be scaled up or down, which is called fast site scale-up and scale-down, as shown in the following figure.

Online services exhibit huge differences between the daily traffic and the traffic during major promotion activities. The traffic on the Double 11 Shopping Festival may be hundreds of times the daily traffic. This makes it feasible to implement the fast site scale-up and scale-down solution.

As shown in the above figure, each of the two big blocks represents the overall capacity of the online site, each small block represents a container of an online service, and each row represents the total number of containers reserved for an online service. The capacity of the entire site can be planned to switch between the capacity model for daily operations and the capacity model for a major promotion activity to better utilize resources.

In e-commerce, the business objective, such as the number of transactions created per second, is often used as the baseline for evaluating the site capacity. Generally speaking, it is sufficient to reserve a capacity of 1,000 transactions per second for a single site during daily operations. When a major promotion activity approaches, the capacity model of the site is switched to that for a major promotion activity, which is generally 10,000 transactions per second.

In this way, the unused online capacity of the site is scaled down to release resources so that offline services can obtain more physical resources.
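The effect of switching between the two capacity models can be worked through with simple arithmetic. In the sketch below, the two transaction rates come from the text above, while `TPS_PER_CONTAINER` is a purely hypothetical figure chosen for illustration; a real site runs many services, each with its own container count.

```python
import math

DAILY_TPS = 1_000        # daily capacity model of one site (from the text)
PROMOTION_TPS = 10_000   # promotion capacity model of one site (from the text)

# Hypothetical: transactions per second that one container can sustain.
TPS_PER_CONTAINER = 50


def containers_needed(target_tps, tps_per_container=TPS_PER_CONTAINER):
    """Number of online containers required to reach a target capacity."""
    return math.ceil(target_tps / tps_per_container)


daily = containers_needed(DAILY_TPS)
promotion = containers_needed(PROMOTION_TPS)
released = promotion - daily
released_share = released / promotion

print(f"daily model: {daily} containers")
print(f"promotion model: {promotion} containers")
print(f"released for offline services after scale-down: {released}")
```

Under these assumptions, scaling the site down from the promotion model to the daily model frees nine tenths of the online containers for offline services.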

The site scale-up process is completed in one hour. The site scale-down process is completed in half an hour.

During daily operations, the co-location site supports the traffic of online services using the minimum capacity model. When a major promotion activity or an end-to-end stress test approaches, the capacity of the co-location site is quickly scaled up. After the site runs continuously for several hours, the capacity of the site is quickly scaled down.

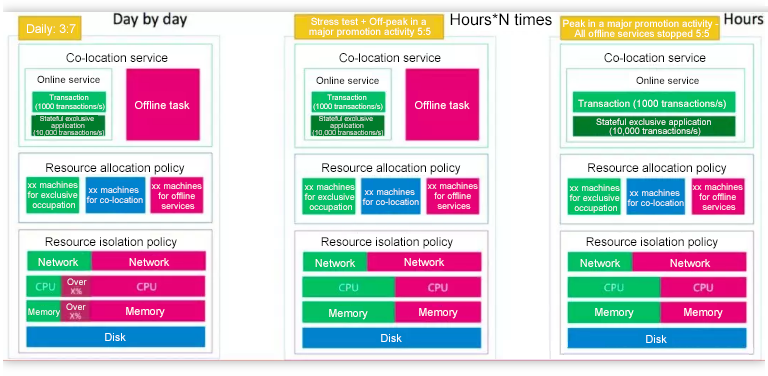

This scheme ensures that the online service occupies few resources most of the time, and more than 90% of resources are fully consumed by the offline service. The following figure shows the resource allocation details in each scale-up or scale-down phase.

In the above figure, three rectangle frames show how the resources in a co-location cluster are allocated during daily operations, during a stress test, and during a major promotion activity.

The red blocks indicate offline services, and the green blocks indicate online services. Each rectangle frame has the upper, middle, and lower layers. The upper layer indicates the service operation and weight. The middle layer indicates distribution of resources (specifically, the host machines), where the blue blocks indicate the co-location resources. The lower layer indicates the resource allocation proportion and running mode at the cluster level.

During daily operations (farthest on the left in the above figure), offline services occupy most resources, some of which are obtained by means of allocation, and others are seized from online services when the online services do not use these resources.

During a stress test (in the middle) or a major promotion activity (farthest on the right), offline services give away resources until both the online and offline services occupy 50% of resources. When the traffic of online services is high, offline services do not compete for the oversold resources. In the preparation phase, such as off-peak hours during a major promotion activity, offline services can still compete for idle resources of online services.

On the very day of the Double 11 Shopping Festival, offline services are downgraded to ensure stability of online services.

The preceding fast scale-up and scale-down scheme switches the online site capacity when a major promotion activity approaches. In addition to the significant traffic difference between daily operations and a major promotion activity, online services also regularly exhibit a traffic peak in the daytime and a traffic trough in the early morning. To further improve resource usage, a resource concession mechanism, time-based reuse, is proposed for daily operations.

The preceding figure shows the curve of online service traffic on a typical day. The traffic is low in the early morning and high in the daytime. The capacity of every online service is scaled up or down over the course of the day to minimize the amount of resources used by online services and offer the saved resources to offline services.
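Time-based reuse can be sketched as a per-hour capacity plan. Everything in this example is an assumption made for illustration: the hourly load curve, the container counts, and the safety floor are hypothetical values, not Alibaba's production figures.

```python
TOTAL_CONTAINERS = 100   # hypothetical allocation at the daytime peak
MIN_CONTAINERS = 10      # hypothetical safety floor for online services

# Hypothetical traffic as a percentage of the daily peak, for hours 0..23.
hourly_load_pct = [20, 10, 10, 10, 10, 20, 30, 50, 70, 90, 100, 100,
                   90, 90, 90, 90, 80, 80, 90, 100, 100, 90, 60, 30]


def online_containers(load_pct):
    """Containers kept for online services at a given load percentage."""
    # Integer ceiling division avoids floating-point rounding surprises.
    needed = (load_pct * TOTAL_CONTAINERS + 99) // 100
    return max(MIN_CONTAINERS, needed)


plan = [online_containers(pct) for pct in hourly_load_pct]
conceded = [TOTAL_CONTAINERS - n for n in plan]

for hour in (3, 11, 22):
    print(f"{hour:02d}:00 online={plan[hour]} conceded to offline={conceded[hour]}")
```

The plan concedes the most capacity in the early morning, when online traffic is lowest, and concedes nothing at the daytime peak.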

Key technologies of co-location are divided into the kernel isolation technology and resource scheduling technology. The following lists only some technical points without elaborating more about the specific technologies used.

Enhanced isolation features have been developed in different dimensions of the kernel resources, including the CPU, I/O, memory, and network dimensions. The online and offline service groups are defined based on the CGroup to differentiate the kernel priorities of the two types of services.

In the CPU dimension, isolation features, such as the hyper-threading pair, scheduler, and level-3 cache, are implemented. In the memory dimension, memory bandwidth isolation and the OOM kill priority are implemented. In the disk dimension, the I/O bandwidth limitation is implemented. In the network dimension, traffic control is implemented on a single server, and end-to-end hierarchical QoS assurance is implemented on the network.
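As one illustration of a priority-aware isolation feature, the sketch below models an OOM kill priority in user space. It is a simplified assumption of how such a policy could rank victims (offline before online, larger memory footprint first); the `Proc` type, the process names, and the ordering rule are hypothetical and do not describe the actual kernel implementation.

```python
from dataclasses import dataclass


@dataclass
class Proc:
    name: str
    group: str    # "online" or "offline", mirroring the two service groups
    rss_mb: int   # resident memory in MB


# Lower value means "killed first". Offline work is sacrificed before online.
KILL_ORDER = {"offline": 0, "online": 1}


def pick_oom_victim(procs):
    """Choose the process to kill when the server runs out of memory."""
    # Within the same group, prefer the process that frees the most memory.
    return min(procs, key=lambda p: (KILL_ORDER[p.group], -p.rss_mb))


procs = [
    Proc("trade-service", "online", 4096),
    Proc("batch-report", "offline", 2048),
    Proc("model-training", "offline", 6144),
]
victim = pick_oom_victim(procs)
print(f"victim: {victim.name} ({victim.group}, {victim.rss_mb} MB)")
```

An online process is only chosen when no offline process remains, which matches the goal of protecting the higher-priority service under resource competition.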

You can search for detailed descriptions about the kernel isolation technologies of co-location. The following only details memory overselling.

Dynamic memory overselling:

As shown in the above figure, the solid-line brackets in red and blue represent the memory quotas of the offline and online CGroups, respectively. The sum represents the memory that can be allocated by the entire server, excluding the memory used as the system overhead. The solid-line bracket in purple represents the oversold memory quota for offline services, whose size is determined by the detected size of the idle memory unused by online services in the operation process.

The upper dashed-line brackets represent the actual memory sizes used by offline and online services, respectively, wherein online services generally do not consume all the memory quota, and the memory quota unused by online services is used as the oversold quota by offline services. To prevent a bursty memory requirement of online services, a certain size of memory is reserved as the buffer. In this way, offline services can use the oversold memory.
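The relationship between the quotas, the actual usage, and the buffer can be written as a short calculation. This is a minimal sketch of the rule described above; the function name and all the sizes are hypothetical, and the real system detects idle memory continuously at run time.

```python
def oversold_quota(online_quota_gb, online_used_gb, buffer_gb):
    """Memory that can be lent to low-priority offline tasks.

    The idle part of the online quota is lent out, minus a buffer that is
    held back to absorb bursty memory demand from online services.
    """
    idle = online_quota_gb - online_used_gb
    return max(0, idle - buffer_gb)


# Hypothetical sizes for one server, in GB.
ONLINE_QUOTA = 128
OFFLINE_QUOTA = 96
BUFFER = 16

# Quiet period: online services use only part of their quota.
quiet = oversold_quota(ONLINE_QUOTA, online_used_gb=70, buffer_gb=BUFFER)
print(f"oversold: {quiet} GB, offline may use up to {OFFLINE_QUOTA + quiet} GB")

# Busy period: online usage approaches the quota, so nothing is lent out.
busy = oversold_quota(ONLINE_QUOTA, online_used_gb=120, buffer_gb=BUFFER)
print(f"oversold: {busy} GB, offline may use up to {OFFLINE_QUOTA + busy} GB")
```

Because the oversold quota shrinks to zero as online usage grows, only offline tasks that can be downgraded should be placed on it.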

As the second core technology of co-location, resource scheduling can be further divided into native level-1 resource scheduling, with the online resource scheduler Sigma and the offline resource scheduler Fuxi, and co-location level-0 scheduling.

The online resource scheduler schedules and allocates resources properly based on the resource portraits of applications. It involves a series of packing problems, affinity and mutual exclusion rules, and global optimal solutions. The online resource scheduler implements automatic scaling of application capacities and time-based reuse at the global dimension, and fast scale-up and scale-down upon special events.
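To give a feel for the packing and mutual exclusion problems mentioned above, here is a small sketch that uses a generic first-fit-decreasing heuristic with one mutual exclusion rule (at most one container of the same application per host). This is a textbook heuristic chosen for illustration, not Sigma's actual algorithm, and the application names and sizes are hypothetical.

```python
def place(containers, host_cores):
    """Place containers on hosts with first-fit decreasing.

    containers: list of (app_name, cores) tuples.
    host_cores: list of free cores per host.
    Returns a dict that maps the container index to a host index, or to
    None when no host satisfies both capacity and mutual exclusion.
    """
    free = list(host_cores)
    apps_on_host = [set() for _ in free]
    placement = {}
    # Largest containers first; ties keep their original order.
    order = sorted(range(len(containers)), key=lambda i: -containers[i][1])
    for idx in order:
        app, cores = containers[idx]
        placement[idx] = None
        for host in range(len(free)):
            # Mutual exclusion: two containers of one app never share a host.
            if free[host] >= cores and app not in apps_on_host[host]:
                free[host] -= cores
                apps_on_host[host].add(app)
                placement[idx] = host
                break
    return placement


containers = [("cart", 4), ("cart", 4), ("search", 8), ("pay", 2)]
placement = place(containers, host_cores=[8, 8, 4])
for idx, host in sorted(placement.items()):
    print(f"{containers[idx][0]} ({containers[idx][1]} cores) -> host {host}")
```

The two `cart` containers land on different hosts even though one host could hold both, which is the kind of constraint that turns plain bin packing into a harder global optimization problem.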

The above figure shows the architecture of the online level-1 scheduler Sigma. Sigma is compatible with Kubernetes APIs, uses the Alibaba Pouch container technology for scheduling, and has been tested over the years by Alibaba's heavy traffic, including the traffic of the Double 11 Shopping Festival.

The offline resource scheduler implements hierarchical job scheduling, dynamic memory overselling, and lossy or lossless offline service downgrade solutions.

The above figure shows the operation of the offline resource scheduler Fuxi. Fuxi implements scheduling based on jobs and provides a data-driven, multi-level, pipelined, and parallel computing framework for complex applications that require mass data processing and large-scale computing.

Fuxi is compatible with multiple programming modes, including MapReduce, Map-Reduce-Merge, Cascading, and FlumeJava. It also features high scalability, supports scheduling of hundreds of thousands of parallel tasks, and can optimize the network overhead based on the data distribution.

In a co-location environment, resources are scheduled and allocated for offline and online services by their own schedulers. A unified resource scheduling level, level 0, sits under the level-1 schedulers. The level-0 scheduler is responsible for coordinating and arbitrating online and offline service resources, and implements monitoring and decision making to allocate resources properly. The following figure shows the overall architecture for co-location resource scheduling.
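The arbitration role of level 0 can be illustrated with a very small model. This sketch assumes a simple policy in which the online request is satisfied first and the offline scheduler receives what remains; the function `arbitrate`, the abstract "resource units", and the numbers are hypothetical, and the real level-0 scheduler also relies on monitoring and more elaborate decision making.

```python
def arbitrate(total_units, online_request, offline_request):
    """Split a resource pool between the two level-1 schedulers.

    Online services have the higher priority, so the Sigma request is
    satisfied first and Fuxi receives at most what is left over.
    """
    online = min(online_request, total_units)
    offline = min(offline_request, total_units - online)
    return {"sigma": online, "fuxi": offline}


# Daily operations: online services need little, offline takes the rest.
daily_split = arbitrate(total_units=64, online_request=20, offline_request=100)
print(daily_split)

# Promotion peak: online services claim everything, offline is downgraded.
peak_split = arbitrate(total_units=64, online_request=64, offline_request=100)
print(peak_split)
```

Keeping this decision in a single place under both schedulers means that neither Sigma nor Fuxi has to know about the other's workload.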

The co-location technology will evolve towards three directions in the future: large-scale, diversified, and refined.
