The PostgreSQL arrays, JSON data, and other data types offer a lot of convenience to sellers for managing store operations. However, the optimization of these data types becomes increasingly complicated. For example, if a seller stores the information of some products in the database and attaches multiple tags in JSON format to each product. Then, the data structure may appear as follows.

create table js(

gid int, -- 店铺ID

item int, -- 商品ID

prop jsonb[] -- 商品标签, json数组

);

Consider the following sample data, where:

1, 1, ['{"id":10, "score":80}', '{"id":11, "score":70}', '{"id":21, "score":60}', .....]

1, 2, ['{"id":11, "score":50}', '{"id":13, "score":30}', '{"id":21, "score":80}', .....]

.....

Assume that the seller wants to search for data, where "gid=?", the data contains a certain ID, and the ID score is within a certain range. The purpose is to find data that meets certain tag conditions in a store.

select * from js where gid=? and prop包含id=? 并且score between ? and ?的记录。

Now, write a User Defined Function (UDF) to implement the query of the specific class.

create or replace function get_res(

jsonb[], -- 输入的JSONB数组

int, -- id

int, -- score最小值

int -- score最大值

) returns boolean as $$

declare

v_id int;

v_score int;

v_js jsonb;

begin

foreach v_js in array $1 loop

if (v_js->>'id')::int = $2 and (v_js->>'score')::float4 between $3 and $4 then

return true;

end if;

end loop;

return false;

end;

$$ language plpgsql strict;

The final SQL statement is shown below.

select * from js where gid=? and get_res(prop, ?, ?, ?);

The preceding method only uses the Group Identification (GID) index. Therefore, it requires a large amount of CPU computing which inevitably becomes a bottleneck during high concurrency.

The critical question is how to accurately perform index retrieval on data and improve the performance when a known property has a maximum of N JSON elements.

In fact, the SQL statement can be changed as follows.

select * from js where

(gid=? and prop[1]->>'id'=? and prop[1]->>'score' between x and x) or -- 每个JSON元素对应一颗树

(gid=? and prop[2]->>'id'=? and prop[2]->>'score' between x and x) or -- 每个JSON元素对应一颗树

...

Or

select * from js where

(gid=? and prop[1]->>'id'=? and prop[1]->>'score' between x and x)

union all

select * from js where

(gid=? and prop[2]->>'id'=? and prop[2]->>'score' between x and x)

union all

...

;

The SQL statement contains many OR conditions, but it does not affect the performance because the GID, ID, and score are all set to fixed values, and the ID is unique in the same record. Although multiple trees are scanned, the I/O or CPU usage does not increase upon the final retrieval considering the same record can only be retrieved from one tree.

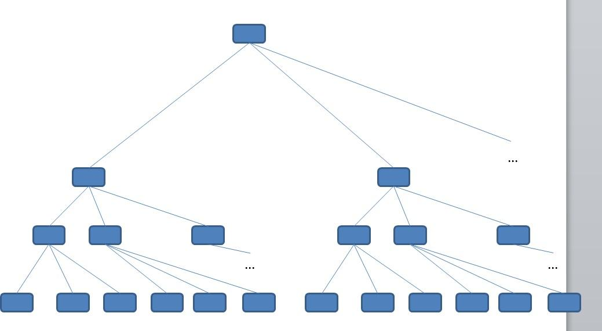

Follow the steps listed below to optimize the performance:

Step 1. Set the number of JSON elements in the prop field to a fixed value.

Step 2. Construct a composite expression index for each JSON element.

create index idx_js_1 on js (gid, ((prop[1]->>'id')::int), ((prop[1]->>'score')::float4));

create index idx_js_2 on js (gid, ((prop[2]->>'id')::int), ((prop[2]->>'score')::float4));

create index idx_js_3 on js (gid, ((prop[3]->>'id')::int), ((prop[3]->>'score')::float4));

create index idx_js_4 on js (gid, ((prop[4]->>'id')::int), ((prop[4]->>'score')::float4));

create index idx_js_5 on js (gid, ((prop[5]->>'id')::int), ((prop[5]->>'score')::float4));

create index idx_js_6 on js (gid, ((prop[6]->>'id')::int), ((prop[6]->>'score')::float4));

create index idx_js_7 on js (gid, ((prop[7]->>'id')::int), ((prop[7]->>'score')::float4));

create index idx_js_8 on js (gid, ((prop[8]->>'id')::int), ((prop[8]->>'score')::float4));

create index idx_js_9 on js (gid, ((prop[9]->>'id')::int), ((prop[9]->>'score')::float4));

create index idx_js_10 on js (gid, ((prop[10]->>'id')::int), ((prop[10]->>'score')::float4));

Step 3. Rewrite the SQL statement.

Step 4. Use UDF to splice dynamic SQL statements or splice dynamic SQL statements at the program end.

create or replace function get_js(

int, -- loops, 一个prop里多少个json元素

int, -- gid

int, -- json id

int, -- json score 最小值

int -- json score 最大值

) returns setof js as $$

declare

sql text := 'select * from js where ';

begin

for i in 1..$1 loop

sql := format($_$ %s (gid=%s and (prop[%s]->>'id')::int=%s and (prop[%s]->>'score')::float4 between %s and %s) union all select * from js where $_$, sql, $2, i, $3, i, $4, $5);

end loop;

sql := rtrim(sql, 'union all select * from js where ');

-- raise notice '%', sql;

return query execute sql;

end;

$$ language plpgsql strict;

The following statement shows the dynamic query splicing.

postgres=# select * from get_js(10,1,1,10,20);

gid | item | prop

-----+------+------

(0 rows)

The index is correctly used as shown below.

postgres=# explain select * from js where (gid=1 and (prop[1]->>'id')::int=1 and (prop[1]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[2]->>'id')::int=1 and (prop[2]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[3]->>'id')::int=1 and (prop[3]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[4]->>'id')::int=1 and (prop[4]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[5]->>'id')::int=1 and (prop[5]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[6]->>'id')::int=1 and (prop[6]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[7]->>'id')::int=1 and (prop[7]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[8]->>'id')::int=1 and (prop[8]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[9]->>'id')::int=1 and (prop[9]->>'score')::float4 between 10 and 20) union all select * from js where (gid=1 and (prop[10]->>'id')::int=1 and (prop[10]->>'score')::float4 between 10 and 20);

QUERY PLAN

----------------------------------------------------------------------------------------------------------------------------------------

Append (cost=0.15..21.87 rows=10 width=40)

-> Index Scan using idx_js_1 on js (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[1] ->> 'id'::text))::integer = 1) AND (((prop[1] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[1] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_2 on js js_1 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[2] ->> 'id'::text))::integer = 1) AND (((prop[2] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[2] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_3 on js js_2 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[3] ->> 'id'::text))::integer = 1) AND (((prop[3] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[3] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_4 on js js_3 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[4] ->> 'id'::text))::integer = 1) AND (((prop[4] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[4] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_5 on js js_4 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[5] ->> 'id'::text))::integer = 1) AND (((prop[5] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[5] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_6 on js js_5 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[6] ->> 'id'::text))::integer = 1) AND (((prop[6] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[6] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_7 on js js_6 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[7] ->> 'id'::text))::integer = 1) AND (((prop[7] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[7] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_8 on js js_7 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[8] ->> 'id'::text))::integer = 1) AND (((prop[8] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[8] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_9 on js js_8 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[9] ->> 'id'::text))::integer = 1) AND (((prop[9] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[9] ->> 'score'::text))::real <= '20'::doubleprecision))

-> Index Scan using idx_js_10 on js js_9 (cost=0.15..2.18 rows=1 width=40)

Index Cond: ((gid = 1) AND (((prop[10] ->> 'id'::text))::integer = 1) AND (((prop[10] ->> 'score'::text))::real >= '10'::double precision) AND (((prop[10] ->> 'score'::text))::real <= '20'::doubleprecision))

(21 rows)

Step 5. Add a composite express index if the number of elements increases.

Note that this method involves many indexes, so it might affect the Data Manipulation Language (DML) performance. However, there is a significant improvement in query performance.

If you do not want to use a composite index, use a single column expression index instead.

You can apply this approach to implement searches by equivalent and range. Consider the following example.

create index idx_js_1 on js ( (gid||'_'||prop[1]->>'id'||'_'||lpad(prop[1]->>'score',4,'0')) );

.............

select * from js where (gid||'_'||prop[1]->>'id'||'_'||lpad(prop[1]->>'score',4,'0')) between '1_1_0020' and '1_1_0100'

union all

select * from js where (gid||'_'||prop[2]->>'id'||'_'||lpad(prop[2]->>'score',4,'0')) between '1_1_0020' and '1_1_0100'

union all

....;

Integrate GID and prop-> ID into the index.

LPAD function is also critical. Scores may vary by the number of digits, and therefore, the query result in the TEXT range may not meet expectations. In this case, LPAD can be used to ensure that the scores have the same number of digits to achieve the same sequence effect as numerical values.

The effect after padding is as follows.

postgres=# select '90' > '100';

?column?

----------

t

(1 row)

postgres=# select '090' > '100';

?column?

----------

f

(1 row)

Implement the following steps to test the performance.

Step1. Create a table.

create table js(

gid int, -- 店铺ID

item int, -- 商品ID

prop jsonb[], -- 商品标签, json数组

primary key (gid,item)

);

Step 2. Construct data by creating 10 thousand GIDs, consisting of 100 thousand items each.

Make each prop have 10 JSONBs, and set the ID range of each JSONB to 0 to 1000 and the score range to 0 to 100.

create or replace function ins(int, int) returns void as $$

declare

begin

insert into js values (

$1, $2,

array[

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb,

format('{"id":%s, "score":%s}', (random()*1000)::int, (random()*100)::int)::jsonb

]

) on conflict do nothing;

end;

$$ language plpgsql strict;

vi test.sql

\set gid random(1,10000)

\set item random(1,100000)

select ins(:gid, :item);

Now, write 1 billion records as shown below.

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 50 -j 50 -t 20000000

Step 3. Next, you need to perform the indexing.

psql -c "create index idx_js_1 on js (gid, ((prop[1]->>'id')::int), ((prop[1]->>'score')::float4));" &

psql -c "create index idx_js_2 on js (gid, ((prop[2]->>'id')::int), ((prop[2]->>'score')::float4));" &

psql -c "create index idx_js_3 on js (gid, ((prop[3]->>'id')::int), ((prop[3]->>'score')::float4));" &

psql -c "create index idx_js_4 on js (gid, ((prop[4]->>'id')::int), ((prop[4]->>'score')::float4));" &

psql -c "create index idx_js_5 on js (gid, ((prop[5]->>'id')::int), ((prop[5]->>'score')::float4));" &

psql -c "create index idx_js_6 on js (gid, ((prop[6]->>'id')::int), ((prop[6]->>'score')::float4));" &

psql -c "create index idx_js_7 on js (gid, ((prop[7]->>'id')::int), ((prop[7]->>'score')::float4));" &

psql -c "create index idx_js_8 on js (gid, ((prop[8]->>'id')::int), ((prop[8]->>'score')::float4));" &

psql -c "create index idx_js_9 on js (gid, ((prop[9]->>'id')::int), ((prop[9]->>'score')::float4));" &

psql -c "create index idx_js_10 on js (gid, ((prop[10]->>'id')::int), ((prop[10]->>'score')::float4));" &

Step 4. Perform the pressure test by using the original method.

vi test1.sql

\set gid random(1,10000)

\set id random(0,1000)

\set l random(0,50)

\set u random(51,100)

select * from js where gid=:gid and get_res(prop, :id, :l, :u);

pgbench -M prepared -n -r -P 1 -f ./test1.sql -c 56 -j 56 -T 120

transaction type: ./test1.sql

scaling factor: 1

query mode: prepared

number of clients: 56

number of threads: 56

duration: 120 s

number of transactions actually processed: 11517

latency average = 582.923 ms

latency stddev = 109.062 ms

tps = 95.708298 (including connections establishing)

tps = 95.927842 (excluding connections establishing)

statement latencies in milliseconds:

0.004 \set gid random(1,10000)

0.001 \set id random(0,1000)

0.001 \set l random(0,50)

0.001 \set u random(51,100)

582.917 select * from js where gid=:gid and get_res(prop, :id, :l, :u);

top - 12:37:42 up 28 days, 17:25, 3 users, load average: 47.06, 19.61, 10.53

Tasks: 542 total, 58 running, 484 sleeping, 0 stopped, 0 zombie

%Cpu(s): 95.6 us, 4.3 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 23094336+total, 85957776 free, 5347196 used, 13963840+buff/cache

KiB Swap: 0 total, 0 free, 0 used. 17652979+avail Mem

Step 5. Perform the pressure test by using the optimized method.

vi test2.sql

\set gid random(1,10000)

\set id random(0,1000)

\set l random(0,50)

\set u random(51,100)

select * from get_js(10,:gid, :id, :l, :u);

pgbench -M prepared -n -r -P 1 -f ./test2.sql -c 56 -j 56 -T 120

transaction type: ./test2.sql

scaling factor: 1

query mode: prepared

number of clients: 56

number of threads: 56

duration: 120 s

number of transactions actually processed: 2042359

latency average = 3.290 ms

latency stddev = 0.300 ms

tps = 16999.278637 (including connections establishing)

tps = 17000.090714 (excluding connections establishing)

statement latencies in milliseconds:

0.002 \set gid random(1,10000)

0.001 \set id random(0,1000)

0.000 \set l random(0,50)

0.001 \set u random(51,100)

3.288 select * from get_js(10,:gid, :id, :l, :u);

top - 12:45:12 up 28 days, 17:32, 2 users, load average: 22.72, 23.75, 17.37

Tasks: 537 total, 58 running, 479 sleeping, 0 stopped, 0 zombie

%Cpu(s): 93.8 us, 6.2 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 23094336+total, 49231168 free, 8543336 used, 17316886+buff/cache

KiB Swap: 0 total, 0 free, 0 used. 14191040+avail Mem

1. When you use the multi-tree APPEND method described in this document, there is no wastage of CPU resources and the performance improves by N times.

| Maximum data capacity | Case | TPS | RT | CPU usage |

|---|---|---|---|---|

| 1 billion | Original method | 96 | 583 ms | 100% |

| 1 billion | Optimization method | 17,000 | 3.3 ms | 100% |

2. We recommend you to perform indexing by partition to optimize the database kernel. At the kernel layer, one BTree index corresponds to multiple trees, which solves the problem related to the multi-value point query and interval query in the array.

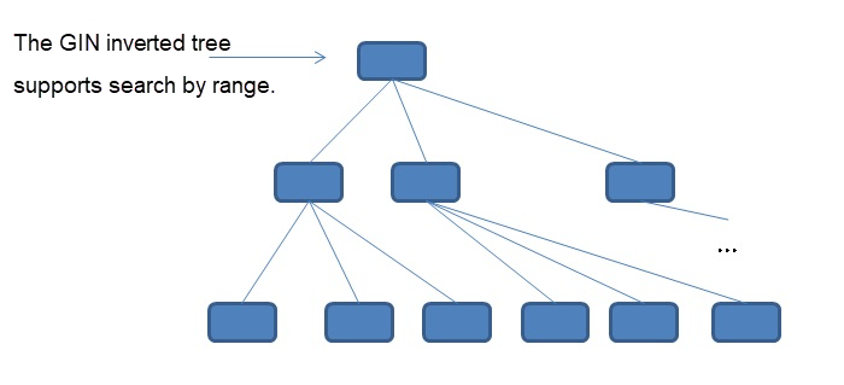

3. Currently, PostgreSQL GIN and GiST indexes only support the retrieval of the multi-value data, array, and JSON data in the inclusion and overlapping relations. It does not support query by value range. To support query by value range, you need to improve the inverted tree and develop the corresponding OPS.

Source:https://www.postgresql.org/docs/10/static/datatype-json.htm

The RUM index API can realize this function to some extent.

Build your own PostgreSQL solution on Alibaba Cloud with ApsaraDB for RDS PostgreSQL.

digoal - March 20, 2019

digoal - April 12, 2019

Alibaba Cloud MaxCompute - December 6, 2021

digoal - July 4, 2019

digoal - May 9, 2020

ApsaraDB - October 20, 2020

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More Database Overview

Database Overview

ApsaraDB: Faster, Stronger, More Secure

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn MoreMore Posts by digoal