MLlib is Spark's machine learning library. It aims to simplify the engineering practice of machine learning and make it easier to scale out. Because machine learning algorithms typically require many iterations, running them on the conventional Hadoop computing framework results in high I/O and CPU consumption.

In contrast, Spark computes in memory, which gives it a natural advantage for iterative workloads. Its RDDs can seamlessly share data and operations with other sub-frameworks and databases, such as Spark SQL, Spark Streaming, and GraphX. For example, MLlib can directly use data provided by Spark SQL or directly perform join operations with GraphX.

MLlib consists of three parts:

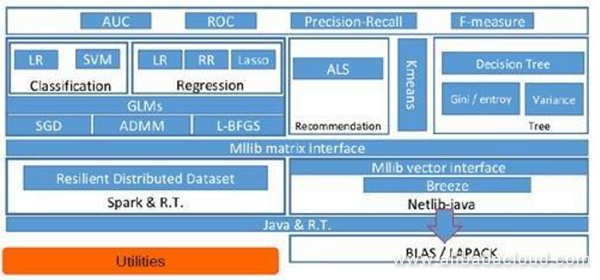

The following figure shows the core content of the MLlib algorithm library.

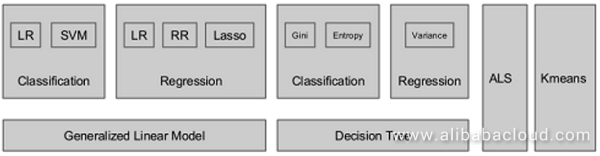

MLlib is composed of general learning algorithms and tools, including classification, regression, clustering, collaborative filtering (CF), and dimensionality reduction. It also includes underlying optimization primitives and high-level pipeline APIs.

spark.mllib supports various methods for binary classification, multi-class classification, and regression analysis. The following table lists the algorithms supported for each type of problem.

| Problem type | Supported methods |
|---|---|
| Binary classification | Linear SVM, Logistic regression, Decision tree, Random forest, GBDT, Naive Bayes |
| Multi-class classification | Logistic regression, Decision tree, Random forest, Naive Bayes |
| Regression | Linear least squares, Lasso, Ridge regression, Decision tree, Random forest, GBDT, Isotonic regression |

As a subtype of supervised learning, classification is commonly used to create reliable predictions based on data. Applications of classification include:

1. Bus route prediction and optimization

Massive public transportation data makes it possible to explore how citizens choose public transportation. In Guangdong province, transportation models are created by analyzing historical bus route data and associating it with user behavior patterns. These models are then used to optimize bus routes based on predicted passenger needs.

2. Credit evaluation based on historical transactions

In China, personal credit evaluation mainly refers to the individual credit evaluation reports of the central bank. However, for users without personal credit records, it is expensive for financial institutions to learn their credit history. Products such as Alipay provide an alternative way for businesses to evaluate customers' credit history.

3. Product recommendation through image search

Users of e-commerce platforms such as Taobao and TMall can search millions of products by simply taking a picture of a product. These intelligent platforms then extract the necessary information from the picture and provide accurate recommendations to users.

4. Advertisement click behavior prediction

Online marketing is a constant battle of traffic and impressions. By analyzing user behavior, enterprises can optimize ads by predicting topics that interest specific users the most.

5. Text content-based spam message identification

Anyone with an email address has come across spam messages. Spam can seriously affect enterprises, especially when it is part of a cyberattack. Email service providers can create intelligent spam filters through machine learning algorithms and big data analysis and mining.

In clustering, cluster centers are located iteratively so as to minimize the sum of the squared errors (SSE) between samples and their class means.
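As a hedged illustration, the SSE objective can be written as follows. The symbols are assumptions introduced here, not notation from the article: $K$ is the number of clusters, $C_k$ is the set of samples assigned to cluster $k$, and $\mu_k$ is the mean of those samples.

$$
\mathrm{SSE} = \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert^2,
\qquad
\mu_k = \frac{1}{|C_k|} \sum_{x_i \in C_k} x_i
$$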

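Spark 2.0 (the DataFrame-based API, not the RDD API-based MLlib) supports four clustering methods: K-means, Latent Dirichlet allocation (LDA), Bisecting k-means, and Gaussian mixture model (GMM).

The RDD API-based MLlib supports six clustering methods: K-means, Gaussian mixture, Power iteration clustering (PIC), Latent Dirichlet allocation (LDA), Bisecting k-means, and Streaming k-means.

PIC and Streaming k-means are not available in the Spark 2.0 DataFrame-based API.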

K-means is the most frequently used clustering method because it is easy to understand yet reliable. It is an iterative, partitioning-type clustering algorithm. That is, K partitions are created, and samples are then moved from one partition to another iteratively to improve the quality of the final clusters. K-means makes it easy to create a model for clustering problems.

The K-Means clustering algorithm can be implemented in three steps:

Step 1: Select K samples at random as the initial cluster centers for points to be clustered.

Step 2: Calculate the distance from each point to every cluster center, and assign each point to the nearest cluster center.

Step 3: Calculate the mean coordinate value of all points in the cluster, and use the mean value as the new cluster center.

Steps 2 and 3 are repeated until the cluster centers no longer move significantly or until the maximum number of iterations is reached.
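The steps above can be sketched in plain Python. This is a minimal, single-machine illustration using only the standard library, not MLlib's distributed implementation. The function names, the sample points, and the default values for `max_iterations`, `tolerance`, and `seed` are assumptions made for this example.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(points, k, max_iterations=20, tolerance=1e-6, seed=0):
    rng = random.Random(seed)
    # Step 1: select K samples at random as the initial cluster centers.
    centers = rng.sample(points, k)
    for _step in range(max_iterations):
        # Step 2: assign each point to its nearest cluster center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centers[i]))
            clusters[nearest].append(p)
        # Step 3: use the mean of each cluster as its new center
        # (an empty cluster keeps its previous center).
        new_centers = [
            mean_point(members) if members else centers[i]
            for i, members in enumerate(clusters)
        ]
        # Stop once no center has moved by more than the tolerance.
        shift = max(squared_distance(a, b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift <= tolerance:
            break
    # Sum of squared errors between each point and its nearest center.
    sse = sum(min(squared_distance(p, c) for c in centers) for p in points)
    return centers, sse


# Hypothetical sample data: two visually separate groups of 2-D points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centers, sse = kmeans(points, k=2)
print(sorted(centers), sse)
```

Because the initial centers are chosen at random, results on harder data can depend on the seed, which is one reason production implementations usually offer smarter initialization.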

1. Commercial site selection based on location data

When using cellular networks, users naturally leave location information behind. By clustering customers' location data, enterprises can optimize the locations of commercial sites. This technology can also be used to optimize cell tower locations for cellular networks.

2. Standardization of Chinese addresses

Due to the complexity of the Chinese language and the nonstandard naming of Chinese addresses, the rich information contained in addresses cannot be analyzed or mined in depth. Address standardization makes it possible to extract and analyze multiple dimensions from addresses, and it provides more diverse methods and measures for e-commerce application mining in different scenarios.

Wikipedia defines collaborative filtering (CF) as follows: the preferences of a group with shared interests and common experience are used to recommend interesting information to users. Individual users respond to the recommended information through the collaborative mechanism, for example by scoring it. These responses are recorded for filtering and help others filter information. Responses are not limited to information that users find particularly interesting; responses to information that does not interest them are also critical.

CF is often applied to recommendation systems. It aims to fill in the missing entries of the user-product association matrix.

MLlib currently supports model-based CF, where users and products are described by a small set of latent factors that are also used to predict the missing entries. MLlib uses the Alternating Least Squares (ALS) algorithm to learn these latent factors.
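To make the alternating idea concrete, here is a minimal sketch of ALS in plain Python, simplified to a single latent factor per user and per product so that each update has a closed form. It is an illustration under stated assumptions, not MLlib's implementation: the function name `als_rank1`, the toy ratings, and the defaults for `iterations` and `lam` (the regularization strength) are all hypothetical.

```python
def als_rank1(ratings, num_users, num_items, iterations=50, lam=0.01):
    """Rank-1 ALS on a dict that maps (user, item) to an observed rating."""
    user_f = [1.0] * num_users  # one latent factor per user
    item_f = [1.0] * num_items  # one latent factor per item
    for _step in range(iterations):
        # Hold the item factors fixed and solve the regularized
        # least-squares problem for each user factor.
        for u in range(num_users):
            observed = [(i, r) for (uu, i), r in ratings.items() if uu == u]
            numerator = sum(r * item_f[i] for i, r in observed)
            denominator = sum(item_f[i] ** 2 for i, _r in observed) + lam
            user_f[u] = numerator / denominator
        # Hold the user factors fixed and solve for each item factor.
        for i in range(num_items):
            observed = [(u, r) for (u, ii), r in ratings.items() if ii == i]
            numerator = sum(r * user_f[u] for u, r in observed)
            denominator = sum(user_f[u] ** 2 for u, _r in observed) + lam
            item_f[i] = numerator / denominator
    return user_f, item_f


# Toy user-product matrix with one missing entry: user 2 has not rated item 1.
ratings = {
    (0, 0): 1.0, (0, 1): 2.0,
    (1, 0): 2.0, (1, 1): 4.0,
    (2, 0): 3.0,
}
user_f, item_f = als_rank1(ratings, num_users=3, num_items=2)

# The product of the two learned factors predicts the missing rating.
predicted = user_f[2] * item_f[1]
print(round(predicted, 2))
```

With more than one latent factor, each closed-form division becomes a small linear system per user or per product, but the alternation between the two sides stays the same.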

Users' preferences for objects or information vary by application, and may include users' ratings of objects, object viewing records, and purchase records. This preference information can be classified into two types:

Explicit user feedback: In addition to browsing or using websites, users explicitly provide feedback, for example, object rating or comments.

Implicit user feedback: This type of information is generated while users are using websites. It implicitly reflects users' preferences for objects. For example, a user purchases a particular object or views information about it.

Explicit user feedback accurately reflects users' real preferences for objects, but it requires extra effort from users. Implicit user feedback, after analysis and processing, can also reflect users' preferences, but the data is less accurate and some behavior data is very noisy. However, if the right behavior characteristics are selected, implicit user feedback can produce good results. Which behavior characteristics to select varies a lot across applications. For example, on an e-commerce website, purchasing behavior is implicit feedback that demonstrates users' preferences well.
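One common way to feed implicit feedback into a factorization model is to split each observation into a binary preference and a confidence weight that grows with the strength of the behavior. The sketch below assumes the formulation confidence = 1 + alpha * count from the implicit-feedback ALS literature; the function name and the sample values are hypothetical, and the article itself does not specify this formula.

```python
def implicit_to_preference(count, alpha):
    """Turn an implicit observation (e.g. a view or purchase count) into
    a binary preference and a confidence weight for that preference."""
    preference = 1.0 if count > 0 else 0.0
    confidence = 1.0 + alpha * count
    return preference, confidence


# Hypothetical purchase counts for one user across three products.
purchase_counts = {"product_a": 3, "product_b": 0, "product_c": 1}
weighted = {
    name: implicit_to_preference(count, alpha=2.0)
    for name, count in purchase_counts.items()
}
print(weighted)
```

A product that was never purchased still gets a preference of 0 with low confidence, which captures the idea that the absence of behavior is weak evidence rather than a firm dislike.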

Depending on the recommendation mechanisms, the recommendation engine may use part of the data source and analyze the data to obtain specific rules or directly predict users' preferences for other objects. In this way, the recommendation engine can recommend objects that a user may be interested in when the user visits the website.

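The following parameters are available to implement CF with MLlib: numBlocks (the number of blocks used to parallelize computation), rank (the number of latent factors in the model), iterations (the number of iterations to run), lambda (the regularization parameter in ALS), implicitPrefs (whether to use the explicit feedback ALS variant or the one adapted for implicit feedback data), and alpha (a parameter of the implicit feedback variant that governs the baseline confidence in preference observations).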

You can easily integrate Spark with Alibaba Cloud Big Data products and services. Alibaba Cloud E-MapReduce and MaxCompute are fully compatible with Spark. To learn more, visit the Alibaba Cloud official documentation center.
