By: Jeremy Pedersen

It's the moment of truth. The meetings are over, the architecture for your new data warehouse has been decided on, all the stakeholders have signed off on the plan, and now the time has come...to talk to finance.

So here you are: you've got an architecture diagram and a scary-looking spreadsheet from the finance team in front of you. As the engineer responsible for implementing the project, it falls on YOU to figure out what it will cost the company.

Here we go.

Luckily, the project is simple. You are collecting data from an Oracle database, in batches (once a day), and storing it in MaxCompute. You're going to do this with scheduled workflows you are creating in DataWorks.

Even more luckily, all the other stuff (load balancers, VMs, networking) is the IT team's problem. This means you only need to calculate prices for MaxCompute and DataWorks.

You have a pretty good idea how much data you need to store, how it will scale over time, and what ETL work needs to be done.

You have a large Oracle database containing about 100 GB of user data and 1 TB of order data. The order data is growing at the rate of about 1 GB per day, while the user data grows at about 1 GB per month. You expect this growth pattern to continue for the forseeable future.

Each day, you want to import all the new user and order data that was generated the previous day. You will be setting up a workflow in DataWorks to achieve this.

Further, at the end of every month, a report needs to be generated which combines data about all users with any new orders that have taken place. You are planning to write some MaxCompute SQL code that will use SELECT, GROUP BY, JOIN, INSERT, and ORDER BY to compile these results into a new table.

Your team also requires that each month's new data needs to be backed up locally, so you need to export it from MaxCompute somehow.

We have four expenses to consider here:

We'll consider each in turn.

Note: we'll be working out an annual budget, so we need to look at our costs over the whole year.

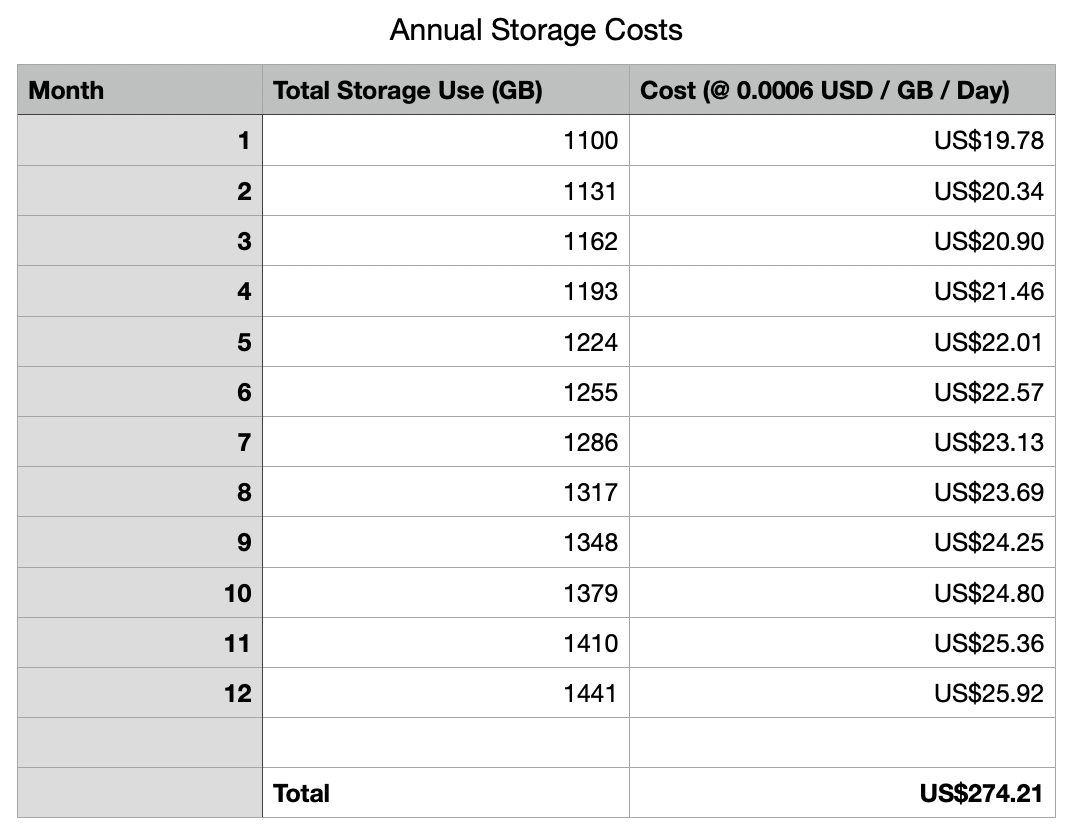

To start with, let's set up some rough estimates for the amount of data we will need to store over the next 12 months.

We start out with approximately 100 GB of customer data and 1000 GB (1 TB) of order data.

Each month, we add 1 GB of new customer data and about 30 GB of new order data (1 GB per day).

MaxCompute charges a fixed rate: 0.0006 USD / GB / Day for any data we store, so doing the calculations will be fairly easy. Oh, also, the first 1 GB of data we store is free, so we will subtract 1 GB before computing our storage costs.

So our total cost for the year is not bad: about 274 USD.

In actuality our storage costs should be even lower, because of compression. MaxCompute charges for storage after compression has been applied. Not bad!

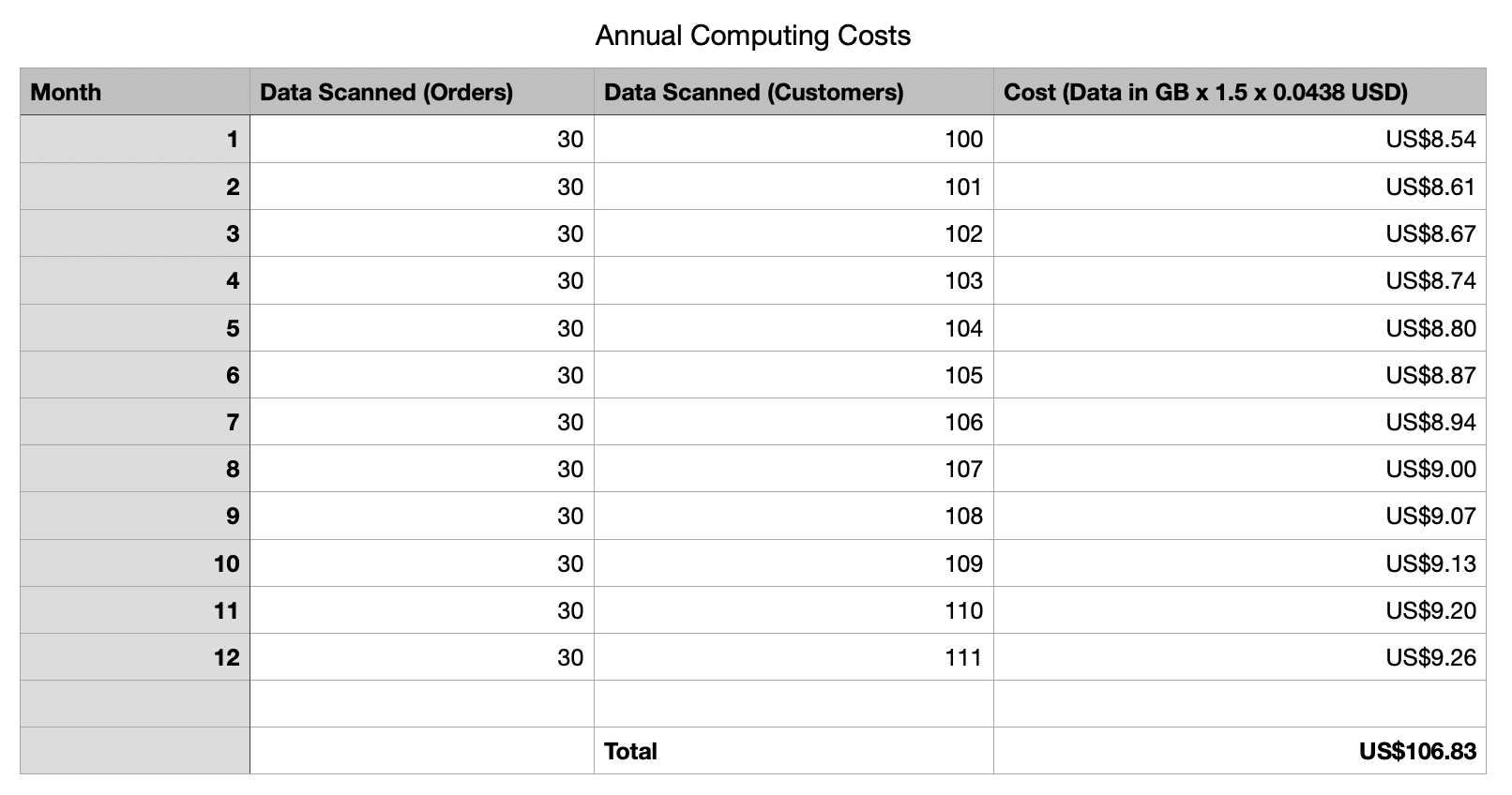

We will also have to pay to run our monthly SQL query and compile our monthly report.

Although you can pay for MaxCompute resources up front, here we'll be using Pay-As-You-Go: it's cheaper for scenarios like this where we only run queries infrequently.

MaxCompute charges you for computing resources based on:

"SQL complexity" is a little difficult to explain, so you might want to take a look at this document in order to understand it better.

Essentially, you count up the number of SQL keywords, using this formula:

Number of SQL keywords = Number of JOIN clauses + Number of GROUP BY clauses + Number of ORDER BY clauses + Number of DISTINCT clauses + Number of window functions +

MAX(Number of INSERT statements - 1, 1)

Then, you use the following conversion rule to arrive at the "complexity" score:

You then use the following formula to work out the total cost to run the query:

SQL Complexity Score x Amount of Data Scanned (GB) x 0.0438 USDWe said our SQL query would involve SELECT, GROUP BY, JOIN, INSERT, and ORDER BY. Except for SELECT, all of those count as SQL keywords here, so we have 4 keywords. Using the rules above, this gives us a complexity score of 1.5.

We said that compiling our report requires us to read:

Each month, we're generating about 30 GB worth of new order data, and 1 GB of new customer data.

Because we care only about orders from the current month, the amount of order data we need to scan is fixed, at around 30 GB per month.

Because we care about all customers, both new and existing, the amount of customer data we need to consider increases every month.

Let's see what effect this has on costs over a year:

Our annual storage costs should be around 107 USD. Still quite cheap.

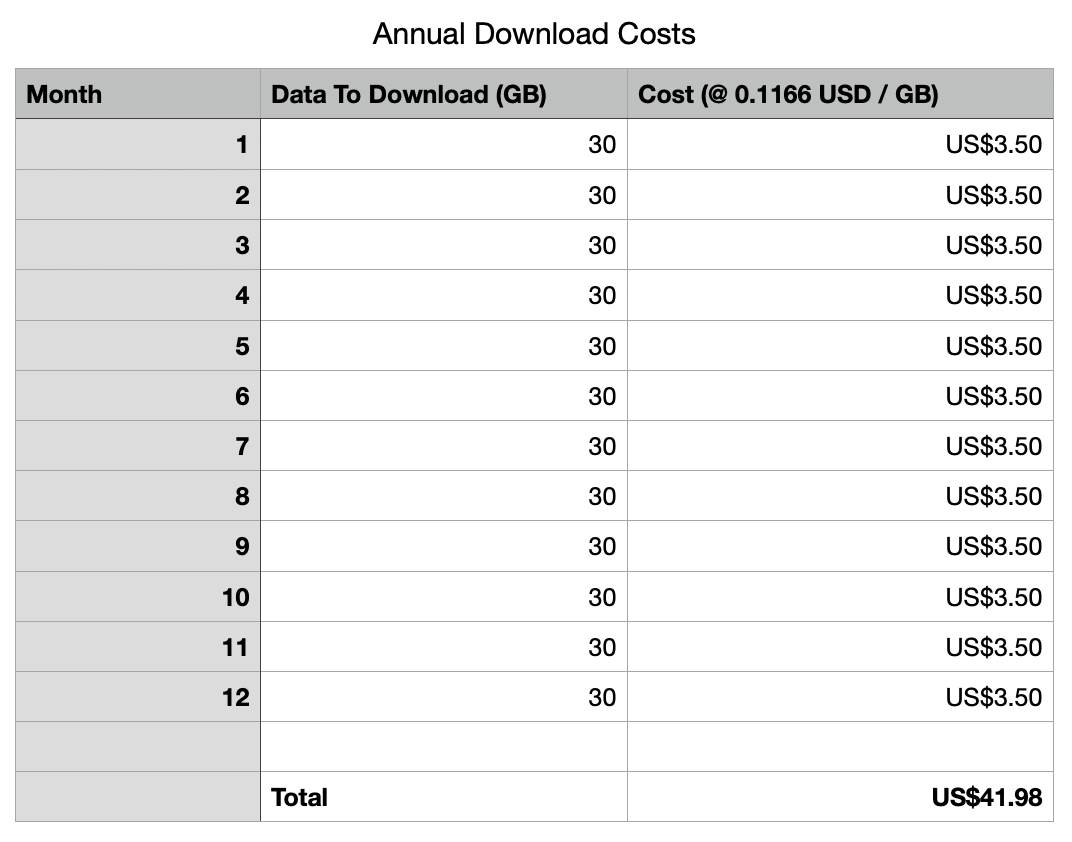

Our team has also asked us to make backups of the new data that is generated each month. Luckily, that first 100 GB of user data and 1000 GB of order data was already stored in our own environment (our Oracle database), so there's no need to download that.

Each month we are generating a table summarizing the new order and new user data. Let's say that this table is typically about the same size as the order data itself (30 GB), and we need to download all of that locally.

What will this cost us? We'll be downloading roughly the same amount of data (30 GB) each month, and we will do this twelve times over the course of the year.

MaxCompute charges a fixed cost for outbound traffic, which is 0.1166 USD / GB.

Our total cost here ends up being just 41.98 USD for the year.

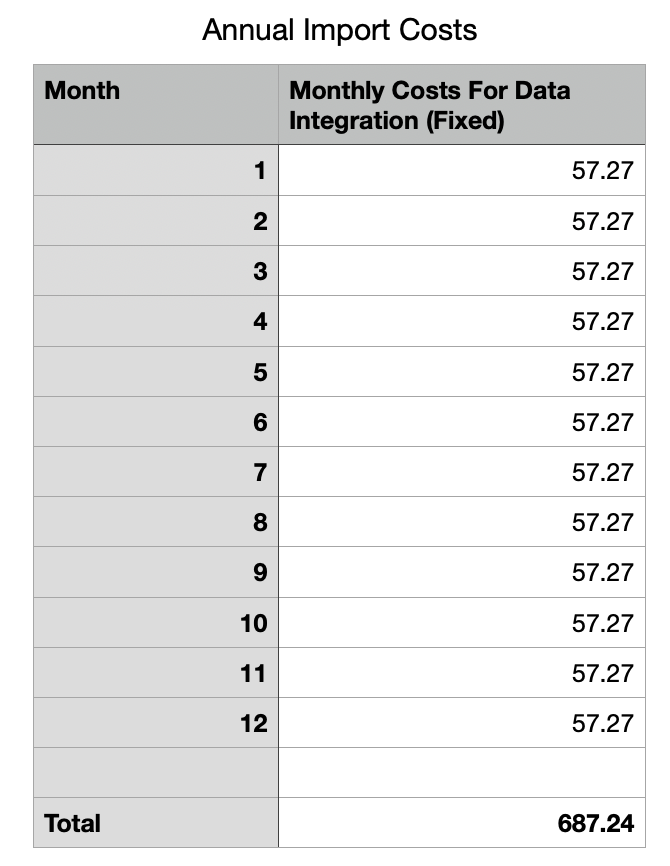

Because we will use DataWorks Data Integration to pull in data from our Oracle database, we need to have an Exclusive Resource Group for Data Integration set up to actually run the import tasks.

Unfortunately, this is likely to be our most significant expense, since it requires setting up a virtual machine in the background, which needs to run 24/7.

Prices for this service vary by region, so let's use the China (Zhangjiakou) region as an example. The monthly price for the smallest Exclusive Resource Group instance is 57.27 USD. This brings our annual costs to:

This teaches us an important lesson: your costs for additional services like Data Integration can often exceed your costs for data storage and compute!

Now we have everything we need to find our total annual costs for MaxCompute:

Our total cost for the year comes out to 1110.26 USD.

This is far, far too big a topic for a single blog post, but there are some general actions you can take to reduce your overall costs:

WHERE clauses that operate on MaxCompute table partitions.That's it! Now you know how to understand data warehousing costs on Alibaba Cloud, and hopefully you are beginning to understand how to reduce those costs as well. See you next week!

Great! Reach out to me at jierui.pjr@alibabacloud.com and I'll do my best to answer in a future Friday Q&A blog.

You can also follow the Alibaba Cloud Academy LinkedIn Page. We'll re-post these blogs there each Friday.

Realtime Synchronization From MySQL to MaxCompute with DataWorks - Friday Blog - Week 42

Lift-and-Shift: Moving VMs To Alibaba Cloud With Server Migration Center (SMC) - Friday Blog Week 45

Alibaba Cloud MaxCompute - September 18, 2019

JDP - June 25, 2021

JDP - December 30, 2021

JDP - May 20, 2021

Alibaba Clouder - June 26, 2018

Alibaba Cloud MaxCompute - December 17, 2018

MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn More Personalized Content Recommendation Solution

Personalized Content Recommendation Solution

Help media companies build a discovery service for their customers to find the most appropriate content.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn MoreMore Posts by JDP