By Shushao

RocketMQ 5.0 introduces innovative features such as the proxy module, the stateless pop consumption mechanism, and the gRPC protocol. It also introduces a new client type: SimpleConsumer.

The SimpleConsumer client uses the stateless pop mechanism to eliminate the load balancing (rebalance) problems that can occur when clients are deployed or go online and offline. However, this new mechanism also brings a new challenge: when there are few clients and few messages, message consumption can be delayed.

Current messaging products commonly use long polling for consumption. The client sends the server a request with a relatively long timeout, and the request stays suspended until a message is available in the queue or the long polling period expires.
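To make the suspension behavior concrete, here is a minimal sketch of the server side of long polling, assuming a single in-memory queue. The class `LongPollingQueue` and its methods are hypothetical and are not RocketMQ code; the blocking wait comes from the standard `BlockingQueue.poll(timeout, unit)`.

```java
import java.util.Optional;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical illustration of long polling; not RocketMQ code.
class LongPollingQueue {
    private final BlockingQueue<String> messages = new LinkedBlockingQueue<>();

    void publish(String message) {
        messages.offer(message);
    }

    // Suspends the caller until a message arrives or the long polling period ends.
    // An empty result means the period expired with no message.
    Optional<String> longPoll(long pollingMillis) {
        try {
            return Optional.ofNullable(messages.poll(pollingMillis, TimeUnit.MILLISECONDS));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        LongPollingQueue queue = new LongPollingQueue();
        // A producer publishes 200 ms after the consumer starts waiting.
        new Thread(() -> {
            try {
                Thread.sleep(200);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            queue.publish("hello");
        }).start();
        System.out.println(queue.longPoll(5_000)); // Optional[hello], after about 200 ms
        System.out.println(queue.longPoll(100));   // Optional.empty, after 100 ms
    }
}
```

The consumer does not spin: it makes one request and is woken as soon as a message arrives, which is why long polling is both responsive and cheap when one request maps to one queue.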

However, the introduction of the proxy exposes a problem with long polling. Long polling at the client level is coupled with long polling inside the proxy and the broker: one long poll from the client to the proxy corresponds to exactly one long poll from the proxy to a single broker. This causes problems when there are few clients and multiple brokers are available at the backend. If a request reaches a broker that has no messages, the long polling suspension logic is triggered on that broker. Even if another broker has a message, the request cannot retrieve it, because it is attached to the empty broker. As a result, the client cannot receive the message in real time and gets a false empty response.
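The false empty response can be reproduced with a small model of the coupled design. This is a hypothetical sketch, not proxy code: brokers are modeled as in-memory queues, and the class `CoupledProxy` routes each client request round-robin to exactly one broker and long-polls only that broker.

```java
import java.util.List;
import java.util.Optional;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical model: one client long poll maps to one broker long poll.
class CoupledProxy {
    private final List<LinkedBlockingQueue<String>> brokers;
    private int next = 0; // round-robin cursor over brokers

    CoupledProxy(List<LinkedBlockingQueue<String>> brokers) {
        this.brokers = brokers;
    }

    Optional<String> receive(long pollingMillis) {
        LinkedBlockingQueue<String> broker = brokers.get(next);
        next = (next + 1) % brokers.size();
        try {
            // Only this broker is watched for the whole period; messages on others are invisible.
            return Optional.ofNullable(broker.poll(pollingMillis, TimeUnit.MILLISECONDS));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        LinkedBlockingQueue<String> brokerA = new LinkedBlockingQueue<>();
        LinkedBlockingQueue<String> brokerB = new LinkedBlockingQueue<>();
        brokerB.offer("order-1"); // the only message sits on broker B
        CoupledProxy proxy = new CoupledProxy(List.of(brokerA, brokerB));
        System.out.println(proxy.receive(200)); // Optional.empty: a false empty response
        System.out.println(proxy.receive(200)); // Optional[order-1]: found only by the next request
    }
}
```

The first request waits out its whole period on broker A even though broker B held a message the entire time.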

In such cases, a user who sends a message and then initiates a consumption request may not receive that message in real time. This undermines both the timeliness and the reliability of message delivery.

When designing the solution, we first looked at how existing commercial messaging products handle this issue.

MNS adopts the following strategy: it divides the long polling period into multiple short polling time slices to cover all brokers as much as possible.

First, multiple requests are made to the backend brokers within one long polling period. While the short polling quota has not been used up, short polling requests are sent to the brokers first; once it has, a long poll equivalent to the client's long polling period is used. In addition, to avoid wasting CPU and network resources on a large number of short polls, when the number of short polls exceeds a certain limit and enough time remains, the last request is converted to a long poll.
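The time-slicing idea can be sketched as a planner that splits one long polling period into short polls over successive brokers and turns the final request into a long poll. This is a hypothetical reconstruction for illustration, not MNS source code; the class `SlicedPollingPlan` and its parameters (`shortPollMillis`, `maxShortPolls`) are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical planner illustrating the time-slicing strategy; not MNS source code.
class SlicedPollingPlan {
    static final class Slice {
        final int broker;
        final long millis;
        final boolean longPoll;

        Slice(int broker, long millis, boolean longPoll) {
            this.broker = broker;
            this.millis = millis;
            this.longPoll = longPoll;
        }

        @Override
        public String toString() {
            return (longPoll ? "long" : "short") + " poll on broker " + broker + " for " + millis + " ms";
        }
    }

    static List<Slice> plan(long periodMillis, long shortPollMillis, int maxShortPolls, int brokerCount) {
        List<Slice> slices = new ArrayList<>();
        long remaining = periodMillis;
        int shortPolls = 0;
        // Spend short slices on successive brokers while the quota and time allow it.
        while (shortPolls < maxShortPolls && remaining > shortPollMillis) {
            slices.add(new Slice(shortPolls % brokerCount, shortPollMillis, false));
            remaining -= shortPollMillis;
            shortPolls++;
        }
        // Convert the last request into a long poll over the remaining time.
        if (remaining > 0) {
            slices.add(new Slice(shortPolls % brokerCount, remaining, true));
        }
        return slices;
    }

    public static void main(String[] args) {
        // 20 s period, 1 s short polls, at most 3 short polls, 4 brokers.
        plan(20_000, 1_000, 3, 4).forEach(System.out::println);
    }
}
```

Running `main` prints three 1000 ms short polls on brokers 0 to 2 and a 17000 ms long poll on broker 3. A message that becomes ready on broker 0 after its short slice ends is not seen until the next request, which is the limitation discussed next.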

However, despite the goal of polling all brokers as much as possible, the above strategy does not solve all problems. If the polling period is short or there are many brokers, it is not possible to poll all brokers completely. Even with sufficient time, a time mismatch may occur where a message is only ready on the broker after the short polling request ends, resulting in the message not being retrieved in time.

First, it is important to clarify the scope and conditions of the problem. The issue arises only when there are few clients and few requests; it does not occur when there are many clients with strong request capacity. The ideal solution should therefore be adaptive: it should solve the problem without adding cost when there are many clients.

The key to the solution is to separate the client's long polling at the frontend from the broker's long polling at the backend. The proxy should have the ability to detect the number of messages at the backend and prioritize selecting the broker with messages to avoid false empty responses.

Considering the stateless nature of pop consumption, the solution should have a design logic consistent with pop without introducing additional state in the proxy to handle this problem.

Finally, simplicity is crucial: the solution should be simple and reliable, without unnecessary complexity.

We use NOTIFICATION to determine if there are unconsumed messages at the backend. With this information, we can intelligently guide the message pulling from the proxy side.

By refactoring NOTIFICATION, we have made some improvements to better meet the requirements of this solution.

A client's request can be abstracted as a long polling task, which consists of a notification task and a request task.

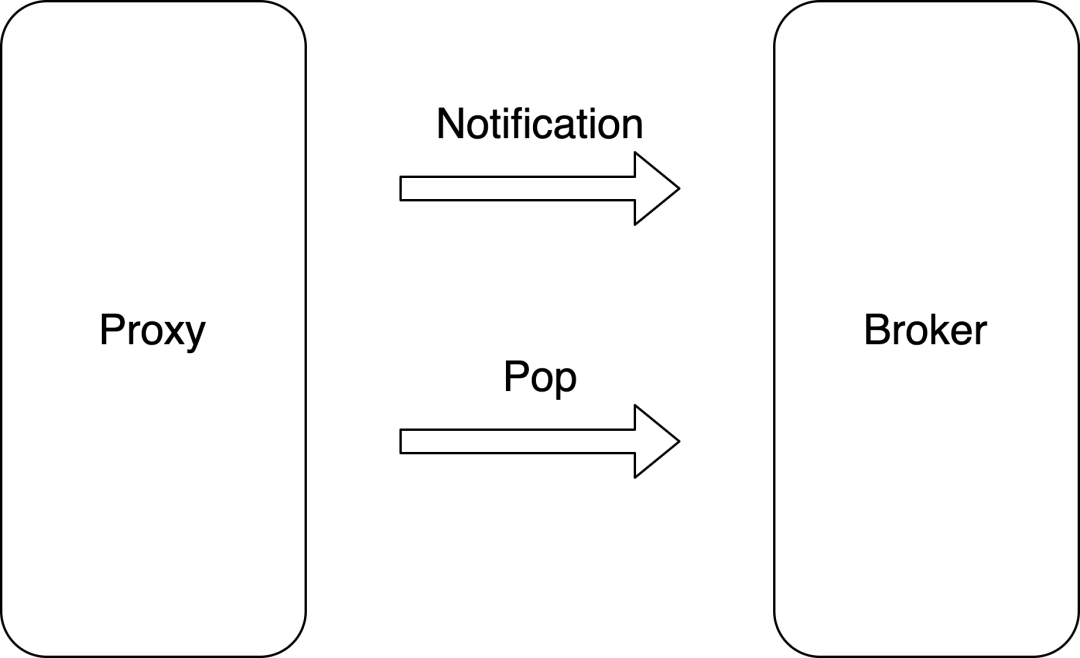

The notification task checks whether the broker has consumable messages and corresponds to the notification request. The request task consumes messages from the broker and corresponds to the pop request.

Initially, the long polling task executes a pop request to ensure efficient processing when there is a backlog of messages. If a message is obtained, the result is returned and the task ends. If no message is obtained and there is still polling time remaining, an asynchronous notification task is submitted to each broker.
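The first phase of a long polling task (pop once, then fan out notifications) can be sketched as follows. The interface `NotifyingBroker`, its methods `pop()` and `notification(...)`, and the class `LongPollingStart` are hypothetical names for illustration, not RocketMQ APIs; for simplicity the initial pop goes to the first broker in the list.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical broker abstraction; method names are illustrative, not RocketMQ APIs.
interface NotifyingBroker {
    Optional<String> pop();                                      // request task
    CompletableFuture<Boolean> notification(long timeoutMillis); // notification task
}

// In-memory stand-in used only for this example.
class QueueBroker implements NotifyingBroker {
    final ConcurrentLinkedQueue<String> messages = new ConcurrentLinkedQueue<>();

    public Optional<String> pop() {
        return Optional.ofNullable(messages.poll());
    }

    public CompletableFuture<Boolean> notification(long timeoutMillis) {
        return CompletableFuture.completedFuture(!messages.isEmpty());
    }
}

class LongPollingStart {
    final List<CompletableFuture<Boolean>> pendingNotifications = new ArrayList<>();

    Optional<String> start(List<? extends NotifyingBroker> brokers, long remainingMillis) {
        // Pop first so that a backlog is served immediately.
        Optional<String> message = brokers.get(0).pop();
        if (message.isPresent() || remainingMillis <= 0) {
            return message; // got a message, or no polling time left: the task ends
        }
        // Nothing popped and time remains: ask every broker asynchronously whether it has messages.
        for (NotifyingBroker broker : brokers) {
            pendingNotifications.add(broker.notification(remainingMillis));
        }
        return Optional.empty();
    }
}
```

Because the pop comes first, a consumer with a backlog never pays for the notification round trip; the fan-out only happens in the low-traffic case the mechanism targets.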

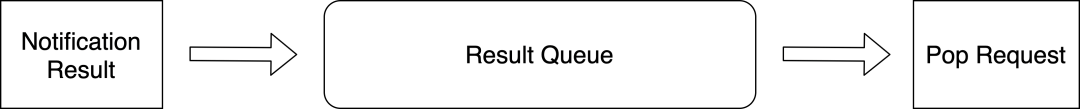

When a notification task returns and no message exists, the long polling task is marked as completed. If the broker does have messages, the result is added to a queue and a consumption task is started. The queue caches multiple returned results so that they can be retried later. With a single proxy, one notification result reporting a message is enough for the proxy to pull it. With multiple proxies, however, a notification result reporting a message does not guarantee that this client will retrieve it, because clients on other proxies may consume the message first. The queue keeps the other results available for exactly this situation.

The consumption task takes a result from this queue. If the queue is empty, the task returns immediately. A consumption task is triggered only when a notification task reports that a broker has messages, so an empty queue means that another concurrent consumption task has already processed that result.

After the consumption task obtains a result from the queue, it acquires a lock so that each long polling task has at most one consumption task in progress. This avoids pulling extra messages that would go unprocessed.

If a message is obtained or the long polling period ends, the task is marked as complete and the result is returned. If no message is obtained (for example, because other clients consumed it concurrently), the system submits another asynchronous notification task for that route and tries to consume again.
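The notification, queue, lock, and retry steps above can be combined into one sketch of a long polling task. All names here (`PopBroker`, `InMemoryPopBroker`, `PopWithNotifyTask`, `submitNotification`) are hypothetical, and the broker is an in-memory stand-in; this illustrates the described control flow under those assumptions and is not the RocketMQ proxy implementation.

```java
import java.util.Optional;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical broker abstraction; method names are illustrative, not RocketMQ APIs.
interface PopBroker {
    Optional<String> pop();                                      // request task
    CompletableFuture<Boolean> notification(long timeoutMillis); // notification task
}

// In-memory stand-in used only for this example.
class InMemoryPopBroker implements PopBroker {
    final ConcurrentLinkedQueue<String> messages = new ConcurrentLinkedQueue<>();

    public Optional<String> pop() {
        return Optional.ofNullable(messages.poll());
    }

    // Completes at once if messages exist; otherwise stays suspended
    // (a real broker would complete with false when the timeout expires).
    public CompletableFuture<Boolean> notification(long timeoutMillis) {
        if (messages.isEmpty()) {
            return new CompletableFuture<>();
        }
        return CompletableFuture.completedFuture(true);
    }
}

// One long polling task in the pop-with-notify scheme (simplified sketch).
class PopWithNotifyTask {
    // Brokers whose notification reported messages, cached for later attempts.
    private final ConcurrentLinkedQueue<PopBroker> ready = new ConcurrentLinkedQueue<>();
    // Ensures at most one consumption task is in progress for this long polling task.
    private final ReentrantLock consumeLock = new ReentrantLock();
    private final long deadlineMillis;
    final CompletableFuture<Optional<String>> result = new CompletableFuture<>();

    PopWithNotifyTask(long pollingMillis) {
        this.deadlineMillis = System.currentTimeMillis() + pollingMillis;
    }

    // Submits an asynchronous notification task to one broker.
    void submitNotification(PopBroker broker) {
        long remaining = deadlineMillis - System.currentTimeMillis();
        if (remaining <= 0) {
            result.complete(Optional.empty()); // the long polling period has ended
            return;
        }
        broker.notification(remaining).thenAccept(hasMessage -> {
            if (!hasMessage) {
                result.complete(Optional.empty()); // notification expired with no message
            } else {
                ready.offer(broker);
                consume();
            }
        });
    }

    // Consumption task: take one cached result and pop from that broker.
    private void consume() {
        PopBroker broker = ready.poll();
        if (broker == null) {
            return; // another consumption task already handled this result
        }
        boolean retry = false;
        consumeLock.lock();
        try {
            if (result.isDone()) {
                return; // a message was already delivered, or time ran out
            }
            Optional<String> message = broker.pop();
            if (message.isPresent()) {
                result.complete(message);
            } else {
                retry = true; // e.g. another client consumed the message first
            }
        } finally {
            consumeLock.unlock();
        }
        if (retry) {
            submitNotification(broker); // notify the same route again and retry
        }
    }
}
```

To try it, add a message to one `InMemoryPopBroker`, call `submitNotification` for every broker, and `result` completes with that message even though the other broker stays empty. The retry is submitted after the lock is released so that a synchronously completed notification cannot re-enter the consumption step while the lock is held.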

When there are many requests, the pop with notify mechanism is unnecessary, and we can use the original solution, in which the pop request long-polls the broker directly. The question is how to switch adaptively between the two. Currently, the decision is based on the number of pop requests counted by the proxy: if it is below a certain threshold, requests are considered few and pop with notify is used; otherwise, pop long polling is used.
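A minimal sketch of such a switch, assuming the proxy counts pop requests in fixed time windows and decides from the previous window's count. The class `PollingModeSelector`, the window length, and the threshold are hypothetical; the article does not specify how the counting is done.

```java
// Hypothetical selector; window length and threshold are illustrative, not RocketMQ defaults.
class PollingModeSelector {
    enum Mode { POP_WITH_NOTIFY, POP_LONG_POLLING }

    private final long threshold;    // requests per window below which traffic counts as "few"
    private final long windowMillis;
    private long windowStart = 0;
    private long currentCount = 0;
    private long previousCount = 0;

    PollingModeSelector(long threshold, long windowMillis) {
        this.threshold = threshold;
        this.windowMillis = windowMillis;
    }

    // Records one pop request seen by this proxy and chooses the mode for it.
    synchronized Mode onPopRequest(long nowMillis) {
        if (nowMillis - windowStart >= windowMillis) {
            // Roll the window; if more than one window passed, the previous one was idle.
            previousCount = (nowMillis - windowStart < 2 * windowMillis) ? currentCount : 0;
            currentCount = 0;
            windowStart = nowMillis;
        }
        currentCount++;
        return previousCount < threshold ? Mode.POP_WITH_NOTIFY : Mode.POP_LONG_POLLING;
    }
}
```

Deciding from the last complete window keeps the mode stable within a window; the current partial count would make early requests in a busy window look like low traffic.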

This decision is made from the perspective of a single proxy. Therefore, if consumption requests are unevenly distributed across proxies, different proxies may reach different decisions.

To further tune long polling and observe its effect, we designed a set of metrics that record the performance and loss of real-time long polling.

In the latest Apache RocketMQ server, the minimum long polling period is 5 seconds: long polling is triggered only when the polling period exceeds 5 seconds. This value can be changed through ProxyConfig#grpcClientConsumerMinLongPollingTimeoutMillis.

For SimpleConsumers, you can use the awaitDuration field to adjust the long polling period.

```java
// `provider`, `clientConfiguration`, `consumerGroup`, `topic`, and `filterExpression`
// are assumed to be defined earlier.
// Example value: long polling is triggered only when the period exceeds 5 seconds.
Duration awaitDuration = Duration.ofSeconds(30);
SimpleConsumer consumer = provider.newSimpleConsumerBuilder()
    .setClientConfiguration(clientConfiguration)
    .setConsumerGroup(consumerGroup)
    // Set the await duration for long polling.
    .setAwaitDuration(awaitDuration)
    .setSubscriptionExpressions(Collections.singletonMap(topic, filterExpression))
    .build();
```

With this design, we have built a real-time consumption solution on top of the stateless pop consumption mode. It avoids false empty responses and keeps consumption real-time while the client remains stateless. Furthermore, compared with the original long polling scheme of PushConsumer, it significantly reduces the number of invalid requests on the user side and lowers network overhead.
