By Huaixin Chang and Tianchen Ding

In the first and second parts of the series, we have introduced the optimization effect and evaluated the impact. This article shows the detailed simulation results and gives conclusions and suggestions by analyzing the results of these different scenarios. It also introduces collection and simulation tools to enable readers to evaluate their business scenarios.

The number of cgroups in the whole system is m, and the cgroups split the total CPU resource of 100% equally (quota = 1/m). Each cgroup generates computation demand according to the same rules (independent and identically distributed) and submits it to the CPU for execution.

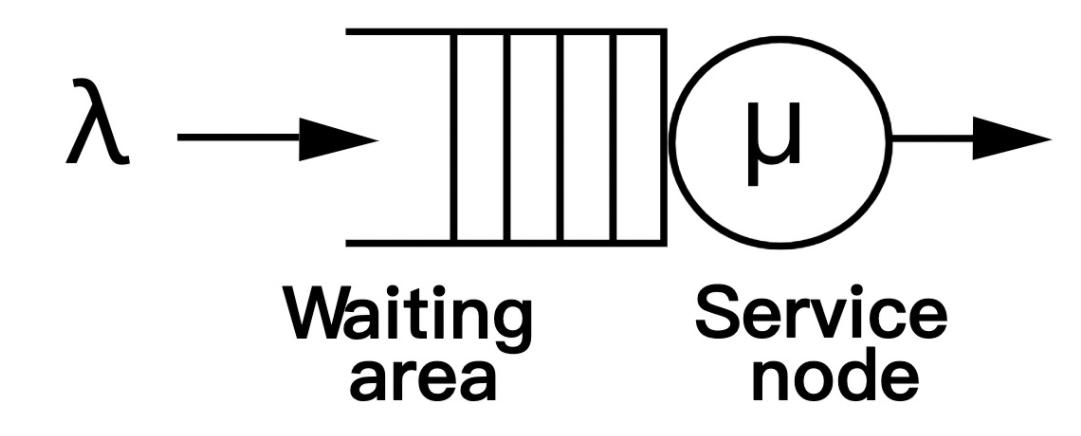

Following queuing theory, we treat each cgroup as a customer and the CPU as the service desk. The service time of each customer is limited by its quota. To simplify the model, we discretize time and fix the arrival interval of all customers to a constant. Within one such interval, the CPU can serve computation demand of at most 100%. We call this interval a period.

Then, we need to define the service time of each customer in a period. We assume the computation demand generated by the customer is independently and identically distributed, with an average of u_avg times its quota. The customer's unmet computation demand in each period will be accumulated. The service time it submits to the service desk in each period depends on its computation demand and the maximum CPU time allowed by the system (namely its quota plus the token accumulated in the previous periods).

Finally, there is an adjustable parameter buffer in the CPU Burst technology, which indicates the upper limit of the token allowed to accumulate. It determines the instantaneous burst capability of each cgroup, and we denote its size by b times the quota.
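The per-period bookkeeping described above can be sketched in a few lines of Python. This is only an illustration of the model as stated in the text, not the code of the authors' simulator: the class name `Cgroup`, its fields, and the `step` method are all hypothetical, and the sketch assumes the CPU always has room to serve what the cgroup is allowed to request (contention between cgroups is ignored).

```python
from dataclasses import dataclass


@dataclass
class Cgroup:
    """State of one cgroup in the discrete-period model (illustrative names)."""
    quota: float          # CPU share granted per period, e.g. 1/m
    buffer: float         # upper limit on saved tokens, b * quota
    tokens: float = 0.0   # unused quota carried over from earlier periods
    backlog: float = 0.0  # accumulated demand that has not been served yet

    def step(self, demand: float) -> float:
        """Advance one period and return the CPU time used in it."""
        self.backlog += demand
        allowed = self.quota + self.tokens   # quota plus saved tokens
        served = min(self.backlog, allowed)
        self.backlog -= served
        # Unused allowance is saved for later, capped at the buffer size.
        self.tokens = min(allowed - served, self.buffer)
        return served


cg = Cgroup(quota=0.1, buffer=0.2)
for demand in [0.0, 0.0, 0.0, 0.5, 0.0]:
    print(round(cg.step(demand), 3))
```

With a quota of 0.1 and a buffer of 0.2, three idle periods fill the bucket, so the burst of 0.5 is served as 0.3 in its own period and 0.1 in the next one, with the rest left in the backlog. Passing `float("inf")` as the buffer corresponds to b = ∞.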

We have made the following settings for the parameters defined above:

| Parameter | Description | Value |
| --- | --- | --- |
| Distribution | Distribution from which computation demand is generated | Negative exponential, Pareto |
| u_avg | Average generated computation demand (relative to quota) | 10%-90% |
| m | Number of cgroups (containers) | 10, 20, 30 |
| b | Buffer size of the token bucket (multiplier relative to quota) | 100%, 200%, ∞ |

The negative exponential distribution is one of the most common and most used distributions in queuing theory models. Its density function is $f(x) = \lambda e^{-\lambda x}$, where $x \ge 0$ and the mean $1/\lambda$ corresponds to the average demand u_avg.
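As a rough illustration, demand with a negative exponential distribution can be drawn with Python's standard `random.expovariate`, which takes the rate (the reciprocal of the mean). The helper name `exp_demand` and the choice of m = 10 and u_avg = 30% are assumptions made for this sketch, which takes the mean demand to be u_avg times the quota.

```python
import random


def exp_demand(u_avg: float, quota: float, rng: random.Random) -> float:
    """Draw one period's demand with mean u_avg * quota (hypothetical helper)."""
    mean = u_avg * quota
    return rng.expovariate(1.0 / mean)  # expovariate expects the rate 1/mean


rng = random.Random(0)
quota = 1 / 10                      # m = 10 cgroups
samples = [exp_demand(0.3, quota, rng) for _ in range(100_000)]
sample_mean = sum(samples) / len(samples)
print(f"sample mean = {sample_mean:.4f}, expected about {0.3 * quota:.4f}")
```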

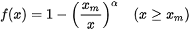

The Pareto distribution is common in computer scheduling systems. It can model a long latency tail, which highlights the effect of CPU Burst. Its density function has the standard form $f(x) = \frac{a\, x_{\min}^{a}}{x^{a+1}}$ for $x \ge x_{\min}$. We set its parameters to suppress the tail of the distribution, so that the maximum computation demand that can be generated when u_avg = 30% is about 500%.
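A minimal sketch of Pareto-distributed demand, using the standard library's `random.paretovariate`, is shown below. The shape value `alpha = 3.0` is an arbitrary placeholder, not the value used by the authors, and the helper `pareto_demand` is hypothetical. The scale is chosen so that the mean equals u_avg times the quota, using the Pareto mean alpha * x_min / (alpha - 1), which holds for alpha > 1. Any cap on the maximum demand is not modeled here.

```python
import random


def pareto_demand(u_avg: float, quota: float, alpha: float,
                  rng: random.Random) -> float:
    """Draw one period's demand from a Pareto distribution (hypothetical helper)."""
    mean = u_avg * quota
    x_min = mean * (alpha - 1) / alpha       # scale that yields the wanted mean
    return x_min * rng.paretovariate(alpha)  # paretovariate returns values >= 1


rng = random.Random(0)
alpha = 3.0                                  # placeholder shape, an assumption
quota = 1 / 10
draws = [pareto_demand(0.3, quota, alpha, rng) for _ in range(200_000)]
draw_mean = sum(draws) / len(draws)
print(f"sample mean = {draw_mean:.4f}, largest draw = {max(draws):.3f}")
```

A smaller shape value gives a heavier tail and therefore larger occasional bursts for the same mean.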

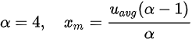

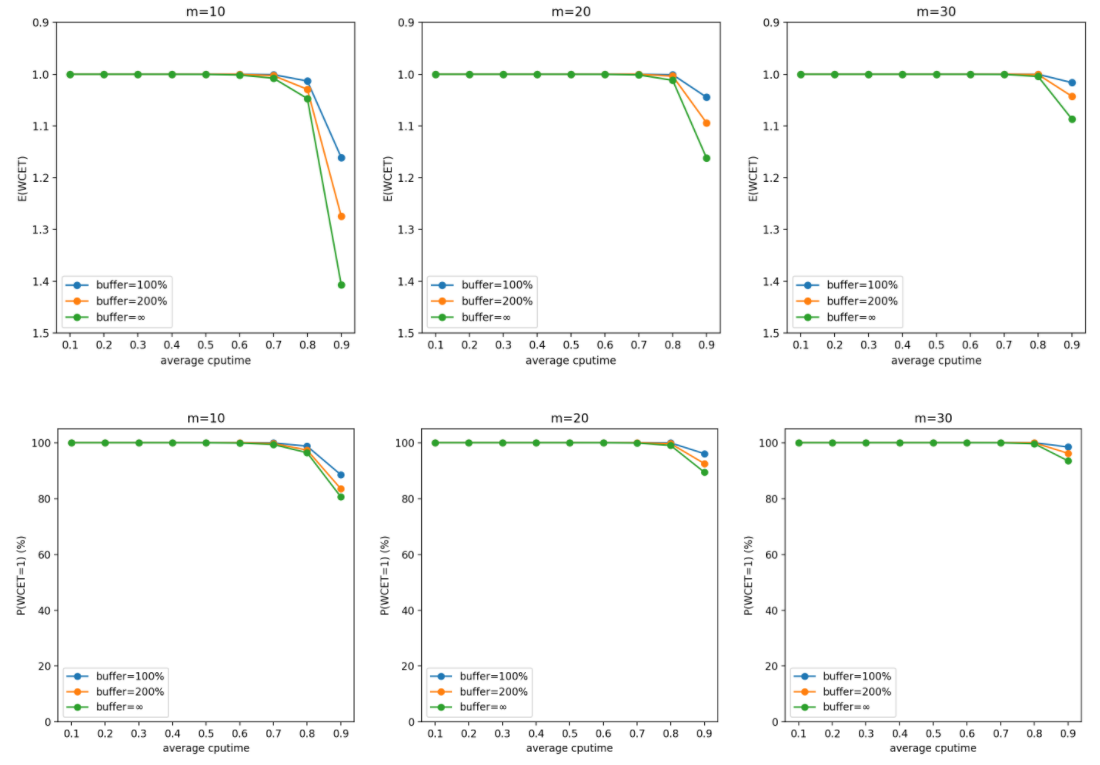

The results of the Monte Carlo simulation based on the preceding parameter settings are shown below. For easier reading, the y-axis of the first group of charts (WCET expectation) is reversed. The second group of charts (probability that WCET equals 1) shows the probability that the real-time performance of the schedule is guaranteed, expressed as a percentage.

A higher u_avg (load of computation demand) and a lower m (number of cgroups) result in a larger WCET. The former is straightforward. The latter happens because, with more independent tasks, the total demand concentrates more tightly around its average. Tasks that exceed their quota and tasks that stay below it and free up CPU time are more likely to complement each other.
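The complementing effect can be seen in a small Monte Carlo experiment. The sketch below is not the authors' simulator and does not compute WCET. It only estimates how often the combined demand of m cgroups in a single period exceeds 100% of the CPU, assuming negative exponential demand with mean u_avg / m per cgroup. The function name `overload_probability` and the choice of u_avg = 70% are assumptions.

```python
import random


def overload_probability(m: int, u_avg: float,
                         trials: int = 10_000, seed: int = 0) -> float:
    """Estimate P(total demand of m cgroups exceeds 100% of the CPU)."""
    rng = random.Random(seed)
    mean_demand = u_avg / m   # each cgroup averages u_avg * quota, quota = 1/m
    overloaded = 0
    for _ in range(trials):
        total = sum(rng.expovariate(1.0 / mean_demand) for _ in range(m))
        if total > 1.0:
            overloaded += 1
    return overloaded / trials


results = {m: overload_probability(m, u_avg=0.7) for m in (10, 20, 30)}
for m, p in results.items():
    print(f"m={m}: P(total demand > 100%) ~ {p:.3f}")
```

The estimated probability drops as m grows even though the average total load stays at 70%, which is the averaging effect described above.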

Increasing the buffer makes CPU Burst more effective, and the optimization benefit for a single task becomes more pronounced. However, it also increases WCET, which means more interference with adjacent tasks. This is also an intuitive conclusion.
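The first half of this trade-off, the benefit for a single task, can be sketched as follows. The code simulates one cgroup in isolation with negative exponential demand and counts the periods that end with unmet demand for several buffer sizes. It does not model the interference with adjacent tasks, and the function name `throttled_fraction`, the normalization of the quota to 1, and u_avg = 50% are assumptions of this sketch rather than settings from the authors' tool.

```python
import random


def throttled_fraction(u_avg: float, b: float,
                       periods: int = 50_000, seed: int = 0) -> float:
    """Fraction of periods that end with unmet demand for a single cgroup."""
    rng = random.Random(seed)
    quota = 1.0                   # demand is measured relative to the quota
    buffer = b * quota            # token limit, b times the quota
    tokens = 0.0
    backlog = 0.0
    throttled = 0
    for _ in range(periods):
        backlog += rng.expovariate(1.0 / (u_avg * quota))
        allowed = quota + tokens
        served = min(backlog, allowed)
        backlog -= served
        tokens = min(allowed - served, buffer)
        if backlog > 0:
            throttled += 1
    return throttled / periods


fractions = [throttled_fraction(0.5, b) for b in (0.0, 1.0, 2.0, float("inf"))]
for b, frac in zip((0.0, 1.0, 2.0, float("inf")), fractions):
    print(f"b={b}: throttled in {frac:.2%} of periods")
```

Because every run uses the same seed and therefore the same demand sequence, a larger buffer can only reduce the number of throttled periods in this sketch.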

When setting the buffer size, we recommend determining it based on the computation requirements (including distribution and mean), the number of containers for specific business scenarios, and your requirements. If you want to increase the throughput of the overall system and optimize the performance of the container when the average load is low, you can increase the buffer. Conversely, if you want to ensure the stability and fairness of scheduling in the case of high overall load to reduce the impact on the container, you can reduce the buffer.

In general, CPU Burst does not have a significant impact on adjacent containers in scenarios where the average CPU utilization is lower than 70%.

After finishing the boring data and conclusions, we can move on to the question that may concern many readers. Will CPU Burst affect the business scenario? We modified the tool used in the Monte Carlo simulation to help test the specific impact in real-world scenarios.

You can obtain the tools at this link. Detailed instructions are attached in a README. Let's look at a specific example.

Let's say a person wants to deploy ten containers on the server for the same business. This person starts a container to run the business normally, binds it to the cgroup named cg1, and does not set throttling to obtain the real performance of the business.

Then, call sample.py to collect the data. (*For demonstration purposes, we only collected 1000 times. We recommend collecting data more times if the conditions allow.)

The data is stored in ./data/cg1_data.npy. The final output indicates that the business occupies about 6.5% of the CPU on average, so the total average CPU utilization is about 65% when ten containers are deployed. (The variance is also printed for reference. A larger variance means more benefit from CPU Burst.)
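The arithmetic behind these numbers is simple enough to sketch. The snippet below does not read the .npy file or reproduce the output of sample.py. It uses a handful of made-up usage samples, chosen so that their mean is 6.5%, and a hypothetical helper `summarize` to show how a per-container mean scales to a total utilization for ten containers.

```python
import statistics


def summarize(samples, containers):
    """Return (mean, variance, projected total) for per-period CPU usage."""
    mean = statistics.fmean(samples)
    variance = statistics.pvariance(samples)
    return mean, variance, mean * containers


# Hypothetical samples (fractions of the whole CPU), not collected data.
usage = [0.03, 0.05, 0.06, 0.08, 0.105]
mean, variance, total = summarize(usage, containers=10)
print(f"mean={mean:.3f} variance={variance:.5f} total={total:.2f}")
```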

Then, this person uses simu_from_data.py to calculate the impact of setting the buffer to 200% when configuring ten cgroups in the same scenario as cg1.

According to the simulation results, enabling CPU Burst has almost no negative impact on the containers in this business scenario. Thus, this person can rest assured when using CPU Burst.

Please visit the repository link for more information!

Tianchen Ding from Alibaba Cloud, core member of Cloud Kernel SIG in the OpenAnolis community.

Huaixin Chang from Alibaba Cloud, core member of Cloud Kernel SIG in the OpenAnolis community.

SIG address: https://openanolis.cn/sig?lang=en
