Remote Procedure Call (RPC) is a computer communication protocol that allows a program running on one computer to call a subprogram on another computer without the programmer explicitly coding the remote interaction. In the object-oriented programming paradigm, RPC is referred to as remote invocation or remote method invocation (RMI). In simple terms, RPC is a way to invoke a known remote procedure over the network. RPC is a design concept rather than a specific technology. RPC involves three key points:

These three key points constitute a simple RPC framework. The Go programming language (Golang) ships a simple RPC framework whose source code is located in src/net/rpc. Its implementation is simple:

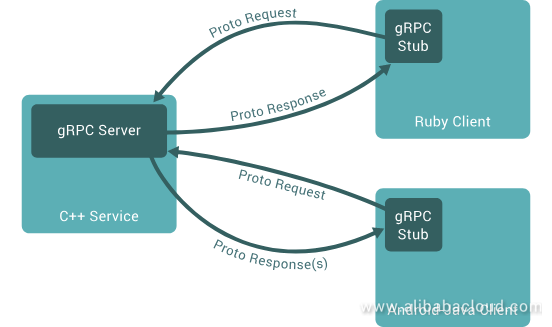
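To make this concrete, here is a minimal sketch using the standard net/rpc package. The Arith service, the Args type, and the callMultiply helper are illustrative names, not code from PouchContainer; net.Pipe stands in for a real network connection so the example runs in a single process. The caller only names the procedure ("Arith.Multiply"), and net/rpc takes care of encoding, transport, and decoding.

```go
package main

import (
	"fmt"
	"net"
	"net/rpc"
)

// Args is the request type. net/rpc encodes arguments with encoding/gob,
// so the type and its fields must be exported.
type Args struct {
	A, B int
}

// Arith is the service. Exported methods of the form
// func (t *T) Method(args *Args, reply *int) error become remote procedures.
type Arith int

// Multiply executes on the server side of the connection.
func (t *Arith) Multiply(args *Args, reply *int) error {
	*reply = args.A * args.B
	return nil
}

// callMultiply connects a client and a server over an in-memory pipe
// and invokes Arith.Multiply by name, as a remote caller would.
func callMultiply(a, b int) (int, error) {
	server := rpc.NewServer()
	if err := server.Register(new(Arith)); err != nil {
		return 0, err
	}

	clientConn, serverConn := net.Pipe()
	go server.ServeConn(serverConn) // serves until the connection is closed

	client := rpc.NewClient(clientConn)
	defer client.Close()

	var product int
	if err := client.Call("Arith.Multiply", &Args{A: a, B: b}, &product); err != nil {
		return 0, err
	}
	return product, nil
}

func main() {
	product, err := callMultiply(6, 7)
	if err != nil {
		fmt.Println("rpc error:", err)
		return
	}
	fmt.Println("6 * 7 =", product)
}
```

Swapping net.Pipe for a net.Listener plus server.Accept, and rpc.NewClient for rpc.Dial, turns the same code into a networked service.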

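The official Go RPC framework is insufficient for large projects; its documentation describes net/rpc as frozen and not accepting new features. Golang officially recommends the gRPC framework, which is maintained as an open-source community project. gRPC provides all the basic functionality an RPC framework needs and makes good use of Go's language features. You can learn a lot just by reading its source code.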

As just mentioned, Golang officially recommends gRPC. What are the advantages of gRPC?

Source: https://grpc.io/

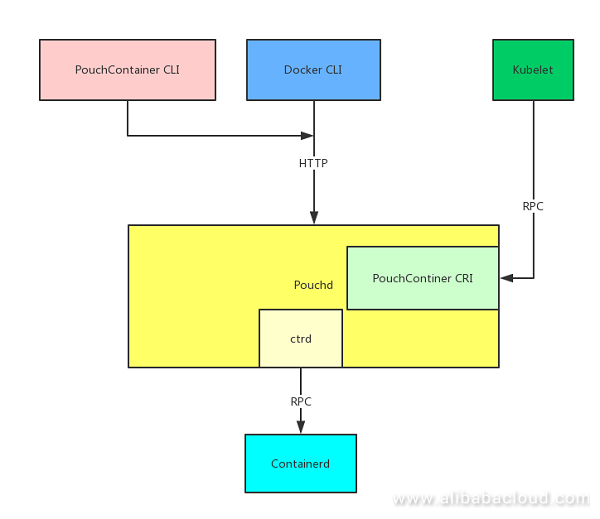

The PouchContainer community provides a clear architecture diagram. However, the diagram is extremely detailed, which makes it hard to use for illustrating RPC in PouchContainer. The following is a simplified figure:

In the figure, arrows point toward the server side. When the PouchContainer daemon (pouchd) starts, it can start two types of services: an HTTP server and an RPC server. PouchContainer was designed to be compatible with Docker, so both the Pouch CLI and the Docker CLI can send requests to the HTTP server started by pouchd to operate PouchContainer. In addition, PouchContainer works with the CNCF community and is compatible with Kubernetes: it implements the Container Runtime Interface (CRI) defined by the Kubernetes community. The CRI-related RPC server is started with the enable-cri option; by default, pouchd does not start it. At the bottom layer, containerd provides container services to PouchContainer, and the two interact through an RPC client.

In short, PouchContainer exposes an RPC server through the CRI Manager for the kubelet to invoke, and it invokes the bottom-layer containerd through an RPC client. Both sides use the gRPC framework.
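This dual role, server toward the kubelet and client toward containerd, can be sketched with net/rpc. All names here (Containerd, CreateContainer, CriManager, kubeletCall) are hypothetical stand-ins, RunPodSandbox merely borrows a CRI method name, and in-memory pipes replace real sockets; PouchContainer itself uses gRPC for both hops.

```go
package main

import (
	"fmt"
	"net"
	"net/rpc"
	"sync"
)

// Containerd is a hypothetical stand-in for the bottom-layer runtime.
type Containerd struct {
	mu      sync.Mutex
	created int
}

// CreateContainer returns a made-up container ID for the given name.
func (c *Containerd) CreateContainer(name string, id *string) error {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.created++
	*id = fmt.Sprintf("%s-%d", name, c.created)
	return nil
}

// CriManager plays pouchd's role: an RPC server for the kubelet that is
// itself an RPC client of containerd.
type CriManager struct {
	containerd *rpc.Client
}

// RunPodSandbox forwards the request downward through the RPC client.
func (m *CriManager) RunPodSandbox(name string, id *string) error {
	return m.containerd.Call("Containerd.CreateContainer", name, id)
}

// serve registers rcvr on a new server and returns a client connected to
// it over an in-memory pipe (standing in for a socket).
func serve(rcvr any) (*rpc.Client, error) {
	srv := rpc.NewServer()
	if err := srv.Register(rcvr); err != nil {
		return nil, err
	}
	clientConn, serverConn := net.Pipe()
	go srv.ServeConn(serverConn)
	return rpc.NewClient(clientConn), nil
}

// kubeletCall wires kubelet -> CriManager -> Containerd and makes one call.
func kubeletCall(name string) (string, error) {
	ctrdClient, err := serve(&Containerd{})
	if err != nil {
		return "", err
	}
	defer ctrdClient.Close()

	criClient, err := serve(&CriManager{containerd: ctrdClient})
	if err != nil {
		return "", err
	}
	defer criClient.Close()

	var id string
	err = criClient.Call("CriManager.RunPodSandbox", name, &id)
	return id, err
}

func main() {
	id, err := kubeletCall("nginx")
	if err != nil {
		fmt.Println("call failed:", err)
		return
	}
	fmt.Println("created", id)
}
```

The kubelet never talks to containerd directly; the middle layer is free to add its own logic before forwarding.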

The following is simplified code for starting the server:

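    // Start CRI service with CRI version: v1alpha2
    func runv1alpha2(daemonconfig *config.Config, containerMgr mgr.ContainerMgr, imageMgr mgr.ImageMgr) error {
        // ······

        criMgr, err := criv1alpha2.NewCriManager(daemonconfig, containerMgr, imageMgr)

        // ······

        server := grpc.NewServer()

        // the same criMgr implements both CRI services
        runtime.RegisterRuntimeServiceServer(server, criMgr)
        runtime.RegisterImageServiceServer(server, criMgr)

        // ······

        // service is set up in the elided code and serves the gRPC server above
        service.Serve()

        // ······
    }

The simplified code is similar to the demo officially provided by gRPC and consists of the following steps:

1. Create a gRPC server with grpc.NewServer().
2. Register the service implementations on the server; here criMgr implements both the CRI RuntimeService and ImageService.
3. Start serving requests with Serve.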

gRPC does much of the work for us. We only need to write proto files that define the services and then use tools to generate the corresponding server and client code; on the server side, we additionally carry out the three steps above. Due to limitations in Kubernetes and to service requirements, the CRI Manager needs to offer more services than the standard CRI defines, so the PouchContainer team extended the CRI. The steps are as follows:

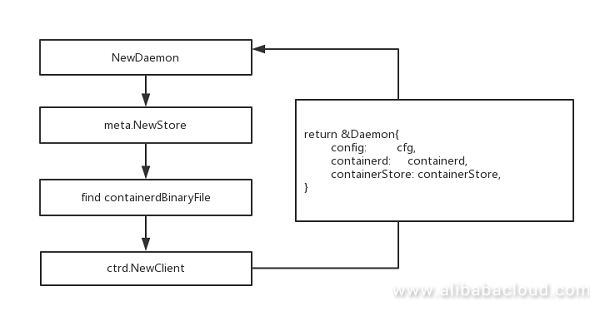

    protoc --proto_path=. --proto_path=../../../../../vendor/ --proto_path=${GOPATH}/src/github.com/google/protobuf/src --gogo_out=plugins=grpc:. *.proto

In the simplified PouchContainer architecture, pouchd interacts with containerd through ctrd. To understand this better, let's look at the NewDaemon workflow:

To understand what ctrd.NewClient does, let's look at its source code.

    // NewClient connects to containerd.
    func NewClient(homeDir string, opts ...ClientOpt) (APIClient, error) {
        // set default value for parameters
        copts := clientOpts{
            rpcAddr:                unixSocketPath,
            grpcClientPoolCapacity: defaultGrpcClientPoolCapacity,
            maxStreamsClient:       defaultMaxStreamsClient,
        }

        // apply caller-supplied options on top of the defaults
        for _, opt := range opts {
            if err := opt(&copts); err != nil {
                return nil, err
            }
        }

        client := &Client{
            lock: &containerLock{
                ids: make(map[string]struct{}),
            },
            watch: &watch{
                containers: make(map[string]*containerPack),
            },
            daemonPid:      -1,
            homeDir:        homeDir,
            oomScoreAdjust: copts.oomScoreAdjust,
            debugLog:       copts.debugLog,
            rpcAddr:        copts.rpcAddr,
        }

        // start new containerd instance.
        if copts.startDaemon {
            if err := client.runContainerdDaemon(homeDir, copts); err != nil {
                return nil, err
            }
        }

        // fill the pool with gRPC clients connected to containerd
        for i := 0; i < copts.grpcClientPoolCapacity; i++ {
            cli, err := newWrapperClient(copts.rpcAddr, copts.defaultns, copts.maxStreamsClient)
            if err != nil {
                return nil, fmt.Errorf("failed to create containerd client: %v", err)
            }
            client.pool = append(client.pool, cli)
        }

        logrus.Infof("success to create %d containerd clients, connect to: %s", copts.grpcClientPoolCapacity, copts.rpcAddr)

        // the scheduler picks a client from the pool for each request
        sched, err := scheduler.NewLRUScheduler(client.pool)
        if err != nil {
            return nil, fmt.Errorf("failed to create clients pool scheduler: %v", err)
        }
        client.scheduler = sched

        return client, nil
    }

The code can be understood by dividing it into the following steps:

1. Start the containerd daemon (runContainerdDaemon) if required.
2. Repeatedly create grpcClientPoolCapacity clients and add them to the client object pool. There are three key points:
   - newWrapperClient: creates the gRPC client object.
   - maxStreamsClient: the PouchContainer team verified that a grpc-go client supports at most 100 streams. gRPC is built on the HTTP/2 protocol, so each request is carried in its own HTTP/2 stream.
   - The client pool: because gRPC does not provide an official connection pool, PouchContainer defines its own pool to improve client concurrency and uses a scheduler to pick a client in its Get method. By default, PouchContainer provides a least recently used (LRU) scheduling algorithm, and you can also define other schedulers.

In fact, to avoid duplicated work, containerd provides an official client package that encapsulates the RPC client code, and newWrapperClient calls the containerd.New() method from that package. Therefore, ctrd contains less code than you might expect.

Because component decoupling was considered in the design of the PouchContainer architecture, the overall design is easy to understand from the architecture diagram. As a member of the container ecosystem, PouchContainer connects to the upper-layer Kubernetes through RPC and to the lower-layer containerd by reusing existing components. This lets PouchContainer focus on implementing its own features, such as rich containers.
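NewClient's `opts ...ClientOpt` parameter follows Go's functional-options pattern: defaults are set first, then each option mutates the settings and may reject bad input. The sketch below isolates that pattern. The option constructors, the validation rules, and the default values are hypothetical; NewClient uses its own unixSocketPath, defaultGrpcClientPoolCapacity, and defaultMaxStreamsClient.

```go
package main

import (
	"errors"
	"fmt"
)

// Hypothetical defaults for illustration only.
const (
	defaultRPCAddr                = "/run/containerd/containerd.sock"
	defaultGrpcClientPoolCapacity = 5
	defaultMaxStreamsClient       = 100
)

type clientOpts struct {
	rpcAddr                string
	grpcClientPoolCapacity int
	maxStreamsClient       int
}

// ClientOpt adjusts clientOpts and may reject an invalid value.
type ClientOpt func(*clientOpts) error

// WithRPCAddr is an illustrative option constructor.
func WithRPCAddr(addr string) ClientOpt {
	return func(o *clientOpts) error {
		if addr == "" {
			return errors.New("rpc address must not be empty")
		}
		o.rpcAddr = addr
		return nil
	}
}

// WithGrpcClientPoolCapacity is an illustrative option constructor.
func WithGrpcClientPoolCapacity(n int) ClientOpt {
	return func(o *clientOpts) error {
		if n <= 0 {
			return fmt.Errorf("pool capacity must be positive, got %d", n)
		}
		o.grpcClientPoolCapacity = n
		return nil
	}
}

// resolveOpts fills in defaults, then applies each option in order and
// stops at the first error, the same shape as the loop at the top of NewClient.
func resolveOpts(opts ...ClientOpt) (clientOpts, error) {
	copts := clientOpts{
		rpcAddr:                defaultRPCAddr,
		grpcClientPoolCapacity: defaultGrpcClientPoolCapacity,
		maxStreamsClient:       defaultMaxStreamsClient,
	}
	for _, opt := range opts {
		if err := opt(&copts); err != nil {
			return clientOpts{}, err
		}
	}
	return copts, nil
}

func main() {
	copts, err := resolveOpts(WithGrpcClientPoolCapacity(8))
	if err != nil {
		fmt.Println("bad option:", err)
		return
	}
	fmt.Printf("%+v\n", copts)
}
```

The benefit of this design is that new settings can be added without changing NewClient's signature, and callers only mention the settings they want to override.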


More Posts by Alibaba Cloud Native Community