By digoal

With mobile devices becoming popular, more and more services have spatiotemporal properties. For example, express delivery companies can now track the locations of packages and couriers. Now, objects have spatial properties, couriers have location properties, and vehicles have real-time location properties.

Then we have two major requirements:

1) Real-time object location tracking, for example, real-time querying of couriers near a point or in a polygonal region.

2) Recording and analyzing object location traces, for example, trace analysis based on a map and prediction and generation of optimal path based on routing algorithms.

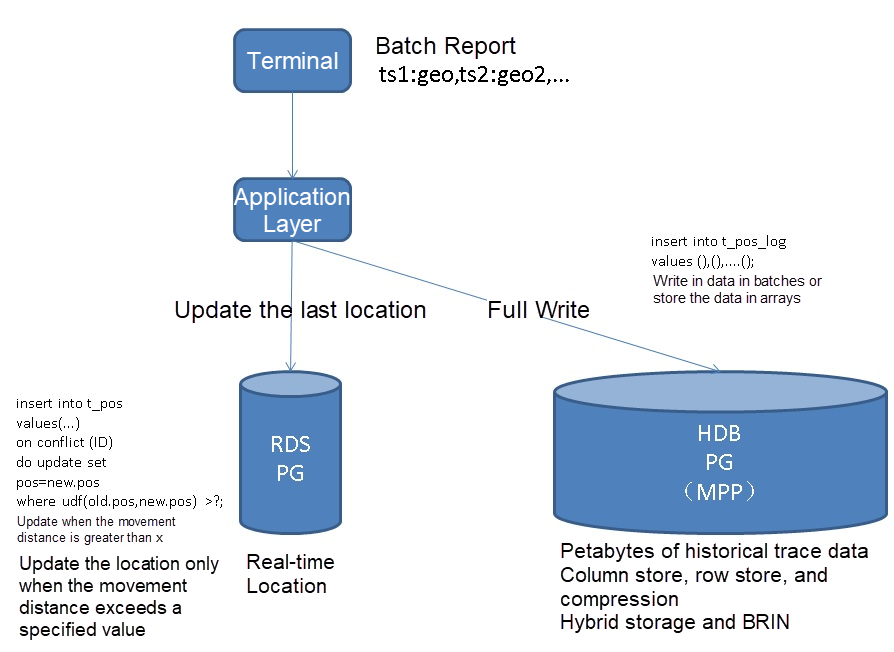

Take express delivery as an example. The GPS device reports a courier's trace in real time, writes the courier's locations into the tracking system, and stores the trace records in the trace analysis system.

Sometimes, the courier may stay in an area for a long time during the delivery process. For example, when the courier delivers packages in a residential community, the courier's reported locations may not change much. The location may not be updated on some occasions, considering the database update costs and location timeliness. For example, when the distance between the courier's last location and current location is less than 50 m, the location is not updated.

Dynamically updating locations prevents unnecessary database updates and improves overall throughput.

1) Create a table.

create table t_pos (

uid int primary key, -- 传感器、快递员、车辆、。。。对象ID

pos point, -- 位置

mod_time timestamp -- 最后修改时间

);

create index idx_t_pos_1 on t_pos using gist (pos); In a real-life environment, points in longitude and latitude can be stored using the PostGIS plugin's geometry data type.

create extension postgis;

create table t_pos (

uid int primary key, -- 传感器、快递员、车辆、。。。对象ID

pos geometry, -- 位置

mod_time timestamp -- 最后修改时间

);

create index idx_t_pos_1 on t_pos using gist (pos); 2) Report the location and automatically update the location according to the movement range.

For example, if the movement range is less than 50 m, the location is not updated.

insert into t_pos values (?, st_setsrid(st_makepoint($lat, $lon), 4326), now())

on conflict (uid)

do update set pos=excluded.pos, mod_time=excluded.mod_time

where st_distancespheroid(t_pos.pos, excluded.pos, 'SPHEROID["WGS84",6378137,298.257223563]') > ?; -- 超过多少米不更新 Generally, terminals report data in batches. For example, points collected every 10 s are reported. The data reported at a time may contain multiple points, which can be stored in PostgreSQL arrays.

create table t_pos_hist (

uid int, -- 传感器、快递员、车辆、。。。对象ID

pos point[], -- 批量上报的位置

crt_time timestamp[] -- 批量上报的时间点

);

create index idx_t_pos_hist_uid on t_pos_hist (uid); -- 对象ID

create index idx_t_pos_hist_crt_time on t_pos_hist ((crt_time[1])); -- 对每批数据的起始时间创建索引 If necessary, you can add an additional time field for partitioning.

Write and combine the location data. Update the location only when the movement is greater than 50 m.

If you use the point type in testing, run the following SQL statement:

insert into t_pos values (1, point(1,1), now())

on conflict (uid)

do update set pos=excluded.pos, mod_time=excluded.mod_time

where t_pos.pos <-> excluded.pos > 50; If you use the PostGIS geometry type in production, run the following SQL statement:

insert into t_pos values (1, st_setsrid(st_makepoint(120, 71), 4326), now())

on conflict (uid)

do update set pos=excluded.pos, mod_time=excluded.mod_time

where st_distancespheroid(t_pos.pos, excluded.pos, 'SPHEROID["WGS84",6378137,298.257223563]') > 50; First, generate location data of 100 million random spatial objects.

postgres=# insert into t_pos select generate_series(1,100000000), point(random()*10000, random()*10000), now();

INSERT 0 100000000

Time: 250039.193 ms (04:10.039) Run the following script to test the performance of dynamic location updates for 100 million spatial objects (not updated if the movement distance is less than 50 m).

vi test.sql

\set uid random(1,100000000)

insert into t_pos

select uid, point(pos[0]+random()*100-50, pos[1]+random()*100-50), now() from t_pos where uid=:uid

on conflict (uid)

do update set pos=excluded.pos, mod_time=excluded.mod_time

where t_pos.pos <-> excluded.pos > 50; Stress testing results: 216,000 points are dynamically updated per second; that is, 18.7 billion points are dynamically updated per day.

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 64 -j 64 -T 120

number of transactions actually processed: 26014936

latency average = 0.295 ms

latency stddev = 0.163 ms

tps = 216767.645838 (including connections establishing)

tps = 216786.403543 (excluding connections establishing)Write 50 records in each batch for each UID: the write speed is about 4.675 million points/s or 403.9 billion points/day.

Use dynamic SQL to write multiple tables during stress testing.

do language plpgsql $$

declare

begin

for i in 0..127 loop

execute 'create table t_pos_hist'||i||' (like t_pos_hist including all)';

end loop;

end;

$$;

create or replace function import_test(int) returns void as $$

declare

begin

execute format('insert into t_pos_hist%s values (%s, %L, %L)', mod($1, 128), $1,

array[point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1), point(1,1)] ,

array['2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10', '2018-01-01 10:10:10']);

end;

$$ language plpgsql strict;

vi test1.sql

\set uid random(1,100000000)

select import_test(:uid);

pgbench -M prepared -n -r -P 1 -f ./test1.sql -c 56 -j 56 -T 120

number of transactions actually processed: 11220725

latency average = 0.599 ms

latency stddev = 5.452 ms

tps = 93504.532256 (including connections establishing)

tps = 93512.274135 (excluding connections establishing) 1) Block range index (BRIN): A BRIN is created on the time series attribute field to achieve efficient retrieval, save index space, and accelerate the write performance.

2) Features of Alibaba Cloud HybridDB for PostgreSQL: sort key and MetaScan

Similar to BRIN, they are suitable for linear data, where block-level metadata (such as value range, mean, CNT, and SUM) can be created automatically for filtering.

3) Spatial Indexes: The GiST and SP-GiST spatial indexes are suitable for spatial data and other heterogeneous data.

4) Dynamic write combining: Automatically determines whether a combined update is required based on the location change information.

You can use the "insert on conflict do UPDATE" syntax to specify that an update is performed only when the movement distance exceeds N meters.

5) Multi-value types such as array, JSON, and KV.

Multi-value types are perfect for data with multi-value attributes, such as trace data reported in batches. Locations reported by a GPS terminal in batches usually lag behind the actual location. Data can be stored in array and JSON formats.

When data is stored in arrays, you can use UNNEST to fetch the data in the arrays to draw the trace during a subsequent trace analysis.

1) Dynamic location update: For 100 million tracked objects, the TPS is 216,000. Specifically, 216,000 points are updated dynamically per second; that is, 18.7 billion points are updated per day.

2) Trace write: The TPS is about 100,000. Specifically, 4.675 million points are written per second; that is, 403.9 billion points are written per day.

PostgreSQL-enabled Electronic Fences: Application Scenarios and Performance

digoal - December 16, 2020

digoal - May 16, 2019

Alibaba Cloud Native Community - April 13, 2023

digoal - September 17, 2019

Alibaba Cloud Native Community - March 2, 2026

Alibaba Developer - February 7, 2022

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More Real-Time Livestreaming Solutions

Real-Time Livestreaming Solutions

Stream sports and events on the Internet smoothly to worldwide audiences concurrently

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by digoal