Authors: Sun Jiang (Lin Lixiang), Yu Ce (Gong Zhigang), Fei Lian (Wang Zhiming), Yun Long (You Liang)

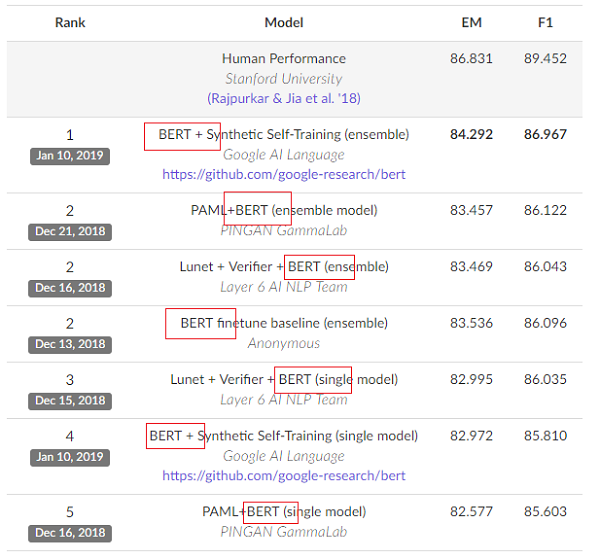

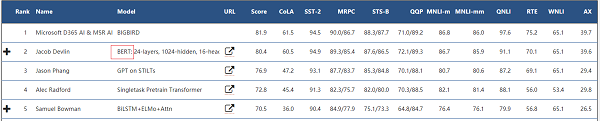

In 2018, the most ground-breaking achievement in natural language processing (NLP) was the BERT (Bidirectional Encoder Representations from Transformers) model developed by Google. As a new language representation model, BERT tops the leaderboards in areas such as question answering, language understanding, and language prediction, as shown in Figure 1 and Figure 2.

[Figure 1] SQuAD is a standard NLP question answering dataset built from a set of Wikipedia articles. Currently, the top 10 entries on the SQuAD2.0 leaderboard are all BERT-based models (Figure 1 shows only the top 5), and 16 of the top 20 models are based on BERT.

[Figure 2] GLUE is the General Language Understanding Evaluation benchmark. BERT, which achieved new state-of-the-art results on 11 NLP tasks, has dominated the GLUE rankings for a long time since its release. (BERT is currently in 2nd place, and the BIGBIRD model by Microsoft is in 1st place. No links with more details about BIGBIRD are available, although its name is said to derive from BERT BIG.)

BERT is considered a milestone in NLP, much as ResNet is in computer vision. Since the release of BERT, a wide range of NLP tasks can be built on top of the BERT model.

In short, NLP researchers who do not understand BERT are falling behind, and technology companies that rely heavily on NLP but do not apply BERT in production are lagging in productivity.

What makes BERT powerful? Let's brush away the mist and explore the mystery behind it.

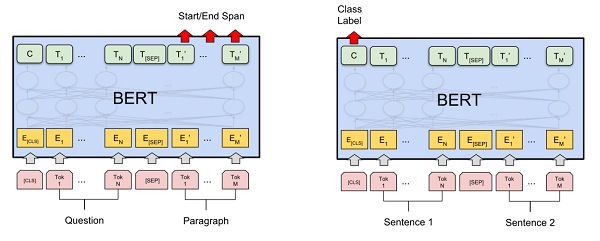

The BERT model is divided into a Pretrain model and a Finetune model. The Pretrain model is a general language model. The Finetune model serves all kinds of tasks, from question answering to language inference, by adding only an adaptation layer on top of the Pretrain model, without modifying the overall architecture for each task, as shown in Figure 3. This design makes it easy to adapt the BERT Pretrain model to specific NLP tasks (similar to the backbone models pre-trained on ImageNet in the CV field).

[Figure 3] The Finetune model used for SQuAD tasks, based on the BERT Pretrain model, is shown on the left. The Finetune model used for Sentence Pair Classification tasks, also based on the Pretrain model, is shown on the right. Both add a task-specific adaptation layer on top of the BERT Pretrain model.

Therefore, the power of BERT is mainly attributed to a Pretrain language model with excellent accuracy and robustness, and most of the computing workload also comes from the Pretrain model. It mainly relies on the following two techniques, both of which are extremely compute-intensive.

1. Bidirectional Transformer architecture

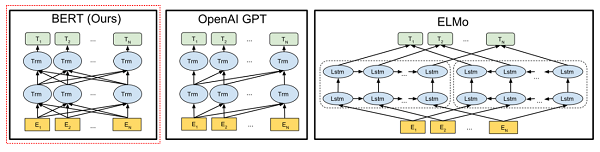

As shown in Figure 4, unlike other pre-training architectures, the BERT Pretrain model applies the Transformer to the corpus from left to right and from right to left simultaneously. This bidirectional technique can fully capture the contextual dependencies in the corpus, but it also greatly increases the demand on computing resources. (The Transformer is Google's landmark NLP work from 2017. It replaces the RNN and its variant LSTM, which were commonly used in NLP, with a full attention mechanism, greatly improving prediction accuracy. It is not covered in this article; interested readers can look it up separately.)

[Figure 4] Pretrain architecture comparison. OpenAI GPT adopts a left-to-right Transformer architecture, and ELMo adopts a cascade LSTM with parts from left to right and parts from right to left. BERT adopts a bidirectional Transformer architecture that performs simultaneously from left to right and from right to left.
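One way to picture the difference in Figure 4 is through the attention mask, that is, which positions each token is allowed to look at. The sketch below is a simplified illustration, not code from BERT or GPT: the function names are made up for this example, and real models apply such masks to the attention scores inside every Transformer layer.

```python
def left_to_right_mask(n):
    """mask[i][j] == 1 means position i may attend to position j.

    A left-to-right model only sees the current and earlier positions.
    """
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """A bidirectional model lets every position attend to every position."""
    return [[1] * n for _ in range(n)]


tokens = ["the", "man", "went", "home"]
masks = [
    ("left-to-right", left_to_right_mask(len(tokens))),
    ("bidirectional", bidirectional_mask(len(tokens))),
]
for name, mask in masks:
    print(name)
    for token, row in zip(tokens, mask):
        print(f"  {token:>5}: {row}")
```

The bidirectional mask has n × n active entries instead of roughly half that, which is one simple way to see why using context from both sides costs more computation.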

2. Random prediction of word and sentence

The BERT Pretrain model performs two unsupervised prediction tasks during its training iterations: word prediction and sentence prediction.

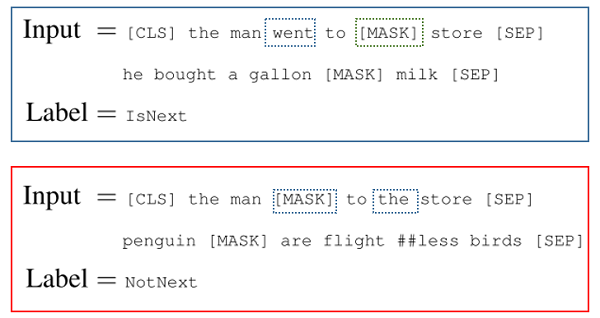

First, the word prediction task. The model performs a random MASK operation (Masked LM) on the corpus: 15% of the words in the corpus are randomly selected for prediction. During training, a selected word is replaced with the mask token 80% of the time, kept unchanged 10% of the time, and replaced with a random word 10% of the time, as shown in Figure 5.
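The 15% / 80% / 10% / 10% rule above can be sketched in a few lines. This is a simplified illustration rather than the BERT data pipeline: `mask_tokens`, the toy vocabulary, and the per-token coin flip are assumptions made for the example (a real pipeline typically caps the number of predictions per sequence, and the random replacement here may occasionally pick the original word).

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Return (corrupted_tokens, labels).

    labels[i] holds the original token at positions selected for
    prediction and None everywhere else.
    """
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= select_prob:
            continue  # not selected: left untouched, nothing to predict
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK  # 80%: replace with the mask token
        elif roll < 0.9:
            pass  # 10%: keep the original word
        else:
            corrupted[i] = rng.choice(vocab)  # 10%: random word
    return corrupted, labels


rng = random.Random(42)
sentence = "the man went to the store and bought a gallon of milk".split()
corrupted, labels = mask_tokens(sentence, vocab=sentence, rng=rng)
print(corrupted)
print(labels)
```

Because the selection is redrawn on every pass, the same sentence yields different prediction targets at different times, which is the effect illustrated by the blue and red boxes in Figure 5.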

Second, the sentence prediction task. For each selected sentence pair A and B, B is the real follow-up sentence of A (Label=IsNext) 50% of the time during training, while the other 50% of the time a sentence randomly selected from the corpus is used as the follow-up sentence of A (Label=NotNext), as shown in Figure 5.
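The 50/50 sampling for sentence prediction can be sketched as follows. This is an illustrative simplification: `make_sentence_pair` is a hypothetical helper, and it draws the random sentence from the same small list, whereas a real pipeline would usually draw it from elsewhere in a much larger corpus.

```python
import random


def make_sentence_pair(sentences, index, rng):
    """Build one (A, B, label) example for sentences[index].

    `index` must not point at the last sentence, because the true
    follow-up sentence is sentences[index + 1].
    """
    sentence_a = sentences[index]
    if rng.random() < 0.5:
        return sentence_a, sentences[index + 1], "IsNext"
    # Pick any sentence that is neither A nor its real follow-up.
    candidates = [s for i, s in enumerate(sentences) if i not in (index, index + 1)]
    return sentence_a, rng.choice(candidates), "NotNext"


corpus = [
    "the man went to the store",
    "he bought a gallon of milk",
    "penguins are flightless birds",
    "they live in the southern hemisphere",
]
rng = random.Random(7)
for _ in range(4):
    print(make_sentence_pair(corpus, 0, rng))
```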

[Figure 5] Example of input corpus for word and sentence random prediction. The blue and red boxes represent the random states of the same corpus input at different times. For the word prediction task, the word "went" in the blue box is the real data, but the word in the red box is masked. The situation of the word "the" in the red box is exactly the opposite. For the sentence prediction task, the sentence group in the blue box is real preceding and following sentences, while the sentence group in the red box is a random combination.

Randomly selecting words and sentences for prediction makes it possible to train on unlabeled data and effectively prevents the model from over-fitting. However, because only a fraction of the corpus is selected in each pass, many more iterations are needed for the model to digest all the corpus data, which puts significant pressure on computing resources.

In summary, the capability of the BERT Pretrain model is built on strong computing power. The BERT paper shows that training the BERT-Base Pretrain model (L=12, H=768, A=12, Total Parameters=110M, 1,000,000 iterations) requires one Cloud TPU to work for 16 days. Nvidia GPU accelerator cards, which are mainstream in deep learning, struggle to cope with such a huge amount of computation. Even with the Nvidia V100, currently the most powerful accelerator card, it takes a month or two to train a BERT-Base Pretrain model, and at least four to five months to train a Large model.

For most users who train BERT on GPUs, spending several months to train a single model is simply unaffordable.
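To see where the 110M figure comes from, the parameter count can be estimated from L and H. The sketch below is a back-of-the-envelope calculation under assumptions that are not stated in this article: a WordPiece vocabulary of 30,522 entries, 512 position embeddings, 2 segment types, and a feed-forward size of 4H. It counts the encoder and pooler only, not the pre-training output heads. The number of attention heads A does not change the total, because the heads split the same H × H projections.

```python
def bert_parameter_count(num_layers, hidden, vocab_size=30522,
                         max_positions=512, type_vocab=2):
    """Estimate encoder + pooler parameters for a BERT-style model."""
    ffn = 4 * hidden            # assumed feed-forward size
    layer_norm = 2 * hidden     # scale and bias
    # Token, position and segment embeddings, followed by a LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    # Query, key, value and output projections (weights + biases) + LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + layer_norm
    # Two dense layers (weights + biases) + LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + layer_norm
    pooler = hidden * hidden + hidden
    return embeddings + num_layers * (attention + feed_forward) + pooler


base = bert_parameter_count(num_layers=12, hidden=768)
print(f"BERT-Base: {base / 1e6:.1f}M parameters")
```

Under these assumptions roughly three quarters of the parameters sit in the 12 Transformer layers, and every one of them is updated in each of the 1,000,000 iterations.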

The Alibaba Cloud elastic AI team leverages the powerful infrastructure resources of Alibaba Cloud to build highly competitive AI innovation solutions. To address the training pain points of BERT, the team created Perseus-BERT, which greatly improves the training speed of the BERT Pretrain model. On a cloud instance with eight V100 cards, a BERT model can be trained in less than 4 days.

How does Perseus-BERT create the best BERT training practice on the cloud? The following sections describe the techniques behind it.

Perseus is a unified distributed communication framework designed by the team. It is a distributed training framework optimized for Alibaba Cloud infrastructure and built to address the pain points of training on the cloud. The single-machine training code of mainstream AI frameworks can be easily embedded into it, which efficiently improves multi-machine scalability while preserving training accuracy. For details about how to use the Perseus distributed framework, see another article by the team, "Perseus: Unified Deep Learning Distributed Communication Framework".
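As background, the core idea that data-parallel training frameworks of this kind build on is averaging gradients across workers before each update. The sketch below only illustrates that idea with plain Python lists; it is not the Perseus implementation, and `allreduce_average` and `data_parallel_sgd_step` are hypothetical names for this example.

```python
def allreduce_average(per_worker_grads):
    """Average gradients element-wise across workers.

    In a real framework this is done by an allreduce over the network;
    here every worker's gradient is simply a list of floats.
    """
    num_workers = len(per_worker_grads)
    return [sum(values) / num_workers for values in zip(*per_worker_grads)]


def data_parallel_sgd_step(weights, per_worker_grads, lr):
    """Apply one SGD step using the averaged gradient.

    Every worker applies the same update, so all replicas stay in sync.
    """
    averaged = allreduce_average(per_worker_grads)
    return [w - lr * g for w, g in zip(weights, averaged)]


weights = [1.0, -2.0]
worker_grads = [[0.5, 1.0], [1.5, 3.0]]  # gradients from two workers
print(allreduce_average(worker_grads))
print(data_parallel_sgd_step(weights, worker_grads, lr=0.5))
```

How efficiently this averaging step is communicated between machines is what largely determines multi-machine scalability.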

For the TensorFlow implementation of BERT, Perseus provides the Horovod Python API, which makes it easy to embed the BERT Pretrain code. The basic process is as follows:

Note that the custom Optimizer in the BERT source code computes gradients with the following API:

```python
grads = tf.gradients(loss, tvars)
```

The DistributeOptimizer of Perseus inherits from the standard Optimizer implementation and performs the distributed gradient update computation in the compute_gradients API. Therefore, the way grads is obtained is adjusted as follows:

```python
# Let the distributed optimizer compute the gradients, then keep only the
# gradient from each (gradient, variable) pair.
grads_and_vars = optimizer.compute_gradients(loss, tvars)
grads = [grad for grad, _ in grads_and_vars]
```

Mixed precision

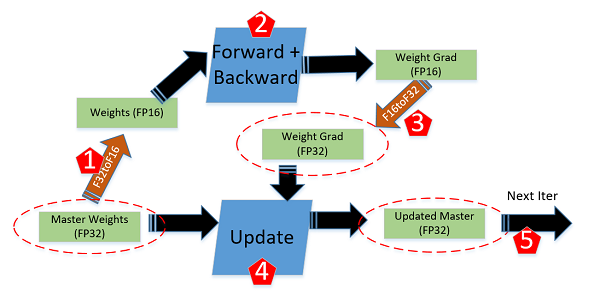

In deep learning, mixed precision training refers to the training mode in which float32 and float16 are mixed. The general mixed precision mode is shown in Figure 6.

[Figure 6] Example of mixed precision training. In the Forward+Backward computation process, float16 is used for computation, and when the gradient is updated, it is converted to float32 for gradient update.
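The reason the update is done in float32 can be shown with a toy example. The sketch below is an illustration under simplifying assumptions, not the Perseus-BERT code: it uses Python's `struct` module to round values to half and single precision, trains a single scalar weight with a constant gradient, and the helper names are made up for this example.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest float16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest float32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def train(steps, lr, grad, keep_fp32_master):
    """Run SGD on one weight, with or without a float32 master copy."""
    weight = 1.0
    for _ in range(steps):
        # The forward/backward pass produces the gradient in float16.
        grad_fp16 = to_fp16(grad)
        if keep_fp32_master:
            # Mixed precision: convert to float32 for the weight update.
            weight = to_fp32(weight - lr * to_fp32(grad_fp16))
        else:
            # Pure float16: the small update is rounded away.
            weight = to_fp16(weight - to_fp16(lr * grad_fp16))
    return weight


fp16_only = train(steps=100, lr=1e-3, grad=0.1, keep_fp32_master=False)
mixed = train(steps=100, lr=1e-3, grad=0.1, keep_fp32_master=True)
print("float16 only:", fp16_only)
print("mixed       :", mixed)
```

Near 1.0, adjacent float16 values are about 0.0005 to 0.001 apart, so an update of about 0.0001 is lost at every step when the weight itself is stored in float16, while the float32 copy accumulates it.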

Mixed precision brings the following benefits to BERT training:

XLA compilation optimization

XLA is a model compiler recently introduced by TensorFlow. It compiles the graph into an intermediate representation (IR), fuses redundant ops, and optimizes ops for performance and for the target hardware. However, the official TensorFlow release does not support distributed training with XLA. To ensure that distributed training runs correctly and accurately, we built TensorFlow with additional patches that support it. With XLA compilation optimization enabled, Perseus-BERT speeds up training and supports a larger batch size.

Perseus-BERT also optimizes word embedding and sentence segmentation in text pre-processing. These optimizations are not covered in detail here.
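Op fusion, one of the optimizations mentioned above, can be pictured with a toy example. The sketch below is only an analogy in plain Python, with lists standing in for tensors and made-up function names; XLA performs this kind of transformation on the compiled graph, not on Python code.

```python
def unfused(xs, scale, bias):
    """Three separate element-wise ops, each writing a temporary buffer."""
    scaled = [x * scale for x in xs]        # op 1: multiply
    shifted = [s + bias for s in scaled]    # op 2: add
    return [max(0.0, s) for s in shifted]   # op 3: ReLU


def fused(xs, scale, bias):
    """The same computation in a single pass with no temporaries."""
    return [max(0.0, x * scale + bias) for x in xs]


data = [-2.0, -0.5, 0.0, 1.5]
print(unfused(data, scale=2.0, bias=1.0))
print(fused(data, scale=2.0, bias=1.0))
```

Removing the intermediate buffers saves memory traffic and memory footprint, which is consistent with the larger batch size mentioned above.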

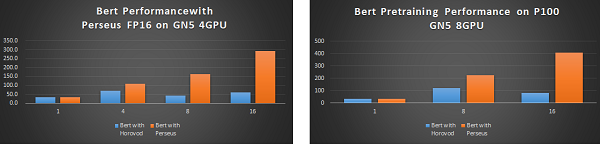

Figure 7 shows the performance of Perseus-BERT on P100 instances. With two machines and 16 cards, the distributed performance of Perseus-BERT is five times that of mainstream open source Horovod.

Currently, a major customer has deployed Perseus-BERT at scale on an Alibaba Cloud P100 cluster. Training the business model takes only 2.5 days on ten 4-card P100 instances, compared with about one month using open source Horovod (the TensorFlow distributed performance optimization edition).

[Figure 7] Comparison of BERT on the Alibaba Cloud P100 instance (experiment environment: Bert on P100; Batch size: 22; Max seq length: 256; Data type: float32; Tensorflow 1.12; Perseus: 0.9.1; Horovod: 0.15.2)

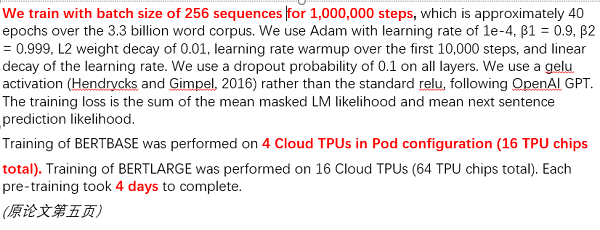

To compare with Google TPU, we quantified the performance of a TPU. The basis for the calculation is shown in Figure 8. The throughput of one Cloud TPU training BERT-Base is 256 × 1,000,000 / (4 × 4 × 24 × 60 × 60) ≈ 185 examples/s. Under the same condition of sequence_size=512, a single V100 8-card instance on Alibaba Cloud processes 680 examples/s when training the Base model optimized by Perseus-BERT, which is close to 4 times the performance of a Cloud TPU. A BERT model that takes a Cloud TPU 16 days to train takes an Alibaba Cloud V100 8-card instance less than four days.

[Figure 8] Performance basis for BERT Pretrain on Google Cloud TPU
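The TPU throughput estimate above can be reproduced in a few lines. The sketch reads the two factors of 4 in the formula as 4 Cloud TPUs running for 4 days, which is an interpretation consistent with the 16 TPU-days quoted earlier; the function name is made up for this example.

```python
def examples_per_second(batch_size, steps, num_tpus, days):
    """Training throughput of a single TPU in examples per second."""
    total_examples = batch_size * steps
    tpu_seconds = num_tpus * days * 24 * 60 * 60
    return total_examples / tpu_seconds


tpu_rate = examples_per_second(batch_size=256, steps=1_000_000, num_tpus=4, days=4)
v100_rate = 680  # examples/s reported above for one V100 8-card instance

print(f"Cloud TPU: {tpu_rate:.0f} examples/s")
print(f"V100 x 8 : {v100_rate} examples/s ({v100_rate / tpu_rate:.2f}x)")
```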

The elastic AI team continues to work on innovative solutions that push AI performance to the limit on Alibaba Cloud infrastructure, and Perseus-BERT is a typical example. We optimize deeply at the framework level on top of Alibaba Cloud infrastructure to fully unlock the computing power of its underlying resources, so that Alibaba Cloud customers can fully enjoy the advantages of AI computing on the cloud and no AI workload anywhere in the world is hard to compute.
