By Zhuzhao

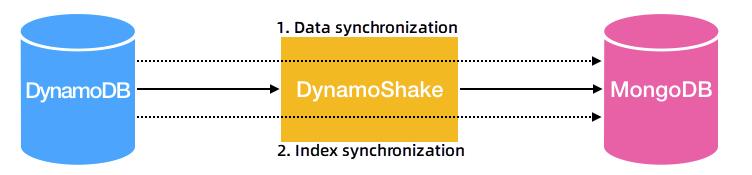

MongoShake and RedisShake are used to migrate, synchronize, and back up data from MongoDB and Redis databases respectively. The Shake series has recently expanded with the launch of DynamoShake (also known as NimoShake), a migration tool for DynamoDB. DynamoShake currently supports migrating data from a DynamoDB database to MongoDB. In the future, we will also consider other destinations, such as direct file backup, migration to Kafka, and migration to other databases like Cassandra and Redis.

GitHub address: https://github.com/alibaba/nimoshake

DynamoShake supports both full and incremental synchronization. When the process starts, it first performs a full synchronization and then switches to incremental synchronization.

The full synchronization consists of two parts: data synchronization, followed by index synchronization. The default primary key is synchronized during this process. However, user-created global secondary indexes (GSIs) are synchronized only when the destination MongoDB instance is a replica set, not when it is a Cluster Edition instance. Incremental synchronization synchronizes data only and does not include newly created indexes. Note that DDL operations such as table deletion, table creation, and index creation are not supported during either full or incremental synchronization.

Resuming from a checkpoint is supported in incremental synchronization but not in full synchronization. If an incremental synchronization task is interrupted by a disconnection and the connection is restored within a short time, the task resumes from where it stopped. In some cases, however, a full synchronization is triggered again, for example if the disconnection lasts too long or the previous offset is lost.

Each source table is written to its own table in a single destination database (dynamo-shake by default). For example, if the source has table1 and table2, after synchronization the destination database dynamo-shake contains table1 and table2. In native DynamoDB, the protocol wraps each value in a type field, using the "key: type: value" format. For instance, if you insert {hello: 1}, the data obtained through the DynamoDB interface is {"hello": {"N": "1"}}.

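DynamoDB uses the following type descriptors: S (string), N (number), B (binary), BOOL (Boolean), NULL (null), M (map), L (list), SS (string set), NS (number set), and BS (binary set).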

Two conversion methods are provided, raw and change. Raw refers to writing the raw data obtained by the DynamoDB interface:

rszz-4.0-2:PRIMARY> use dynamo-shake

switched to db dynamo-shake

rszz-4.0-2:PRIMARY> db.zhuzhao.find()

{ "_id" : ObjectId("5d43f8f8c51d73b1ba2cd845"), "aaa" : { "L" : [ { "S" : "aa1" }, { "N" : "1234" } ] }, "hello_world" : { "S" : "f2" } }

{ "_id" : ObjectId("5d43f8f8c51d73b1ba2cd847"), "aaa" : { "N" : "222" }, "qqq" : { "SS" : [ "h1", "h2" ] }, "hello_world" : { "S" : "yyyyyyyyyyy" }, "test" : { "S" : "aaa" } }

{ "_id" : ObjectId("5d43f8f8c51d73b1ba2cd849"), "aaa" : { "L" : [ { "N" : "0" }, { "N" : "1" }, { "N" : "2" } ] }, "hello_world" : { "S" : " Test Chinese" } }

Change refers to writing the data after the type field has been parsed:

rszz-4.0-2:PRIMARY> use dynamo-shake

switched to db dynamo-shake

rszz-4.0-2:PRIMARY> db.zhuzhao.find()

{ "_id" : ObjectId("5d43f8f8c51d73b1ba2cd845"), "aaa" : [ "aa1", 1234 ] , "hello_world" : "f2" }

{ "_id" : ObjectId("5d43f8f8c51d73b1ba2cd847"), "aaa" : 222, "qqq" : [ "h1", "h2" ] , "hello_world" : "yyyyyyyyyyy", "test" : "aaa" }

{ "_id" : ObjectId("5d43f8f8c51d73b1ba2cd849"), "aaa" : [ 0, 1, 2 ], "hello_world" : "Test Chinese" }

You can choose the conversion type based on your needs.
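The difference between the two modes can be illustrated with a minimal Python sketch of the change conversion. It is not DynamoShake's actual code: the function names `unwrap` and `convert_item` are hypothetical, and the mapping of numbers to int or float and of sets to plain lists is an assumption based on the sample output above.

```python
def to_number(text):
    """DynamoDB sends numbers as strings; map them to int or float (assumed mapping)."""
    try:
        return int(text)
    except ValueError:
        return float(text)


def unwrap(attr):
    """Strip the type field from one DynamoDB attribute, e.g. {"N": "1"} -> 1."""
    (type_tag, value), = attr.items()  # every attribute carries exactly one type tag
    if type_tag in ("S", "B", "BOOL"):
        return value
    if type_tag == "N":
        return to_number(value)
    if type_tag == "NULL":
        return None
    if type_tag in ("SS", "BS"):
        return list(value)
    if type_tag == "NS":
        return [to_number(v) for v in value]
    if type_tag == "L":
        return [unwrap(v) for v in value]
    if type_tag == "M":
        return {k: unwrap(v) for k, v in value.items()}
    raise ValueError("unknown DynamoDB type tag: " + type_tag)


def convert_item(item):
    """Convert a whole raw item (attribute name -> typed value) to plain values."""
    return {name: unwrap(attr) for name, attr in item.items()}
```

Applied to the first raw document above (without its `_id`), `convert_item` yields the nested list `["aa1", 1234]` for `aaa` and the plain string `"f2"` for `hello_world`, matching the change output.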

Incremental synchronization resumes from a checkpoint by using offsets. By default, the offsets are stored in a database named dynamo-shake-checkpoint in the destination MongoDB instance. For each source table, a corresponding table is created to record its checkpoint. In addition, a status_table records whether the current phase is full synchronization or incremental synchronization.

rszz-4.0-2:PRIMARY> use dynamo-shake42-checkpoint

switched to db dynamo-shake42-checkpoint

rszz-4.0-2:PRIMARY> show collections

status_table

zz_incr0

zz_incr1

rszz-4.0-2:PRIMARY>

rszz-4.0-2:PRIMARY>

rszz-4.0-2:PRIMARY> db.status_table.find()

{ "_id" : ObjectId("5d6e0ef77e592206a8c86bfd"), "key" : "status_key", "status_value" : "incr_sync" }

rszz-4.0-2:PRIMARY> db.zz_incr0.find()

{ "_id" : ObjectId("5d6e0ef17e592206a8c8643a"), "shard_id" : "shardId-00000001567391596311-61ca009c", "father_id" : "shardId-00000001567375527511-6a3ba193", "seq_num" : "", "status" : "no need to process", "worker_id" : "unknown-worker", "iterator_type" : "AT_SEQUENCE_NUMBER", "shard_it" : "", "update_date" : "" }

{ "_id" : ObjectId("5d6e0ef17e592206a8c8644c"), "shard_id" : "shardId-00000001567406847810-f5b6578b", "father_id" : "shardId-00000001567391596311-61ca009c", "seq_num" : "", "status" : "no need to process", "worker_id" : "unknown-worker", "iterator_type" : "AT_SEQUENCE_NUMBER", "shard_it" : "", "update_date" : "" }

{ "_id" : ObjectId("5d6e0ef17e592206a8c86456"), "shard_id" : "shardId-00000001567422218995-fe7104bc", "father_id" : "shardId-00000001567406847810-f5b6578b", "seq_num" : "", "status" : "no need to process", "worker_id" : "unknown-worker", "iterator_type" : "AT_SEQUENCE_NUMBER", "shard_it" : "", "update_date" : "" }

{ "_id" : ObjectId("5d6e0ef17e592206a8c86460"), "shard_id" : "shardId-00000001567438304561-d3dc6f28", "father_id" : "shardId-00000001567422218995-fe7104bc", "seq_num" : "", "status" : "no need to process", "worker_id" : "unknown-worker", "iterator_type" : "AT_SEQUENCE_NUMBER", "shard_it" : "", "update_date" : "" }

{ "_id" : ObjectId("5d6e0ef17e592206a8c8646a"), "shard_id" : "shardId-00000001567452243581-ed601f96", "father_id" : "shardId-00000001567438304561-d3dc6f28", "seq_num" : "", "status" : "no need to process", "worker_id" : "unknown-worker", "iterator_type" : "AT_SEQUENCE_NUMBER", "shard_it" : "", "update_date" : "" }

{ "_id" : ObjectId("5d6e0ef17e592206a8c86474"), "shard_id" : "shardId-00000001567466737539-cc721900", "father_id" : "shardId-00000001567452243581-ed601f96", "seq_num" : "", "status" : "no need to process", "worker_id" : "unknown-worker", "iterator_type" : "AT_SEQUENCE_NUMBER", "shard_it" : "", "update_date" : "" }

{ "_id" : ObjectId("5d6e0ef27e592206a8c8647e"), "shard_id" : "shardId-00000001567481807517-935745a3", "father_id" : "shardId-00000001567466737539-cc721900", "seq_num" : "", "status" : "done", "worker_id" : "unknown-worker", "iterator_type" : "LATEST", "shard_it" : "arn:aws:dynamodb:us-east-2:240770237302:table/zz_incr0/stream/2019-08-27T08:23:51.043|1|AAAAAAAAAAGsTOg0+3HY+yzzD1cTzc7TPXi/iBi7sA5Q6SGSoaAJ2gz2deQu5aPRW/flYK0pG9ZUvmCfWqe1A5usMFWfVvd+yubMwWSHfV2IPVs36TaQnqpMvsywll/x7IVlCgmsjr6jStyonbuHlUYwKtUSq8t0tFvAQXtKi0zzS25fQpITy/nIb2y/FLppcbV/iZ+ae1ujgWGRoojhJ0FiYPhmbrR5ZBY2dKwEpok+QeYMfF3cEOkA4iFeuqtboUMgVqBh0zUn87iyTFRd6Xm49PwWZHDqtj/jtpdFn0CPoQPj2ilapjh9lYq/ArXMai5DUHJ7xnmtSITsyzUHakhYyIRXQqF2UWbDK3F7+Bx5d4rub1d4S2yqNUYA2eZ5CySeQz7CgvzaZT391axoqKUjjPpdUsm05zS003cDDwrzxmLnFi0/mtoJdGoO/FX9LXuvk8G3hgsDXBLSyTggRE0YM+feER8hPgjRBqbfubhdjUxR+VazwjcVO3pzt2nIkyKPStPXJZIf4cjCagTxQpC/UPMtcwWNo2gQjM2XSkWpj7DGS2E4738biV3mtKXGUXtMFVecxTL/qXy2qpLgy4dD3AG0Z7pE+eJ9qP5YRE6pxQeDlgbERg==", "update_date" : "" }

{ "_id" : ObjectId("5d6e1d807e592206a8c9a102"), "shard_id" : "shardId-00000001567497561747-03819eba", "father_id" : "shardId-00000001567481807517-935745a3", "seq_num" : "39136900000000000325557205", "status" : "in processing", "worker_id" : "unknown", "iterator_type" : "AT_SEQUENCE_NUMBER", "shard_it" : "arn:aws:dynamodb:us-east-2:240770237302:table/zz_incr0/stream/2019-08-27T08:23:51.043|1|AAAAAAAAAAFw/qdbPLjsXMlPalnhh55koia44yz6A1W2uwUyu/MzRUhaaqnI0gPM8ebVgy7dW7dDWLTh/WXYyDNNyXR3Hvk01IfEKDf+FSLMNvh2iELdrO5tRoLtZI2fxxrPZvudRc3KShX0Pvqy2YYwl4nlBR6QezHTWx5H2AU22MGPTx8aMRbjUgPwvgEExRgdzfhG6G9gkc7C71fIc98azwpSm/IW+mV/h/doFndme47k2v8g0GNJvgLSoET7HdJYH3XFdqh4QVDIP4sbz8X1cpN3y8AlT7Muk2/yXOdNeTL6tApuonCrUpJME9/qyBYQVI5dsAHnAWaP2Te3EAvz3ao7oNdnA8O6uz5VF9zFdN1OUHWM40kLUsX4sHve7McEzFLgf4NL1WTAnPN13cFhEm9BS8M7tiJqZ0OzgkbF1AWfq+xg/O6c57/Vvx/G/75DZ8XcWIABgGNkWBET/vLDrgjJQ0PUZJZKNmmbgKKTyHgSl4YOXNEeyH7l6atuc2WaREDjbf7lnQO5No11sz4g3O+AreBcpGVhdZNhGGcrG/wduPYEZfg2hG1sfYiSAM8GipUPMA0PM7JPIJmqCaY90JxRcI1By24tpp9Th35/5rLTGPYJZA==", "update_date" : "" }

The "status_value" : "incr_sync" in the status table indicates that the current phase is incremental synchronization. Each incremental shard records a checkpoint. For more information about shard splitting rules, see DynamoDB Documentation. The following is the description of each field of the incremental table checkpoint:

• _id: MongoDB primary key.

• shard_id: ID of the shard. Each shard has a unique ID.

• father_id: ID of the parent shard. A shard has at most one parent shard.

• seq_num: Sequence number up to which the shard has been processed. This is the primary offset information.

• status: Current synchronization state of the shard. The sample output above includes the states "no need to process", "done", and "in processing".

• worker_id: ID of the worker that processes the shard. This field is currently unused.

• iterator_type: Shard traversal mode.

• shard_it: Iterator address of the shard. This is the secondary offset information.

• update_date: Timestamp of the last checkpoint update.

A unique index is created based on the default primary key, and a shard key is created based on the partition key. User-created GSIs, however, are not created.

This section provides some details about the internal architecture of DynamoShake.

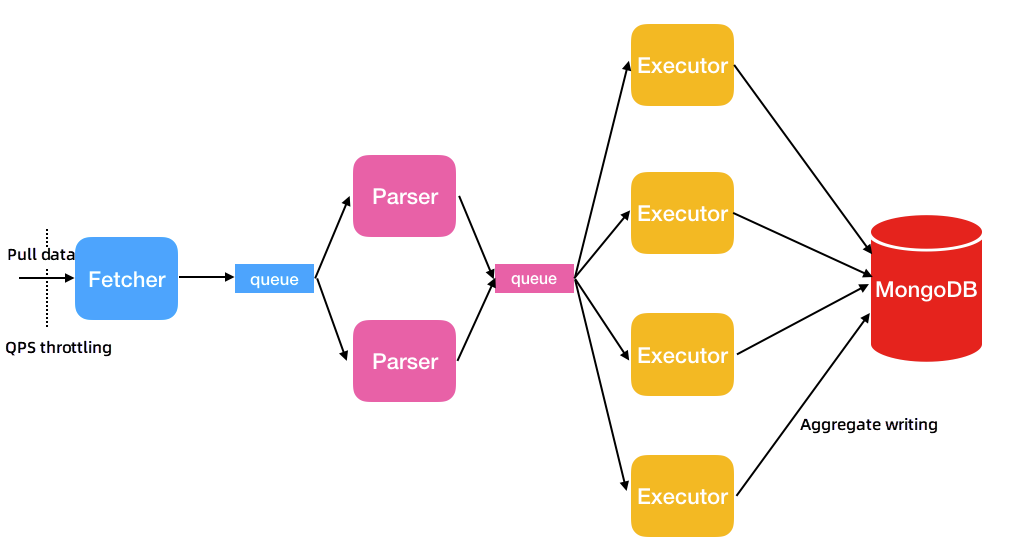

The figure above shows the basic architecture of data synchronization for a table. DynamoShake can start multiple concurrent TableSyncer threads to pull data, with the concurrency level specified by the user. The fetcher thread retrieves data from the source DynamoDB database and pushes it into queues. The parser threads then read data from the queues and convert it from the Dynamo protocol format into BSON. Finally, the executor threads aggregate the data and write it to the MongoDB database.

• Fetcher: Currently, there is only one fetcher thread, which uses the protocol conversion driver provided by AWS. The fetcher calls the driver to read data from the source database in batches and places the batches into queues until all the source data for the current table has been read. The fetcher is a separate thread because it is bound by network I/O: fetching depends on network conditions and may be relatively slow.

• Parser: Multiple parser threads can be started, with the default value set to 2. The number of parser threads can be specified using the FullDocumentParser parameter. The parser thread reads data from the queues and parses it into the BSON structure. Once the data is parsed, the parser thread writes it as entries to the queues of the executor thread. Since parsing consumes significant CPU resources, the parser thread is separated from the others.

• Executor: Multiple executor threads can be started, with the default value set to 4. The number of executor threads can be specified using the FullDocumentConcurrency parameter. The executor thread pulls data from the queues, aggregates it into batches, and then writes each batch to the destination MongoDB database. A batch can contain up to 1,024 entries and up to 16 MB of data. Once the data from all tables is written, the TableSyncer exits.
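The fetcher, parser, and executor stages described above form a producer-consumer pipeline connected by queues. The following Python sketch illustrates that shape only: it is not DynamoShake's implementation, the names `sync_table`, `parse`, and `write_batch` are hypothetical, the source is an in-memory list of batches instead of DynamoDB, and only the 1,024-entry batch limit is modeled (the 16 MB limit is left out).

```python
import queue
import threading

PARSERS = 2        # default parser count from the text
EXECUTORS = 4      # default executor count from the text
BATCH_SIZE = 1024  # maximum entries per write batch
STOP = object()    # sentinel that tells a worker to exit


def fetcher(source_batches, raw_q):
    """Single fetcher: push source batches, then one STOP per parser."""
    for batch in source_batches:
        raw_q.put(batch)
    for _ in range(PARSERS):
        raw_q.put(STOP)


def parser(raw_q, doc_q, parse):
    """Parse each raw item and hand the result to the executors."""
    while True:
        batch = raw_q.get()
        if batch is STOP:
            return
        for item in batch:
            doc_q.put(parse(item))


def executor(doc_q, write_batch):
    """Aggregate parsed documents into batches and write them out."""
    batch = []
    while True:
        doc = doc_q.get()
        if doc is STOP:
            break
        batch.append(doc)
        if len(batch) >= BATCH_SIZE:
            write_batch(batch)
            batch = []
    if batch:  # flush the final partial batch
        write_batch(batch)


def sync_table(source_batches, parse, write_batch):
    """Run one fetcher, PARSERS parsers, and EXECUTORS executors for one table."""
    raw_q = queue.Queue(maxsize=64)
    doc_q = queue.Queue(maxsize=4096)
    fetch = threading.Thread(target=fetcher, args=(source_batches, raw_q))
    parsers = [threading.Thread(target=parser, args=(raw_q, doc_q, parse))
               for _ in range(PARSERS)]
    executors = [threading.Thread(target=executor, args=(doc_q, write_batch))
                 for _ in range(EXECUTORS)]
    for t in [fetch, *parsers, *executors]:
        t.start()
    fetch.join()
    for t in parsers:
        t.join()
    for _ in executors:  # all documents are queued; now stop the executors
        doc_q.put(STOP)
    for t in executors:
        t.join()
```

The bounded queues apply back pressure: if the executors fall behind, the parsers and then the fetcher block instead of buffering the whole table in memory. `write_batch` is called from several threads at once, so it must be thread-safe.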

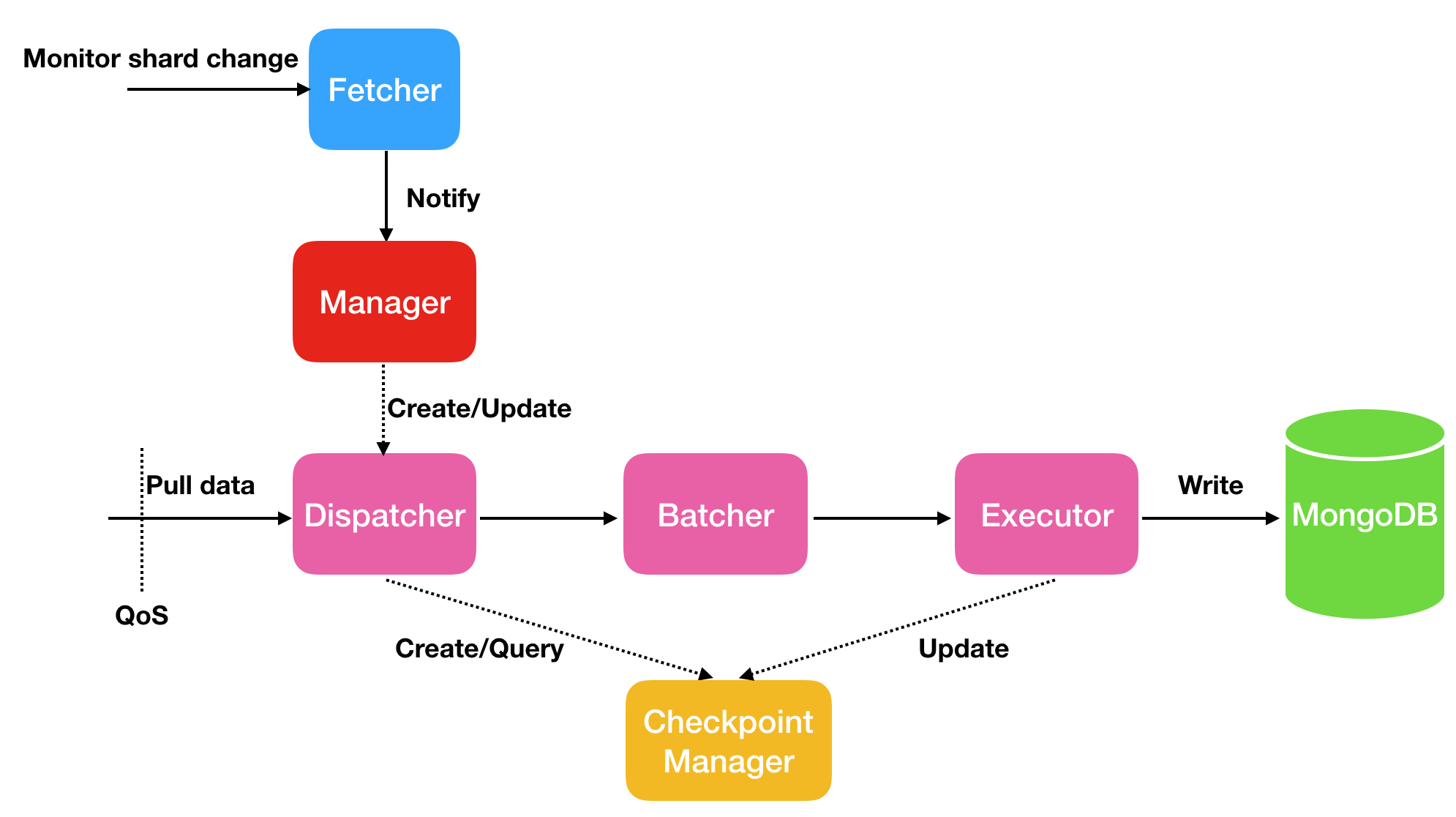

The figure above shows the basic architecture of incremental synchronization.

The fetcher thread is responsible for detecting shard changes in the stream. The manager handles message notifications and creates new dispatchers to process messages, with each shard corresponding to one dispatcher. The dispatcher retrieves incremental data from the source, parses and packages it using a batcher, and then writes the data to the MongoDB database through the executor. The checkpoint is updated at the same time. When a task resumes after an interruption, the dispatcher pulls data starting from the previous checkpoint instead of from the beginning.
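As an illustration of how checkpoint documents could drive a resume, the following Python sketch builds a resume plan from documents shaped like the checkpoint records shown earlier. It is a sketch under stated assumptions, not DynamoShake's logic: the function `plan_resume` is hypothetical, and it assumes that shards with status "done" or "no need to process" can be skipped and that a parent shard should be handled before its child.

```python
# Assumption: these two states from the sample checkpoint mean no work is left.
FINISHED = {"done", "no need to process"}


def plan_resume(checkpoints):
    """Return (shard_id, start_seq_num) pairs, parents before children.

    start_seq_num is None when no sequence number has been recorded yet.
    """
    by_id = {c["shard_id"]: c for c in checkpoints}
    ordered, seen = [], set()

    def visit(shard):
        if shard["shard_id"] in seen:
            return
        seen.add(shard["shard_id"])
        parent = by_id.get(shard.get("father_id"))
        if parent is not None:
            visit(parent)  # place the parent first
        ordered.append(shard)

    for shard in checkpoints:
        visit(shard)

    return [(s["shard_id"], s["seq_num"] or None)
            for s in ordered if s["status"] not in FINISHED]
```

For the sample checkpoint table above, such a plan would contain only the last shard, starting from its recorded `seq_num`.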

To start, use the command: ./dynamo-shake -conf=dynamo-shake.conf. Configuration parameters are specified in the dynamo-shake.conf file. The following list provides a description of each parameter.

DynamoFullCheck is a tool used to verify the consistency of data between DynamoDB and MongoDB. It currently only supports full data verification and does not support incremental verification. This means that during incremental synchronization, the source and destination databases may be inconsistent.

DynamoFullCheck supports only one-way verification: it checks whether the data in DynamoDB is a subset of the data in MongoDB. Reverse verification is not performed. It also supports sampling verification, and table filters make it possible to verify only the tables of interest.
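One-way verification can be sketched in a few lines of Python. This is an illustrative sketch rather than DynamoFullCheck's code: `find_diffs` is a hypothetical name, documents are plain dictionaries, and it is assumed that the MongoDB-generated `_id` field is ignored when comparing content.

```python
def find_diffs(source_docs, target_docs, key):
    """Check that every source document exists unchanged in the target.

    Documents that exist only in the target are ignored, because the
    check is one-way (source must be a subset of target).
    """
    target_by_key = {doc[key]: doc for doc in target_docs}
    diffs = []
    for doc in source_docs:
        other = target_by_key.get(doc[key])
        if other is None:
            diffs.append((doc[key], "missing in target"))
            continue
        # Assumption: "_id" is generated by MongoDB and is not part of the data.
        comparable = {k: v for k, v in other.items() if k != "_id"}
        if comparable != doc:
            diffs.append((doc[key], "content differs"))
    return diffs
```

Because only source documents are iterated, extra documents in MongoDB never produce a diff, which is exactly why a reverse check would be needed to prove full equality.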

The verification process consists of the following parts:

During precise check, if sampling is enabled, each document is sampled to determine if it needs to be checked. The principle is relatively simple. For example, if the verification is sampled at 30%, a random number is generated from 0 to 100. If it falls within the range of 0 to 30, it will be checked; otherwise, it will not be checked.
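The per-document sampling decision described above can be written as a one-line check. The following Python sketch uses a hypothetical function name, `should_check`, and follows the percentage-based description in this paragraph.

```python
import random


def should_check(sample_percent, rng=random):
    """Return True if this document should be verified.

    A random integer in [0, 100) is drawn; the document is checked when
    the number falls below sample_percent (for example, 30 for 30%).
    """
    return rng.randrange(100) < sample_percent
```

Each document is decided independently, so the fraction actually checked is close to, but not exactly, the configured percentage.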

When pulling data from the source DynamoDB database, DynamoFullCheck also goes through fetch and parse phases, reusing parts of the DynamoShake code. The difference is that in DynamoFullCheck the concurrency of the fetcher, parser, and executor threads is 1 each.

The parameters of DynamoFullCheck are simpler and are passed directly on the command line. For example: ./dynamo-full-check --sourceAccessKeyID=BUIASOISUJPYS5OP3P5Q --sourceSecretAccessKey=TwWV9reJCrZhHKSYfqtTaFHW0qRPvjXb3m8TYHMe --sourceRegion=ap-east-1 -t="10.1.1.1:30441" --sample=300

Usage:

dynamo-full-check.darwin [OPTIONS]

Application Options:

-i, --id= target database collection name (default: dynamo-shake)

-l, --logLevel=

-s, --sourceAccessKeyID= dynamodb source access key id

--sourceSecretAccessKey= dynamodb source secret access key

--sourceSessionToken= dynamodb source session token

--sourceRegion= dynamodb source region

--qpsFull= qps of scan command, default is 10000

--qpsFullBatchNum= batch number in each scan command, default is 128

-t, --targetAddress= mongodb target address

-d, --diffOutputFile= diff output file name (default: dynamo-full-check-diff)

-p, --parallel= how many threads used to compare, default is 16 (default: 16)

-e, --sample= comparison sample number for each table, 0 means disable (default: 1000)

--filterCollectionWhite= only compare the given tables, split by ';'

--filterCollectionBlack= do not compare the given tables, split by ';'

-c, --convertType= convert type (default: raw)

-v, --version print version

Help Options:

-h, --help Show this help message