To deepen my research into and understanding of cutting-edge algorithms and technologies, I took part in the 2017 CIKM AnalytiCup, an international data mining competition. The Conference on Information and Knowledge Management (CIKM) is an annual computer science research conference that has been held since 1992.

This competition was my first attempt of this kind: from July to August, in my spare time, I built a simple natural language learning framework. I finished 16th out of 169 teams.

In this article, I share my experience from the competition.

The competition task came from Lazada. With transactions on its e-commerce site growing, Lazada wanted to determine whether its product titles were clear and concise. Specifically, the challenge was to score the clarity and the conciseness of each product title, producing two scores in the interval [0, 1].

Note that these two indicators are different. Clarity refers to whether a title is easy to understand and whether its description is comprehensive and accurate.

An example of an unclear title is: "Silver Crystal Diamante Effect Evening Clutch Wedding Purse Party Prom Bag Box." It does not make clear whether the product is a "Wedding Purse" or a "Party Prom Bag Box."

By contrast, an example of a clear title is: "Soft-Touch Plastic Hard Case for MacBook Air 11.6 Case (Models:A1370 and A1465) with Transparent Keyboard Cover (Transparent)(Export)"

Conciseness, on the other hand, refers to whether a title is terse.

An example of a short but not concise title is "rondaful pure color window screening wedding banquet glass yarn," because its synonyms are redundant; it is also unclear. An example of a concise title is "moonmini case for iPhone 5 5s (hot pink + blue) creative melting ice cream skin hard case protective back case cover".

To explore this problem, Lazada provided raw data from real scenarios, including each product's title, three-level category tree, description, price, and ID, along with some manually labeled information.

Three standard datasets were provided for evaluation: training data for model training, validation data for initial testing, and test data for final testing.

This was a supervised learning problem, but it was difficult because it was a purely semantic short-text mining problem. First, there was no user feedback data, and the available information was very limited: only basic fields such as title, category, and description. Yet the targets we had to identify were high-level and highly abstract. On the other hand, such problems have significant practical value: for internal services, recognizing abstract business targets from the simplest data is a valuable general-purpose technique.

To solve this problem, I used techniques from two fields, natural language processing and data mining, which I describe in detail below. Since clarity and conciseness are separate targets, I built an independent model for each.

Both were regression models that output a clarity or conciseness score. In my analysis, this was a short-text mining problem, so I first constructed intermediate semantics along multiple dimensions and then used them to predict the final targets. Constructing and expanding this intermediate information as much as possible generates more usable signal, which compensates for the inherently limited information in short text.

In the final solution, intermediate features were constructed along the following semantic dimensions and used for subsequent model training.

The design of these dimensions shows that the system uses no feedback information: all intermediate semantic information is derived from short texts such as titles and categories. This semantic information helps not only with recognizing clarity and conciseness but also with many other common tasks, such as relevance search and automatic labeling.

In essence, this process decomposes one supervised learning problem into multiple sub-problems, introducing secondary targets that then improve the accuracy of the final primary targets.

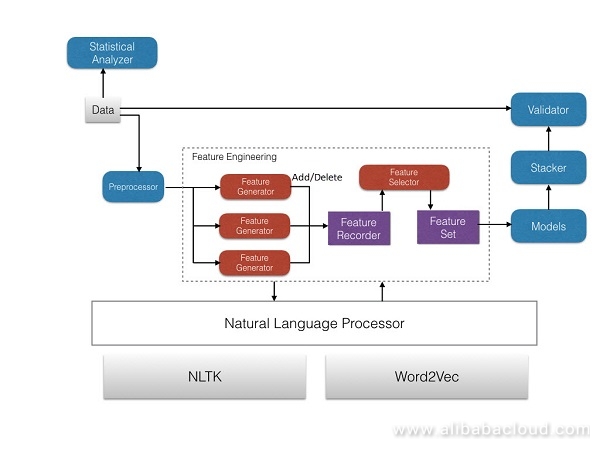

To build this multi-dimensional supervised learning system effectively, I implemented the entire pipeline, covering data, features, models, and validation. It is a machine learning framework based on natural language processing that does not depend on any specific service data. It includes several core modules: data preprocessing, visual analysis, feature engineering, model training, ensemble learning, and cross-validation. The schematic design of the entire system is as follows:

Below, I briefly describe the functions and implementation of the major modules:

Data and visual analysis: I used this module mainly to classify the raw data and analyze it visually along different dimensions. It is not a core step of machine learning, but it is important for an open-ended business problem: it can uncover valuable patterns and interpretable signals in the raw data quickly and at low cost.

Feature engineering: This module contains multiple feature generation modules (built on the natural language processing module) and feature selection modules. Feature engineering was the most important task in this project, and it was far more complex than choosing among models: the number of possible feature combinations grows exponentially with the number of features. For general machine learning problems, it is critical to find a comprehensive and appropriate feature set in a short time.

Therefore, I designed a dedicated Feature Recorder and Feature Selector for the system. The Feature Recorder stores computed features and lets you add and delete features flexibly without changing the original records. This significantly sped up experiments, because the number of feature combinations was very large and recomputing features repeatedly was time-consuming.

Reusing and adding features in the Feature Recorder requires flexible feature management; otherwise, a large number of features quickly becomes chaotic. So when designing the system, I organized feature computation into feature units, each covering a cluster of related features and indexed by the sample's group_id. This let me combine feature units freely through configuration, which kept computation efficient and the code well organized, making it suitable for such large-scale learning tasks.
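The feature-unit idea can be sketched as a small cache keyed by unit name. This is a minimal illustration, not the author's code: the class name `FeatureRecorder` comes from the text, but its methods (`register`, `remove`, `compute`, `assemble`), the `name.feature` column naming, and the assumption that the sample set stays fixed while the cache is alive are all hypothetical choices made for this sketch.

```python
from typing import Callable, Dict, List

# A feature unit computes a cluster of related features for every sample,
# returning {group_id: {feature_name: value}}.
FeatureUnit = Callable[[List[dict]], Dict[str, Dict[str, float]]]


class FeatureRecorder:
    """Hypothetical feature store: caches each unit's output so it is computed once."""

    def __init__(self) -> None:
        self._units: Dict[str, FeatureUnit] = {}
        self._cache: Dict[str, Dict[str, Dict[str, float]]] = {}

    def register(self, name: str, unit: FeatureUnit) -> None:
        self._units[name] = unit
        self._cache.pop(name, None)  # a re-registered unit must be recomputed

    def remove(self, name: str) -> None:
        # Deleting one unit leaves every other unit's cached values untouched
        self._units.pop(name, None)
        self._cache.pop(name, None)

    def compute(self, name: str, samples: List[dict]) -> Dict[str, Dict[str, float]]:
        # Assumes the sample set is fixed; the cache is keyed by unit name only
        if name not in self._cache:
            self._cache[name] = self._units[name](samples)
        return self._cache[name]

    def assemble(self, config: List[str], samples: List[dict]) -> Dict[str, Dict[str, float]]:
        """Merge the units listed in `config` into one feature row per group_id."""
        rows: Dict[str, Dict[str, float]] = {s["group_id"]: {} for s in samples}
        for name in config:
            for gid, feats in self.compute(name, samples).items():
                rows[gid].update({f"{name}.{k}": v for k, v in feats.items()})
        return rows
```

Trying a different feature combination then only means passing a different list of unit names to `assemble`; units already computed are served from the cache.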

The Feature Selector searches for the best-performing subset of features on cross-validation data. To save time, it first pre-screens features with a top-K filter before running the more time-consuming selection.
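A minimal sketch of a top-K pre-screen followed by a slower subset search is shown below. The article does not say which search strategy was used; greedy forward selection is an assumption here, and `cv_score` is a hypothetical callback that would train a model on the given subset and return its cross-validated score (higher is better).

```python
from typing import Callable, Dict, List, Sequence


def select_features(
    features: Sequence[str],
    univariate_score: Dict[str, float],
    cv_score: Callable[[List[str]], float],
    top_k: int,
) -> List[str]:
    """Top-K pre-screen, then greedy forward selection on the survivors.

    univariate_score: cheap per-feature score (higher is better), e.g. |correlation| with the label.
    cv_score: hypothetical callback returning the cross-validated score of a feature subset.
    """
    # Step 1: cheap pre-screen keeps only the top_k features
    candidates = sorted(features, key=lambda f: univariate_score[f], reverse=True)[:top_k]

    # Step 2: expensive search; add the single best feature while the CV score improves
    selected: List[str] = []
    best = float("-inf")
    improved = True
    while improved and candidates:
        improved = False
        score, feature = max((cv_score(selected + [f]), f) for f in candidates)
        if score > best:
            best = score
            selected.append(feature)
            candidates.remove(feature)
            improved = True
    return selected
```

The pre-screen bounds the number of expensive `cv_score` calls to roughly `top_k` squared, which is the point of filtering before selecting.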

Model training: This component trains the models. It is completely decoupled from feature engineering, so it can easily be used to train on any service data. The overall design is divided into three parts:

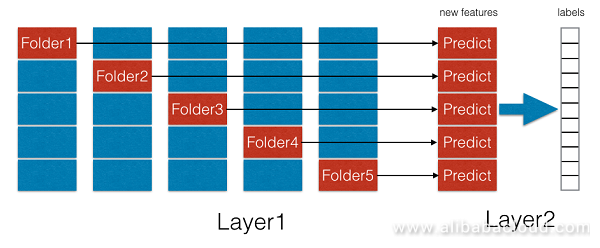

The model learning module implements many commonly used models, which can be selected and loaded through configuration. The ensemble learning module serves to improve accuracy; this project implemented it with stacking. The training structure has two layers: the first layer trains the individual models separately, and the second layer performs stacking with XGBoost.
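Selecting and loading models through configuration can be sketched with a name-to-factory registry. Everything here is hypothetical: `MODEL_REGISTRY`, the `register` decorator, the `"layer1"` config key, and the toy `MedianRegressor`, which stands in for real wrappers around XGBoost, CNN, LSTM, and the other models.

```python
import statistics
from typing import Callable, Dict, List

# Hypothetical registry mapping a model name in the config to a factory.
MODEL_REGISTRY: Dict[str, Callable[[], object]] = {}


def register(name: str):
    """Decorator that adds a model class (or factory) to the registry."""
    def wrap(factory):
        MODEL_REGISTRY[name] = factory
        return factory
    return wrap


@register("median")
class MedianRegressor:
    """Toy baseline model: always predicts the median training label."""

    def fit(self, X, y):
        self.median_ = statistics.median(y)
        return self

    def predict(self, X):
        return [self.median_ for _ in X]


def load_models(config: Dict[str, List[str]]) -> Dict[str, object]:
    """Instantiate every first-layer model named in config["layer1"]."""
    return {name: MODEL_REGISTRY[name]() for name in config["layer1"]}
```

Because every model exposes the same `fit`/`predict` interface, the training code never needs to know which concrete models a given configuration selects.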

Feature engineering is one of the most important tasks in applied machine learning. In this competition, I trained on more than 100 features. Due to space limitations, I describe only the design ideas behind some of the major features here.

Redundancy Features: Drawing on structured language processing, I constructed a variety of features that characterize redundancy, such as n-gram repetition (3-grams to 8-grams), n-char repetition (3 to 8 characters), the number and proportion of unique words, and word-vector similarity (bucketed into multiple intervals), along with statistics such as the max, min, mean, and sum of each feature.

Note that both the n-gram and n-char features treat the sentence as an unordered collection of words: they do not depend on word order, and the grams need not consist of consecutive words.
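A few of these redundancy features can be sketched as follows. This is one possible reading of the description, not the author's exact definitions: character n-grams (3 to 8) are taken inside each word and pooled over the bag of words, so word order does not matter, and a gram's "repetition" is counted as its occurrences beyond the first. Word-level n-grams and word-vector similarity buckets are left out.

```python
from collections import Counter
from typing import Dict, List


def char_ngrams(word: str, n: int) -> List[str]:
    """All n-character substrings of a single word."""
    return [word[i:i + n] for i in range(len(word) - n + 1)]


def redundancy_features(title: str, n_min: int = 3, n_max: int = 8) -> Dict[str, float]:
    words = title.lower().split()
    unique = set(words)
    feats: Dict[str, float] = {
        "n_words": float(len(words)),
        "n_unique_words": float(len(unique)),
        "unique_ratio": len(unique) / len(words) if words else 0.0,
    }
    for n in range(n_min, n_max + 1):
        # Grams are pooled over the bag of words, so word order does not matter
        grams = Counter(g for w in words for g in char_ngrams(w, n))
        feats[f"char{n}_repeat"] = float(sum(c - 1 for c in grams.values() if c > 1))
    return feats
```

For example, "Case case cover" has 2 unique words out of 3, and its repeated "case" contributes to the 3-char and 4-char repetition counts.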

Category Features: I first one-hot encoded the three-level categories (with the long-tail part normalized), and then applied principal component analysis (PCA) to the one-hot vectors to reduce their dimensionality and form numerical features.
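The one-hot step can be sketched as below, under the assumption that "normalizing" the long tail means merging categories seen fewer than `min_count` times into a single shared column. The `__OTHER__` bucket and `min_count` are illustrative choices. The PCA step is not shown; one option is to concatenate the per-level vectors for the three category levels and fit `sklearn.decomposition.PCA` on the resulting matrix.

```python
from collections import Counter
from typing import Dict, List


def build_vocab(categories: List[str], min_count: int) -> Dict[str, int]:
    """Map frequent categories to column indices; rare (long-tail) ones share one column."""
    counts = Counter(categories)
    frequent = sorted(c for c, n in counts.items() if n >= min_count)
    vocab = {c: i for i, c in enumerate(frequent)}
    vocab["__OTHER__"] = len(vocab)  # shared column for the long tail
    return vocab


def one_hot(category: str, vocab: Dict[str, int]) -> List[int]:
    """One-hot row for a single category; unseen or rare values go to __OTHER__."""
    row = [0] * len(vocab)
    row[vocab.get(category, vocab["__OTHER__"])] = 1
    return row
```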

Relevance Features: I used word2vec to measure the similarity between title words and the categories at every level, to capture whether this relevance relates to how accurate the title content is. To characterize it fully, I also used multiple statistics, such as the highest relevance in a sentence, the sum of all relevance scores in a sentence, and the top-N relevance scores in a sentence.
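The statistics above can be sketched with plain cosine similarity. Here `vectors` is a stand-in for a word2vec lookup (any mapping from word to vector), out-of-vocabulary words are skipped, and the extra `n_below_low` count with a 0.4 threshold is an illustrative choice, not a value the article specifies for this feature.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def relevance_features(title_words: List[str], category: str,
                       vectors: Dict[str, List[float]], top_n: int = 3,
                       low: float = 0.4) -> Dict[str, float]:
    """Similarity statistics between each title word and one category name."""
    key = f"top{top_n}_mean"
    if category not in vectors:
        return {"max": 0.0, "sum": 0.0, key: 0.0, "n_below_low": 0.0}
    c = vectors[category]
    sims = sorted((cosine(vectors[w], c) for w in title_words if w in vectors), reverse=True)
    top = sims[:top_n]
    return {
        "max": sims[0] if sims else 0.0,
        "sum": sum(sims),
        key: sum(top) / len(top) if top else 0.0,
        "n_below_low": float(sum(s < low for s in sims)),
    }
```

Running this once per category level (level 1, 2, and 3) gives one block of statistics per level.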

Embedding Features: I used the pre-trained Google News word vectors as a lookup table, mapping each word to an embedding vector that the deep learning models consume directly. Deep learning can extract useful features from the raw word vectors.
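Turning a title into a fixed-size input for a CNN or LSTM can be sketched as a lookup with truncation and zero padding. The `vectors` dict is a placeholder; with the real Google News vectors one could load them via gensim's `KeyedVectors.load_word2vec_format(path, binary=True)`. Mapping unknown words to zero vectors and the `max_len` cutoff are assumptions for this sketch.

```python
from typing import Dict, List


def embed_title(words: List[str], vectors: Dict[str, List[float]],
                dim: int, max_len: int) -> List[List[float]]:
    """Map each word to its vector; unknown words and padding become zero vectors."""
    zero = [0.0] * dim
    rows = [list(vectors.get(w, zero)) for w in words[:max_len]]  # truncate long titles
    rows += [list(zero) for _ in range(max_len - len(rows))]      # pad short titles
    return rows
```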

TF-IDF Features: I treated the title and description together as one document and each word as a term to build a TF-IDF map, which I used to characterize the semantic distribution of the sentence.
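A plain TF-IDF computation is sketched below, with each document being the tokenized title plus description. The article does not say which TF-IDF variant was used; this sketch assumes the simple form tf = count / document length and idf = log(N / df). Per-title statistics (for example, the max or mean weight of the title's words) could then be derived from these maps.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Plain TF-IDF: tf = count / doc length, idf = log(N / df)."""
    n = len(docs)
    df = Counter(w for doc in docs for w in set(doc))  # document frequency per term
    out = []
    for doc in docs:
        counts = Counter(doc)
        out.append({w: (c / len(doc)) * math.log(n / df[w]) for w, c in counts.items()})
    return out
```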

Part-of-Speech (Word Class) Features: In addition to the general machine learning analysis above, I used the NLTK toolkit to tag the part of speech of each word in a sentence, and computed statistics for the nouns and adjectives in the sentence.
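The noun and adjective statistics can be sketched from Penn Treebank tags, which is the tag set returned by `nltk.pos_tag(nltk.word_tokenize(title))` (this requires NLTK's tokenizer and tagger data to be downloaded). The function below takes already-tagged tokens so it runs without NLTK; the specific counts and ratios are illustrative choices.

```python
from typing import Dict, List, Tuple


def pos_features(tagged: List[Tuple[str, str]]) -> Dict[str, float]:
    """Counts and proportions of nouns (NN*) and adjectives (JJ*) in tagged tokens."""
    n = len(tagged)
    nouns = sum(tag.startswith("NN") for _, tag in tagged)
    adjs = sum(tag.startswith("JJ") for _, tag in tagged)
    return {
        "n_nouns": float(nouns),
        "n_adjs": float(adjs),
        "noun_ratio": nouns / n if n else 0.0,
        "adj_ratio": adjs / n if n else 0.0,
    }
```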

In the final solution, four types of algorithms gave the most significant improvement: the XGBoost model, the CNN model, the LSTM model, and stacking ensemble optimization. They are briefly described below:

XGBoost: One of the most widely used supervised learning algorithms in industry, XGBoost was the best-performing single model in this project. It is a boosting algorithm that combines many weak learners into a strong one. Its key feature is optimizing the objective with a second-order Taylor expansion, using both first-order and second-order derivatives, and adding a regularization term to the cost function, which helps control overfitting. XGBoost also borrows row and column subsampling from random forests, which further reduces overfitting and speeds up computation. At the time of the competition, XGBoost was among the strongest boosting implementations; in accuracy, it is comparable to Microsoft's LightGBM.
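The second-order objective mentioned above can be written out as follows, following the standard XGBoost formulation. At boosting round $t$, with loss $l$, previous prediction $\hat{y}_i^{(t-1)}$, and new tree $f_t$ with $T$ leaves and leaf weights $w_j$, the objective is approximated (dropping the constant term $l(y_i, \hat{y}_i^{(t-1)})$) as:

$$
\mathcal{L}^{(t)} \simeq \sum_{i=1}^{n}\Big[g_i f_t(\mathbf{x}_i) + \tfrac{1}{2} h_i f_t^2(\mathbf{x}_i)\Big] + \Omega(f_t),
\qquad
\Omega(f) = \gamma T + \tfrac{1}{2}\lambda \sum_{j=1}^{T} w_j^2
$$

$$
g_i = \frac{\partial\, l\big(y_i, \hat{y}_i^{(t-1)}\big)}{\partial \hat{y}_i^{(t-1)}},
\qquad
h_i = \frac{\partial^2 l\big(y_i, \hat{y}_i^{(t-1)}\big)}{\partial \big(\hat{y}_i^{(t-1)}\big)^2}
$$

For a fixed tree structure, with $G_j = \sum_{i \in I_j} g_i$ and $H_j = \sum_{i \in I_j} h_i$ summed over the samples $I_j$ in leaf $j$, the optimal leaf weight is:

$$
w_j^{*} = -\frac{G_j}{H_j + \lambda}
$$

Here $\gamma$ and $\lambda$ correspond to XGBoost's `gamma` and `lambda` parameters, and the random-forest-style sampling corresponds to `subsample` and `colsample_bytree`.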

CNN: Among all the models, the CNN performed second best. It learns directly from the embedding word vectors and extracts useful features automatically through the network, without manually constructed features. In the competition, I used a six-layer structure: the first two layers were convolutional layers, and the last four were fully connected (DNN) layers.

LSTM: In addition to the CNN, I also used an LSTM network, a special type of RNN whose memory cells can retain information across long word sequences. Because titles are short texts, the LSTM performed about as well as the CNN, but their different network structures make the two complementary.

Stacking: To further optimize accuracy, I used stacking to combine different types of algorithms so that they complement each other. Unlike boosting, which turns multiple weak learners into one strong learner, stacking combines multiple strong learners into an even stronger one. In this project, the first stacking layer combined the predictions of various models, such as XGBoost, CNN, LSTM, SVM, and Lasso regression. I then combined these model outputs with the original features as the input to the second layer. The design of the entire stacking training structure is as follows:

Image 2

As the figure shows, stacking is itself a kind of network structure. In the competition, I used a two-layer structure, which provided high accuracy. However, because intermediate features must be generated for every model and every data sample, the implementation complexity of stacking grows quickly as the number of models and layers increases.
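The intermediate features for the second layer are usually produced out of fold, so that no sample is predicted by a model that saw its label. The sketch below shows that step for a two-layer stack under stated assumptions: contiguous, unshuffled folds, any model object exposing `fit(X, y)` and `predict(X)`, and a toy `MeanRegressor` standing in for XGBoost, CNN, LSTM, SVM, or Lasso. The second-layer model (XGBoost in the article) would then be trained on the rows returned by `second_layer_inputs`.

```python
from typing import Callable, List, Sequence


class MeanRegressor:
    """Toy first-layer model: predicts the mean training label."""

    def fit(self, X, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, X):
        return [self.mean_ for _ in X]


def kfold_indices(n: int, k: int) -> List[List[int]]:
    """Split range(n) into k contiguous folds (no shuffling, for clarity)."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for s in sizes:
        folds.append(list(range(start, start + s)))
        start += s
    return folds


def out_of_fold(factory: Callable[[], object], X: Sequence[list],
                y: Sequence[float], k: int) -> List[float]:
    """Each sample is predicted by a model trained on the other folds only."""
    oof = [0.0] * len(X)
    for fold in kfold_indices(len(X), k):
        held = set(fold)
        train = [i for i in range(len(X)) if i not in held]
        model = factory().fit([X[i] for i in train], [y[i] for i in train])
        for i, p in zip(fold, model.predict([X[i] for i in fold])):
            oof[i] = p
    return oof


def second_layer_inputs(factories, X, y, k):
    """Append each first-layer model's out-of-fold prediction to the original features."""
    columns = [out_of_fold(f, X, y, k) for f in factories]
    return [list(x) + [col[i] for col in columns] for i, x in enumerate(X)]
```

Every extra first-layer model costs `k` more training runs, which is one concrete source of the growing complexity described above.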

Here are some lessons and experiences from this competition that I think are worth sharing.

The solution design above formed the basis of my final competition version. However, this design alone did not improve results significantly. One characteristic I found is that, for a single title, features within a similar feature set express almost the same information, yet they are still complementary. Take redundancy as an example: intuitively, a similarity-based definition or a word-overlap definition alone should be enough to express redundancy. In actual testing, however, many such features were clearly very similar or almost identical, such as the proportion and number of unique words, the proportion and number of words with a similarity below 0.4, the maximum similarity, the number of overlapping words, and the number of overlapping bigrams.

Even so, accumulating a large number of these redefined features, computed after cleaning the sentences, improved results significantly. As for why, I think natural-language features are inherently multi-dimensional: these features are not independently distributed but complement one another. I view this complementarity as a projection of language in a high-dimensional space; word-level features in particular may complement each other in that space once many similar features are accumulated. So in the competition, I used many nearly identical features, and this was one of the keys to improvement.

Not all algorithms improve significantly after feature accumulation. For example, SVM and LR improved very little even with a large number of features, while boosting models benefited the most. This is no coincidence: boosting focuses on hard samples or fits the residuals, so a large number of similar features plays a significant role in further iterative optimization.

In title classification, the data also has an apparent hierarchical distribution: one type of feature separates one layer of the data, and another type separates another layer. According to my visual analysis during the competition, the hardest layer to classify accounted for only 1% of all samples. Besides boosting, CNN and LSTM are also well suited to feature accumulation; for them, the system used the raw word vectors as features.

During testing in the competition, I found that many titles were very similar, some differing only by a bracket, yet they were labeled differently.

I think some of these labels were wrong. This does not happen often, and since the labels come from manual annotation, a small percentage of mislabeling is to be expected. A more practical approach is therefore to identify such inaccurate samples and remove the erroneous labels from the training data before training. This better matches real-world scenarios and can improve results.
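One simple way to flag such samples is sketched below: normalize titles so that differences like brackets and punctuation disappear, then look for normalized titles that carry different labels. The normalization rule and the choice to drop every copy in a conflicting group are assumptions; since the sketch cannot tell which copy is mislabeled, it removes them all, whereas a finer rule (for example, keeping the majority label) may be preferable.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple


def normalize_title(title: str) -> str:
    """Lowercase and drop brackets/punctuation so near-identical titles collide."""
    title = re.sub(r"[^\w\s]", " ", title.lower())
    return " ".join(title.split())


def conflicting_groups(samples: List[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Normalized titles whose copies carry different labels (candidate label noise)."""
    labels = defaultdict(list)
    for title, label in samples:
        labels[normalize_title(title)].append(label)
    return {t: ls for t, ls in labels.items() if len(set(ls)) > 1}


def drop_conflicts(samples: List[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Remove every sample whose normalized title has conflicting labels."""
    bad = conflicting_groups(samples)
    return [(t, l) for t, l in samples if normalize_title(t) not in bad]
```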

In this competition, I achieved relatively high accuracy with the models and feature methods described above. Along the way, I also distilled some general frameworks and experience. This training approach is a basic building block that can be used both in daily work and in cutting-edge exploration.

This competition also suggested some ways to improve basic training capabilities. For example, here are my ideas for optimizing a training framework from a general perspective:

The winners of the 2018 CIKM AnalytiCup will be announced in August 2018.
