In 2012, Geoffrey Hinton changed the way machines "see" the world. Together with two of his students, Alex Krizhevsky and Ilya Sutskever, Hinton published a paper titled ImageNet Classification with Deep Convolutional Neural Networks. The paper proposed a deep convolutional neural network, which came to be known as AlexNet and won first prize in a large-scale image recognition competition that year. AlexNet reduced the top-1 and top-5 error rates to 37.5% and 17.0% respectively, a significant improvement in image recognition accuracy. Following this success, Hinton joined Google Brain, and AlexNet became one of the classic image recognition models widely used in industry.

In 2017, together with two colleagues at Google Brain, Sara Sabour and Nicholas Frosst, Hinton published the paper Dynamic Routing Between Capsules. The team proposed a new neural network model called the Capsule Network, which achieves better results on specific tasks than traditional convolutional neural networks (CNNs). Unlike a CNN, the Capsule Network helps machines understand images by giving them a new perspective, similar to the three-dimensional perspective that humans have.

Although there is much room for improvement, the Capsule Network has reached state-of-the-art accuracy on MNIST, according to an analysis by Aurélien Géron, the author of Hands-On Machine Learning with Scikit-Learn and TensorFlow. Its performance on the CIFAR10 dataset can still be improved, which leaves promising room for the development of the Capsule Network.

The Capsule Network also requires less training data. Its mapping is equivariant, so position and pose information is preserved, which is promising for image segmentation and object detection. In addition, routing by agreement works well for overlapping objects. Capsule activations map the hierarchy of parts, assigning each part to a whole. The network is robust to rotation, translation and other affine transformations, and its activation vectors are easier to interpret. Finally, as an idea from Hinton himself, "it is undoubtedly forward-looking."
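The routing-by-agreement idea mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration based on the procedure described in Dynamic Routing Between Capsules, not the authors' implementation. The names `squash`, `route` and `u_hat`, and the nested-list data layout, are assumptions made for this example.

```python
import math

def squash(s):
    """Shrink vector s so its length lies in [0, 1) while keeping its direction."""
    sq_norm = sum(x * x for x in s)
    if sq_norm == 0.0:
        return [0.0 for _ in s]
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm)
    return [scale * x for x in s]

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def route(u_hat, iterations=3):
    """Routing by agreement.

    u_hat[i][j] is the prediction vector that lower capsule i makes for
    upper capsule j (hypothetical layout chosen for this sketch).
    Returns the upper capsule outputs v and the coupling coefficients c.
    """
    n_in, n_out = len(u_hat), len(u_hat[0])
    dim = len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # routing logits start at zero
    for _ in range(iterations):
        # Each lower capsule distributes its output over the upper capsules.
        c = [softmax(row) for row in b]
        v = []
        for j in range(n_out):
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_in))
                   for d in range(dim)]
            v.append(squash(s_j))
        # Predictions that agree with an upper capsule's output get more weight.
        for i in range(n_in):
            for j in range(n_out):
                b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v, c
```

When several lower capsules predict the same vector for one upper capsule, their coupling coefficients toward it grow with each iteration, and the length of that capsule's output vector (read as the probability that the entity is present) increases.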

The New York Times recently published an article about a visit to Hinton's laboratory in Toronto. The reporter interviewed Hinton and Sara Sabour, a co-author of Dynamic Routing Between Capsules, who described her ambitious vision for the Capsule Network.

Can you combine the two models in the picture into a pyramid? This seemingly simple task is beyond the capabilities of most computers, and even humans. Image source: New York Times

If a traditional neural network is trained on images that show a coffee cup only from the side, for example, it is unlikely to recognize a coffee cup turned upside down. This is the limitation of traditional CNNs. Hinton wants to use the Capsule Network to give machines human-like 3D vision.

In the report, the reporter described a two-piece pyramid puzzle held by Hinton and Sabour, as shown in the above picture. The two gypsum models can actually be put together to form a tetrahedral pyramid. Conceptually, it doesn't seem too hard, but most people actually fail this test, including the reporter and two tenured professors at the Massachusetts Institute of Technology. One declined to try, and the other insisted it wasn't possible.

Mr. Hinton explained, "We picture the whole thing sitting in three-dimensional space. And because of the way the puzzle cuts the pyramid in two, it prevents us from picturing it in 3-D space as we normally would."

With his capsule networks, Mr. Hinton aims to finally give machines the same three-dimensional perspective that humans have, allowing them to recognize a coffee cup from any angle after learning what it looks like from only one.

While overcoming the shortcomings of traditional neural networks, Hinton has also been devoted to understanding deep neural networks.

Recently, the 16th International Conference of the Italian Association for Artificial Intelligence (AIIA 2017) was held. The workshop on Comprehensibility and Explanation in AI and ML (CEX) was held concurrently. As its name implies, the CEX workshop addresses fundamental questions about the nature of "comprehensibility" and "explanation" in an AI and ML context, from both a theoretical and an applied perspective. It presents research into philosophical approximations of what an explanation in AI and ML is (or can be) and how the comprehensibility of an intelligent system can be formally defined, alongside work addressing practical questions such as how to assess a system's comprehensibility from a psychological perspective and how to design and build more explainable AI and ML systems.

Hinton and his Google Brain colleague Nicholas Frosst submitted a paper to the workshop entitled "Distilling a Neural Network Into a Soft Decision Tree".

Summary of the Paper

Deep neural networks have proved to be a very effective way to perform classification tasks. They excel when the input data is high dimensional, the relationship between the input and the output is complicated, and the number of labeled training examples is large. But it is hard to explain why a learned network makes a particular classification decision on a particular test case. This is due to their reliance on distributed hierarchical representations. If we could take the knowledge acquired by the neural net and express the same knowledge in a model that relies on hierarchical decisions instead, explaining a particular decision would be much easier. The paper describes a way of using a trained neural net to create a type of soft decision tree that generalizes better than one learned directly from the training data.

The excellent generalization abilities of deep neural nets depend on their use of distributed representations in their hidden layers, but these representations are hard to understand. For the first hidden layer we can understand what causes an activation of a unit and for the last hidden layer we can understand the effects of activating a unit, but for the other hidden layers it is much harder to understand the causes and effects of a feature activation in terms of variables that are meaningful such as the input and output variables.

Also, the units in a hidden layer factor the representation of the input vector into a set of feature activations in such a way that the combined effects of the active features can cause an appropriate distributed representation in the next hidden layer. This makes it very difficult to understand the functional role of any particular feature activation in isolation since its marginal effect depends on the effects of all the other units in the same layer.

These difficulties are further compounded by the fact that deep neural nets can make decisions by modeling a very large number of weak statistical regularities in the relationship between the inputs and outputs of the training data. However, there is nothing in the neural network to distinguish the weak regularities that are true properties of the data from the spurious regularities that are created by the sampling peculiarities of the training set. Faced with all these difficulties, it seems wise to abandon the idea of trying to understand how a deep neural network makes a classification decision by understanding what the individual hidden units do.

By contrast, it is easy to explain how a decision tree makes any particular classification because this depends on a relatively short sequence of decisions and each decision is based directly on the input data. Decision trees, however, do not usually generalize as well as deep neural nets. Unlike the hidden units in a neural net, a typical node at the lower levels of a decision tree is only used by a very small fraction of the training data so the lower parts of the decision tree tend to overfit unless the size of the training set is exponentially large compared with the depth of the tree.
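The overfitting argument above comes down to simple arithmetic, sketched below under an idealized assumption: a balanced binary tree that splits the data evenly at every node. The function name `examples_per_node` is made up for this illustration, and 60,000 is used because it is the size of the MNIST training set.

```python
def examples_per_node(n_train, depth):
    """Average number of training examples reaching one node at a given depth,
    assuming a balanced binary tree with perfectly even splits (an idealization)."""
    return n_train / (2 ** depth)

# Each extra level halves the data available to a node.
for depth in (2, 4, 8, 12):
    print(depth, examples_per_node(60000, depth))
```

Because the count halves with every level, keeping the number of examples per node constant requires a training set that doubles with each added level of depth.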

In this paper, the authors propose a novel way of resolving the tension between generalization and interpretability. Instead of trying to understand how a deep neural network makes its decisions, they use the deep neural network to train a decision tree that mimics the input-output function discovered by the neural network but works in a completely different way. The result is a model whose decisions are interpretable.

Classification using a soft decision tree

The image above shows the visualization of a soft decision tree of depth 4 trained on MNIST. The images at the inner nodes are the learned filters, and the images at the leaves are visualizations of the learned probability distribution over classes. The final most likely classification at each leaf, as well as the likely classifications at each edge, are annotated. If we take, for example, the rightmost internal node, we can see that at that level of the tree the potential classifications are only 3 or 8, so the learned filter is simply learning to distinguish between those two digits. The result is a filter that looks for the presence of two areas that would join the ends of the 3 to make an 8.

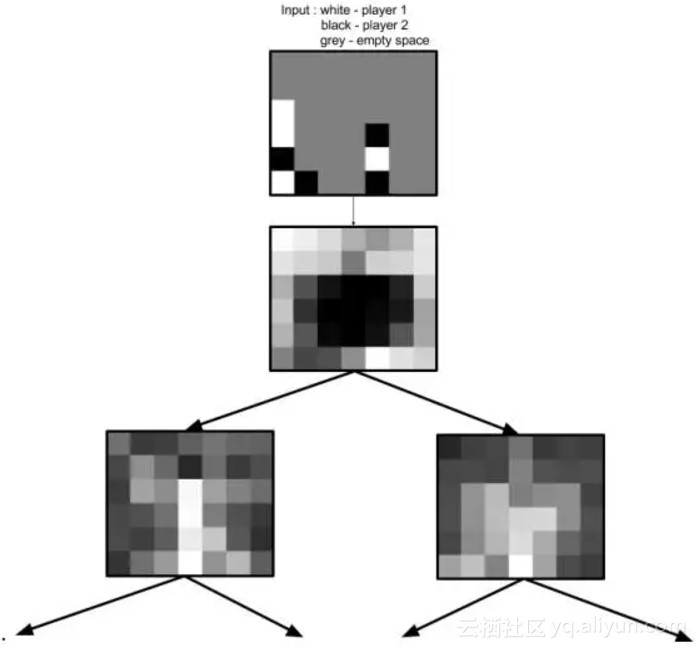

Analyzing the image of a Connect4 game

This is a visualization of the first 2 layers of a soft decision tree trained on the Connect4 data set. From examining the learned filters we can see that the game can be split into two distinct subtypes: games where the players have placed pieces on the edges of the board, and games where the players have placed pieces in the center of the board.

The main motivation behind this work was to create a model whose behavior is easy to explain. To fully understand why a particular example was given a particular classification, one can simply examine all the learned filters along the path between the root and the classification's leaf node. The crux of this model is that it does not rely on hierarchical features; it relies on hierarchical decisions instead. The hierarchical features of a traditional neural network allow it to learn robust and novel representations of the input space, but past a level or two, they become extremely difficult to engage with.
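To make the idea of following a path from the root to a leaf concrete, here is a minimal sketch of the forward pass of a soft decision tree. It assumes that each inner node applies a learned filter followed by a sigmoid to get the probability of branching right, that each leaf holds a fixed distribution over classes, and that the prediction comes from the leaf with the greatest path probability. The class and function names are hypothetical, and training is not shown.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

class Leaf:
    """Holds a learned distribution over classes (stored here as logits)."""
    def __init__(self, logits):
        self.distribution = softmax(logits)

class Inner:
    """Holds a learned filter (weights, bias) and two children."""
    def __init__(self, weights, bias, left, right):
        self.weights, self.bias = weights, bias
        self.left, self.right = left, right

def leaf_paths(node, x, prob=1.0, path=()):
    """Yield (path_probability, path, leaf) for every leaf under node."""
    if isinstance(node, Leaf):
        yield prob, path, node
        return
    # Probability of taking the right branch at this node.
    p_right = sigmoid(sum(w * xi for w, xi in zip(node.weights, x)) + node.bias)
    yield from leaf_paths(node.left, x, prob * (1.0 - p_right), path + ("left",))
    yield from leaf_paths(node.right, x, prob * p_right, path + ("right",))

def classify(root, x):
    """Return (label, path, path_probability) for the most probable leaf."""
    prob, path, leaf = max(leaf_paths(root, x), key=lambda t: t[0])
    label = max(range(len(leaf.distribution)), key=leaf.distribution.__getitem__)
    return label, path, prob

# Tiny hand-built tree with one decision and two classes (illustrative values).
root = Inner([1.0, -1.0], 0.0, Leaf([2.0, 0.0]), Leaf([0.0, 2.0]))
print(classify(root, [3.0, 0.0]))
```

The returned `path` is the explanation: the sequence of filters that the input passed through, each of which can be inspected directly against the input.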

In the paper, the authors note, "Some current attempts at explanations for neural networks rely on the use of gradient descent to find an input that particularly excites a given neuron, but this results in a single point on a manifold of inputs, meaning that other inputs could yield the same pattern of neural excitement, and so it does not reflect the entire manifold". Ribeiro et al. propose a strategy that fits an explainable model, one which "acts over absence/presence of interpretable components", to the behavior of a deep neural net around some area of interest in the input space. This is accomplished by sampling from the input space, querying the model around the area of interest, and then fitting an explainable model to the output of the model. This avoids the problem of attempting to explain a particular output by visualizing a single point on a manifold, but it introduces two new problems: a new explainable model is needed for every area of interest in the input space, and changes in the model's behavior must be explained by first order changes in a discretized interpretation of the input space. "By relying on hierarchical decisions instead of hierarchical features we side-step these problems, as each decision is made at a level of abstraction that the reader can engage with directly."
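The sample, query and fit strategy attributed to Ribeiro et al. above can be sketched as follows. This is a simplified illustration in the spirit of that approach, not their algorithm or library. The binary presence/absence masks, the exponential proximity kernel, the weighted least squares fit and every name in the code are assumptions made for this example.

```python
import math
import random

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x

def local_surrogate(black_box, n_components, n_samples=500, width=1.0, seed=0):
    """Fit a weighted linear model to black_box around the all-present instance.

    black_box takes a list of 0/1 flags (component absent/present) and
    returns a number. Returns (intercept, per-component weights).
    """
    rng = random.Random(seed)
    dim = n_components + 1  # extra column for the intercept
    xtwx = [[0.0] * dim for _ in range(dim)]
    xtwy = [0.0] * dim
    for _ in range(n_samples):
        mask = [rng.randint(0, 1) for _ in range(n_components)]
        removed = n_components - sum(mask)  # distance from the original instance
        weight = math.exp(-(removed / width) ** 2)  # nearby samples count more
        row = [1.0] + [float(z) for z in mask]
        y = black_box(mask)  # query the model being explained
        for i in range(dim):
            xtwy[i] += weight * row[i] * y
            for j in range(dim):
                xtwx[i][j] += weight * row[i] * row[j]
    coeffs = solve(xtwx, xtwy)
    return coeffs[0], coeffs[1:]
```

The fitted weights describe the model only near the chosen instance, which is the limitation the paper points out: a different area of interest needs a new surrogate.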

If there is a large amount of unlabeled data, the neural net can be used to create a much larger labeled data set to train a decision tree, thus overcoming the statistical inefficiency of decision trees. Even if unlabeled data is unavailable, it may be possible to use recent advances in generative modeling (such as GANs) to generate synthetic unlabeled data from a distribution that is close to the data distribution. Moreover, even without unlabeled data, it is still possible to transfer the generalization abilities of the neural net to a decision tree by using a technique called distillation (Hinton et al., 2015; Buciluǎ et al., 2006) and a type of decision tree that makes soft decisions.
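The core of distillation is to train the student model (here, the tree) on the teacher's full output distribution rather than on hard labels. The sketch below shows how soft targets can be produced and scored. The temperature parameter follows the general distillation literature, and the logits and function names are invented for illustration; it is not a description of the exact training setup used in the paper.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(target, predicted, eps=1e-12):
    """Loss the student minimizes so that its distribution matches the soft target."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))

teacher_logits = [6.0, 2.0, 1.0]  # hypothetical output of a trained neural net
hard = softmax_with_temperature(teacher_logits, temperature=1.0)
soft = softmax_with_temperature(teacher_logits, temperature=4.0)
print(hard)  # nearly one-hot: little information about the other classes
print(soft)  # softer: reveals which wrong classes the teacher finds plausible
```

The soft target carries more information per training example than a one-hot label, which is how the neural net's generalization can be passed on to a model that would otherwise need far more data.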

What's the latest progress in Hinton's work? Let's turn to the New York Times story.

Hinton believes that the Capsule Network will eventually be applicable beyond computer vision and have much broader prospects, including conversational computing. Hinton knows that many people are skeptical about the Capsule Network, just as many people were skeptical about neural networks five years ago. According to the NYT report, Hinton is confident that history will repeat itself.
