Download the "Cloud Knowledge Discovery on KDD Papers" whitepaper to explore 12 KDD papers and knowledge discovery from 12 Alibaba experts.

By Wenhui Yu, Xiao Lin (Hanchi), Junfeng Ge (Beili), Wenwu Ou (Santong), and Zheng Qin

Recommendation algorithms are an important application of machine learning, and their data differs from that of other machine learning tasks in two ways. First, data sparsity caused by the long-tail effect of user behaviors is an inherent challenge in recommender systems. Second, recommendation datasets rely heavily on implicit feedback: users' preferences for recommended items are inferred from collected behaviors rather than obtained by asking users directly. The community has proposed many approaches to tackle these two problems, including a good deal of classic work, but neither problem has been completely solved.

To cope with data sparsity, the community usually introduces additional information to assist collaborative filtering, such as rich side information like text, tags, and images. For implicit feedback, a common practice is negative sampling, which generates negative samples to help models learn. However, many potential positive samples are then mislabeled as negative, and the resulting label noise can seriously mislead the model.
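To make the label-noise issue concrete, here is a minimal sketch of uniform negative sampling on implicit feedback. The function name `sample_negatives`, the toy data, and the uniform sampling strategy are illustrative assumptions, not the procedure used in the paper.

```python
import random

def sample_negatives(interactions, all_items, num_neg=1, seed=0):
    """Pair each observed (user, item) with randomly drawn unobserved items.

    interactions: dict mapping user -> set of items the user interacted with.
    Returns (user, item, label) triples: label 1 = observed, 0 = sampled negative.
    """
    rng = random.Random(seed)
    samples = []
    for user, positives in interactions.items():
        # Every item the user has NOT interacted with is a candidate negative.
        candidates = [i for i in all_items if i not in positives]
        for item in sorted(positives):
            samples.append((user, item, 1))
            k = min(num_neg, len(candidates))
            for neg in rng.sample(candidates, k):
                samples.append((user, neg, 0))
    return samples

# Hypothetical toy data: two users, five items.
interactions = {"u1": {"i1", "i2"}, "u2": {"i3"}}
all_items = ["i1", "i2", "i3", "i4", "i5"]
samples = sample_negatives(interactions, all_items, num_neg=2)
```

Note that an item labeled 0 here is only unobserved, not necessarily disliked. If `u2` would in fact enjoy `i4`, the sampler still marks the pair as negative, which is exactly the label noise described above.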

Building on existing work, we proposed a transfer learning approach that addresses both problems. The model transfers knowledge from a data-rich source domain to a data-sparse target domain. We also considered an extreme scenario: transferring knowledge between two domains that share no users or items. Meanwhile, we performed negative sampling only in the source domain and then transferred the knowledge of negative samples to the target domain. This avoids performing negative sampling directly in the target domain while still allowing effective learning there.

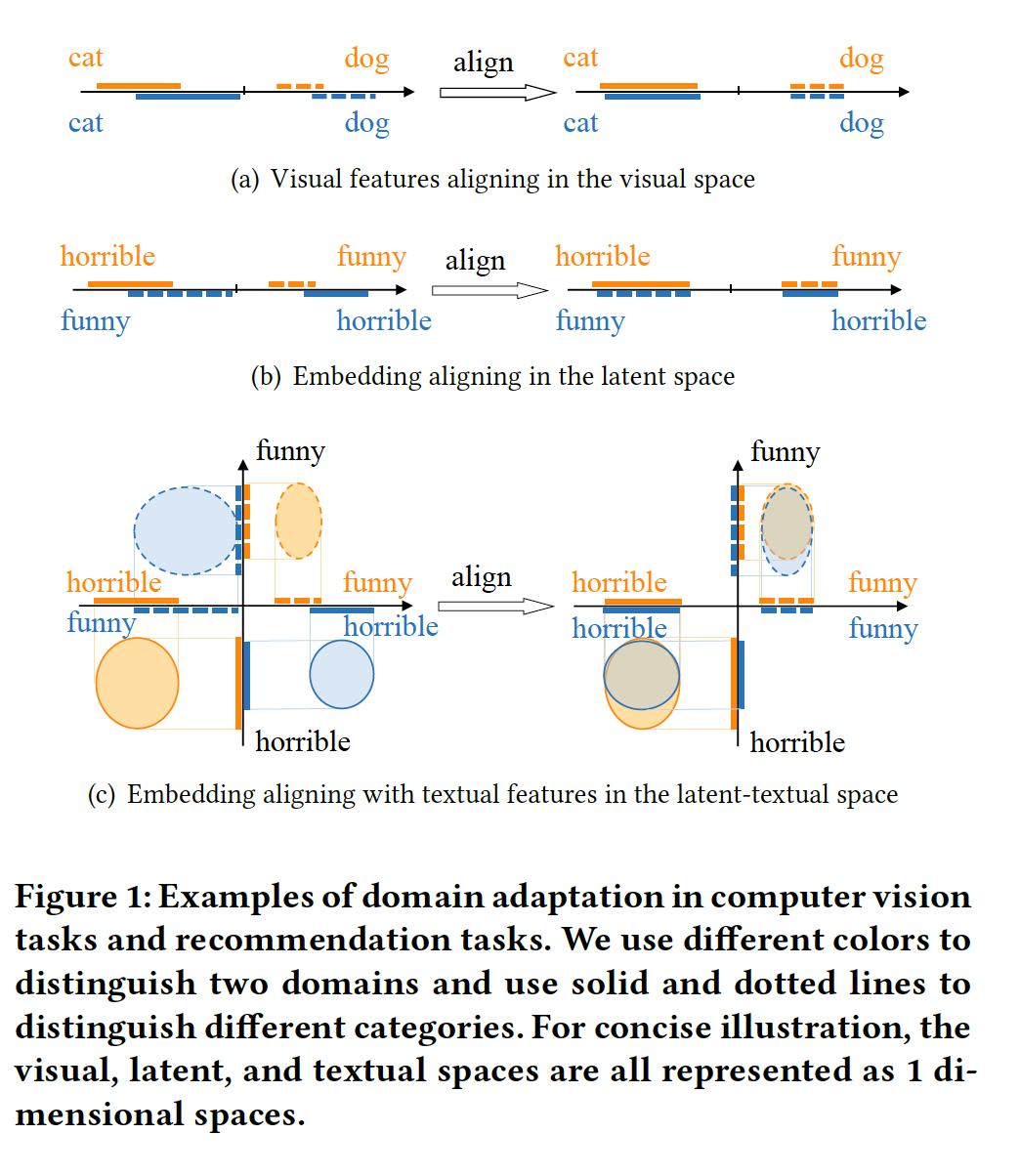

A recommendation algorithm relies heavily on user and item representations, or embeddings. Most common transfer learning algorithms are based on latent embedding spaces shared across domains. However, embeddings in recommendation algorithms are distributed in latent spaces that have no specific semantics, so sharing embeddings directly across domains may misalign them. This is quite different from sharing latent layers in computer vision (CV) tasks. In image tasks, the contour, color, and other information of an image have specific meanings: cat or dog images in two datasets are similar in contour and texture. In recommendation tasks, no individual embedding dimension has a fixed meaning, so the embedding vector of a horror movie in the source domain may be very similar to that of a comedy movie in the target domain, and direct transfer will cause a large semantic gap. To this end, we introduced textual information from reviews and aligned the embeddings of each domain with the semantic space of the corresponding text. We ensured that the embeddings of horror movies in both domains were close to the word "horrible," so the embeddings of the two domains could be aligned in a common space more accurately.
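One way to see why embedding dimensions carry no fixed meaning is the following small sketch. It assumes a dot-product scoring model, as in matrix factorization; the vectors and the rotation angle are made up for illustration. Rotating every user and item vector by the same angle leaves all predicted scores unchanged, so two independently trained domains can end up in arbitrarily rotated coordinate systems.

```python
import math

def dot(a, b):
    """Dot-product score between a user vector and an item vector."""
    return sum(x * y for x, y in zip(a, b))

def rotate(vec, theta):
    """Rotate a 2-D vector by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * vec[0] - s * vec[1], s * vec[0] + c * vec[1]]

# Hypothetical 2-D embeddings for one user and one item.
user = [1.0, 0.5]
item = [0.2, 0.9]
theta = math.pi / 3

score_original = dot(user, item)
# Apply the same rotation to both vectors: the coordinates change,
# but the predicted score does not.
rotated_user = rotate(user, theta)
rotated_item = rotate(item, theta)
score_rotated = dot(rotated_user, rotated_item)
```

Because the training objective cannot distinguish these two solutions, the coordinates of a source-domain embedding say nothing by themselves about the coordinates of a target-domain embedding. Anchoring both domains to a shared text space is one way to remove this ambiguity.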

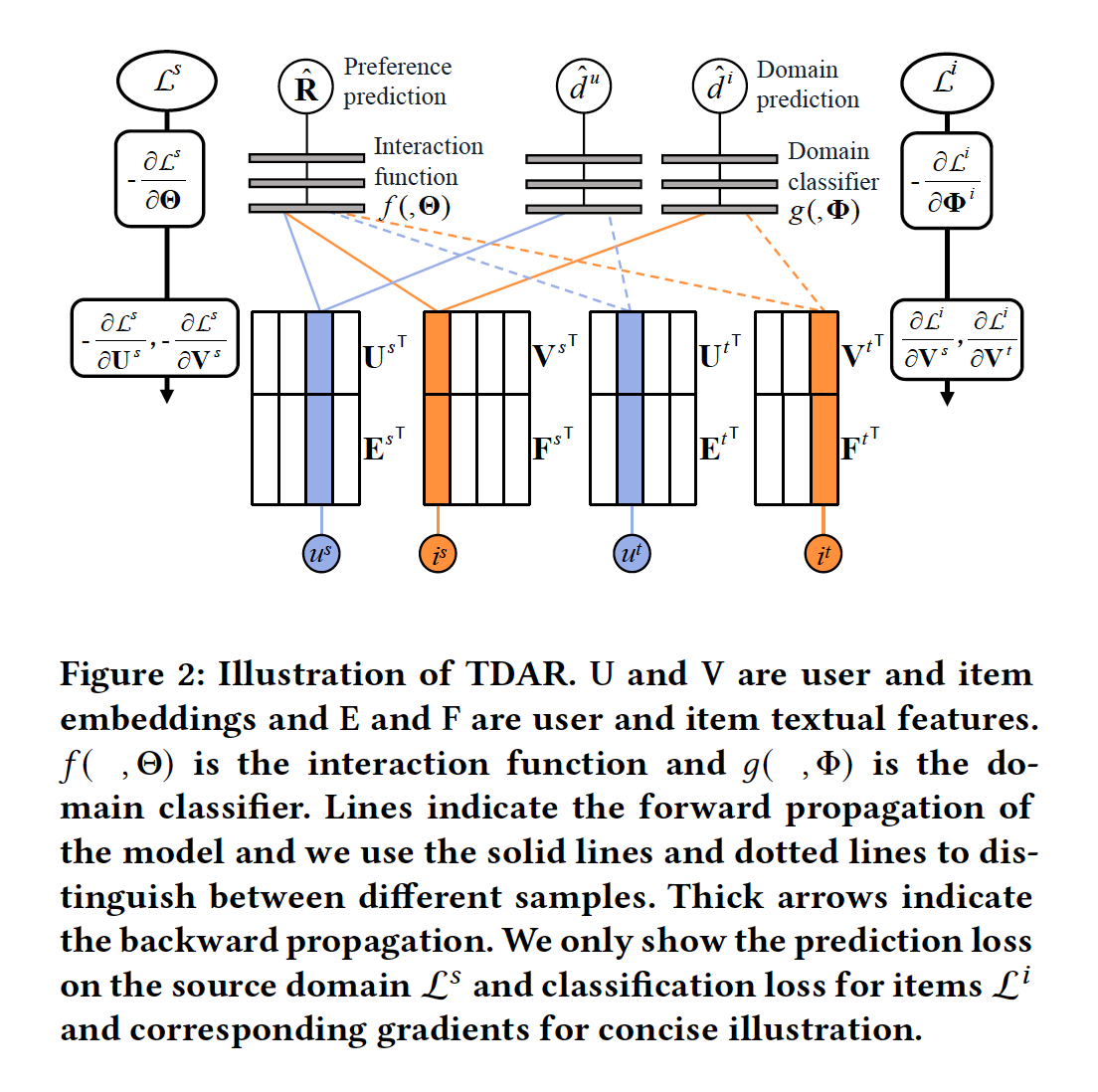

Therefore, we designed a text-based, semi-supervised recommendation algorithm that uses transfer learning, and named it the Text-Enhanced Domain Adaptation Recommendation (TDAR) algorithm. First, we constructed implicit representations of users and items in the textual space of each domain. Specifically, we used a memory network to model the representation of each user and item as a linear combination of the embeddings of words in the review text. Next, we trained these representations on a text-based recommendation task. Then, following the classic adversarial training used in domain adaptation, we designed a domain classifier and a collaborative filtering module. The domain classifier used adversarial training to align the user and item embeddings with the textual representations, so that the user and item embeddings of the source domain lie in the same space as those of the target domain. To ensure that the embeddings of the two domains are aligned based on text, we concatenated the text-based representations of users and items with their embeddings and fed the concatenated vectors to the domain classifier for alignment.
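The text representation and concatenation steps can be sketched roughly as follows. This is a simplified illustration, not the paper's implementation: the softmax attention over dot products, the `query` vector, and all numeric values are assumptions chosen to show what "a linear combination of word embeddings" and "concatenated with the embeddings" look like in practice.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def text_representation(query, word_embeddings):
    """Attention-weighted linear combination of word embeddings.

    query: hypothetical per-user (or per-item) vector used to score each word.
    word_embeddings: vectors for the words in that user's (or item's) reviews.
    """
    scores = [sum(q * w for q, w in zip(query, word)) for word in word_embeddings]
    weights = softmax(scores)
    dim = len(word_embeddings[0])
    rep = [sum(a * word[d] for a, word in zip(weights, word_embeddings))
           for d in range(dim)]
    return rep, weights

# Hypothetical word embeddings for three review words, and a query vector.
word_embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [2.0, 0.0]
text_rep, weights = text_representation(query, word_embeddings)

# Hypothetical collaborative filtering embedding for the same user.
cf_embedding = [0.3, -0.1, 0.7]
# The domain classifier would receive the concatenation of both vectors.
classifier_input = text_rep + cf_embedding
```

Because the text representation is built from word embeddings, it lives in a space with shared semantics across domains. Feeding it to the domain classifier alongside the collaborative filtering embedding gives the classifier a common reference when it judges which domain a vector came from.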

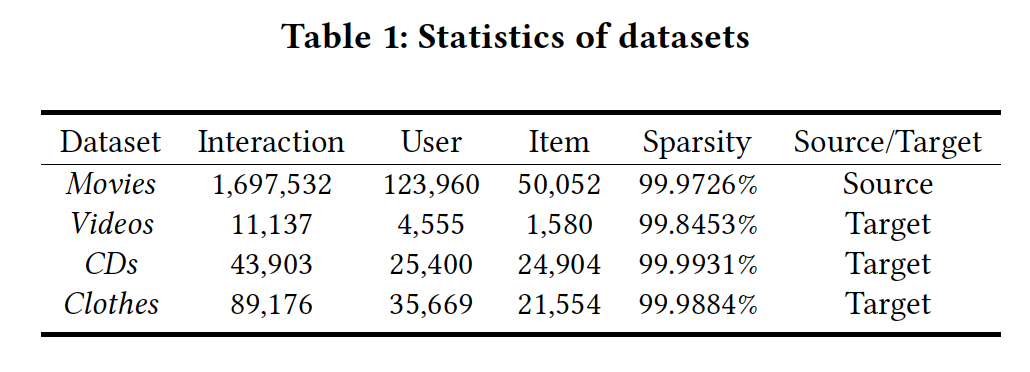

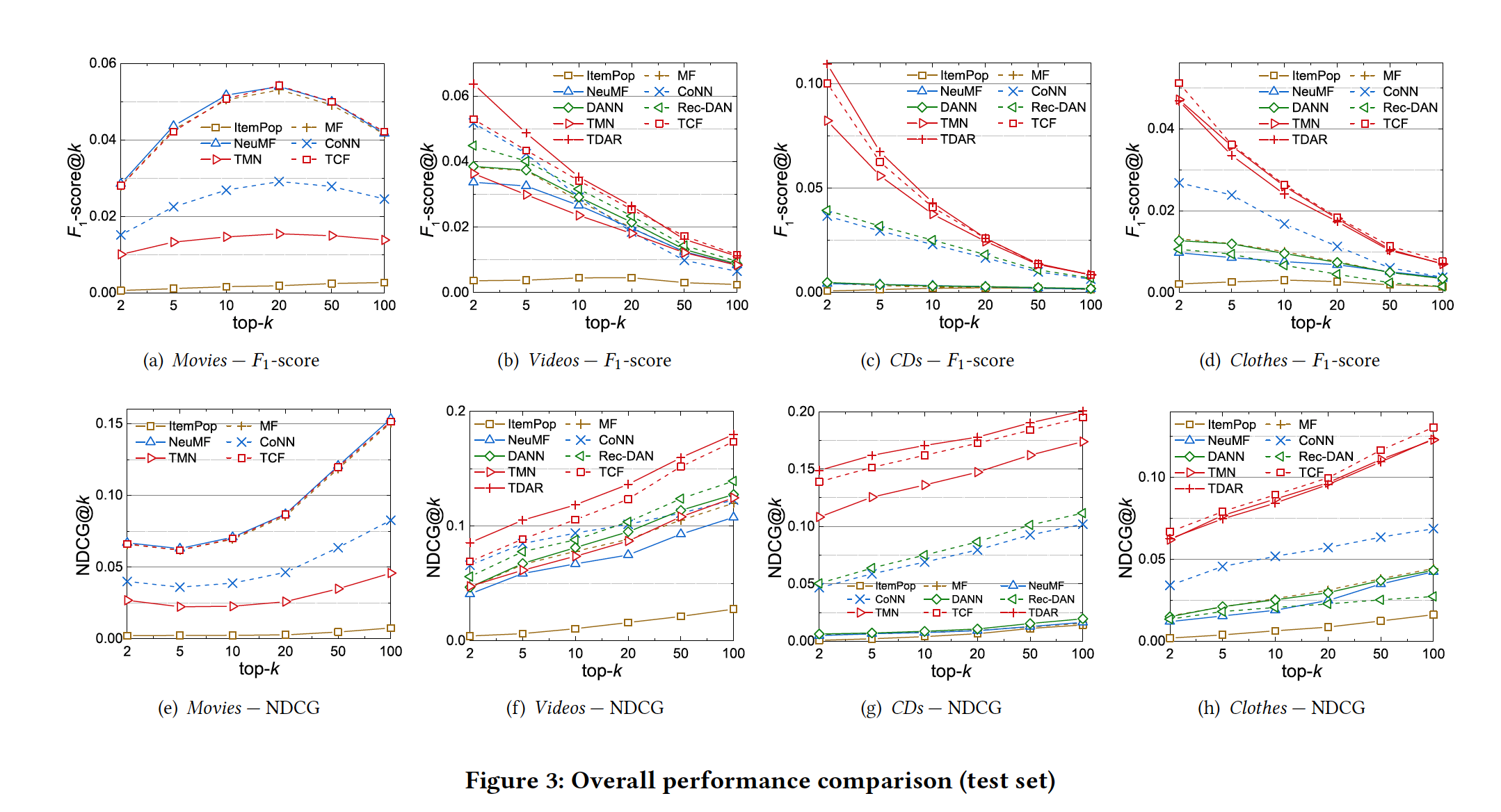

We conducted experiments on Amazon's public datasets, including movies, videos, CDs, and clothes, and deleted data with user or item overlap between the domains. We used "movies," the most data-rich domain, as the source domain and the other three as target domains. As baselines, we used Matrix Factorization (MF), a classic collaborative filtering algorithm; Neural Matrix Factorization (NeuMF), a deep learning algorithm; Deep Cooperative Neural Networks (DeepCoNN), a text-based recommendation algorithm; Domain Adversarial Neural Network (DANN), a classic adversarial learning algorithm; and Discriminative Adversarial Networks for Recommender Systems (Rec-DAN), a state-of-the-art cross-domain recommendation algorithm. The results show that our algorithm outperformed the baselines on multiple datasets.

We also found that our algorithm performed better when the source and target domains were similar. This matches our expectation, because knowledge transferred from a very different domain offers little benefit to the target domain. It suggests that the source domain should be chosen carefully to help learning.

Our solution of using textual information to align the representation spaces of users and items for cross-domain recommendation is highly scalable and offers the industrial community a practical direction for cross-domain recommendation. Since text is only one type of information, we will next focus on using other types of information to facilitate transfer learning in recommendation algorithms. Given its achievements in CV and natural language processing (NLP), we expect transfer learning to play an increasingly important role in future recommender systems.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
