
By Wang Menghan (Xiangyu), Lin Yujie (Wanrong), Lin Guli (Zhongning), Yang Keping (Shaoyao), Wu Xiao-ming

Combining graph representation learning with multi-view data for recommendation is a new trend in the industry. Most existing methods can be categorized as multi-view representation fusion: they integrate multi-view data into a single vector representation. However, this approach can lead to insufficient learning and introduce inductive bias. In this article, we take a multi-view representation alignment approach to address these issues. Specifically, we propose a multi-task multi-view graph representation learning framework (M2GRL) to learn node representations from multi-view graphs. M2GRL covers two types of tasks: intra-view tasks and inter-view tasks. Intra-view tasks learn node representations on homogeneous graphs, while inter-view tasks learn the relations between nodes in two different views. In addition, M2GRL applies homoscedastic uncertainty to adaptively tune the loss weights of the tasks during training, which helps the model converge better. In offline and online tests, the model significantly outperforms other industrial recommendation algorithms, and it has been applied to multiple services on Taobao. As far as we know, this is the first time the multi-view representation alignment method has been applied to large-scale graph representation learning in the industry.

On Taobao, diversity recommendation refers to recommending items from categories that a user has not clicked recently, and it is tracked as a metric. This metric is essentially equivalent to the novelty or diversity metrics used in academic communities. Below, a click on such an item is called a discovery click, and any other click is called a non-discovery click. Improving the Click-Through Rate (CTR) of diversity recommendations helps improve the long-term user experience on Taobao. A common industrial recommender system can be divided into two stages: matching and ranking. This article focuses on the matching stage, so our topic can be abstracted as diversity matching.

According to our analysis, the main problem with diversity matching is the significant sparsity of the data, which makes it difficult for models to learn item representations accurately. In practice, the discovery CTR is much lower than the non-discovery CTR, and modeling sparse data has always been a challenge. Meanwhile, diversity matching and basic matching are at odds. Basic matching takes all user clicks as positive samples to learn user preferences. Diversity matching, however, misses a large number of non-discovery clicks if it uses only discovery clicks as positive samples. These non-discovery clicks also contain a large amount of user preference information. If they are discarded or treated as negative samples, the learning ability of the model is greatly compromised. Resolving the mismatch between discovery click samples and user preferences, and reflecting it in the final loss function, remains a significant challenge.

We then examined whether our existing technologies could solve these problems. A feasible solution is to run item2item matching first and then filter out non-discovery items according to users' behavior sequences. However, the filtering step cannot be optimized by the model, so this method requires further consideration.

A natural way to improve the diversity metric is to recommend items in categories that the user has not clicked in recent days while keeping the CTR as high as possible. In a recommender system, matching determines the recommendation candidates, so we started with matching and defined this problem as diversity matching. Because user behavior data is sparse, traditional collaborative filtering (CF) techniques struggle to make good recommendations, especially in diversity matching. Item embeddings can reduce the impact of sparsity. There are many methods to learn item embeddings, and methods based on graph embedding are already widely used in the Group. As noted above, the remaining difficulty lies in the training samples: using only discovery clicks as positive samples discards the user preference information carried by non-discovery clicks, and this mismatch must somehow be reflected in the final loss function.

Then, we break down the problem into two parts: learning global basic representations and learning metrics of heterogeneous categories. This is similar to the currently popular idea of pretraining and finetuning in natural language processing (NLP). First, we learn global basic representations of items. Note that we use all user clicks here, both discovery and non-discovery. This way, we learn the global preferences of users without discarding useful information for the sake of diversity matching. Then, we use a heterogeneous metric learning model as a downstream task, which takes the pretrained representations as input and learns metrics of heterogeneous categories for matching. This decoupled design addresses the two challenges highlighted above: in the pretraining stage, all user clicks are taken into account, whereas in the finetuning stage, only the loss of diversity matching needs to be considered. In addition, we introduce more node information into the global representations, such as knowledge graphs, which helps model item representations and the potential connections behind cross-category user behaviors.

Next, we break down the problem further. First, we recast the problem as heterogeneous category matching. Diversity matching is a subset of heterogeneous category matching: every diversity match is a heterogeneous category match, but a heterogeneous category match is not necessarily a diversity match. Compared with basic matching followed by rule filtering, this further narrows the matching space. Second, within this narrower space, we apply the two-part decomposition described above: global basic representations are pretrained on all user clicks, and the heterogeneous metric learning model is finetuned with only the diversity matching loss.

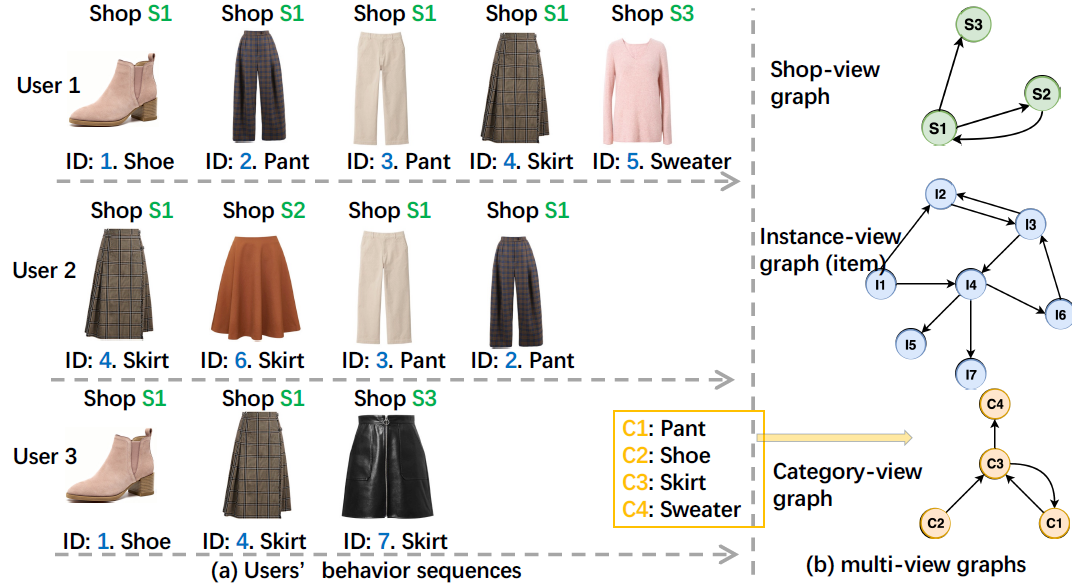

The preceding figure shows the idea behind our model. We built three homogeneous graphs based on user behavior sequences: the store sequence, the item sequence, and the category sequence. As the figure shows, the graphs vary in structure. Traditional methods that use only the item graph, or that build a single heterogeneous graph, lose this structural information. Our goal is to use it to learn better representations.
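As a minimal sketch of how one homogeneous graph per view could be derived from behavior sequences, the following code counts transitions between consecutive clicks in each view. The record keys (`item`, `category`, `shop`), the use of consecutive clicks as edges, and the removal of self-loops are all assumptions made for illustration; the article does not specify how the edges are actually constructed.

```python
from collections import Counter


def build_view_graphs(sessions):
    """Build one weighted, directed transition graph per view.

    `sessions` is a list of click sequences. Each click is a dict with the
    hypothetical keys "item", "category", and "shop".
    """
    views = ("item", "category", "shop")
    graphs = {view: Counter() for view in views}
    for clicks in sessions:
        # Pair every click with the click that follows it.
        for prev, curr in zip(clicks, clicks[1:]):
            for view in views:
                src, dst = prev[view], curr[view]
                if src != dst:  # assumption: drop self-loops
                    graphs[view][(src, dst)] += 1
    return graphs


sessions = [
    [
        {"item": "i1", "category": "c1", "shop": "s1"},
        {"item": "i2", "category": "c1", "shop": "s2"},
        {"item": "i3", "category": "c2", "shop": "s2"},
    ],
    [
        {"item": "i1", "category": "c1", "shop": "s1"},
        {"item": "i3", "category": "c2", "shop": "s2"},
    ],
]
graphs = build_view_graphs(sessions)
for view, edges in graphs.items():
    print(view, dict(edges))
```

Even on this toy input, the three graphs differ: the item graph has three distinct edges, while the category and shop graphs each collapse to a single, heavier edge. This is the kind of per-view structure that a single item graph would not expose.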

For convenience and consistency with our paper, from this point forward we refer to learning global basic representations as M2GRL and to learning metrics of heterogeneous categories as the multi-view metric model. Next, we describe each of them in detail.

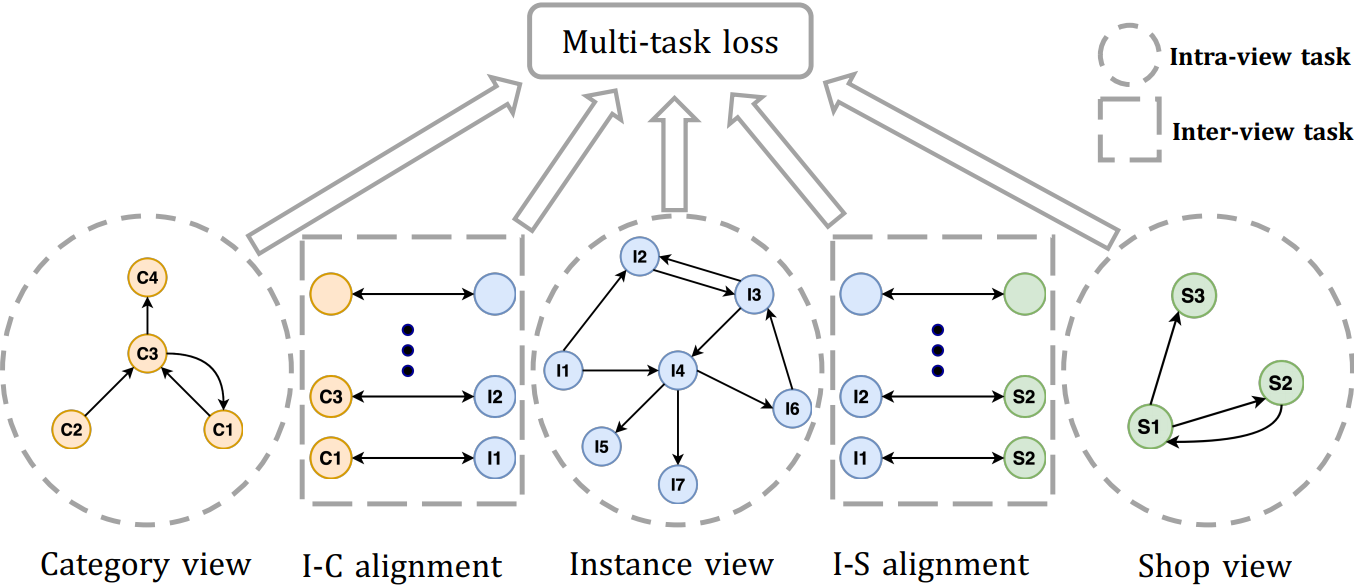

For M2GRL, we use information from cognitive graphs and focus on graph representation learning, which is currently popular. E-commerce scenarios feature a wide variety of information that describes items from different angles. This can be regarded as a multi-view learning problem, and the different features can be regarded as multi-view data. Current practices of large-scale graph representation learning use multi-view data in two ways. One is to build a network of items and introduce multi-view data into the network as item attributes for representation learning. The other is to create a heterogeneous graph containing items and multi-view data and then learn representations from the heterogeneous graph by using graph representation learning techniques, such as metapath2vec. Here we propose a new idea. First, we build multiple homogeneous graphs (such as a category graph, an item graph, and a shop graph) about e-commerce entities (single-view data), and learn representations from each graph. Then, we perform multi-view representation alignment according to the connections between different entities. Based on this idea, we propose M2GRL, a new multi-task multi-view graph representation learning framework. M2GRL contains two types of tasks: intra-view tasks and inter-view tasks. The graph model is shown in the following figure.

1. Intra-View Task

An intra-view task can be regarded as a representation learning problem on a homogeneous graph. In theory, we can use any graph representation learning algorithm to learn node representations in a homogeneous graph. In practice, we use the skip-gram model with negative sampling (SGNS). This model is highly scalable, easy to parallelize, and has proven effective.

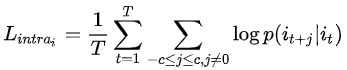

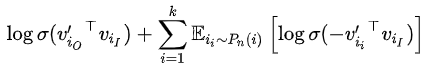

For a given behavior sequence, the skip-gram model maximizes the average log probability.

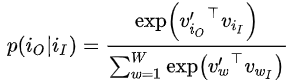

Here, the conditional probability of a context node given the center node is defined as follows.

We use the negative sampling method to approximately maximize the log probability of the function. The preceding formula can be transformed into the following form.
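The formulas in this part of the article are embedded as images. For reference, the skip-gram objective, its softmax probability, and the negative-sampling approximation can be written in their standard textbook forms as follows. The notation is an assumption made here and may differ from the paper: $v_1, \dots, v_N$ is the behavior sequence, $c$ is the window size, $\mathbf{e}_i$ and $\mathbf{u}_j$ are the center and context embeddings, $V$ is the node set, $K$ is the number of negative samples, and $P_n$ is the noise distribution.

```latex
% Average log probability over a sequence of length N with window size c
\[
\mathcal{L} = \frac{1}{N} \sum_{t=1}^{N}
  \sum_{\substack{-c \le j \le c \\ j \ne 0}} \log p(v_{t+j} \mid v_t)
\]

% Softmax definition of the conditional probability
\[
p(v_j \mid v_i) =
  \frac{\exp\left(\mathbf{u}_j^{\top} \mathbf{e}_i\right)}
       {\sum_{k \in V} \exp\left(\mathbf{u}_k^{\top} \mathbf{e}_i\right)}
\]

% Negative-sampling surrogate that replaces \log p(v_j \mid v_i)
\[
\log \sigma\left(\mathbf{u}_j^{\top} \mathbf{e}_i\right)
  + \sum_{k=1}^{K} \mathbb{E}_{v_k \sim P_n(v)}
    \left[ \log \sigma\left(-\mathbf{u}_k^{\top} \mathbf{e}_i\right) \right]
\]
```

The softmax denominator sums over every node in the graph, which is expensive at Taobao's scale. Negative sampling replaces it with $K$ sampled comparisons per positive pair.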

2. Inter-View Task

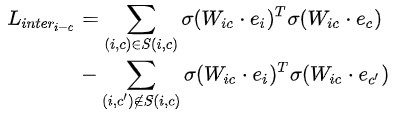

The goal of an inter-view task is to learn the relations between different entities. For example, the I-C alignment in the figure represents learning the relationship between items and categories. Items and categories are in different embedding spaces, so we need to map them to a common relational embedding space and learn their relations in that space. Specifically, we use an alignment transformation matrix W for the mapping. The loss of the inter-view task can then be expressed by the following formula.
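To make the alignment idea concrete, here is a minimal sketch of an inter-view loss for a single (item, category) pair. It assumes that both embeddings have the same dimension, that the same matrix `W` projects both of them into the relational space, and that the loss takes the same negative-sampling form as the intra-view task. These are illustrative assumptions, and the exact loss is the one given in the paper.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def project(W, v):
    """Map embedding v into the relational space (computes W @ v)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def inter_view_loss(W, item_vec, pos_cat_vec, neg_cat_vecs):
    """Negative-sampling alignment loss for one (item, category) pair."""
    z_item = project(W, item_vec)
    # Pull the item towards its true category in the relational space.
    loss = -math.log(sigmoid(dot(z_item, project(W, pos_cat_vec))))
    # Push the item away from sampled, unrelated categories.
    for neg in neg_cat_vecs:
        loss -= math.log(sigmoid(-dot(z_item, project(W, neg))))
    return loss


W = [[1.0, 0.0], [0.0, 1.0]]  # identity matrix, for illustration only
item = [1.0, 0.5]
true_category = [0.9, 0.4]
other_category = [-0.8, -0.3]

aligned = inter_view_loss(W, item, true_category, [other_category])
swapped = inter_view_loss(W, item, other_category, [true_category])
print(aligned < swapped)
```

In training, `W` would be learned jointly with the embeddings. Because the relation is learned in a separate projected space, the item and category embeddings themselves remain in their own view-specific spaces.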

3. Learning Task Weights with Homoscedastic Uncertainty

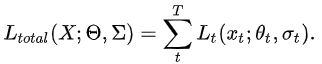

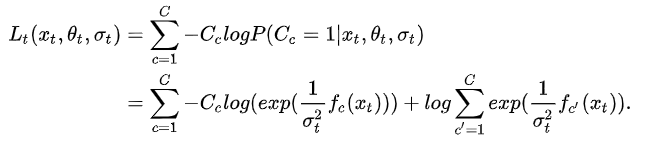

M2GRL requires a globally optimized loss function. A common practice is to perform a weighted linear combination. However, the weights are hyperparameters and require manual tuning, which is resource-consuming in scenarios with a large amount of data. Instead, we use the homoscedastic uncertainty idea to automatically weigh the loss of each task during model training. We assign a Gaussian likelihood distribution to the prediction result of each task and use its standard deviation as the noise disturbance to measure the uncertainty. Therefore, we rewrite the loss function as follows:

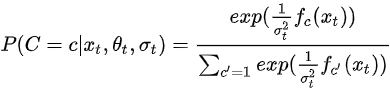

Both types of tasks can be regarded as category classification tasks. Therefore, we can use a softmax function to represent the likelihood.

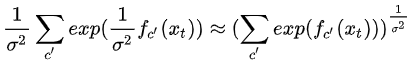

Substituting it into the loss function gives the following form:

After some simplification,

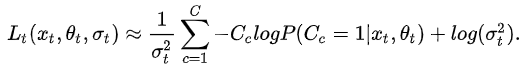

The final loss function is as follows:

In short, the reciprocal of the uncertainty (the variance) serves as the weight of each task, and the weights are not normalized. When a task has high uncertainty, its gradients carry large errors, so the task should receive a low weight. Conversely, when the uncertainty of a task is low, its weight increases. This way, the weights of multiple tasks are adjusted dynamically during training.
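The weighting scheme can be sketched in a few lines. The code below assumes the commonly used form of the uncertainty-weighted objective for classification-style tasks, in which each task contributes its loss divided by its variance plus a log standard deviation term that keeps the variance from growing without bound. Parameterizing each task by its log variance is an implementation choice assumed here for numerical stability, not something the article states.

```python
import math


def uncertainty_weighted_loss(task_losses, log_variances):
    """Combine task losses as sum_i( L_i / sigma_i^2 + log sigma_i ).

    Each task is parameterized by s_i = log(sigma_i^2), so that
    1 / sigma_i^2 = exp(-s_i) and log sigma_i = s_i / 2.
    """
    total = 0.0
    for loss, s in zip(task_losses, log_variances):
        total += math.exp(-s) * loss + 0.5 * s
    return total


def task_weights(log_variances):
    """Effective (unnormalized) weight of each task: 1 / sigma_i^2."""
    return [math.exp(-s) for s in log_variances]


losses = [2.0, 0.5]

# Unit variance for both tasks: the total is the plain sum of the losses.
confident = uncertainty_weighted_loss(losses, [0.0, 0.0])

# Variance 4 for the first task: its loss is down-weighted to a quarter.
uncertain_first = uncertainty_weighted_loss(losses, [math.log(4.0), 0.0])

print(confident, uncertain_first, task_weights([math.log(4.0), 0.0]))
```

In an actual training loop, the log variances would be trainable parameters updated by the same optimizer as the embeddings, so no manual tuning of task weights is needed.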

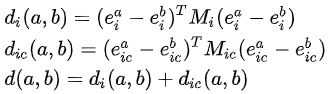

On Taobao, recommendation diversity is an important metric for measuring long-term user experience. Customers get bored if the recommender system keeps recommending similar items in the same category, such as the same skirt in different colors. Diversity recommendation on Taobao means that the virtual categories of the recommended items do not appear in the user's behavior logs of the past 15 days. In traditional recommendation algorithms, items of the same category tend to have a higher similarity score, which contradicts the goal of diversity matching. We believe that the multiple representations learned by the M2GRL model can be used for diversity matching. Heuristically, diversity recommendation means finding two items that are close in the instance-view embedding space but far apart in the category-view embedding space. This way, the recommended items are both diverse and of interest to the user. We therefore propose a simple multi-view metric model that uses two representations: one from the instance-view embedding space, and the other from the instance-category relational embedding space.

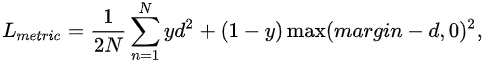

We used the contrastive loss to learn the metric matrix.

The learned embeddings in the metric space can be used for subsequent matching operations.
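As a rough illustration of learning a metric with a contrastive loss, the sketch below uses the classic margin-based form: positive pairs are penalized by their squared distance, and negative pairs are penalized only when they fall inside the margin. The linear metric matrix `M`, the Euclidean distance, and the margin value are assumptions; the article does not give the exact formulation, nor how the two representations are combined into the input vectors.

```python
import math


def metric_distance(M, x, y):
    """Euclidean distance between M @ x and M @ y."""
    diff = [a - b for a, b in zip(x, y)]
    projected = [sum(m * d for m, d in zip(row, diff)) for row in M]
    return math.sqrt(sum(p * p for p in projected))


def contrastive_loss(M, x, y, is_positive, margin=1.0):
    """Margin-based contrastive loss for one pair of item vectors."""
    d = metric_distance(M, x, y)
    if is_positive:
        # Positive pairs should be close in the metric space.
        return d ** 2
    # Negative pairs are only penalized when closer than the margin.
    return max(0.0, margin - d) ** 2


M = [[1.0, 0.0], [0.0, 1.0]]  # identity matrix, for illustration only
anchor = [0.0, 0.0]

clicked_pair = contrastive_loss(M, anchor, [0.3, 0.4], is_positive=True)
far_negative = contrastive_loss(M, anchor, [3.0, 4.0], is_positive=False)
near_negative = contrastive_loss(M, anchor, [0.3, 0.4], is_positive=False)
print(clicked_pair, far_negative, near_negative)
```

Once `M` is trained, candidate items can be retrieved by nearest-neighbor search over the projected vectors.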

We proposed M2GRL to build a web-scale recommender system. For information about the modeling details and experimental results, see the two links below.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
