
The Double 11 Global Shopping Festival has come and gone. This year's event involved hundreds of millions of buyers and sellers, and its success was only possible with strong support from the Alibaba Cloud Product Technical Team. Tablestore, a product of the Alibaba Cloud Storage Team, was part of that support.

Ten months ago, the sudden outbreak created an urgent demand for online office work, which posed significant challenges to the cost, maintenance, and stability of DingTalk's existing storage systems. After extensive research, DingTalk chose Tablestore from the Alibaba Cloud Storage Team as its unified storage platform. Through close cooperation between the DingTalk Team and the Tablestore Team, all of the message synchronization data and full message data of the DingTalk IM system and the multi-tenant IMPaaS system were migrated. During the 2020 Double 11 Shopping Festival, Tablestore fully supported the Instant Messaging (IM) services of Alibaba Group for the first time, ensuring smoother communication between hundreds of millions of buyers and sellers.

This article describes the IM storage architecture of DingTalk and the work Tablestore did to meet the migration requirements in terms of stability, functionality, and performance.

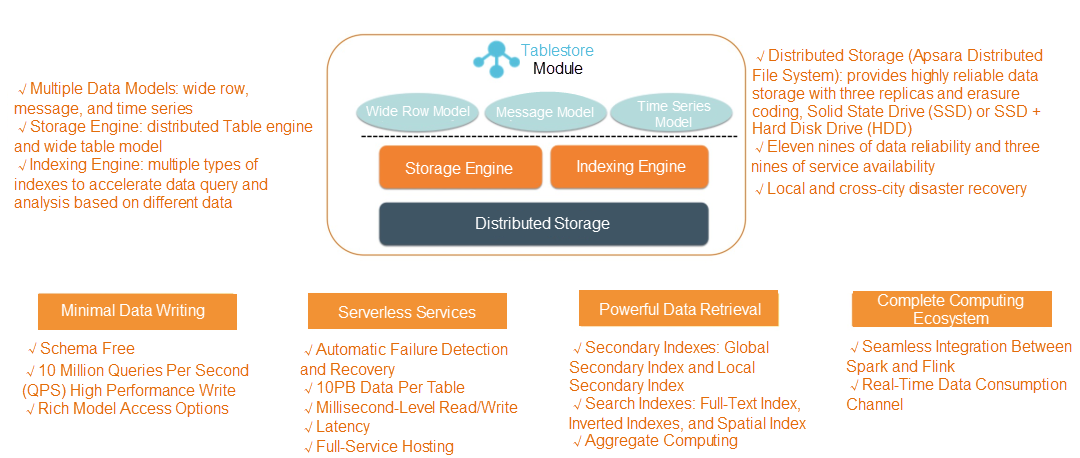

Alibaba Cloud Tablestore is an online data platform that can store, search, and analyze large amounts of structured data. It can scale up to PB-level storage for a single table and provide a service capacity of hundreds of millions of queries per second (QPS). Tablestore was developed by Alibaba Cloud Intelligence. It features strong performance, low costs, easy scalability, fully managed operation, high reliability, high availability, hierarchical storage of cold and hot data, and flexible computing and analysis. It has a long track record in production, especially in metadata, big data, monitoring, messaging, Internet of Things, tracing, and other types of applications. The following figure shows the product architecture of Tablestore.

The DingTalk message systems include the DingTalk IM system and the IMPaaS system. The DingTalk IM system carries the DingTalk IM services. As a multi-tenant IM platform, IMPaaS can be used by multiple teams within the Alibaba Group. It currently supports major IM services in Alibaba Group, such as chat on DingTalk and customer service chat on Taobao Mobile, Tmall, Qian Niu, Eleme, and AMAP. The two systems share the same architecture, so this article does not differentiate between them and refers to both as the IM system. The IM system is responsible for end-to-end communication: it receives, stores, and forwards individual and group messages, and it also supports session management, read notification, and status synchronization.

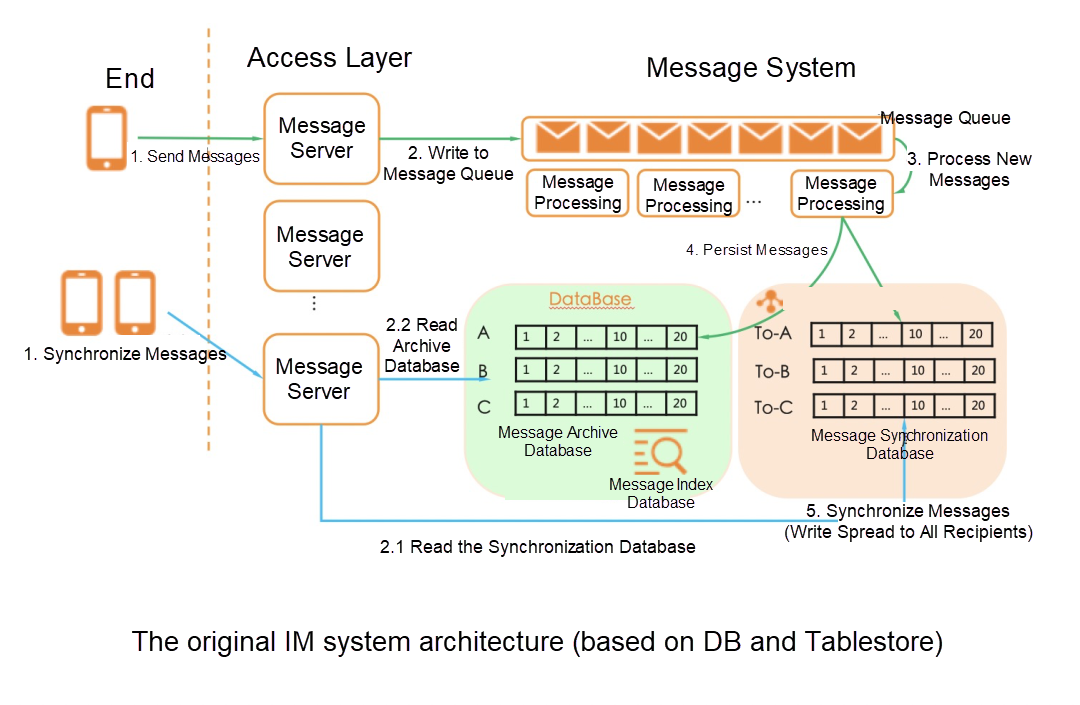

The IM system architecture involves two storage systems: DB (InnoDB, X-Engine) and Tablestore. The DB is used to store sessions and messages. Tablestore is used for the synchronization protocol, which is responsible for pushing message synchronization data. During the outbreak, the number of DingTalk messages increased rapidly. This made the DingTalk Team realize that using a traditional relational database engine to store streaming messages had great limitations in terms of write performance, scalability, and storage costs. A distributed NoSQL store with a log-structured merge (LSM) architecture is a better choice.

The preceding figure shows the original architecture of the IM system. In this system, a message is stored twice after it is sent:

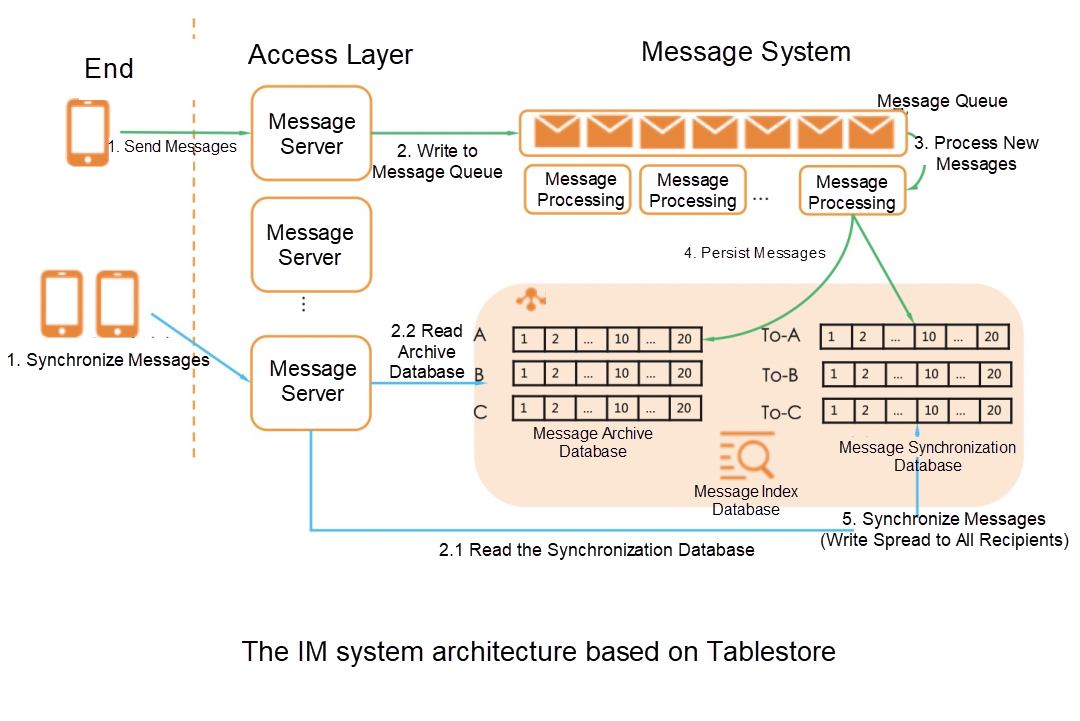

After the architecture upgrade, full message storage no longer depends on DB, and all data is written into Tablestore. The IM system storage now depends only on Tablestore, which brings the following benefits:

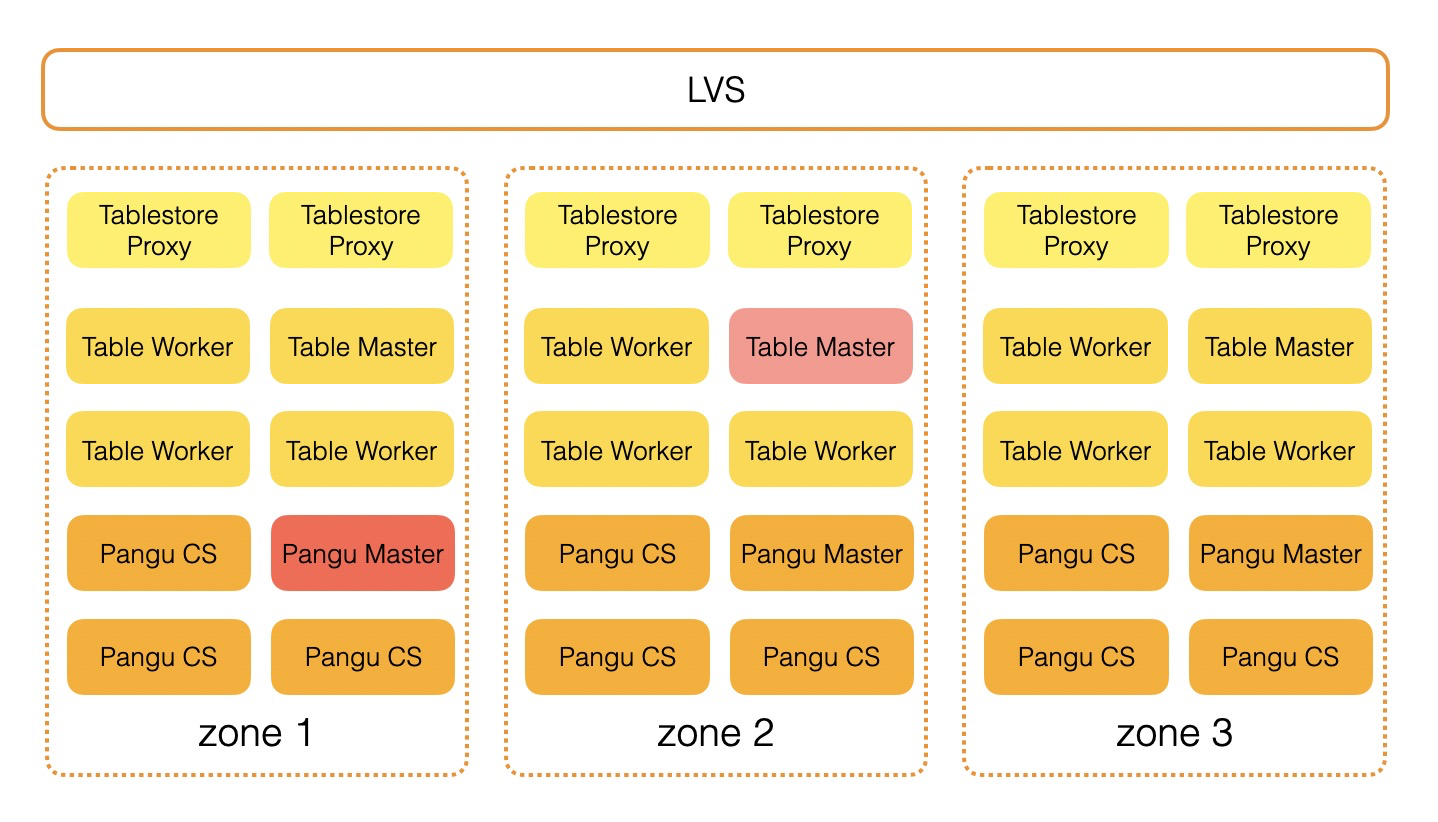

Stability is the foundation of everything and the biggest concern when a business selects a storage system. In a complex distributed system, every component may have problems, ranging from a broken optical cable in the data center to a bit flip in a network interface controller (NIC). Any overlooked problem may cause a catastrophic failure and trigger serious public opinion risks. The core of the stability effort is to fully consider these possibilities and provide as much fault tolerance as possible. As a basic product of Alibaba Cloud Intelligence, Tablestore is deployed in all service areas of Alibaba Cloud worldwide, which has proven its stability. While providing services for DingTalk, its stability has been further enhanced.

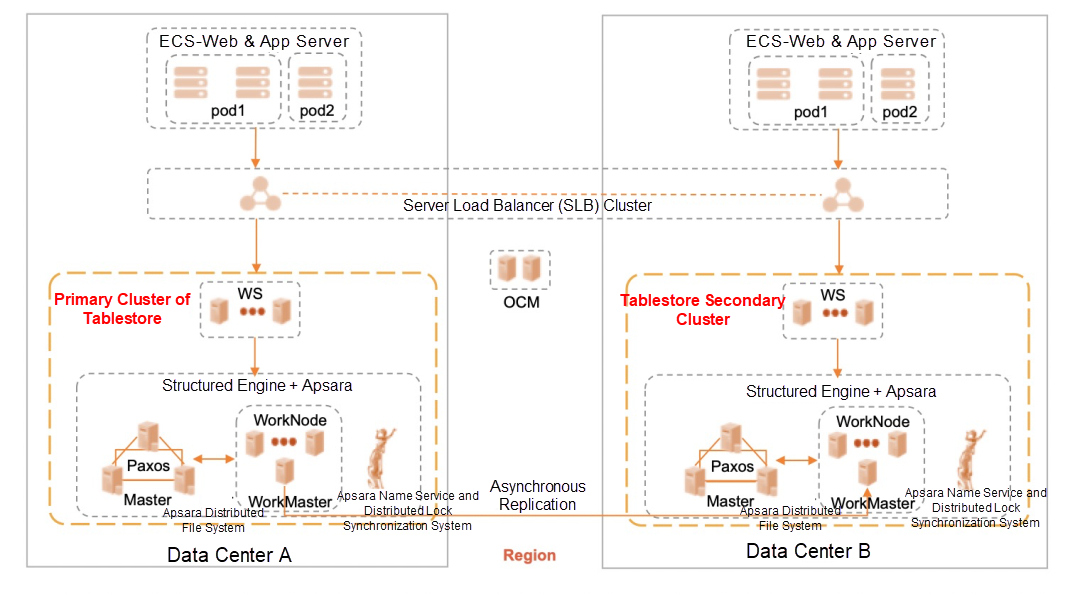

To avoid service unavailability caused by a fault in a single data center, Tablestore supports disaster recovery based on primary and secondary clusters. The primary and secondary clusters are two independent clusters, and data is replicated between them asynchronously in the background. Because replication is asynchronous, this disaster recovery method cannot ensure strong data consistency, and the secondary cluster lags the primary by several seconds. When the primary cluster fails in a single data center, all traffic is switched to the secondary cluster, which takes over to keep the service available.

Primary/secondary disaster recovery solves the problem of a single data center failure, but it has two drawbacks. Strong data consistency cannot be achieved when the primary cluster goes down unexpectedly, and the switchover process requires coordination with the business, which must tolerate data inconsistency for a time and patch data afterward. The three availability zone (3AZ) disaster recovery mode solves these problems.

The 3AZ disaster recovery deployment method is to deploy the system on three physical data centers. When writing data, the Apsara Distributed File System evenly distributes the three copies of the data across the three data centers. This can ensure that when the failure occurs in any data center, all of the data in the other two data centers must be available to prevent any data loss. Each time Tablestore returns a successful data write, three copies are written to disks in three data centers. Therefore, the 3AZ architecture ensures strong data consistency. When one of the data centers fails, Tablestore schedules the partitions to the other two data centers to continue to provide services. The 3AZ deployment mode has the following advantages over the primary/secondary clusters:
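The write rule described above (a write is acknowledged only after a copy is on disk in each of the three data centers) can be illustrated with a minimal sketch. The `Zone` and `ThreeZoneStore` classes are hypothetical and are not part of Tablestore or the Apsara Distributed File System; partition re-scheduling after a failure is not modeled.

```python
class Zone:
    """Hypothetical stand-in for one data center (availability zone)."""

    def __init__(self, name):
        self.name = name
        self.disk = {}
        self.alive = True

    def persist(self, key, value):
        if not self.alive:
            raise IOError(f"{self.name} is unavailable")
        self.disk[key] = value


class ThreeZoneStore:
    """Toy store that keeps one copy of every row in each of three zones."""

    def __init__(self, zones):
        assert len(zones) == 3
        self.zones = zones

    def write(self, key, value):
        # Success is returned only after all three zones have persisted
        # their copy, so an acknowledged write exists in every zone.
        for zone in self.zones:
            zone.persist(key, value)
        return True

    def read(self, key):
        # Any surviving zone can serve an acknowledged write.
        for zone in self.zones:
            if zone.alive and key in zone.disk:
                return zone.disk[key]
        raise KeyError(key)


zones = [Zone("az-a"), Zone("az-b"), Zone("az-c")]
store = ThreeZoneStore(zones)
store.write("msg-1", "hello")
zones[0].alive = False          # simulate the loss of one data center
value_after_failure = store.read("msg-1")
```

Because every acknowledged write already exists in all three zones, losing any single zone leaves two complete copies, which is why no data patching is needed after a failure.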

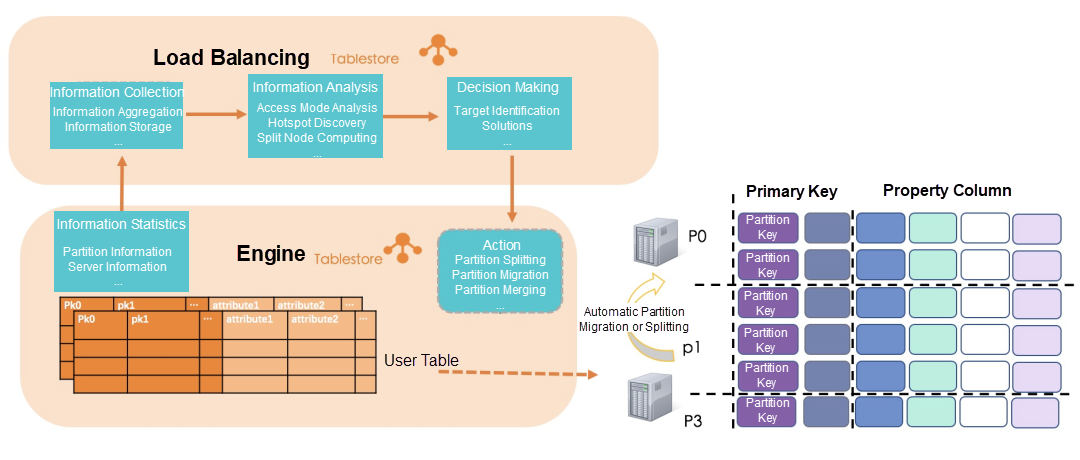

In Tablestore, table storage is logically divided into partitions for high scalability. A table is split into range partitions based on the partition key range, and these partitions are scheduled to different machines to provide services. To achieve optimal throughput and performance across the entire system, the load of each machine needs to be balanced. In other words, both the access volume and the data volume distributed on each machine need to be balanced.
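As a rough illustration of range partitioning, the sketch below routes a partition key to the machine that owns its key range. The class name, split points, and machine names are all made up for the example and do not reflect Tablestore internals.

```python
import bisect


class RangePartitionedTable:
    """Toy range-partitioned table: each partition owns a contiguous key range."""

    def __init__(self, split_points, machines):
        # N sorted split points produce N + 1 partitions:
        # (-inf, s0), [s0, s1), ..., [sN-1, +inf).
        if list(split_points) != sorted(split_points):
            raise ValueError("split points must be sorted")
        if len(machines) != len(split_points) + 1:
            raise ValueError("need exactly one machine per partition")
        self.split_points = list(split_points)
        self.machines = list(machines)

    def partition_index(self, partition_key):
        # bisect_right counts the split points <= key, which is the
        # index of the range that contains the key.
        return bisect.bisect_right(self.split_points, partition_key)

    def route(self, partition_key):
        return self.machines[self.partition_index(partition_key)]


table = RangePartitionedTable(
    split_points=["g", "n", "t"],
    machines=["machine-0", "machine-1", "machine-2", "machine-3"],
)
owner = table.route("mike")  # "mike" falls in ["g", "n")
```

With this layout, keys that sort close together land on the same partition, which is what makes range scans cheap and also what makes skewed keys turn into hotspots.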

During system operation, design or service issues often lead to unbalanced data access and distribution, such as data skew and local hotspot partitioning. There are two big problems to be solved:

Automation is emphasized here because Tablestore, as a multi-tenant system, runs a large number of instances and tables. Relying on people to report and handle these problems would be costly and impractical at that scale. To solve this problem, Tablestore has designed its own load balancing system. There are three main points:

On this basis, the load balancing system has also developed dozens of strategies to solve various types of hotspot issues.

This load balancing system can automatically identify and process 99% of the hotspot issues that occur in clusters. It can locate problems and resolve them automatically within minutes. The system is currently running in all domains.

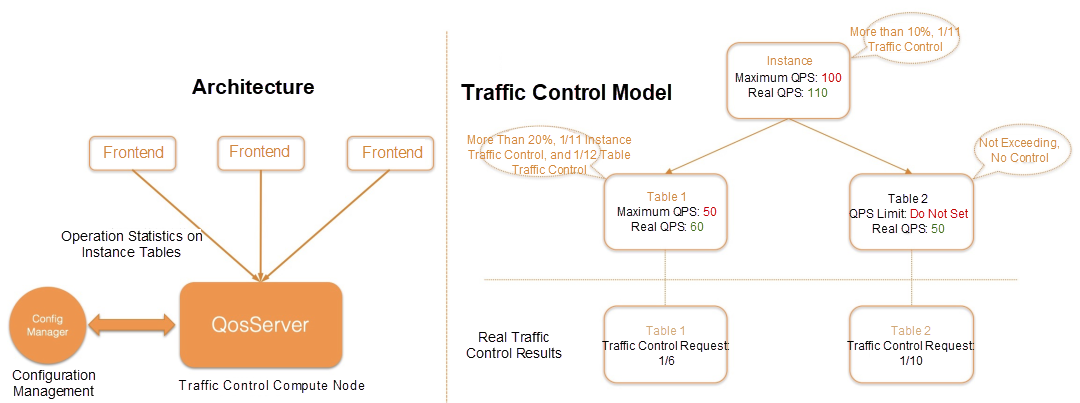

Tablestore provides a complete set of traffic control systems, which are listed below:

1. Instance-Level and Table-Level Global Traffic Control: The purpose is to handle traffic that exceeds expectations and to prevent the cluster traffic from exceeding its limit. As shown in the following figure, the traffic control architecture is on the left, and the traffic control model is on the right, where access traffic can be controlled at the instance level and the table level. If the instance-level traffic exceeds the upper limit, all of the requests of the instance are affected. You can also set the maximum traffic at the table level so that a single table exceeding its limit does not affect other tables. Based on this system, Tablestore can precisely control the traffic of specific instances, tables, and operations, provide multi-dimensional control measures, and guarantee the Service Level Agreement (SLA) of services. During the early stages of the pandemic, the cluster traffic of DingTalk exceeded the upper limit many times, and global traffic control solved this problem.

2. Active Partition Traffic Control: Tablestore partitions data by range. When business data is skewed, read and write hotspots often occur on individual partitions. Active partition traffic control mainly solves the single-partition hotspot problem and prevents a hot partition from affecting other partitions. Access information about each partition is collected during request processing, including but not limited to access traffic, the number of rows, and the number of cells.

The traffic control system implements a multi-level defense and guarantees service stability.
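The two-level idea (a per-table limit nested inside a per-instance limit) can be sketched with token buckets. This is only an illustration under assumptions: the source does not say which algorithm Tablestore uses, and `TokenBucket`, `TrafficController`, and all rates below are hypothetical. Time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Simple token bucket; `now` is supplied by the caller, in seconds."""

    def __init__(self, rate, capacity, now=0.0):
        self.rate = rate            # tokens added per second
        self.capacity = capacity    # maximum burst size
        self.tokens = capacity
        self.last = now

    def available(self, now):
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        return self.tokens

    def take(self, cost):
        self.tokens -= cost


class TrafficController:
    """Admits a request only if both the table and the instance have budget."""

    def __init__(self, instance_bucket, table_buckets):
        self.instance_bucket = instance_bucket
        self.table_buckets = table_buckets

    def allow(self, table, cost, now):
        table_bucket = self.table_buckets[table]
        # Check both levels before consuming, so a rejected request
        # does not use up tokens at either level.
        if table_bucket.available(now) < cost:
            return False
        if self.instance_bucket.available(now) < cost:
            return False
        table_bucket.take(cost)
        self.instance_bucket.take(cost)
        return True


controller = TrafficController(
    instance_bucket=TokenBucket(rate=10, capacity=10),
    table_buckets={
        "hot": TokenBucket(rate=4, capacity=4),
        "cold": TokenBucket(rate=4, capacity=4),
    },
)
hot_results = [controller.allow("hot", 1, now=0.0) for _ in range(5)]
cold_result = controller.allow("cold", 1, now=0.0)
```

In this sketch, the hot table is throttled once its own budget is spent while the cold table keeps being served, and exhausting the instance budget rejects requests to every table of that instance.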

Tablestore is a typical architecture that separates storage and computing resources. Tablestore has many dependent components and complex request links. Therefore, performance optimization cannot be limited to a specific module. It is necessary to have a global perspective to optimize the components on the request links to achieve better full-link performance.

In the DingTalk scenarios, after a series of optimizations, under the same SLA and hardware resources, the read/write throughput improved by more than three times, and the read/write latency was reduced by 85%. The improved read/write performance also saves machine resources and reduces business costs. The following is a brief introduction to the main performance work.

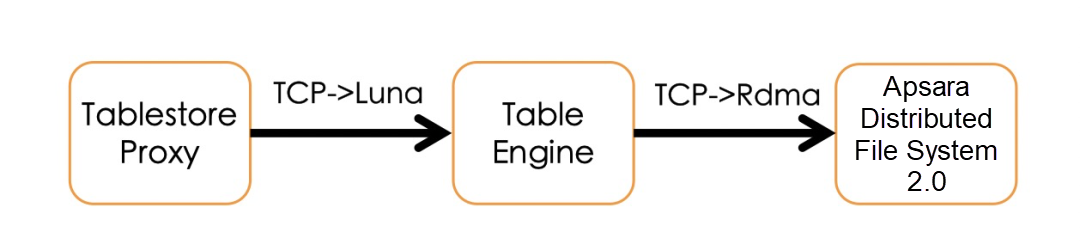

On the core datalinks of Tablestore, there are three main system components:

In the request links, the first-hop network (Tablestore proxy to Table engine) and the second-hop network (Table engine to Apsara Distributed File System) were both based on the kernel TCP network framework, so data was copied multiple times and consumed system CPU on each copy. To optimize the storage network, Luna, a new-generation user-space Remote Procedure Call (RPC) framework, is used for data transmission on the first hop, which reduces the number of kernel data copies and the system CPU consumption. On the second hop, where data is persisted, the Remote Direct Memory Access (RDMA) network library provided by the Apsara Distributed File System is used to further reduce data transmission latency.

As the storage base, the Apsara Distributed File System 2.0 was designed and engineered for next-generation networks, storage software, and hardware, so it can take full advantage of advances in software and hardware technology. It achieves great performance improvements on the data storage links, with read/write latency reaching the 100-microsecond level. Building on this optimized storage base, the Table engine has upgraded its data storage path and connected its I/O path to the Apsara Distributed File System 2.0.

The table model of Tablestore supports a wide range of data storage semantics:

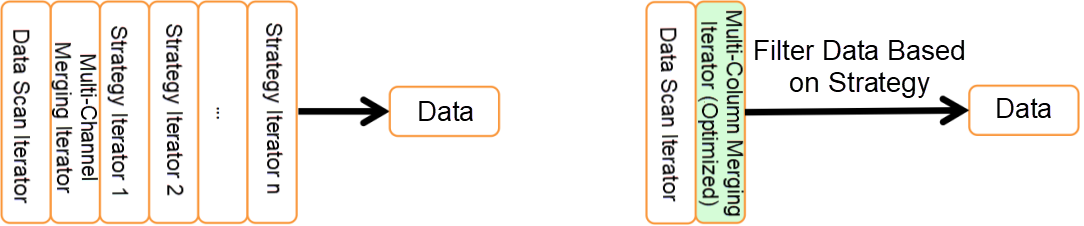

To allow fast iteration while implementing each strategy, the iterator system was built in the form of a volcano model (as shown in the left half of the figure). One problem with this method is that the iterator nesting gets deeper as the strategies get more complex. Even if a strategy is not used, its iterator is still added to the chain. The dynamic polymorphism in this process makes function calls expensive. From an R&D perspective, it is also difficult to form a unified view of the read path, which hinders follow-up optimization.

To optimize data iteration performance, the context information required by each strategy is assembled into a strategy class, and the strategy-related iterators are flattened from a global perspective. This reduces the performance loss caused by nested iterator levels on the read path. In multi-way merging, the multi-column primary key jump capability provided by column storage is used to reduce the comparison costs of multi-column merging and sorting, which improves data reading performance.
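The difference between the nested (volcano) form and the flattened form can be sketched as follows. The two strategies used here, skipping deleted rows and filtering by a minimum timestamp, are hypothetical examples chosen for the illustration; they are not taken from the Tablestore read path, and the class and function names are invented.

```python
from dataclasses import dataclass
from typing import Optional


class ScanIterator:
    """Leaf of the volcano chain: yields raw rows."""

    def __init__(self, rows):
        self.rows = rows

    def __iter__(self):
        return iter(self.rows)


class FilterIterator:
    """One volcano layer per strategy; each row passes through every layer."""

    def __init__(self, child, predicate):
        self.child = child
        self.predicate = predicate

    def __iter__(self):
        for row in self.child:
            if self.predicate(row):
                yield row


@dataclass
class ReadStrategy:
    """Context of all strategies gathered in one place (hypothetical fields)."""
    skip_deleted: bool = False
    min_timestamp: Optional[int] = None


def flattened_scan(rows, strategy):
    # A single loop applies every enabled strategy; disabled strategies
    # cost one cheap check instead of an extra iterator layer.
    for row in rows:
        if strategy.skip_deleted and row["deleted"]:
            continue
        if strategy.min_timestamp is not None and row["ts"] < strategy.min_timestamp:
            continue
        yield row


rows = [
    {"key": "a", "ts": 5, "deleted": False},
    {"key": "b", "ts": 1, "deleted": False},
    {"key": "c", "ts": 9, "deleted": True},
]
volcano = FilterIterator(
    FilterIterator(ScanIterator(rows), lambda r: not r["deleted"]),
    lambda r: r["ts"] >= 3,
)
volcano_keys = [r["key"] for r in volcano]
flat_keys = [r["key"] for r in flattened_scan(rows, ReadStrategy(True, 3))]
```

Both forms return the same rows; the flattened version simply replaces a chain of per-row virtual calls with one loop that consults a shared strategy object.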

In addition to storage format improvement, one of the major problems in memory processing is the performance loss caused by lock competition. Locks are ubiquitous in all modules of the system. Apart from explicit locks for synchronization or concurrent critical sections, there are also optimistic lock performance problems for atomic operations that are easily ignored, such as the global metric counters and the Compare and Swap (CAS) instruction conflicts. The memory allocation of small objects may also cause a serious lock conflict in the underlying memory allocator. The lock structure has been optimized in the following aspects:

As a NoSQL product developed by Alibaba, Tablestore gives full play to its advantages in the cooperation with DingTalk and develops multiple new features in combination with DingTalk services, further reducing CPU consumption, optimizing performance, and upgrading services without affecting the business.

The DingTalk synchronization protocol uses the primary key auto-increment function of Tablestore. This function returns an auto-increment ID after a user successfully writes a row of data. A brief introduction of how DingTalk uses the auto-increment ID to realize synchronous message push is listed below:

One special scenario is when the user has only one unpushed message. If the returned message ID is guaranteed not only to increase but to increase by exactly one on each write, the application server can detect this case without a read. After the application server writes a message, if the returned ID is exactly one greater than the ID pushed last time, the new message is the only unpushed one, so the server does not need to query Tablestore and can push the message to the user directly.
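The shortcut described above can be sketched as follows. `InMemoryTable` is a hypothetical stand-in for a table whose auto-increment column grows by exactly one per write for each user, and `PushServer` is an invented application server; neither reflects the real Tablestore SDK or DingTalk code.

```python
class InMemoryTable:
    """Toy table: per-user message IDs increase by exactly 1 on each write."""

    def __init__(self):
        self.rows = {}   # user_id -> list of (msg_id, body)
        self.reads = 0   # number of range reads served

    def put(self, user_id, body):
        rows = self.rows.setdefault(user_id, [])
        msg_id = len(rows) + 1
        rows.append((msg_id, body))
        return msg_id

    def range_read(self, user_id, after_id):
        self.reads += 1
        return [r for r in self.rows.get(user_id, []) if r[0] > after_id]


class PushServer:
    def __init__(self, table):
        self.table = table
        self.last_pushed = {}   # user_id -> last pushed msg_id
        self.delivered = {}     # user_id -> list of (msg_id, body)

    def on_message(self, user_id, body, online=True):
        new_id = self.table.put(user_id, body)
        if not online:
            return  # the message stays unpushed until the user is back
        last = self.last_pushed.get(user_id, 0)
        if new_id == last + 1:
            # Only one unpushed message exists: push it without reading.
            batch = [(new_id, body)]
        else:
            # There is a gap: fetch every unpushed message from the table.
            batch = self.table.range_read(user_id, last)
        self.delivered.setdefault(user_id, []).extend(batch)
        self.last_pushed[user_id] = new_id


table = InMemoryTable()
server = PushServer(table)
server.on_message("u1", "hi")                       # fast path, no read
reads_after_fast_path = table.reads
server.on_message("u1", "missed", online=False)     # stored but not pushed
server.on_message("u1", "back")                     # gap detected, one read
```

The fast path is only safe because the ID step is exactly one; with IDs that merely increase, a gap could not be distinguished from a normal write.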

With the introduction of the "primary key auto-increment +1 function," the number of application-side reads has been reduced by more than 40%, and the CPU consumption of both the Tablestore servers and the DingTalk business servers has been reduced. Therefore, the business costs for DingTalk have also been reduced.

Prior to this, Tablestore already had the asynchronous global secondary index (GSI) feature. However, in the DingTalk scenarios, most queries are based on the data of a single user, so indexes only need to take effect within the data of that user. To address this, we developed a strongly consistent local secondary index (LSI) feature that can also build indexes dynamically on existing data.

In the scenario below, users can directly find all of the messages that mention them.

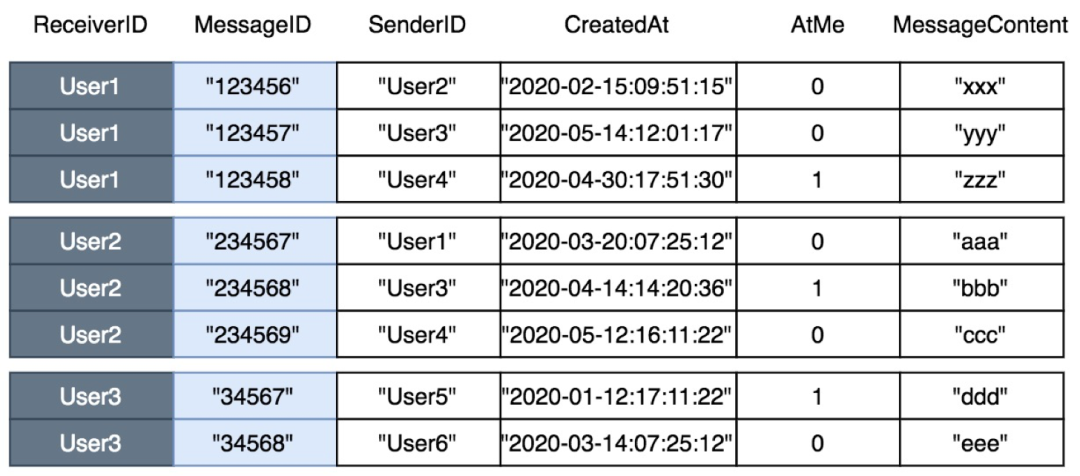

For the business side, a user message table stores all of the messages of the user. In this table, an LSI table of the latest messages that mention the user is created. Then, the user can scan the LSI table to obtain all the messages that mention the user. The two table structures are shown in the following figures:

The Main Message Table

The LSI Table
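The relationship between a main table and a local index can be sketched in memory. This is an assumption-laden illustration: the column names (`user_id`, `msg_id`, `mentions_user`) and the `UserMessageTable` class are hypothetical and do not reproduce the actual table structures. The sketch also assumes each `(user_id, msg_id)` pair is written only once.

```python
import bisect


class UserMessageTable:
    """Toy main table plus a local index of messages that mention the user."""

    def __init__(self):
        self.main = {}    # (user_id, msg_id) -> row
        self.index = []   # sorted (user_id, msg_id) pairs with a mention

    def put(self, user_id, msg_id, body, mentions_user):
        # The index entry is written in the same call as the main row, so
        # a successful write is immediately visible through the index.
        self.main[(user_id, msg_id)] = {"body": body, "mentions_user": mentions_user}
        if mentions_user:
            bisect.insort(self.index, (user_id, msg_id))

    def mentions(self, user_id):
        # The index shares the user_id prefix with the main table, so one
        # contiguous range scan returns all of a user's mentions in order.
        lo = bisect.bisect_left(self.index, (user_id, float("-inf")))
        hi = bisect.bisect_right(self.index, (user_id, float("inf")))
        return [self.main[key]["body"] for key in self.index[lo:hi]]


messages = UserMessageTable()
messages.put("u1", 1, "hello", mentions_user=False)
messages.put("u1", 2, "@u1 ping", mentions_user=True)
messages.put("u2", 1, "@u2 hey", mentions_user=True)
messages.put("u1", 3, "@u1 again", mentions_user=True)
u1_mentions = messages.mentions("u1")
```

Because the index is scoped to one user's data, the lookup never touches other users' rows, which matches the per-user query pattern described for DingTalk.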

To build indexes for incremental data, we examined each module on the full link and optimized performance aggressively. It takes about 10 μs to create one index row:

Building indexes on existing data is a challenge across the industry because an LSI requires consistency: once a write succeeds, the corresponding index entry must be readable from the index table. At the same time, online writes cannot be stopped while the index on existing data is being built, so the impact on online writing must be minimized.

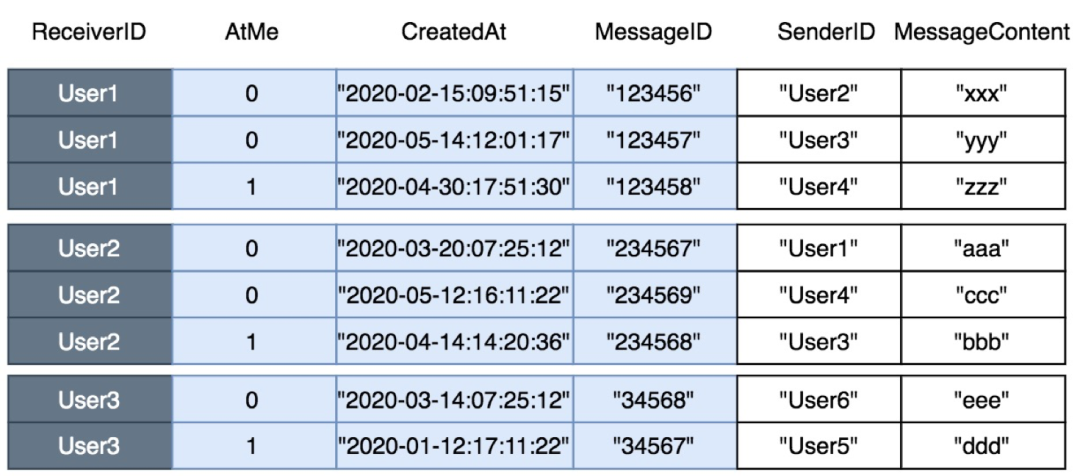

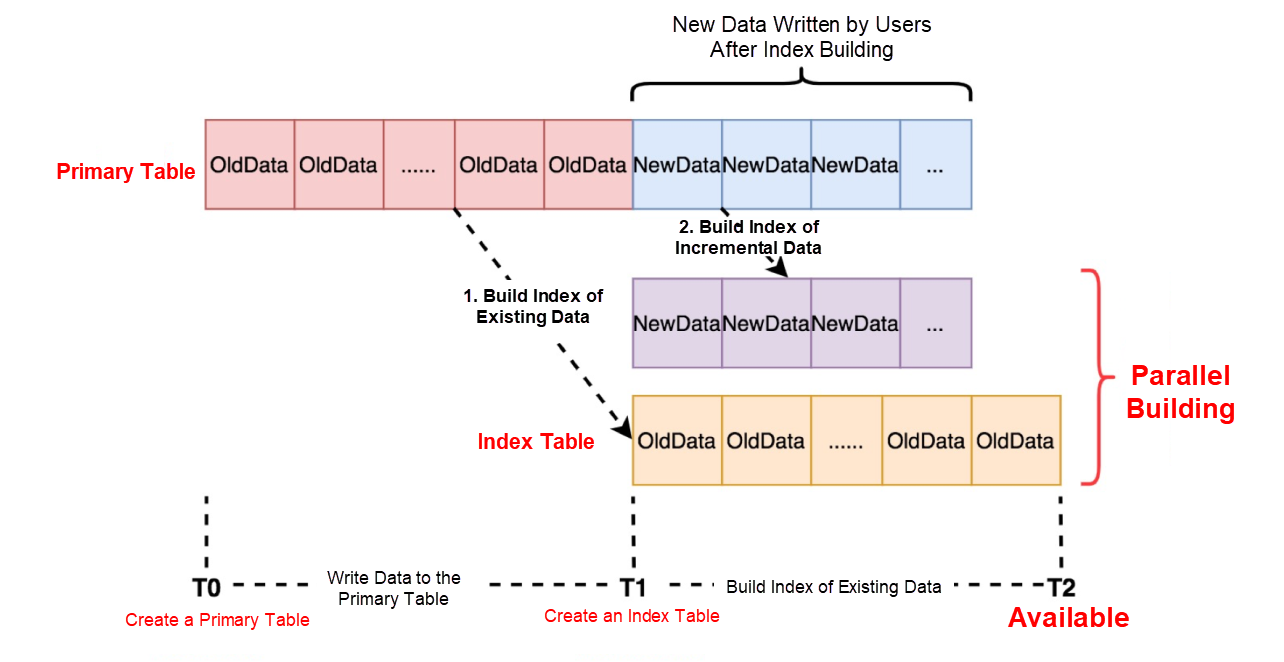

As shown in the following figure, traditional databases do not solve this problem properly. When switching from indexing existing data to indexing incremental data, tables need to be locked, which blocks user writes and suspends the service. DynamoDB does not support building LSIs on a table that already exists, including on its existing data.

The Method for Building an Index of Incremental Data in Traditional Databases

The Method for Building an Index of Existing Data in Tablestore

Tablestore uses a feature from its engine to skillfully solve this problem. It builds indexes for existing data and incremental data, and it can build the LSIs on the existing data without stopping the online writing. In addition, after the existing data indexes are built, the entire indexing process ends without affecting the business layer.

The process of digital transformation is not "changing the engine while driving the aircraft," but "changing the engine when the aircraft is accelerating." During rapid business development, ways to ensure the smooth and efficient upgrading of the storage system and the whole business system will be a problem that every Chief Information Officer (CIO) needs to solve.
