By Zongzhi Chen

In database scenarios, concurrent data insertion or import is a common occurrence. In these scenarios, the auto-increment ID is not specified and is generated automatically by the database during the insertion process. This type of scenario is commonly referred to as the AUTO_INC data insertion scenario.

Typical business scenarios that fall into this category include the simultaneous login and registration of multiple users during a game launch or the backend order pushing to vendors in e-commerce activities.

This article focuses on how PolarDB optimizes performance in concurrent INSERT scenarios like these.

In concurrent insertion scenarios, the auto-increment ID follows an incremental pattern. Therefore, one might assume that the data would be inserted into the rightmost page of the Btree.

However, this is not always the case.

In this scenario, the continuity of the inserted data is not guaranteed. As a result, the value of the data to be inserted may be smaller than the minimum value of the rightmost page, causing it to be inserted into the second page on the right. Consequently, the data may be inserted into multiple rightmost pages.

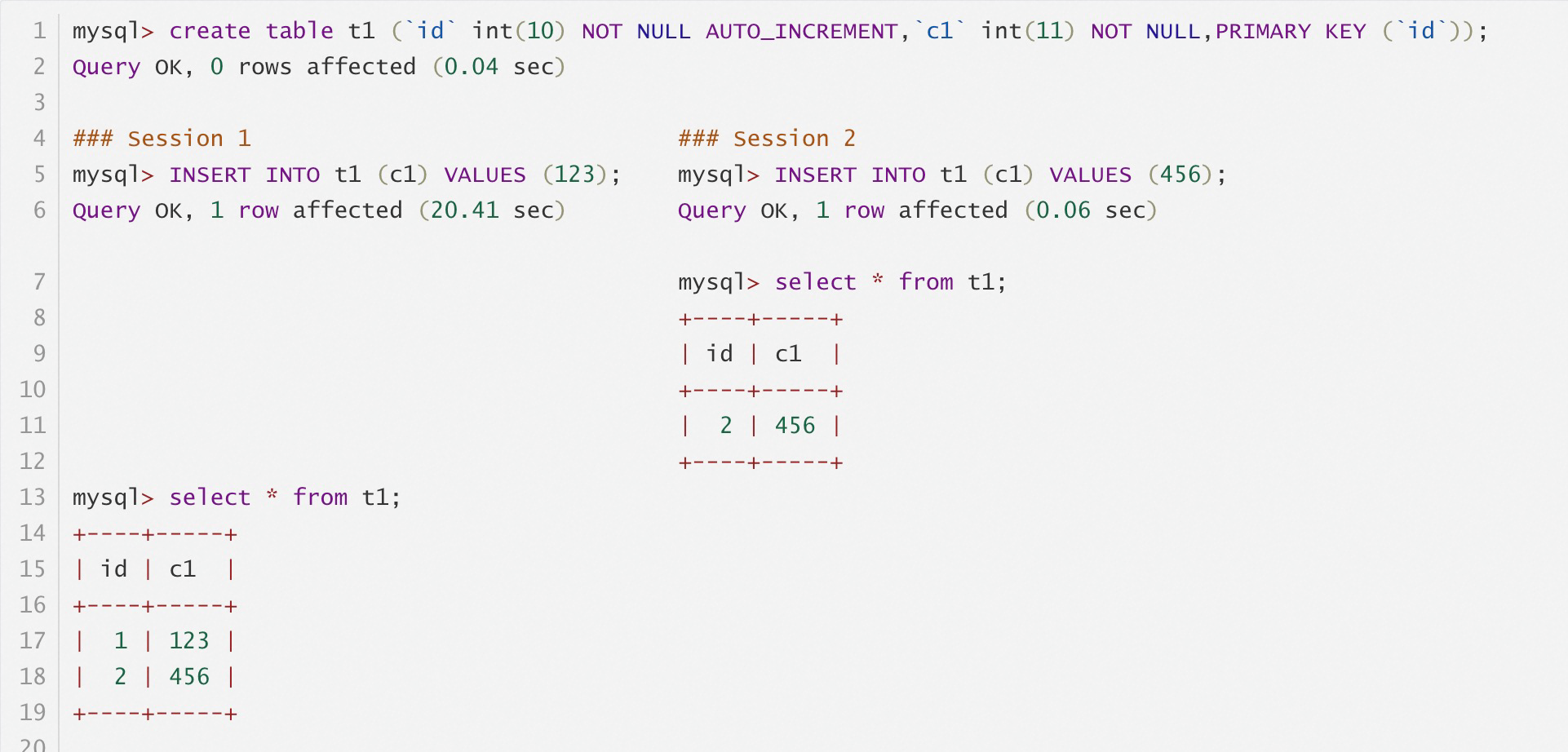

The reason for this behavior is that the code, from the thread obtaining the AUTO_INC value to the actual data insertion, is not locked. As a result, the thread that first obtains the AUTO_INC value may be dispatched later, causing the thread that obtains the AUTO_INC value later to insert their record first.

Therefore, when inserting records concurrently, it is possible for rows with values smaller than the current AUTO_INC value to be inserted later.

To optimize performance, PolarDB allows records to be inserted into multiple Level 0 pages simultaneously. This provides room for performance improvements, as opposed to always inserting records into the rightmost page like a queue, which offers no optimization opportunities.

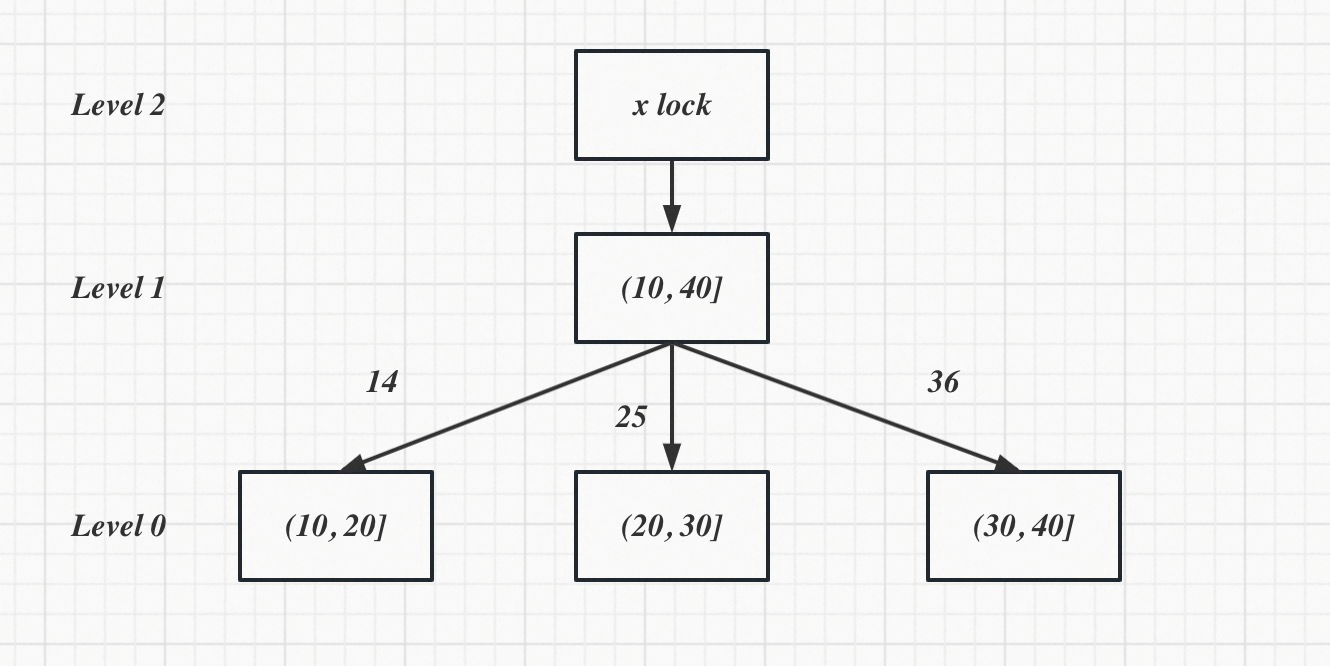

In the given scenario, as shown in the figure, three threads are concurrently performing optimistic insertions with values 14, 25, and 36. Each of these threads holds an X lock on their respective page, enabling concurrent insertions into three pages.

If only the rightmost page is inserted when thread = 1, the performance would undoubtedly be inferior to concurrent insertions into three pages. Theoretically, allowing more leaf pages to be inserted simultaneously leads to higher concurrency and better performance.

To further optimize performance in concurrent INSERT scenarios, the implementation performs Structure Modification Operations (SMO) as early as possible. This involves splitting the rightmost page early on, creating more unfilled pages available for simultaneous insertions.

Therefore, the next steps in our optimization process is to perform SMO as early as possible.

What then is the actual scenario in InnoDB for concurrent INSERT operations?

During our testing, we discovered that due to scheduling issues, it is possible for approximately 3 to 4 pages to be inserted simultaneously in the AUTO_INC scenario, as shown in the previous figure.

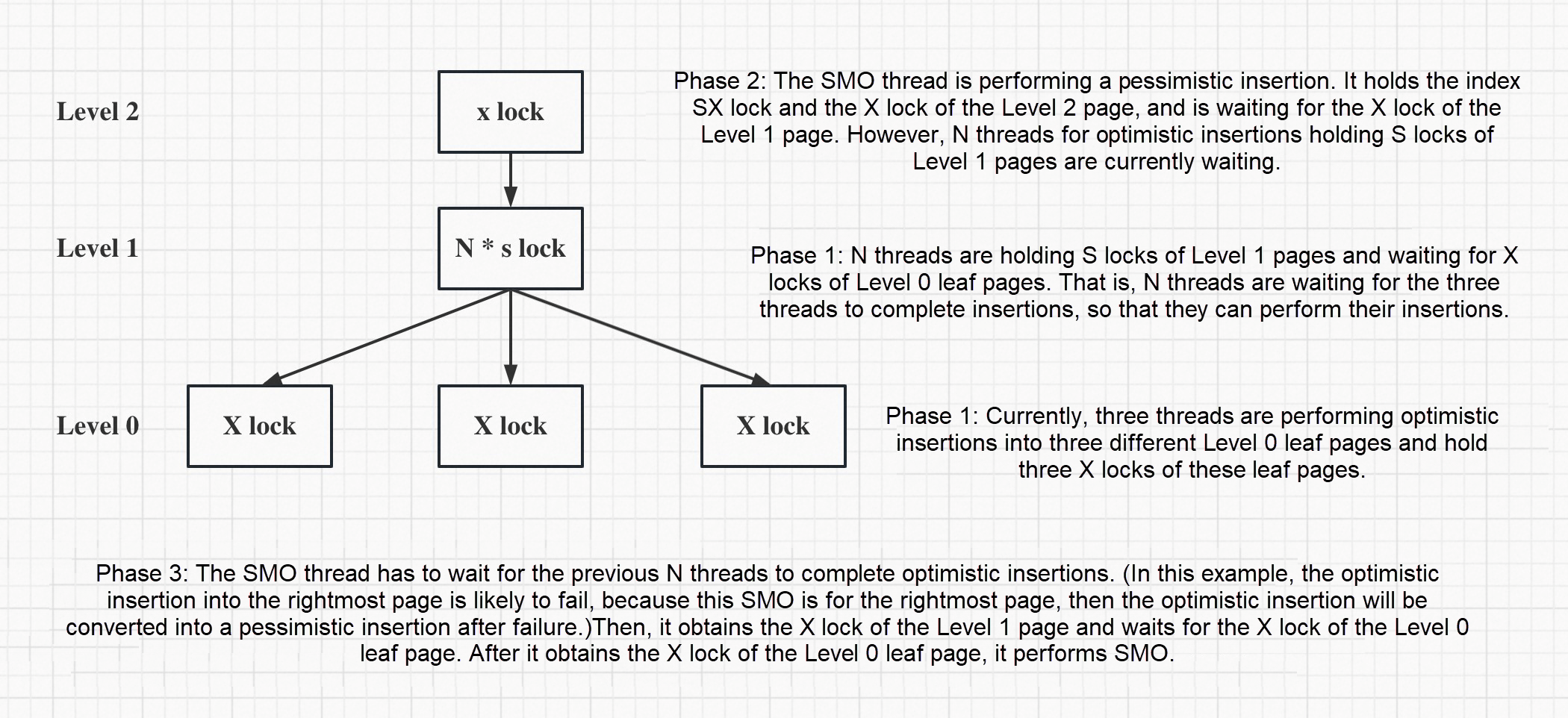

It is evident that the SMO thread needs to wait for the N threads to finish their optimistic insertions before it can carry out the SMO operation. The greater the concurrency and the more threads engaged in optimistic insertions, the longer the SMO thread waits, making early execution of SMO difficult and preventing performance enhancement.

Why then does performance improve after limiting Innodb_thread_concurrency?

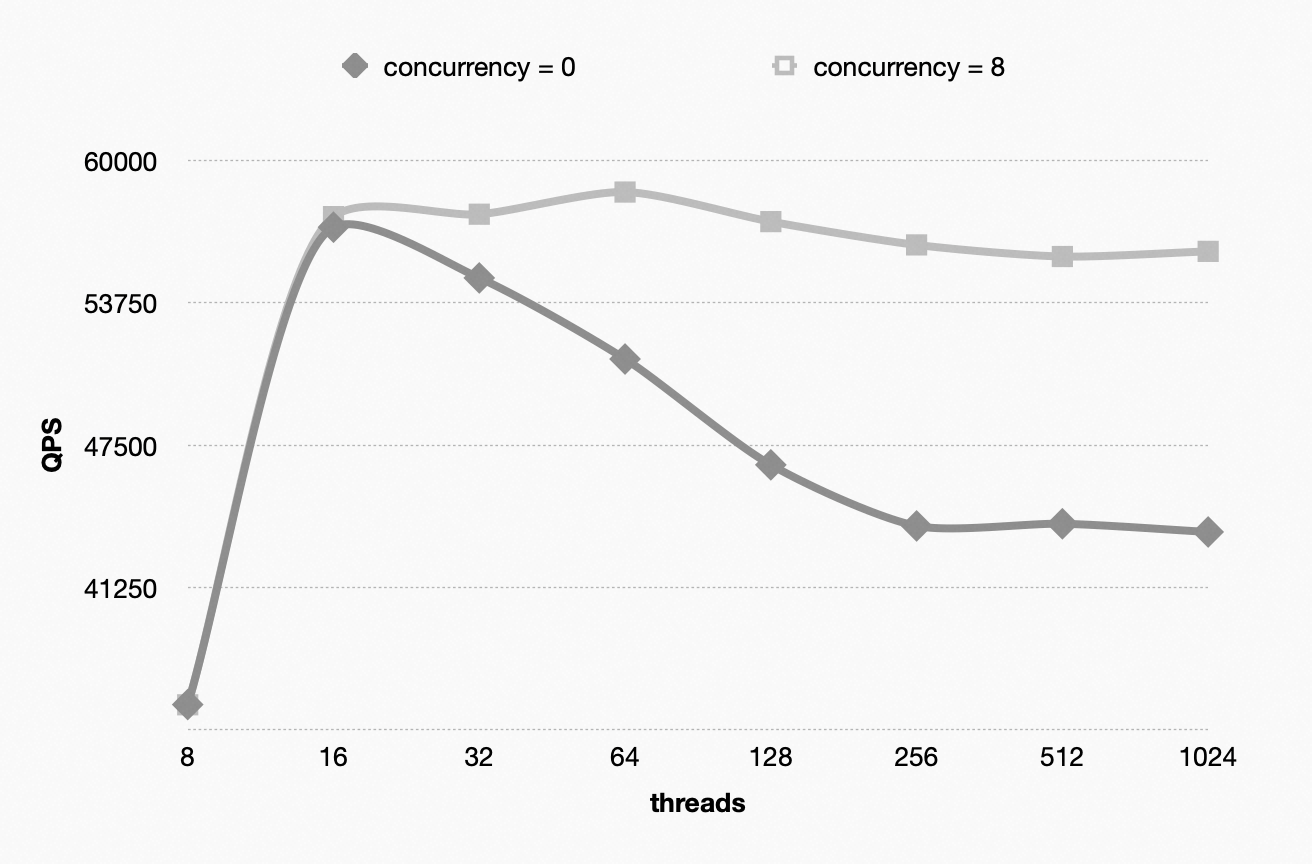

As analyzed above, in the AUTO_INC scenario, there are only a few pages (about three to four) that allow concurrent insertions. An excess of threads will cause the SMO thread to be held up, waiting for these optimistic insertions to be attempted and completed. The more threads there are, the longer the wait. Ideally, if there are no threads currently waiting to perform optimistic insertions into the rightmost page, the SMO thread does not need to wait, thus fulfilling the goal of early SMO execution. Limiting Innodb_thread_concurrency effectively restricts the number of threads performing optimistic insertions, thereby achieving better performance.

In practice, setting Innodb_thread_concurrency to 8 nearly attains the best performance.

How does Blink-tree improve this situation?

When analyzing the issues in the existing InnoDB Btree versions, we proposed a solution to reduce the number of concurrent threads by setting Innodb_thread_concurrency = 8 to ensure high insertion performance. However, setting Innodb_thread_concurrency = 8 is relatively low, and in practical usage, there are also query operations, making such settings rare. So, how does Blink-tree achieve high-performance AUTO_INC insertion while allowing multi-threaded concurrency?

Therefore, we implement priority scheduling for locks in Blink-tree to perform SMO as early as possible.

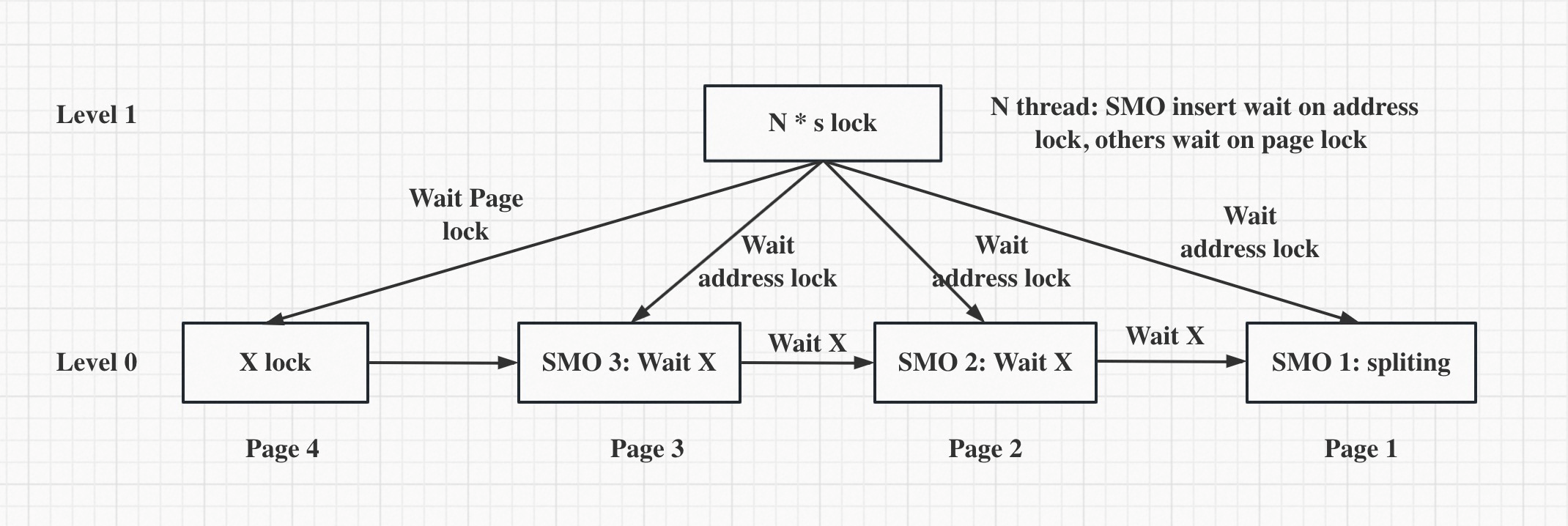

In the previous Phase 3, the SMO thread competes with threads for optimistic insertions for execution priority, resulting in low efficiency of the SMO threads. With priority scheduling for locks, SMO threads are given the highest priority. SMO threads waiting for page X locks are awakened first, followed by threads for optimistic insertions waiting for address locks. This ensures that SMO is performed as early as possible.

The specific implementation is shown in the following figure:

Blink-tree employs lock coupling for adding locks, and even in scenarios of pessimistic insertion, Level 1 pages are held with S locks.

It can be seen that Blink-tree performs SMO as early as possible by adding concurrent SMO threads while introducing lock priority scheduling. In this way, Blink-tree achieves higher performance than InnoDB Btree.

In fact, there is another difference between Blink-tree and InnoDB Btree implementations. In Blink-tree, the threads waiting for the address lock still perform optimistic insertions when awakened. In contrast, in the InnoDB Btree, threads perform pessimistic insertions when awakened after waiting for the page lock. Pessimistic locking involves a wider range and entails greater overhead, leading to further performance degradation.

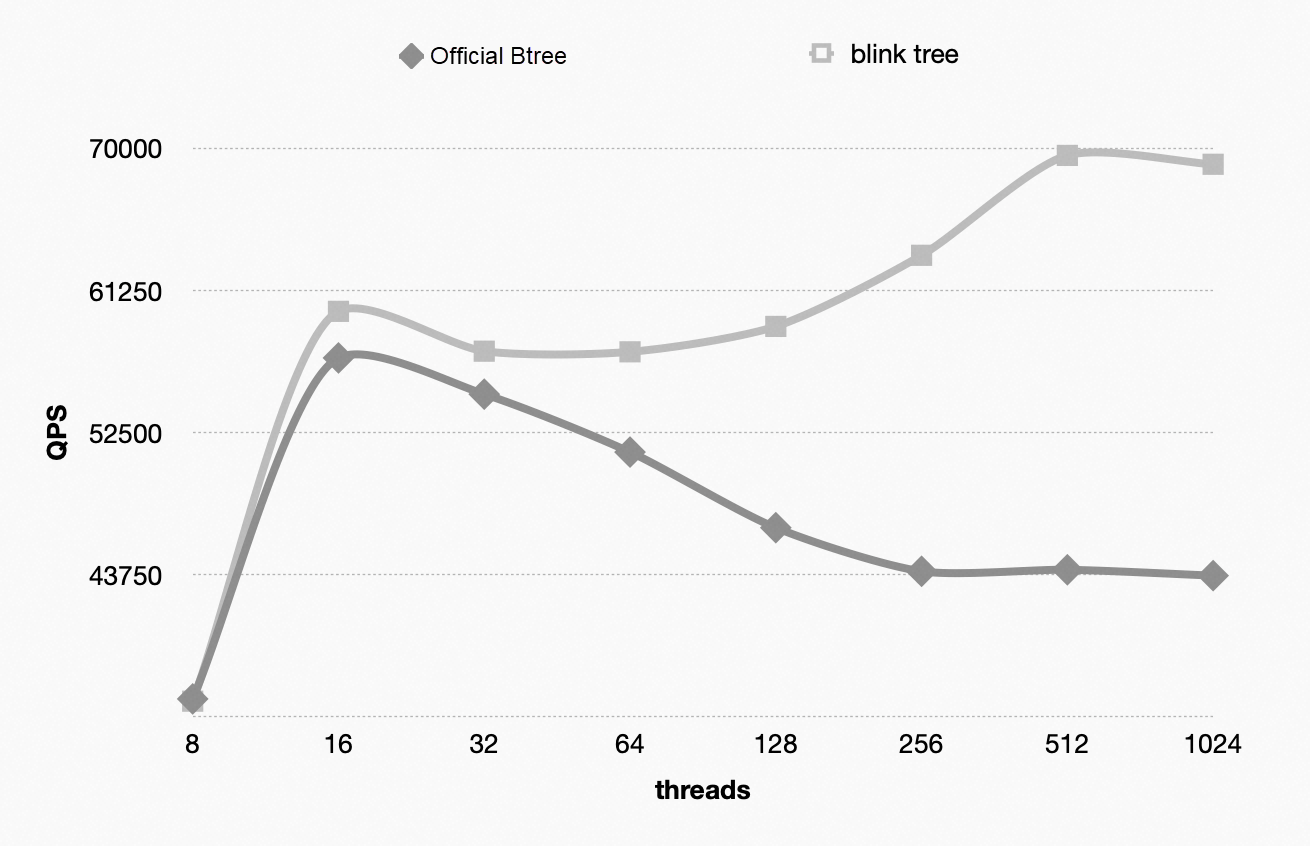

The specific data from the tests is as follows:

In the Blink-tree scenario, it is evident that the performance in the concurrent AUTO_INC scenario is twice as high as the official Btree version. Additionally, Blink-tree outperforms enabling Innodb_thread_concurrency by approximately 13%.

Addressing Dirty Page Flushing Constraints in PolarDB's Physical Replication

About Database Kernel: Why Do We Need to Evolve to PolarDB for MySQL 8.0.2?

ApsaraDB - February 10, 2026

ApsaraDB - September 19, 2022

ApsaraDB - October 16, 2024

ApsaraDB - January 8, 2025

ApsaraDB - June 4, 2024

ApsaraDB - November 7, 2024

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB