By Liu Xing, nicknamed Fanti, who is a senior technical expert at Alibaba.

Developed in-house at Alibaba, DataWorks is used every day by tens of thousands of data and algorithm development engineers to build and administer 99% of Alibaba Group's data-driven and data-focused business operations.

Initially released in 2010, DataWorks has undergone many technological changes and architecture upgrades on the way to its current version, and it has accumulated a great deal of historical baggage as a result. Technological innovation and business development often complement each other, but they can also restrict each other and cause various problems. The latter is the case with DataWorks. The product has some long-standing problems, which include slow access, extensive code changes required to fix a single bug, and environmental complexity. Previous iterations did not fundamentally upgrade DataWorks or resolve these problems. Rather, they only improved performance, optimized the underlying engineering structures, and reduced repeated code.

This article will take a look at how we can resolve some of the problems that have plagued DataWorks by adopting the wildly popular microservice architecture and explore how we can transform the technical architecture of DataWorks in a practical manner while avoiding jumping through several complicated engineering hoops.

Let's first discuss the pain points currently encountered by DataWorks users, which are the primary driving force for its technical transformation.

DataWorks has been upgraded multiple times over the years, with several versions developed in parallel and a couple of technology stack transformations at the frontend and backend. This has left a variety of historical problems. For example, its online applications are difficult to deprecate, and several relevant businesses still depend on external APIs that were developed five years ago.

These historical problems remain unsolved today, partly because the people previously in charge may have left the company. Services that run properly are left unattended, but once one of them is taken offline, we often receive complaints from users. Because Alibaba has a large user base, a bug in any on-screen function may cause a disaster. For example, I witnessed a flood of complaints and problem report tickets when a minor and rarely used function was missing from the new version.

A benchmark for big data development platforms, DataWorks features a simple interface with many useful functions. To rebuild such a data development platform that has been verified and modified by countless data development engineers in the various business operations at Alibaba, we have to consider what our platform has experienced over the past decade.

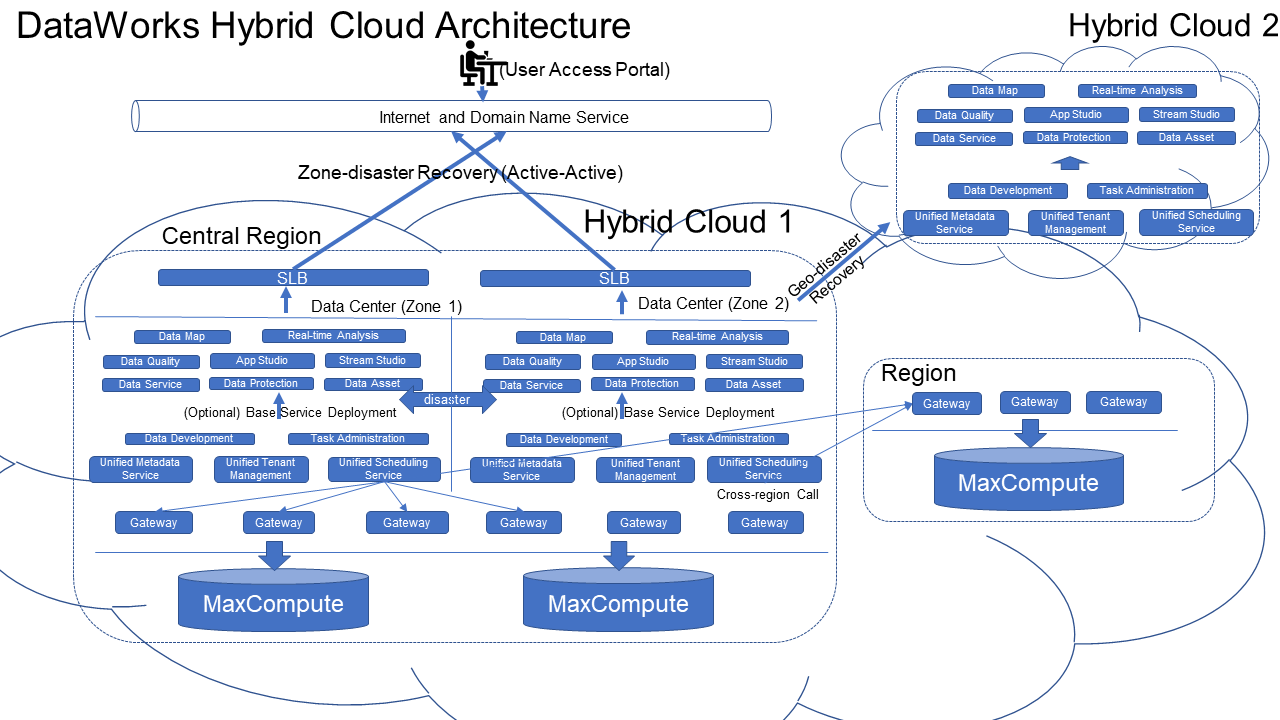

DataWorks runs in a relatively complex environment when compared with other runtime environments at Alibaba. To support operation in Apsara Stack, a hybrid cloud environment that is proprietary, independent, and closed, we have to redevelop DataWorks after the three-in-one version by using technologies that are available in all three environments, without depending on the sophisticated internal middleware system. When a dependency is missing from an environment and cannot be handled through an on/off switch, we develop a replacement in-house or adopt an open source system, provided that the dependency is not too complex.

Most kinds of problems related to network environments on the public cloud have to be solved manually. This results in a huge daily workload for manual troubleshooting. To survive the challenges of the trade war, we had to integrate the DataWorks runtime environment with a China-made chip dependent on the ARM instruction set, in addition to the x86 instruction set. To attract more users from small- and medium-sized enterprises, we need to tailor DataWorks to a more agile design.

Based on a large and complex architecture, DataWorks must be designed with detachable components in a flexible and lightweight manner, so it can run in increasingly complex software and hardware environments. With lightweight and detachable components, DataWorks can meet user expectations in different business scenarios. To survive competition, we have to empower DataWorks with flexible and evolving capabilities so that it can provide the most suitable solution at the lowest price.

Theoretically speaking, a service-oriented architecture (SOA) is prone to complex engineering linkages when it reaches a certain development stage. Initially appropriate designs may fall apart and boundaries may become fuzzy as the SOA is scaled up by different developers to meet more requirements. A typical example is the RESTful APIs between monolithic services. We can add but cannot remove elements of the API schema because the dependency provider does not know how many services depend on the API. For example, when a service on a hibernating server wakes up only to find that the API schema has changed, we have to restore the API schema so that the service can run properly. This problem is more prominent in architectures with frontend-backend isolation.
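
The add-only rule described above can be checked mechanically before a release. The following is a minimal sketch rather than DataWorks code: the schema representation (a dict that maps a field name to a type name) and the function names `breaking_fields` and `is_backward_compatible` are assumptions made for illustration.

```python
from typing import Dict, List

Schema = Dict[str, str]  # hypothetical representation: field name -> type name


def breaking_fields(old: Schema, new: Schema) -> List[str]:
    """Return the fields of `old` that `new` removed or retyped."""
    return sorted(name for name, type_name in old.items()
                  if new.get(name) != type_name)


def is_backward_compatible(old: Schema, new: Schema) -> bool:
    """A change is safe for unknown consumers only if nothing was removed or retyped."""
    return not breaking_fields(old, new)


# Hypothetical schema versions of one API response.
v1 = {"task_id": "int", "owner": "str"}
v2_added = {"task_id": "int", "owner": "str", "priority": "int"}
v2_removed = {"task_id": "int"}

print(is_backward_compatible(v1, v2_added))   # adding a field is allowed
print(breaking_fields(v1, v2_removed))        # a removed field is reported
```

A check like this only protects consumers that ignore unknown fields. It does not tell the provider how many services still read a field, which is why removal stays risky.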

A common solution is to minimize the impact of schema changes by configuring the backend to transparently transmit a large string to the frontend to avoid unresponsive pages. We are also dealing with complex engineering linkages in DataWorks after scaling up to a certain degree. We have to be extremely careful when modifying every function to avoid unknown effects on other modules. Otherwise, we will have to spend a lot of time investigating the impact of functional modifications. Even so, we may miss a minor detail, and a single bug may make all our efforts useless.

Cooperation among developers is often problematic. GitLab can solve code conflicts between versions, but cannot solve conflicts throughout the product release cycle. At Alibaba Cloud, we need to launch new features at a fast pace to meet requirements and release a major version monthly on the hybrid cloud, while packaging new features into Apsara Stack in a way similar to the waterfall model. We also have to release many features in different version iterations at different paces on the internal cloud, public cloud, and hybrid cloud.

The past deployment lockdown mechanism increased risks in the release window. After multiple people work to develop various features for a single SOA-based monolithic service, the features must be published at an appropriate frequency so that we do not create a huge gap between adjacent versions. The release frequency is even more important if the features depend on each other.

Next, placing a product onto the international market poses a series of complex problems, including the problem of different time zones, daylight saving time, languages, habits, and localized icons. Such problems are delicate and we have to deal with them when operating DataWorks in 20 regions in the world. We have made our wealth of experience in dealing with internationalization open source at https://github.com/alibaba/react-intl-universal.

Spring Boot provides starters to allow us to improve the code reuse rate and prevent the repeated occurrence of problems in the development process. However, a flawed starter may affect all dependent projects. A bug that is introduced when a highly relied upon starter is modified may cause a system crash.

Generally, a search engine finds optimal algorithms by filling candidate algorithms into different buckets. The optimal algorithm is the one that shows the best performance metrics as traffic is sent through the buckets. This is an architecture-based grayscale mechanism that does not require manual intervention for traffic redirection. Different search requests from an ingress are automatically distributed to different algorithm buckets. The optimal algorithm becomes apparent as access traffic increases.
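
The bucket idea can be illustrated with a small sketch. It assumes a hash-based assignment and a mean-score comparison; the bucket names, the `observed` scores, and both function names are hypothetical and do not describe any real search engine.

```python
import hashlib
from typing import Dict, List


def assign_bucket(request_id: str, buckets: List[str]) -> str:
    """Map a request to a bucket deterministically, with no manual switch involved."""
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    return buckets[int(digest, 16) % len(buckets)]


def best_bucket(scores: Dict[str, List[float]]) -> str:
    """Pick the bucket with the highest mean score; buckets without data are skipped."""
    means = {name: sum(vals) / len(vals) for name, vals in scores.items() if vals}
    return max(means, key=means.get)


buckets = ["ranker_a", "ranker_b", "ranker_c"]  # hypothetical candidate algorithms
scores: Dict[str, List[float]] = {name: [] for name in buckets}
# Hypothetical per-request metric for each algorithm, e.g. a click-through score.
observed = {"ranker_a": 0.2, "ranker_b": 0.6, "ranker_c": 0.4}

for i in range(1000):
    chosen = assign_bucket(f"query-{i}", buckets)
    scores[chosen].append(observed[chosen])

print(best_bucket(scores))
```

Because the assignment depends only on the request identifier, the same request always lands in the same bucket, and more traffic simply means more samples per bucket.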

An SOA-based grayscale mechanism depends on predefined switches (On/Off). This is especially the case for DataWorks. To verify whether a function is defective, we often ask a frontend developer to design a switch mechanism to let some users try the new function, while we can adjust the function to solve bugs based on their feedback. This can also minimize possible negative impacts on end users.
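
A switch of this kind might look like the following sketch. The class `FeatureSwitch`, its fields, and the feature name are hypothetical; the point is that every such switch has to be designed, wired in, and flipped by hand.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class FeatureSwitch:
    """A hand-written on/off switch with a trial-user list (hypothetical design)."""
    name: str
    enabled: bool = False        # master switch: off hides the function from everyone
    open_to_all: bool = False    # flipped manually once the trial looks healthy
    trial_users: Set[str] = field(default_factory=set)

    def is_on_for(self, user_id: str) -> bool:
        if not self.enabled:
            return False
        return self.open_to_all or user_id in self.trial_users


switch = FeatureSwitch(name="new_table_editor", enabled=True, trial_users={"alice"})
print(switch.is_on_for("alice"), switch.is_on_for("bob"))

switch.enabled = False  # turn the function off again if trial users report a bug
print(switch.is_on_for("alice"))
```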

However, this grayscale mechanism is not repeatable, cannot be applied at will, and does not arise naturally from the architecture. It is costly to implement due to the manual intervention and the related design and development work required. Some developers even do away with grayscale verification to avoid these annoyances. Moreover, under a conventional architecture, the grayscale mechanism is ineffective when the functions to be verified are highly localized or unrelated to users or the workspace. If we do not know whether users will actually use a function, it is difficult for us even to start on the design.

The architecture-based grayscale mechanism is not applicable to SOA-based monolithic services. However, the mechanism can be used for the large number of machines in the gateway cluster of Alisa, an underlying scheduling service of DataWorks. We can deploy the new version for verification on a small portion of the hundreds of gateways and locate problems by comparing different versions after version delivery. However, the number of machines deployed for monolithic services at the DataWorks backend is far less than the number in the gateway cluster. As a result, most SOAs support grayscale mechanisms based on manual intervention rather than architecture-based grayscale mechanisms.

Externally associated services are complex, unreliable, and unstable. They involve constant changes and are prone to downtime or network interruption. What's worse, notifications on external service upgrades are not promptly sent, causing frequent failures. This problem is especially prominent for Data Integration, an application used to move data among dozens of engines and thousands of database instances. To overcome the uncertainty introduced by external services, we use extremely robust designs for applications with many dependencies. However, this greatly complicates code logic and makes it difficult to locate sporadic problems in the highly robust code. A P1 major fault may occur when problems accumulate to reach the fault threshold.

We are currently short-handed at the frontend when operating the DataWorks R&D platform. This is a common problem for all R&D teams of highly interactive products. We can reuse only a limited number of frontend components due to a wide range of differences at the frontend in terms of interaction, style, business, and our business knowledge in the R&D process. Cost transfer is supported only by a small number of class libraries, components, and styles at the frontend.

Moreover, our frontend developers have expended a great deal of effort in tuning styles and interactions to implement a design model based on a mainstream frontend framework with frontend-backend isolation. This provides a better user experience than the combined frontend-backend design. We hope that every design made by frontend developers can be reused.

In addition to the major pain points we've already mentioned, we also have to deal with pain points of lesser importance that affect the DataWorks R&D platform. For example, we often need to make extra effort to provide experimental functions for trial use so that we can see how it is used. Then, we can provide the results for the product designer's (PD) reference. For example, the requirements of a data developer may not be accurately understood by the PD or an R&D engineer. Some data development teams want to directly develop required functions on our platform. If we cannot make the platform ready in the short term, they may build a portal by themselves to develop the tools they need. To solve these problems, we need to change our architecture design to meet the individual needs of both internal and external users.

The DataWorks R&D platform provides a range of functions to assist with daily development work. Users can experience the design features of various functions when using the platform. This is something that is still lacking in platform R&D in general. The PD and the user experience designer (UED) collect requirements and try out the functions themselves. However, without a background in data development, the PD and UED cannot experience the subtle disappointment that is unique to data developers after long-term use. The usage of the DataWorks R&D platform varies greatly in different sectors, like finance, banking, government, large state-owned enterprises, Internet companies, traditional enterprises, private enterprises, and education. Some customers may not know how to use DataWorks. Moreover, users' needs vary and they have different knowledge and skills.

After frontline delivery teams or companies apply DataWorks in fields we have not considered, requirements are collected from these industries and sent to the PD for analysis. Frontline teams can package some DataWorks APIs and provide them as products to customers in specific industries to help solve their problems.

New products are being planned. The engine team uses DataWorks to improve the user-friendliness of designed products. It is difficult to scale up DataWorks to meet the requirements of product planning and improvement if only developers are working according to the schedule. Considering the frontend and backend architectures and countless instances of cooperation and competition, we need to achieve a technical revolution to break away from the SOA and introduce more user-side R&D capabilities. We hope this will allow us to make DataWorks more robust.

Rod Johnson, the founder of the Spring Framework, believes that technologies evolve at a gradual pace. Johnson believes that the proverb "Don't Reinvent the Wheel" has implications in technology circles. He also believes that there is no "best" architecture, only the most appropriate architecture. This is reflected in his works on evidence-based architecture. Johnson's ideas formed the prototype of the framework evolution theory, which holds that architectures must evolve continuously to meet changing business requirements.

In a conventional SOA, services tend to be stable and centralized. Monolithic services are equal or similarly structured. Service dependencies take the form of network communication. Each SOA-based monolithic service may be jointly developed by multiple developers. Replacing a single monolithic service is a daunting task. It takes a massive amount of manpower to carry out a major technology stack change, such as upgrading WebX 3.0 to the Spring Web model-view-controller (MVC) framework or upgrading Spring MVC to Spring Boot. The upgrade cycle is often measured in years. In addition, it is impossible to replace web services designed on the J2EE platform with services written in the Go language or in Django, which is a high-level Python web framework. Technical systems are so deeply rooted that it is difficult for us to revolutionize our architecture.

In a conventional SOA, we can improve engineering efficiency by extracting reusable code into class libraries in a second-party package so that the code can be called by different monolithic services as a dependency. This practice may complicate configuration, but it adheres to the principle "Don't Reinvent the Wheel."

When trying to design a new architecture in 2015, I used a different approach. I abstracted reusable code into a second-party package and used an automatic code generator to generate the code that could not be abstracted. A proven SOA project is basically stable from the directory structure to the configuration assembly. Any changes are minor adjustments according to the three-layer MVC architecture. Therefore, we can use an automated code generation tool to generate code that cannot be abstracted, or that contains complex logic and requires flexible adjustment, and generate more functions than strictly needed wherever possible. Developers can then remove as much as possible from the generated project code or modify only a few parts of the code according to the business logic. This improves project tidiness and development efficiency.

Services can also be reused. We design centralized monolithic services to encapsulate functions that contain complex logic, are slow to start, or require caching in core services. This reduces the volume and complexity of applications dependent on centralized services, but may cause a single point of failure. However, it is not difficult to ensure the reliability of core services of a certain volume.

Even so, there is still a ceiling on improving SOA efficiency. The efficiency of project creation early on is difficult to maintain throughout long-term business growth. The pain points mentioned earlier gradually emerge and no effective solution has yet been devised. Developers can cooperate with each other efficiently by using the same technology stack or project directory tree structure. However, this prevents the team from implementing internal competition in advanced technologies. Some R&D engineers try to figure out how to improve efficiency and performance while working on an outdated framework. They ignore the fact that substantial changes often come from a new technology stack or a different language. As the ecosystem built on Google's Kubernetes system has matured, we are trying to figure out how to technically transfer DataWorks to the flexible microservices model. This is, again, a reflection of the belief that there is no best architecture, only the most appropriate architecture.

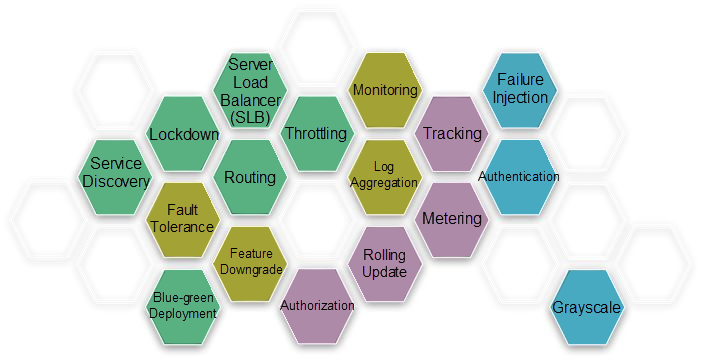

Microservices are associated with the cloud native architecture, which has the following three features:

With these three features, cloud native is well-suited to running microservices. The microservices model can be used to manage the engineering groups of products that have been developed to a sufficient volume, which have many subproducts and complex interactions and dependencies. This article does not detail the basic concepts and general practices of microservices.

Transforming to the microservices model may not solve all the problems of the DataWorks R&D platform with its many products and applications, but it can solve the problems arising from the current development mode.

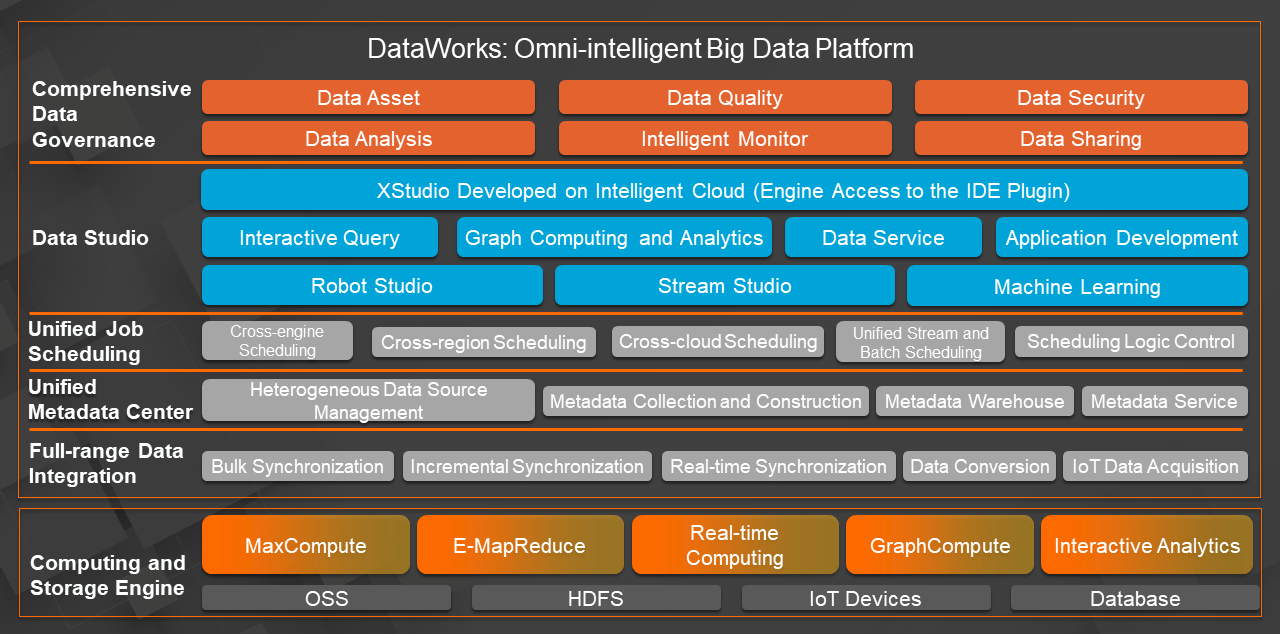

The DataWorks R&D platform is a typical platform-as-a-service (PaaS) application, but Data Services is developed at the software as a service (SaaS) layer. We can use the microservices model to solve SOA-related pain points and provide custom capabilities to customers. Gradually, we will switch our R&D work from PaaS to SaaS.

By leveraging the microservices model based on Kubernetes containerization, we can develop a microservice platform for developers to integrate the DataWorks APIs. Developers can also integrate the APIs of external applications to consolidate data and compile business logic in microservices. This allows developers to expose a series of APIs that are accessible from the DataWorks frontend and used by frontend function modules. Some pain points can be solved in this process.

We can gradually divide the outdated architecture into loosely coupled monolithic services and replace the functions included in these services one by one instead of all at once in a long cycle. We do not need to upgrade legacy projects but only need to keep elementary functions in operation. This avoids a long complete replacement cycle and the resulting faults and rollback difficulty. We can perform canary release to verify whether new piecemeal services perform as well as the functions of the corresponding modules of the outdated architecture. Then, we can perform blue-green deployment to quickly unpublish problematic services. We can also perform A/B testing to identify services with higher performance and better design.
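
The canary step described above can be sketched as a deterministic traffic split followed by a promote-or-rollback decision. This is an illustration under stated assumptions, not the DataWorks implementation: the names `route` and `next_step`, the service labels, and the `tolerance` threshold are all hypothetical, and in practice the split is usually configured at the gateway or mesh layer rather than in application code.

```python
import zlib


def route(request_key: str, canary_percent: int) -> str:
    """Send a stable share of traffic to the new service and the rest to the old module."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    slot = zlib.crc32(request_key.encode("utf-8")) % 100  # same key -> same slot
    return "new-service" if slot < canary_percent else "legacy-module"


def next_step(legacy_error_rate: float, new_error_rate: float,
              tolerance: float = 0.01) -> str:
    """Decide whether to widen the canary or switch traffic back."""
    if new_error_rate > legacy_error_rate + tolerance:
        return "rollback"
    return "promote"


keys = [f"user-{i}" for i in range(10_000)]
share = sum(route(k, 10) == "new-service" for k in keys) / len(keys)
print(f"share routed to the new service: {share:.2%}")
print(next_step(legacy_error_rate=0.02, new_error_rate=0.08))
```

Setting `canary_percent` to 0 or 100 gives the two ends of a blue-green switch, so one routing rule can cover both release styles.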

Let's look at personalized requirements as an example. By using the microservice platform coupled with DataWorks businesses and establishing R&D-capable business teams, such as the data or report development team, we can design requirements as microservices at the DataWorks backend. Then, the business team can carry out design and development on certain pages by using plugins at the frontend. We can connect engines to DataWorks in the same way and simplify the access to some modules under DataWorks, such as checkers and powerful custom nodes. You can access these modules after completing simple development as instructed in the documentation. However, access to custom functions is more complex. For example, a visualized table creation function is being designed for AnalyticDB in DataWorks. This function is highly complex and must be developed by connecting to frontend slots through microservices. This process, which will be described in detail later, achieves automatic access to complex business logic.

Let's take a look at the architecture-based grayscale mechanism. With the microservices model, you can easily implement blue-green deployment, canary release, and A/B testing. Microservice design should be field-oriented as much as possible. Of course, it is unlikely to be fully field-oriented. High cohesion and low coupling are still the design objectives of a single microservice. We can release multiple versions of a microservice to test whether problems in a certain field are solved. We can also release multiple microservices based on different frameworks or languages to find the optimal solution in a certain field. The release of microservices is effective and reliable when using the architecture-based grayscale mechanism and cloud native. Any problematic microservices can be promptly deprecated to prevent major impact.

For other pain points, we will not describe the specific solutions based on the microservices model. For example, extensive code changes are no longer required after the microservices model is fully built on the DataWorks platform. Each of us can manage multiple field-oriented microservices of a small size. When you want to redesign an interface, you can simply redesign the microservices under this interface at a low cost, rather than replace the interface. After traffic is switched to new microservices, you can unpublish the microservices under the old interface. For example, in Spring Boot, the coupling introduced by Starter is decoupled through service discovery in the microservice framework, and dependencies at the code level are no longer needed for coupling.

In this section, I would like to focus on microservices. Microservices do not necessarily indicate services of a small size. Microservices are based on the SOA but are more lightweight and field-oriented than conventional SOA-based services. For example, Robot Factory allows you to configure intent redirection to your designed response logic. This function is currently developed based on the microservices model. After the function is published, you can design a language-independent response microservice based on input. This function ensures that properly running microservices are not affected by the malfunction of poorly designed microservices. Robot Factory solutions can be designed as a function as a service (FaaS) so that you can implement a custom response logic by compiling functions. In this case, usage is measured by traffic.

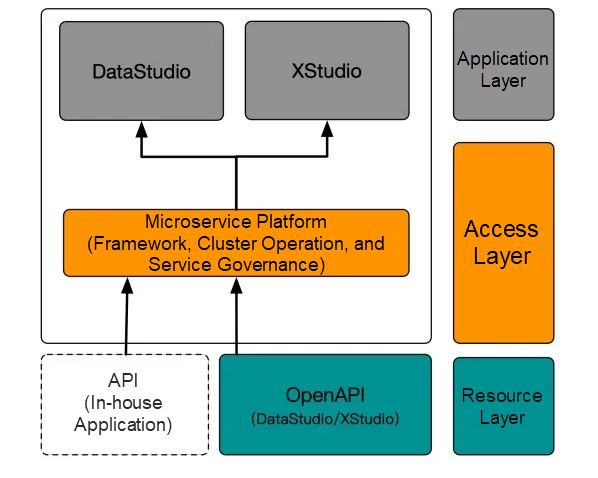

Robot Factory applied to microservices

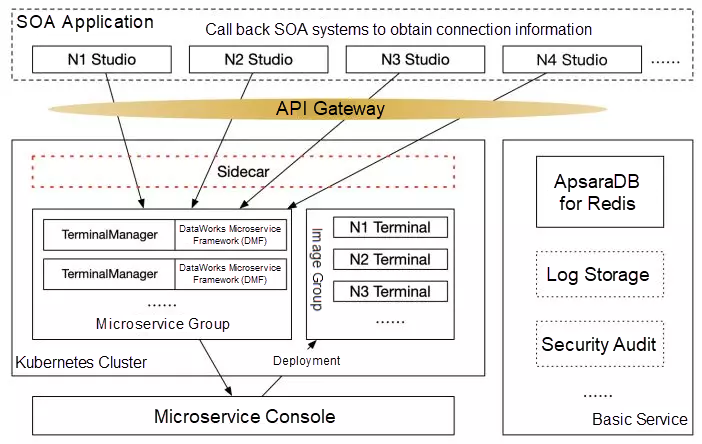

The DataWorks team designed a microservice platform based on service mesh, which can encapsulate some workloads into frontend microservices. System-level microservices and developer-designed microservices run in the same pod, which simplifies the developer-designed microservices. The service mesh plays a role similar to HandlerInterceptor or Filter in the Spring framework, where developers familiar with aspect-oriented programming (AOP) write interceptors and filters inside each project. In the microservices model integrated with service mesh, system-level microservices take the place of some conventional interceptors, such as logon redirection, permission control, service discovery, throttling, monitoring, and log aggregation.
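
The interceptor analogy can be made concrete with a short sketch. It models cross-cutting concerns as a chain wrapped around a business handler inside one process, which is how in-project interceptors work; a service mesh moves the same concerns into separate system-level containers. All names here (`require_login`, `make_logger`, `wrap`, `business_handler`) are hypothetical.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]
Handler = Callable[[Request], str]
Interceptor = Callable[[Request, Handler], str]


def require_login(request: Request, next_handler: Handler) -> str:
    """Redirect anonymous requests before they reach business code."""
    if "user" not in request:
        return "302 redirect to login"
    return next_handler(request)


def make_logger(log: List[str]) -> Interceptor:
    """Build an interceptor that records every request path it sees."""
    def log_access(request: Request, next_handler: Handler) -> str:
        log.append(f"path={request.get('path', '')}")
        return next_handler(request)
    return log_access


def wrap(handler: Handler, interceptors: List[Interceptor]) -> Handler:
    """Wrap the handler so that interceptors run in list order."""
    wrapped = handler
    for interceptor in reversed(interceptors):
        wrapped = (lambda req, i=interceptor, nxt=wrapped: i(req, nxt))
    return wrapped


def business_handler(request: Request) -> str:
    # Business code only: no logon or logging logic here.
    return f"200 hello {request['user']}"


access_log: List[str] = []
app = wrap(business_handler, [make_logger(access_log), require_login])
print(app({"path": "/tables"}))
print(app({"path": "/tables", "user": "fanti"}))
print(access_log)
```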

Service mesh

The service mesh allows you to focus on business without having to consider logon configuration and log configuration during project development. We have designed the DataWorks Microservice Framework (DMF) in different languages to help you quickly get familiar with microservice development. In the future, we will publish the development and design details of the DMF so that more business teams can contribute their most appropriate microservices models. To better support DevOps, which is strongly related to our business, we have developed DataWorks Microservice Platform (DMSP) to control the deployment and release of microservices and conduct O&M work such as service governance.

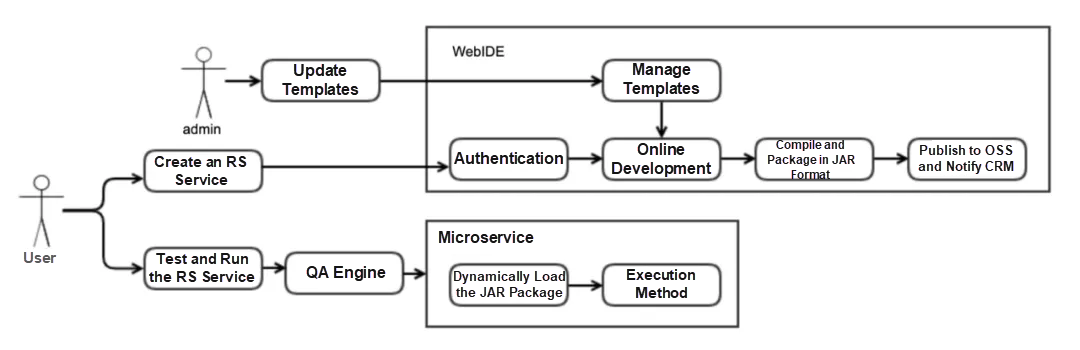

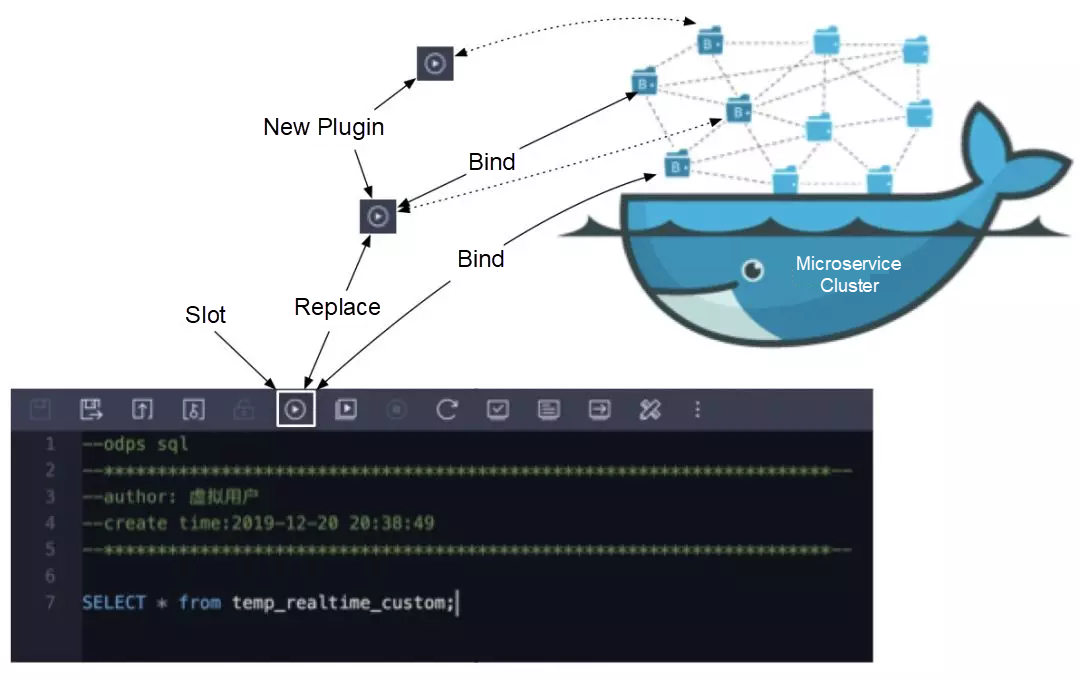

Our frontend team developed the XStudio plugin, which can be integrated with backend microservices to form a complete solution. The DataWorks frontend team hopes to explore methods to improve the frontend R&D efficiency. The XStudio plugin is implemented based on single-spa and Qiankun. The framework provides the multi-instance mode, slot mechanism, visual plugin orchestration, and other important features, further improving the plugin development efficiency. The following figure shows the schematic drawing of the XStudio plugin.

At the frontend, we can reserve slots on XStudio-designed pages for any type of component, such as buttons. Then, we can bind a microservice to each component and quickly assemble on-page functions by replacing the slot-housed component and backend microservice. A function can be used in different scenarios after being developed only once. To provide slot-housed content, the business development team can design a frontend- and backend-compatible plugin and place the plugin in a frontend slot. This enables custom development to meet personalized needs.
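
As a language-neutral model of the slot mechanism, the sketch below reserves named slots on a page and binds each plugged component to a backend microservice address. It is a conceptual illustration in Python, not XStudio code: the `Plugin` and `Page` classes, the component names, and the example URLs are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Plugin:
    component: str      # frontend component placed in the slot
    service_url: str    # backend microservice that the component calls


class Page:
    """A page that reserves named slots and lets plugins be swapped in and out."""

    def __init__(self, slot_names: List[str]) -> None:
        self._slots: Dict[str, Optional[Plugin]] = {name: None for name in slot_names}

    def plug(self, slot: str, plugin: Plugin) -> None:
        if slot not in self._slots:
            raise KeyError(f"page reserves no slot named {slot!r}")
        self._slots[slot] = plugin  # replaces whatever occupied the slot before

    def layout(self) -> Dict[str, str]:
        """Describe the filled slots as 'component -> service' pairs."""
        return {slot: f"{p.component} -> {p.service_url}"
                for slot, p in self._slots.items() if p is not None}


page = Page(["toolbar-button", "editor"])
page.plug("editor", Plugin("SqlEditor", "http://sql-service.example/api"))
page.plug("editor", Plugin("TableDesigner", "http://table-service.example/api"))
print(page.layout())
```

Swapping the plugin in the `editor` slot changes both the visible component and the microservice behind it, while the page itself stays untouched.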

In conventional plugin-based design, developers either provide second-party packages compliant with an API protocol or provide a series of protocol-compliant APIs and export these APIs to the frontend through an SOA. However, such a design causes many problems, such as SOA service intrusion, reduced security and reliability of SOA services, limits posed by programming languages, and inflexibility. These problems can be solved by microservices and plugins.

For designs that take up more page space, we can set large areas as replaceable components, such as the editor in the preceding figure. Users can replace the page content in these areas by themselves, associate one or more backend microservices, and embed them into the frontend pages of DataWorks. This allows the business team to implement more complex custom business logic. AnalyticDB provides a visualized table creation function that was developed based on the preceding solution and connected to DataWorks by the AnalyticDB engine team.

Data monitoring and alerting are an important part of the frontend system. We have designed multidimensional reports and metrics, and we use automatic full instrumentation to monitor the usage of components that belong to internal businesses as well as components written by external business teams. As a result, component developers do not have to write additional monitoring code.

Heat map

Some XStudio-based frontend components are combined with backend microservices to implement plugin encapsulation, such as Terminal, DWEditor, directory tree, and checker. For example, after the Terminal plugin is designed, it is inserted into different studios and connected to different engines. Container instances can be automatically scaled up or destroyed based on actual usage, saving runtime resources.

After the Terminal plugin is connected to multiple engines, microservices at the frontend and backend do not need to be redeveloped, improving the development efficiency. The plugin encapsulation design saves development resources and allows multiple applications to use a set of microservices in a centralized manner. Elastic orchestration and automatic scaling ensure service performance without wasting server resources.

Architecture design of the Terminal plugin

We can implement SaaS applications based on the microservices model, such as FaaS, backend as a service (BaaS), and backend for frontend (BFF). For example, BFF can reduce the network consumption of H5 pages on mobile terminals with DataWorks enabled. The interfaces provided by multiple backend microservices are assembled by Gateway and provided to mobile terminals. This achieves the aggregation of microservices. BFF supports server-side rendering (SSR), allowing you to render your application to an HTML string on the server and then send it to the browser. This reduces the rendering performance loss on mobile terminals.
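
The aggregation role of a BFF can be sketched as one function that calls several backend services and returns a single trimmed payload, so that a mobile page makes one request instead of many. The function `mobile_overview`, the two stub services, and the field names are hypothetical and stand in for real microservice calls.

```python
from typing import Callable, Dict, List

Fetcher = Callable[[], List[Dict[str, str]]]


def mobile_overview(fetch_tasks: Fetcher, fetch_alerts: Fetcher,
                    limit: int = 3) -> Dict[str, object]:
    """Call several backend services and return one compact payload for a mobile page."""
    tasks = fetch_tasks()
    alerts = fetch_alerts()
    failed = [t["name"] for t in tasks if t["status"] == "FAILED"]
    return {
        "failed_tasks": failed[:limit],  # only what the small screen can show
        "failed_total": len(failed),
        "open_alerts": len(alerts),
    }


# Stubs standing in for two backend microservices (hypothetical data).
def task_service() -> List[Dict[str, str]]:
    return [{"name": "daily_orders", "status": "FAILED", "sql": "..."},
            {"name": "hourly_clicks", "status": "SUCCESS", "sql": "..."}]


def alert_service() -> List[Dict[str, str]]:
    return [{"id": "a-1", "rule": "delay"}]


print(mobile_overview(task_service, alert_service))
```

Large fields such as `sql` never leave the server side, which is where the saving in mobile network traffic comes from.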

DMSP is designed to integrate frontend and backend services through DataWorks. DMSP binds the release of frontend components to backend microservices and encapsulates frontend and backend services that have been deployed as business plugins through Swagger. The frontend and backend members of a team can implement DevOps on DMSP and publish new functions to customers through continuous delivery.

DMSP is applicable to the internal cloud, public cloud, and hybrid cloud. We can use DMSP to continuously deliver plugins that have been developed to 20 regions on the public cloud and package microservices in Apsara Stack. DMSP shields plugin developers from complex external deployment environments.

We hope to design most page content in DataWorks as flexible, lightweight, and detachable plugins. Development modes and product profiles are increasingly architecture-driven.

An ecosystem is an evolving technical system. We build ecosystems to survive in competition. The microservices model is superior to evidence-based architecture in terms of evolving capability. Evidence-based architecture is a use-it-or-lose-it route of technology evolution with a top-down design, whereas the microservices model adopts a survival of the fittest approach with a bottom-up design. Each microservice is equally encapsulated in a container, independent of languages and frameworks. We can develop multiple microservices for a function in a similar way to using algorithm buckets. Then, we select the microservice that can best implement the function. Under ideal conditions, applications can be self-built into best practices after evolution without the intervention of upper-layer architects. The best practices under ideal conditions are impossible in the real world due to limiting factors, such as developers' technical prowess, external dependencies, and KPIs. However, the microservices model allows us to approach the ideal conditions through teamwork.

The microservices model also allows us to conduct custom development for vertical business. Field-oriented delivery teams can use the plugin capability of DataWorks to build a custom smart R&D platform that can be adapted to different industries. We can build an innovative ecosystem on the DataWorks R&D platform to provide customers with more diverse choices. The microservices model will drive the ecosystem to evolve in a more competitive direction.

The DataWorks R&D team hopes to use the microservices model to consolidate Alibaba Cloud Intelligence projects and build mutually beneficial cooperation on the internal cloud and public cloud.

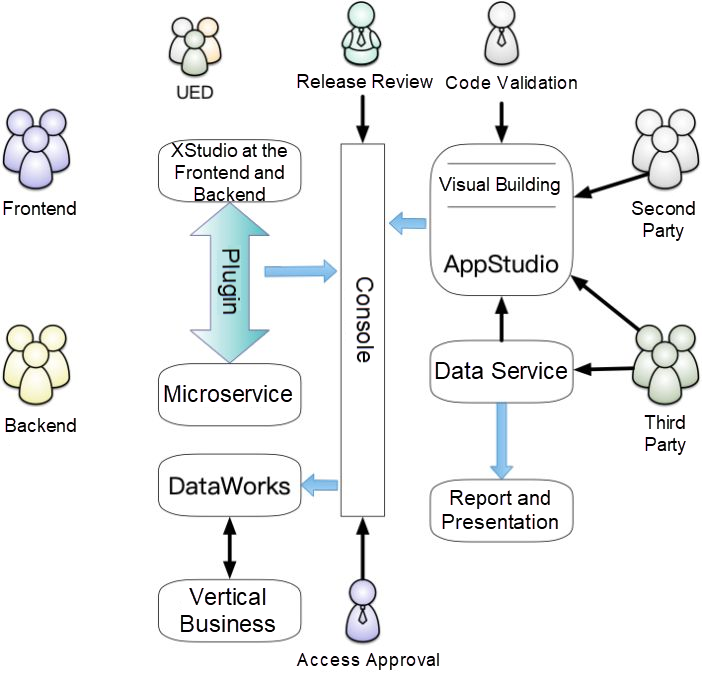

An architecture must not only solve technical problems but also provide guidance on human resource allocation. While using the microservices model for development, the DataWorks R&D team considers how to divide responsibilities between the frontend and backend. We form framework teams at both the frontend and backend, which are responsible for guiding the design of frontend components and backend microservices to influence the evolution of the microservices model. These teams check whether each plugin is field-oriented and review the plugin design schemes submitted by second parties (non-team members on the internal cloud) and third parties (delivery teams on the public cloud or industrial developers on the customer side). This can prevent flawed applications from affecting system construction.

The microservices model removes technical gaps due to its language independence. If you are familiar with Node.js, you can also design microservices by combining technologies at the frontend and backend. We developed App Studio for WebIDE, with support for the data egresses of data services, FaaS functions, and microservice development. You can use DataWorks, an omni-intelligent big data platform, to develop data, design reports, write business logic through microservices, and export data as needed.

Architecture-driven transformation of responsibilities

The preceding figure shows how to divide the responsibilities of a team in the future, allowing R&D engineers at the frontend and backend to solve pain points, help users overcome technical difficulties, and build a vigorous ecosystem.

What is the future of technology and architecture? In my view, the R&D technologies for software engineering are an increasingly intelligent field with no boundaries. Many DataWorks products are designed with intelligent capabilities. For example, we apply the Markov algorithm to the intelligent programming plugin based on VSCode. The future of the R&D team depends greatly on the system architecture. We encourage innovation and exploration on the frontiers and boundaries of technology. We must not let conventions limit the way we think. If intelligent programming starts to replace manual development, developers can take on new responsibilities in the new architectures by changing the architecture design.

The world is changing, but there are patterns to this change. Our technological vision is to build a mature and evolving engineering system for this changing world. We look forward to the future, embrace change, and go beyond the cloud.
