By Zhu Zhu (Changgeng)

Flink is a distributed data processing framework. Users submit their business logic to a Flink cluster in the form of jobs. As Flink's engine, Flink Runtime is responsible for running these jobs so that they complete normally. The jobs can be stream processing or batch processing jobs, and they can run on bare-metal machines or on Flink clusters. Flink Runtime must support all of these job types and run under all of these conditions.

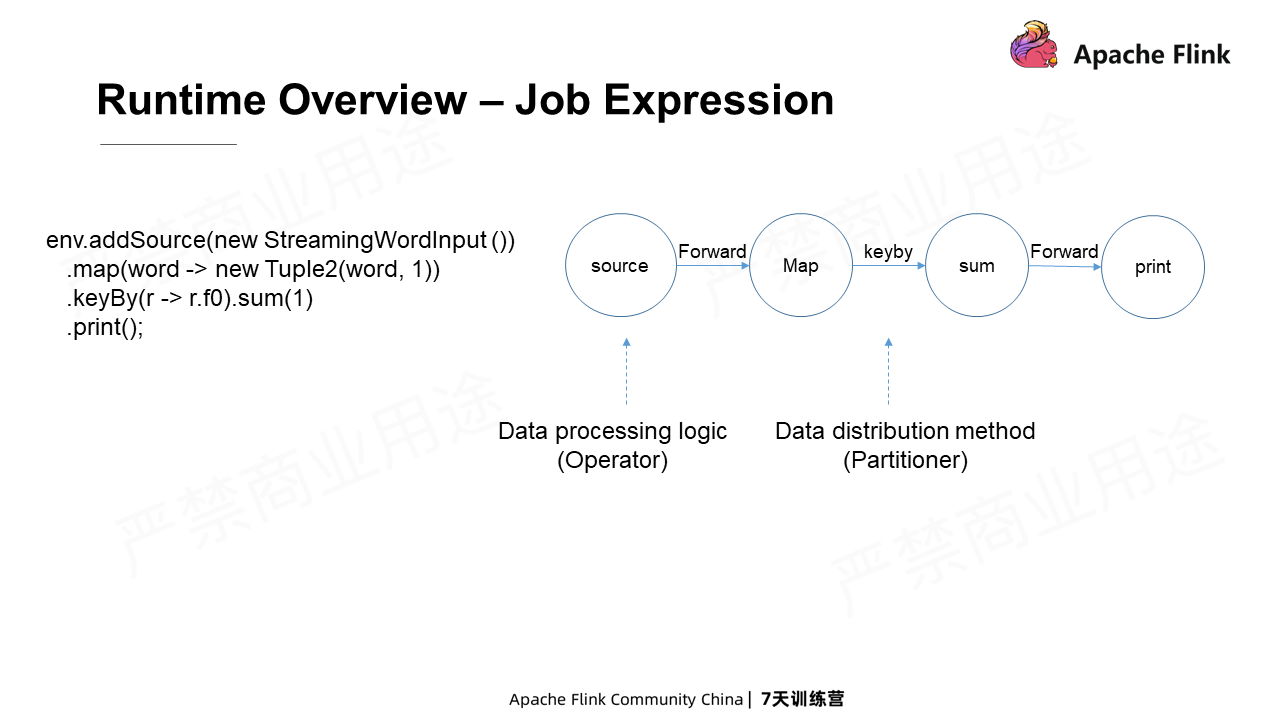

Before looking at how a job is executed, it is necessary to understand how a job is expressed in Flink.

Users write a job through the API. In the example on the left of the preceding figure, the StreamWordInput source continuously outputs words one by one. The subsequent Map operation maps each word to a two-element tuple. A keyBy then groups the tuples with the same word together, sum adds them up, and the final results are printed.
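To make the dataflow concrete, here is a minimal single-process Python sketch of the same logic. It does not use Flink's API: `stream_word_input` and `word_count` are hypothetical stand-ins for the StreamWordInput source and the map, keyBy, sum, and print operators, and the per-key dictionary plays the role of the state kept by sum.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple


def stream_word_input(words: Iterable[str]) -> Iterator[str]:
    """Hypothetical source: emits words one by one."""
    for word in words:
        yield word


def word_count(words: Iterable[str]) -> list[Tuple[str, int]]:
    """Simulates source -> map -> keyBy -> sum -> print on one machine."""
    running_sums: dict[str, int] = defaultdict(int)  # per-key state held by "sum"
    emitted: list[Tuple[str, int]] = []
    for word in stream_word_input(words):
        pair = (word, 1)               # map: word -> (word, 1) tuple
        key, count = pair              # keyBy: group by the word itself
        running_sums[key] += count     # sum: update the running total for this key
        result = (key, running_sums[key])
        print(result)                  # print: the sink
        emitted.append(result)
    return emitted
```

Because the input is a stream, sum emits an updated (rolling) total for every incoming record: calling `word_count(["flink", "runtime", "flink"])` prints `('flink', 1)`, `('runtime', 1)`, and then `('flink', 2)`.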

The job on the left corresponds to the logical topology (StreamGraph) on the right. The topology has four nodes: source, map, sum, and print. These nodes are data processing logic, also called operators. The edges between nodes indicate how data is distributed to downstream nodes. For example, the keyBy between map and sum means that records produced by map with the same key must be sent to the same downstream sum instance.
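The keyBy guarantee can be illustrated with a simplified partitioner that routes every record with the same key to the same downstream instance. This is an assumption-laden sketch: the hypothetical `partition_by_key` uses CRC32 of the key modulo the downstream parallelism, whereas Flink's real assignment goes through key groups and differs in detail.

```python
import zlib
from collections import defaultdict


def partition_by_key(records: list[tuple[str, int]],
                     parallelism: int) -> dict[int, list[tuple[str, int]]]:
    """Route (key, value) records to downstream instances by key hash."""
    channels: dict[int, list[tuple[str, int]]] = defaultdict(list)
    for key, value in records:
        # crc32 is deterministic across runs, unlike Python's salted hash() for str
        target = zlib.crc32(key.encode("utf-8")) % parallelism
        channels[target].append((key, value))
    return dict(channels)


records = [("flink", 1), ("runtime", 1), ("flink", 1), ("job", 1)]
routed = partition_by_key(records, parallelism=2)
```

Whatever instance a key lands on, all of its records land there too, which is exactly what allows each sum instance to keep a correct per-key total.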

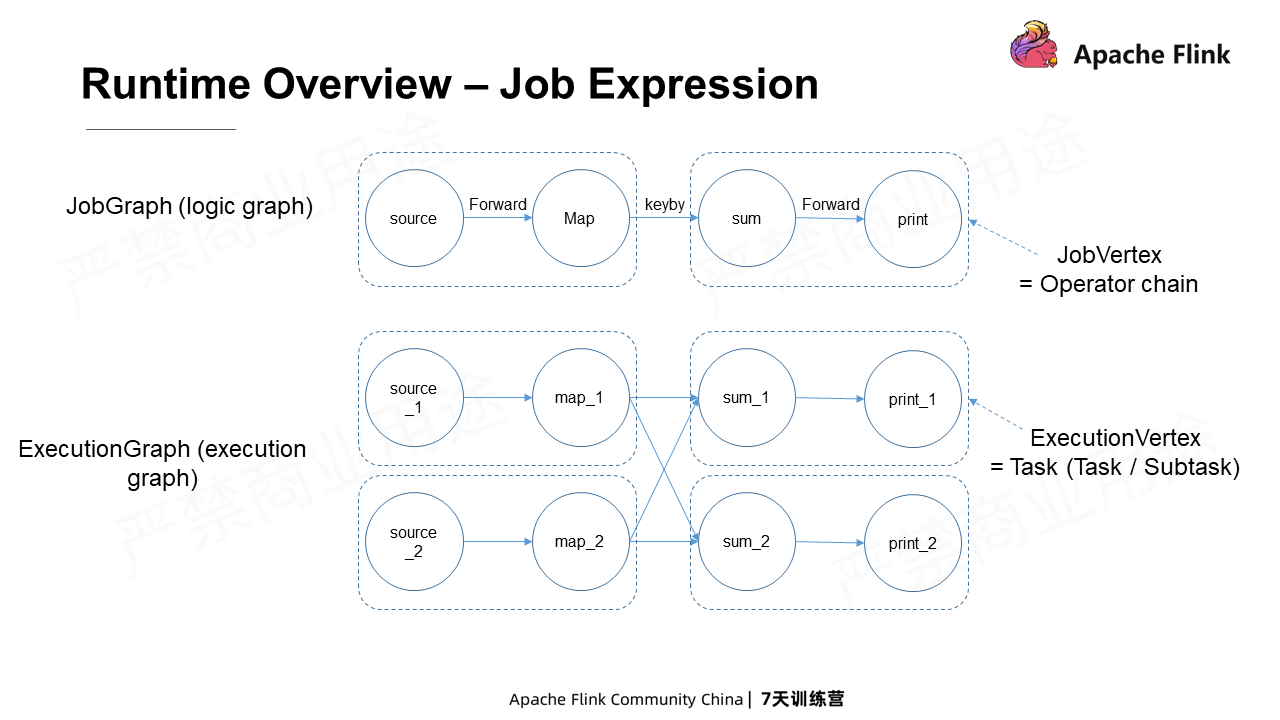

Flink Runtime further translates the StreamGraph into a JobGraph. Unlike the StreamGraph, the JobGraph chains some nodes together into operator chains. Two operators can be chained only if they have the same parallelism and exchange data one-to-one. Each resulting operator chain is a JobVertex.
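The chaining condition can be expressed as a small check over the source, map, sum, and print topology. This sketch is a simplification: `Edge`, `can_chain`, and `build_chains` are hypothetical names, only linear pipelines are handled, and Flink's real chaining rules include further conditions (for example, a single input and the same slot sharing group) that are omitted here. Only the two conditions named above are modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    upstream: str
    downstream: str
    partitioning: str  # "forward" (one-to-one) or e.g. "hash" for keyBy


def can_chain(edge: Edge, parallelism: dict[str, int]) -> bool:
    """Chainable only with one-to-one exchange and equal parallelism."""
    return (edge.partitioning == "forward"
            and parallelism[edge.upstream] == parallelism[edge.downstream])


def build_chains(operators: list[str], edges: list[Edge],
                 parallelism: dict[str, int]) -> list[list[str]]:
    """Group a linear pipeline of operators into chains (JobVertices)."""
    chainable = {(e.upstream, e.downstream) for e in edges if can_chain(e, parallelism)}
    chains: list[list[str]] = [[operators[0]]]
    for prev, op in zip(operators, operators[1:]):
        if (prev, op) in chainable:
            chains[-1].append(op)   # extend the current chain
        else:
            chains.append([op])     # start a new JobVertex
    return chains


operators = ["source", "map", "sum", "print"]
edges = [Edge("source", "map", "forward"),
         Edge("map", "sum", "hash"),       # keyBy: not one-to-one
         Edge("sum", "print", "forward")]
chains = build_chains(operators, edges, {op: 2 for op in operators})
```

With every operator at parallelism 2, `chains` is `[["source", "map"], ["sum", "print"]]`: the keyBy edge breaks the chain between map and sum.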

Operator chaining reduces unnecessary data exchange by letting chained operators run in the same place. During actual job execution, the logical graph is further translated into the execution graph, the ExecutionGraph, which is the parallel view of the logical graph. The figure above shows the ExecutionGraph when every operator in the logical graph has a parallelism of 2.

Why can't map and sum in the figure above be chained? Because with keyBy, each map instance may send data to multiple downstream sum instances, so the data is not exchanged one-to-one. Each JobVertex in the logical graph corresponds to several parallel execution nodes, the ExecutionVertices, and each ExecutionVertex corresponds to one task. These tasks are ultimately deployed to Worker nodes, where they execute the actual data processing logic.
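The expansion from JobVertex to ExecutionVertex can be sketched as follows. The helper names are hypothetical; the point is only that a JobVertex with parallelism p yields p ExecutionVertices (one deployable task each), that a one-to-one edge connects subtask i to subtask i, and that a keyBy edge connects every upstream subtask to every downstream subtask.

```python
def expand(job_vertices: dict[str, int]) -> list[tuple[str, int]]:
    """Turn each JobVertex (name -> parallelism) into its ExecutionVertices."""
    return [(name, i) for name, p in job_vertices.items() for i in range(p)]


def connect(up: str, p_up: int, down: str, p_down: int, pattern: str):
    """Execution-level edges produced by one JobGraph edge."""
    if pattern == "forward":
        # One-to-one exchange requires equal parallelism on both sides.
        if p_up != p_down:
            raise ValueError("forward edges need equal parallelism")
        return [((up, i), (down, i)) for i in range(p_up)]
    # All-to-all, e.g. keyBy: any upstream subtask may send to any downstream one.
    return [((up, i), (down, j)) for i in range(p_up) for j in range(p_down)]


job_graph = {"source->map": 2, "sum->print": 2}
execution_vertices = expand(job_graph)
execution_edges = connect("source->map", 2, "sum->print", 2, "hash")
```

At parallelism 2 this gives four ExecutionVertices and four execution edges for the keyBy connection, matching the shape of the ExecutionGraph in the figure.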

As a distributed data processing framework, Flink has a distributed architecture that consists of Client, Master, and Worker nodes.

The Master node is the control center of a Flink cluster and contains one or more JobMasters. Each JobMaster corresponds to one job, and the JobMasters are centrally managed by a component called the Dispatcher. The Master node also contains a ResourceManager for resource management, which manages all Worker nodes and serves all jobs at the same time. In addition, the Master node runs a REST server that responds to REST requests from Clients, which include the web client and the command-line client. Client requests include submitting jobs, querying job status, and stopping jobs. Based on the execution graph, the tasks that make up a job are executed on Worker nodes. Workers are TaskExecutors, which are containers for running tasks.

Flink Runtime has three core components: the JobMaster, the TaskExecutor, and the ResourceManager. The JobMaster manages jobs, the TaskExecutor runs tasks, and the ResourceManager manages resources and serves resource requests from JobMasters.

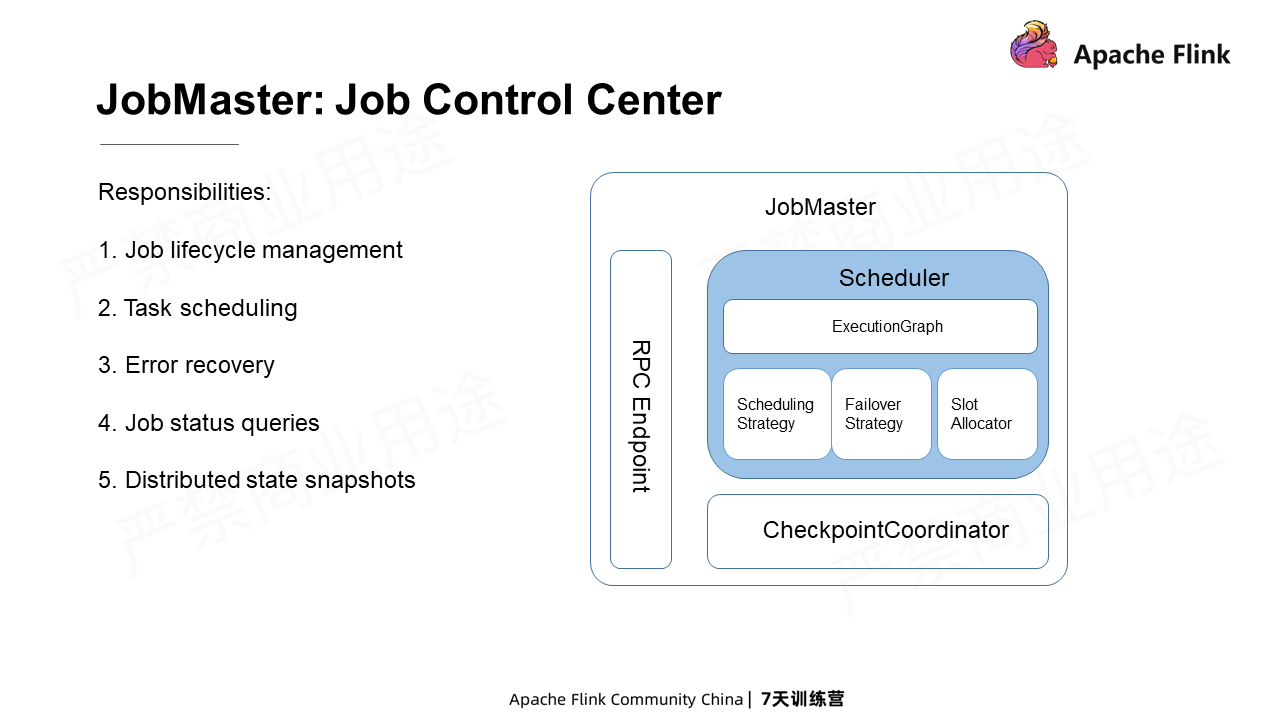

The JobMaster is mainly responsible for job lifecycle management, task scheduling, error recovery, status queries, and distributed state snapshots.

Distributed state snapshots include Checkpoints and Savepoints. Checkpoints are mainly used for error recovery, while Savepoints are mainly used for job maintenance, such as upgrades and migrations. Distributed snapshots are triggered and managed by the CheckpointCoordinator component.

The core component in the JobMaster is the Scheduler, which handles job lifecycle management, job status maintenance, task scheduling, and error recovery.

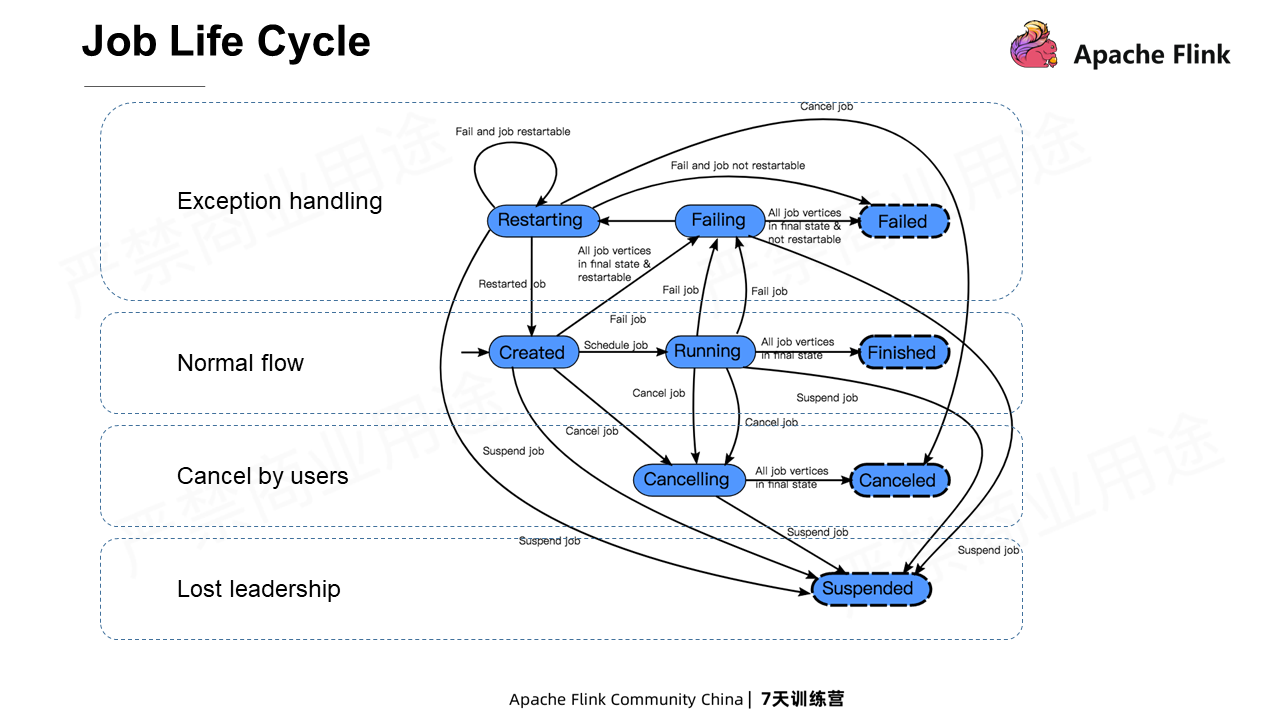

The figure below shows the job states in its life cycle and all its possible state changes.

In the normal case, a job goes through three states: Created, Running, and Finished. A job starts in the Created state. Once scheduling begins, the job enters the Running state and schedules its tasks. When all tasks complete successfully, the job enters the Finished state, reports the result, and exits.

However, a job may encounter problems during execution, so it may also pass through exception-handling states. If a job-level error occurs, the job enters the Failing state and all of its tasks are canceled. Once all tasks have reached a final state (Failed, Canceled, or Finished), the error is examined. If the exception is unrecoverable, the job enters the Failed state and exits. If the exception is recoverable, the job enters the Restarting state. If the number of restarts is below the upper limit, the job returns to the Created state to be rescheduled; if the limit has been reached, the job enters the Failed state and exits. (Note: in Flink versions later than 1.10, when a recoverable error occurs, the job enters the Restarting state directly rather than the Failing state. If all tasks are restored, the job returns to the Running state; if they cannot be restored, the job enters the Failed state and ends.)

A job enters the Cancelling and Canceled states only when a user cancels it manually. When a user cancels a job in the Web UI or with the Flink command, Flink first moves the job to the Cancelling state and then cancels all tasks. After all tasks reach a final state, the job enters the Canceled state and exits.

A job enters the Suspended state only when high availability is configured and the JobMaster loses leadership. This state only indicates that the job has terminated on this particular JobMaster. Typically, when this JobMaster regains leadership or a standby Master obtains it, the job is restarted on the node that holds leadership.
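The job lifecycle described above can be modeled as a small state machine. This sketch follows the flow in the main text (Failing before Restarting); `JobStateMachine`, `max_restarts`, and the method names are hypothetical and are not Flink classes. User cancellation is modeled only from Created or Running, and Suspended is reachable from any non-terminal state.

```python
from enum import Enum, auto


class JobState(Enum):
    CREATED = auto()
    RUNNING = auto()
    FINISHED = auto()
    FAILING = auto()
    FAILED = auto()
    RESTARTING = auto()
    CANCELLING = auto()
    CANCELED = auto()
    SUSPENDED = auto()


TERMINAL = {JobState.FINISHED, JobState.FAILED, JobState.CANCELED, JobState.SUSPENDED}


class JobStateMachine:
    """Hypothetical model of the job lifecycle; not Flink's implementation."""

    def __init__(self, max_restarts: int):
        self.state = JobState.CREATED
        self.max_restarts = max_restarts
        self.restarts = 0

    def _require(self, *allowed: JobState) -> None:
        if self.state not in allowed:
            raise ValueError(f"illegal transition from {self.state.name}")

    def schedule(self) -> None:
        self._require(JobState.CREATED)
        self.state = JobState.RUNNING

    def all_tasks_finished(self) -> None:
        self._require(JobState.RUNNING)
        self.state = JobState.FINISHED

    def job_error(self) -> None:
        # Job-level error: the job is Failing while its tasks are canceled.
        self._require(JobState.RUNNING)
        self.state = JobState.FAILING

    def tasks_terminated(self, recoverable: bool) -> None:
        # Called once all tasks are Failed, Canceled, or Finished.
        self._require(JobState.FAILING)
        self.state = JobState.RESTARTING if recoverable else JobState.FAILED

    def restart(self) -> None:
        self._require(JobState.RESTARTING)
        if self.restarts < self.max_restarts:
            self.restarts += 1
            self.state = JobState.CREATED   # rescheduled from scratch
        else:
            self.state = JobState.FAILED    # restart limit reached

    def cancel(self) -> None:
        self._require(JobState.CREATED, JobState.RUNNING)
        self.state = JobState.CANCELLING

    def cancel_completed(self) -> None:
        self._require(JobState.CANCELLING)
        self.state = JobState.CANCELED

    def lose_leadership(self) -> None:
        # Only meaningful when high availability is configured.
        if self.state in TERMINAL:
            raise ValueError("job already terminated")
        self.state = JobState.SUSPENDED


job = JobStateMachine(max_restarts=1)
job.schedule()
job.job_error()
job.tasks_terminated(recoverable=True)
job.restart()
print(job.state.name)  # CREATED: one restart was still allowed
```

A second recoverable failure on the same `job` would exhaust the limit, so `restart()` would move it to Failed instead of Created.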


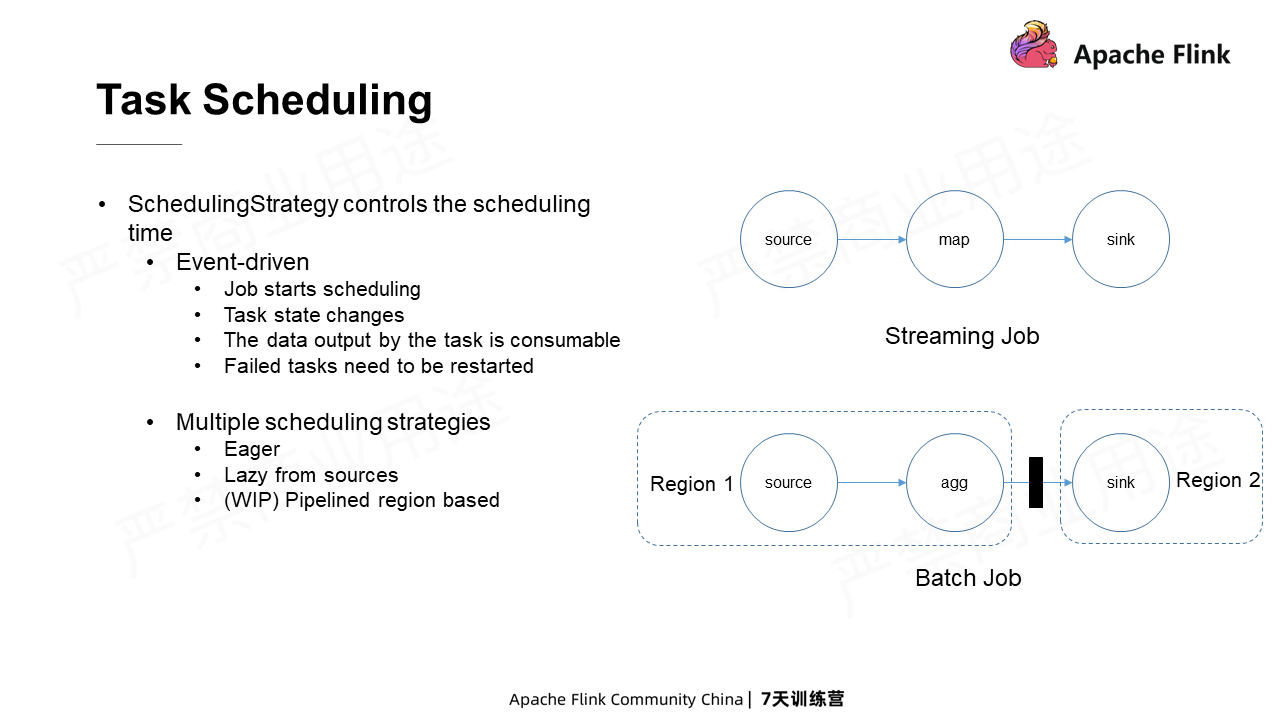

Task scheduling is one of the core responsibilities of the JobMaster. The first question in scheduling tasks is when to schedule them, and this timing is controlled by the SchedulingStrategy. It is an event-driven component that listens for events such as the job starting to be scheduled, a task changing state, a task's output data becoming consumable, and failed tasks needing to be restarted. This lets it flexibly decide when to start each task.

Currently, there are multiple scheduling strategies, including Eager and Lazy from sources. The EagerSchedulingStrategy mainly serves streaming jobs. It starts all tasks as soon as the job begins scheduling, which reduces scheduling time. Lazy from sources mainly serves batch jobs. It schedules only the Source nodes when the job starts; every other node is scheduled only once its input data from the upstream node becomes consumable. The following figure shows that the agg node is scheduled when the Source nodes start to output data, and the sink node is scheduled after the agg node finishes.
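Because the SchedulingStrategy reacts to events, the two strategies can be sketched as event handlers. Everything here is hypothetical and heavily simplified: `EagerStrategy`, `LazyFromSourcesStrategy`, the `on_start_scheduling` and `on_partition_consumable` callbacks, and the source → agg → sink topology stand in for Flink's internal interfaces.

```python
class EagerStrategy:
    """Schedule every task as soon as the job starts (streaming jobs)."""

    def __init__(self, inputs: dict[str, list[str]]):
        self.inputs = inputs                 # task -> list of upstream tasks
        self.scheduled: list[str] = []

    def on_start_scheduling(self) -> None:
        self.scheduled.extend(self.inputs)   # all tasks at once

    def on_partition_consumable(self, producer: str) -> None:
        pass                                 # nothing left to schedule


class LazyFromSourcesStrategy:
    """Schedule sources first; others once all their inputs are consumable."""

    def __init__(self, inputs: dict[str, list[str]]):
        self.inputs = inputs
        self.consumable: set[str] = set()
        self.scheduled: list[str] = []

    def on_start_scheduling(self) -> None:
        # Tasks without upstream inputs are the sources.
        self.scheduled.extend(t for t, ups in self.inputs.items() if not ups)

    def on_partition_consumable(self, producer: str) -> None:
        self.consumable.add(producer)
        for task, ups in self.inputs.items():
            if (task not in self.scheduled and ups
                    and all(u in self.consumable for u in ups)):
                self.scheduled.append(task)


topology = {"source": [], "agg": ["source"], "sink": ["agg"]}
lazy = LazyFromSourcesStrategy(topology)
lazy.on_start_scheduling()               # only "source" is scheduled
lazy.on_partition_consumable("source")   # now "agg" is scheduled
lazy.on_partition_consumable("agg")      # finally "sink" is scheduled
```

Both strategies end up scheduling the same three tasks; the difference is that the lazy one holds agg and sink back until their input data can be consumed, so they do not occupy resources while waiting.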

Why do batch jobs and streaming jobs use different scheduling strategies? The answer lies in the blocking shuffle data exchange used by batch jobs. In this mode, the downstream can consume a dataset only after the upstream has produced all of it. Scheduling the downstream earlier would only leave it holding resources while it waits. Compared with the Eager strategy, Lazy from sources therefore saves a certain amount of resources for batch jobs.

Currently, a scheduling strategy called Pipelined region-based scheduling is under development. It is similar to Lazy from sources, except that it uses the pipelined region as the granularity for task scheduling.

A pipelined region is a set of tasks connected by pipelined data exchanges. Pipelined means streaming data exchange between upstream and downstream nodes: the downstream reads and consumes data while the upstream is still writing it. Like the Eager strategy, pipelined region-based scheduling lets connected upstream and downstream tasks run in parallel, which largely reduces scheduling time. At the same time, it keeps the benefit of Lazy from sources by avoiding unnecessary resource waste. Because the tasks of a region are scheduled as a whole, users can know how many resources are needed to run those tasks simultaneously, which enables deeper optimizations.

(Note: starting from Flink 1.12, the pipelined region strategy is the default scheduling strategy and serves both streaming and batch jobs.)
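Pipelined regions can be computed by treating every pipelined edge as a link and finding connected components, while blocking edges separate regions. The union-find sketch below is a hypothetical illustration (the task names and the `pipelined_regions` helper are made up), not Flink's actual region-building code.

```python
def pipelined_regions(tasks: list[str],
                      edges: list[tuple[str, str, str]]) -> list[set[str]]:
    """Group tasks connected by pipelined edges into regions (union-find)."""
    parent = {t: t for t in tasks}

    def find(t: str) -> str:
        while parent[t] != t:
            parent[t] = parent[parent[t]]  # path halving keeps trees shallow
            t = parent[t]
        return t

    for up, down, exchange in edges:
        if exchange == "pipelined":        # blocking edges do not merge regions
            parent[find(up)] = find(down)

    regions: dict[str, set[str]] = {}
    for t in tasks:
        regions.setdefault(find(t), set()).add(t)
    return list(regions.values())


# Batch job: source -> agg is pipelined, agg -> sink is blocking.
tasks = ["source", "agg", "sink"]
edges = [("source", "agg", "pipelined"), ("agg", "sink", "blocking")]
regions = pipelined_regions(tasks, edges)
```

Here the result is two regions, `{"source", "agg"}` and `{"sink"}`, each of which would be scheduled as a unit. If every edge is pipelined, as in a typical streaming job, the whole job collapses into a single region.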

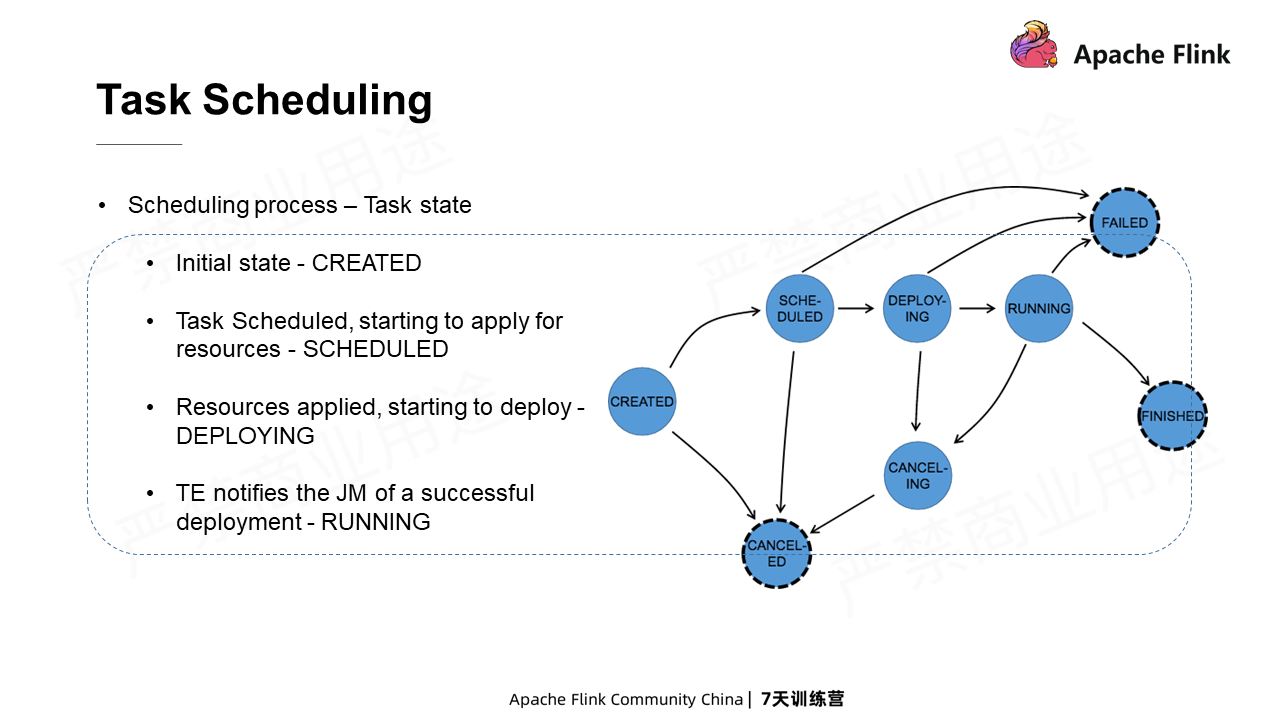

A task has many different states, with Created as its initial state. When the scheduling strategy determines that the task can be scheduled, the task changes to the Scheduled state and starts requesting resources, namely Slots. Once it receives a Slot, the task changes to the Deploying state, generates its task description, and is deployed to a Worker node for startup. After the task starts, it changes to the Running state on the Worker node and notifies the JobMaster, which then also marks the task as Running.

For a job processing an unbounded stream, Running is the last state a task reaches. For a job processing a bounded stream, the task switches to the Finished state once all data is processed. When an exception occurs, the task changes to the Failed state, and other affected tasks may be canceled and enter the Canceled state.
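The task lifecycle can likewise be captured as a table of allowed transitions. This is a hypothetical simplification of the text (for instance, it does not model the Running state being observed separately on the Worker and on the JobMaster, nor an intermediate canceling step), and the table is not Flink's own task state logic; `ALLOWED` and `run_task` are illustrative names.

```python
ALLOWED: dict[str, set[str]] = {
    "CREATED": {"SCHEDULED", "CANCELED"},
    "SCHEDULED": {"DEPLOYING", "FAILED", "CANCELED"},   # waiting for a Slot
    "DEPLOYING": {"RUNNING", "FAILED", "CANCELED"},     # shipped to a Worker
    "RUNNING": {"FINISHED", "FAILED", "CANCELED"},
    "FINISHED": set(), "FAILED": set(), "CANCELED": set(),  # final states
}


def run_task(bounded: bool, fail: bool = False) -> list[str]:
    """Walk one task through its lifecycle and return the visited states."""
    path = ["CREATED"]

    def move(target: str) -> None:
        if target not in ALLOWED[path[-1]]:
            raise ValueError(f"{path[-1]} -> {target} is not allowed")
        path.append(target)

    for state in ("SCHEDULED", "DEPLOYING", "RUNNING"):
        move(state)
    if fail:
        move("FAILED")
    elif bounded:
        move("FINISHED")   # all input of a bounded stream processed
    # Unbounded and healthy: the task simply stays RUNNING.
    return path
```

For example, `run_task(bounded=False)` ends in `"RUNNING"`, while `run_task(bounded=True)` ends in `"FINISHED"`.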

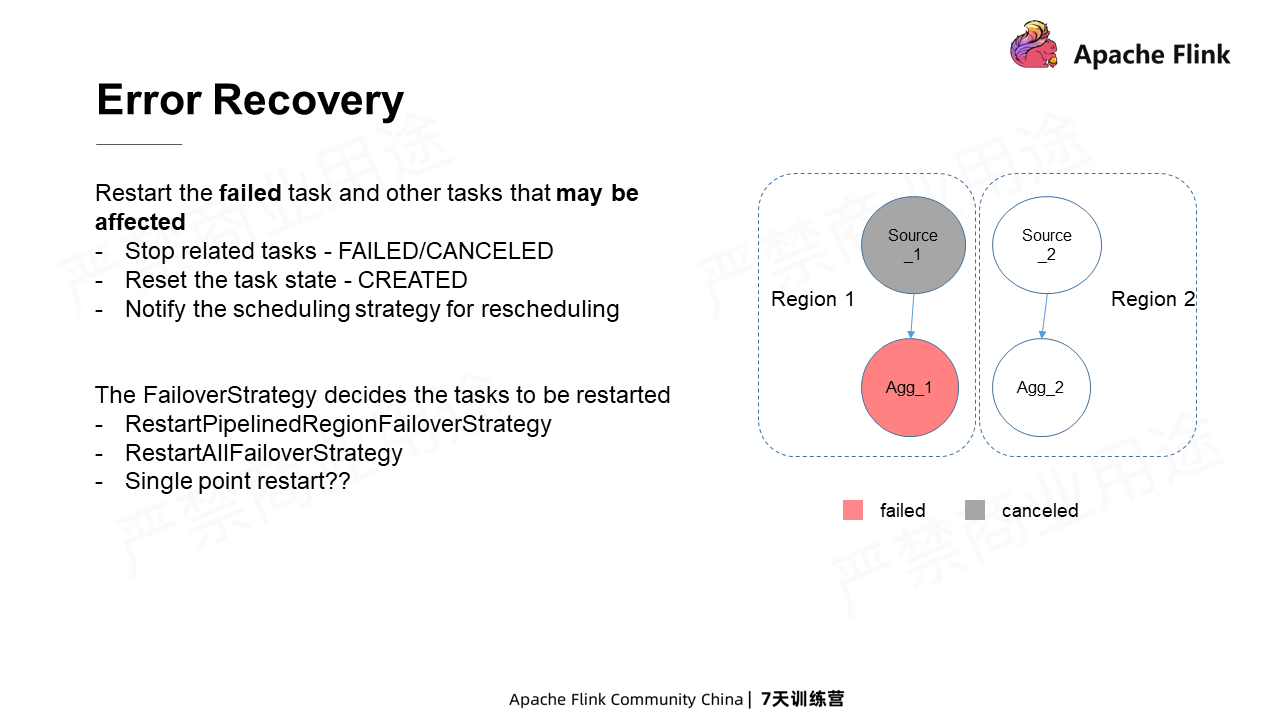

When a task fails, the basic strategy of the JobMaster is to restore the job's data processing by restarting the failed task and the tasks that may be affected by it. This involves three steps:

Which tasks may be affected? That is decided by the FailoverStrategy.

Currently, RestartPipelinedRegionFailoverStrategy is the default FailoverStrategy in Flink. With this strategy, if a task fails, the Region containing it is restarted. This relates to the pipelined data exchange mentioned above: in pipelined data exchange, if any node fails, the connected nodes fail as well. Therefore, to avoid data inconsistency and to prevent a single failure from causing multiple failovers, the other tasks in the Region are canceled and restarted together with the task that failed first.

In addition to the Region containing the failed task, the RestartPipelinedRegion strategy also restarts all of that Region's downstream Regions. The reason is that task output is often non-deterministic. For example, a record may be sent to the first downstream parallel instance in the original run but to the second one when the task is rerun. If those two downstream instances are in different Regions, one of them may miss the record, or inconsistent data may even be produced. To avoid this, RestartPipelinedRegionFailoverStrategy restarts the Region where the failed task is located together with all of its downstream Regions.

Alternatively, the RestartAllFailoverStrategy restarts all tasks of a job when any task fails. This strategy is rarely used, but it can help in special circumstances, for example, when users would rather stop the whole job and recover it completely than restart only part of it after a task failure.
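The sets of tasks that the two strategies restart can be sketched on a precomputed region graph. `restart_pipelined_region` and `restart_all` are hypothetical helpers rather than Flink's RestartPipelinedRegionFailoverStrategy or RestartAllFailoverStrategy classes, and refinements of the real region strategy are not modeled. The region version restarts the failed task's Region plus every Region reachable downstream of it.

```python
from collections import deque


def restart_pipelined_region(failed_task: str,
                             region_of: dict[str, str],
                             downstream_regions: dict[str, list[str]]) -> set[str]:
    """Tasks in the failed task's region plus all transitively downstream regions."""
    start = region_of[failed_task]
    to_restart = {start}
    queue = deque([start])
    while queue:  # breadth-first search over the region graph
        for nxt in downstream_regions.get(queue.popleft(), []):
            if nxt not in to_restart:
                to_restart.add(nxt)
                queue.append(nxt)
    return {t for t, r in region_of.items() if r in to_restart}


def restart_all(region_of: dict[str, str]) -> set[str]:
    """RestartAll-style behavior: every task of the job is restarted."""
    return set(region_of)


# Two pipelined regions (R1, R2) feeding a shared downstream region R3.
region_of = {"a1": "R1", "b1": "R1", "a2": "R2", "b2": "R2", "c1": "R3", "c2": "R3"}
downstream_regions = {"R1": ["R3"], "R2": ["R3"]}
```

A failure of `b1` restarts `a1`, `b1`, `c1`, and `c2`, but leaves R2 running; a failure in R3 restarts only R3, while `restart_all` always returns all six tasks.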

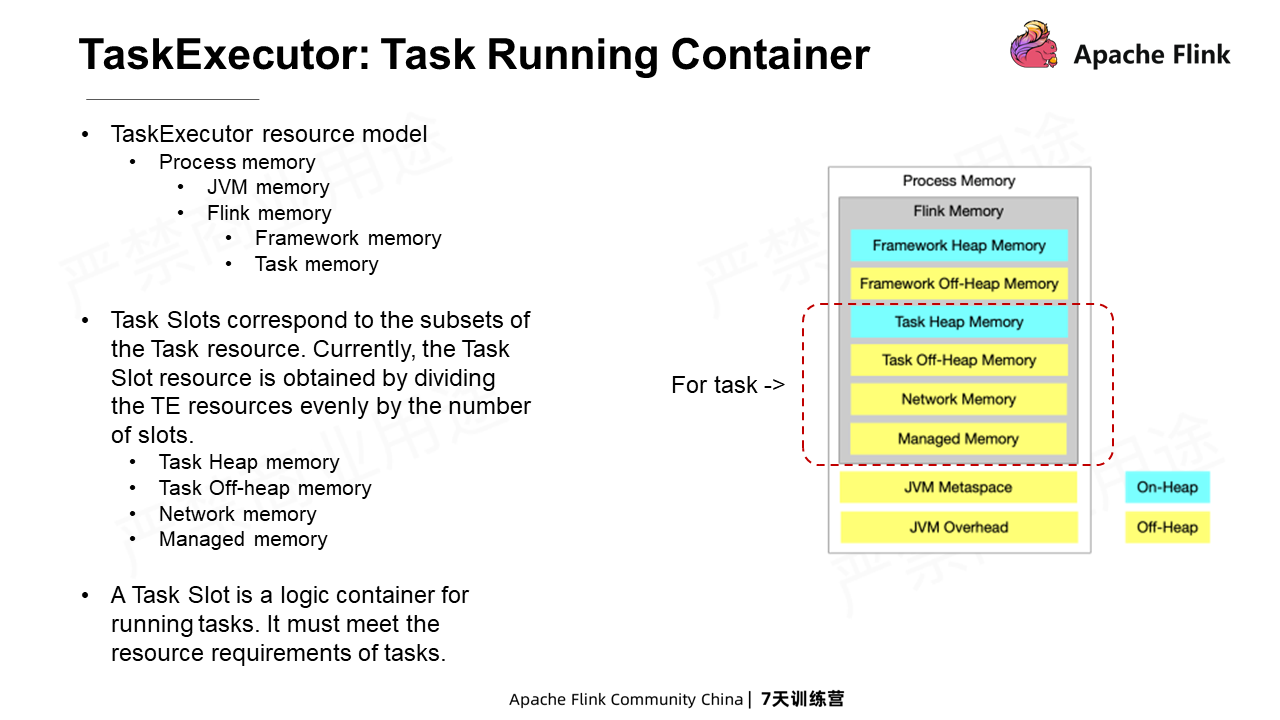

The TaskExecutor executes tasks and holds the various resources needed to run them, as shown in the following figure. This section focuses on its memory resources.

All memory resources can be configured separately, and TaskExecutors organize their memory configuration in layers. The outermost layer is Process Memory, the total memory of the entire TaskExecutor JVM process. It consists of the memory used by the JVM itself and the memory used by Flink. The memory used by Flink is further divided into memory used by the framework and memory used by tasks.
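The layered model amounts to simple addition. The component names below follow Flink's memory model terms (Total Process Memory, Total Flink Memory, JVM Metaspace, JVM Overhead), but the sizes are hypothetical example values chosen for round numbers rather than Flink defaults, and `MemoryLayout` is an illustrative helper. Network and Managed Memory are counted as task memory, following the grouping used in this article.

```python
from dataclasses import dataclass


@dataclass
class MemoryLayout:
    """Hypothetical TaskExecutor memory sizes in MiB."""
    framework_heap: int
    framework_off_heap: int
    task_heap: int
    task_off_heap: int
    network: int
    managed: int
    jvm_metaspace: int
    jvm_overhead: int

    def task_memory(self) -> int:
        # Memory used by tasks (the part later divided among Slots).
        return self.task_heap + self.task_off_heap + self.network + self.managed

    def flink_memory(self) -> int:
        # Framework memory plus task memory.
        return self.framework_heap + self.framework_off_heap + self.task_memory()

    def process_memory(self) -> int:
        # Outermost layer: everything the TaskExecutor JVM process uses.
        return self.flink_memory() + self.jvm_metaspace + self.jvm_overhead


layout = MemoryLayout(framework_heap=128, framework_off_heap=128,
                      task_heap=512, task_off_heap=0, network=128, managed=512,
                      jvm_metaspace=256, jvm_overhead=192)
```

With these example values, task memory is 1152 MiB, Flink memory is 1408 MiB, and process memory is 1856 MiB.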

Task memory includes Task Heap Memory, which holds the task's Java objects. Task Off-Heap Memory is generally used by native third-party libraries. Network Memory is used to create network buffers that serve task input and output. Managed Memory is off-heap memory managed by Flink and is used by components such as certain operators and the StateBackend. These task resources are divided into Slots, which are logical containers for running tasks. Currently, the size of a Slot is determined by dividing the TaskExecutor's task resources evenly by the number of Slots.
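Dividing task resources evenly among Slots is simple arithmetic. The hypothetical `slot_share` helper below shows the per-Slot share for the memory types listed above; integer MiB values and floor division are assumptions of this sketch.

```python
def slot_share(task_resources_mib: dict[str, int], num_slots: int) -> dict[str, int]:
    """Evenly divide each task resource type among the TaskExecutor's Slots."""
    if num_slots <= 0:
        raise ValueError("a TaskExecutor needs at least one Slot")
    return {kind: size // num_slots for kind, size in task_resources_mib.items()}


per_slot = slot_share({"task_heap": 512, "task_off_heap": 0,
                       "network": 128, "managed": 512}, num_slots=4)
```

With four Slots, each Slot gets 128 MiB of task heap, 32 MiB of network memory, and 128 MiB of managed memory.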

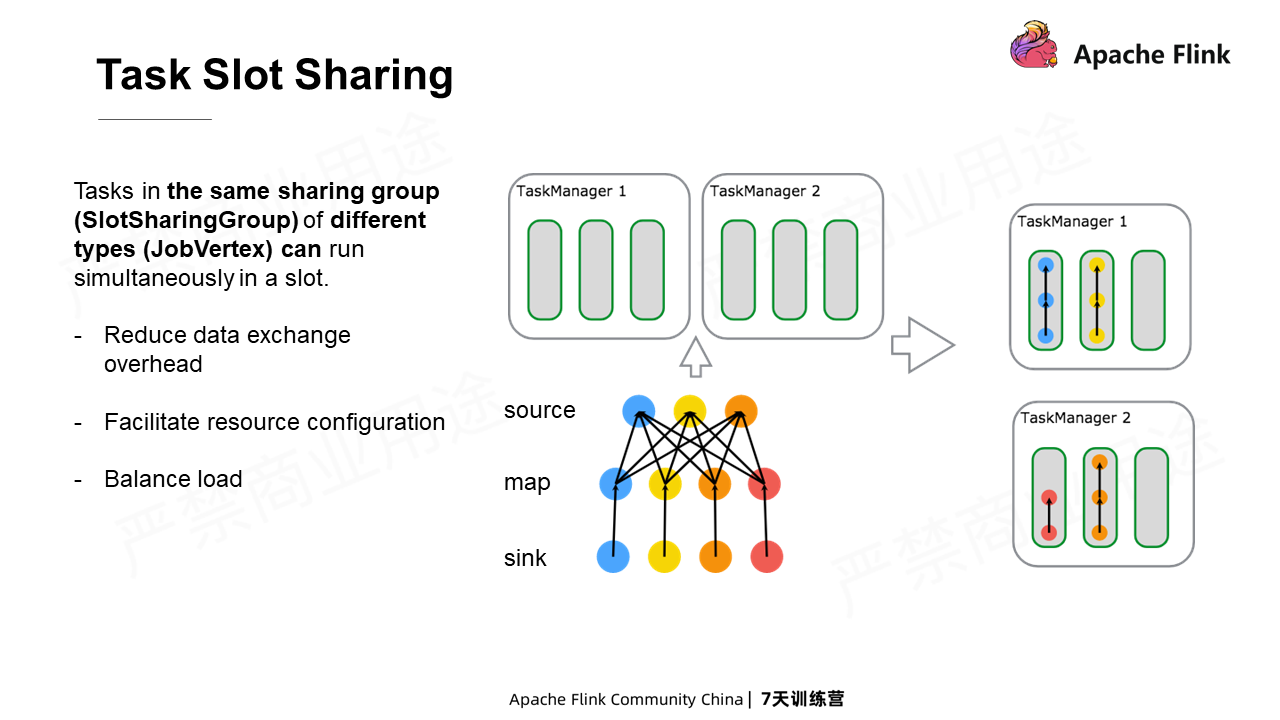

One or more tasks can run in a Slot. However, tasks can share a Slot only if they are in the same sharing group and are of different types. Generally, tasks in the same pipelined region are in the same sharing group, and all tasks of a streaming job are in the same sharing group. Tasks are of different types if they belong to different JobVertices.

The right side of the preceding figure shows a job consisting of source, map, and sink. After deployment, three Slots each contain three tasks (one source, one map, and one sink), while a fourth Slot contains only two tasks. This is because the source has a parallelism of only three, so there is no fourth source task to place in that Slot.
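The placement in the figure can be reproduced by putting subtask i of every JobVertex in the sharing group into Slot i. This index-based rule is an assumption that happens to match the figure, not a description of Flink's actual slot-sharing allocation, and `share_slots` is a hypothetical helper. It also shows why the number of Slots needed equals the largest parallelism.

```python
def share_slots(parallelism: dict[str, int]) -> list[list[str]]:
    """Place subtask i of each JobVertex in Slot i (one sharing group)."""
    num_slots = max(parallelism.values())  # n = largest operator parallelism
    slots: list[list[str]] = [[] for _ in range(num_slots)]
    for vertex, p in parallelism.items():
        for i in range(p):
            # Each Slot receives at most one subtask of any given JobVertex.
            slots[i].append(f"{vertex}-{i}")
    return slots


slots = share_slots({"source": 3, "map": 4, "sink": 4})
```

This yields four Slots: the first three hold `source`, `map`, and `sink` subtasks, and the last holds only `map-3` and `sink-3`.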

The first advantage of SlotSharing is that it reduces the overhead of data exchange. Nodes such as map and sink exchange data one-to-one. Because tasks that physically exchange data share a Slot, their data exchange can happen in memory, with lower overhead than over the network.

The second advantage is that it simplifies resource configuration. With SlotSharing, users only need to configure n Slots to ensure that a job can always run, where n is the largest parallelism among the job's operators.

The third advantage is that SlotSharing improves load balance when operators have slightly different parallelisms. Because each Slot holds at most one task of each type, heavy-load tasks are not squeezed into the same TaskExecutor.

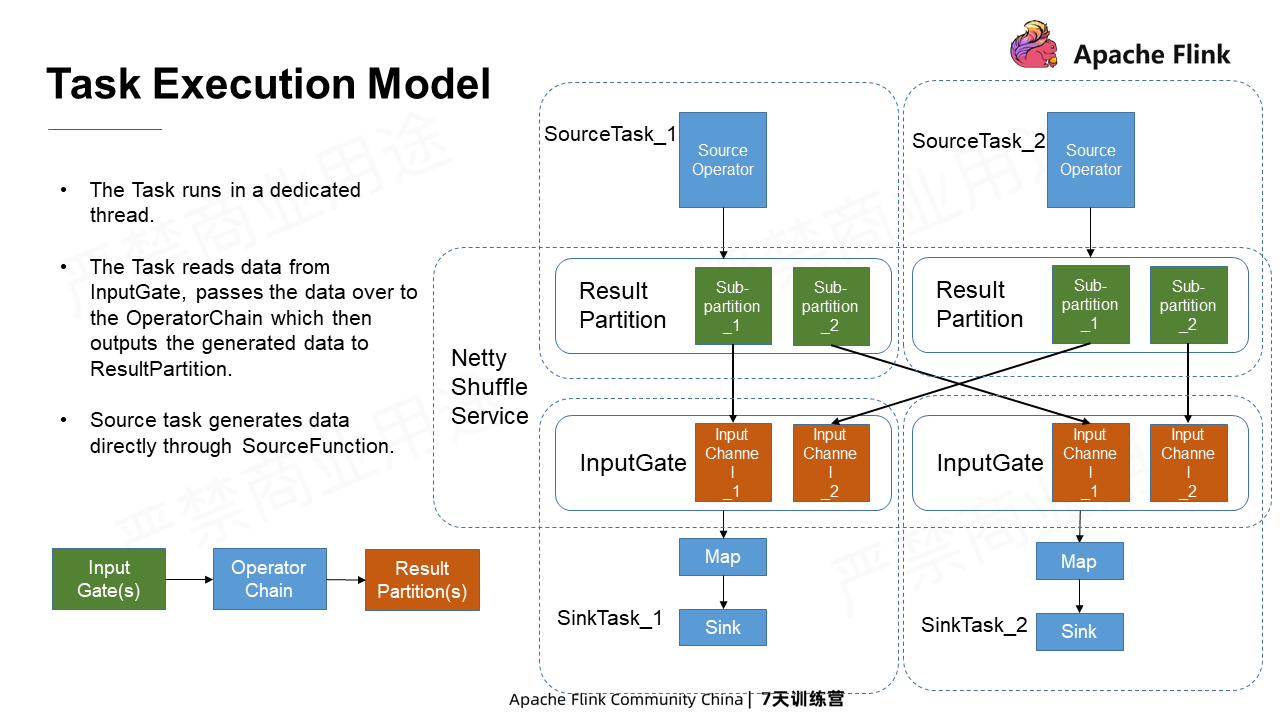

As mentioned above, each task corresponds to an OperatorChain. Generally, each task has an InputGate as its input and a ResultPartition as its output, and each task runs in its own dedicated thread. The task reads data from the InputGate and passes it to the OperatorChain, which executes the business logic and writes the output data to the ResultPartition.

The Source task is an exception: it generates data directly through its SourceFunction instead of reading from an InputGate. An upstream ResultPartition and a downstream InputGate exchange data through the ShuffleService. The ShuffleService is pluggable in Flink, and NettyShuffleService is the default implementation, with which the downstream InputGate fetches data from the upstream ResultPartition through Netty.

A ResultPartition consists of multiple SubPartitions, each corresponding to one parallel downstream consumer. Likewise, an InputGate consists of multiple InputChannels, each corresponding to one parallel upstream producer.
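The wiring between ResultPartitions and InputGates for an all-to-all exchange can be sketched with in-memory queues. This ignores Netty, network buffers, and flow control; `exchange` and `route_by_value` are hypothetical, and the deques merely stand in for SubPartitions and InputChannels.

```python
from collections import deque
from typing import Callable

Record = tuple[str, int]


def exchange(records_per_producer: list[list[Record]], num_consumers: int,
             route: Callable[[Record, int], int]) -> list[list[Record]]:
    """Upstream i writes ResultPartition i; downstream j reads through InputGate j."""
    # result_partitions[i][j] is SubPartition j of producer i (one per consumer).
    result_partitions = [[deque() for _ in range(num_consumers)]
                         for _ in records_per_producer]
    for i, records in enumerate(records_per_producer):
        for record in records:
            result_partitions[i][route(record, num_consumers)].append(record)

    received = []
    for j in range(num_consumers):
        # InputGate j: one InputChannel per producer, reading SubPartition j.
        input_channels = [result_partitions[i][j]
                          for i in range(len(records_per_producer))]
        received.append([rec for channel in input_channels for rec in channel])
    return received


def route_by_value(record: Record, n: int) -> int:
    """Hypothetical routing: pick the consumer from the record's value."""
    return record[1] % n


out = exchange([[("a", 1), ("b", 2)], [("c", 3), ("d", 4)]],
               num_consumers=2, route=route_by_value)
```

Here consumer 0 receives `("b", 2)` and `("d", 4)` through its two InputChannels, and consumer 1 receives `("a", 1)` and `("c", 3)`.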

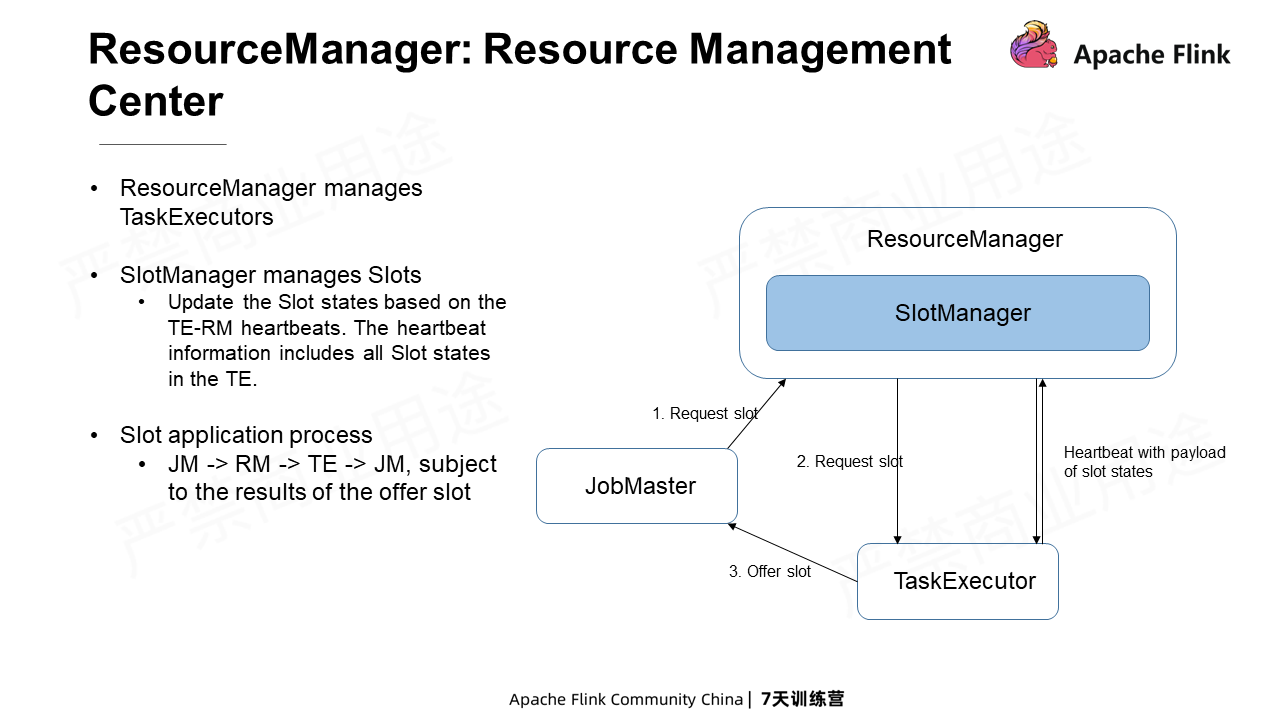

The ResourceManager is the resource management center of Flink. As mentioned earlier, the TaskExecutors contain a variety of resources. The ResourceManager manages these TaskExecutors. A newly started TaskExecutor needs to be registered with the ResourceManager before its resources can serve the job requests.

A key component in the ResourceManager is the SlotManager, which manages Slot states. Slot states are updated through heartbeats between the TaskExecutors and the ResourceManager, and each heartbeat carries the states of all Slots in the TaskExecutor. Knowing the states of all existing Slots, the ResourceManager can serve the resource requests of jobs. When scheduling a task, the JobMaster sends a Slot request to the ResourceManager, which forwards it to the SlotManager. The SlotManager checks whether an available Slot meets the request. If one does, it sends a Slot request to the corresponding TaskExecutor. If that request succeeds, the TaskExecutor actively offers the Slot to the JobMaster.

This seemingly roundabout process avoids inconsistencies that arise in a distributed system. As mentioned earlier, the Slot states in the SlotManager are updated through heartbeats, so they lag behind the actual states somewhat. In addition, Slot states may change during the Slot request process. Therefore, the Slot offer and its ACK serve as the final result of each request.
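The need for the offer/ACK step can be sketched with hypothetical classes: the SlotManager picks a Slot from its heartbeat-based (possibly stale) view, but only the TaskExecutor's own state decides whether the Slot is actually offered, and the JobMaster's acceptance completes the allocation. None of these classes or methods are Flink's real components or RPCs, and the ResourceManager hop is folded into the SlotManager for brevity.

```python
from typing import Optional


class JobMaster:
    def __init__(self, job_id: str):
        self.job_id = job_id
        self.slots: list[tuple[str, int]] = []

    def accept_offer(self, executor: str, slot: int) -> bool:
        self.slots.append((executor, slot))
        return True  # ACK; a real JobMaster could also reject the offer


class TaskExecutor:
    def __init__(self, name: str, num_slots: int):
        self.name = name
        self.slots: dict[int, Optional[str]] = {i: None for i in range(num_slots)}

    def heartbeat(self) -> dict[int, bool]:
        """Report which Slots are currently free."""
        return {i: owner is None for i, owner in self.slots.items()}

    def request_slot(self, slot: int, job_master: JobMaster) -> bool:
        if self.slots[slot] is not None:   # authoritative check: already taken
            return False
        self.slots[slot] = job_master.job_id
        if job_master.accept_offer(self.name, slot):  # offer, then wait for ACK
            return True
        self.slots[slot] = None            # offer rejected: free the Slot again
        return False


class SlotManager:
    def __init__(self, executors: list[TaskExecutor]):
        self.executors = {te.name: te for te in executors}
        self.view = {te.name: te.heartbeat() for te in executors}  # may go stale

    def on_heartbeat(self, te: TaskExecutor) -> None:
        self.view[te.name] = te.heartbeat()

    def allocate(self, job_master: JobMaster) -> bool:
        for name, free in self.view.items():
            for slot, is_free in free.items():
                if is_free and self.executors[name].request_slot(slot, job_master):
                    free[slot] = False
                    return True
        return False


te = TaskExecutor("te-1", num_slots=1)
manager = SlotManager([te])
te.slots[0] = "other-job"          # taken after the last heartbeat: view is stale
jm = JobMaster("job-1")
first_attempt = manager.allocate(jm)  # False: the TaskExecutor refuses
```

The first attempt fails even though the SlotManager believed the Slot was free; once the Slot is released and a new heartbeat arrives, a retry succeeds and the JobMaster holds `("te-1", 0)`.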

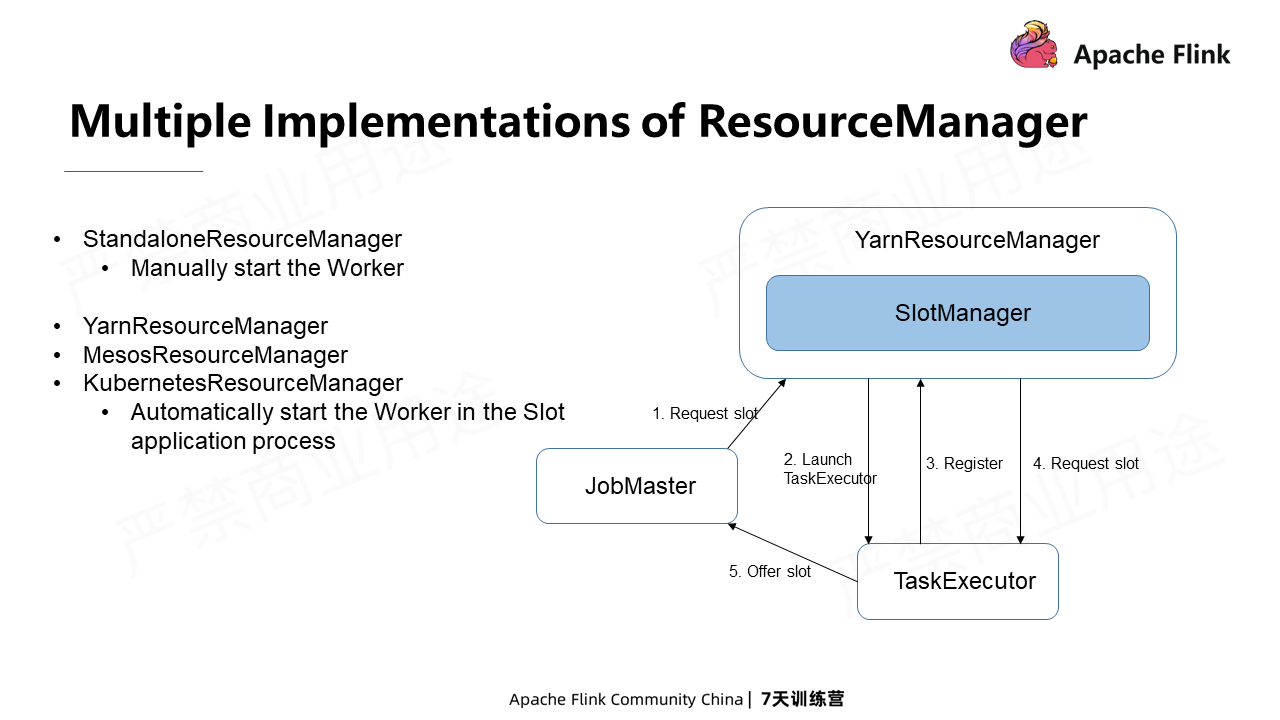

There are various implementations of the ResourceManager. In Standalone mode, the StandaloneResourceManager is used, which requires users to start Worker nodes manually. In this case, users need to know in advance the total amount of resources the job requires.

In addition, some ResourceManagers apply for resources automatically, including YarnResourceManager, MesosResourceManager, and KubernetesResourceManager. When existing Slots cannot satisfy a Slot request, these ResourceManagers automatically start new Worker nodes.

Take YarnResourceManager as an example. The JobMaster requests a Slot for a task, and the YarnResourceManager passes the request to the SlotManager. If the SlotManager finds no Slot that meets the request, it notifies the YarnResourceManager, which then requests a container from the external YARN ResourceManager. After receiving the container, the YarnResourceManager starts a TaskExecutor in it. Once started, the TaskExecutor registers with the ResourceManager and reports its available Slots. The SlotManager then tries to fulfill the pending Slot requests. If it can, a Slot request is sent to the TaskExecutor, and if that request succeeds, the TaskExecutor offers the Slot to the JobMaster. In this way, users do not need to calculate the total resources a job requires up front; they only need to ensure that a single Slot is large enough to run a task.
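The on-demand behavior of an active ResourceManager can be sketched as follows. `ActiveResourceManager`, `start_worker`, and `slots_per_worker` are hypothetical; in the YARN case, starting a worker corresponds to requesting a container from YARN and launching a TaskExecutor in it, which this synchronous sketch reduces to an immediate registration.

```python
from collections import deque


class ActiveResourceManager:
    """Starts new workers when pending Slot requests cannot be satisfied."""

    def __init__(self, slots_per_worker: int):
        self.slots_per_worker = slots_per_worker
        self.free_slots: list[str] = []
        self.pending: deque[str] = deque()      # requests waiting for a Slot
        self.assignments: dict[str, str] = {}   # request id -> slot id
        self.workers_started = 0

    def request_slot(self, request_id: str) -> None:
        self.pending.append(request_id)
        if not self.free_slots:
            self.start_worker()                 # no Slot available: grow the cluster
        self._match_pending()

    def start_worker(self) -> None:
        # Stand-in for: request a container, launch a TaskExecutor, wait for registration.
        worker = f"worker-{self.workers_started}"
        self.workers_started += 1
        self.on_worker_registered(worker)

    def on_worker_registered(self, worker: str) -> None:
        # The new TaskExecutor reports its Slots; pending requests are retried.
        self.free_slots.extend(f"{worker}/slot-{i}" for i in range(self.slots_per_worker))
        self._match_pending()

    def _match_pending(self) -> None:
        while self.pending and self.free_slots:
            self.assignments[self.pending.popleft()] = self.free_slots.pop(0)


rm = ActiveResourceManager(slots_per_worker=2)
for task in ("t0", "t1", "t2"):
    rm.request_slot(task)
```

Three requests with two Slots per worker cause two workers to be started; the user only chose the Slot size, not the total amount of resources.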
