By the Alibaba Cloud Kernel Storage team, a member of the High-Performance Storage SIG of the OpenAnolis community.

Containerization has been a trend in the DevOps industry in recent years. It produces a fully packaged, self-contained computing environment that lets software developers build and deploy their applications quickly. For a long time, however, limitations of the image format have made loading the image at container startup slow. For details on this issue, see the article "Container Technology: Container Image". To speed up container startup, optimized container images can be combined with technologies such as P2P networks, which reduces the startup time of container deployments and helps keep operation continuous and stable. The article "Dragonfly Releases the Nydus Container Image Acceleration Service" discusses this topic.

In addition to startup speed, core features such as image layering, deduplication, compression, and on-demand loading are important in the container image field. However, because there has been no native file system support, most solutions are implemented in user space. So was Nydus initially. As solutions and requirements have evolved, user-space solutions face more challenges, such as large performance gaps compared with native file systems and high resource overhead in high-density deployment scenarios. The main reason is that parsing the image format and loading data on demand in user space incurs a lot of kernel/user communication overhead.

Facing this challenge, the OpenAnolis community made an attempt. We designed and implemented the RAFS v6 format, which is compatible with the kernel-native EROFS file system, aiming to move the container image scheme into the kernel. We also worked to push this scheme into the mainline kernel to benefit more people. With continuous improvement, the erofs over fscache on-demand loading technology was finally merged into the 5.19 kernel (see the link at the end of the article). With that, the next-generation container image distribution scheme of the Nydus image service became clear. This is the first container image distribution solution that the Linux mainline kernel supports natively and out of the box. As a result, container images can offer high density, high performance, high availability, and ease of use.

This article introduces the evolution of this technology in three parts: a review of the Nydus architecture, the RAFS v6 image format, and the EROFS over fscache on-demand loading technology. It also presents performance data for the current solution. We hope you can enjoy fast container startup as soon as possible!

Refer to https://github.com/dragonflyoss/image-service for more details about the Nydus project. You can try these new features by following the user guide at https://github.com/dragonflyoss/image-service/blob/master/docs/nydus-fscache.md

The Nydus image acceleration service optimizes the existing OCIv1 container image architecture, defines the RAFS (Registry Acceleration File System) disk format, and ultimately presents the container image in file system format.

The basic requirement of a container image is to provide the container's root directory (rootfs), which can be carried in a file system or an archive format. It can also be implemented with a custom block format, but in any case it must be presented as a directory tree that provides a file interface to containers.

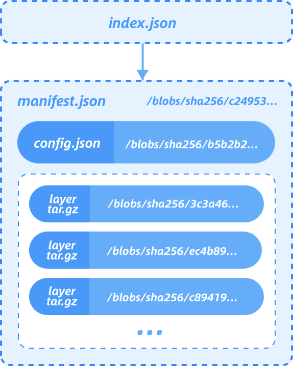

Let's take a look at the OCIv1 standard image first. The OCIv1 format is an image format specification based on the Docker Image Manifest Version 2 Schema 2 format. It consists of a manifest, an image index (optional), a series of container image layers, and configuration files. For details, refer to the relevant documents. In essence, an OCI image is a layer-based image format. Each layer stores file-level diff data in tgz archive format, as follows.

Due to the limitations of tgz, OCIv1 has some inherent problems, such as the inability to load data on demand, a coarse deduplication granularity, and layer hash values that change easily.

Custom block formats also have inherent design flaws. For example:

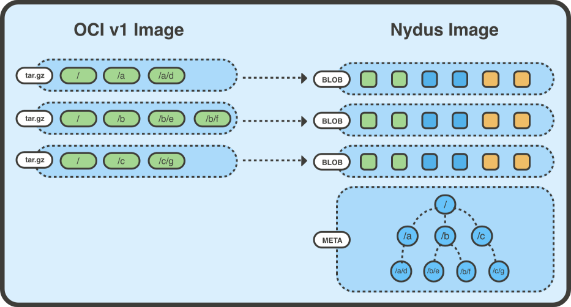

Nydus is a file-system-based container image storage solution. The data (blobs) and metadata (bootstrap) of the container image file system are separated, so that the original image layers store only the data part of files. Files are divided into chunks, and the blob in each layer stores the corresponding chunk data. Working at chunk granularity refines deduplication: chunk-level deduplication makes it easier to share data between layers and between images, and it also makes on-demand loading easier. Because the metadata is kept in one place, accessing it does not require pulling the corresponding blob data, so much less data needs to be pulled and I/O efficiency is higher. The following figure shows the Nydus RAFS image format.
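To make the chunk-level deduplication and the data/metadata split described above more concrete, here is a minimal Python sketch. It is an illustration only, not the RAFS format or Nydus code: the names `split_chunks`, `add_layer`, and `read_file`, the 4-byte chunk size, and the use of SHA-256 digests as chunk identifiers are all assumptions made for the example.

```python
import hashlib
from typing import Dict, List

CHUNK_SIZE = 4  # tiny on purpose so the example is readable; an assumption, not a Nydus default


def split_chunks(data: bytes, size: int = CHUNK_SIZE) -> List[bytes]:
    """Split file content into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def add_layer(files: Dict[str, bytes], blob: Dict[str, bytes]) -> Dict[str, List[str]]:
    """Store unseen chunks in the shared blob and return metadata: path -> chunk digests."""
    bootstrap: Dict[str, List[str]] = {}
    for path, content in files.items():
        digests = []
        for chunk in split_chunks(content):
            digest = hashlib.sha256(chunk).hexdigest()
            blob.setdefault(digest, chunk)  # identical chunks are stored only once
            digests.append(digest)
        bootstrap[path] = digests
    return bootstrap


def read_file(bootstrap: Dict[str, List[str]], blob: Dict[str, bytes], path: str) -> bytes:
    """Reassemble a file by looking up its chunks in the blob."""
    return b"".join(blob[d] for d in bootstrap[path])


blob_store: Dict[str, bytes] = {}
meta_a = add_layer({"/bin/app": b"AAAABBBBCCCC"}, blob_store)
meta_b = add_layer({"/bin/tool": b"AAAABBBBDDDD"}, blob_store)

# Listing paths needs only the metadata; no blob data is touched.
print(sorted(meta_a) + sorted(meta_b))
# Six chunks are referenced in total, but shared chunks are stored once.
print(len(blob_store))
print(read_file(meta_b, blob_store, "/bin/tool"))
```

The two toy "images" share their first two chunks, so the blob store holds fewer chunks than the metadata references. The metadata dictionaries can also be inspected without reading any chunk data, which mirrors the point that metadata access does not require pulling blobs.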

Before the RAFS v6 format was introduced, Nydus used an image format implemented entirely in user space and served it through the FUSE or virtiofs interface. However, the user-space file system solution has the following design defects.

These problems are inherent limitations of user-space file system solutions. If the container image format is implemented in the kernel instead, they can be solved in principle. Therefore, we introduced the RAFS v6 image format, a container image format that is based on the kernel EROFS file system and implemented in the kernel.

The EROFS file system has been part of the Linux mainline since the 4.19 kernel. In the past, it was mainly used in embedded and mobile devices. It is available in popular distributions (such as Fedora, Ubuntu, Arch Linux, Debian, and Gentoo). The user-space tool erofs-utils is also available in these distributions and is included in the OIN Linux System Definition lists. The community is active.

The EROFS file system has the following features:

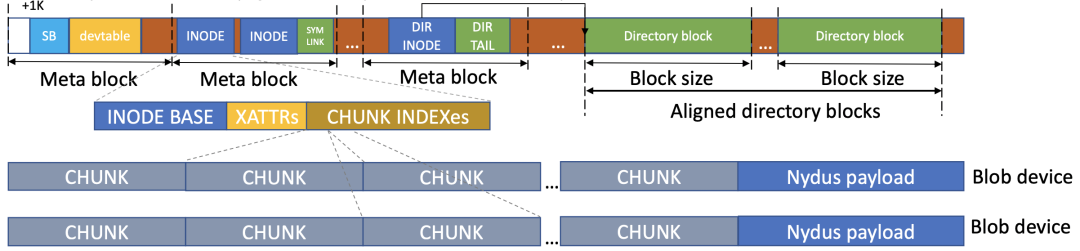

Over the past year, the Alibaba Cloud kernel team has made a series of improvements to the EROFS file system, extending it to cloud-native scenarios and adapting it to the requirements of container image storage systems. The result is RAFS v6, a container image format implemented in the kernel. In addition to moving the image format into the kernel, RAFS v6 also optimizes the format itself, for example through block alignment and streamlined metadata.

The following is the new RAFS v6 image format:

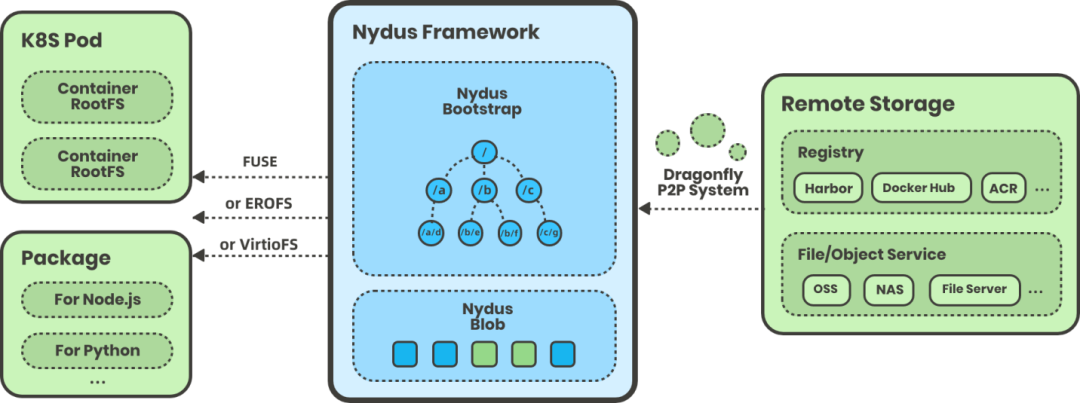

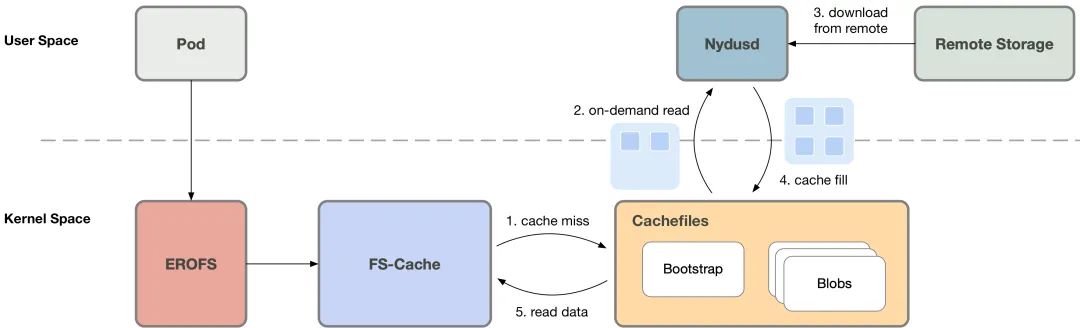

The improved Nydus image service architecture is shown in the following figure, adding support for the (EROFS-based) RAFS v6 image format.

Erofs over fscache is the next-generation container image on-demand loading technology developed by the Alibaba Cloud kernel team for Nydus. It is also the native image on-demand loading feature of the Linux kernel. It was merged into the Linux kernel mainline in version 5.19.

Prior to this, almost all on-demand loading solutions in the industry were implemented in user space. User-space solutions involve frequent kernel/user context switches and memory copies, which cause performance bottlenecks. The problem is especially prominent when the entire container image has already been downloaded locally: even then, every file access by a process is still routed through the user-space service process.

We can decouple the two operations of on-demand loading: 1) cache management and 2) fetching data through various channels (such as the network) on a cache miss. Cache management can run in the kernel, so that once the image is available locally, switching between kernel and user context is avoided. This is the value of the erofs over fscache technology.

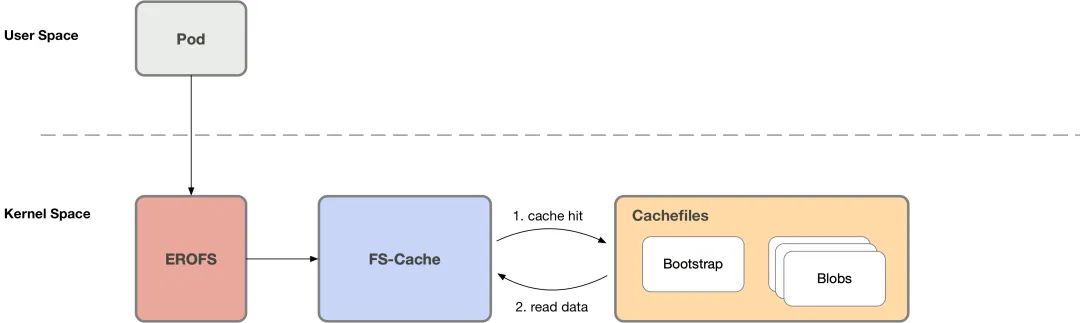

Fscache/cachefiles (hereinafter referred to as fscache) is a relatively mature file caching scheme in Linux systems. It is widely used in network file systems (such as NFS and Ceph). Our work makes it support on-demand loading for local file systems (such as erofs).

When the container accesses the container image, fscache checks whether the requested data has been cached. On a cache hit, it reads the data directly from the cache file. This process stays in the kernel throughout and never enters user space.

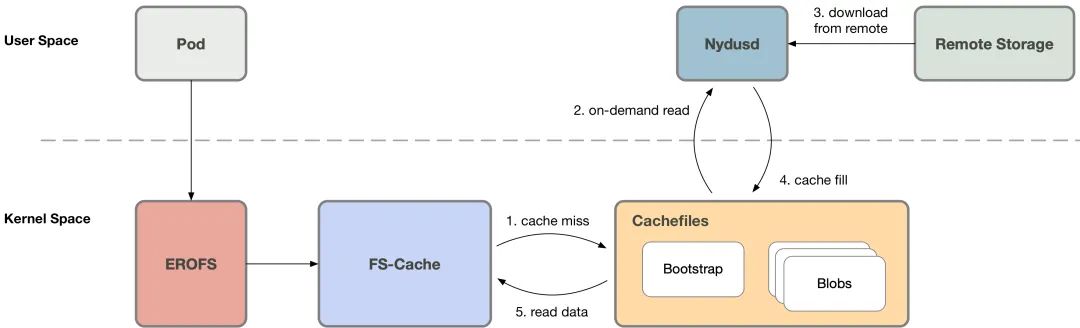

Otherwise (on a cache miss), the user-space service process Nydusd is notified to handle the request, and the container process sleeps while it waits. Nydusd fetches the data from the remote end over the network, writes it into the corresponding cache file through fscache, and then notifies the sleeping process that the request has been handled. After that, the container process can read the data from the cache file.
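The hit and miss paths described above can be sketched as a small simulation. This is a toy model in Python, not the fscache or Nydusd interface: the class `OnDemandCache`, its dictionary-backed "cache file", and the in-memory `remote` mapping are hypothetical stand-ins, and a background thread plays the role of the user-space daemon.

```python
import queue
import threading
from typing import Dict


class OnDemandCache:
    """Toy model of the hit/miss flow; not the fscache or Nydusd interface."""

    def __init__(self, remote: Dict[int, bytes]) -> None:
        self.remote = remote                 # stands in for the remote registry
        self.cache: Dict[int, bytes] = {}    # stands in for the local cache file
        self.lock = threading.Lock()
        self.requests: queue.Queue = queue.Queue()
        self.fetches = 0                     # how often the daemon had to step in
        threading.Thread(target=self._daemon_loop, daemon=True).start()

    def read(self, block: int) -> bytes:
        """Reader path: serve hits directly, sleep on a miss until the cache is filled."""
        with self.lock:
            data = self.cache.get(block)
        if data is not None:
            return data                      # cache hit: the daemon is never involved
        done = threading.Event()
        self.requests.put((block, done))     # cache miss: notify the daemon
        done.wait()                          # the reader sleeps until the request is handled
        with self.lock:
            return self.cache[block]

    def _daemon_loop(self) -> None:
        """Daemon path: fetch missing data, write it to the cache, wake the reader."""
        while True:
            block, done = self.requests.get()
            data = self.remote[block]        # pretend network fetch
            with self.lock:
                self.cache[block] = data
                self.fetches += 1
            done.set()


image = OnDemandCache({0: b"layer-data-0", 1: b"layer-data-1"})
first = image.read(0)    # miss: handled by the daemon thread
second = image.read(0)   # hit: served straight from the cache
print(first == second, image.fetches)
```

The point of the sketch is the asymmetry between the two paths: the second read of the same block returns without touching the request queue, which corresponds to a cache hit being served without involving the user-space process.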

As described earlier, when the image data has already been downloaded locally, user-space solutions still force the process accessing a file to switch to the user-space service frequently, and they also copy memory between kernel and user space. With erofs over fscache, no switch to user space occurs, so on-demand loading is truly "on demand", and performance and stability are almost lossless when container images are downloaded in advance. The result is a truly one-stop, lossless solution for both on-demand loading and pre-downloaded container images.

Specifically, erofs over fscache has the following advantages over user-state solutions.

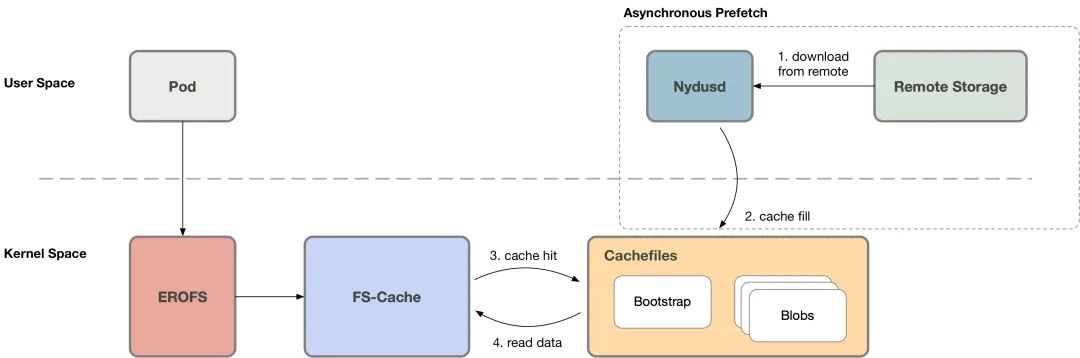

After the container is created, and before the container process has triggered any cache miss, Nydusd in user space can already download data from the network and write it to the cache file. When the data the container later accesses falls within the prefetched range, the process reads it directly from the cache file without entering user space. User-space solutions cannot implement this optimization.

When a cache miss is triggered, Nydusd can download more data from the network than was actually requested and write it to the cache file. For example, when the container requests 4 KB, Nydusd can download 1 MB at a time to reduce the network transmission latency per unit of file size. When the container then accesses the rest of this 1 MB, it does not need to switch to user space. User-space solutions cannot achieve this optimization, because processes accessing data within the prefetched range still switch to user space.
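The 4 KB versus 1 MB example above can be illustrated with a short Python sketch. It assumes, purely for illustration, that a miss fills the whole 1 MiB-aligned window containing the request; the class `AmplifyingCache` and the alignment policy are hypothetical and do not describe how Nydusd actually chooses its fetch range.

```python
FETCH_UNIT = 1024 * 1024  # 1 MiB fetch window, following the example in the text


class AmplifyingCache:
    """Toy cache that fills whole FETCH_UNIT-sized windows on a miss."""

    def __init__(self, remote: bytes, unit: int = FETCH_UNIT) -> None:
        self.remote = remote    # stands in for the remote blob
        self.unit = unit
        self.windows = {}       # window index -> cached bytes
        self.fetches = 0        # number of simulated network round trips

    def read(self, offset: int, length: int) -> bytes:
        if length <= 0:
            return b""
        first = offset // self.unit
        last = (offset + length - 1) // self.unit
        out = bytearray()
        for index in range(first, last + 1):
            if index not in self.windows:
                # Miss: fetch the whole window, not just the requested bytes.
                start = index * self.unit
                self.windows[index] = self.remote[start:start + self.unit]
                self.fetches += 1
            out += self.windows[index]
        skip = offset - first * self.unit
        return bytes(out[skip:skip + length])


blob = bytes(range(256)) * (2 * FETCH_UNIT // 256)  # 2 MiB of sample data
cache = AmplifyingCache(blob)

cache.read(0, 4096)            # first 4 KiB request: one fetch of a full window
after_first = cache.fetches
cache.read(512 * 1024, 4096)   # same window: served from the cache
after_second = cache.fetches
print(after_first, after_second)
```

In this sketch the second request falls inside the window that the first one already filled, so it does not trigger another fetch. Only a read that reaches into the next window causes a new round trip.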

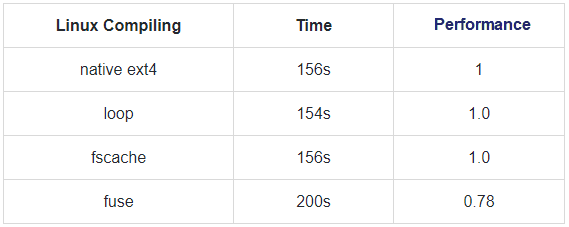

When all the image data has been downloaded locally (so the impact of on-demand loading is not considered), erofs over fscache performs better than user-space solutions and achieves performance similar to that of the native file system. The following is the performance test data under several workloads [1].

read/randread IO

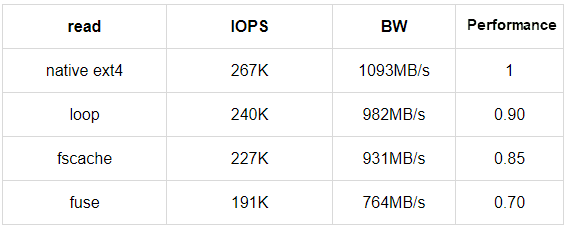

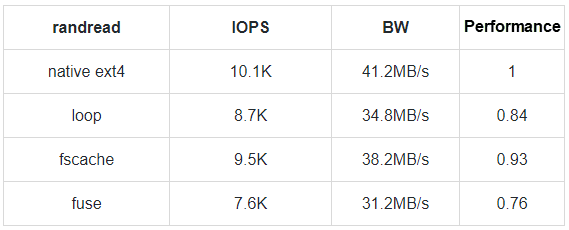

The following is a performance comparison of buffered file read/randread I/O [2].

"native" means that the test file is located in the local ext4 file system.

"loop" means that the test file is located in an erofs image that is mounted through a loop device in DIRECT IO mode.

"fscache" means that the test file is located in an erofs image that is mounted through the erofs over fscache scheme.

"fuse" means that the test file is located in a fuse file system [3].

The "performance" column normalizes the results of each mode against the native ext4 file system, which serves as the baseline.

The read/randread performance in fscache mode is the same as that in loop mode and is better than that in fuse mode. However, there is still a gap compared with the native ext4 file system. We are analyzing and optimizing this further. Theoretically, this solution can reach the level of the native file system.

File Metadata Operation Test

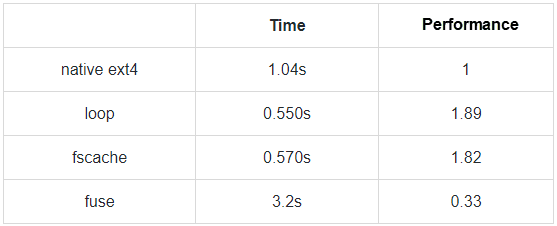

We test the performance of file metadata operations by running tar over a large number of small files [4].

The metadata performance of container images in the erofs format is even better than that of the native ext4 file system. This is due to the on-disk format of erofs. Because erofs is a read-only file system, all of its metadata can be packed closely together, whereas ext4 is a writable file system whose metadata is distributed across multiple block groups (BGs).

Typical Workload Test

We test the performance of compiling the Linux source code [5] as a typical workload.

The Linux compilation workload performs the same in fscache mode as in loop mode and on the native ext4 file system, and better than in fuse mode.

The erofs over fscache solution is file-based: container images appear as cache files, so it naturally supports high-density deployment scenarios. For example, if a typical node.js container image corresponds to about 20 cache files, a machine that runs hundreds of containers only needs to maintain thousands of cache files.

When all the image files have been downloaded locally, accessing files in the image no longer requires the user-space service process. The service process therefore has more time windows in which to perform fault recovery and hot upgrades. Furthermore, the user-space service process may no longer be required at all, which improves the stability of the solution.

With the RAFS v6 image format and erofs over fscache on-demand loading technology, Nydus is suitable for both runc and Kata as a one-stop solution for container image distribution.

In addition, erofs over fscache is a truly one-stop and lossless solution for both on-demand loading and pre-downloaded container images. On the one hand, it implements on-demand loading: when a container starts, the whole container image does not need to be downloaded locally first, which minimizes container startup time. On the other hand, it is compatible with the scenario where the container image has already been downloaded locally. In that case, file access no longer switches to user space, so performance is stable and nearly on par with the native file system.

We will keep improving the erofs over fscache solution, for example with image reuse between different containers, FSDAX support, and performance optimization.

In addition, the erofs over fscache scheme has been merged into the Linux 5.19 mainline. We will also bring the scheme to the OpenAnolis kernels (5.10 and 4.19) in the future so that it works out of the box on OpenAnolis. You are welcome to use it at that time.

Last but not least, I would like to thank all the individuals and teams who supported and helped us while we developed this solution. I would also like to thank all the contributors for their strong support, including but not limited to community support, testing, and code contributions. You are welcome to join the OpenAnolis community. Let us work together to build a better container image ecosystem.

[1] Test environment ECS ecs.i2ne.4xlarge (16 vCPUs, 128 GiB Mem), local NVMe disk

[2] Test command "fio -ioengine=psync -bs=4k -direct=0 -rw=[read | randread] -numjobs=1"

[3] Use passthrough_hp as the fuse daemon, e.g. "passthrough_hp "

[4] Test the execution time of the "tar -cf /dev/null " command

[5] Test the execution time of the "time make -j16" command
