By Wang Liang, CEO of YouYue Beijing Tech Inc.

I started my own company in the business of big data for promotion. A major difficulty that I had to conquer was processing offline data and cold backup data.

We needed to collect hundreds of millions of search results from the Apple's App Store. To reduce costs, we only kept data from the past one month in the online database and backed up historical data in Alibaba Cloud Object Storage Service (OSS). When we needed the historical data, we had to import it to the database or Hadoop cluster. Similar methods are used for data like users' click logs by companies with limited budgets. This method is inconvenient as it requires a large amount of work for ETL import and export. Currently, frequent requirements such as historical data backtracking and analysis, due to increasingly complex data operations, are consuming a considerable part of R&D engineers' time and energy.

Alibaba Cloud's Data Lake Analytics was like a ray of hope. It allows us to query and analyze data in the OSS using simple database methods that supports MySQL. It simplifies big data analysis and delivers satisfying performance. To a certain extent, it can directly support some of the online services.

Here I'd like to share my experience, using an example of big data analysis for blockchain logs. I picked blockchain data because the data volume is huge, which can fully demonstrate the features of Data Lake Analytics. Another reason is that all the blockchain data is open.

The data set used in this article contains all the data of Ethereum as of June 2018. More than 80% of today's DApps are deployed in Ethereum, so the data analysis and mining are of high values.

The data logic of Ethereum is chained data blocks, and each block contains detailed transaction data. The transaction data is similar to common access logs, which include the From (source user address), To (destination user address), and Data (sent data) fields. For example, ETH exchange between users and Ethereum program (smart contract) invoking by users are completed through transactions. Our experimental data set contains about 5 million blocks and 170 million transaction records.

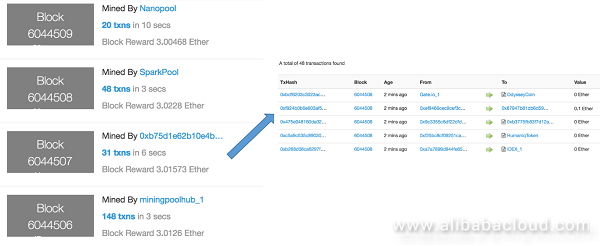

We can see the data structure of Ethereum in Ethscan.io, as shown in the following figure:

Figure 1. Data structure of Ethereum (blocks on the right and transaction data on the right)

By the time when this article was completed (July 25, 2018), there were around 6 million blocks and over 200 million transaction records in Ethereum. We also took out the Token application data from the transaction data because Token (also known as virtual currency) is still a main application. Based on the data, we can analyze token transactions that are worth thousands of billions of US dollars.

The process of obtaining Ethereum data includes three steps. The entire process is completed in the CentOS 7 64-bit ECS server environment of Alibaba Cloud. We recommend preparing two 200 GB disks, one for original Ethereum data and the other for data exported from Ethereum, and ensure that the memory is not less than 4 GB.

Download the Geth client for Linux 64-bit systems from https://geth.ethereum.org/downloads/ .

wget https://gethstore.blob.core.windows.net/builds/geth-linux-amd64-1.8.12-37685930.tar.gzDecompress the package and run the Geth program to connect to the public blockchain.

Run the following command:

nohup ./geth --datadir ./data/ --cache=1024 &Note:

You can perform the following steps to view the data synchronization progress.

Enter the Geth command line and run the following command in the Linux shell:

./geth attach ipc:./data/geth.ipc2 Run the following Ethereum command in the Geth command line to view the synchronization progress:

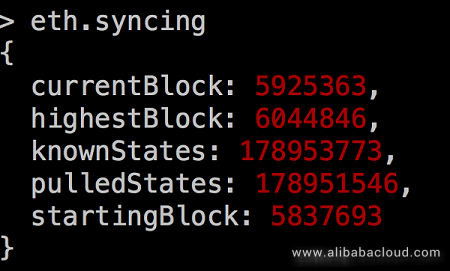

eth.syncingThe results are shown in the following figure:

Figure 2. Results of command execution in the Geth command line

In the results, currentBlock is the block that is being synchronized, and highestBlock is the fastest block in Ethereum.

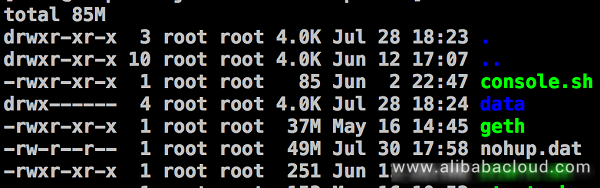

For your convenience, we have placed the Geth client and static node configuration file in the GitHub link at the end of this article. You can execute start.sh to directly start data synchronization. The complete directory structure of the Geth environment is as follows:

Figure 3. Directory structure of the Ethereum client

Here we use Ethereum ETL, which can be downloaded from GitHub.

git clone https://github.com/medvedev1088/ethereum-etlTo run Ethereum ETL, you need to install Python3 (Python36 is recommended) and pip3.

Currently, the Alibaba Cloud CentOS source does not provide Python36. To install it, run the following commands:

yum install epel-release -y

yum install https://centos7.iuscommunity.org/ius-release.rpm -y

yum install python36u -y

ln -s /bin/python3.6 /bin/python3

yum install python36u-pip -y

ln -s /bin/pip3.6 /bin/pip3 After Python36 and pip3 are installed, run the following commands:

cd ethereum-etl

pip3 install -r requirements.txtAfter the installation is complete, export the Ethereum data into CSV files.

Run the following command in the ethereum-etl directory:

nohup bash export_all.sh -s 0 -e 5775105 -b 100000 -i data/geth.ipc -o output &In the preceding command:

Perform this operation when at least 5 million blocks are synchronized to the Ethereum client, and this operation takes around 10 hours.

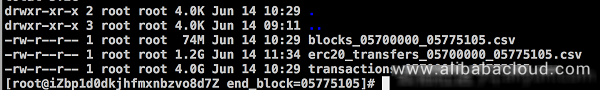

Assume that the export file directory is output. The data files in this directory are as follows:

Figure 4. Exported blockchain data

The file that starts with blocks_ contains the block data.

The file that starts with erc20_transfers_ contains the token transaction data.

The file that starts with transactions_ contains all the transaction data.

Files of the same type must be stored in the same directory in the Alibaba Cloud OSS.

In this article, the OSS is set as follows:

Store block data in oss://ethblock/export/blocks/.

Store all the transaction data in oss://ethblock/export/transactions/.

Store token transaction data in oss://ethblock/export/erc20_transfers.

After creating the preceding directories, download the OSS command line program ossutil. (You are advised to copy the program to /usr/bin, so you can execute it without adding the path.) For details, see Downloading and Installing ossutil.

After completing the OSS configuration, upload all the Ethereum data to the OSS. Access the ethereum-etl directory and run the following commands:

ossutil cp -r output oss://ethblock/export/blocks --include "blocks_*"

ossutil cp -r output oss://ethblock/export/transactions --include "transactions_*"

ossutil cp -r output oss://ethblock/export/erc20_transfers --include "erc20_*"

The preceding commands upload files of the same type to the corresponding directories in the OSS without changing the original directory structure.

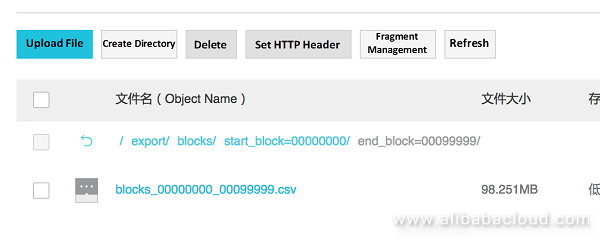

The directory structure in the OSS is similar to the following:

Figure 5 Directory structure in OSS

The directory structure is similar to "start_block=00000…", which supports the partition name format of HIVE.

After the files are uploaded, you can use the analysis services of Data Lake Analytics.

(Note: Partitioning has minor impact on the query speed. Partition update may be involved when files are added later. You can also simply store files of the same type in one directory without using the HIVE directory structure. In this case, run the following command:find –name "*blocks_*.csv"|xargs –I ossutil cp {} oss://ethblock/export/blocks/ )

After data is uploaded to OSS, you can use Data Lake Analytics to create tables. Unlike software cluster services such as open source HIVE and Presto, Data Lake Analytics uses the serverless technology. There is no direct connection between data and data tables, and users do not need to know how many resources are used by the analysis and computing services. Users only need to pay according to the data volume scanned during query. Therefore, you only need to use DDL statements to create tables and describe the data structure of OSS for Data Lake Analytics. Then you can query data in OSS using MySQL-compatible methods without converting and importing the data.

Currently, you need to apply to use Data Lake Analytics. For details, see Alibaba Cloud Data Lake Analytics.

Here we use the Ethereum blockchain data imported to OSS as an example to explain how to use Data Lake Analytics.

As mentioned above, only one step is required to query OSS data with Data Lake Analytics. That is to use DDL statements to describe the OSS data structure for Data Lake Analytics. The syntax is basically the same as that of Hadoop HIVE.

The following operations should be performed in the DMS console of Alibaba Cloud Data Lake Analytics:

Run the following command to create a schema, which is similar to creating a database:

create schema ethereumetl;(Note: The schema name is universal in an Alibaba Cloud region. Therefore, change the schema name and ensure that it is special to avoid conflicts.)

Run the following command to use the database:

use ethereumetl;Then, create tables. Here we create three tables for the three directories in OSS.

Firstly, create the blocks table to describe the block data format:

CREATE EXTERNAL TABLE IF NOT EXISTS blocks (

block_number BIGINT,

block_hash STRING,

block_parent_hash STRING,

block_nonce STRING,

block_sha3_uncles STRING,

block_logs_bloom STRING,

block_transactions_root STRING,

block_state_root STRING,

block_miner STRING,

block_difficulty DECIMAL(38,0),

block_total_difficulty DECIMAL(38,0),

block_size BIGINT,

block_extra_data STRING,

block_gas_limit BIGINT,

block_gas_used BIGINT,

block_timestamp BIGINT,

block_transaction_count BIGINT

)

PARTITIONED BY (start_block BIGINT, end_block BIGINT)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES (

'serialization.format' = ',',

'field.delim' = ',',

'escape.delim' = '\\'

)

STORED AS TEXTFILE

LOCATION 'oss://ethblock/export/blocks'

TBLPROPERTIES (

'skip.header.line.count' = '1'

);Because partitions are used, run the following command to synchronize the partition information:

MSCK REPAIR TABLE blocks;(Note: LOCATION must be replaced with the actual path in OSS.)

Secondly, create the transactions table to describe the transaction data:

CREATE EXTERNAL TABLE IF NOT EXISTS transactions (

tx_hash STRING,

tx_nonce BIGINT,

tx_block_hash STRING,

tx_block_number BIGINT,

tx_index BIGINT,

tx_from STRING,

tx_to STRING,

tx_value DECIMAL(38,0),

tx_gas BIGINT,

tx_gas_price BIGINT,

tx_input STRING

)

PARTITIONED BY (start_block BIGINT, end_block BIGINT)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES (

'serialization.format' = ',',

'field.delim' = ',',

'escape.delim' = '\\'

)

STORED AS TEXTFILE

LOCATION 'oss://ethblock/export/transactions'

TBLPROPERTIES (

'skip.header.line.count' = '1'

);Then, run the following command:

MSCK REPAIR TABLE transactions;Thirdly, create the erc20_transfers table to describe token transactions:

CREATE EXTERNAL TABLE IF NOT EXISTS erc20_transfers (

erc20_token STRING,

erc20_from STRING,

erc20_to STRING,

erc20_value DECIMAL(38,0),

erc20_tx_hash STRING,

erc20_log_index BIGINT,

erc20_block_number BIGINT

)

PARTITIONED BY (start_block BIGINT, end_block BIGINT)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES (

'serialization.format' = ',',

'field.delim' = ',',

'escape.delim' = '\\'

)

STORED AS TEXTFILE

LOCATION 'oss://ethblock/export/erc20_transfers'

TBLPROPERTIES (

'skip.header.line.count' = '1'

);Then, run the following command:

MSCK REPAIR TABLE erc20_transfers;After creating the three tables, run a test statement:

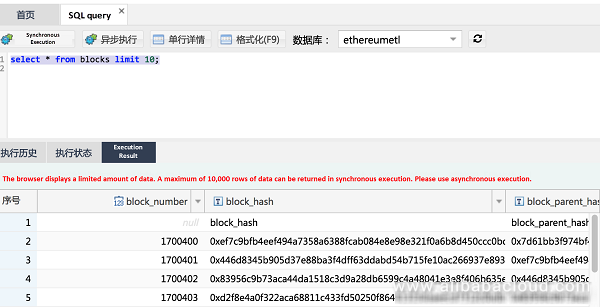

select * from blocks limit 10;Click Synchronous Execution. The result is as shown in the following figure:

Figure 6. Data Lake Analytics SQL execution result

Data Lake Analytics provides a MySQL JDBC-compatible interface. Therefore, you can quickly query OSS data like you do in a common database.

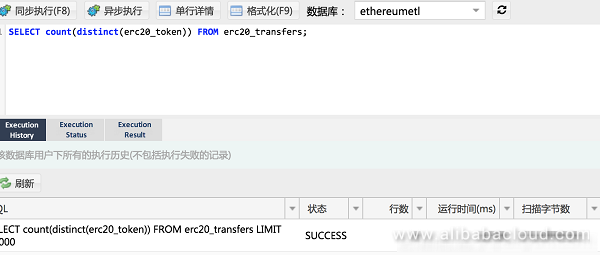

Let's use a SQL query statement to analyze the Ethereum data and see how many types of Tokens Ethereum sends.

SELECT count(distinct(erc20_token)) FROM erc20_transfersThe result is as shown in the following figure:

Figure 7. Querying the number of Tokens with Data Lake Analytics

As you can see, the system scanned around 170 million data records and generated related statistics in around 10s, which is quite fast. (The performance is continuously improved.)

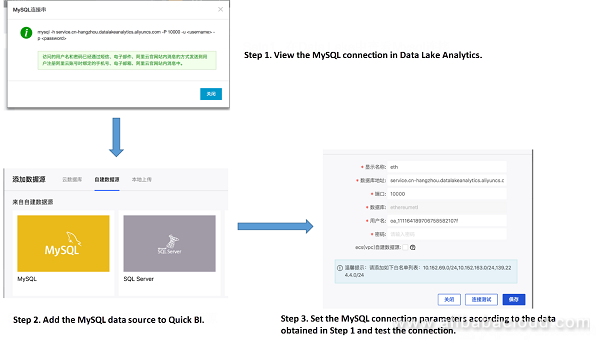

Data Lake Analytic supports the MySQL protocol, so it can directly connect to the BI system for more data analysis. Here the Quick BI of Alibaba Cloud is used as an example to analyze the historical trend of Ethereum transaction volume.

Connect to the data source. We can choose Create Data Source > MySQL in Quick BI to connect to the MySQL compatibility service of Data Lake Analytics, as shown in the following figure:

Figure 8. Using Data Lake Analytics services in BI

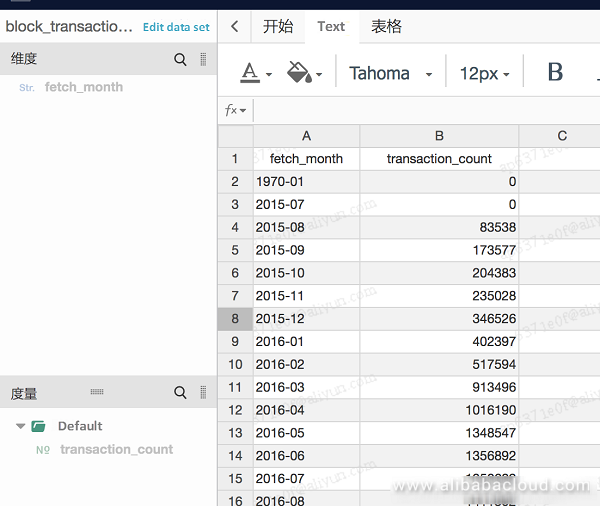

After the BI system connects to the data source of Data Lake Analytics, run the following MySQL commands to generate the BI data set:

select sum(block_transaction_count) as transaction_count,fetch_month from

(

SELECT block_transaction_count,DATE_FORMAT(FROM_UNIXTIME(block_timestamp),'%Y-%m') as fetch_month

FROM blocks

) as transaction_data

group by fetch_month

order by fetch_monthAfter the data set is generated, the BI table is generated, as shown in the following figure:

Figure 9. BI system table

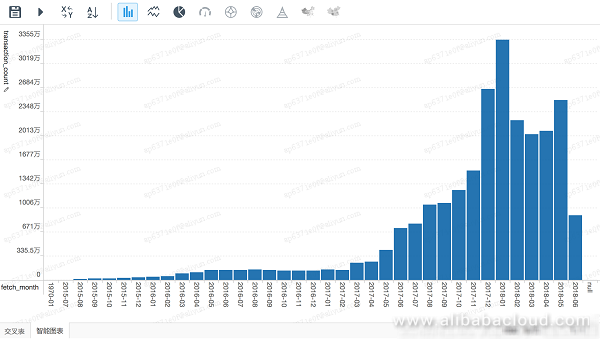

Then, present the table in a diagram, as shown in the following figure:

Figure 10. Visualized BI system data presenting (x-axis: month, y-axis: transaction volume)

As you can see in the figure, the Ethereum transaction volume reached its peak in early 2018, which is regarded as the first year of the blockchain era.

In addition to basic BI analysis, we can use Data Lake Analytics for deep mining in services related to AI machine learning. Easy data export is a main advantage of Data Lake Analytics. The following is a simple example.

The blockchain system is anonymous and decentralized. Therefore, users in the blockchain world should also be decentralized and not affiliate to any organizations. Whether "communities" exist in the blockchain is an interesting topic. To discuss that, let's do a simple experiment of "community discovery" mining for Ethereum users.

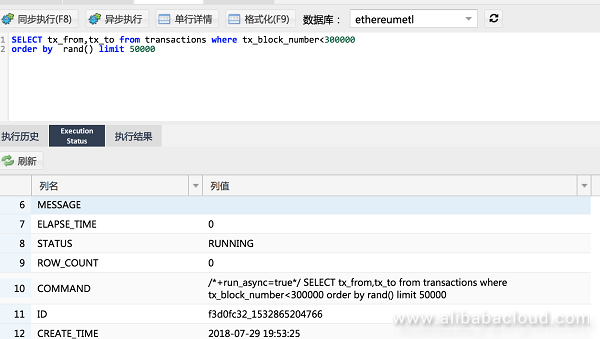

In this experiment, we need to first export the From and To data in transactions to create the user relationship network diagram. Thanks to Data Lake Analytics, using MySQL statements to export data has become simple. We hope that we can analyze the user relationship network in the 300,000 blocks before 2016. To do that, we run the following statements to randomly collect 50,000 pieces of transaction data from these blocks:

SELECT tx_from,tx_to from transactions where tx_block_number<300000

order by rand() limit 50000Because the DMS of Data Lake Analytics can return only 10,000 pieces of data at a time, we need to export the data in asynchronous mode and then read the data from OSS. The result is as follows:

Figure 11. Asynchronous execution result of Data Lake Analytics

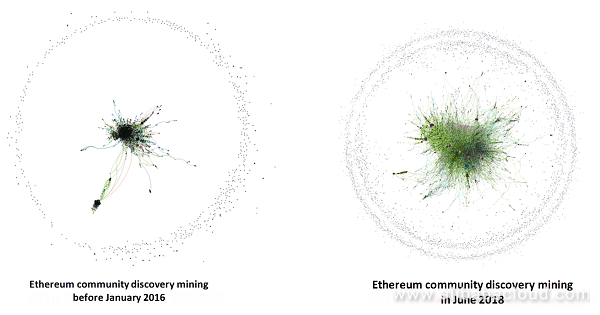

For comparison, we randomly exported 100,000 pieces of data generated in June 2018 for community discovery mining. The data mining result is as follows:

Figure 12. Ethereum community discovery mining (based on the Force Atlas2 algorithm)

It would be troublesome if we use commands such as awk to directly operate files in OSS to generate data. By contrast, using Data Lake Analytics to export data is much easier.

As you can see in Figure 12, in the early time of the Ethereum, most active users were in several "communities", and there were only a few trial users in the outer circle. When the rapid development period arrives, the proportion of trial users increased significantly, and active users are distributed in tens of "communities", most of which are token contract-centric. This means, though the blockchain system is decentralized, its users exist in an organizational structure similar to that in the real world.

The primary advantage of Data Lake Analytics is that it is easy to use, greatly lowering the threshold of big data analysis. You do not need to build the Hadoop/HIVE system or worry about big data import and export. Personnel with basic LAMP technology stack knowledge, even the operations staff, can use it. Through seamless combination with systems such as BI and DataV, it makes big data analysis more accessible.

The second advantage of Data Lake Analytics is its low cost. You need to pay only tens of yuan per month to use OSS and Data Lake Analytics to analyze similar amount of data used in this article. If you use Hadoop/HIVE or import data to MySQL/mongoDB, it will cost you at least 1,000 yuan.

Last but not least, the execution speed of Data Lake Analytics is fast, making it an ideal substitute for some of the traditional DB-based online services. In the BI example used in this article, Data Lake Analytics completes execution within seconds, which can surely meet the requirements of online big data query. Moreover, today's service frameworks such as Think PHP provide query cache, powerful enough for online services.

2,605 posts | 747 followers

FollowAlibaba Clouder - August 7, 2020

Alibaba Clouder - June 22, 2020

Alibaba Clouder - October 18, 2019

Alibaba Clouder - February 7, 2018

Alibaba EMR - April 27, 2021

ApsaraDB - March 3, 2020

2,605 posts | 747 followers

Follow OSS(Object Storage Service)

OSS(Object Storage Service)

An encrypted and secure cloud storage service which stores, processes and accesses massive amounts of data from anywhere in the world

Learn MoreLearn More

Quick BI

Quick BI

A new generation of business Intelligence services on the cloud

Learn MoreMore Posts by Alibaba Clouder