By Priyankaa Arunachalam, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

In the previous article of this series, we showed how to build a big data environment on Alibaba Cloud with Object Storage Service and E-MapReduce.

In this blog series, we walk you through the entire cycle of Big Data analytics. Now that we are familiar with the basics and with cluster creation, it is time to understand the data acquired from various sources and the most suitable format for ingesting it into a Big Data environment. Let’s divide the sources of data into four quadrants:

Data in the second quadrant is usually unstructured, and we process it to perform analytics. Big Data work mostly concentrates here. As mentioned in our previous articles, the storage layer of Hadoop is HDFS, a file system that does not impose any data format or schema. You can therefore store files in any format you wish, which leads to the most reasonable question: “Which file format should I use?”

Secondly, assume new columns are added to a table in your database. This is reflected in your CSV dumps, so the schema changes. Frequent schema changes of this kind are another drawback. Hadoop supports various file formats; some are easy for humans to handle, while others offer better performance optimizations.
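The schema drift described above can be seen in a small sketch. The two CSV dumps and their column names are hypothetical sample data, not taken from the article; the code only compares the header rows of an older and a newer dump to find the columns that were added.

```python
import csv
import io

# Two hypothetical CSV dumps of the same table, taken before and after
# a column was added to the source database (sample data, an assumption).
DUMP_V1 = "id,name\n1,Alice\n2,Bob\n"
DUMP_V2 = "id,name,email\n3,Carol,carol@example.com\n"


def read_header(csv_text):
    """Return the column names from the first line of a CSV dump."""
    return next(csv.reader(io.StringIO(csv_text)))


def added_columns(old_text, new_text):
    """List columns present in the new dump but missing from the old one."""
    old_cols = read_header(old_text)
    return [col for col in read_header(new_text) if col not in old_cols]


print(added_columns(DUMP_V1, DUMP_V2))  # ['email']
```

Any job that assumes the old two-column layout would have to be adjusted each time such a column appears, which is why plain CSV dumps are awkward when the schema changes often.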

"Data is only as useful as the context in which it is gathered and presented – Josh Pigford"

Data obtained from various sources can come in different formats. Let’s take a quick look at the file formats supported by Hadoop for better understanding and faster processing of data. A few major file formats are listed below:

Which of these file formats should I choose? A good file format must satisfy two major conditions:

Block Compression

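In Hadoop file formats, block compression means that records are grouped into blocks and each block is compressed independently. A compressed file therefore remains splittable, so it takes less storage and I/O while still allowing different parts of the file to be processed in parallel.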

Schema Evolution

As mentioned, once the initial schema is defined, applications may evolve it over time, and schema changes may be frequent. To handle this well, your file format should support schema evolution.
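As a rough illustration of what schema evolution buys you, the sketch below reads old and new records through a single reader schema that carries default values. It is plain Python that mimics the idea only; it does not use the API of Avro or any other file format, and the schema, field names, and records are hypothetical.

```python
# Hypothetical reader schema: field name -> default used when a record lacks it.
READER_SCHEMA = {"id": None, "name": "", "email": "unknown"}


def resolve(record, reader_schema):
    """Project a stored record onto the reader schema.

    Fields missing from the record take the schema default; fields the
    reader schema does not know about are dropped.
    """
    return {field: record.get(field, default)
            for field, default in reader_schema.items()}


old_record = {"id": 1, "name": "Alice"}  # written before 'email' existed
new_record = {"id": 2, "name": "Bob", "email": "bob@example.com"}

print(resolve(old_record, READER_SCHEMA))
print(resolve(new_record, READER_SCHEMA))
```

With this approach, data written under the old schema stays readable after a column is added, so existing files do not have to be rewritten.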

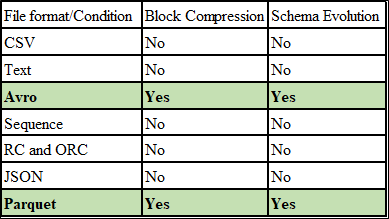

Take a look at the comparison table to arrive at a better solution

The file format that first comes to mind is the text file: people use it in daily life, so it is easy to use and troubleshoot. But remember the mantra of Big Data, “Write once, read many times”, which makes performance a main factor to consider. There should be little overhead in querying the data, and unfortunately this is the main drawback of text files.

From the comparison table, it is clear that the Avro and Parquet file formats are dominant in Hadoop because they support block compression and schema evolution. The other file formats are used less in the Hadoop ecosystem. The choice also depends on the storage type an organization prefers, but better file formats are preferred for optimized performance.

The main challenge in Big Data is dealing with large data sets. Storing these massive data volumes can cause I/O and network related issues. Data compression reduces this problem. Some compression formats are splittable, which improves performance when reading and processing large compressed files because different parts of a file can be processed in parallel.
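To see why splittability matters, here is a minimal sketch that compresses records in independent blocks, so that any one block can be decompressed without reading the others. It uses Python's standard `zlib` module purely for illustration; the block size, record names, and helper functions are assumptions, and this is not how any particular Hadoop codec is implemented.

```python
import zlib

BLOCK_SIZE = 3  # records per block; a tiny, arbitrary value for illustration


def compress_in_blocks(records, block_size=BLOCK_SIZE):
    """Compress records in independent blocks so each can be read alone."""
    blocks = []
    for start in range(0, len(records), block_size):
        chunk = "\n".join(records[start:start + block_size]).encode("utf-8")
        blocks.append(zlib.compress(chunk))
    return blocks


def read_block(blocks, index):
    """Decompress a single block without touching the others."""
    return zlib.decompress(blocks[index]).decode("utf-8").split("\n")


records = [f"row-{i}" for i in range(8)]
blocks = compress_in_blocks(records)

print(len(blocks))            # 3
print(read_block(blocks, 1))  # ['row-3', 'row-4', 'row-5']
```

Because each block stands alone, separate workers could each take a different block, which is the property a splittable format gives a parallel job.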

The four widely used Compression techniques in Hadoop are

We already have an EMR cluster that is all set for use. You can submit jobs and execution plans in the UI, or log on to the master node of the cluster. So what do you need to access the cluster?

SSH on Windows

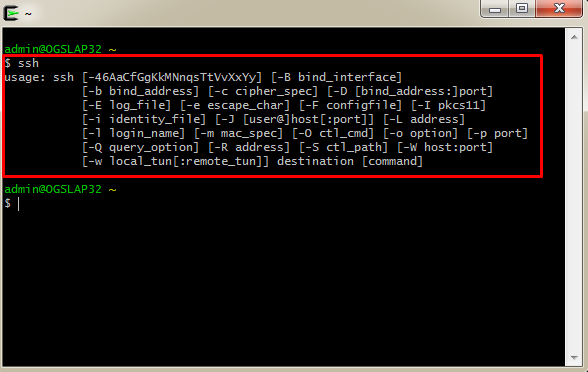

Secure Shell (SSH) provides strong authentication and encrypted data communication between two systems

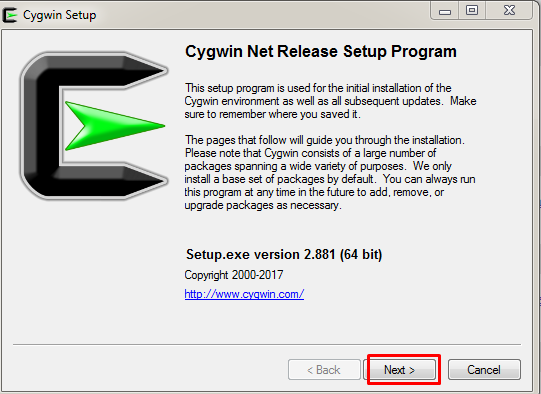

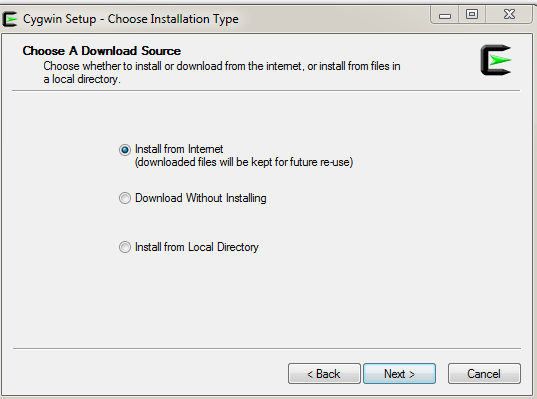

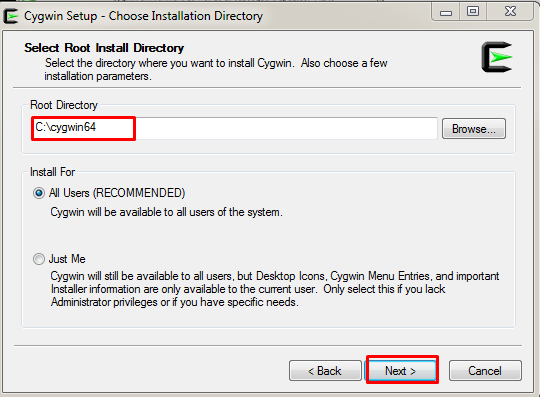

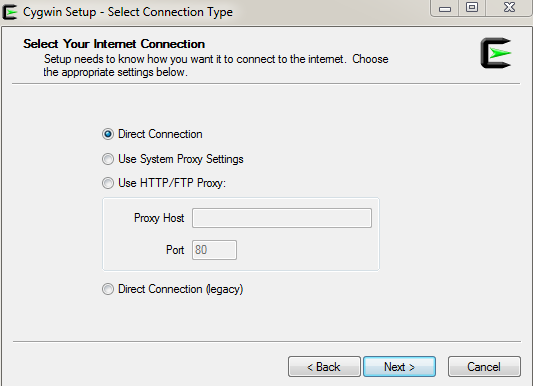

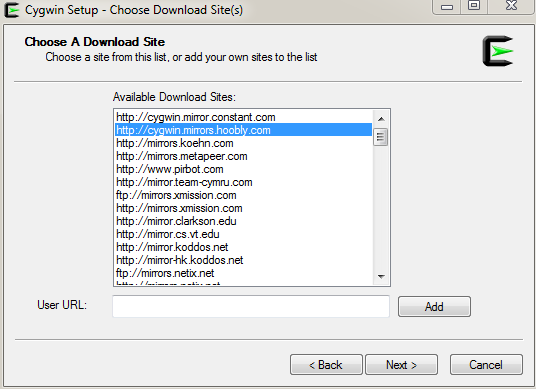

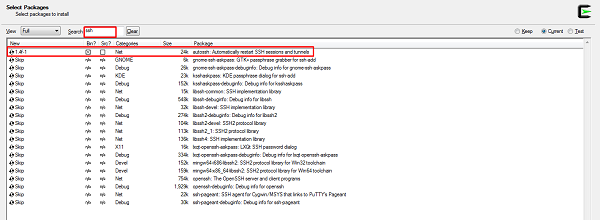

In this article, we will use Cygwin. The Cygwin installation steps are shown below.

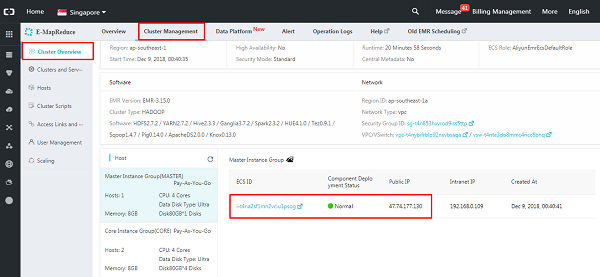

The next prerequisite for accessing the cluster is the IP address. Navigate to the cluster overview page, which shows the public network IP address of the master node.

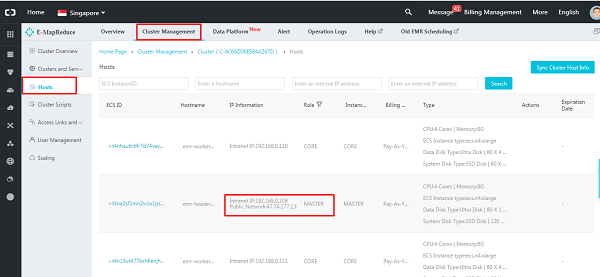

You can also obtain the IP from the Hosts page. Navigate to “Hosts”, where the list of running hosts (master and core nodes) will be displayed. Extract the public IP of the master node.

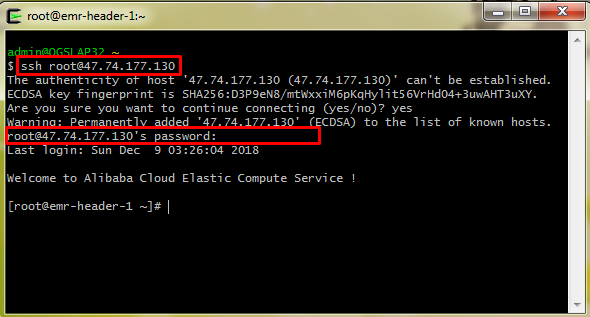

Let’s log in to the cluster by entering `ssh root@47.72.177.130` in the Cygwin terminal, where root is the login user and 47.72.177.130 is the public IP address of the master node.

Enter ‘yes’ to continue connecting.

Enter the password that was set during cluster creation.

You are all set to explore and play with various settings and states in the cluster.
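If you prefer to script the login rather than type it, the sketch below assembles the same ssh command as an argument list. The helper `build_ssh_command` is hypothetical, and the default port 22 is an assumption; nothing is executed, so the code runs without a cluster.

```python
import shlex


def build_ssh_command(host, user="root", port=22):
    """Return the argument list for an ssh login; nothing is executed here."""
    return ["ssh", "-p", str(port), f"{user}@{host}"]


# The IP below is the example address used in this article; replace it
# with the public IP of your own master node.
command = build_ssh_command("47.72.177.130")
print(shlex.join(command))
```

Passing the list to `subprocess.run(command)` would start the interactive session, assuming an ssh client is installed on the machine.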

Big Data is acquired in various ways, either as batch data or as real-time streaming data. Hence, let’s divide this into two data processing pipelines:

Batch data processing is an efficient way of processing huge volumes of data where a group of transactions is collected over a period of time. Hadoop focuses on batch data processing.

Real-time data processing, or stream processing, handles continual input, so processing is done on the streaming data as it arrives. Data is processed in near real time. Most organizations use batch data processing, but sometimes they need real-time processing too. Real-time analytics can help an organization take immediate action whenever necessary.
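The difference between the two pipelines can be sketched in a few lines. The ratings are made-up sample values, and the two functions are illustrative only: the batch version waits for the whole collection before computing, while the streaming version emits an updated result as each value arrives.

```python
def batch_average(ratings):
    """Batch style: wait for the full collection, then compute once."""
    return sum(ratings) / len(ratings)


def streaming_average(rating_stream):
    """Stream style: yield an updated average as each value arrives."""
    total = 0.0
    count = 0
    for rating in rating_stream:
        total += rating
        count += 1
        yield total / count


ratings = [4.0, 5.0, 3.0]  # made-up sample ratings

print(batch_average(ratings))            # 4.0
print(list(streaming_average(ratings)))  # [4.0, 4.5, 4.0]
```

Both approaches end at the same final value; the streaming version simply makes intermediate results available earlier, which is what allows immediate action.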

At this initial stage, we will look at Batch Processing as an example for better understanding.

Let me take tourism as a use case that we can easily relate to.

If you are planning a trip, you would probably consult many sources on the internet, from buying tickets to reserving accommodation and identifying the best spots. Members of the tourism industry are gradually turning to big data to improve decision-making and overall performance in various respects.

Download the open source tourism dataset from here

Here you have various files in CSV and JSON format. Among the data sources, the tripadvisor_merged Excel sheet is the concatenation of all the other data. It is a US tourism dataset comprising various museums and their reviews collected from Trip Advisor. It has columns such as Museum Name, Location, Review Count, Rating by the Tourists, Rank of the Museum, Fee (whether the museum charges an entry fee), Length of Hours (how much time a tourist spent in the museum, which can indirectly indicate how interesting the museum is), Family and Friends Count (the number of people visiting the museum), and more.

Let’s try to recollect a few terms from our first article:

Now, let’s preserve the data we collected by ingesting it into HDFS and process it to find the top museums by visitor count and by time spent, the museum rankings by state, and so on. These insights can help the tourism industry make better decisions and identify the spots that attract the most tourists. This data comes from the comments, ratings, and feedback left by tourists, and this huge volume of data is scattered. Big data makes it possible to interconnect this scattered information. With this understanding of the data, we will focus on data ingestion and processing in the next article.
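To make the target insights concrete, here is a minimal sketch of the kind of aggregation we are aiming for. The rows are made-up sample data and the field names (`name`, `state`, `visitors`, `rating`) are assumptions, not the real headers of the dataset; the actual processing on the cluster comes later in the series.

```python
from collections import defaultdict

# Made-up sample rows; the field names are assumptions, not the dataset's headers.
museums = [
    {"name": "Museum A", "state": "NY", "visitors": 1200, "rating": 4.5},
    {"name": "Museum B", "state": "NY", "visitors": 800, "rating": 4.8},
    {"name": "Museum C", "state": "CA", "visitors": 1500, "rating": 4.2},
]


def top_by_visitors(rows, n):
    """Return the names of the n museums with the highest visitor count."""
    ranked = sorted(rows, key=lambda row: row["visitors"], reverse=True)
    return [row["name"] for row in ranked[:n]]


def ranking_by_state(rows):
    """Group museums by state and order each group by rating, best first."""
    by_state = defaultdict(list)
    for row in rows:
        by_state[row["state"]].append(row)
    return {
        state: [row["name"] for row in
                sorted(group, key=lambda row: row["rating"], reverse=True)]
        for state, group in by_state.items()
    }


print(top_by_visitors(museums, 2))  # ['Museum C', 'Museum A']
print(ranking_by_state(museums))    # {'NY': ['Museum B', 'Museum A'], 'CA': ['Museum C']}
```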

In the next article, we will take a closer look into the concepts and usage of HDFS and Sqoop for data ingestion.

