AnalyticDB for MySQL is highly compatible with the MySQL protocol. It supports millisecond-level updates and sub-second queries, enabling real-time multi-dimensional analysis and business exploration on large volumes of data. The newly released AnalyticDB for MySQL Data Lakehouse Edition adds low-cost offline processing for cleansing and transforming data, alongside high-performance online analysis for exploring it. It gives customers the scale of a data lake with the experience of a database, and helps enterprises reduce costs and improve efficiency by building an enterprise-grade data analysis platform.

AnalyticDB for MySQL Pipeline Service (APS) Introduction: To strengthen its lakehouse-building capabilities, AnalyticDB for MySQL Data Lakehouse Edition introduces APS, a tunnel component that provides real-time data streaming so that customers can ingest data into the lake and the warehouse at low cost and low latency. This article describes the challenges of using APS to ingest SLS (Simple Log Service) data into the lake quickly with Exactly-Once consistency, and how we solved them. We chose Flink as the underlying engine of the data tunnel. Flink is a widely used big data processing framework whose unified stream-batch architecture handles a wide variety of scenarios. The lake of AnalyticDB for MySQL is built on Hudi, a mature data lake foundation adopted by many large enterprises, on which AnalyticDB for MySQL has accumulated years of experience. Today, AnalyticDB for MySQL Data Lakehouse Edition deeply integrates the lake and the warehouse into a single solution.

The Challenges of Exactly-Once Consistency When Ingesting into the Lake: The tunnel can experience exceptions. For example, during an upgrade or scaling operation, the pipeline may restart and replay some already-processed data from the source, producing duplicate data at the target. One approach is to configure a business primary key and rely on Hudi's Upsert capability for idempotent writes. However, SLS ingestion into the lake runs at GB-per-second throughput (4 GB/s for one business), and cost must be kept under control, so Hudi Upsert struggles to meet these requirements. Because SLS data is append-only, we write it in Hudi's Append Only mode to achieve high throughput and rely on other mechanisms to prevent data duplication and loss.
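To make the trade-off concrete, here is a toy model (not Hudi's API) of what a replayed batch does under each write mode. A plain Python list stands in for an append-only table and a dict keyed by a hypothetical business primary key stands in for an upsert table. The upsert path is idempotent, but it has to locate each key before writing, which is the extra per-record work that becomes expensive at GB/s scale.

```python
from typing import Dict, List, Tuple

Record = Tuple[str, str]  # (hypothetical business primary key, payload)


def append_only_write(table: List[Record], batch: List[Record]) -> None:
    """Append mode: every record becomes a new row, so a replayed batch is duplicated."""
    table.extend(batch)


def upsert_write(table: Dict[str, str], batch: List[Record]) -> None:
    """Upsert mode: rows are keyed by primary key, so replaying a batch overwrites itself."""
    for key, payload in batch:
        table[key] = payload


batch = [("k1", "log line 1"), ("k2", "log line 2")]

appended: List[Record] = []
append_only_write(appended, batch)
append_only_write(appended, batch)  # replay after a restart

upserted: Dict[str, str] = {}
upsert_write(upserted, batch)
upsert_write(upserted, batch)       # the same replay

print(len(appended), len(upserted))  # 4 2
```

Append mode keeps writes cheap, so duplicates must instead be prevented by controlling exactly which data gets replayed and committed.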

Consistency guarantees in stream computing generally fall into the following types:

| Semantics | Guarantee |
| --- | --- |
| At-Least-Once | Data is not lost during processing but may be duplicated. |
| At-Most-Once | Data is not duplicated during processing but may be lost. |
| Exactly-Once | All data is processed exactly once, with no duplication or loss. |
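A minimal sketch, assuming a hypothetical single-threaded consumer, of where the first two guarantees come from: the order in which the consumer saves its source offset and writes its output. Saving the offset first can lose a record on a crash; writing first can duplicate one. Neither ordering alone gives Exactly-Once, which is why the offset and the output have to be committed together.

```python
from typing import List


def run(records: List[str], commit_offset_first: bool, crash_at: int) -> List[str]:
    """Process records, crash while handling index `crash_at`, then restart once.

    commit_offset_first=True  -> offset saved before output (At-Most-Once)
    commit_offset_first=False -> output written before offset saved (At-Least-Once)
    """
    output: List[str] = []
    saved_offset = 0  # next record to read after a restart
    for i, rec in enumerate(records):
        if commit_offset_first:
            saved_offset = i + 1
            if i == crash_at:
                break  # crash: offset advanced, output never written
            output.append(rec)
        else:
            output.append(rec)
            if i == crash_at:
                break  # crash: output written, offset not advanced
            saved_offset = i + 1
    # Restart from the last saved offset and finish without further failures.
    output.extend(records[saved_offset:])
    return output


print(run(["a", "b", "c"], commit_offset_first=True, crash_at=1))   # ['a', 'c']
print(run(["a", "b", "c"], commit_offset_first=False, crash_at=1))  # ['a', 'b', 'b', 'c']
```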

Among these semantics, Exactly-Once is the most demanding. Within a stream computing engine, Exactly-Once refers to the exact consistency of the engine's internal state. Business scenarios, however, require end-to-end Exactly-Once: when a failover occurs, the data at the target must remain consistent with the source, with nothing duplicated or lost.

Achieving Exactly-Once consistency requires handling failover, that is, returning to a consistent state when a task restarts after a failure. Flink is called a stateful stream processing engine because its checkpoint mechanism saves state to backend storage and, on restart, restores that state to a consistent point. However, state recovery only guarantees that Flink's own state is consistent. In a complete system made up of the source, Flink, and the target, data can still be lost or duplicated, breaking end-to-end consistency. The following string concatenation example shows how data can be duplicated.

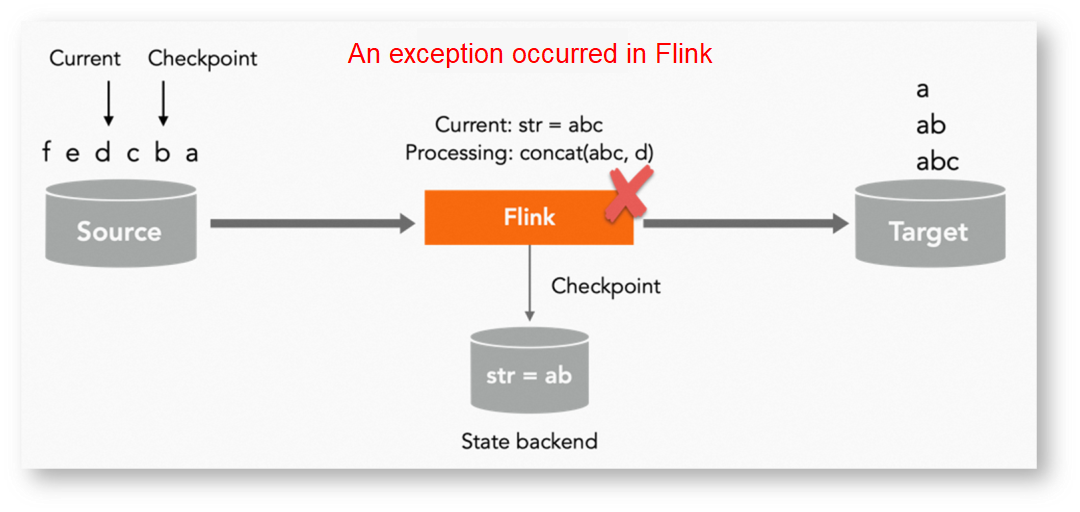

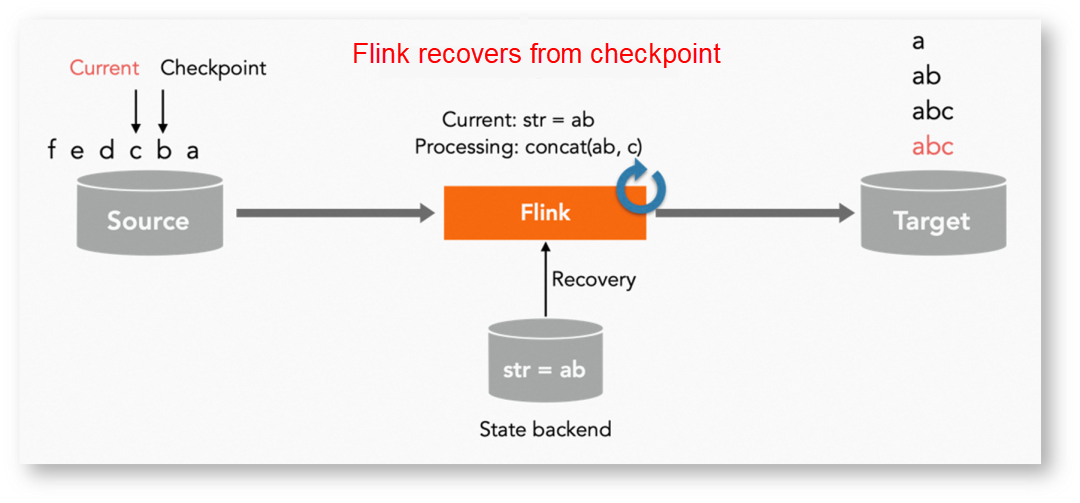

The following figure shows the string concatenation process. The job reads characters one by one from the source and appends each one to the string built so far. After each character is appended, the current string is written to the target, so the target should end up with distinct strings such as a, ab, and abc.

In this example, the Flink checkpoint stores the completed concatenation ab and the corresponding source offset (marked by the checkpoint arrow). Current marks the offset being processed. At this point, a, ab, and abc have already been written to the target. When an abnormal restart occurs, Flink restores its state from the checkpoint, rolls the offset back, reprocesses the character c, and writes abc to the target again, so abc is duplicated.

Because Flink restores its state from the checkpoint, it neither processes a character twice (producing abb or abcc) nor skips one (producing ac), so its internal state is Exactly-Once. Nevertheless, two copies of abc appear at the target, so end-to-end Exactly-Once is not guaranteed.
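The string concatenation example can be replayed in a few lines. This is a toy simulation, not Flink code: `Checkpoint` and `run_with_failover` are hypothetical names, the checkpoint is taken after ab, and the crash happens right after abc is written. The restored state never becomes abb or abcc, yet the external target keeps both copies of abc.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Checkpoint:
    state: str   # concatenated string so far
    offset: int  # next source position to read


def run_with_failover(source: str, checkpoint_after: int, crash_after: int) -> List[str]:
    target: List[str] = []              # external sink: NOT rolled back on restore
    state, offset = "", 0
    checkpoint: Optional[Checkpoint] = None
    crashed = False
    while offset < len(source):
        state += source[offset]
        offset += 1
        target.append(state)            # output every intermediate string
        if offset == checkpoint_after:
            checkpoint = Checkpoint(state, offset)
        if offset == crash_after and not crashed:
            crashed = True
            # Failover: the engine restores its own state and source offset...
            state, offset = (checkpoint.state, checkpoint.offset) if checkpoint else ("", 0)
            # ...but whatever already reached the target stays there.
    return target


print(run_with_failover("abc", checkpoint_after=2, crash_after=3))
# ['a', 'ab', 'abc', 'abc']
```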

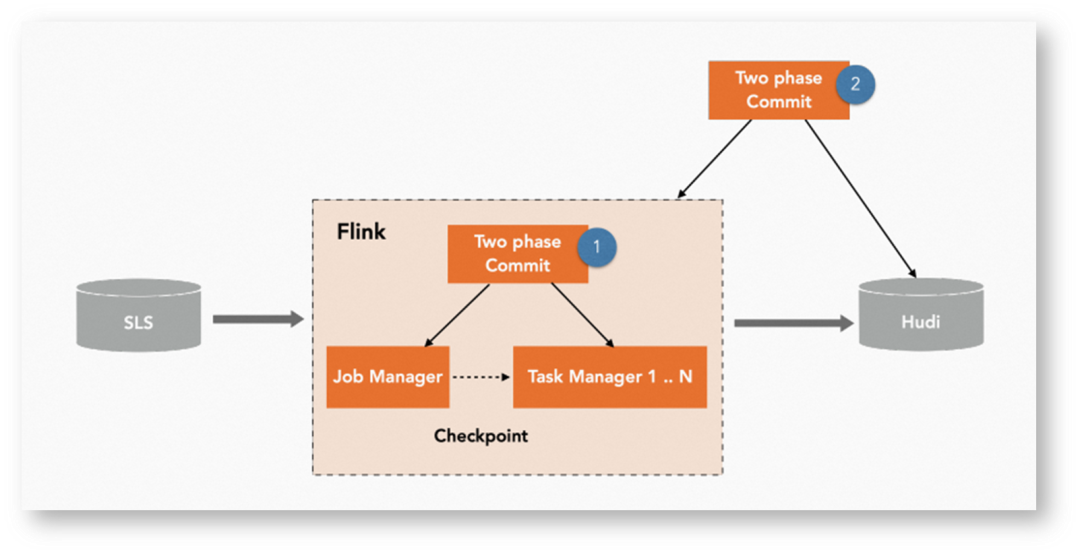

Flink is a complex distributed system made up of operators (such as sources and sinks) running in parallel (for example, across slots). In such a system, Exactly-Once consistency requires a two-phase commit, and Flink checkpointing is an implementation of two-phase commit.

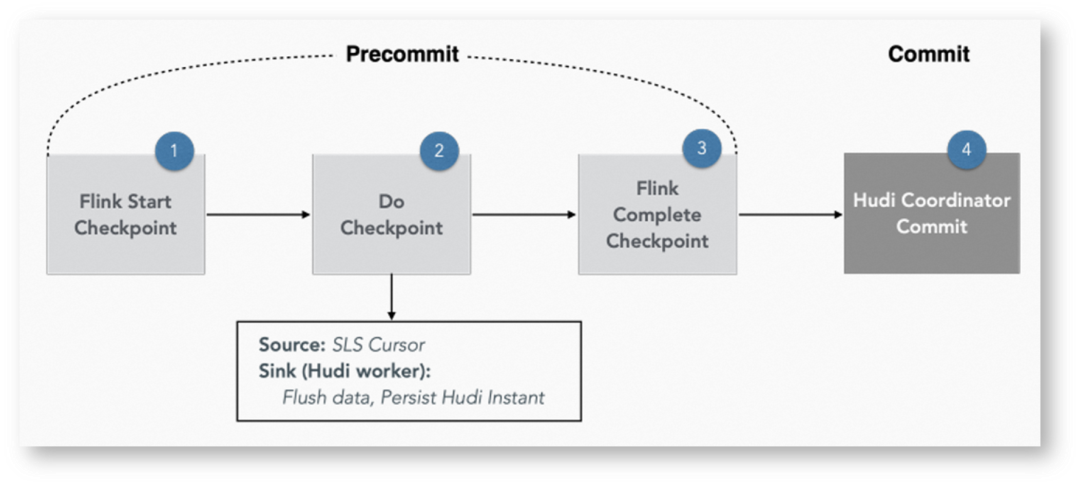

End to end, Flink and Hudi form another distributed system, which needs its own two-phase commit protocol to achieve Exactly-Once consistency. (We do not discuss the SLS side here because, in this scenario, Flink does not change any state in SLS and only uses its offset replay capability.) End-to-end Exactly-Once consistency is therefore ensured by two two-phase commits, one within Flink and one across Flink and Hudi, as shown in the following figure:

We do not describe Flink's two-phase checkpointing in detail. The following sections focus on the two-phase commit across Flink and Hudi: which steps form the precommit phase and which form the commit phase, and how to recover from faults so that Flink and Hudi end up in the same state. For example, if Flink has completed a checkpoint but Hudi has not yet committed, how do we restore a consistent state? The following sections answer this question.
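A minimal sketch of the two phases from the sink's point of view. The class is hypothetical and written in Python for brevity; its hooks loosely mirror Flink's Java `snapshotState` / `notifyCheckpointComplete` callbacks and the precommit/commit split of Flink's `TwoPhaseCommitSinkFunction`, but it is not the Hudi Flink writer. The gap between the two calls is exactly the window discussed below, where Flink has finished a checkpoint but the data is not yet committed.

```python
from typing import Dict, List


class TwoPhaseCommitSink:
    """Toy sink: records are staged per checkpoint and become visible only on commit."""

    def __init__(self) -> None:
        self.visible: List[str] = []             # committed data that readers can see
        self.pending: Dict[int, List[str]] = {}  # checkpoint id -> precommitted data
        self.current: List[str] = []             # open transaction for new records

    def invoke(self, record: str) -> None:
        self.current.append(record)              # write into the open transaction

    def snapshot_state(self, checkpoint_id: int) -> None:
        # Phase 1 (precommit): seal the open transaction under this checkpoint id.
        self.pending[checkpoint_id] = self.current
        self.current = []

    def notify_checkpoint_complete(self, checkpoint_id: int) -> None:
        # Phase 2 (commit): the checkpoint succeeded, so publish every sealed
        # transaction up to and including this id, oldest first.
        for cid in sorted(c for c in self.pending if c <= checkpoint_id):
            self.visible.extend(self.pending.pop(cid))

    def discard_open(self) -> None:
        # A failure before precommit simply drops the open transaction.
        self.current = []


sink = TwoPhaseCommitSink()
sink.invoke("x")
sink.invoke("y")
sink.snapshot_state(1)              # precommitted, not yet visible
print(sink.visible)                 # []
sink.notify_checkpoint_complete(1)  # committed
print(sink.visible)                 # ['x', 'y']
```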

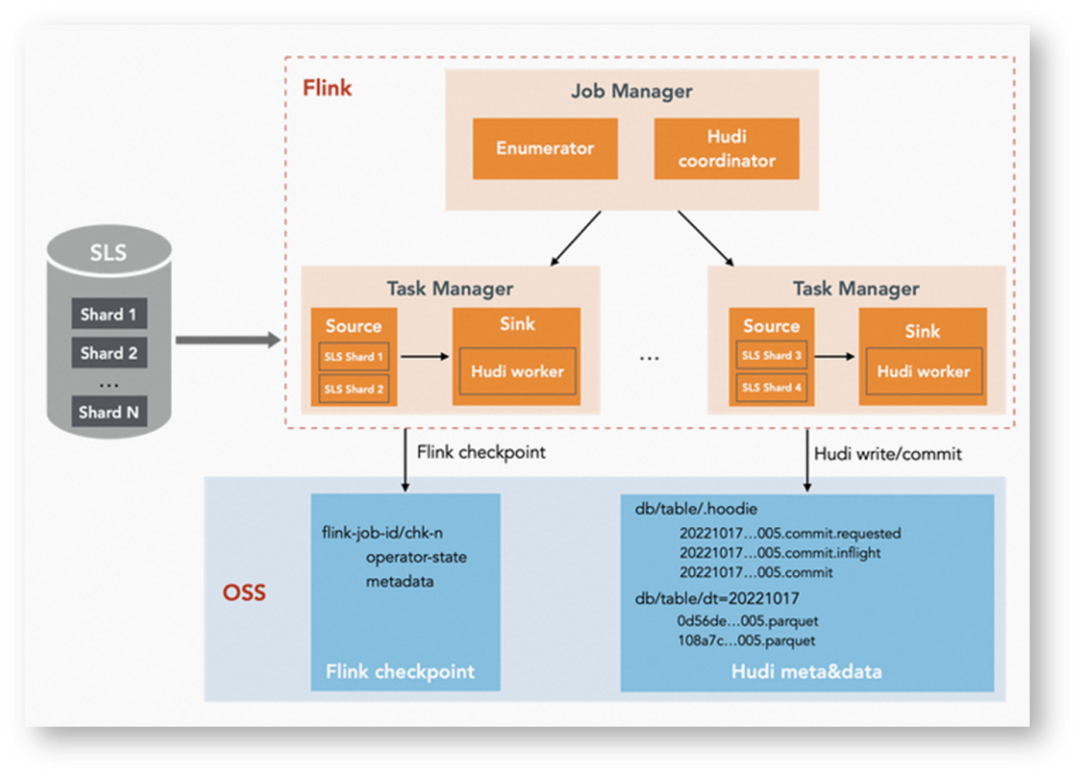

The following describes how Exactly-Once consistency is implemented for SLS ingestion into the lake. The overall architecture is shown below. Hudi components are deployed on the Flink JobManager and TaskManagers. Flink reads from SLS as the data source and writes to a Hudi table. Because SLS stores data in multiple shards, it is read in parallel by multiple Flink sources. After the data is read, the sink calls Hudi workers to write it to the Hudi table. The actual pipeline also includes logic such as repartitioning and hotspot dispersal, which the figure omits. Both the Flink checkpoint backend and the Hudi data are stored in OSS.

How to implement a source that consumes SLS data is well documented elsewhere, so we do not repeat it here. This section describes the two SLS consumption modes, Consumer Group Mode and General Consumption Mode, and how they differ.

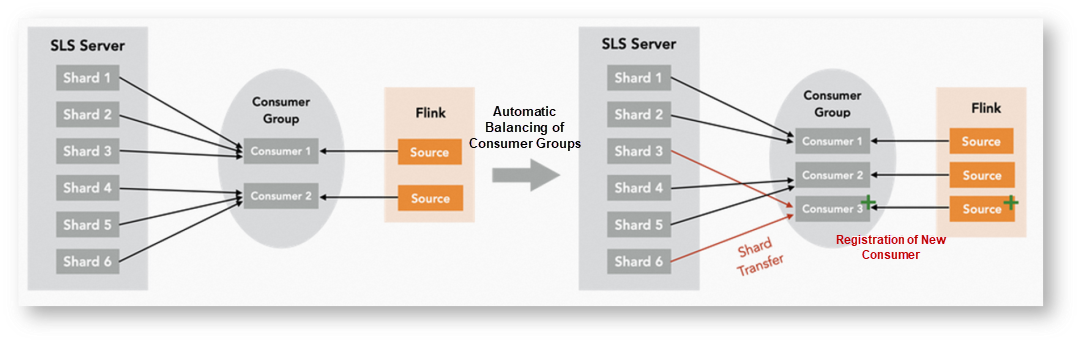

In Consumer Group Mode, as the name implies, multiple consumers register with the same consumer group, and SLS automatically assigns shards to them for reading. The benefit is that the SLS consumer group handles load balancing. In the left part of the following figure, two consumers are registered in the consumer group, so SLS distributes the six shards evenly between them. When a new consumer registers (right part of the figure), SLS automatically rebalances by migrating some shards from the existing consumers to the new one. This is called shard transfer.
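The figure's rebalance can be modeled with a toy function. This is a hypothetical illustration, not the balancing algorithm SLS actually uses: it only shows that adding a third consumer forces shards to move away from consumers that are already reading them. Each transferred shard's offset lives in the old source's checkpointed state, which is what makes transfers hard to reconcile with Exactly-Once.

```python
from typing import Dict, List, Tuple


def rebalance(
    assignment: Dict[str, List[int]], new_consumer: str
) -> Tuple[Dict[str, List[int]], List[Tuple[int, str, str]]]:
    """Add a consumer and move shards to it until it holds total // n shards.

    Returns the new assignment and the list of (shard, from, to) transfers.
    """
    result = {c: list(shards) for c, shards in assignment.items()}
    result[new_consumer] = []
    total = sum(len(s) for s in result.values())
    target = total // len(result)  # minimum share per consumer
    transfers: List[Tuple[int, str, str]] = []
    for owner in sorted(result):
        while len(result[owner]) > target and len(result[new_consumer]) < target:
            shard = result[owner].pop()  # take a shard away from an existing consumer
            result[new_consumer].append(shard)
            transfers.append((shard, owner, new_consumer))
    return result, transfers


before = {"consumer1": [1, 2, 3], "consumer2": [4, 5, 6]}
after, moved = rebalance(before, "consumer3")
print(after)  # {'consumer1': [1, 2], 'consumer2': [4, 5], 'consumer3': [3, 6]}
print(moved)  # [(3, 'consumer1', 'consumer3'), (6, 'consumer2', 'consumer3')]
```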

This mode balances load automatically and reassigns shards when SLS shards are split or merged, but it causes problems in our scenario. To ensure Exactly-Once consistency, we store the current consumer offset of each shard in Flink checkpoints, and during operation the source on each slot holds the current offsets of its shards. If a shard transfer occurs, how do we ensure that the source on the old slot stops consuming the shard and that its offset is handed over to the new slot at the same moment? This introduces a new consistency problem. In a large-scale system with hundreds of SLS shards and hundreds of Flink slots, some sources are very likely to register with SLS before others, so shard transfers are unavoidable.

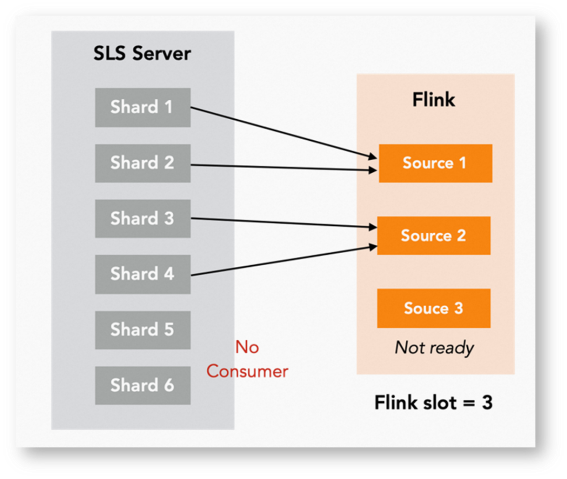

In General Consumption Mode, we call the SLS SDK to consume data from specified shards and offsets instead of letting the SLS consumer group allocate shards, so shard transfer does not occur. As shown in the following figure, Flink has three slots, so we can calculate that each consumer should read two shards and assign them accordingly. Even if Source 3 is not ready, Shard 5 and Shard 6 are not assigned to Source 1 or Source 2. Shards may still need to be migrated for load balancing (for example, when some TaskManagers are overloaded), but in that case we trigger the migration ourselves, so the state is more controllable and inconsistency is avoided.
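One way to compute such a fixed assignment is a pure function of the shard list and the source parallelism, so every source can derive its own shards independently. The sketch below is an assumption (contiguous blocks, 0-based subtask index, hypothetical name `assign_shards`); in a real Flink source each subtask would plug in its own index from Flink's runtime context and then call the SLS SDK only for its shards. With six shards and three sources it reproduces the figure: the third source (subtask 2) owns Shards 5 and 6 whether or not it has started.

```python
from typing import Dict, List


def assign_shards(shard_ids: List[int], parallelism: int) -> Dict[int, List[int]]:
    """Deterministically map shards to source subtasks (0-based index).

    The result depends only on the inputs, so a source that starts late still
    owns exactly its shards, and no other source picks them up in the meantime.
    """
    ordered = sorted(shard_ids)
    per_source, extra = divmod(len(ordered), parallelism)
    assignment: Dict[int, List[int]] = {}
    start = 0
    for subtask in range(parallelism):
        # The first `extra` subtasks take one additional shard when sizes do not divide evenly.
        count = per_source + (1 if subtask < extra else 0)
        assignment[subtask] = ordered[start:start + count]
        start += count
    return assignment


print(assign_shards([1, 2, 3, 4, 5, 6], 3))
# {0: [1, 2], 1: [3, 4], 2: [5, 6]}
```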

The following section introduces some concepts of Hudi commits and explains how Hudi works with Flink to implement two-phase commit and fault tolerance for Exactly-Once consistency.

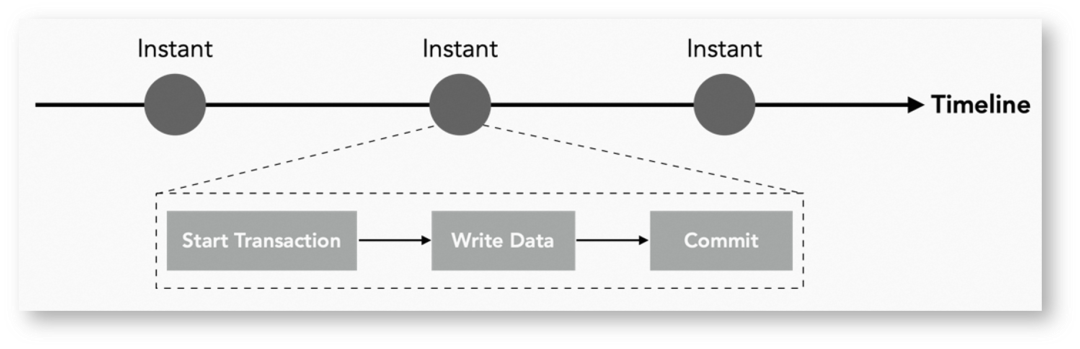

Hudi maintains a timeline made up of instants. An instant records an action performed on the table at a specific time, together with the state of that action, and can be understood as a data version. Actions include Commit, Rollback, and Clean, and Hudi guarantees their atomicity. An instant in Hudi is therefore similar to a transaction and a version in a database. In the figure, we use the database-like steps Start Transaction, Write Data, and Commit to express how an instant executes. Some of the actions have the following meanings.

There are three states of the instant:

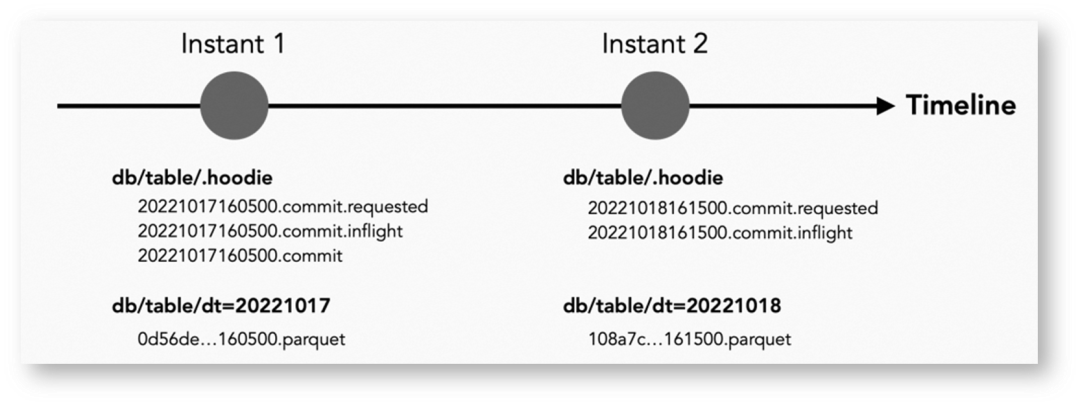

The time, action, and state of an instant are all recorded in metadata files. The following figure shows two successive instants on the timeline. For Instant 1, the metadata files in the .hoodie directory show that it started at 2022-10-17 16:05:00 with a Commit action, and the 20221017160500.commit file indicates that the commit has completed. The Parquet data files written by the instant appear in the table's partition directory. Instant 2, by contrast, started the next day, and its action has executed but has not yet been committed.
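Because the state is encoded in file names, a restart can tell which instant is still uncommitted just by listing `.hoodie`. The parser below assumes the pre-1.0 naming scheme (`<ts>.commit.requested`, `<ts>.inflight`, `<ts>.commit`; newer Hudi releases use a different layout), and the second instant's timestamp is hypothetical since the article does not give it. It is a sketch for illustration, not Hudi's own timeline API.

```python
from typing import Dict, Iterable, Optional, Tuple

RANK = {"REQUESTED": 0, "INFLIGHT": 1, "COMPLETED": 2}


def instant_states(filenames: Iterable[str]) -> Dict[str, Tuple[str, str]]:
    """Map each instant time to (action, state) using .hoodie file names.

    Assumed naming (pre-1.0 style):
      <ts>.<action>.requested -> REQUESTED
      <ts>.<action>.inflight  -> INFLIGHT (a plain commit uses just <ts>.inflight)
      <ts>.<action>           -> COMPLETED
    For each instant, the most advanced state found wins.
    """
    result: Dict[str, Tuple[str, str]] = {}
    for name in filenames:
        parts = name.split(".")
        ts = parts[0]
        if not ts.isdigit():
            continue  # e.g. hoodie.properties or auxiliary folders
        if len(parts) == 3 and parts[2] in ("requested", "inflight"):
            action, state = parts[1], parts[2].upper()
        elif len(parts) == 2 and parts[1] == "inflight":
            action, state = "commit", "INFLIGHT"
        elif len(parts) == 2:
            action, state = parts[1], "COMPLETED"
        else:
            continue  # file types this sketch does not model
        known = result.get(ts)
        if known is None or RANK[state] > RANK[known[1]]:
            result[ts] = (action, state)
    return result


def latest_uncommitted(filenames: Iterable[str]) -> Optional[str]:
    """Return the newest instant time that has not reached COMPLETED, if any."""
    states = instant_states(filenames)
    pending = [ts for ts, (_, state) in states.items() if state != "COMPLETED"]
    return max(pending) if pending else None


files = [
    "20221017160500.commit.requested",
    "20221017160500.inflight",
    "20221017160500.commit",            # Instant 1: completed
    "20221018093000.commit.requested",  # Instant 2 (hypothetical timestamp)
    "20221018093000.inflight",          # written but not committed
    "hoodie.properties",
]
print(latest_uncommitted(files))  # 20221018093000
```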

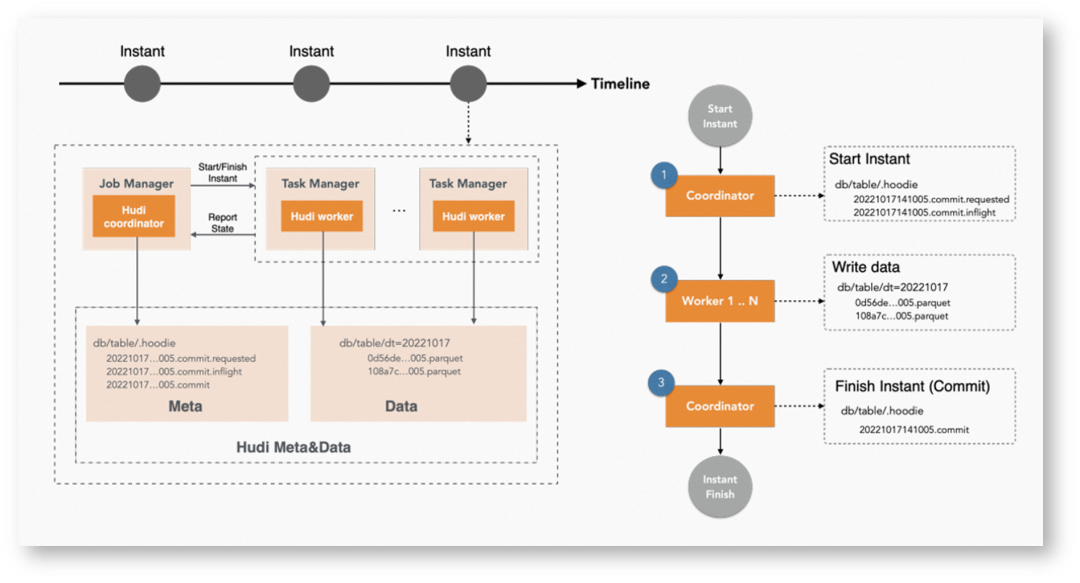

The following figure illustrates this process. Hudi has two roles: the coordinator initiates and commits instants, and the workers write data.

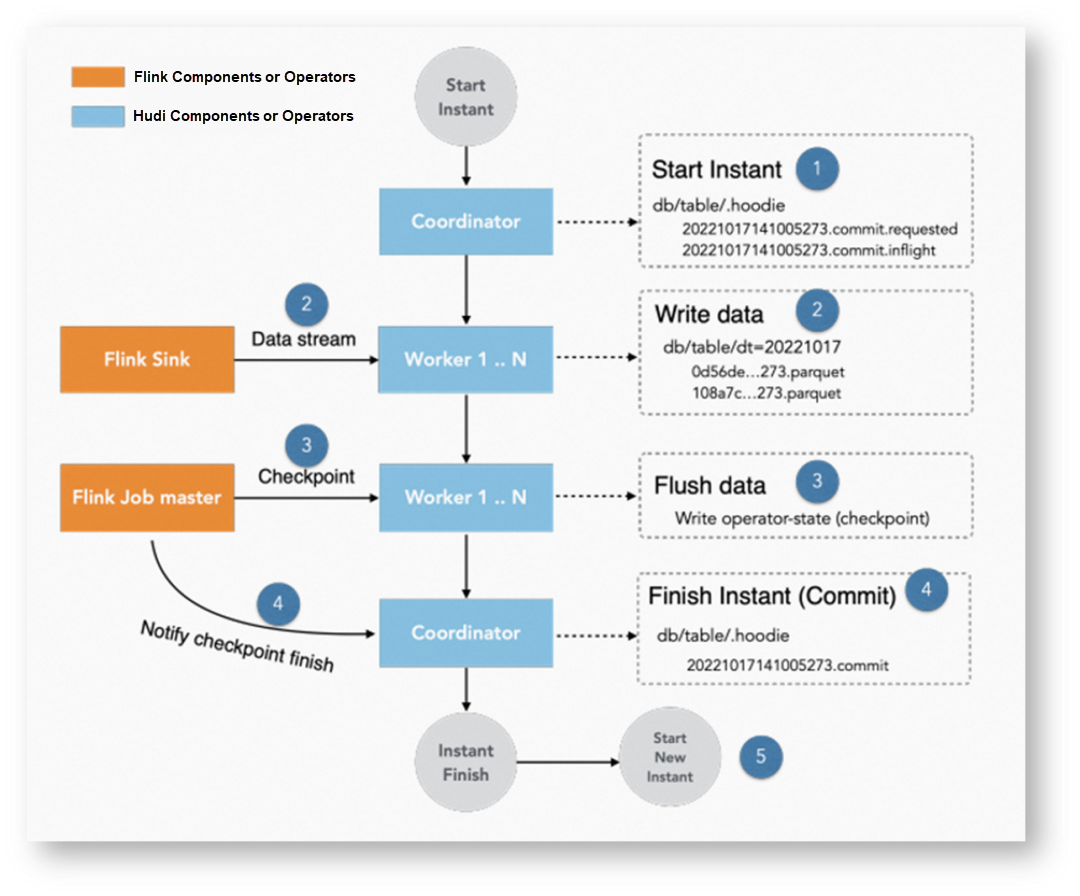

The following figure shows how Hudi works with Flink to write and commit data:

The actual commit process can be simplified to the steps shown in the figure. Steps 1 to 3 are Flink's checkpointing logic. If an exception occurs during these steps, the checkpoint fails, the job restarts, and it resumes from the previous checkpoint. This is equivalent to a failure in the precommit phase of a two-phase commit, and the transaction is rolled back. If an exception occurs between steps 3 and 4, however, the states of Flink and Hudi diverge: Flink considers the checkpoint complete, but Hudi has not committed. If this case is not handled, data is lost, because the SLS offsets were advanced when Flink completed the checkpoint while the corresponding data was never committed to Hudi. Fault tolerance therefore focuses on handling the inconsistency that arises in this window.

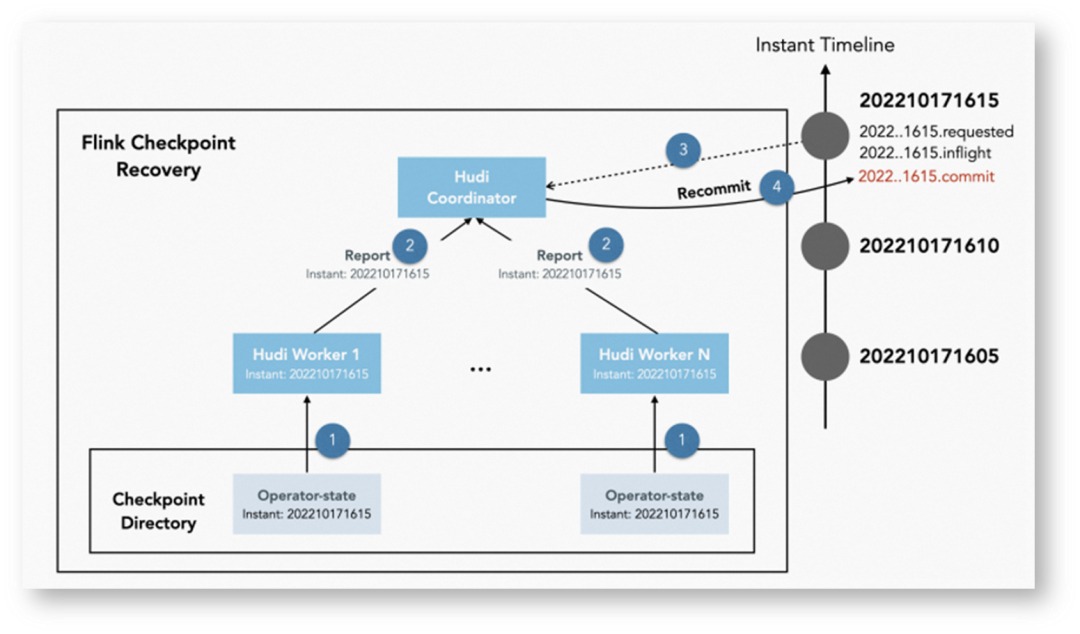

The solution is as follows: when the Flink job restarts and recovers from the checkpoint, if the latest Hudi instant contains uncommitted writes, those writes must be recommitted. The following figure shows the recommit procedure:
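The failure window and the recommit fix fit in one toy simulation. All names here are hypothetical and each "step" is reduced to one assignment: step 3 persists the SLS offset in the Flink checkpoint, step 4 commits the Hudi instant. Crashing between them without recommitting loses the first batch, because the restored offset already points past it; recommitting on restore keeps every record exactly once.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class HudiTable:
    committed: List[str] = field(default_factory=list)  # visible to queries
    inflight: Optional[List[str]] = None                 # written, not yet committed


def ingest(log: List[str], crash_before_commit: bool, recommit: bool) -> List[str]:
    """Ingest `log` in two batches; optionally crash between steps 3 and 4 of batch 1."""
    table = HudiTable()

    # Batch 1: workers write the first two records into a new instant.
    table.inflight = log[:2]
    checkpointed_offset = 2                   # step 3: Flink checkpoint stores the SLS offset
    if not crash_before_commit:
        table.committed += table.inflight     # step 4: Hudi commits the instant
        table.inflight = None

    # Restore: the job resumes from the checkpoint. If the latest instant is
    # still uncommitted, either recommit it or (without the fix) roll it back.
    if table.inflight is not None:
        if recommit:
            table.committed += table.inflight
        table.inflight = None

    # Batch 2: the source continues from the checkpointed SLS offset.
    table.committed += log[checkpointed_offset:]
    return table.committed


log = ["r1", "r2", "r3"]
print(ingest(log, crash_before_commit=True, recommit=False))  # ['r3']  (r1, r2 lost)
print(ingest(log, crash_before_commit=True, recommit=True))   # ['r1', 'r2', 'r3']
```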

After a restart, could some Hudi workers be on the latest instant while others are still on an older one? No. Flink checkpointing acts as the precommit phase of the two-phase commit. If the checkpoint succeeded, Hudi has precommitted and all workers are on the latest instant. If the checkpoint failed, the system falls back to the previous checkpoint on restart. Either way, all Hudi workers are in the same state.

During failover while ingesting data into the lake, the source resumes from the SLS offsets persisted in the checkpoint, so it does not replay data that has already been processed. This ensures that data is not duplicated. On the sink side, the two-phase commit and recommit mechanism implemented across Flink and Hudi ensures that data is not lost. Together, these achieve end-to-end Exactly-Once. In our measurements, the mechanism costs about 3% to 5% in performance, delivering high-throughput, real-time lake ingestion at minimal cost while guaranteeing Exactly-Once consistency. In a project that ingests massive volumes of logs into the lake, the tunnel runs stably with routine throughput of 3 GB/s and peaks of 5 GB/s. Combined with the integrated offline and online engine of AnalyticDB for MySQL Data Lakehouse Edition, the mechanism makes real-time data lakes and integrated offline and online analysis a reality.

Beyond Exactly-Once consistency, the tunnel faces many other challenges in achieving high-throughput writes and queries, such as automatic hotspot dispersal and small file merging, which will be covered in future articles.
