By Digoal.

Any service-oriented product will have its related service channels for the end users-or consumers-of such product. When it comes to analyzing service channels, these service channels are usually separated-and then later joined together for further analysis-a point we'll get into a bit later in this article.

But, first of all, you're probably wondering, by service channel, what exactly do we mean? Well, what we mean is any channel through which, or by which, the customer or end user can reach customer service. Among these, the typical ones are by phone, over the Internet, or in person.

Interestingly, all of these channels are now becoming increasingly automated with the use of algorithm- or robot-supported customer service systems. In fact, these are popping up in places that are traditionally "human" channels, including on the phone, in web chat boxes, and in person scenarios!

Of course, there's a lot of things to consider when it comes to service-oriented products and service channels-especially now when it comes to how to implemented automated ones and robot-supported ones nowadays. Well, to speak business speak, there's a lot of pivotal indicators, aka key performance indicators (or KPIs) that enterprises providing these services have to consider. For example, of these pivotal indicators, there's the service resolution rate, resolution duration bar chart, and one-time resolution rate of different dimensions, as well as problem classification and region classification.

No idea what these are? Well, don't feel lost! Let's get into at least one of them here-to clear things up. The term "one-time resolution rate" refers to the ratio of problems solved by a phone robot, robot-to-human service, or a human-to-robot service at any particular time-this is an important one for which we will go into more detail later in this article. Now say, if a problem is transferred multiple times, the one-time resolution rate will be low. This generally equates to poor customer service.

Pivotal analysis can be used as an important reference for service-providing enterprises and companies alike to provide and ensure customer service quality and improve the customer's overall experience.

What all goes into pivotal analysis? Well, consider the elements or data points that go into a pivotal analysis in an e-commerce scenario as an example. A pivotal analysis in this industry may involve the following things:

For these, for reference, the "one-time resolution rate" refers to the ratio of problems solved through a specific channel, such as with the phone robot, robot-to-human service, or the human-to-robot service at a time. If the problem is transferred multiple times, the one-time resolution rate will be low as a result.

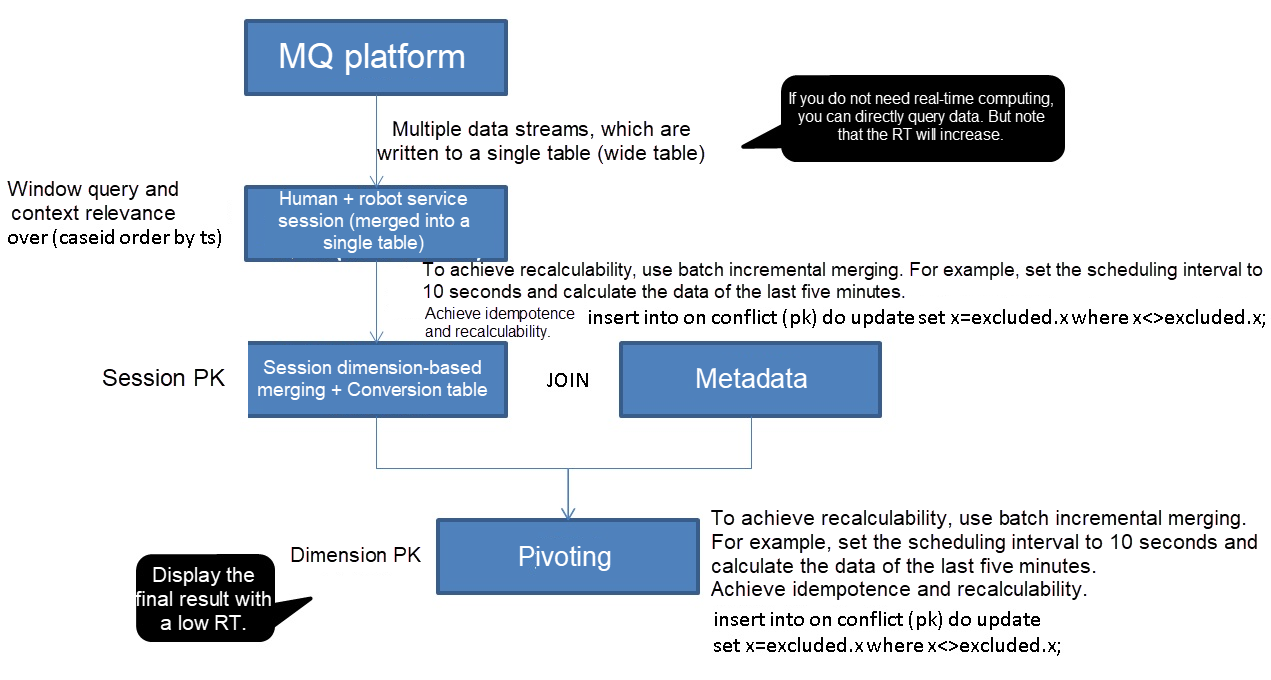

Now, all of this data has to go through the pivot analysis. To illustrate this clearer, below is a diagram to show the process of pivot analysis and specifically how all of these data points work with each other and how the entire system works.

In this system-as see below-there is a Message Queuing (MQ) platform from which multiple data streams are sent to both human and robot service sessions. Then, metadata along with the session dimension-based merging of table information is joined, and then sent to the pivoting to achieve re-calculability. At the pivoting stage, the final result is displayed.

Now, with all that cleared up, let's further consider this question: how can you implement real-time pivoting? Also, how easy is it to merge all of the channels together? Well, you can use the PostgreSQL functions (either synchronous or asynchronous batch consume with atomicity) and INSERT ON CONFLICT idempotent functions. We will do this below.

Now let's discuss the problem of arrival time issues related to having multiple data streams. But before we can get into that, we need to cover some bases.

First of all, usually, in a large company or enterprise, business is broken down between several different departments. This is true even with the relevant customer service system of any business in reality also. Typically, for instance, manual processing and telephone processing often belonging to two separate business lines.

However, data streams are often transferred through the Message Querying (MQ) platform, as the one we saw above. That is, for data streams of different business lines, the arrival times or consumption times may be different. In other words, if or when you choose to pivot customer service quality data, you need to use multiple data streams and thus will inevitably encounter this problem of different arrival times. For example, in the following case: The human service occurs at 09:00, and the robot service occurs at 09:01-which isn't good for what we want to do with the data.

However, as they belong to different data streams, their final arrival times are reversed. As a result, an error may occur when the one-time resolution rate is calculated. To eliminate this error, you need to perform recalculation on window retention so that the real-time statistical results can be adjusted and the increment can be recalculated. This can be implemented by the following statement:

Insert on conflict do update xx = exclude. xx where xx <> excluded. xxThe following demo considers a pivotal analysis for finding the one-time resolution rate-the pivotal indicator we discussed earlier-as an example. The pivotal analysis method used for one-time resolution rate is similar when other dimensions are used.

In this demo, we will develop and stress test our pivotal analysis.

To start out with the development of our pivotal analysis, for this demo, the data stream will be as follows:

Session streams (multiple tables) > Stream merging table > Conversion table > (Conversion table + Metadata table) pivoting result table Next, in this demo, we will be first looking at the session data streams:

create table tbl_robot (

caseid int8, -- Session ID

crt_time timestamp, -- Message time

message text, -- Interaction information

custom_id int8, -- Consumer ID

pro_id int, -- Problem ID

others text -- Other fields

); create table tbl_human (

caseid int8, -- Session ID

crt_time timestamp, -- Message time

message text, -- Interaction information

custom_id int8, -- Consumer ID

xiao2_id int8, -- Attendant ID

pro_id int, -- Problem ID

others text -- Other fields

); Next, there's the data stream merging table. You can use partition tables here to facilitate maintenance.

create table tbl_session (

caseid int8, -- Session ID

crt_time timestamp, -- Message time

message text, -- Interaction information

custom_id int8, -- Consumer ID

xiao2_id int8, -- Attendant ID

pro_id int, -- Problem ID

stream_id int, -- Stream ID. The value 1 indicates the robot service, and 2 indicates the human service.

others1 text, -- Other fields of stream 1

others2 text -- Other fields of stream 2

);

create index idx_tbl_session_1 on tbl_session (crt_time);

create index idx_tbl_session_2 on tbl_session (caseid, crt_time); Now, create a source rule to automatically merge session data streams into the data stream merging table. With this rule, the data is automatically merged to the merging table.

create or replace rule r1 as on insert to tbl_robot do instead

insert into tbl_session

(caseid, crt_time, message, custom_id, pro_id, others1, stream_id)

values (NEW.caseid, NEW.crt_time, NEW.message, NEW.custom_id, NEW.pro_id, NEW.others, 1);

create or replace rule r1 as on insert to tbl_human do instead

insert into tbl_session

(caseid, crt_time, message, custom_id, pro_id, others2, xiao2_id, stream_id)

values (NEW.caseid, NEW.crt_time, NEW.message, NEW.custom_id, NEW.pro_id, NEW.others, NEW.xiao2_id, 2); Now, it comes to the metadata table (which we won't discuss here). Then, after that, there's the Session status conversion table. This table can be recalculated in batches. Multiple sessions involve multiple records. The purpose of this particular demo is to find an answer from the robot after a human reply, so as to identify the human reply efficiency.

However, the robot reply efficiency can also be obtained:

create table tbl_session_etl (

caseid int8 primary key, -- Session ID

s_crt_time timestamp, -- Start time of the session

e_crt_time timestamp, -- Time of the last record of the session

robot_to_human boolean, -- Whether the robot-to-human service is included

human_to_robot boolean -- Whether the human-to-robot service is included

); The following session conversion SQL statement is used for scheduling and can be repeatedly executed. The window size can be adjusted to tolerate the differences in the arrival times of different data streams.

select caseid, max(s_crt_time) s_crt_time, max(e_crt_time) e_crt_time,

bool_or(lag=1 and stream_id=2) as robot_to_human,

bool_or(lag=2 and stream_id=1) as human_to_robot

from

(

select caseid, min(crt_time) over w1 as s_crt_time, max(crt_time) over w1 as e_crt_time,

(case when (row_number() over w1) = 1 then stream_id else lag(stream_id) over w1 end) as lag,

stream_id

from tbl_session

where crt_time > now() - interval '10 min' -- Session data within 10 minutes. You can adjust this window as desired.

window w1 as (partition by caseid order by crt_time)

) t

group by caseid; You'll want to merge and write data using the following SQL statement, which can be repeatedly executed. The window size can be adjusted to tolerate the differences in the arrival times of different data streams.

insert into tbl_session_etl (caseid, s_crt_time, e_crt_time, robot_to_human, human_to_robot)

select caseid, max(s_crt_time) s_crt_time, max(e_crt_time) e_crt_time,

bool_or(lag=1 and stream_id=2) as robot_to_human,

bool_or(lag=2 and stream_id=1) as human_to_robot

from

(

select caseid, min(crt_time) over w1 as s_crt_time, max(crt_time) over w1 as e_crt_time,

(case when (row_number() over w1) = 1 then stream_id else lag(stream_id) over w1 end) as lag,

stream_id

from tbl_session

where crt_time > now() - interval '10 min' -- Session data within 10 minutes. You can adjust this window as desired.

window w1 as (partition by caseid order by crt_time) -- Use the window function for query.

) t

group by caseid

on conflict (caseid)

do update set

s_crt_time = excluded.s_crt_time,

e_crt_time = excluded.e_crt_time,

robot_to_human = excluded.robot_to_human,

human_to_robot = excluded.human_to_robot

where -- When the values change after data conversion, the data is merged and written.

tbl_session_etl.s_crt_time<>excluded.s_crt_time

or

tbl_session_etl.e_crt_time<>excluded.e_crt_time

or

tbl_session_etl.robot_to_human<>excluded.robot_to_human

or

tbl_session_etl.human_to_robot<>excluded.human_to_robot

; Now, you'll want to create functions so that they can be called conveniently.

create or replace function f_tbl_session_etl(interval) returns void as

$$

insert into tbl_session_etl (caseid, s_crt_time, e_crt_time, robot_to_human, human_to_robot)

select caseid, max(s_crt_time) s_crt_time, max(e_crt_time) e_crt_time,

bool_or(lag=1 and stream_id=2) as robot_to_human,

bool_or(lag=2 and stream_id=1) as human_to_robot

from

(

select caseid, min(crt_time) over w1 as s_crt_time, max(crt_time) over w1 as e_crt_time,

(case when (row_number() over w1) = 1 then stream_id else lag(stream_id) over w1 end) as lag,

stream_id

from tbl_session

where crt_time > now() - $1 -- Session data within n minutes. You can adjust this window as desired.

window w1 as (partition by caseid order by crt_time) -- Use the window function for query.

) t

group by caseid

on conflict (caseid)

do update set

s_crt_time = excluded.s_crt_time,

e_crt_time = excluded.e_crt_time,

robot_to_human = excluded.robot_to_human,

human_to_robot = excluded.human_to_robot

where -- When the values change after data conversion, the data is merged and written.

tbl_session_etl.s_crt_time<>excluded.s_crt_time

or

tbl_session_etl.e_crt_time<>excluded.e_crt_time

or

tbl_session_etl.robot_to_human<>excluded.robot_to_human

or

tbl_session_etl.human_to_robot<>excluded.human_to_robot

;

$$

language sql strict; The following provides the scheduling method, which can complete corrections automatically:

Next, there's the Session statistical table, which is used to calculate the one-time resolution rate. This table is optional. If you don't need the statistics, you can query data directly.

There's the Day-dimension table:

create table tbl_session_stat_day (

stat_dim text primary key,

robot_to_human_cnt int8,

human_to_robot_cnt int8

); Also, the Minute-dimension table:

create table tbl_session_stat_min (

stat_dim text primary key,

robot_to_human_cnt int8,

human_to_robot_cnt int8

); This is the SQL statement for statistical scheduling, which can be executed repeatedly. Next, here's the Day-dimension:

select to_char(s_crt_time, 'yyyymmdd') as stat_dim,

sum(case when robot_to_human then 1 else 0 end) robot_to_human_cnt,

sum(case when human_to_robot then 1 else 0 end) human_to_robot_cnt

from tbl_session_etl

group by 1; And the Minute-dimension:

select to_char(s_crt_time, 'yyyymmddhh24mi') as stat_dim,

sum(case when robot_to_human then 1 else 0 end) robot_to_human_cnt,

sum(case when human_to_robot then 1 else 0 end) human_to_robot_cnt

from tbl_session_etl

group by 1; The following is the SQL statements for writing and merging data, which can be repeatedly executed:

insert into tbl_session_stat_day

select to_char(s_crt_time, 'yyyymmdd') as stat_dim,

sum(case when robot_to_human then 1 else 0 end) robot_to_human_cnt,

sum(case when human_to_robot then 1 else 0 end) human_to_robot_cnt

from tbl_session_etl

group by 1

on conflict (stat_dim) do update

set

robot_to_human_cnt = excluded.robot_to_human_cnt,

human_to_robot_cnt = excluded.human_to_robot_cnt

where

tbl_session_stat_day.robot_to_human_cnt <> excluded.robot_to_human_cnt

or

tbl_session_stat_day.human_to_robot_cnt <> excluded.human_to_robot_cnt

;

insert into tbl_session_stat_min

select to_char(s_crt_time, 'yyyymmddhh24mi') as stat_dim,

sum(case when robot_to_human then 1 else 0 end) robot_to_human_cnt,

sum(case when human_to_robot then 1 else 0 end) human_to_robot_cnt

from tbl_session_etl

group by 1

on conflict (stat_dim) do update

set

robot_to_human_cnt = excluded.robot_to_human_cnt,

human_to_robot_cnt = excluded.human_to_robot_cnt

where

tbl_session_stat_min.robot_to_human_cnt <> excluded.robot_to_human_cnt

or

tbl_session_stat_min.human_to_robot_cnt <> excluded.human_to_robot_cnt

; Now you can create functions so that they can be called conveniently:

create or replace function f_tbl_session_stat_day() returns void as

$$

insert into tbl_session_stat_day

select to_char(s_crt_time, 'yyyymmdd') as stat_dim,

sum(case when robot_to_human then 1 else 0 end) robot_to_human_cnt,

sum(case when human_to_robot then 1 else 0 end) human_to_robot_cnt

from tbl_session_etl

group by 1

on conflict (stat_dim) do update

set

robot_to_human_cnt = excluded.robot_to_human_cnt,

human_to_robot_cnt = excluded.human_to_robot_cnt

where

tbl_session_stat_day.robot_to_human_cnt <> excluded.robot_to_human_cnt

or

tbl_session_stat_day.human_to_robot_cnt <> excluded.human_to_robot_cnt

;

$$

language sql strict;

create or replace function f_tbl_session_stat_min() returns void as

$$

insert into tbl_session_stat_min

select to_char(s_crt_time, 'yyyymmddhh24mi') as stat_dim,

sum(case when robot_to_human then 1 else 0 end) robot_to_human_cnt,

sum(case when human_to_robot then 1 else 0 end) human_to_robot_cnt

from tbl_session_etl

group by 1

on conflict (stat_dim) do update

set

robot_to_human_cnt = excluded.robot_to_human_cnt,

human_to_robot_cnt = excluded.human_to_robot_cnt

where

tbl_session_stat_min.robot_to_human_cnt <> excluded.robot_to_human_cnt

or

tbl_session_stat_min.human_to_robot_cnt <> excluded.human_to_robot_cnt

;

$$

language sql strict; Now that our demo is developed, let's go on to stress test performance. First, let's look at this highly concurrent writing of session information:

vi test.sql

\set caseid1 random(1,1000000)

\set caseid2 random(1,1000000)

\set custom_id1 random(1,100000)

\set pro_id1 random(1,1000)

\set custom_id2 random(1,100000)

\set pro_id2 random(1,1000)

\set xiao2_id random(1,100)

insert into tbl_robot values (:caseid1, now(), 'test', :custom_id1, :pro_id1, 'test');

insert into tbl_human values (:caseid2, now(), 'test', :custom_id2, :xiao2_id, :pro_id2, 'test');

\sleep 500 us

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 32 -j 32 -T 120 Write session information one record at a time, at about 0.176 million rows per second. If the session information is written in bulk, the speed can reach over one million rows per second.

transaction type: ./test.sql

scaling factor: 1

query mode: prepared

number of clients: 32

number of threads: 32

duration: 120 s

number of transactions actually processed: 10655120

latency average = 0.360 ms

latency stddev = 0.466 ms

tps = 88792.101825 (including connections establishing)

tps = 88804.892722 (excluding connections establishing)

script statistics:

- statement latencies in milliseconds:

0.001 \set caseid1 random(1,1000000)

0.001 \set caseid2 random(1,1000000)

0.000 \set custom_id1 random(1,100000)

0.000 \set pro_id1 random(1,1000)

0.000 \set custom_id2 random(1,100000)

0.000 \set pro_id2 random(1,1000)

0.000 \set xiao2_id random(1,100)

0.178 insert into tbl_robot values (:caseid1, now(), 'test', :custom_id1, :pro_id1, 'test');

0.178 insert into tbl_human values (:caseid2, now(), 'test', :custom_id2, :xiao2_id, :pro_id2, 'test'); Next, there's the step of Real-time conversion scheduling. For this, you'll want to enable concurrent writing at 0.142 million rows per second.

psql

select f_tbl_session_etl(interval '5 sec');

\watch 1

Sat 09 Dec 2017 07:05:42 PM CST (every 1s)

f_tbl_session_etl

-------------------

(1 row)

Time: 4515.817 ms (00:04.516) It takes 4.5 seconds to process the most recent 0.71 million rows. The processing speed is about 0.157 million rows per second.

Next, there's Real-time statistical scheduling:

postgres=# select f_tbl_session_stat_day();

f_tbl_session_stat_day

------------------------

(1 row)

Time: 926.839 ms

postgres=# select f_tbl_session_stat_min();

f_tbl_session_stat_min

------------------------

(1 row)

Time: 1162.713 ms (00:01.163) And then there's the matter of the Data volume, which is 0.179 billion rows:

postgres=# select count(*) from tbl_session;

count

-----------

179639156

(1 row)

Time: 1635.908 ms (00:01.636)

postgres=# select count(*) from tbl_session_etl;

count

---------

1000000

(1 row)

Time: 47.540 ms And the Performance indicators:

| Concurrency | Write throughput | Write latency |

| 32 | 0.176 million rows per second | 0.178 ms |

If the writing of 0.179 billion rows is distributed throughout the day, the response speed will be faster.

| Concurrency | Conversion throughput | Conversion latency |

| 1 | 0.157 million rows per second | 1s |

| Concurrency | Statistics throughput | Statistics latency |

| 1 | 1,000,000 rows | 1s |

Statistics query performance, with a latency of several milliseconds:

postgres=# select * from tbl_session_stat_day ;

stat_dim | robot_to_human_cnt | human_to_robot_cnt

----------+--------------------+--------------------

20171209 | 80160 | 80453

(1 row)

Time: 6.476 ms

postgres=# select * from tbl_session_stat_min;

stat_dim | robot_to_human_cnt | human_to_robot_cnt

--------------+--------------------+--------------------

201712091758 | 56558 | 56531

201712091800 | 4 | 4

201712091759 | 509 | 501

201712091757 | 236638 | 236657

201712091802 | 7273 | 7177

201712091817 | 8336 | 8358

201712091812 | 0 | 0

201712091814 | 12 | 8

201712091815 | 127 | 144

201712091813 | 1 | 1

201712091816 | 1688 | 1761

201712091905 | 56645 | 57046

201712091904 | 411 | 391

201712091906 | 23104 | 23015

201712091902 | 0 | 1

(15 rows)

Time: 6.695 ms The scenario presented in this article can be summarized as follows: Data in comes from multiple data streams-as a result of being in different service channels-and these data streams may arrive at different times. Given this, the objective typical, as was for our demo in this article, is to perform real-time pivot computing based on the context relevance of multiple data streams.

The data stream merging and window retention methods were used in our demo. Some conclusions we can arrive at after conducting our little pivotal analysis is that these methods must support recalculation and overwriting. The rule is used to merge data streams. The configurable window and INSERT ON CONFLICT are used for window retention and recalculation.

Nick Patrocky - March 4, 2024

Alex - July 9, 2020

Alibaba Clouder - July 10, 2018

Rupal_Click2Cloud - November 15, 2023

Alibaba Cloud Community - February 11, 2022

Nick Patrocky - February 17, 2024

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn More DevOps Solution

DevOps Solution

Accelerate software development and delivery by integrating DevOps with the cloud

Learn MoreMore Posts by digoal