Docker is a platform that has changed how continuous delivery is done. Docker allows you to package an application with all of its dependencies into a standardized unit for software development, so that the software runs the same way regardless of its environment. The first part of this series outlined the overview, challenges, and environment and build requirements for using Docker. This post explores specific methods for using Docker and the delivery process built around them.

The Docker runtime environment has only one requirement: the Linux kernel must be version 3.10 or later. Docker Toolbox provides a convenient way to install and set up a Docker environment on your machine, regardless of the underlying OS. It also supports a variety of cloud servers, including Alibaba Cloud's Elastic Compute Service (ECS) servers.
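As a rough illustration of the kernel requirement, the following Python sketch checks a kernel release string against version 3.10. The function name `kernel_supported` is hypothetical, and treating 3.10 itself as acceptable is an assumption of this sketch.

```python
import platform
import re

MIN_KERNEL = (3, 10)  # minimum Linux kernel version named in the text


def kernel_supported(release: str, minimum: tuple = MIN_KERNEL) -> bool:
    """Return True if a release string such as '3.10.0-957.el7' meets the minimum."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    # Tuples compare element by element, so (4, 2) >= (3, 10) is True.
    return (major, minor) >= minimum


if __name__ == "__main__":
    # platform.release() returns the running kernel's release string on Linux.
    print(kernel_supported(platform.release()))
```

On a non-Linux host, `platform.release()` reports that OS's own version, so the check is only meaningful on the Linux machine that will run Docker.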

O&M personnel need to list all items originally required for building an environment and describe them in the Dockerfile. A Dockerfile is not a programming language, only a declarative description language. Every Dockerfile must begin with a FROM instruction that names the base environment. Once the base is defined, each of the remaining instructions has a specific role: RUN executes commands, ENV sets environment variables, and ADD, EXPOSE, and CMD add files, declare ports, and set the default command.

Once a standard Java environment image exists, you can use it directly by adding your jar to it.
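To make the instructions named above concrete, here is a minimal Python sketch that renders a Dockerfile for a jar on top of a Java base image. The helper `render_dockerfile`, the base image `eclipse-temurin:17-jre`, the jar name, the port, and the `/app` path are all assumptions chosen for illustration, not values from the original setup.

```python
def render_dockerfile(base_image, jar_name, port, env=None):
    """Build Dockerfile text that runs one jar on top of a Java base image."""
    lines = [f"FROM {base_image}"]           # FROM must come first: the base environment
    for key, value in (env or {}).items():
        lines.append(f"ENV {key}={value}")   # ENV sets environment variables
    lines.append("RUN mkdir -p /app")        # RUN executes a command at build time
    lines.append(f"ADD {jar_name} /app/{jar_name}")  # ADD copies the jar into the image
    lines.append(f"EXPOSE {port}")           # EXPOSE documents the listening port
    lines.append(f'CMD ["java", "-jar", "/app/{jar_name}"]')  # default start command
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # All values below are illustrative placeholders.
    print(render_dockerfile("eclipse-temurin:17-jre", "service.jar", 8080,
                            env={"JAVA_OPTS": "-Xmx256m"}))
```

Because only the jar name and port change, the same base image can be shared by every service that needs nothing beyond the Java runtime.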

If services are split into microservices (each service being a jar rather than a war package), then all the microservices should share a common Docker image. API services written in many languages follow the same pattern: in most cases they need no extra dependencies, only the runtime environment of the language.

Official Docker images are available for many environments. You can reference an official image directly in FROM and add your jar to it. Further, some open source software, such as GitLab, can be run directly once its image has been downloaded. With Docker, it also becomes much easier to set up a range of open source tool systems.

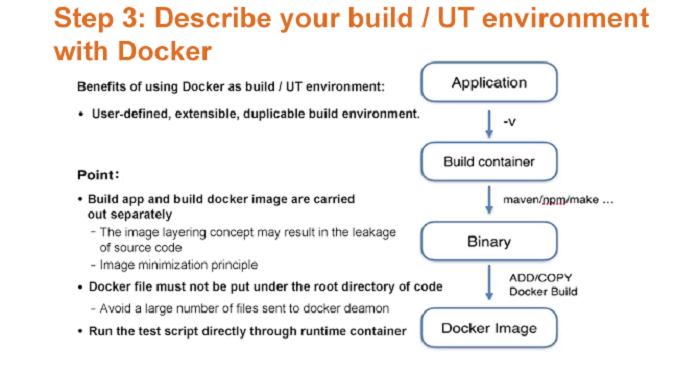

When an application is built together with its Docker environment, the application code must be copied into the Docker environment. However, if the code is compiled inside the Docker environment during the image build, the source code has to be removed afterwards. Because Docker images are layered, any .java files copied in through the Dockerfile to build a jar remain in an earlier layer even if a later step deletes them, so the source code stays accessible. Besides this, source code is undesirable in a production environment where the Docker container runs.

Based on the minimal Docker image principle (installing only what is essential), a Java + Node.js runtime environment does not need a tool such as ping once it runs in production; such tools are only needed for debugging during O&M. Compilation may depend heavily on the build environment, but running in production does not require those dependencies. Therefore, we recommend separating the environments and creating the build environment with Docker as well.

A build environment is used only for building, while a testing environment is used only for running. Building with a dedicated environment follows this simple principle:

Mount the source code with the -v option into a container called "build", where the toolchain for your language produces build outputs such as binaries and dependency packages; then add these files through the Dockerfile in the Docker build. These two build steps produce a Docker image that can run.

What runs in the final image is not the source code but the binary created in the first build step and added through the Dockerfile.
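The two build steps can be sketched as a pair of Docker CLI invocations assembled in Python. The function names, the `/src` mount point, the build image, and the Maven command are hypothetical; the sketch also assumes a Dockerfile in the source directory that adds the built artifact.

```python
import os
import subprocess


def build_step_commands(src_dir, build_image, build_cmd, image_tag):
    """Return the argv lists for the compile step and the image build step."""
    src = os.path.abspath(src_dir)  # -v needs an absolute host path
    compile_step = [
        "docker", "run", "--rm", "--name", "build",
        "-v", f"{src}:/src",   # mount the source code into the build container
        "-w", "/src",          # run the build command from the mounted directory
        build_image, *build_cmd,
    ]
    # Step two: build the runtime image from the Dockerfile in the source directory.
    image_step = ["docker", "build", "-t", image_tag, src]
    return [compile_step, image_step]


def run_steps(steps):
    """Execute each step in order, stopping at the first failure."""
    for argv in steps:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Placeholder values; printing instead of running keeps the sketch side-effect free.
    for argv in build_step_commands(".", "maven:3-eclipse-temurin-17",
                                    ["mvn", "-q", "package"], "myapp:1.0"):
        print(" ".join(argv))
```

Because the build output lands in the mounted host directory, the compiler and the source tree never become layers of the runtime image.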

After building an image, you can start it with different scripts, such as a production script to run in production or a testing script to run a test. You can also use Docker to run integration tests. For a Dockerized application, running the container with a test command executes the testing process, whether or not the test involves a database. Anything that can be scripted for testing can be run with Docker.

An application rarely runs from its own image alone; it usually also depends on a database or on another application. For example, a database is necessary when performing data testing.

Docker also offers a tool called Docker Compose, which can manage multiple Docker images. Refer to the following steps to use Docker Compose:

When an application depends on a database, you can declare a link to it, for example a link to a service named "db". With the Docker Compose command you can run a group of Docker containers together, and you describe the dependencies among them in the Compose .yml file.

To test a web application, you do not need to write the address of the MySQL server; the name "mysql" is enough, because it refers to the MySQL service described in the Compose file. You then access the database by supplying the password. Redis only provides the cache service, so you only need to name it and provide the port.
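A Compose description along these lines could look like the output of the Python sketch below. It uses `depends_on` rather than a link entry, which is a substitution made here; the image tags, the `DB_*` and `REDIS_*` variable names, and the password are hypothetical, while `MYSQL_ROOT_PASSWORD` is the variable read by the official MySQL image. The tiny `to_yaml` emitter is written for this example only and handles just nested dicts and lists of scalars.

```python
import json


def to_yaml(node, indent=0):
    """Render nested dicts and lists of scalars as YAML lines (tiny emitter)."""
    pad = "  " * indent
    lines = []
    if isinstance(node, dict):
        for key, value in node.items():
            if isinstance(value, (dict, list)):
                lines.append(f"{pad}{key}:")
                lines.extend(to_yaml(value, indent + 1))
            else:
                # Double-quote every scalar so values like "6379" stay strings.
                lines.append(f"{pad}{key}: {json.dumps(str(value))}")
    else:
        for item in node:
            lines.append(f"{pad}- {json.dumps(str(item))}")
    return lines


compose = {
    "services": {
        "web": {
            "image": "myapp:1.0",                # hypothetical application image
            "depends_on": ["mysql", "redis"],    # start the dependencies first
            "environment": {
                "DB_HOST": "mysql",              # service name, not an IP address
                "DB_PASSWORD": "example",
                "REDIS_HOST": "redis",
                "REDIS_PORT": "6379",
            },
        },
        "mysql": {
            "image": "mysql:8.0",
            "environment": {"MYSQL_ROOT_PASSWORD": "example"},
        },
        "redis": {"image": "redis:7"},
    }
}

if __name__ == "__main__":
    print("\n".join(to_yaml(compose)))
```

The web service reaches the database through the host name `mysql` because Compose puts the services of one file on a shared network where service names resolve, so no address needs to be hard-coded.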

When a production environment runs, data is stored on a cloud server, regardless of the implementation. Whether you use NAS or Alibaba Cloud Object Storage Service (OSS), you can mount the storage as a directory under a local path instead of uploading the data to a cloud storage device through an SDK or API.

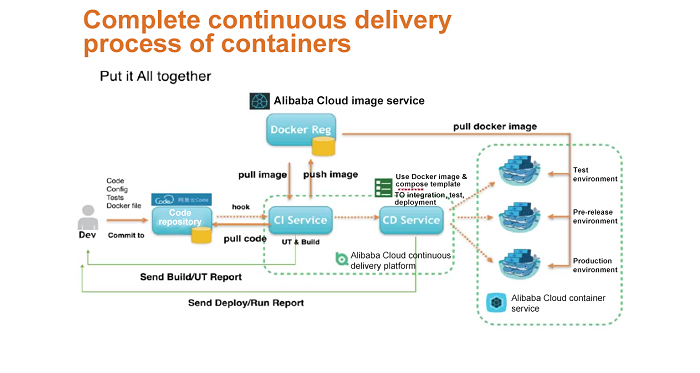

In addition to code, configuration, and test scripts, developers also need to write the Dockerfile. When the code is pushed to a code repository, the CI service is notified of the new commit through a repository hook. It then pulls the code to build it and run unit tests (UT). During the build process, it pulls the image on which the build depends from the Docker Registry; once the build is complete, it pushes the resulting image to the Docker Registry. CI then uses a hook to inform CD, and the Deploy Service deploys the image to a pretest, test, or production environment based on the Docker image description and the Compose description.
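The flow above can be modeled as a small in-memory simulation in Python. Nothing here talks to a real CI service or registry: the `Registry` class, the `on_commit` and `deploy` functions, and the `myapp:<commit>` tag scheme are hypothetical stand-ins for the hook, build, push, and deploy stages.

```python
from dataclasses import dataclass, field


@dataclass
class Registry:
    """In-memory stand-in for a Docker Registry."""
    images: set = field(default_factory=set)

    def push(self, tag):
        self.images.add(tag)

    def pull(self, tag):
        if tag not in self.images:
            raise LookupError(f"image not found: {tag}")
        return tag


def on_commit(commit_id, registry, base_image, run_unit_tests):
    """CI stage, triggered by the repository hook for a new commit."""
    registry.pull(base_image)              # pull the image the build depends on
    if not run_unit_tests(commit_id):      # build and UT; stop on failure
        return None
    tag = f"myapp:{commit_id}"
    registry.push(tag)                     # publish the built image
    return tag                             # handed to CD by the CI hook


def deploy(tag, registry, environment, deployed):
    """CD stage: pull the already built image and run it in the target environment."""
    deployed[environment] = registry.pull(tag)


if __name__ == "__main__":
    registry = Registry({"eclipse-temurin:17-jre"})
    deployed = {}
    tag = on_commit("abc123", registry, "eclipse-temurin:17-jre", lambda c: True)
    if tag is not None:
        deploy(tag, registry, "test", deployed)
    print(deployed)
```

The deploy stage never builds anything; it only pulls a tag that CI has already pushed, which is what keeps deployment simple.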

The whole deployment process consists of pulling an already built image from the Registry and running it. Since the most complicated content lives in the image and the Compose files, the process itself stays simple.

1. What does Docker delivery bring?

It makes development clearer and more flexible for developers. Previously, the concern was Java dependencies, and it was quite hard to build a Java environment locally. Now that the concern has shifted to the dependencies of the whole environment, building a Java environment becomes much easier. With this approach, O&M personnel no longer need to change their schedules because of software dependencies, and do not have to constantly change the configuration. What can Docker be applied to? Every project and every environment, for everyone. It is also the most effective implementation of DevOps. With Docker, you can apply programming to operations and manage environment operations using development practices.

2. What scenarios should be Dockerized?

There is no complete definition yet. However, my understanding is that containers bring the greatest benefit to workloads with short life cycles and no state. For example, for web applications such as a REST API, or for CI/CD, you can pull the whole Docker container to run during a test and then discard it after the test is complete to free up resources. For task-style workloads, you can save on resources by using Docker in the same way.

Databases, especially those used for storage, are the most difficult to Dockerize and may not benefit directly from containers. The same applies to servers that carry a much heavier load, such as file storage. This does not mean that Dockerizing them is impossible, only that it may be of little significance.

Changing the Way of Continuous Delivery with Docker (Part 1)

Alibaba Clouder - August 31, 2017
