Recommendation, search, and ad placement are all core tasks for providing internet content and data distribution to businesses. They are also a classic use case of big data and machine learning technologies. In practice, because of the strict requirements of online business on performance and response time, we will always break the above processes into two phases, Matching and Ranking.

Taking Taobao's recommendation system as an example, the core of the matching phase is to recall the appropriate TopK candidates from a pool of products according to the user's information. The ranking phase then aims to take the TopK candidates and separate and rank them according to finer detailed information, and finally display them to the user.

Because the candidate pool is much smaller, we can introduce complicated models like deep learning to optimize the final result of the ranking phase. In the matching phase, because the scope of the issue is quite large, applying a complex model to this phase is quite difficult. Research on techniques in this phase, particularly deep learning is still in the developmental stage.

The matching phase has always faced many technical challenges in commercial recommendation systems. For example, when the candidate pool is quite large, we need to select a TopK group from the pool, but we cannot accept the linear increase in time complexity brought about by increases in candidate number. Many solutions developed in academia are not useful in practical recommendation systems due to their need to compute the entirety of the data {User, Item}. Under the conditions of limited computing resources, the work of selecting a group of high-quality TopK candidates from the entire group of candidates needs to balance computing efficiency and correctness precisely.

A recommendation system in a practical application requires that the computing time allotted to the matching phase be limited according to the below formula:

T represents the amount of time required of a single computation, while N is the number of times a user has to callback all of the TopK candidates. Under the constraints of the above formula and the issue of improving matching results, matching algorithms have gone through several generations of evolution, from the statistically based heuristic rule method to the vector search method based on the internal product model.

However, regarding technical selection, these methods have all made significant contributions to the task of fitting the matching stage to the above mentioned computational efficiency constraints. Finding more advanced complex deep learning models that meet the computational efficiency constraints of the matching stage has become a primary developmental direction for next-generation matching systems.

As described above, matching technology has gone through an evolution from the statistically based heuristic rule method to the vector search method based on the internal product method.

A classic example of this type of method is the Item-based Collaborative Filtering method (referred to below as Item-CF), which is also the method that finds widespread application in the industrial world.

This method is effective in controlling the total number of computations N because the Trigger Item group belonging to the user is limited. Similarly delineated candidate groups are also limited; therefore we can avoid computing the entire group of candidates and simultaneously use scoring rules to effectively control the time consumed by a single computation T. Computing time for this method is quite short and completely satisfies the requirements of a real-time application.

However, this method has a natural downside; the method limits the ability to provide a user with recommendations that has not been exhibited but that the user may be interested in.

In theory, this kind of model-based matching seeks to balance the user's interest toward all products and select the most suitable to act as recommendation results. This introduces a problem: it is almost impossible to compute scenarios with large pools of candidate products.

How do we dissolve this issue? Researchers have brought forward the idea of using vector distances to balance the user and degrees of interest. Herein, we represent the user and the products in the form of a vector which serves as the foundation for constructing an index structure based on vector clustering to speed up balancing.

The inner product model and vector engine have become the most important technologies in the field of matching solutions in recent history. However, the problem is that this type of methodology does not fully utilize machine learning to solve the issue of matching as the limitations on machine learning models are enormous. We cannot divide most higher-order deep learning methods into inner-product forms. For example, in CTR estimation, crossovers between the user and product features are incredibly useful, but we cannot represent most of them by inner products.

The core to the development of matching technology is to increase the accuracy of interest balancing as much as possible within the system performance constraints (from rule algorithm to introducing cutting-edge models) and range of coverage (from a limited candidate pool to the entire body of candidates). Because of this, we have attempted researching the next generation of matching and recommendation technology. We have independently brought forth a more general matching and recommendation algorithm framework that allows us to accommodate any advanced model and unlimited inner product form, as well as perform improved matching on the entire body of candidates.

Whether in a public dataset or Alibaba dataset, the recall rate and reason-ability proportion of the new method is leaps and bounds ahead of those of the previous two generations of technology. Our method expands from the issues posed by recommendation systems, which are the same scenarios under which we have conducted our experiments. It is worth mentioning that the issue of matching is itself the core issue to the recommendation, search, ad placement, and other tasks, meaning that our method possesses stronger universality.

As mentioned above, the second generation solution, based on inner product model vector searches, limits the structure of the model to improve search efficiency. Therefore, if we want to realize the capability of a model further, then we must decouple the design of the entire search structure from the design of the model structure (vector inner product search and inner product models are a type of strong decoupling).

We attack this problem by using a 'coarse to fine search' method to gradually decide and refine the results, finally giving the most optimized recommendation. Based on this line of thinking, we determine the search direction to be on a layered tree structure to increase search efficiency.

However, while the concept of the vague upper layer seems easy to grasp, the difficulty lies in building an actionable channel on the foundation of math and technology, including:

After we came up with the idea of using a layered tree structure to increase search efficiency, we first attempted a probabilistic concatenation tree form. This form already applies to some extent in Natural Language Processing work and research (for example Hierarchical Softmax, Word2Vec, etc.). Even though we finally realized that the probability form is unfortunately unable to aid in solving the issue of matching correctly, it is helpful in providing a useful method that addresses the core issue and difficulty of clear matching.

Taking hierarchical softmax (HS) as an example, each leaf node (word) has a unique path to the route node encoding. With the above context, we can model the probability of the next appearing word as the multiplication of the classification probability on the coding path. Using a probabilistic concatenation tree means that HS can effectively avoid the issue of having to traverse all of the leaf nodes to perform denominator normalization each item in traditional Softmax modeling.

Theoretically speaking, it appears that HS can solve the issue of matching. However, our analysis and practice demonstrate that this is not the case. In the practice of applying the HS method to matching and recommendation, even the YouTube team described in their article on inner product model vector search matching their application of the HS method in learning the relationship between a user and the videos they choose, but the results were far from ideal. At first, we tried using the HS method in Alimama ad test groups, and the results were indeed suboptimal.

The thought behind the overturn probability connection tree is that we need to construct an all-new method to establish tree structures, then integrate based on the tree's training sample, TopK search, and node interest level. Returning to the issue of matching itself, assuming that all of the products in the candidate pool are a leaf node, then the current user has a certain level of interest in each of the leaf nodes.

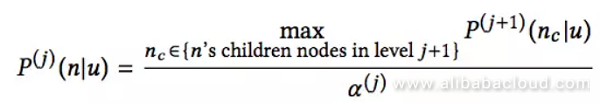

We do not know the exact value, and we can only use the prediction produced by the probability sampling (actual user feedback). For the average model, we can model the probability of the leaf nodes; however, if we want to find the TopK, then we need to traverse all of the nodes, which requires massive computing power. Therefore, we innovated and proposed the concept of a Max Heap like Tree, which defines the probability of the nodes on the tree as follows:

The probability that the user is positively interested in the father node on layer J is directly proportionate to the maximum value among the user's interest in all over the children nodes on the J+i layers. Of them, α(j) is a normalized factor of the interest probability of the nodes on J layer.

According to the very definition of Max Heap Tree, if we already know the order of the probabilities of the nodes (same layer) on each layer, then we can quickly find the overall TopK, that is, to start with the root node and find the TopK from the current layer. Then, we continue to find the TopK from the child node cluster on the lower layer corresponding to the TopK nodes from the upper layer.

However, the issue is that the node probabilities on this tree are completely unknown. Nonetheless, we can find a way to obtain an estimation that fits the sequence of probabilities of the nodes on the tree, and then use deep learning to fit the sample and obtain the order of the node probabilities. Specifically, any leaf node sample i for which the user has behavior contains the order of the leaf layer: .

According to our definition of the probabilities of tree nodes, we can recursively push the ordering of the probability of each node back upwards. According to this order, we can sample from this negative sample, then use a deep learning model to learn from this sample and then approximate the order of each layer of the Max Heap Tree.

Continuing the above description, when we construct the model of the interest nodes on each layer, we need to strictly satisfy the Max Heap probability formula, which is quite difficult. However, we can obtain a sequence sample that fits the probability of the tree's nodes. Furthermore, in the search process, we only need the order of probability of the nodes on each layer to confirm the TopK. Here we need to mention that even though the TopK candidates of each layer are decided by the father of each layer, the ranking of the TopK of that layer is not affected by the father generation. This means that the ranking of the TopK from each layer is independent, that is, the rank of the father is not taken into consideration when calculating the rank of the child. This is where our solution differs from the probability connection tree computing method.

Therefore, we can model the interest determination of the nodes on each layer to build a global classifier targeted at each layer and thereby guarantee the ability to accurately classify each layer. We begin with modeling the leaf node layer to confirm the sampling method, while also combining the Max Heap probability definition to confirm the sampling and modeling methods of each layer.

This kind of design means that the model obtained by training has a better deterministic ability in single layer classification. Even if the previous layer classification is false, the classification of the current layer is still capable of reaching a positive result. Of course, designing a global classifier layer by layer poses new challenges to the sampling process. The following section on model training goes into detail on the specific sampling method. The comprehensive design described above limits the number of computations used to search the TopK from the entire database to the same magnitude as the log (range of candidates), effectively minimizing N, and does not require that a single computation is limited to an inner product or other methods. It also allows us to accommodate a more advanced data scope.

We can intuitively define the structure of the Max Heap Tree as a layered structure of user interest. If the user is interested in the actual product, for example, an iPhone, then the user should be interested in the layers above the iPhone (the cell phone layer). The structure of the user's interest is layered by nature, and the Max Heap Tree structure represents a method that passes the user's interest from a fine-grained level to a coarse-grained level, sculpting the layered structure of the user's interest.

Of course, illustrating the layered structure of the user's interest is not limited to a Heap Tree structure. However, the Max Heap Tree structure methodology is unique in that it unifies the construction process of the tree structure, the modeling and sampling method of tree nodes, and the TopK search process behind the interest tree on the methodology layer.

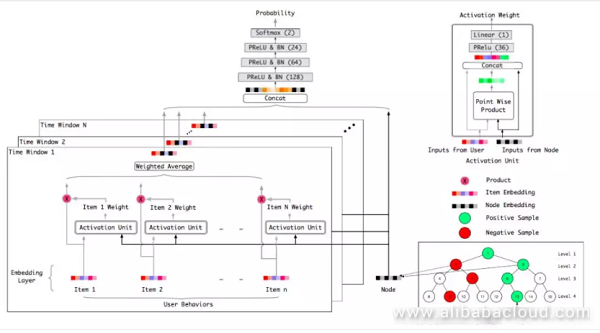

To summarize the above description, beginning with the definition of the Max Heap Tree structure, we have proposed the Tree-based Deep Match (henceforth referred to as TDM) algorithm framework. TDM uses the Taobao product system as an initialization dependency, then constructs an interest layer tree from the top down, from coarse to fine. On this foundation, the system then applies a deep learning network for user interest recommendation modeling to energize the complex model for single point computations and then uses layered search methods to recommend the user's TopK product interest candidates from among the entire selection.

Based on the above design and realization, TDM makes the following contributions to work in the field:

On the basis of the TDM algorithm framework, we continued to innovate to construct a training framework that connects the tree and the model. By initializing the cycle of tree - model training - tree construction - model retraining, the structure of the tree is optimized continuously during the model's training. This means that the tree better fits the user interest groups and distribution, and the model training benefits from the optimized tree structure by further reducing loss and improving test results. Combining training into TDM brings at least a 10% boost to test results.

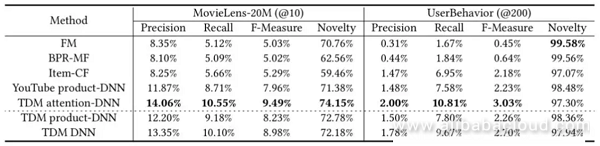

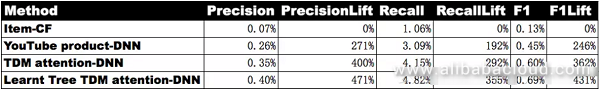

We have conducted comparative experiments of TDM using the public dataset MovieLens, as well as the Alimama ad dataset of user behavior. Evaluation indicators included accuracy, callback rate, accuracy callback rate adjusted for F1, and novelty. The methods for comparison include:

From the comparison of results in the above table, we can see that:

In the field of e-commerce, novelty results on the Category is a critical indicator to watch. We passed the recommendation results of each model into a filter of past user behavior, then applied Precision, Recall, and F1 comparisons to the filtered results.

As we can see from the table, the TDM solution for attention-DNN had impressive performance across all indicators, including a 292% improvement in callback rate over the popular Item-CF solution, and 34% improvement over YouTube's solution. Furthermore, after going through the tree-learning connected system, Learnt Tree TDM produced improved results, including a 355% improvement in callback rate over Item-CF, and a 56% improvement over YouTube. The improvements seen by these indicators demonstrate that the TDM solution performed very well in terms of novelty on the category level and adjacently verified that tree-model connected learning offers tremendously improved results.

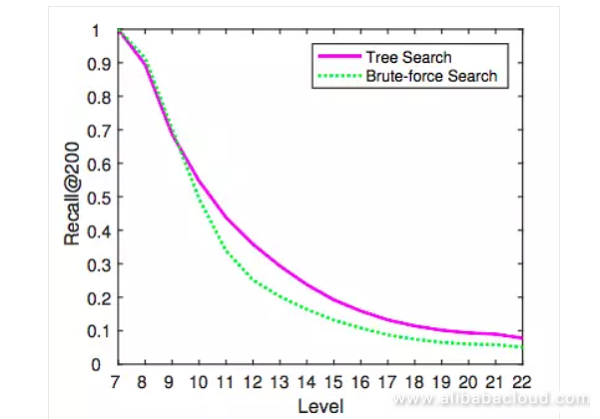

We know that the tree in TDM as a carrier for search features excellent time complexity, and energizes single point complex calculations. The tree itself is a layered interest structure, which takes the large issue of recommendation and matching and divides it into several layered global classification issues and solves them recursively. In the experiments, we noticed that a layered structure like the tree structure could better accelerate improvements in results.

We can see that after the number of layers in the tree reaches a certain number (9+), the TopK layered search method (image 2) is preferable in terms of callback rate to the brute-force method on the same layer. This is because we believe that layered tree search in TDM avoids the bad nodes on the upper layer from affecting the sequencing of nodes on the lower layer. Instead, it takes the candidates from the lower layer and includes them in the children of the TopK from the upper layer. This method significantly reduces computation difficulty compared to the brute-force method, and improves classification and callback results.

Tree-based Deep Match (TDM) independently and innovatively provides a complete deep learning recommendation and matching algorithm framework based on the tree structure. It uses a layered tree structure as per user's interests to realize highly effective full database searches. Consequently, it uses this foundation to better enable deep models to adopt leading computation structures like Attention, thereby producing results that far outstrip those of traditional methods in terms of accuracy, callback rate, and novelty.

Furthermore, the TDM design created a complete, next-generation connected training framework consisting of tree-model training, tree reconstruction, and model re-training, to further improve results. Connected training makes the TDM algorithm framework universally applicable, introduces new use scenarios for TDM. The migrative expansion of the new field provides an excellent theoretical foundation and enormous engineering action-ability.

TDM builds a complete deep learning recommendation and matching theory and technology based on the tree structure, and has produced outstanding results and dramatic improvements in Alimama's ad placement. However, TDM is still in its beginning stages of development. In the future, there are still many areas in which we can improve the system. For example, it currently utilizes the KMeans categorization method, which does not include a threshold as its learning method. In the future, we will consider applying a method that does have a threshold to tune and prune the system and optimize the tree structure further.

2,605 posts | 747 followers

FollowAlibaba Clouder - May 11, 2021

Alibaba Clouder - September 25, 2020

Alibaba Clouder - September 17, 2020

Alibaba Clouder - September 17, 2020

Alibaba Clouder - September 17, 2020

Alibaba Clouder - October 15, 2020

2,605 posts | 747 followers

Follow E-MapReduce Service

E-MapReduce Service

A Big Data service that uses Apache Hadoop and Spark to process and analyze data

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Tablestore

Tablestore

A fully managed NoSQL cloud database service that enables storage of massive amount of structured and semi-structured data

Learn MoreMore Posts by Alibaba Clouder