
By Miukeluhan.

Over the past 15 years, Ant Financial has completely reshaped how people make payments in China. However, these advancements in everyday finance wouldn't have been possible without the support of several important technological innovations. During this year's Apsara Conference held in Hangzhou, Ant Financial shared its experience in tech over the past 15 years and its plans for future innovations in the financial technology space. This article covers the announcements presented at the conference.

With the rapid development of Internet technology, we are moving into the cloud-native age. However, how should the financial industry embrace cloud native? During the past two years, Ant Financial implemented cloud native technology in the financial space, gaining some practical, real-world experience in this area. In this presentation, I will share what I think is the ideal cloud-native architecture for Ant Financial, the problems Ant Financial has encountered during the process of introducing cloud native, and some solutions to these problems.

Cloud computing has been a booming industry, full of growth and innovation, for several years. At present, it is easy to migrate to the cloud. In the future, however, we need to consider how to use the cloud in a better and more efficient way. According to statistics published by RightScale in 2019, public cloud accounts for 22% of all cloud usage, with only 3% of customers using private cloud. The vast majority of customers use a mix of public and private cloud solutions, in short hybrid cloud, to balance their data privacy, security, efficiency, and elasticity priorities.

From the perspective of the global IT industry, public cloud accounts for only a 10% share of the entire IT infrastructure market. In other words, the cloud market still has much room for growth, and many of the remaining customers are traditional enterprises. An important reason that traditional industries cannot make full use of public cloud is that they have been investing in and building their own IT systems for a long time, and many of them also have their own data centers. In addition, some enterprises are not interested in using public cloud services because of concerns about the stability and security of their businesses. Therefore, these enterprises tend to adopt hybrid cloud strategies. For example, they may keep their core businesses in a private cloud, but migrate marginal or innovative businesses to a public cloud.

In addition to these distinctive characteristics, the financial industry has another two characteristics:

Therefore, hybrid cloud is more suitable for financial institutions. This conclusion is also backed up by research. As reported by Nutanix, the global financial industry has developed faster than other industries in terms of hybrid cloud application. Presently, 21% of financial institutions have deployed hybrid clouds, exceeding the global average of 18.5%.

So, what type of hybrid cloud is suitable for financial institutions? This article takes the evolution process of Ant Financial as an example.

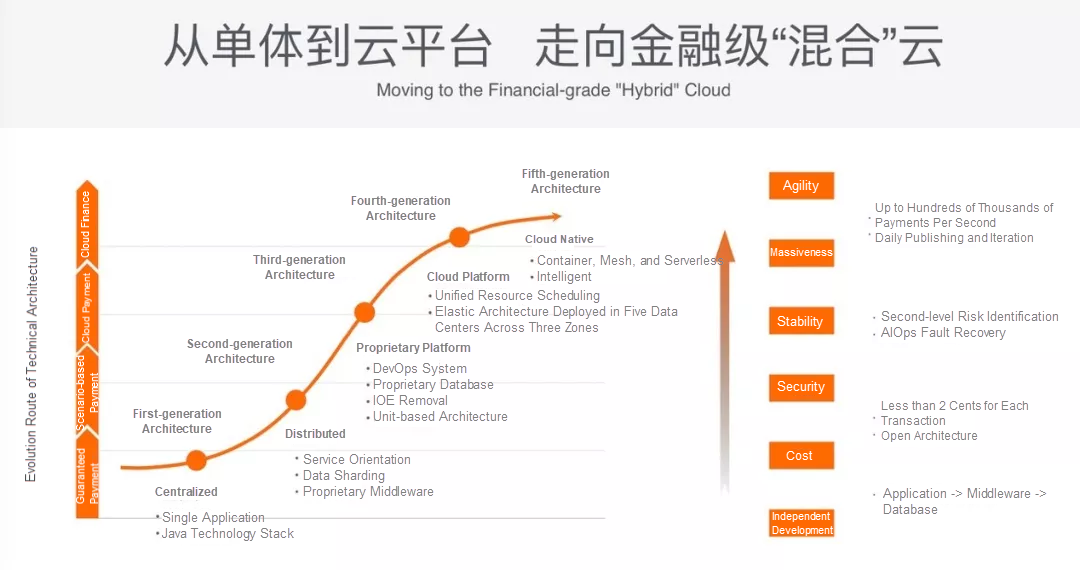

The fourth generation of Ant Financial's IT architecture was a cloud-based one, specifically an elastic hybrid cloud architecture that can meet the elasticity and burst-traffic requirements of Ant Financial's online services. At present, Ant Financial has already entered the fifth generation of its IT architecture, which is a cloud-native architecture built on hybrid cloud.

Here, let's discuss the process of how Ant Financial moved from a hybrid cloud architecture to a cloud-native one. Throughout the process, one common thread was that Ant Financial had strong requirements and held high standards at all stages of research and development, allowing for a completely independent development process with good cost control, security, and stability. All of these standards can be seen in Ant Financial's cloud-native architecture.

To establish a financial-grade online transaction system, the first step that Ant Financial needed to take was to implement a financial-grade distributed architecture. Ant Financial has two representative technologies in this area, SOFAStack and OceanBase. Both are now in widespread use after an initial beta phase. SOFAStack is used to build a scalable architecture at the entire application layer or the stateless services layer. OceanBase is used to deploy the storage infrastructure, which is typically a database, or a stateful service layer over the architecture. Both technologies have the following features:

These four key features are critical to financial businesses and must be implemented in applications and storage in end-to-end mode.

Consider consistency, for example. Data consistency can be ensured within a single database. However, in large-scale applications, a single database will always be a bottleneck, and data needs to be split vertically at a finer granularity, such as transaction, payment, or account data, which is similar to the splitting of services or applications. When data is stored in different database clusters, consistency must be ensured at the application layer. To support massive amounts of data, a database cluster is also internally partitioned and stored in multiple replicas. OceanBase is one such distributed database product, and it implements distributed transactions internally. Consistency across a distributed architecture depends on this cooperation between the application layer and the storage layer.
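To make the idea of application-layer consistency concrete, here is a minimal sketch of a transfer coordinated across two database clusters with a try/confirm/cancel pattern. This is an illustration under stated assumptions, not the API of SOFAStack or OceanBase: the `AccountShard` class, its method names, and the `transfer` function are all hypothetical, and a real system would also persist the transaction state so that it can recover from a crash between phases.

```python
class AccountShard:
    """Hypothetical stand-in for one database cluster holding account balances."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.pending = {}  # (tx_id, kind) -> (account, amount)

    def try_debit(self, tx_id, account, amount):
        # Try phase: reserve the funds so that no other transfer can spend them.
        if self.balances.get(account, 0) < amount:
            return False
        self.balances[account] -= amount
        self.pending[(tx_id, "debit")] = (account, amount)
        return True

    def try_credit(self, tx_id, account, amount):
        # Try phase: verify the payee exists and record the pending credit.
        if account not in self.balances:
            return False
        self.pending[(tx_id, "credit")] = (account, amount)
        return True

    def confirm(self, tx_id, kind):
        # Confirm phase: make the reserved change final.
        account, amount = self.pending.pop((tx_id, kind))
        if kind == "credit":
            self.balances[account] += amount

    def cancel(self, tx_id, kind):
        # Cancel phase: undo the reservation if the try phase succeeded earlier.
        entry = self.pending.pop((tx_id, kind), None)
        if entry is not None and kind == "debit":
            account, amount = entry
            self.balances[account] += amount


def transfer(tx_id, src_shard, src, dst_shard, dst, amount):
    """Move money between accounts that live in different clusters."""
    if not src_shard.try_debit(tx_id, src, amount):
        return False
    if not dst_shard.try_credit(tx_id, dst, amount):
        src_shard.cancel(tx_id, "debit")  # release the reserved funds
        return False
    src_shard.confirm(tx_id, "debit")
    dst_shard.confirm(tx_id, "credit")
    return True
```

Either both shards apply the change or neither does, which is the property the application layer has to guarantee once payment and account data no longer share one database.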

Now, consider scalability as another example. Some systems may be said to use a distributed architecture when, in reality, they use a micro-service framework for service optimization at the application layer. For these systems, horizontal scaling cannot be used at the database layer. And, in such systems, scalability is greatly limited by the shortcomings of the data layer.

With the above considerations, we know then that a real distributed system must take advantage of end-to-end distribution to achieve a high level of performance and scalability without these above limitations. In addition, a real financial-grade distributed system must provide end-to-end high availability and consistency.

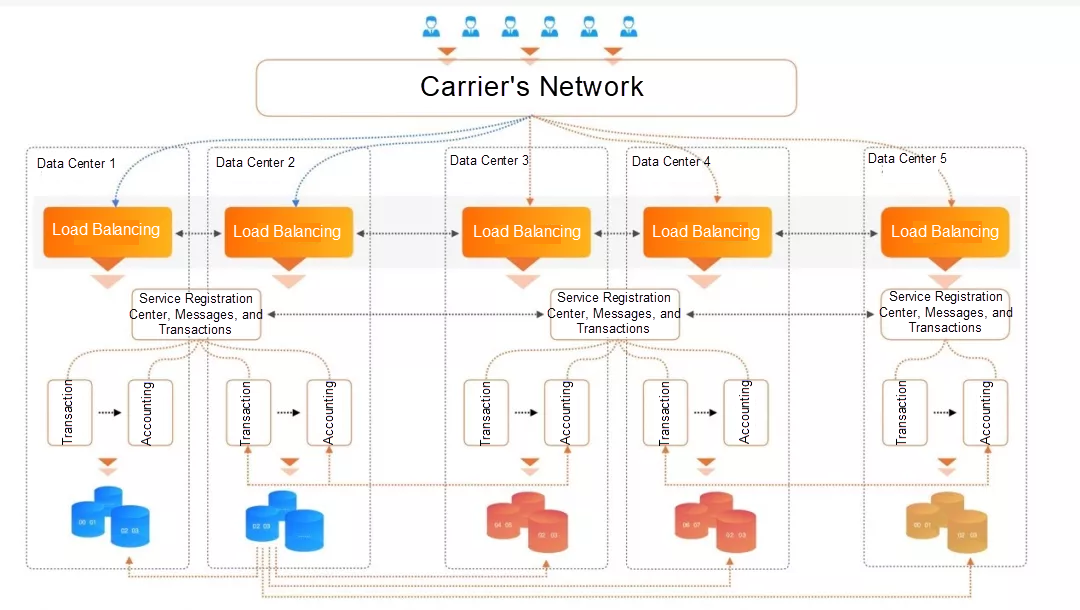

Pictured above is Ant Financial's active geo-redundancy architecture, which spans five data centers across three zones.

The main goal of the high-availability architecture at Ant Financial was to prevent data loss and ensure uninterrupted service. With this goal, we designed and implemented an active geo-redundancy architecture in five data centers across three zones. The core advantages of this architecture are city-level disaster recovery, cost-effective transactions, and unlimited scalability, as well as a recovery point objective (RPO) of 0 and a recovery time objective (RTO) of less than 30 seconds. Ant Financial demonstrated this by cutting network cables live at ATEC 2018. The demonstration showed how quickly Ant Financial can recover from a disaster with inter-city active geo-redundancy. In addition to meeting the goal of high availability, Ant Financial also worked hard to reduce risks. In summary, Ant Financial was able to ensure the security of capital, immunity to change, and fast fault recovery on top of high availability.
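The arithmetic behind surviving the loss of a whole city can be sketched as follows. The sketch assumes majority-quorum replication, where a write is committed only after more than half of the replicas acknowledge it, and assumes a 2 + 2 + 1 placement of the five data centers. Neither the placement nor the function names come from the talk; they are illustrative.

```python
def majority(replica_count):
    """Smallest number of replicas that forms a majority."""
    return replica_count // 2 + 1


def survives_city_failure(layout):
    """Return True if losing any single city still leaves a majority.

    layout maps a city name to the number of replicas (data centers) in it.
    """
    total = sum(layout.values())
    needed = majority(total)
    return all(total - count >= needed for count in layout.values())


# Assumed placement: five replicas spread over three cities.
three_cities = {"city_a": 2, "city_b": 2, "city_c": 1}
# For contrast: the same five replicas in only two cities.
two_cities = {"city_a": 3, "city_b": 2}
```

With the three-city layout, any one city can fail and at least three of five replicas remain, so committed writes are still readable and new writes can still reach a majority. With the two-city layout, losing the city that holds three replicas leaves only two, which is why a third location is needed for city-level disaster recovery with an RPO of 0.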

In addition to high availability, the most frequently mentioned topic in the finance industry is security. In the cloud-native age, we need to resolve end-to-end security risks through the entire process. The security issue consists of the following concerns:

The security advantages of this architecture were outlined in a speech called "Cloud Native Security Architecture for Financial Services". To sum up, the main capabilities are to provide financial-grade high availability and end-to-end security.

In the next section, let's look at some of the problems that Ant Financial encountered when moving to cloud-native.

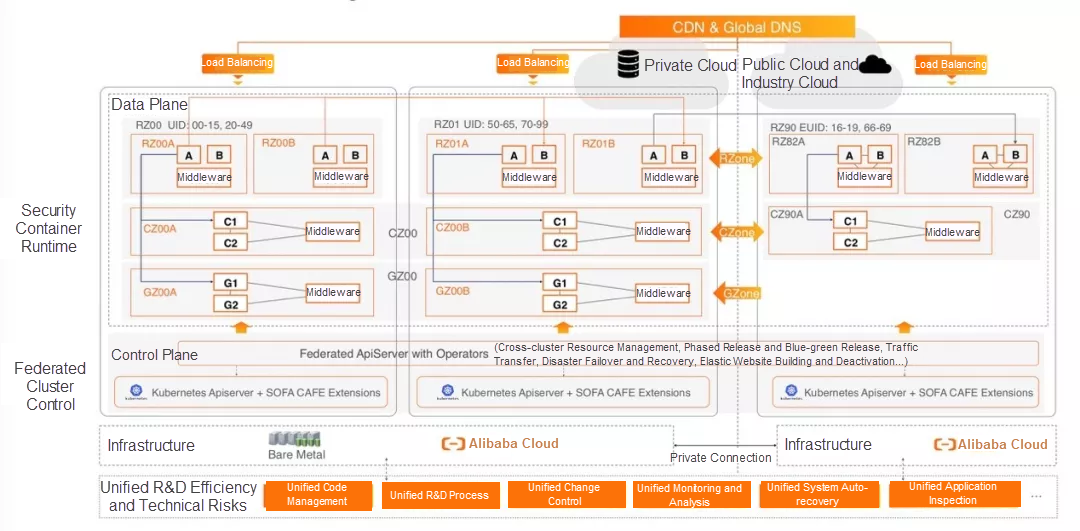

Pictured above is Ant Financial's transition from a unit-based architecture to an elastic cloud-native architecture. This transition helps Ant Financial respond more effectively to traffic spikes in online services.

To understand this transition, we first need to look at what exactly the unit-based architecture is. Part of this architecture is the database layer, which involves database sharding and table sharding. Sharding is used to overcome the limited computing performance of centralized storage. The core idea of the unit-based architecture is to move sharding from the data layer up to the request entry point. At the network access layer of a data center, user requests are sharded based on a dimension such as the user ID. In this context, each data center is similar to a huge stateful database shard.

For example, when your user ID ends with 007 or 008 and your request is sent to the data center from a mobile phone or a webpage domain name, the access layer can identify whether to route your request to China East or China South. Once your request is in the data center for a region, most of the processing can be completed inside that data center. Sometimes, services need to be called across data centers. For example, a user whose data is in data center A transfers money to another user whose data is in data center B. In this case, the data centers must be designed to handle this state.
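The routing decision at the access layer can be sketched roughly as follows. The shard ranges, unit names, and function names are hypothetical and chosen only for illustration; the sketch assumes that the last two digits of a numeric user ID serve as the shard key.

```python
# Hypothetical mapping: exclusive upper bound of the shard key -> unit name.
UNIT_RANGES = [(50, "china-east"), (100, "china-south")]


def shard_key(user_id: str) -> int:
    """Use the last two digits of the user ID as the shard key (0 to 99)."""
    return int(user_id[-2:])


def route(user_id: str) -> str:
    """Pick the unit (data center) that owns this user's data."""
    key = shard_key(user_id)
    for upper_bound, unit in UNIT_RANGES:
        if key < upper_bound:
            return unit
    raise ValueError(f"no unit configured for shard key {key}")


def plan_transfer(payer_id: str, payee_id: str) -> dict:
    """Report which units a transfer touches and whether it crosses units."""
    payer_unit, payee_unit = route(payer_id), route(payee_id)
    return {
        "payer_unit": payer_unit,
        "payee_unit": payee_unit,
        "cross_unit": payer_unit != payee_unit,
    }
```

Under this assumed mapping, a user ID ending in 07 is served by `china-east` and one ending in 58 by `china-south`, so a transfer between them is flagged as a cross-unit call, the less common case that needs a call between data centers.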

As we enter the cloud-native age, we can rethink the overall architecture based around Kubernetes. In a unit-based architecture, we can deploy a Kubernetes cluster in each unit and globally deploy logical federated API servers that can manage multiple Kubernetes clusters and deliver control commands. Control metadata is stored in an Etcd cluster to maintain the consistency of global data. However, Etcd clusters can only implement disaster recovery between data centers in the same city, but not among multiple data centers in different cities. Considering this, Ant Financial has moved Etcd to the key-value (KV) engine of OceanBase. The storage format and semantics of Etcd are maintained at the engine layer, and the storage layer can provide the high availability of five data centers across three zones.

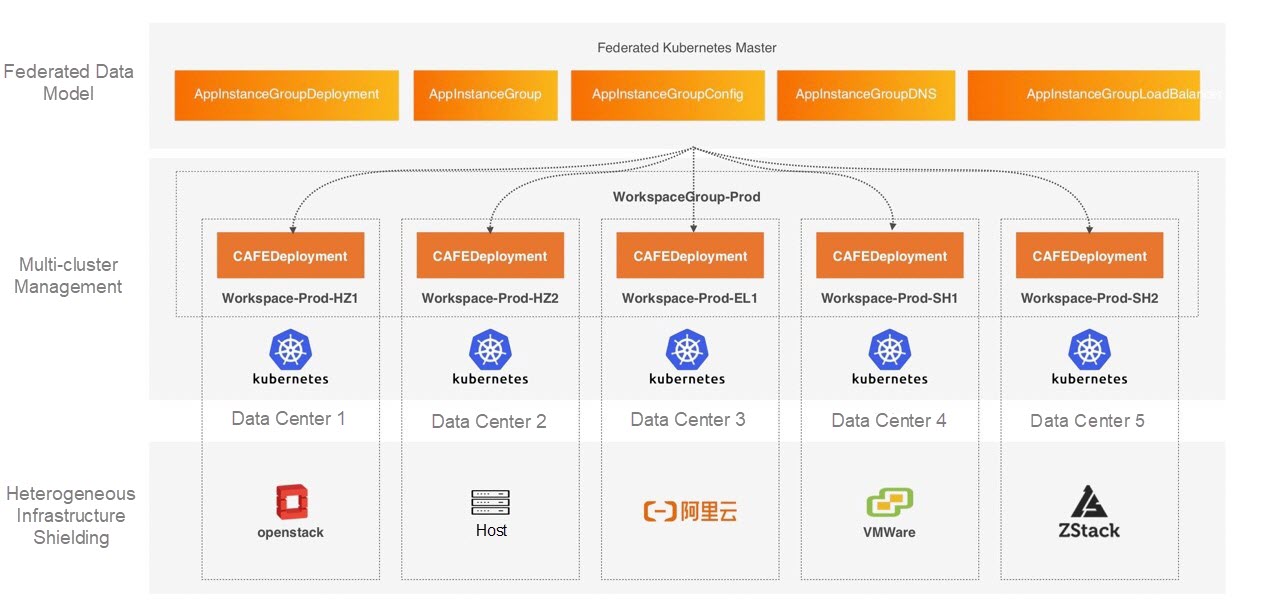

Pictured above is the heterogeneous infrastructure of financial institutions.

While this architecture is applicable to Ant Financial's own technical environment, we encountered several new challenges when opening our technology to external customers. For example, a customer's data center might use several heterogeneous infrastructure systems, which meant that we had to implement multi-cloud adaptation based on a standard cloud provider interface.

Many financial institutions, including Ant Financial, did not design their old systems with cloud native in mind, as they do with their new cloud-native systems. Many old systems had stateful dependencies on infrastructure, such as IP address dependencies, and as such, it was difficult for them to run entirely on immutable infrastructure.

Furthermore, given the high requirements for business continuity, the operation and maintenance mode of the native Kubernetes workload was simply unacceptable. For example, when the native Deployment was used for a phased or canary release, the handling of applications and traffic was rather primitive, which could in turn lead to exceptions and business interruptions during operation and maintenance changes.

Ant Financial was able to resolve these problems, however, by extending the native Deployment into CAFEDeployment, which made the release, phased release, and rollback of large-scale clusters more fine-grained, in compliance with Ant Financial's "three principles for handling technical risks". This all made for a more suitable architecture for financial services.
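The general idea of a phased release with verification and rollback can be sketched as follows. This is not the CAFEDeployment API, which the talk does not describe in detail; the function names, the `health_check` callback, and the in-memory `instances` dictionary are assumptions made for illustration. A production controller would also drain traffic from each instance before upgrading it.

```python
def split_into_batches(names, batch_size):
    """Group instance names into release batches of at most batch_size."""
    return [names[i:i + batch_size] for i in range(0, len(names), batch_size)]


def phased_release(instances, new_version, batch_size, health_check):
    """Upgrade instances batch by batch and roll back on the first failure.

    instances: dict mapping instance name -> currently running version.
    health_check: callable(name, version) -> bool, run after each batch.
    Returns True if every batch passed, False if the release was rolled back.
    """
    previous = {}  # versions to restore if a later batch fails
    for batch in split_into_batches(sorted(instances), batch_size):
        for name in batch:
            previous[name] = instances[name]
            instances[name] = new_version
        if not all(health_check(name, new_version) for name in batch):
            # Roll back every instance touched so far, not just this batch.
            for name, old_version in previous.items():
                instances[name] = old_version
            return False
    return True
```

Because each batch is verified before the next one starts, a bad version affects at most one batch of instances before the whole change is reverted, which limits the impact of a faulty release.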

So, to summarize things, Ant Financial discovered that a financial-grade hybrid cloud must be able to allow for elasticity, support system heterogeneity, and provide the stability needed for the operations and maintenance of large-scale architectures. And, we think, with new changes and the development of new businesses, the financial industry will need to focus on how to implement more sustainable development, while also retaining traditional development and operation and maintenance models to support existing business services and operations.

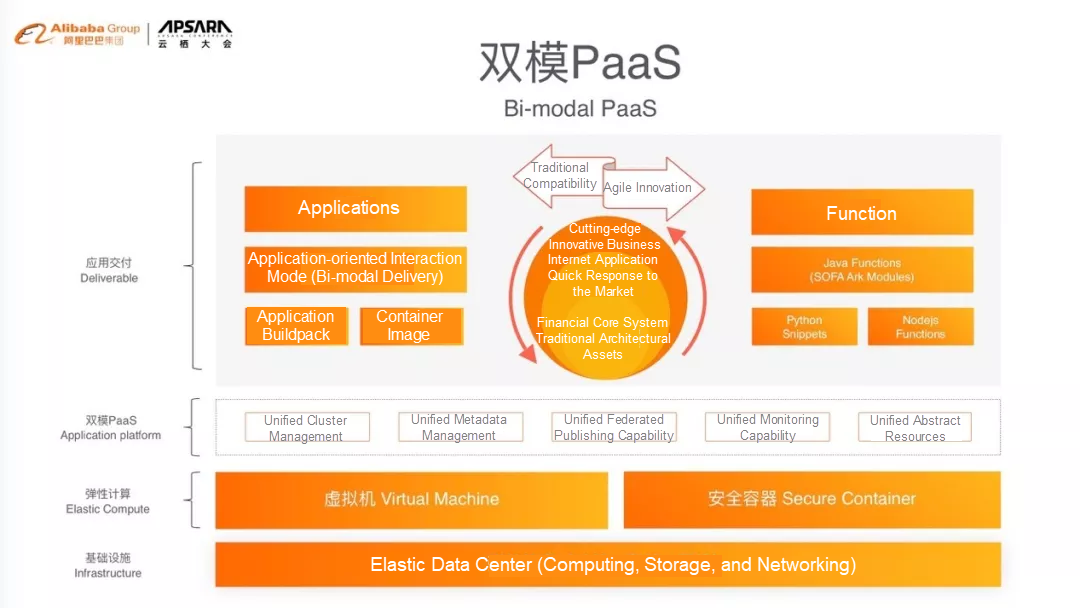

Pictured above is the multimode infrastructure Platform-as-a-Service (PaaS).

Cloud native is derived from the Platform-as-a-Service (PaaS) model. When changing architectures, one issue we needed to confront was how to convince customers to consider new delivery models when they wanted to stick to their original way of doing things. In the traditional model, customers were used to delivering code packages and to virtual machine-based operation and maintenance. In the cloud-native age, however, container images are the delivery carriers, and running instances are container instances of those images. Therefore, we simulated the running mode of virtual machines with containers, and simulated the operation and maintenance mode of virtual machines by extending the Deployment workload, while still supporting the container mode.

In addition, the main operations of implementing a PaaS based on a traditional architecture and Kubernetes are the same. This is true for creating websites, as well as with launching, restarting, and scaling services, and even for going offline. It is clear that implementing two PaaSs wastes resources and increases maintenance costs. The functions for users are the same. Therefore, we used Kubernetes to implement the public part, including the unified metadata, unified operation and maintenance operations, and unified resource abstraction. In addition, we provided two interfaces, one at the product layer and the other at the operation and maintenance layer. We also supported the traditional application mode and technology stack mode for delivery as well as image-based delivery. In addition to applications, we can also support functions.

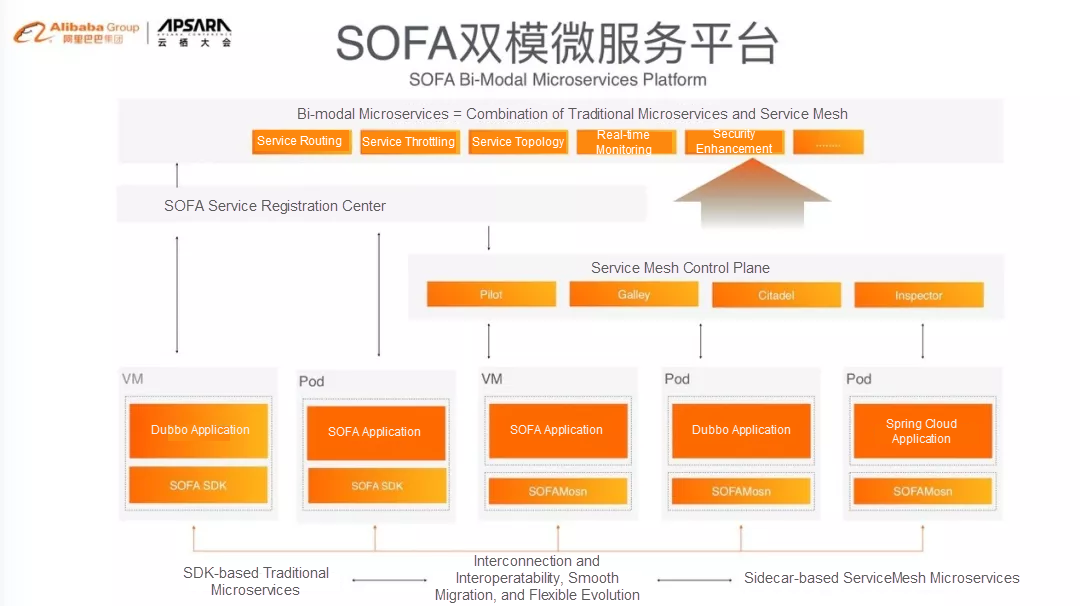

Pictured above is the SOFA bi-modal microservice platform.

The next step is the bi-modal microservice platform, which also involves challenges related to transitioning from old to new systems. However, many of these problems can be mitigated with the help of mesh. In the cloud-native architecture, mesh is the Sidecar in a pod. However, old systems usually do not run on Kubernetes and do not support the operation and maintenance mode of the pod and Sidecar. Therefore, we must manage the mesh process in agent mode so that mesh can help to optimize services for applications in the old architecture and to manage services in the new and old architectures in a unified manner.

The data plane must be deployed in two modes, and the control plane also needs to support both modes. Traditional SDK-based microservices register with the old service registry, whereas mesh-based services register through the control plane. Therefore, we can integrate the control plane with the old service registry, and use the latter to provide service registration and discovery so that services are visible and routable globally. Those who are familiar with the service registration system of Ant Financial know how we implemented a high-availability design in an ultra-large-scale, multi-data center environment. It is difficult to implement these capabilities on the community control plane in the short term, so we are gradually migrating these capabilities to the new architecture. Therefore, this bi-modal control plane is also applicable when the service architecture smoothly evolves to the cloud-native architecture in hybrid mode.
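The integration described above can be sketched as a control plane that delegates registration and discovery to the existing registry, so that SDK-based and mesh-based services see one another. The class and method names below are hypothetical and do not reflect the actual interfaces of SOFAStack's registry or mesh control plane.

```python
class LegacyRegistry:
    """Hypothetical stand-in for the existing service registry."""

    def __init__(self):
        self._services = {}  # service name -> set of endpoint addresses

    def publish(self, service, address):
        self._services.setdefault(service, set()).add(address)

    def unpublish(self, service, address):
        self._services.get(service, set()).discard(address)

    def subscribe(self, service):
        return sorted(self._services.get(service, set()))


class MeshControlPlane:
    """Control plane that reuses the legacy registry as its source of truth."""

    def __init__(self, registry):
        self._registry = registry

    def register_sidecar(self, service, address):
        # A mesh sidecar registers on behalf of its application.
        self._registry.publish(service, address)

    def endpoints(self, service):
        # Sidecars discover both SDK-based and mesh-based instances.
        return self._registry.subscribe(service)


registry = LegacyRegistry()
control_plane = MeshControlPlane(registry)
registry.publish("payment", "10.0.0.1:12200")                # SDK-based instance
control_plane.register_sidecar("payment", "10.0.1.7:12200")  # mesh-based instance
```

After these calls, both `registry.subscribe("payment")` and `control_plane.endpoints("payment")` return the same two addresses, which is the global visibility that the bi-modal design aims for.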

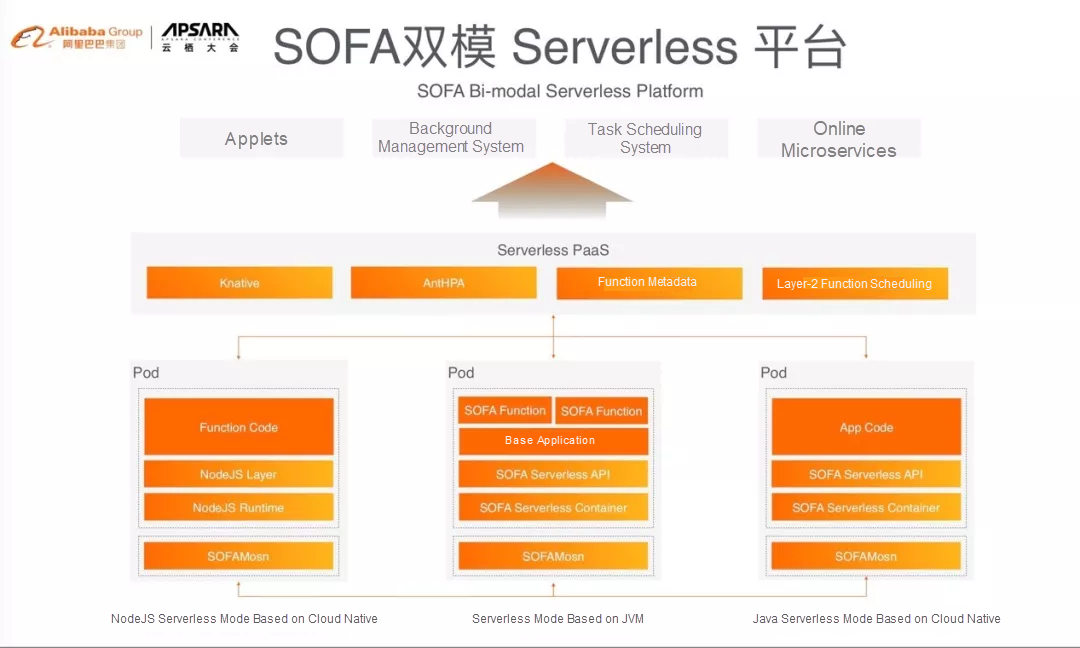

The last one is the Serverless architecture. Recently, the Serverless architecture has become popular because it applies to many scenarios. However, this architecture imposes demanding performance requirements and requires that each application start up quickly. Otherwise, the architecture cannot be used in the production environment.

The internal Node.js system of Ant Financial uses the Serverless architecture at a large scale and has optimized the startup speed. Currently, the average startup time is around 4 seconds, and it is expected to drop below 1 second after further optimization. However, the Serverless architecture is not well suited to Java applications, because common Java applications require 30 seconds to 1 minute to complete startup. Therefore, Java applications cannot share the benefits of the Serverless architecture due to slow startup.

We used the Substrate VM (SVM) technology from the Java Virtual Machine (JVM) ecosystem to perform static compilation on applications, which reduced the startup time of an application from 60 seconds to 4 seconds. However, this was achieved at the expense of some dynamic features such as reflection. In addition, we modified the SDKs of some middleware to keep the applications unchanged and mitigate the impact of the adaptation on the applications. When the technology can support application startup within 1 second, the entire Java ecosystem can migrate to this architecture more quickly, and more scenarios will be supported by then. However, this process takes a long time and requires more people in the community to engage in removing dynamic features from open source class libraries. In the meantime, we use the class isolation of our application containers to support different modules, or different versions of the same module, running in one Java runtime without interfering with each other. In this way, the fast cold startup and fast scaling of the Serverless architecture can be simulated.

The Java runtime that has the isolation capability and supports fast loading and unloading of modules is called a SOFA Serverless container. The smallest runtime module is called a SOFA function. These small code snippets are programmed with a series of Serverless APIs. In the transition phase, we deployed Scalable Open Financial Architecture (SOFA) Serverless containers as a cluster, in which multiple SOFA functions can be scheduled. In this case, SOFA functions and the SOFA Serverless container are deployed in N:1 mode, that is, many functions share one container. In the future, if the Serverless architecture can support the fast startup of Java applications, these SOFA functions can be smoothly deployed in pod mode. Then, one SOFA function will run in one SOFA Serverless container.
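The N:1 arrangement can be pictured with a toy model in which one long-running container loads, invokes, and unloads small functions, each with its own private set of dependencies. This is a conceptual sketch in Python rather than Java, and it does not represent the SOFA Serverless APIs; every name in it is hypothetical. In the real system, the isolation comes from Java class isolation rather than from separate dictionaries.

```python
class FunctionModule:
    """A small unit of code plus the dependency versions it was built against."""

    def __init__(self, name, handler, libraries):
        self.name = name
        self.handler = handler
        self.libraries = dict(libraries)  # private copy: no sharing between modules


class ServerlessContainer:
    """One warm runtime that hosts many functions (N functions : 1 container)."""

    def __init__(self):
        self._modules = {}

    def load(self, module):
        if module.name in self._modules:
            raise ValueError(f"{module.name} is already loaded")
        self._modules[module.name] = module

    def unload(self, name):
        self._modules.pop(name, None)

    def loaded(self):
        return sorted(self._modules)

    def invoke(self, name, payload):
        module = self._modules[name]
        # Each call sees only the libraries of its own module.
        return module.handler(payload, module.libraries)


def greet(payload, libraries):
    return f"{libraries['greeting']}, {payload}"


container = ServerlessContainer()
container.load(FunctionModule("greet-old", greet, {"greeting": "Hello"}))
container.load(FunctionModule("greet-new", greet, {"greeting": "Welcome"}))
```

Because the container is already running, loading a function costs far less than starting a new process, which is how fast cold startup and fast scaling are approximated. The two modules here bind different values for the same dependency without affecting each other.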

To summarize, the financial-grade hybrid cloud must be evolutionary and iterative to ensure the smooth evolution of technologies. Therefore, we provide the bi-modal "hybrid" capability at the PaaS, microservices, and Serverless layers.

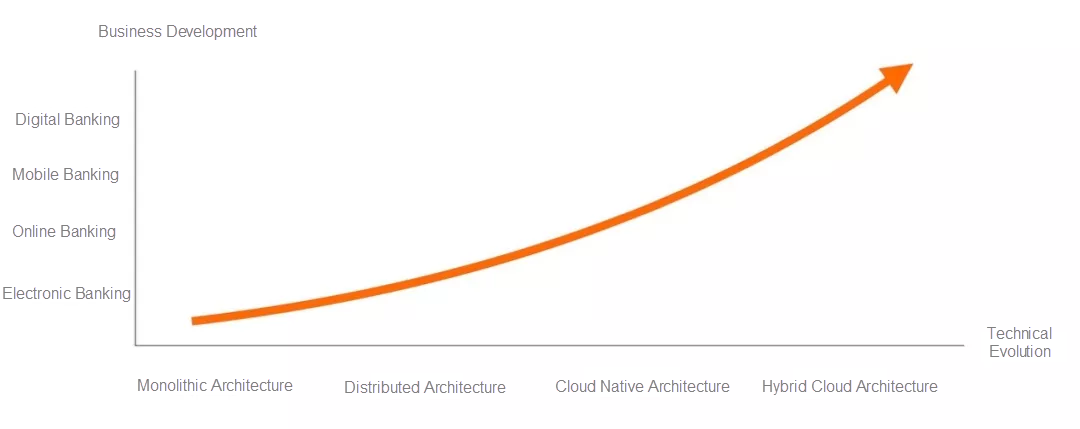

Above is a graph showing development trends of businesses and technologies in the financial industry.

Finally, we can see that the development trends in banking and in the entire financial field map directly to the evolution of the technical architecture, with different capabilities required at different stages. I believe that many banks are undergoing digital and mobile transformation. However, after completing mobile transformation and fully incorporating themselves into the Internet, many financial institutions will also encounter the same problems as Alipay did.
