By Yuanqi

A cost estimator is a key component of the Cost-Based Optimizer (CBO). Simply put, it can evaluate costs on disk I/O and CPU when using an access path to obtain data for a table. The supported access paths, I/O, and CPU cost calculations are closely associated with the storage engine features of the database. Therefore, while the cost estimator is a crucial component of the optimizer, its implementation is more closely linked to the storage engine.

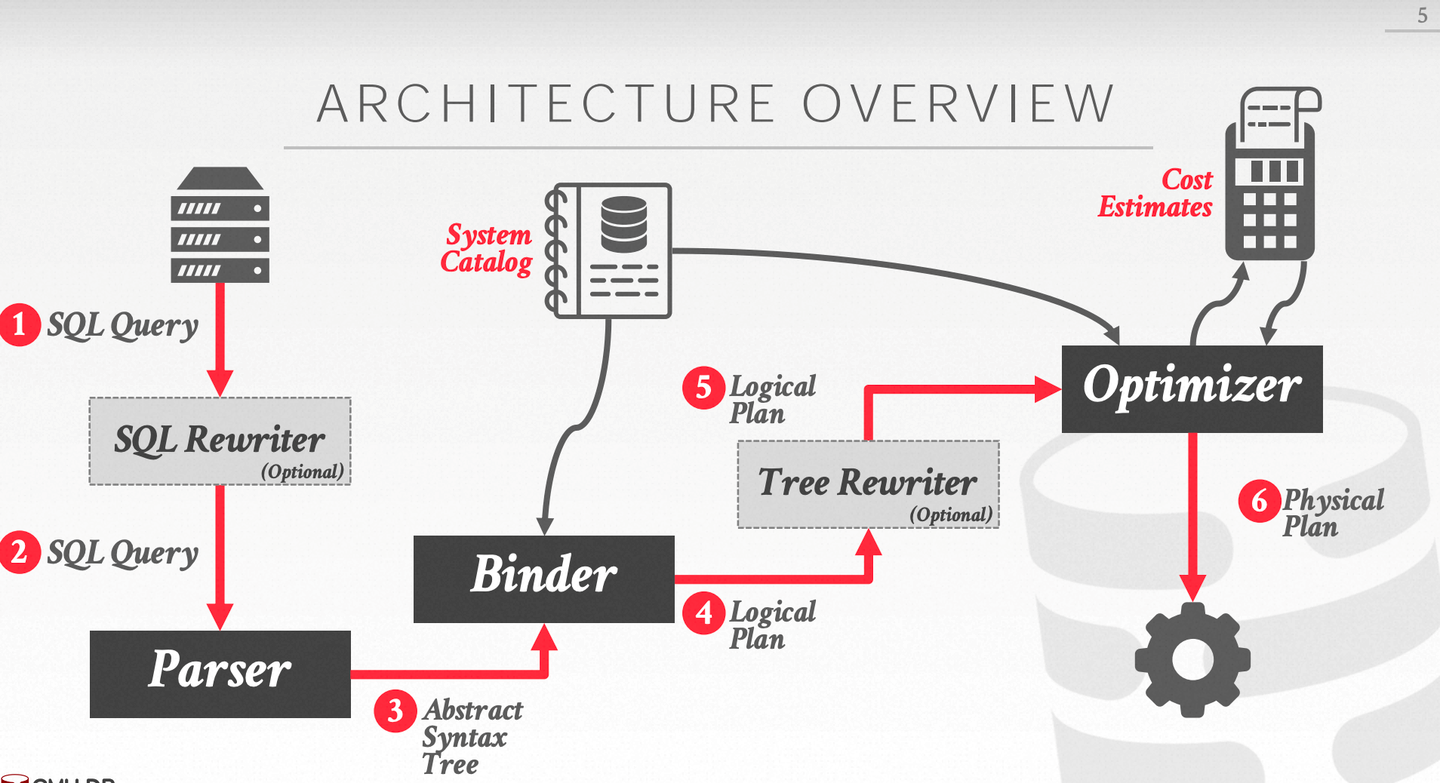

The following figure provides an overview of the optimizer's architecture, showing that despite serving the optimizer, the cost estimator is a relatively independent component.

CMU 15-721 Spring 2020 Optimizer Implementation: Optimizer Architecture Overview

Next, let's talk about what the access path of a table is.

Create a simple table where id is the primary key and create a secondary index on col1:

CREATE TABLE t1 (

id INT PRIMARY KEY,

col1 INT,

col2 INT,

KEY index_col1 (col1)

) ENGINE = InnoDB;For the simplest single-table query statement:

SELECT * FROM t1 where t1.col1 < 5;It is logically simple to find all records with t1.col1 < 5. However, how can we obtain data from the table? At least there are the following approaches:

It can be seen that these two approaches can achieve logically consistent results, but the data they access and their access methods are completely different. Therefore, the actual execution method to obtain data for each table is referred to as the access path of this table. When the access path of a table is changed, the actual physical execution plan of the operator is changed. In other words, queries that logically obtain the same data will have many differences in physical execution and efficiency, namely, logical plan and physical plan. If we change the access path of a table, we change its physical plan. A similar example is changing the join method of two tables. If we change the Nested Loop Join to Hash Join, it is also a change to the physical plan.

Reading data from a table has a variety of access paths, so you may ask: Which one is better?

Look at the following two situations:

The table only contains 10,000 records and all these records satisfy the condition t1.col1 < 5.

• To use the first access path, you need to scan the full table on the primary key index.

• To use the second access path, you need to scan the full table on the secondary index once, and each record needs index lookups from the primary key index once.

Obviously, the first is better.

The table contains 10,000 records and only one of the records satisfies the condition t1.col1 < 5.

• To use the first access path, you need to scan the full table on the primary key index.

• To use the second access path, you need to scan the secondary index, but only one record will be obtained. For this record, you can search for the complete record from the primary key index.

Obviously, the second is better.

From this example, it can be seen that which access path is better is strongly related to the data. This is not to say that if we specify a predicate related to the secondary index in the WHERE clause, the secondary index must be selected.

From the above analysis, it can be seen that:

Next, we will analyze the cost estimator based on the source code and case. The source code is based on MySQL 8.0.34.

Another important concept in the cost estimator is cost. Before making an estimation, it is necessary to define what cost represents. In MySQL, cost refers to the estimated consumption of CPU and I/O during the execution of a physical query plan. CPU cost evaluates the number of CPU cycles required to execute the physical plan, while I/O cost evaluates the number of times data needs to be read from the storage engine during the execution of the physical plan.

Now that we have defined the concept of cost, it is important to understand how to compare costs. As we know, CPU and I/O are two distinct resources. Let's consider a scenario where plan A has low CPU cost but high I/O cost, while plan B has high CPU cost but low I/O cost. How should we choose between the two?

MySQL takes a simple approach in this situation. Despite the different physical meanings of these two costs, they cannot be directly converted into comparable physical quantities. Therefore, different weights can be assigned to the two costs, and their weighted sum becomes the final cost. These weights can be adjusted by users. It is worth noting that these weights largely depend on the hardware configuration. If the I/O resources on the database host are limited but there are a large number of CPU cores available, it is advisable to increase the weight assigned to I/O resources and decrease the weight assigned to CPU resources.

In MySQL, the constants used in the cost calculation process can be viewed by querying the mysql.server_cost and mysql.engine_cost tables.

MySQL [(none)]> select * from mysql.server_cost;

+------------------------------+------------+---------------+

| cost_name | cost_value | default_value |

+------------------------------+------------+---------------+

| disk_temptable_create_cost | NULL | 20 |

| disk_temptable_row_cost | NULL | 0.5 |

| key_compare_cost | NULL | 0.05 |

| memory_temptable_create_cost | NULL | 1 |

| memory_temptable_row_cost | NULL | 0.1 |

| row_evaluate_cost | NULL | 0.1 |

+------------------------------+------------+---------------+

MySQL [(none)]> select * from mysql.engine_cost;

+-------------+-------------+------------------------+------------+---------------+

| engine_name | device_type | cost_name | cost_value | default_value |

+-------------+-------------+------------------------+------------+---------------+

| default | 0 | io_block_read_cost | NULL | 1 |

| default | 0 | memory_block_read_cost | NULL | 0.25 |

+-------------+-------------+------------------------+------------+---------------+Each type of cost is given a default value in these two tables. Next, let's explain the meaning of each cost:

• disk_temptable_create_cost: cost of creating a temporary table on disk

• disk_temptable_row_cost: cost of reading or writing a record in the temporary table on disk

• memory_temptable_create_cost: cost of creating a temporary table in memory

• memory_temptable_row_cost: cost of reading or writing a record in the temporary table in memory

• io_block_read_cost: cost when the storage engine randomly reads a page from disk

• memory_block_read_cost: cost when the storage engine randomly reads a page from the Buffer Pool

• row_evaluate_cost: cost of evaluating a record

• key_compare_cost: cost of comparing keys, which is mainly used for the retrieving of B+ trees

These constants have no units, but the values set for them should be comparable. For example: 1 CPU cost and 1 I/O cost under the normal load of the database have the same impact on the database SQL execution speed. It can be seen that these constant values are critical to the choice of execution plan, but they are difficult to set. In most cases, these constant values are not modified.

In MySQL, the code for cost estimation is concentrated in two functions: bool JOIN::estimate_rowcount() and bool Optimize_table_order::choose_table_order().

The bool JOIN::estimate_rowcount() estimates the number of rows output by each access path and the corresponding cost for each table (obtaining the number of rows output by each access path is very important for the Join Reorder algorithm and we will explain it in future articles). This function only considers the available access path when this table is the first read table.

The bool Optimize_table_order::choose_table_order() uses a search algorithm to calculate the optimal access path and the corresponding cost for each table under different JOIN ORDERs. In this function, a new access path can be expanded for the table by using the JOIN condition.

This article mainly interprets the code related to single-table cost estimation in the bool JOIN::estimate_rowcount(). It does not involve the JOIN reorder algorithm when optimizing multi-table JOIN query statements and we will describe this part in detail in future articles.

This function calculates the available access path and the corresponding cost for all tables involved in JOIN when they are used as the first table.

To understand this function, we can first look at this simple example:

select * from t1, t2 where t1.key1 = t2.key2 and t1.key1 < 15;This is an SQL statement that is used to join two tables. The JOIN condition is t1.key1 = t2.key2. There is also the filter condition t1.key1 < 15 for table t1.

If the first read table is t1, there are two possible access paths:

If the first read table is t2, there are also two possible access paths:

This function can find all available single-table access paths for each table, so the general logic of this function is quite simple.

for each table:

for each Access Path:

if (Access Path can be applied):

calculate the rowcount and costTraverse each table, traverse the access paths supported by each storage engine, determine whether this access path can be applied, and calculate the rowcount and cost if so.

At this point, we know that the key to this function is to traverse all available access paths. Therefore, the next code interpretation will be classified according to the access path.

This type of access path has only one record in the query result. It may be that the table has only one row (corresponding to the system) or the table has point queries of the primary key or the unique index (corresponding to the const). Such queries will be processed in advance and will not participate in the JOIN reorder. Both the cost and rowcount are fixed to 1. The cost here is not differentiated by CPU cost or I/O cost but just heuristically set to 1.

if (tab->type() == JT_SYSTEM || tab->type() == JT_CONST) {

// rows:1,cost:1

trace_table.add("rows", 1)

.add("cost", 1)

.add_alnum("table_type",

(tab->type() == JT_SYSTEM) ? "system" : "const")

.add("empty", tab->table()->has_null_row());

// Only one matching row and one block to read

// Set the number of found records to 1

tab->set_records(tab->found_records = 1);

// Set read_time to the worst value of an index query

tab->worst_seeks = tab->table()->file->worst_seek_times(1.0);

tab->read_time = tab->worst_seeks;

continue;

}Summary

Rows: 1

Cost: 1

This type of access path is actually a full table scan. Because InnoDB is an index-organized table, it is actually a primary key index scan.

First, it obtains the number of records in the table from the statistical information which is maintained by the storage engine. For InnoDB, the number of rows is inaccurate because of MVCC and transaction isolation. Indeed an accurate number of rows cannot be obtained.

ha_rows records = table->file->stats.records;

if (!records) records++; /* purecov: inspected */

double scan_time =

cost_model->row_evaluate_cost(static_cast<double>(records)) + 1;

// Cost of the full table scan

Cost_estimate cost_est = table->file->table_scan_cost();

// Fixed I/O overhead (correction amount)

cost_est.add_io(1.1);

// Add the cost of evaluation to the CPU cost

cost_est.add_cpu(scan_time);A full table scan cannot guarantee that all records meet the filter conditions. Therefore, we must evaluate whether each record meets the filter conditions. The cost of this part also needs to be calculated. The scan_time describes this cost and the calculation formula is simple: row_evaluate_cost * records. That is to multiply the number of rows by the cost of evaluating each record. The evaluation mainly consumes CPU resources, so scan_time will be recorded in the CPU cost.

A full table scan results in I/O cost, which is calculated by the following formula:

Number of file pages * Cost of reading each page

= Number of clustered index pages * Cost of reading each page

= Number of clustered index pages * (Proportion of in-memory pages * memory_block_read_cost +

Proportion of on-disk pages * io_block_read_cost)In MySQL, the specific calculation code is as follows:

// table_scan_cost = Number of file pages * Cost of reading each page

Cost_estimate handler::table_scan_cost() {

const double io_cost = scan_time() * table->cost_model()->page_read_cost(1.0);

Cost_estimate cost;

cost.add_io(io_cost);

return cost;

}

// scan_time() obtains the number of pages in the clustered index

double ha_innobase::scan_time() {

...

ulint stat_clustered_index_size;

stat_clustered_index_size = m_prebuilt->table->stat_clustered_index_size;

return ((double)stat_clustered_index_size);

}

// page_read_cost(1.0) calculates how much I/O cost is generated by reading a page in the average sense

double Cost_model_table::page_read_cost(double pages) const {

// Obtain the percentage of pages that are cached in the Buffer Pool

const double in_mem = m_table->file->table_in_memory_estimate();

const double pages_in_mem = pages * in_mem;

const double pages_on_disk = pages - pages_in_mem;

// Proportion of in-memory pages * memory_block_read_cost +

// Proportion of on-disk pages * io_block_read_cost

const double cost =

buffer_block_read_cost(pages_in_mem) + io_block_read_cost(pages_on_disk);

return cost;

}Add the I/O cost to the final cost.

It should be noted that MySQL adds a fixed correction value of 2.1 to the cost of a full table scan to make the optimizer not inclined to a full table scan.

Summary

Rows: stats.records

Cost: I/O cost of the scan + CPU cost of the evaluation + I/O cost correction value (1.1) + scan cost correction value (1)

If a secondary index contains all columns required by the query, we can scan the secondary index to reduce the I/O cost. The covering index mentioned here only refers to retrieving the secondary index instead of the primary key index during a full table scan.

if (!table->covering_keys.is_clear_all()) {

// Find the shortest index that contains all the columns required by the query

int key_for_use = find_shortest_key(table, &table->covering_keys);

// find_shortest_key() should return a valid key:

assert(key_for_use != MAX_KEY);

// Calculate the cost of the scan on this index

Cost_estimate key_read_time = param.table->file->index_scan_cost(

key_for_use, 1, static_cast<double>(records));

// Similar to the cost calculation of the table scan, calculate the cost of evaluation

key_read_time.add_cpu(

cost_model->row_evaluate_cost(static_cast<double>(records)));

bool chosen = false;

// If the value is less than the cost of the table scan, set this access path to the best

if (key_read_time < cost_est) {

cost_est = key_read_time;

chosen = true;

}

Opt_trace_object trace_cov(trace, "best_covering_index_scan",

Opt_trace_context::RANGE_OPTIMIZER);

trace_cov.add_utf8("index", table->key_info[key_for_use].name)

.add("cost", key_read_time)

.add("chosen", chosen);

if (!chosen) trace_cov.add_alnum("cause", "cost");

}The entire code is also simple: first obtain the shortest index, and then calculate the cost of index_scan. The formula to calculate the cost of index_scan is as follows:

Number of index pages * Cost of reading each index page

= (Number of records / Number of records per page) * Cost of reading each index page

= (Number of records / (Page size / (Length of secondary index key + Length of primary key)))* Cost of reading each index page

= (Number of records / (Page size / (Length of secondary index key + Length of primary key)))*

(Proportion of in-memory index pages * memory_block_read_cost +

Proportion of on-disk index pages * io_block_read_cost)The specific formula in MySQL can be seen in Cost_estimate handler::index_scan_cost. The general logic of this function is shown in the above formula.

Summary

Rows: stats.records

Cost: I/O cost of index scan + CPU cost of evaluation

This access path is mainly used for SQL statements with GROUP clauses and at the same time with aggregate functions such as MIN or MAX in SELECT statements. A simple example is as follows:

SELECT key_part1, key_part2, min(key_part3)

FROM t1

WHERE key_part2 = 1

GROUP BY key_part1;For this SQL statement, you can use the access path of Group Range to generate a GroupIndexSkipScanIterator executor. During execution, it locates an interval based on key_part1 and key_part2, and then only reads the first record of key_part3 in this interval to complete the query. Therefore, this is a skip scan specially optimized for GROUP BY and MIN/MAX aggregate functions.

The cost calculation for this access path is complicated, so the formula is not put here.

the total number of rows in the table / records_per_key value of key_part1 to record the number of groups. It actually calculates the cardinality of key_part1. In MySQL, the cardinality is calculated by the total number of rows /records_per_key.2 × group × B+ tree height. However, more information can be used to obtain a more accurate estimate, such as whether the equivalence condition of key_part2 will cause overlap of pages read by different groups, how many pages can be occupied by data in a group, and whether there are MIN/MAX aggregate functions for key_part3. After knowing how many pages should be read, the I/O cost can be calculated like the table scan.tree height × key_compare_cost. Finally, there is the cost evaluation for each record obtained.It can be found that this scan method may reduce I/O cost, but it will increase CPU cost due to the retrieval on the B+ tree. Therefore, whether to choose this access path depends on which one is greater, the reduced I/O cost or the increased CPU cost.

// Record the Optimizer Trace for Group Range

if (group_path) {

DBUG_EXECUTE_IF("force_lis_for_group_by", group_path->cost = 0.0;);

param.table->quick_condition_rows =

min<double>(group_path->num_output_rows(), table->file->stats.records);

Opt_trace_object grp_summary(trace, "best_group_range_summary",

Opt_trace_context::RANGE_OPTIMIZER);

if (unlikely(trace->is_started()))

trace_basic_info(thd, group_path, ¶m, &grp_summary);

if (group_path->cost < best_cost) {

grp_summary.add("chosen", true);

best_path = group_path;

best_cost = best_path->cost;

} else

grp_summary.add("chosen", false).add_alnum("cause", "cost");

}Summary

Rows: Cardinality of keys used in GROUP BY statements

Cost: I/O cost of Skip Scan + CPU cost of evaluation + CPU cost of retrieving B+ trees

This access path is also able to skip some records. The example is as follows:

select key_part1, key_part2, key_part3 from t1 where key_part3 > 10;For this SQL statement, although there is a filter condition on key_part3, it is not necessary to perform a complete scan on this index. We can locate each group of key_part1 and key_part2 to the beginning of an interval, and then start the scan from the record with key_part3 > 10 in the interval. It can be found that this scan can skip all scans for records with key_part3 <= 10.

The cost calculation of this access path is similar to that of Group Range. In short, it also compares the reduced I/O cost of Skip Scan with the increased CPU cost of retrieving the B+ tree.

Taking the above SQL as an example, first, the records_per_key of key_part1 and key_part2 are used to estimate how many groups will be divided, and then determine how many B+ trees need to be retrieved. In order to calculate the number of rows output, this access path first assumes that the proportions of data that the predicate can obtain in different groups (that is, selectivity) are the same. If a histogram is built on key_part3 and it is known that the proportion of key_part3 <= 10 to the total data is 10%, then we can get the number of rows output by multiplying 0.1 by the total number of rows in the table. If no histogram is built, then a default selectivity is given.

// Record the Optimizer Trace for Skip Scan

if (skip_scan_path) {

param.table->quick_condition_rows = min<double>(

skip_scan_path->num_output_rows(), table->file->stats.records);

Opt_trace_object summary(trace, "best_skip_scan_summary",

Opt_trace_context::RANGE_OPTIMIZER);

if (unlikely(trace->is_started()))

trace_basic_info(thd, skip_scan_path, ¶m, &summary);

if (skip_scan_path->cost < best_cost || force_skip_scan) {

summary.add("chosen", true);

best_path = skip_scan_path;

best_cost = best_path->cost;

} else

summary.add("chosen", false).add_alnum("cause", "cost");

}Summary

Rows: Histogram-derived selectivity / Default selectivity × stats.records

Cost: I/O cost of Skip Scan + CPU cost of evaluation + CPU cost of retrieving B+ trees

Index Range Scan is actually the most common access path. This access path uses a single index to obtain records that meet the index filter condition. However, two things should be clarified here: a single index means that at most one secondary index is retrieved in this way (the index lookups may be required to read the primary key index). If the index filter condition is met, the records obtained are actually a superset of the records that the query wants to obtain. Therefore, we need to filter the records subsequently to obtain the records that really meet all the filter conditions.

Before introducing this access path, we need to first introduce a data structure: SEL_TREE.

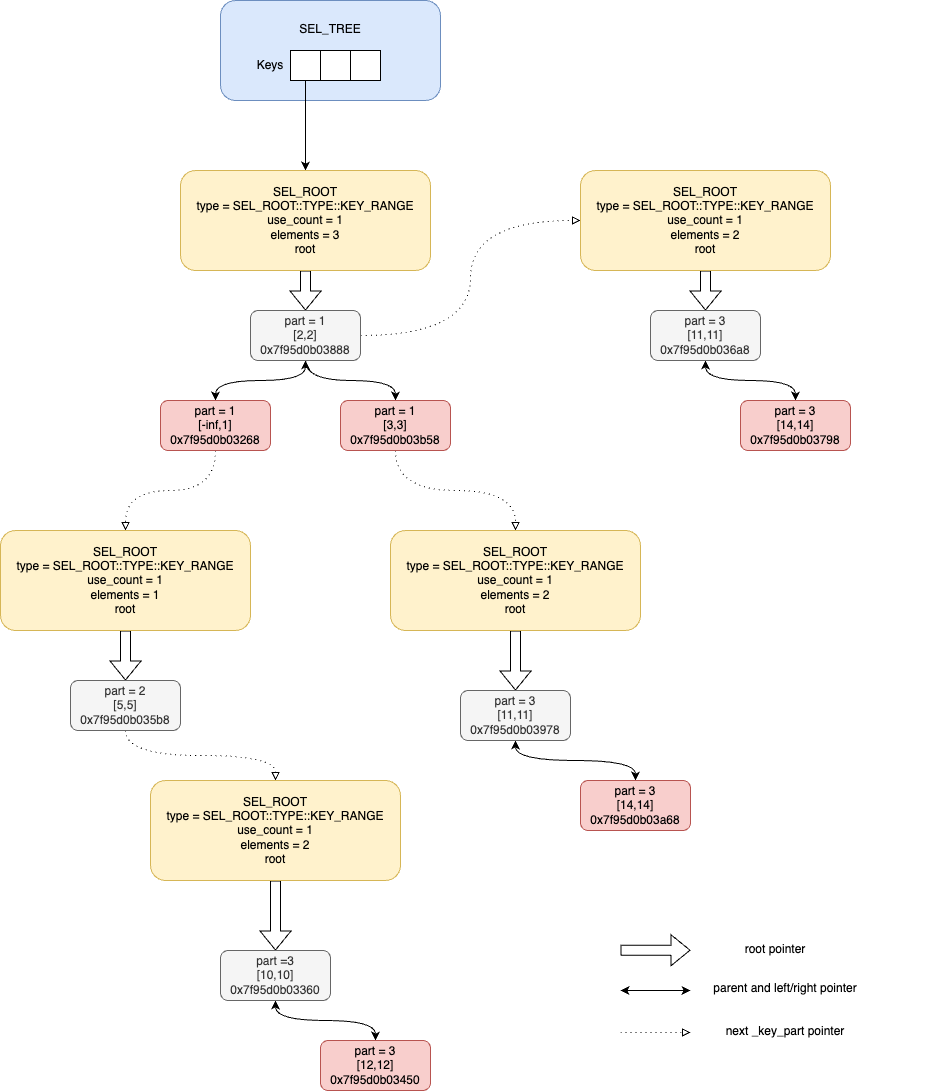

The effect of SEL_TREE is very clear. For complex filter conditions in the WHERE clause, it can be understood as sub-intervals on a certain index. During retrieval, only the records in these sub-intervals on the index need to be obtained. Therefore, we need to structure the complex filter conditions into sub-intervals to facilitate the subsequent rowcount and cost estimation. Here, let's look at the data structure SEL_TREE through the following SQL as an example:

SELECT *

FROM t1

WHERE (kp1 < 1

AND kp2 = 5

AND (kp3 = 10

OR kp3 = 12))

OR (kp1 = 2

AND (kp3 = 11

OR kp3 = 14))

OR (kp1 = 3

AND (kp3 = 11

OR kp3 = 14));The following SEL_TREE is generated:

We use the following characteristics to describe SEL_TREE:

• The keys array in SEL_TREE points to SEL_ROOT for different keys.

• SEL_ROOT points to different intervals of a key_part of a key to form a red-black tree, and the relationship between different intervals is OR.

• Red-black trees are sorted by interval.

• The next_key_part of each node points to the red-black tree of the next key_part, and the relationship between different key_parts is AND.

This graph is actually a structured graph of the AND/OR relationship. Through this graph, we can obtain the interval representation of the records of interest to the query on the index.

In MySQL, analyzing_range_alternatives is used to refer to the Index Range Scan. The role of the get_key_scans_params() function is to use the previously obtained SEL_TREE to obtain the best access path for the Index Range Scan.

Opt_trace_object trace_range_alt(trace, "analyzing_range_alternatives",

Opt_trace_context::RANGE_OPTIMIZER);

AccessPath *range_path = get_key_scans_params(

thd, ¶m, tree, false, true, interesting_order,

skip_records_in_range, best_cost, needed_reg);The logic of the get_key_scans_params() function is also clear, as expressed in the pseudo-code as follows:

for each key in SEL_TREE:

calculate rowcount and cost:

if (cost < best_cost):

update Access PathThe actual MySQL code is as follows:

AccessPath *get_key_scans_params(THD *thd, RANGE_OPT_PARAM *param,

SEL_TREE *tree, bool index_read_must_be_used,

bool update_tbl_stats,

enum_order order_direction,

bool skip_records_in_range,

const double cost_est, Key_map *needed_reg) {

...

// Traverse each index

for (idx = 0; idx < param->keys; idx++) {

key = tree->keys[idx];

if (key) {

ha_rows found_records;

Cost_estimate cost;

uint mrr_flags = 0, buf_size = 0;

uint keynr = param->real_keynr[idx];

if (key->type == SEL_ROOT::Type::MAYBE_KEY || key->root->maybe_flag)

needed_reg->set_bit(keynr);

// Determine whether the index lookups are required to read the index

bool read_index_only =

index_read_must_be_used

? true

: (bool)param->table->covering_keys.is_set(keynr);

Opt_trace_object trace_idx(trace);

trace_idx.add_utf8("index", param->table->key_info[keynr].name);

bool is_ror_scan, is_imerge_scan;

// Obtain the number of rows to be read and the cost

found_records = check_quick_select(

thd, param, idx, read_index_only, key, update_tbl_stats,

order_direction, skip_records_in_range, &mrr_flags, &buf_size, &cost,

&is_ror_scan, &is_imerge_scan);

...

if (found_records != HA_POS_ERROR && thd->opt_trace.is_started()) {

Opt_trace_array trace_range(&thd->opt_trace, "ranges");

...

// If the index dive is skipped, the cost is not calculated

/// No cost calculation when index dive is skipped.

if (skip_records_in_range)

trace_idx.add_alnum("index_dives_for_range_access",

"skipped_due_to_force_index");

else

trace_idx.add("index_dives_for_eq_ranges",

!param->use_index_statistics);

trace_idx.add("rowid_ordered", is_ror_scan)

.add("using_mrr", !(mrr_flags & HA_MRR_USE_DEFAULT_IMPL))

.add("index_only", read_index_only)

.add("in_memory", cur_key.in_memory_estimate());

if (skip_records_in_range) {

trace_idx.add_alnum("rows", "not applicable")

.add_alnum("cost", "not applicable");

} else {

trace_idx.add("rows", found_records).add("cost", cost);

}

}

if ((found_records != HA_POS_ERROR) && is_ror_scan) {

tree->n_ror_scans++;

tree->ror_scans_map.set_bit(idx);

}

// Determine whether to select this Index Range Scan method based on the cost and record the Optimizer Trace

if (found_records != HA_POS_ERROR &&

(read_cost > cost.total_cost() ||

/*

Ignore cost check if INDEX_MERGE hint is used with

explicitly specified indexes or if INDEX_MERGE hint

is used without any specified indexes and no best

index is chosen yet.

*/

(force_index_merge &&

(!use_cheapest_index_merge || !key_to_read)))) {

...

} else {

...

}

}

}

// Generate the access path

...

return path;

}Here, let's focus on how the Index Range Scan calculates the number of rows. The previous number of rows is basically from the records and records_per_key saved in the statistical information, but Index Range Scan uses a method that can obtain the number of rows more accurately: Index Dive.

After a range on an index is given, we can use the interface provided by InnoDB.

ha_rows ha_innobase::records_in_range(keynr, min_key, max_key) to estimate the number of records between min_key and max_key in the keynr-th index. The general principle of this function is to retrieve min_key and max_key on the B+ tree and estimate the number of records between them according to the two search paths on the B+ tree. Generally, when the number of records in the interval between min_key and max_key is too large, the estimation is inaccurate. When the number of records between min_key and max_key is small and finally falls on the same B+ tree leaf node, the estimation is precise.

Estimating the number of rows by Index Dive is obviously time-consuming. In MySQL, the number of records in a single interval can be estimated by the eq_range_index_dive_limit parameter to control the optimization process.

When Index Dive is not available, MySQL still uses records_per_key statistical information to estimate the number of rows.

The last is cost calculation. The cost of the Index Range Scan needs to be calculated separately according to whether the index lookups are required.

If index lookups are not required:

Calculate the number of pages to be read based on the number of read rows, and then multiply the number of pages by the I/O cost of reading each page. This part of the calculation process is the same as the cost calculation of the covering index (in fact, they call the same function):

Number of index pages to be read * Cost of reading each index page

= (Number of records to be read / Number of records per page) * Cost of reading each index page

= (Number of records to be read / Number of records per page) *

(Proportion of in-memory index pages * memory_block_read_cost +

Proportion of on-disk index pages * io_block_read_cost)Finally, add the cost of evaluation.

Summary

Rows: Use statistical information or the Index Dive method to estimate the number of records in the interval

Cost: I/O cost of reading the index pages + CPU cost of evaluation

If index lookups are required:

In this case, the cost is mainly generated each time the index lookups take place, so the I/O cost is calculated according to the following formula:

(rows + ranges) * Cost of reading each clustered index page

= (rows + ranges) *

(Proportion of in-memory index pages * memory_block_read_cost +

Proportion of on-disk index pages * io_block_read_cost)Finally, add the cost of evaluation.

Summary

Rows: Use statistical information or the Index Dive method to estimate the number of records in the interval

Cost: I/O cost of index lookups + CPU cost of evaluation

In fact, all the access paths mentioned above have one thing in common: at most one secondary index can be accessed. However, it is possible to find a better access path by accessing multiple secondary indexes. Roworder Intersect and Index Merge Union are actually access paths that need to access multiple secondary indexes. As shown in their names, Roworder Intersect retrieves multiple secondary indexes, takes the intersection of the obtained record primary keys, and then performs index lookups. Index Merge Union, on the contrary, takes the union.

A simple example to facilitate understanding is as follows:

CREATE TABLE t1 (

id INT PRIMARY KEY,

col1 INT,

col2 INT,

KEY inedx_col1_id (col1, id),

KEY index_col2_id (col2, id)

) ENGINE=InnoDB;

# 1

SELECT * FROM t1 WHERE col1 = 1 OR col2 = 1;

#2

SELECT * FROM t1 WHERE col1 = 1 AND col2 = 1;The first SQL statement can use Index Merge Union to obtain the primary key of the record with col1=1 on the inedx_col1_id, obtain the primary key of the record with col1=2 on the index_col2_id, then merge to obtain the primary key set, and finally obtain the complete record by performing index lookups on the primary key index.

The second SQL statement can use Roworder Intersect to obtain the primary key of the record with col1=1 on the inedx_col1_id, obtain the primary key of the record with col1=2 on the index_col2_id, then obtain the intersection for the primary key set, and finally obtain the complete record by performing index lookups on the primary key index.

At this point, it can be seen that both Index Merge Union and Roworder Intersect rely on a set operation that takes the intersection or union, but it is not easy to implement this set operation. The first thing that needs to be discussed is: Are the records obtained from each index ordered by the primary key? If so, then both the operations of obtaining the intersection and union are easy, which is a multi-way merge. If not, additional sorting is required. In MySQL, the records returned by Scan on an index are ordered by the primary key called Rowid-Ordered Retrieval (ROR). It is also easy to determine whether an index scan is ROR. Assume that there is a primary key index containing the column<a1, ..., a_m, b_1, ..., b_n> and a secondary index containing the column <kp_1, ..., kp_j, a_1, ..., a_m>. Then, as long as SQL has a filter condition of kp_1=v_1, kp_2 = v_2, ..., kp_n = v_n, the records obtained on the secondary index are ordered according to the primary key, and this scan is ROR. In the InnoDB implementation, the primary key columns are added to the secondary index. Therefore, when an equivalent query is performed on an index that does not contain any primary key columns, the scan on the secondary index is ROR.

The cost calculations of the Roworder Intersect and Index Merge Union are also very complicated. Here, let's take Index Merge Union as an example for a brief introduction.

The cost of the Index Merge Union is mainly divided into the following parts (assuming that there is a non-ROR scan). If all are ROR scans, the cost calculation will be simpler.

Next, we will use a table to summarize the cost estimation of all single-table access paths of the MySQL optimizer:

| Access Path | Row count | IO Cost | CPU Cost |

|---|---|---|---|

| system/const | 1 | 1 | |

| Table Scan | stats.records | Number of clustered index pages × Cost of reading each index page + I/O cost correction value (1.1) | CPU cost of evaluation + CPU cost correction value (1.0) |

| Covering Index | stats.records | Number of index pages × Cost of reading each index page | CPU cost of the evaluation |

| Group Range | The cardinality of the key used in the GROUP BY statement | I/O cost of the Skip Scan | CPU cost of the evaluation + CPU cost of retrieving B+ Trees |

| Skip Scan | Histogram-derived selectivity / Default selectivity × stats.records | I/O cost of the Skip Scan | CPU cost of the evaluation + CPU Cost of retrieving B+ Trees |

| Index Range Scan (index lookups are not required) | Use statistical information or Index Dive to estimate | Number of index pages to be read × Cost of reading each index page | CPU cost of the evaluation |

| Index Range Scan (index lookups are required) | Use statistical information or Index Dive to estimate | (Rows + Ranges) × Cost of reading each clustered index page | CPU cost of the evaluation |

| Roworder Intersect | Perform multiple Index Range Scans to obtain the maximum number of rows | None | None |

| Index Merge Union | Perform multiple Index Range Scans to obtain the sum of the number of rows | None | None |

The above details the cost estimation of all single-table access paths in the MySQL optimizer. The sequence of access paths described in the article is based on the enumeration sequence of access paths in the bool JOIN::estimate_rowcount() in the MySQL source code.

There are still many aspects that are not fully explained in this article. For instance, how is statistical information such as records_per_key maintained in MySQL? How is the SEL_TREE in the Index Range Scan generated? These questions remain unanswered. Furthermore, the MySQL optimizer encompasses a wide range of content, including SQL transformation and the JOIN reorder algorithm. If time permits, I will delve into other related modules of the MySQL optimizer in future articles. Finally, if you have any questions or feedback, please feel free to share.

Jingqi Tian (Yuanqi) is a member of the ApsaraDB RDS for MySQL kernel R&D team. Currently, he is mainly engaged in SQL optimization execution, DDL-related R&D, and RDS O&M.

Alibaba Cloud PolarDB Awarded ICDE 2024 Industry and Applications Track Best Paper Award

Alibaba Clouder - May 27, 2019

Alibaba Clouder - September 30, 2019

Alibaba Cloud Community - April 3, 2024

Apache Flink Community China - September 15, 2022

Alibaba Developer - April 26, 2022

ApsaraDB - December 25, 2024

ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More ApsaraDB for MyBase

ApsaraDB for MyBase

ApsaraDB Dedicated Cluster provided by Alibaba Cloud is a dedicated service for managing databases on the cloud.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn MoreMore Posts by ApsaraDB