By Yu Kai

Co-Author: Xieshi (Alibaba Cloud Container Service)

This article is the third part of the series. It describes the data plane forwarding paths in Kubernetes Terway ENIIP mode. Understanding these paths serves two purposes. First, it explains why customer access behaves and performs differently across scenarios, which helps customers further optimize their business architecture. Second, when container network jitter occurs, customer O&M staff and Alibaba Cloud developers know at which points on the link to deploy observation and capture packets manually, which narrows down the direction and cause of the problem.

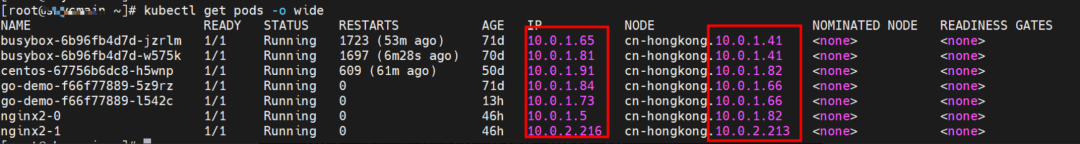

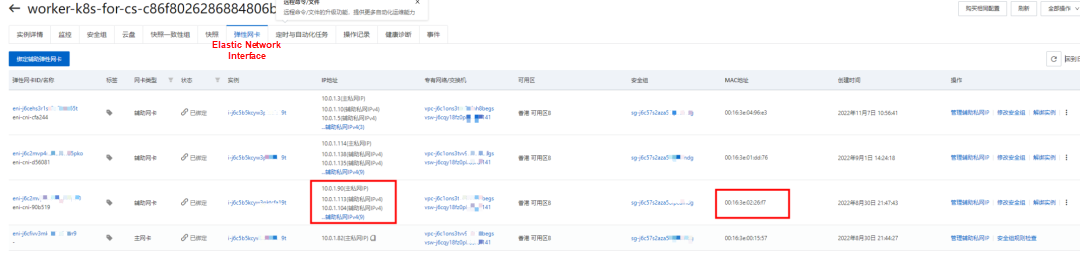

You can configure multiple auxiliary IP addresses for an Elastic Network Interface (ENI). Depending on the instance type, a single ENI can be assigned 6 to 20 auxiliary IP addresses. In ENI multi-IP mode, these auxiliary IP addresses are allocated to containers, which increases the scale and density of pod deployment.
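The density gain can be estimated with a small calculation. The sketch below assumes that one ENI (the primary one) stays reserved for the node, so only the remaining ENIs contribute auxiliary IP addresses to pods. The function name and the instance quotas in the example are hypothetical; check the actual limits of your instance type.

```python
def max_shared_eni_pods(eni_quota: int, ips_per_eni: int) -> int:
    """Upper bound on pod IPs for one node in ENI multi-IP mode.

    Assumption: the primary ENI is kept for the node itself, so only
    (eni_quota - 1) ENIs hand out auxiliary IP addresses to pods.
    """
    if eni_quota < 1 or ips_per_eni < 1:
        raise ValueError("quotas must be positive")
    return (eni_quota - 1) * ips_per_eni


# Hypothetical instance type: 4 ENIs, 10 auxiliary IPs per ENI.
print(max_shared_eni_pods(4, 10))
```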

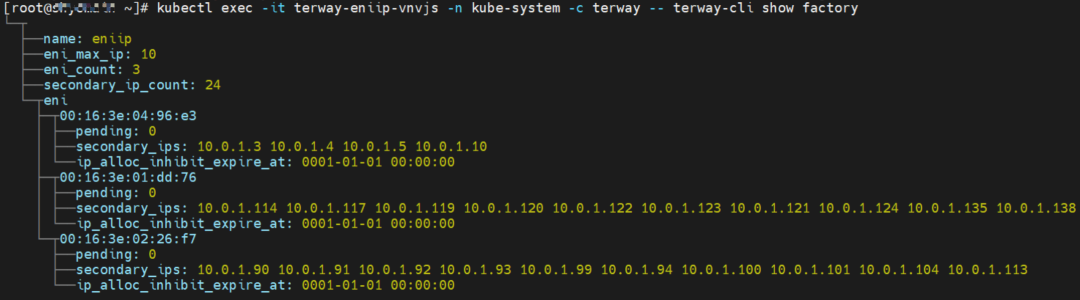

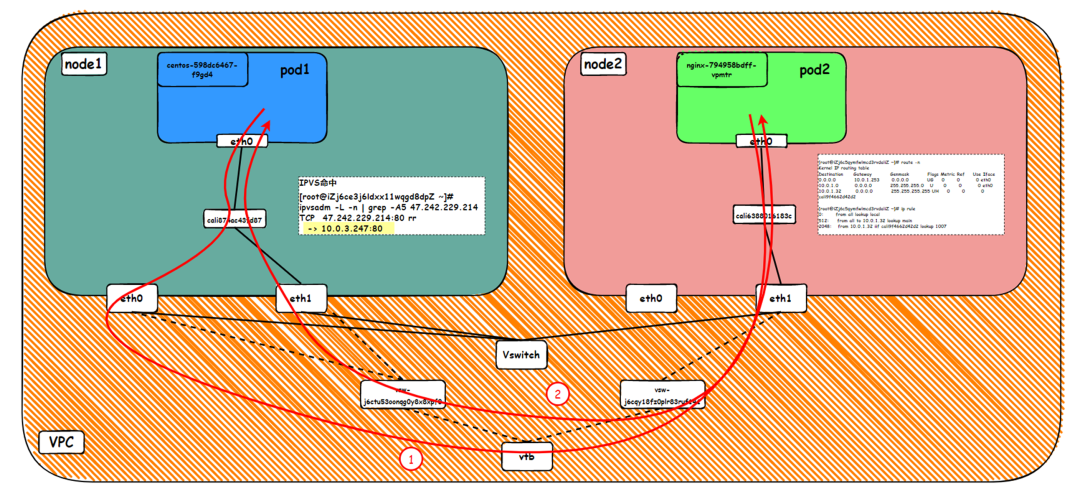

In terms of network connection mode, Terway supports two solutions: veth pair with policy-based routing, and IPVLAN L2. Terway mainly considers:

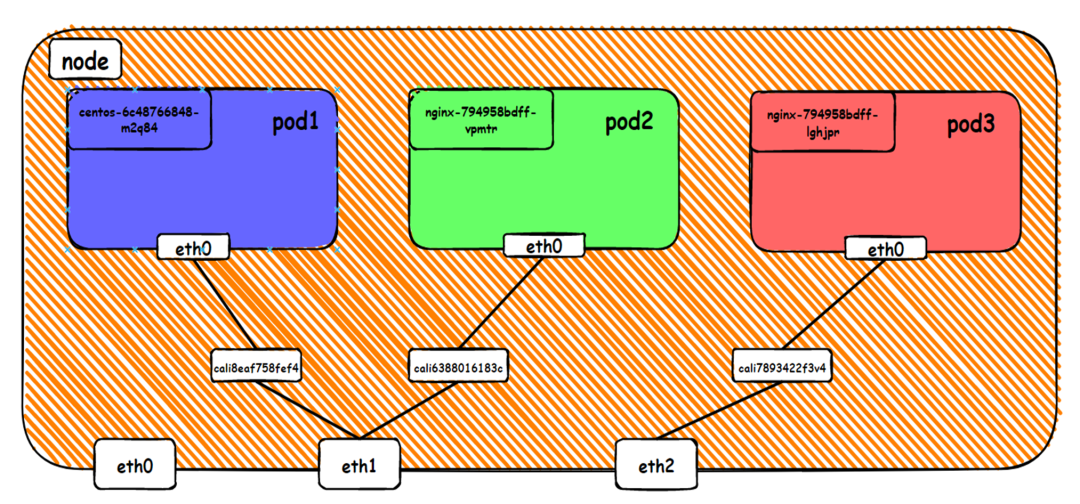

The CIDR block used by the pod is the same as the CIDR block of the node.

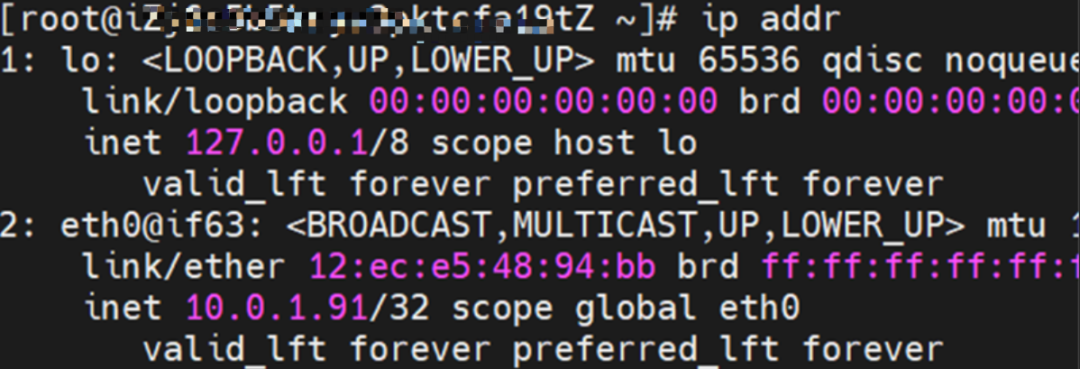

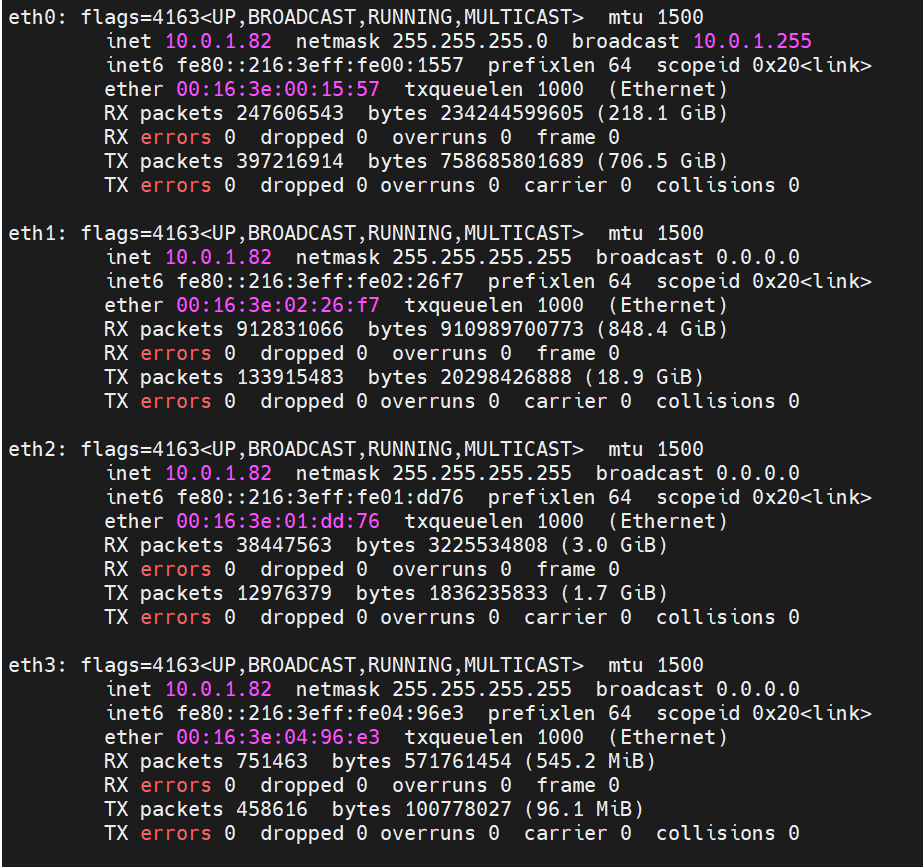

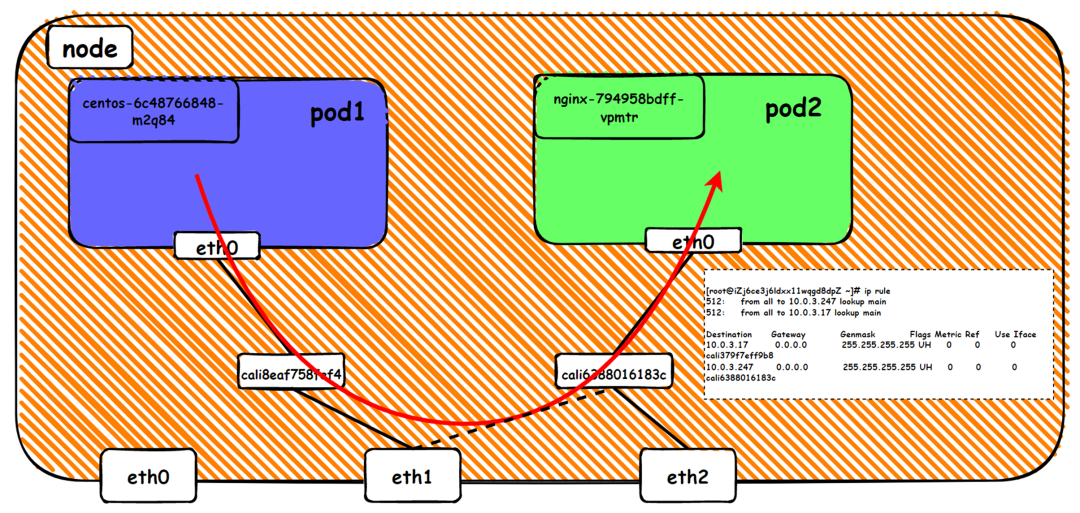

Inside the pod there is one network interface, eth0, whose IP address is the pod IP. The MAC address of this interface does not match the MAC address of the ENI shown in the console. Meanwhile, the ECS instance has multiple ethx interfaces, which indicates that the secondary ENI is not mounted directly into the pod's network namespace.

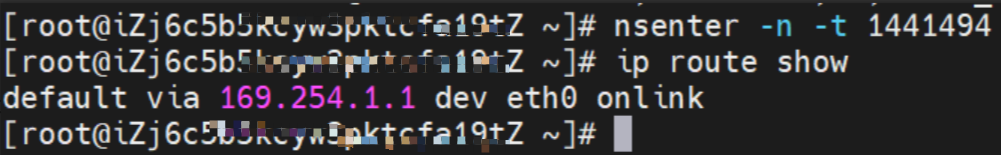

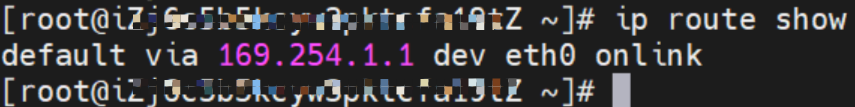

The pod has a single default route that points to eth0, so eth0 is the unified ingress and egress for every address range the pod accesses.

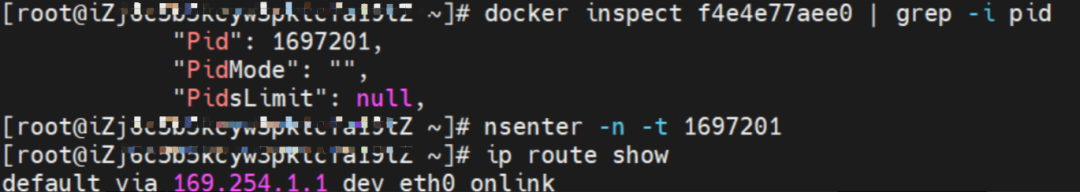

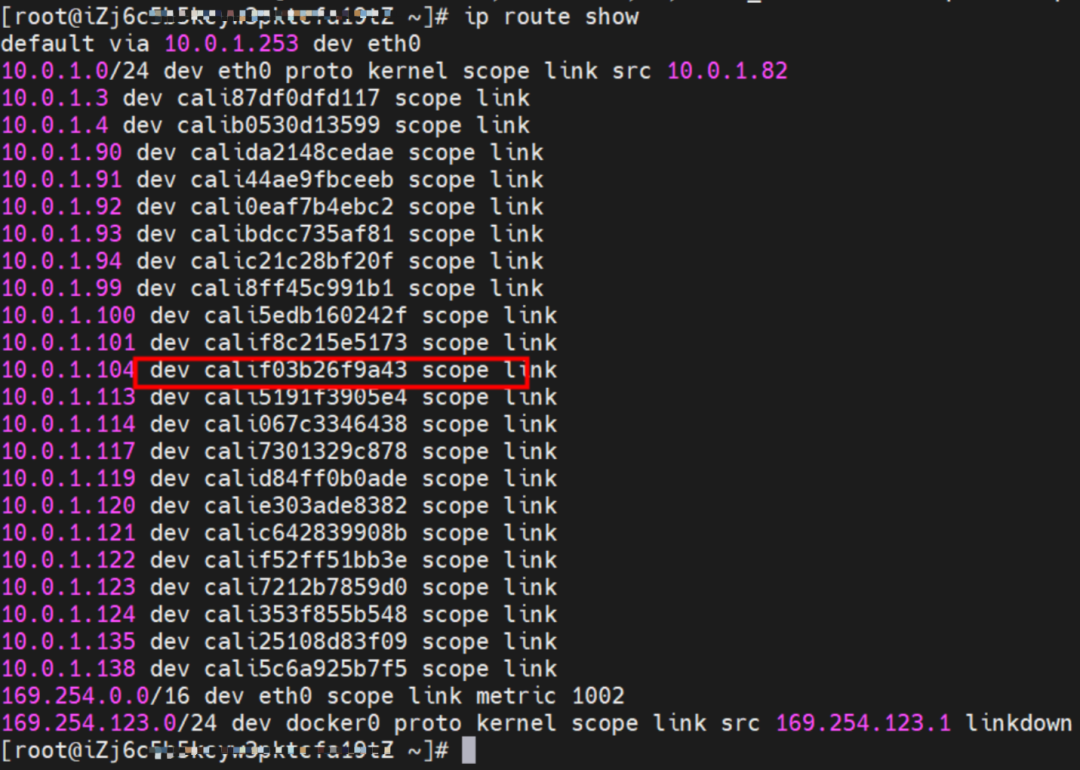

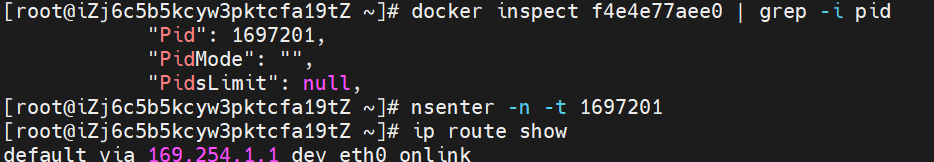

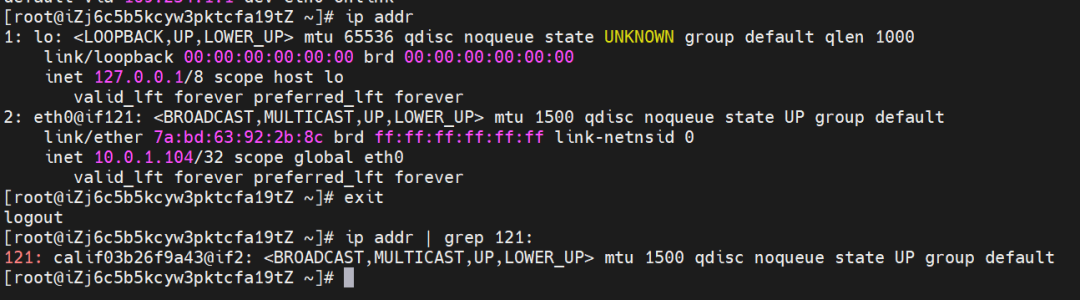

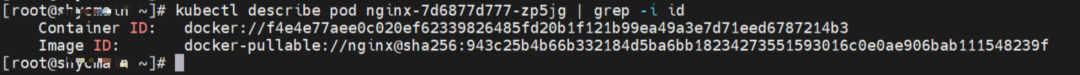

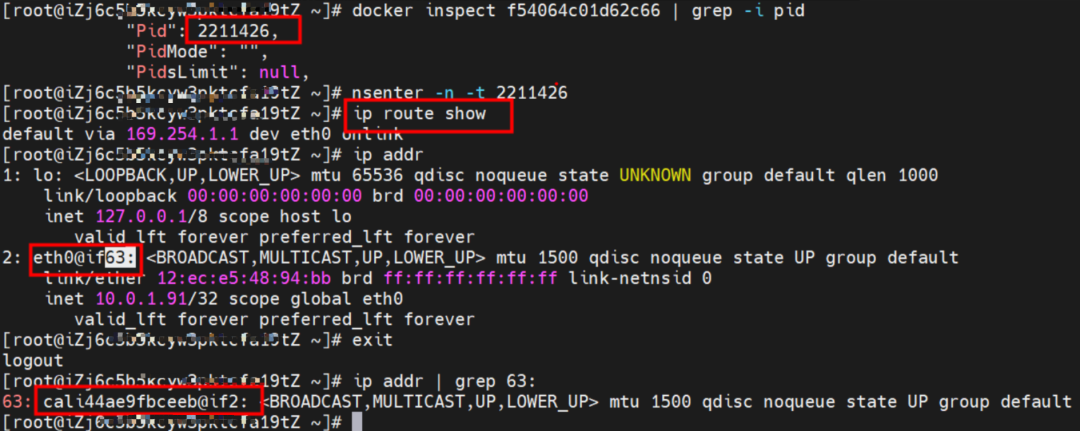

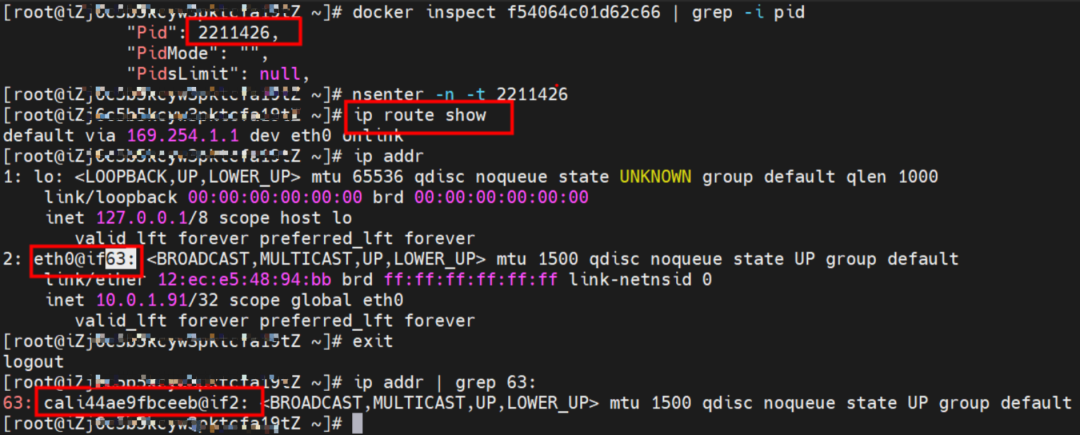

As shown in the figure, running ip addr in the container's network namespace shows eth0@if63. The index 63 identifies the peer of the container's veth pair in the ECS OS. In the ECS OS, run ip addr | grep 63: to find the virtual interface cali44ae9fbceeb, which is the host-side end of the veth pair.
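The same lookup can be scripted. The following is a minimal sketch that extracts the peer index from a name such as eth0@if63 and matches it against the interface index at the start of each line of ip addr output. The helper names are hypothetical, and HOST_SAMPLE is an abbreviated, illustrative sample rather than captured output (the @if3 suffix in particular is made up).

```python
import re


def peer_ifindex(container_ifname: str) -> int:
    """Extract N from an interface name such as 'eth0@if63'."""
    m = re.fullmatch(r"[^@\s]+@if(\d+)", container_ifname)
    if m is None:
        raise ValueError(f"no peer index in {container_ifname!r}")
    return int(m.group(1))


def host_interface(ip_addr_output: str, ifindex: int):
    """Return the host interface whose index equals ifindex, or None."""
    for line in ip_addr_output.splitlines():
        # Interface header lines start with '<index>: <name>'.
        m = re.match(r"(\d+):\s+([^:@\s]+)", line)
        if m and int(m.group(1)) == ifindex:
            return m.group(2)
    return None


# Illustrative, abbreviated sample of host-side 'ip addr' output.
HOST_SAMPLE = """\
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
63: cali44ae9fbceeb@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
"""

print(host_interface(HOST_SAMPLE, peer_ifindex("eth0@if63")))
```

Matching on the exact index avoids the false positives a plain grep 63: can produce, for example an interface with index 163.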

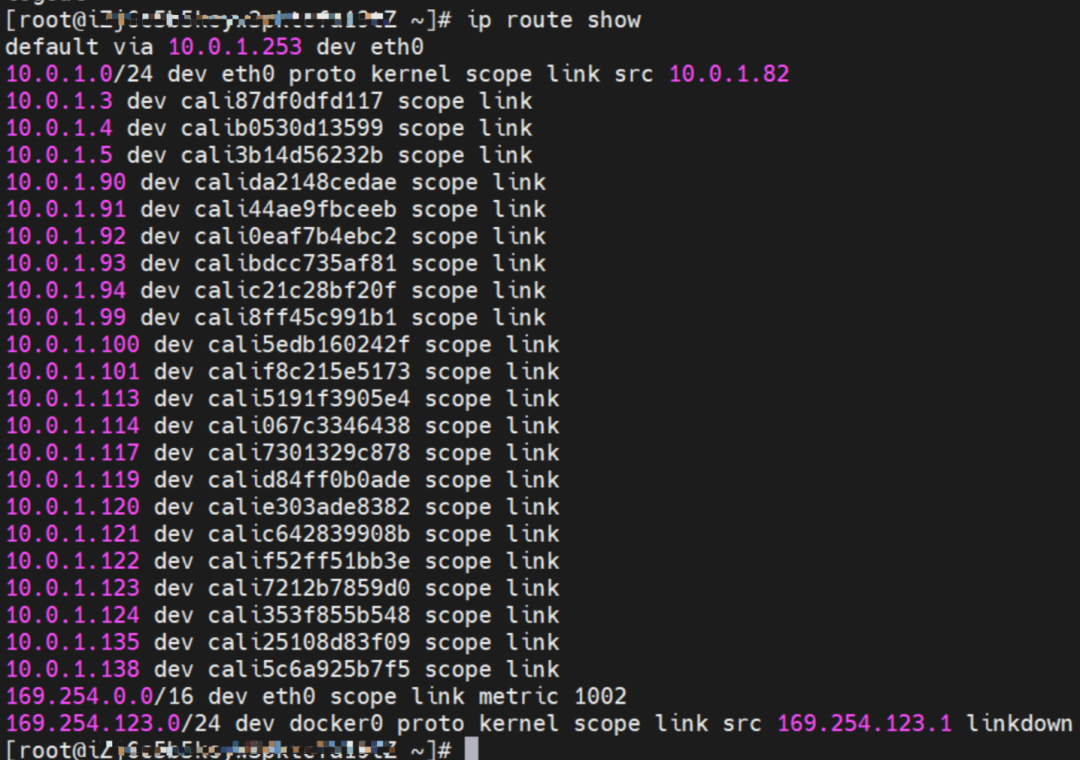

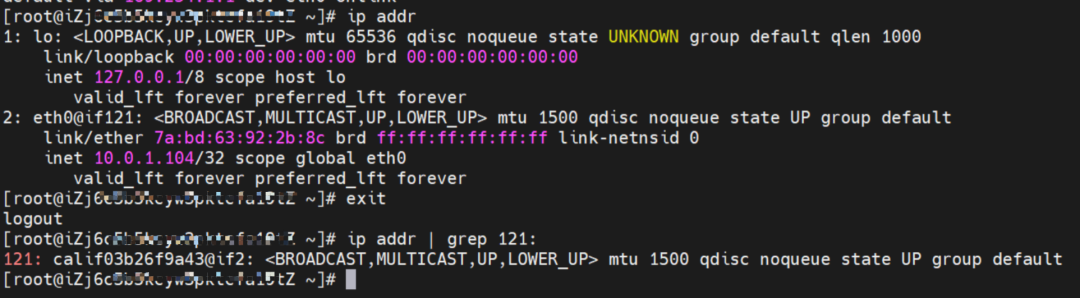

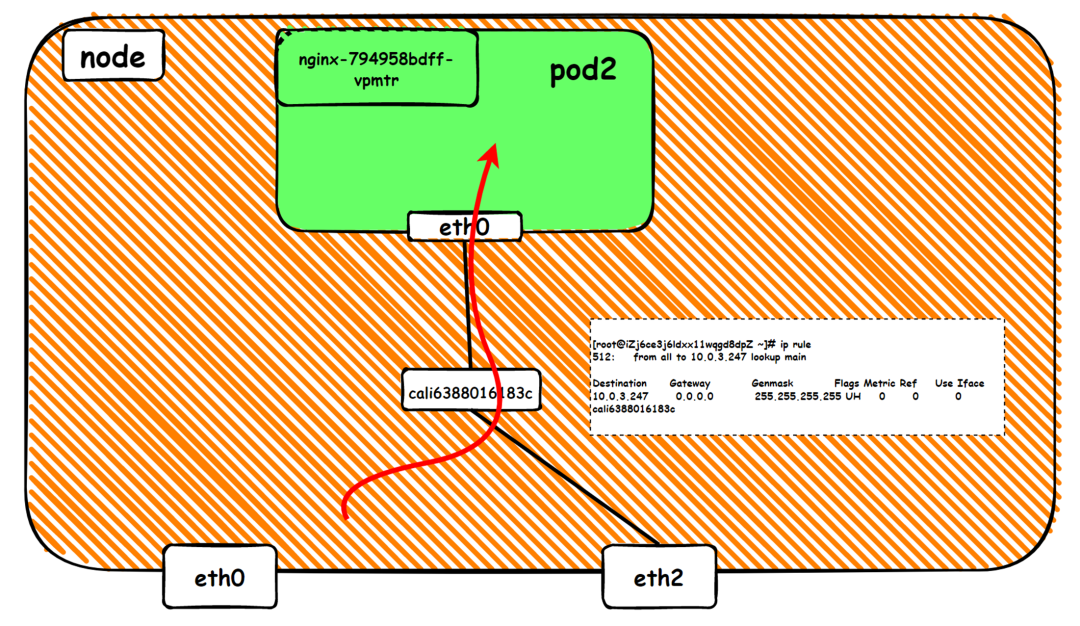

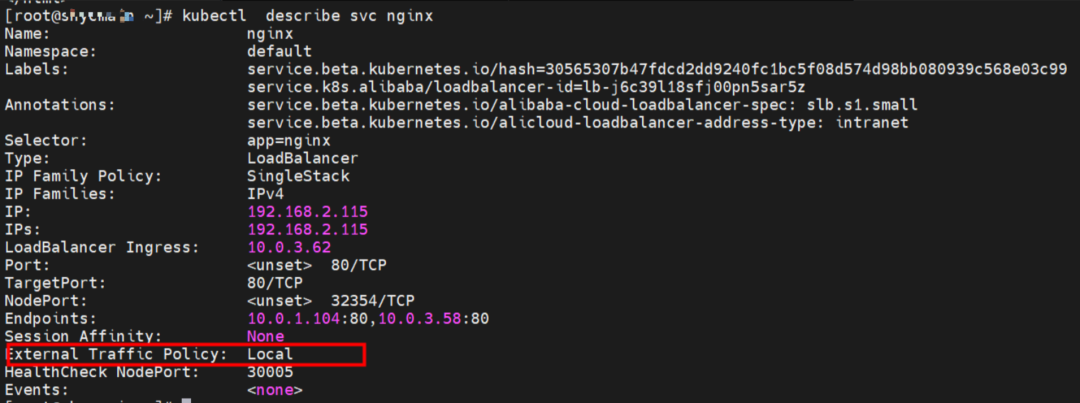

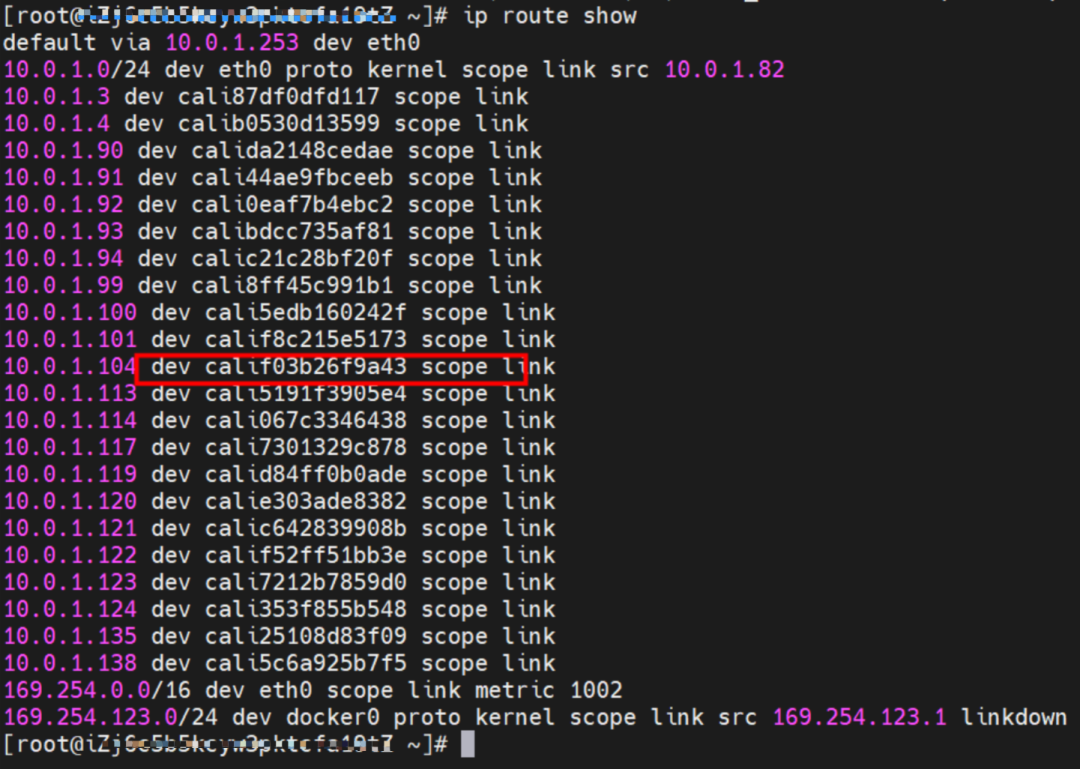

How does the ECS OS determine which container should receive a given packet? The Linux routing table in the OS shows that all traffic destined for a pod IP is forwarded to the cali virtual interface that corresponds to that pod. At this point, the ingress and egress links between the ECS OS and the pod's network namespace are fully configured.
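The route selection described here is a longest-prefix match. The sketch below models only the main routing table, using pod IPs and cali interface names that appear later in this article. The /32 prefix lengths and the default route via eth0 are assumptions for illustration, and policy-based routing rules are not modeled.

```python
import ipaddress

# Hypothetical main routing table of the ECS OS (prefix, output device).
ROUTES = [
    ("0.0.0.0/0", "eth0"),
    ("10.0.1.104/32", "calif03b26f9a43"),
    ("10.0.1.91/32", "cali44ae9fbceeb"),
]


def lookup(dst: str, routes=ROUTES) -> str:
    """Return the device of the most specific route that contains dst."""
    addr = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(prefix), dev)
        for prefix, dev in routes
        if addr in ipaddress.ip_network(prefix)
    ]
    if not matches:
        raise LookupError(f"no route to {dst}")
    # Longest prefix wins, as in the kernel's route lookup.
    _, dev = max(matches, key=lambda m: m[0].prefixlen)
    return dev


print(lookup("10.0.1.104"))
print(lookup("10.0.3.58"))
```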

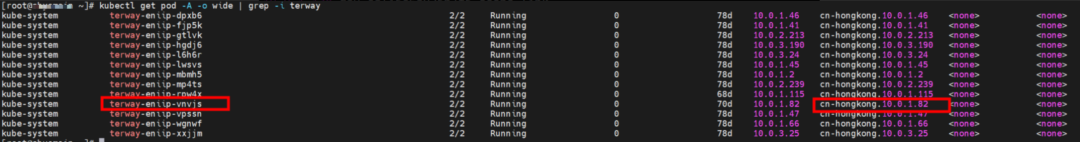

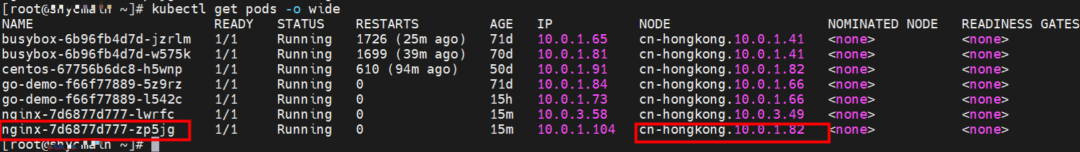

With veth pairs, multiple pods share one ENI, which increases the pod deployment density on an ECS instance. How do we know which ENI a pod is assigned to? Terway is deployed as a DaemonSet, so there is one Terway pod on every node. You can run the following command to view the Terway pod on each node. Then run the terway-cli show factory command to view the number of secondary ENIs on the node, together with the MAC address and the IP addresses of each ENI.
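Once the ENI inventory of a node is known, finding the ENI that owns a pod IP is a simple reverse lookup. The sketch below assumes the inventory has already been transcribed into a dictionary by hand; it does not parse terway-cli output, and the MAC addresses and the grouping of IP addresses are made up for illustration.

```python
from typing import Dict, List, Optional

# Hypothetical inventory: ENI MAC address -> auxiliary IPs on that ENI.
INVENTORY: Dict[str, List[str]] = {
    "00:16:3e:00:00:01": ["10.0.1.104", "10.0.1.91"],
    "00:16:3e:00:00:02": ["10.0.1.120"],
}


def eni_for_ip(inventory: Dict[str, List[str]], pod_ip: str) -> Optional[str]:
    """Return the MAC of the ENI that holds pod_ip, or None if unknown."""
    for mac, ips in inventory.items():
        if pod_ip in ips:
            return mac
    return None


print(eni_for_ip(INVENTORY, "10.0.1.91"))
```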

Therefore, the Terway ENIIP mode can be summarized as:

Based on the characteristics of container networks, network links in Terway ENIIP mode fall into two major SOP scenarios: access by Pod IP and access by SVC. These can be further subdivided into seven smaller SOP scenarios.

Under the Terway ENIIP architecture, the different data link access scenarios are summarized into the following seven categories.

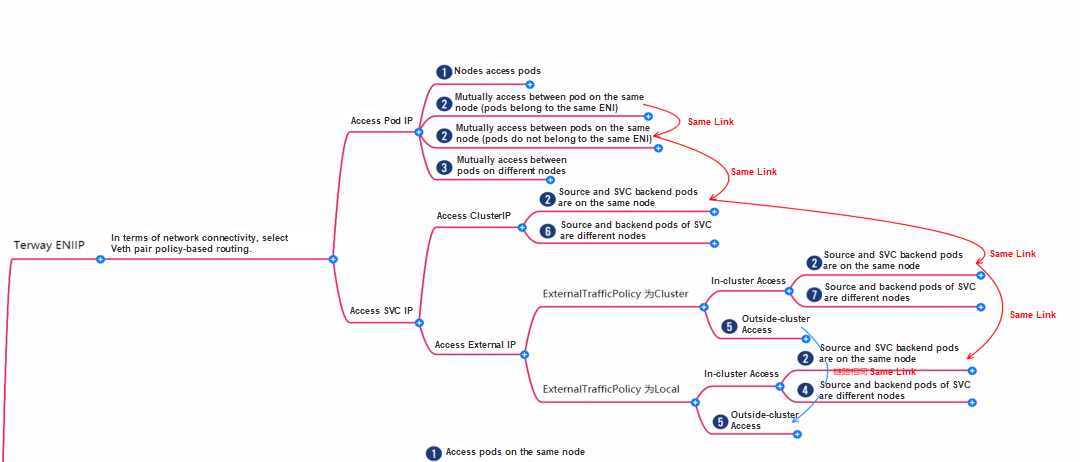

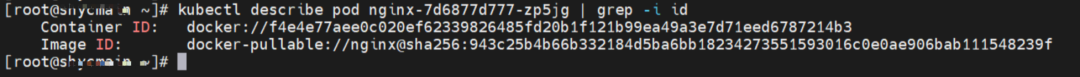

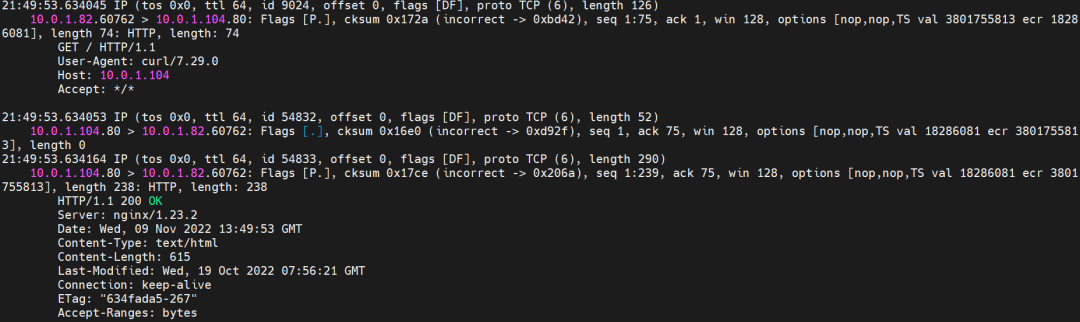

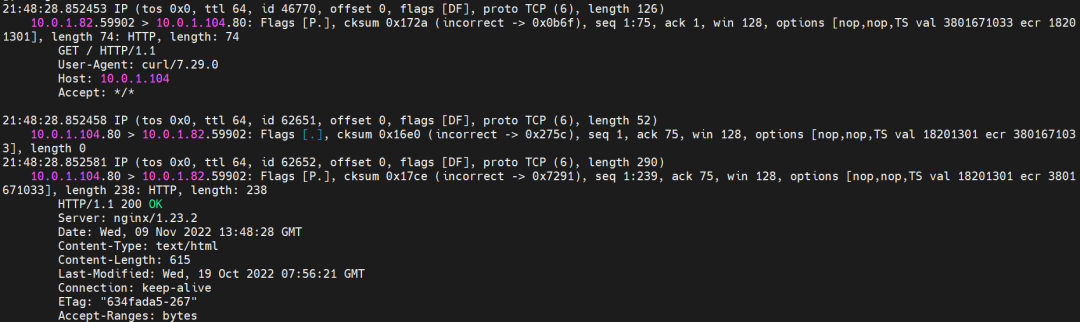

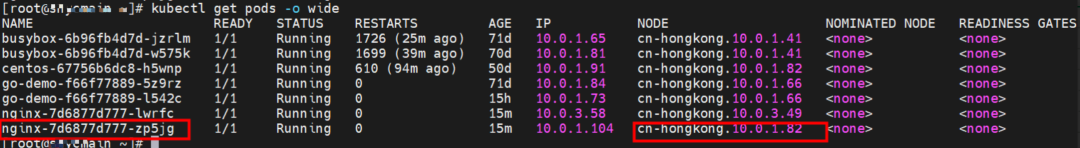

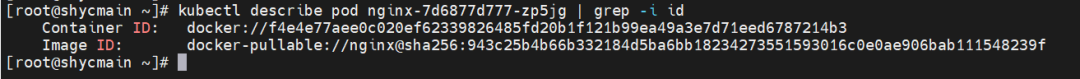

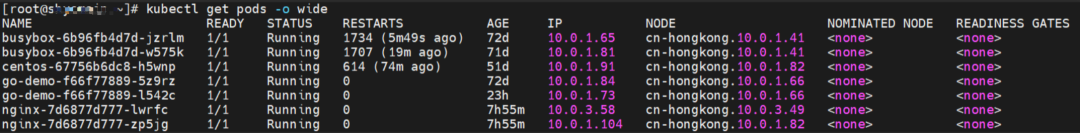

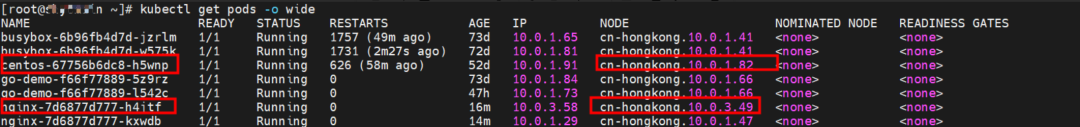

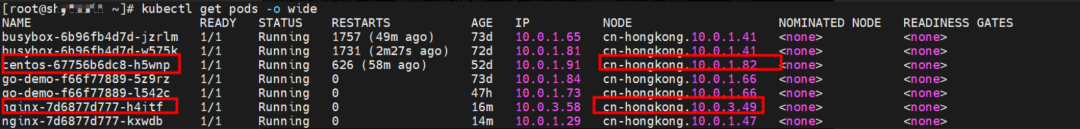

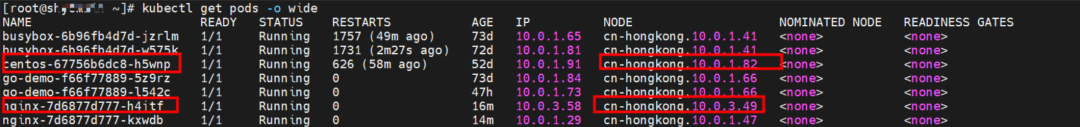

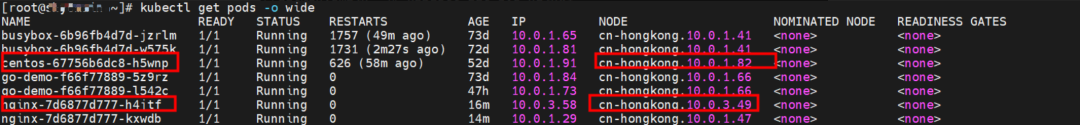

The pod nginx-7d6877d777-zp5jg (IP 10.0.1.104) runs on the cn-hongkong.10.0.1.82 node.

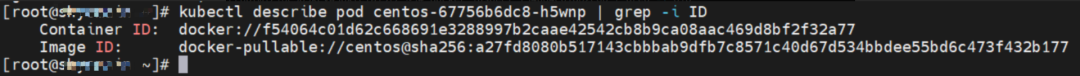

The nginx-7d6877d777-zp5jg IP address is 10.0.1.104, the PID of the container on the host is 1094736, and the container network namespace has a default route pointing to container eth0.

The peer of this container's eth0 in the ECS OS is the veth interface calif03b26f9a43.

In the ECS OS, there is a route to each pod IP whose next hop is the pod's calixxxxx interface. As described in the preceding section, the calixxxxx interface is the host-side end of the veth pair whose other end is eth0 in the pod. Therefore, all traffic leaving the pod, including traffic to the SVC CIDR, follows the pod's default route through eth0 and arrives at the calixxxxx interface in the ECS OS.

Therefore, the main function of the calixx network interface controller here is to:

The destination can be accessed.

Packets can be captured on eth0 in the network namespace of nginx-7d6877d777-zp5jg.

Packets can be captured on calif03b26f9a43, the host-side veth interface of nginx-7d6877d777-zp5jg.

Data Link Forwarding Diagram

The pod nginx-7d6877d777-zp5jg (IP 10.0.1.104) runs on the cn-hongkong.10.0.1.82 node.

The pod centos-67756b6dc8-h5wnp (IP 10.0.1.91) runs on the cn-hongkong.10.0.1.82 node.

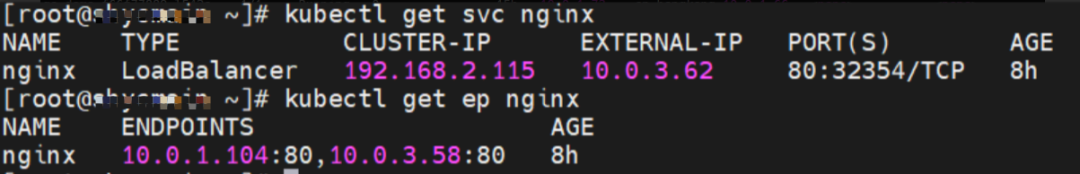

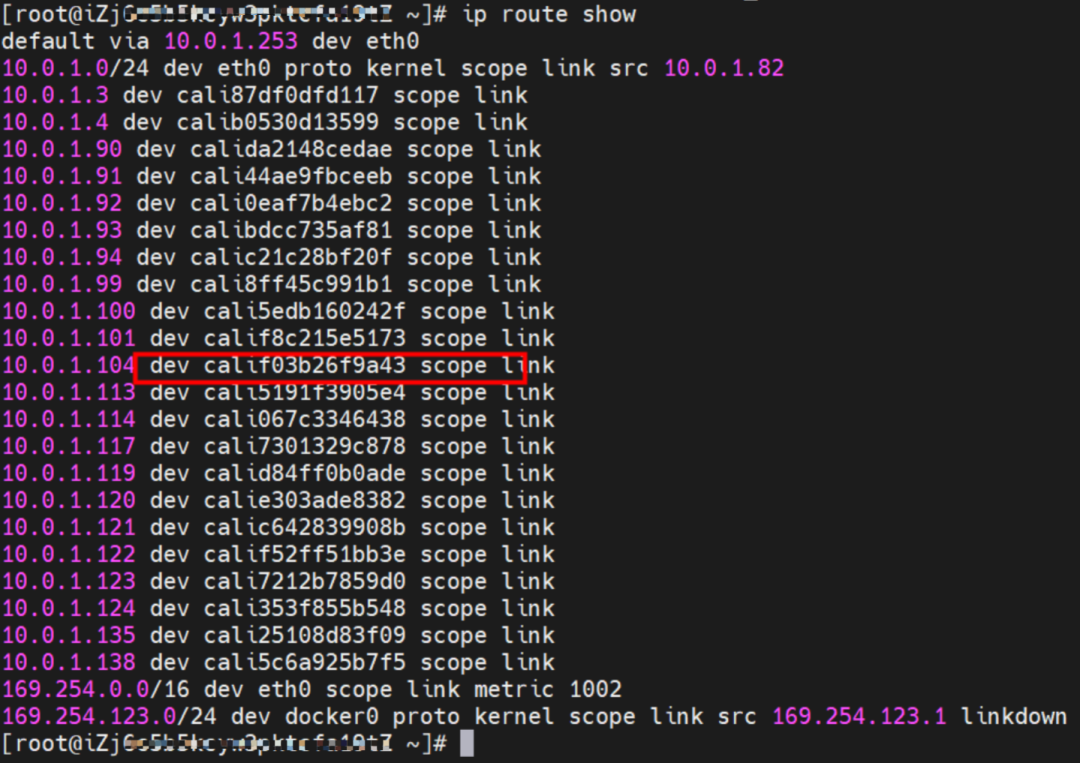

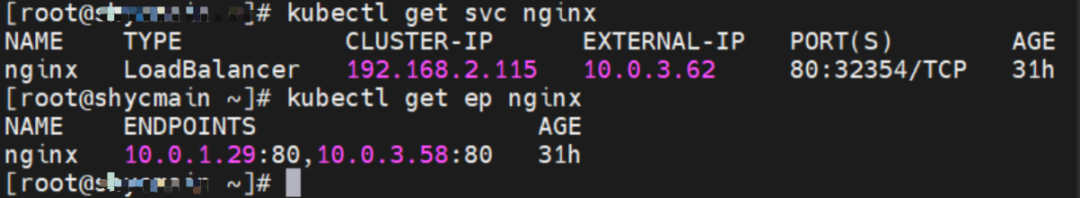

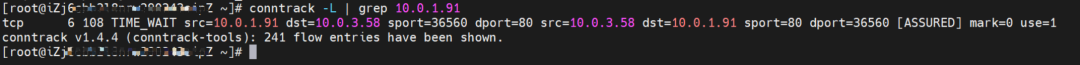

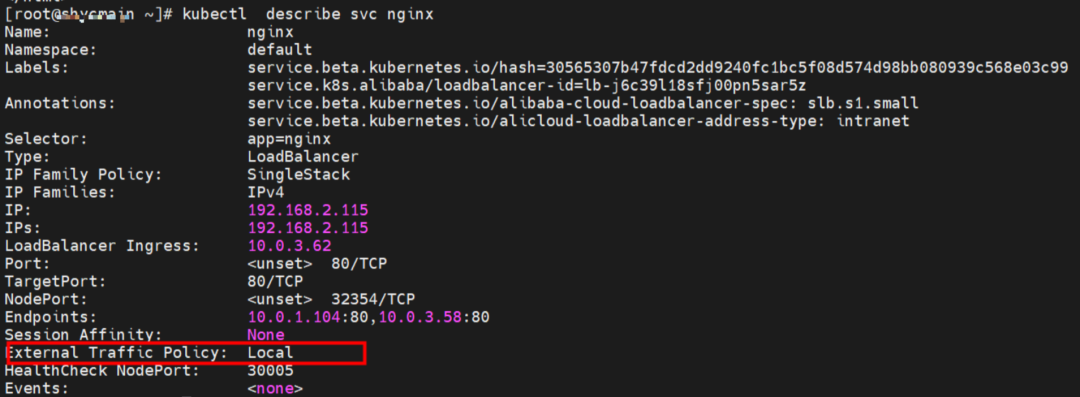

Service is nginx, ClusterIP is 192.168.2.115, and ExternalIP is 10.0.3.62.

The nginx-7d6877d777-zp5jg IP address is 10.0.1.104, the PID of the container on the host is 1094736, and the container network namespace has a default route pointing to container eth0.

The peer of this container's eth0 in the ECS OS is the veth interface calif03b26f9a43.

Using the preceding method, you can find that cali44ae9fbceeb is the host-side veth interface of centos-67756b6dc8-h5wnp. The pod has only the default route in its network namespace.

In the ECS OS, there is a route to each pod IP whose next hop is the pod's calixxxxx interface. As described in the preceding section, the calixxxxx interface is the host-side end of the veth pair whose other end is eth0 in the pod. Therefore, all traffic leaving the pod, including traffic to the SVC CIDR, follows the pod's default route through eth0 and arrives at the calixxxxx interface in the ECS OS.

Therefore, the main function of the calixx network interface controller here is to:

This indicates that related routing is performed at the ECS OS level, and the calixx network interface controller of pods serves as a bridge.

When the SVC IP (ClusterIP or ExternalIP) is accessed from the same node, check the IPVS forwarding rules for the SVC on that node.

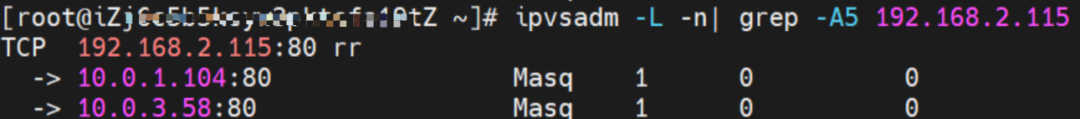

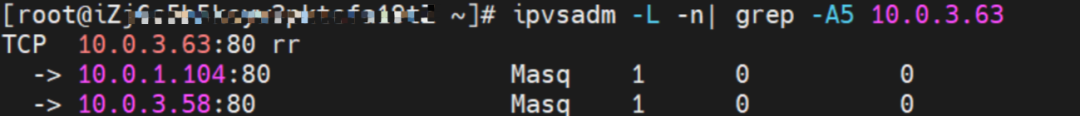

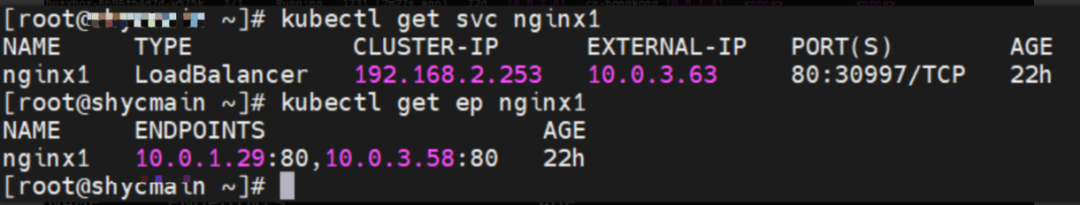

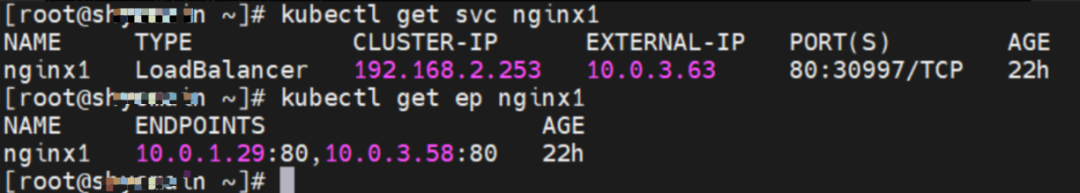

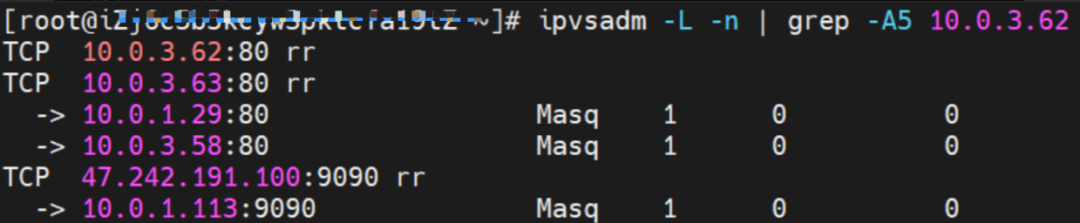

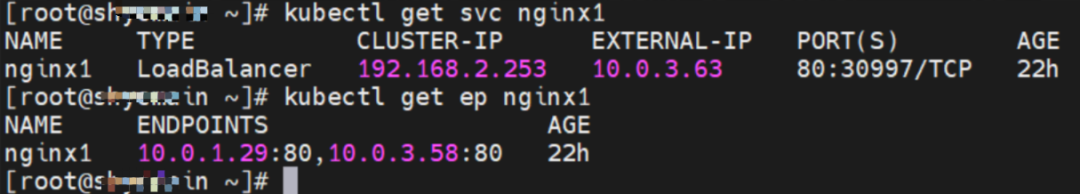

The ClusterIP of the SVC nginx is 192.168.2.115, the ExternalIP is 10.0.3.62, and the backends are 10.0.1.104 and 10.0.3.58.

cn-hongkong.10.0.1.82

For the ClusterIP of SVC, you can see that both the backend pods of SVC will be added to the forwarding rule of IPVS.

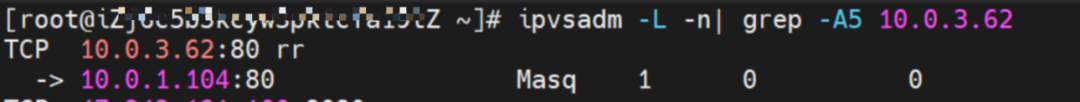

For the ExternalIP of the SVC, only the backend pod on this node, 10.0.1.104, is added to the IPVS forwarding rules.

For a LoadBalancer SVC whose ExternalTrafficPolicy is Local, all SVC backend pods are added to the node's IPVS forwarding rules for the ClusterIP. For the ExternalIP, only the SVC backend pods on that node are added. If the node has no SVC backend pod, pods on the node cannot access the SVC ExternalIP.

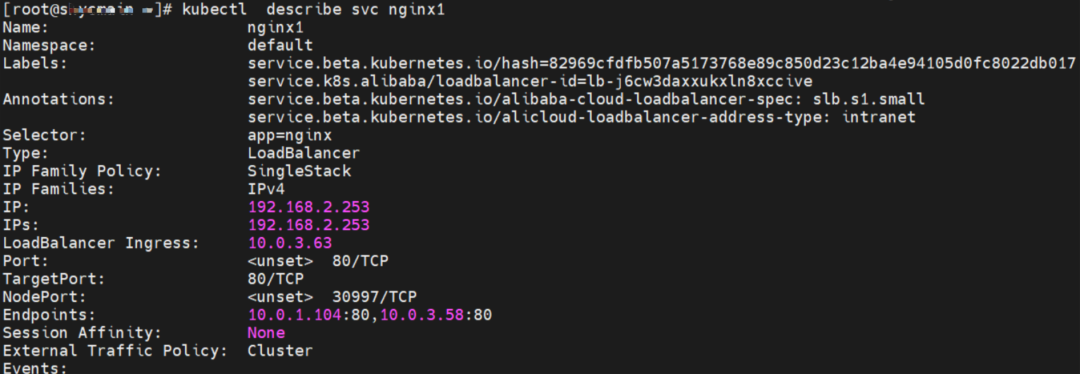

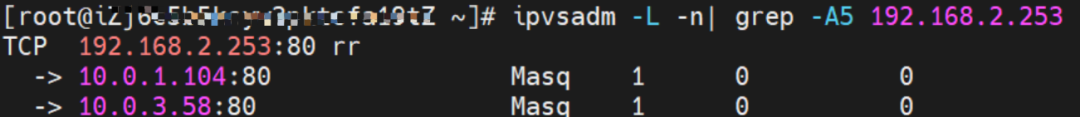

The ClusterIP of the SVC nginx1 is 192.168.2.253, the ExternalIP is 10.0.3.63, and the backends are 10.0.1.104 and 10.0.3.58.

cn-hongkong.10.0.1.82

For the ClusterIP of SVC, you can see that both the backend pods of SVC will be added to the forwarding rule of IPVS.

For ExternalIP of SVC, you can see that both backend pods of SVC will be added to the forwarding rule of IPVS.

In the SVC mode of LoadBalancer, if the ExternalTrafficPolicy is Cluster, all SVC backend pods are added to the IPVS forwarding rule of the node for ClusterIP or ExternalIP.
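The two policies can be condensed into one selection rule. The sketch below is a simplified model of the behavior observed in this article, not kube-proxy's actual implementation; the function name is hypothetical, and the endpoint-to-node mapping uses the example backends above.

```python
from typing import Dict, List

# Backend pod IP -> node that hosts it, taken from the example above.
ENDPOINTS: Dict[str, str] = {
    "10.0.1.104": "cn-hongkong.10.0.1.82",
    "10.0.3.58": "cn-hongkong.10.0.3.49",
}


def ipvs_backends(vip_kind: str, policy: str, node: str,
                  endpoints: Dict[str, str] = ENDPOINTS) -> List[str]:
    """Backends a node adds to its IPVS rules for one virtual IP.

    vip_kind: "ClusterIP" or "ExternalIP"
    policy:   "Cluster" or "Local" (the ExternalTrafficPolicy)
    """
    if vip_kind not in ("ClusterIP", "ExternalIP"):
        raise ValueError(f"unknown vip_kind: {vip_kind}")
    if policy not in ("Cluster", "Local"):
        raise ValueError(f"unknown policy: {policy}")
    if vip_kind == "ExternalIP" and policy == "Local":
        # Only backends that run on this node are eligible.
        return sorted(ip for ip, n in endpoints.items() if n == node)
    # ClusterIP (either policy) and ExternalIP in Cluster mode: all backends.
    return sorted(endpoints)


print(ipvs_backends("ExternalIP", "Local", "cn-hongkong.10.0.1.82"))
print(ipvs_backends("ExternalIP", "Cluster", "cn-hongkong.10.0.1.82"))
```

An empty result for ExternalIP in Local mode corresponds to the case where the node has no forwarding rule, so the ExternalIP is unreachable from pods on that node.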

The destination can be accessed.

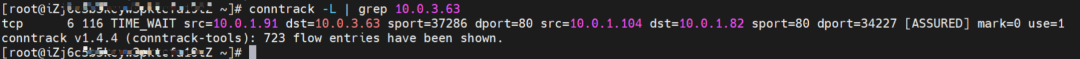

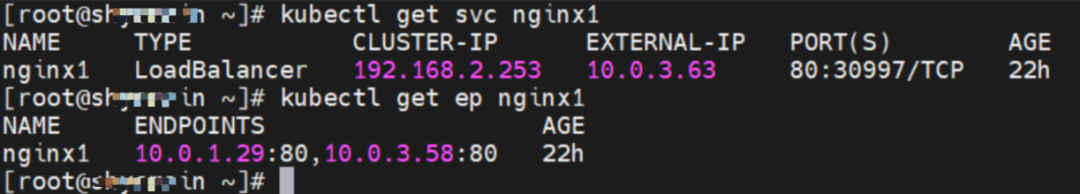

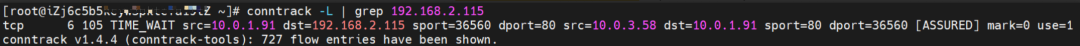

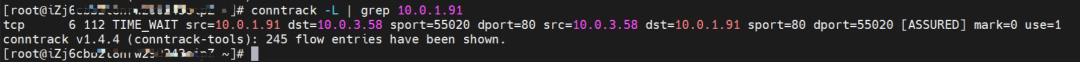

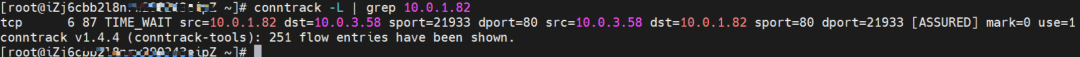

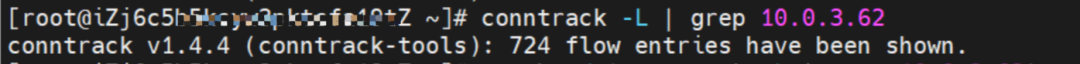

Conntrack Table Information

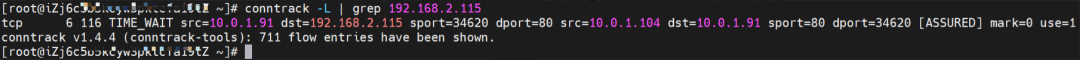

The ClusterIP of the SVC nginx is 192.168.2.115, the ExternalIP is 10.0.3.62, and the backends are 10.0.1.104 and 10.0.3.58.

1. If the ClusterIP of the SVC is accessed, the conntrack entry shows that src is the source pod 10.0.1.91, dst is the SVC ClusterIP 192.168.2.115, and dport is the SVC port. The reply is expected from 10.0.1.104 to 10.0.1.91.

2. If the ExternalIP of the SVC is accessed, the conntrack entry shows that src is the source pod 10.0.1.91, dst is the SVC ExternalIP 10.0.3.62, and dport is the SVC port. The reply is expected from 10.0.1.104 to 10.0.1.91.

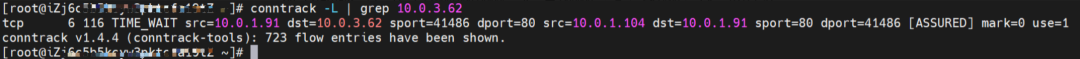

The ClusterIP of the SVC nginx1 is 192.168.2.253, the ExternalIP is 10.0.3.63, and the backends are 10.0.1.104 and 10.0.3.58.

1. If the ClusterIP of the SVC is accessed, the conntrack entry shows that src is the source pod 10.0.1.91, dst is the SVC ClusterIP 192.168.2.253, and dport is the SVC port. The reply is expected from 10.0.1.104 to 10.0.1.91.

2. If the ExternalIP of the SVC is accessed, the conntrack entry shows that src is the source pod 10.0.1.91, dst is the SVC ExternalIP 10.0.3.63, and dport is the SVC port. The reply is expected from the node ECS IP 10.0.1.82 to 10.0.1.91.

In summary, we can see that src has changed many times. So, the real client IP may be lost in Cluster mode.

Data Link Forwarding Diagram

The pod centos-67756b6dc8-h5wnp (IP 10.0.1.91) runs on the cn-hongkong.10.0.1.82 node.

The pod nginx-7d6877d777-lwrfc (IP 10.0.3.58) runs on the cn-hongkong.10.0.3.49 node.

The centos-67756b6dc8-h5wnp IP address is 10.0.1.91, the PID of the container on the host is 2211426, and the container network namespace has a default route pointing to container eth0.

Using the preceding method, you can find that cali44ae9fbceeb is the host-side veth interface of centos-67756b6dc8-h5wnp. The pod has only the default route in its network namespace.

In the ECS OS, there is a route to each pod IP whose next hop is the pod's calixxxxx interface. As described in the preceding section, the calixxxxx interface is the host-side end of the veth pair whose other end is eth0 in the pod. Therefore, all traffic leaving the pod, including traffic to the SVC CIDR, follows the pod's default route through eth0 and arrives at the calixxxxx interface in the ECS OS.

Therefore, the main function of the calixx network interface controller here is to:

The destination can be accessed.

Data Link Forwarding Diagram

The pod nginx-7d6877d777-h4jtf (IP 10.0.3.58) runs on the cn-hongkong.10.0.3.49 node.

The pod centos-67756b6dc8-h5wnp (IP 10.0.1.91) runs on the cn-hongkong.10.0.1.82 node.

Service1 is nginx, ClusterIP is 192.168.2.115, and ExternalIP is 10.0.3.62.

Service2 is nginx1, ClusterIP is 192.168.2.253, and ExternalIP is 10.0.3.63.

The kernel routing part has been described in detail in the summary of Scenario 2 and Scenario 3.

IPVS rules on source ECS.

According to the IPVS rules on the source ECS summarized in Scenario 2, regardless of the SVC mode, all SVC backend pods are added to the node's IPVS forwarding rules for the ClusterIP.

The destination can be accessed.

Conntrack Table Information

The ClusterIP of the SVC nginx is 192.168.2.115, the ExternalIP is 10.0.3.62, and the backends are 10.0.1.104 and 10.0.3.58.

cn-hongkong.10.0.1.82

On the source ECS, src is the source pod 10.0.1.91, dst is the SVC ClusterIP 192.168.2.115, and dport is the SVC port. The reply is expected from 10.0.3.58 to 10.0.1.91.

cn-hongkong.10.0.3.49

On the destination ECS, src is the source pod 10.0.1.91, dst is the pod IP 10.0.3.58, and dport is the pod port. The reply is expected from this pod to 10.0.1.91.

The ClusterIP of the SVC nginx1 is 192.168.2.253, the ExternalIP is 10.0.3.63, and the backends are 10.0.1.104 and 10.0.3.58.

cn-hongkong.10.0.1.82

On the source ECS, src is the source pod 10.0.1.91, dst is the SVC ClusterIP 192.168.2.253, and dport is the SVC port. The reply is expected from 10.0.3.58 to 10.0.1.91.

cn-hongkong.10.0.3.49

On the destination ECS, src is the source pod 10.0.1.91, dst is the pod IP 10.0.3.58, and dport is the pod port. The reply is expected from this pod to 10.0.1.91.

For the ClusterIP, the source ECS adds all SVC backend pods to the node's IPVS forwarding rules. The destination ECS sees no SVC ClusterIP information. It sees only the IP of the source pod, so the reply packet is returned to the secondary ENI to which the source pod belongs.

Data Link Forwarding Diagram

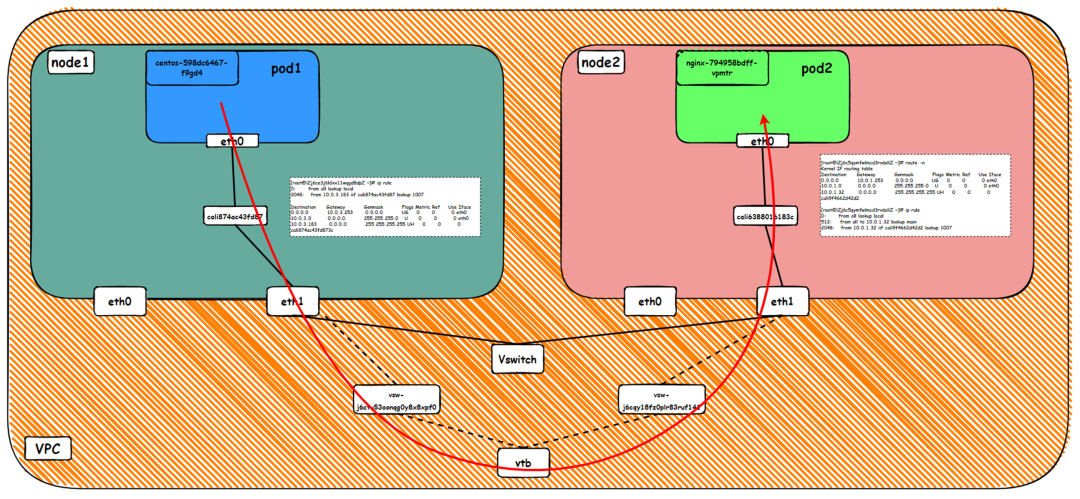

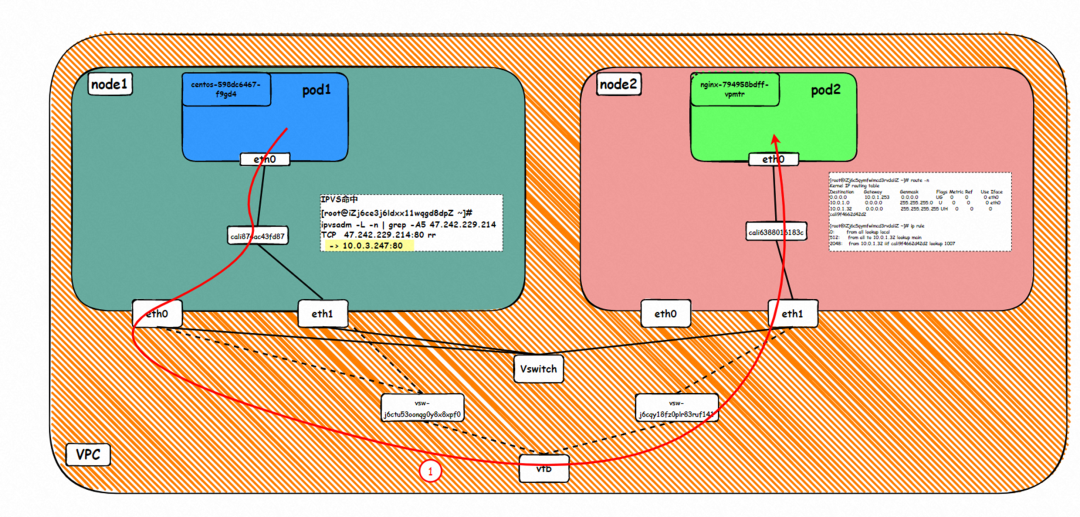

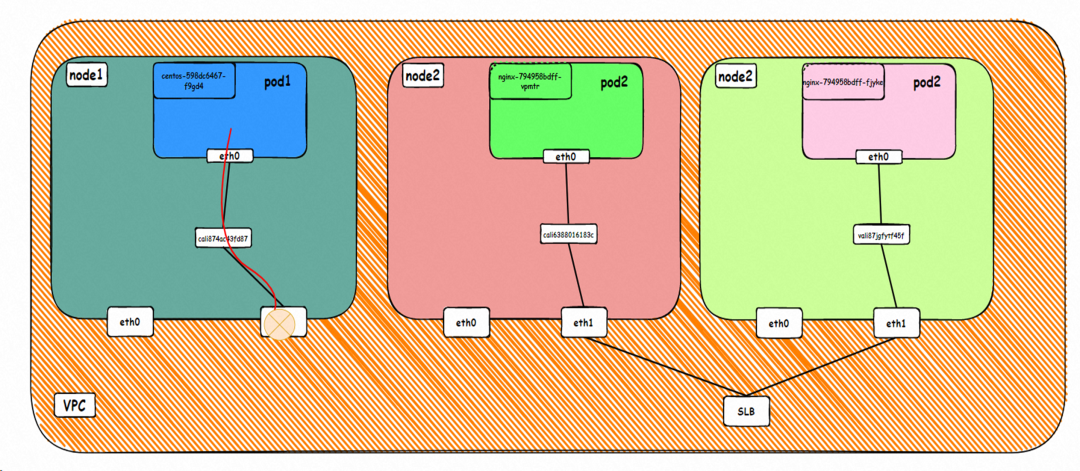

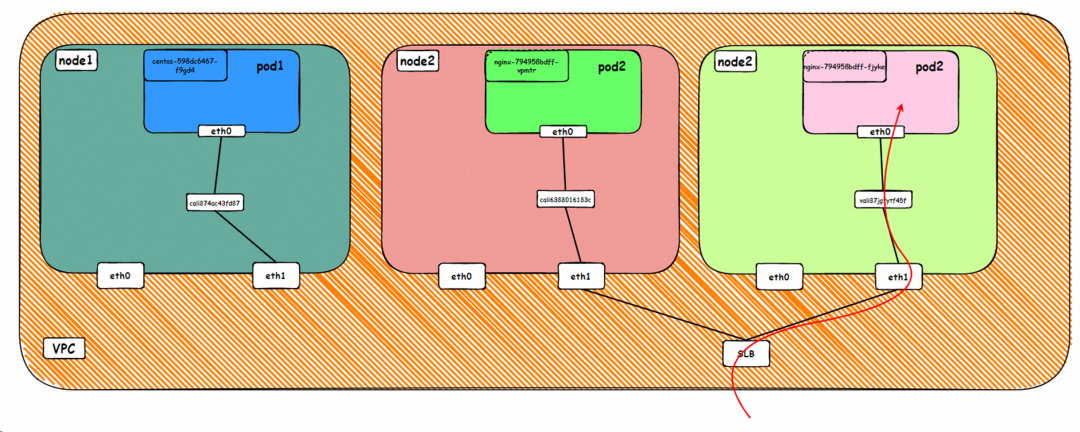

ECS1 Pod1 eth0 → ECS1 Pod1 calixxxxx → ECS1 primary network interface controller eth0 → vpc route rule (if any) → ECS2 secondary network interface controller ethx → ECS2 Pod2 calixxxxx → ECS2 Pod2 eth0

ECS2 Pod2 eth0 → ECS2 Pod2 calixxxxx → ECS2 secondary network interface controller ethx → vpc route rule (if any) → ECS1 secondary network interface controller eth1 → ECS1 Pod1 calixxxxx → ECS1 Pod1 eth0

The pod nginx-7d6877d777-h4jtf (IP 10.0.3.58) runs on the cn-hongkong.10.0.3.49 node.

The pod centos-67756b6dc8-h5wnp (IP 10.0.1.91) runs on the cn-hongkong.10.0.1.82 node.

Service2 is nginx1, ClusterIP is 192.168.2.253, and ExternalIP is 10.0.3.63.

The kernel routing part has been described in detail in the summary of Scenario 2 and Scenario 3.

IPVS rules on source ECS.

According to the IPVS rules on the source ECS summarized in Scenario 2, when the ExternalTrafficPolicy is Cluster, all SVC backend pods are added to the node's IPVS forwarding rules for the ExternalIP.

The destination can be accessed.

Conntrack Table Information

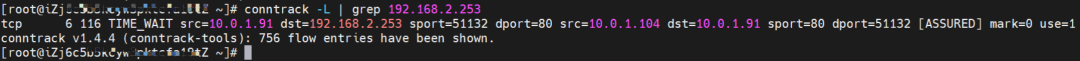

The ClusterIP of the SVC nginx1 is 192.168.2.253, the ExternalIP is 10.0.3.63, and the backends are 10.0.1.104 and 10.0.3.58.

cn-hongkong.10.0.1.82

On the source ECS, src is the source pod 10.0.1.91, dst is the SVC ExternalIP 10.0.3.63, and dport is the SVC port. The reply is expected from 10.0.3.58 to 10.0.1.82, the address of the source ECS.

cn-hongkong.10.0.3.49

On the destination ECS, src is 10.0.1.82, the IP address of the ECS where the source pod is located, dst is the pod IP 10.0.3.58, and dport is the pod port. The reply is expected from this pod to 10.0.1.82, the address of the source ECS.

When the ExternalTrafficPolicy is Cluster, the source ECS adds all SVC backend pods to the node's IPVS forwarding rules for the ExternalIP. The destination ECS sees no SVC ExternalIP information. It sees only the IP of the ECS where the source pod is located, so the reply packet is returned to the primary network interface of that ECS. This differs from accessing the ClusterIP in Scenario 4.
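The difference between Scenario 4 and Scenario 5 comes down to where the reply is addressed. The sketch below encodes only what the conntrack entries in this article show for cross-node access: the reply goes to the source pod for ClusterIP and to the source ECS address for ExternalIP in Cluster mode. The names are hypothetical and this is not a general model of kube-proxy SNAT behavior.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str
    dst: str


def reply_tuple(client_pod: str, client_node: str, backend: str,
                vip_kind: str, policy: str) -> Flow:
    """Expected reply direction as seen in the conntrack entry."""
    # Observed here: only ExternalIP with the Cluster policy rewrites the
    # source address to the node IP before the packet leaves the source ECS.
    snat_to_node = vip_kind == "ExternalIP" and policy == "Cluster"
    return Flow(src=backend, dst=client_node if snat_to_node else client_pod)


# Scenario 4: ClusterIP, reply goes straight back to the source pod.
print(reply_tuple("10.0.1.91", "10.0.1.82", "10.0.3.58", "ClusterIP", "Cluster"))
# Scenario 5: ExternalIP in Cluster mode, reply goes to the source ECS.
print(reply_tuple("10.0.1.91", "10.0.1.82", "10.0.3.58", "ExternalIP", "Cluster"))
```

Because the backend only ever sees the node address in the second case, the real client IP is not visible to it.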

Data Link Forwarding Diagram

The pod nginx-7d6877d777-h4jtf (IP 10.0.3.58) runs on the cn-hongkong.10.0.3.49 node.

The pod centos-67756b6dc8-h5wnp (IP 10.0.1.91) runs on the cn-hongkong.10.0.1.82 node.

Service1 is nginx, ClusterIP is 192.168.2.115, and ExternalIP is 10.0.3.62.

The kernel routing part has been described in detail in the summary of Scenario 2 and Scenario 3.

IPVS rules on the source ECS.

The ClusterIP of the SVC nginx is 192.168.2.115, the ExternalIP is 10.0.3.62, and the backends are 10.0.1.104 and 10.0.3.58.

cn-hongkong.10.0.1.82

For the ExternalIP of the SVC, the node has no forwarding rules to any SVC backend.

According to the IPVS rules on the source ECS in Scenario 2, when the ExternalTrafficPolicy is Local, only the SVC backend pods on the current node are added to the node's IPVS forwarding rules for the ExternalIP. If the node has no SVC backend, there are no forwarding rules.

The destination cannot be accessed.

Conntrack Table Information

The ClusterIP of the SVC nginx is 192.168.2.115, the ExternalIP is 10.0.3.62, and the backends are 10.0.1.104 and 10.0.3.58.

cn-hongkong.10.0.1.82 has no matching conntrack entries.

Data Link Forwarding Diagram

The pod nginx-7d6877d777-h4jtf (IP 10.0.3.58) runs on the cn-hongkong.10.0.3.49 node.

The pod nginx-7d6877d777-kxwdb (IP 10.0.1.29) runs on the cn-hongkong.10.0.1.47 node.

Service1 is nginx, ClusterIP is 192.168.2.115, and ExternalIP is 10.0.3.62.

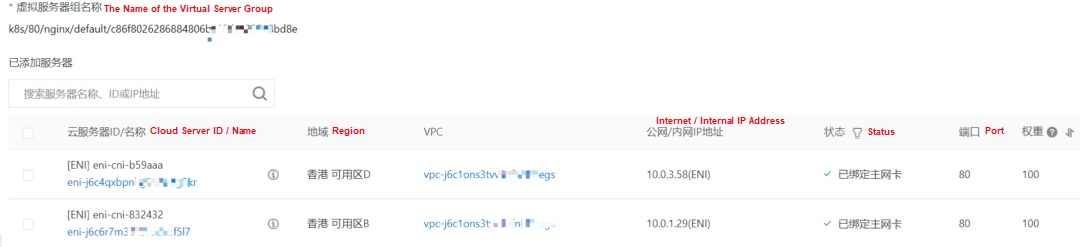

In the SLB console, the backend server group of the virtual server group lb-j6cw3daxxukxln8xccive consists of the ENIs eni-j6c4qxbpnkg5o7uog5kr and eni-j6c6r7m3849fodxdf5l7, which belong to the two backend nginx pods.

From outside the cluster, the backend virtual server group of the SLB consists of the two ENIs to which the SVC backend pods belong, and the internal IP addresses are the pod addresses.

The destination can be accessed.

Data Link Forwarding Diagram

This article focuses on ACK data link forwarding paths in different SOP scenarios in Terway ENIIP mode. Driven by customer demand for extreme performance, the data links in Terway ENIIP mode are divided into seven SOP scenarios. The forwarding links, technical implementation principles, and cloud product configurations of these seven scenarios are sorted out and summarized, which provides a preliminary guide for dealing with link jitter, choosing an optimal configuration, and understanding link principles under the Terway ENIIP architecture.

In Terway ENIIP mode, a veth pair connects the network namespaces of the host and the pod. The pod address comes from the auxiliary IP addresses of the Elastic Network Interface, and policy-based routing needs to be configured on the node to ensure that traffic from an auxiliary IP address passes through the Elastic Network Interface to which it belongs. In this way, multiple pods can share one ENI, which improves pod deployment density. However, the veth pair relies on the ECS kernel protocol stack for forwarding, so the performance of this architecture is not as good as that of ENI mode. To improve performance, ACK developed the Terway eBPF + IPVLAN architecture based on the eBPF and IPVLAN technologies of the kernel.
