By Yu Kai

Co-Author: Xieshi, Alibaba Cloud Container Service

This article is the fourth part of the series. It introduces the data plane forwarding links in Kubernetes Terway EBPF + IPVLAN mode. First, understanding how the data plane forwards traffic in different scenarios explains why customer access behaves and performs differently in each scenario, and helps customers further optimize their business architecture. Second, with an in-depth understanding of the forwarding links, customer O&M staff and Alibaba Cloud engineers can decide at which points on the link to deploy observation or capture packets manually when container network jitter occurs, and so narrow down the direction and cause of the problem.

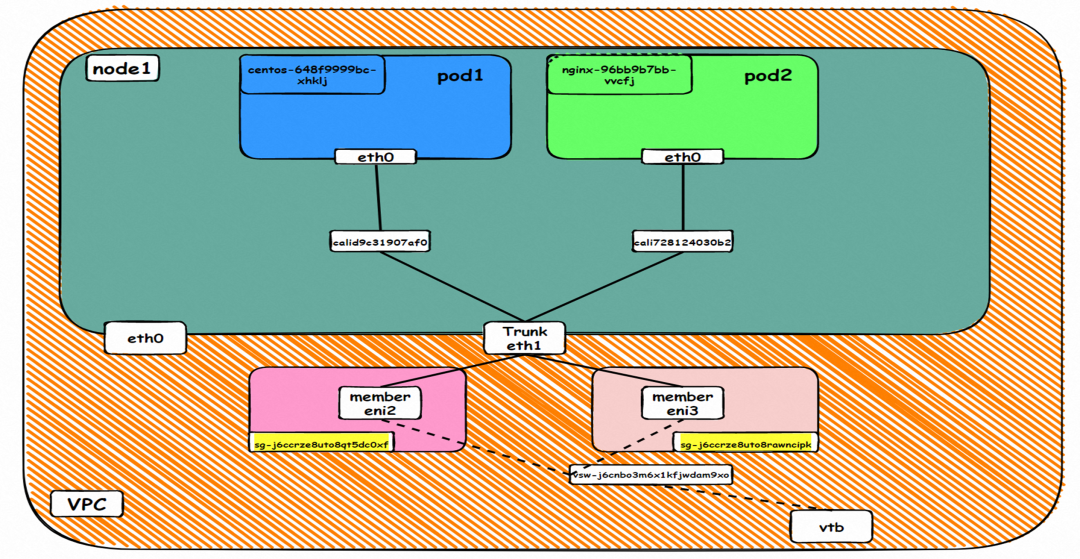

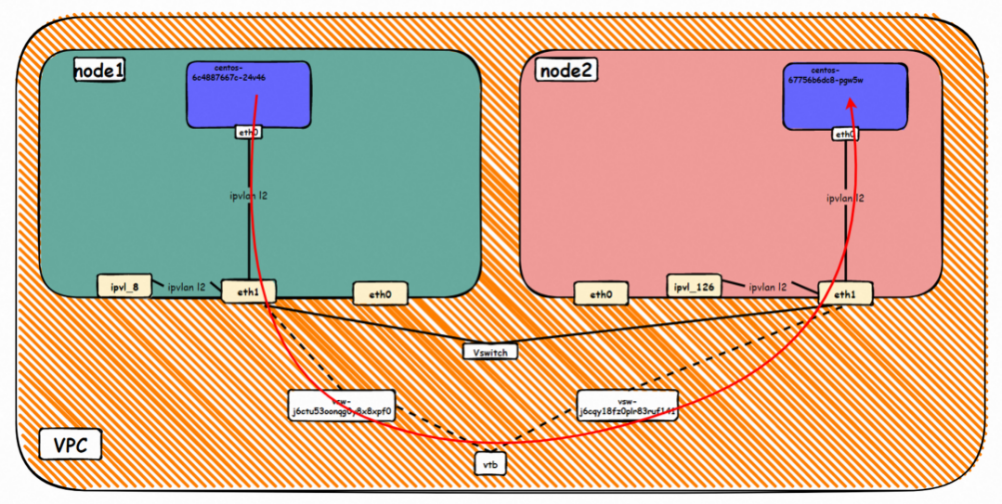

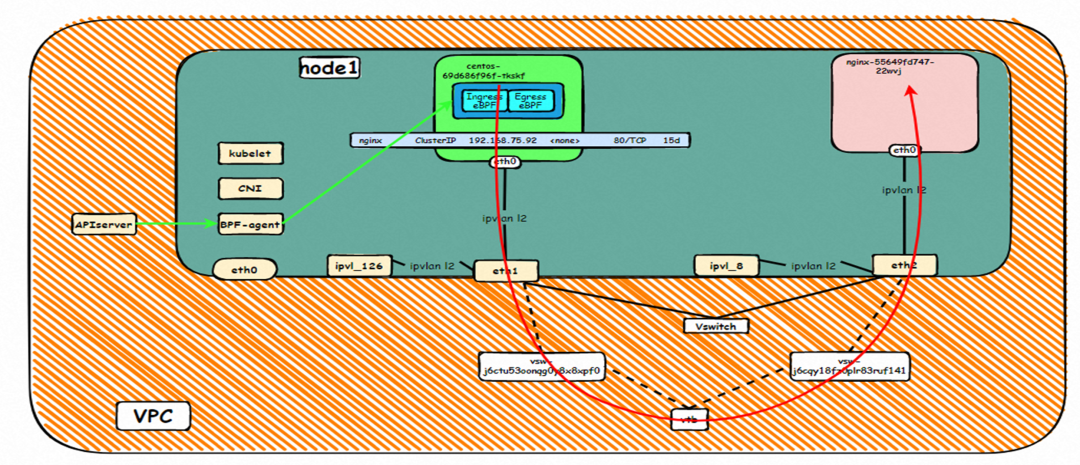

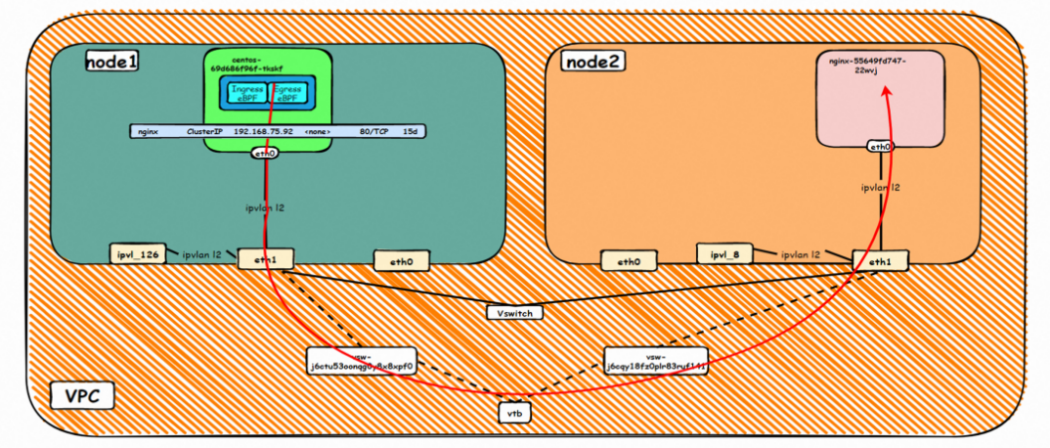

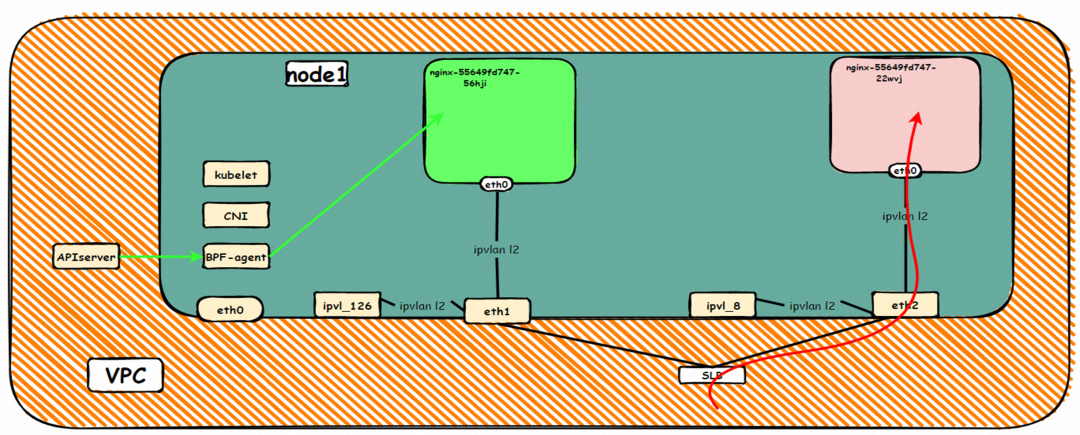

An Elastic Network Interface (ENI) can be configured with multiple secondary IP addresses. Depending on the instance type, a single ENI can be assigned 6 to 20 secondary IP addresses. The ENI multi-IP mode allocates these secondary IP addresses to containers, which improves the scale and density of pod deployment. For network connectivity, Terway supports two schemes: veth pair with policy-based routing, and IPVLAN L2. Linux kernel 4.2 and later supports the ipvlan virtual network type, which lets multiple sub-interfaces virtualized from a single network interface use different IP addresses. Terway uses this virtual network type to bind the secondary IP addresses of an ENI to IPVLAN sub-interfaces and connect the network. This mode keeps the ENI multi-IP network structure simple and performs better than veth policy-based routing.

ipvlan:

https://www.kernel.org/doc/Documentation/networking/ipvlan.txt

The CIDR block used by the pod is the same as the CIDR block of the node.
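The density gain of ENI multi-IP mode described above can be estimated with a short calculation. This is a minimal sketch, not Terway's own logic: it assumes that the primary ENI is reserved for the node itself and that every secondary ENI contributes the same number of secondary IP addresses. The function name and the example instance values are hypothetical.

```python
def max_pods_per_node(eni_count: int, ips_per_eni: int) -> int:
    """Estimate pod capacity of one node in ENI multi-IP mode.

    Assumption: the primary ENI is used by the node, so only the
    remaining (secondary) ENIs hand out IP addresses to pods.
    """
    if eni_count < 1 or ips_per_eni < 1:
        raise ValueError("eni_count and ips_per_eni must be positive")
    return (eni_count - 1) * ips_per_eni


# Hypothetical instance type: 4 ENIs, 10 secondary IPs per ENI.
print(max_pods_per_node(4, 10))  # 30
```

Check the real limits of your instance type before relying on such an estimate.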

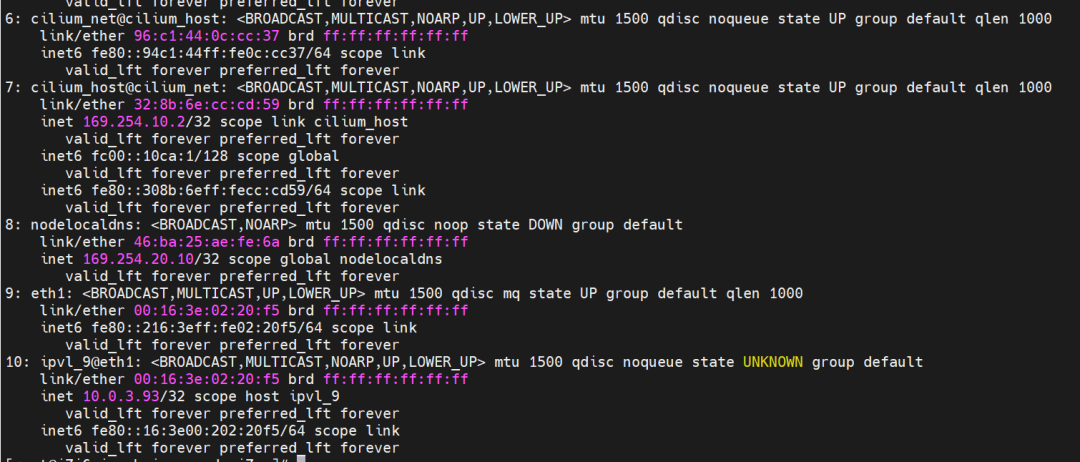

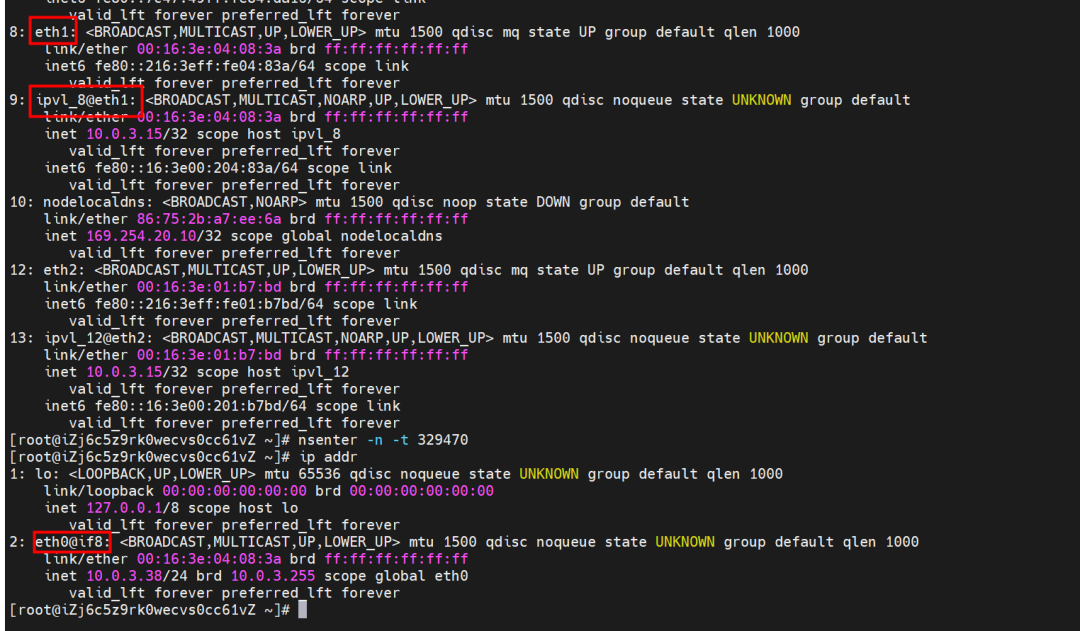

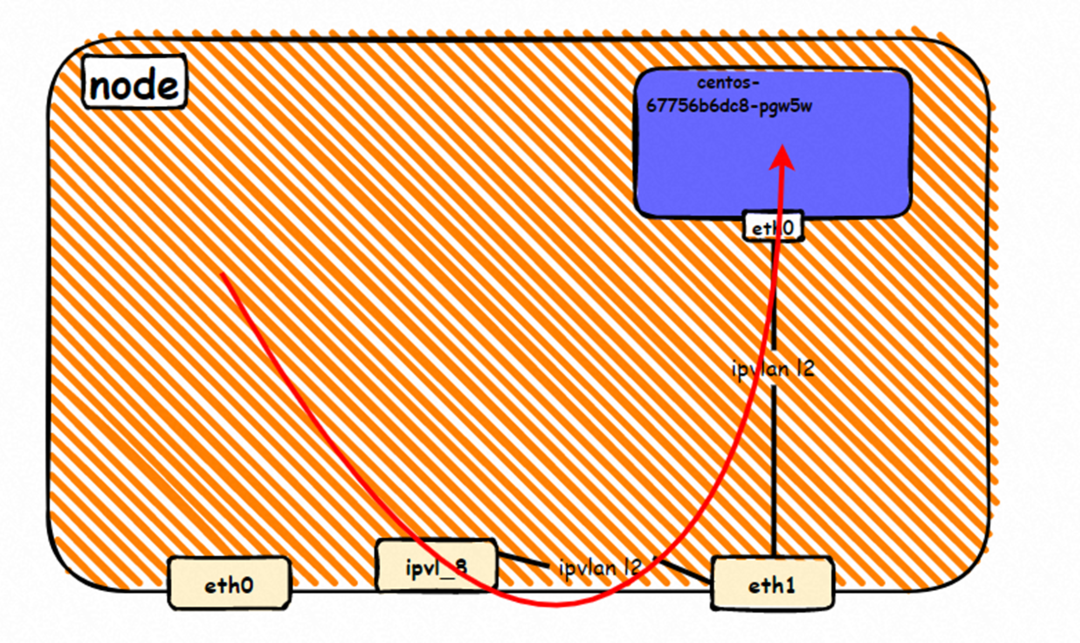

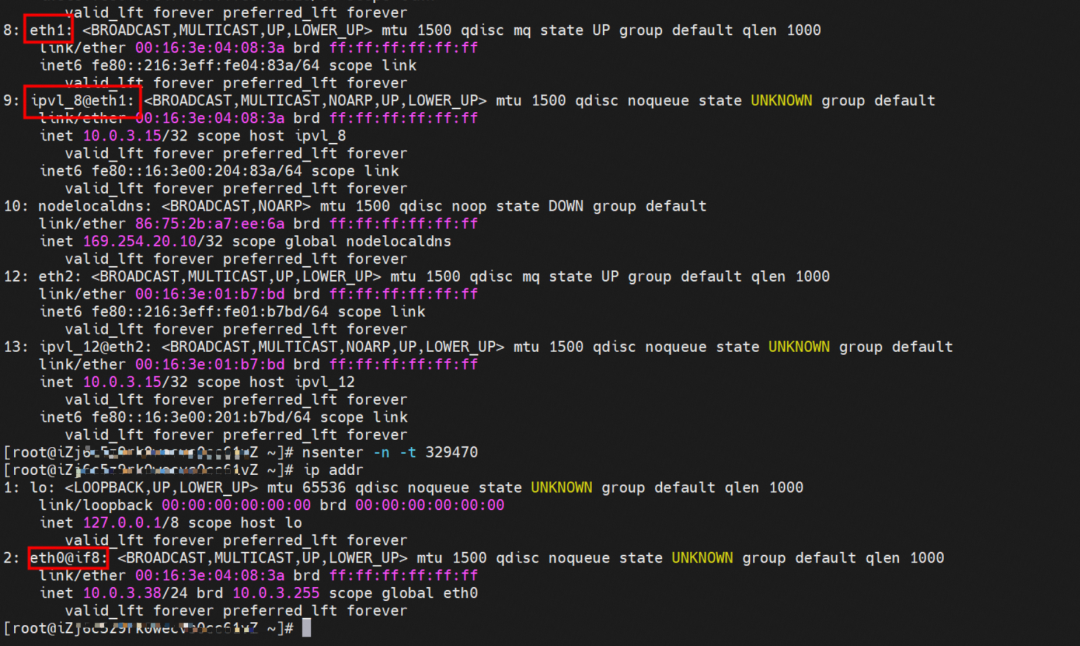

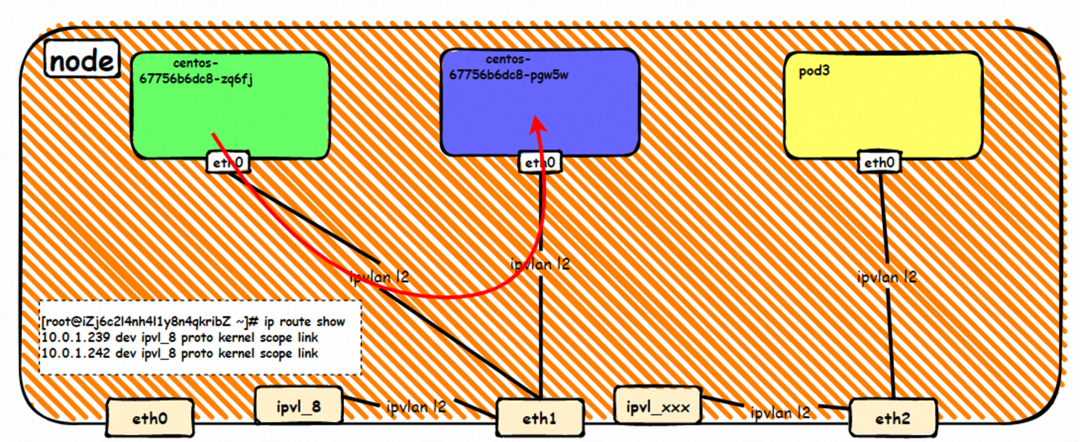

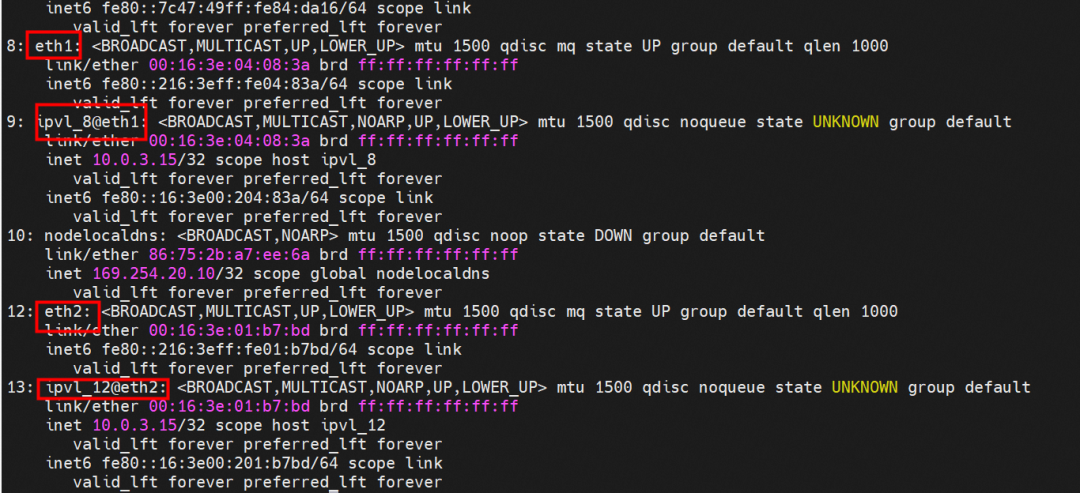

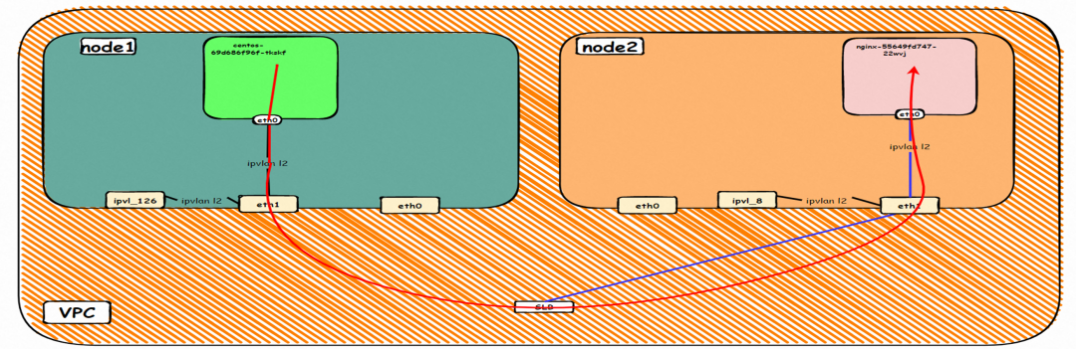

Inside the pod there is an eth0 network interface, and the IP address of eth0 is the pod IP. The MAC address of this interface does not match the MAC address of the ENI shown in the console. At the same time, the ECS instance has multiple ethX network interfaces, which indicates that the secondary ENI is not mounted directly into the pod's network namespace.

The pod has a single default route that points to eth0, which means eth0 is the unified ingress and egress for every address the pod accesses.

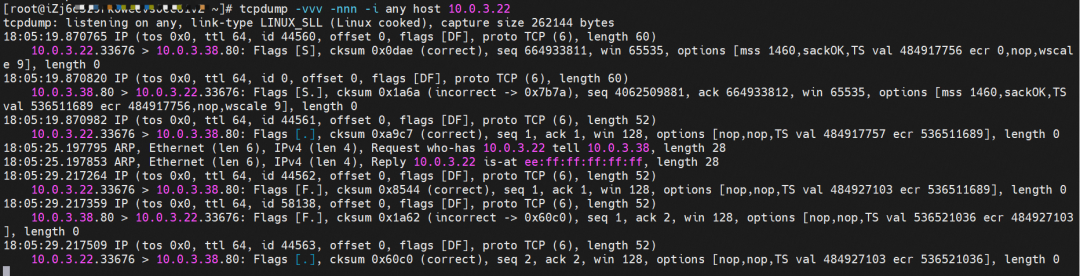

How do pods communicate with the ECS OS? At the OS level, we can see ipvl_x network interfaces attached to eth1. This indicates that the OS creates an ipvl_x interface for each attached ENI to establish a connection tunnel between the OS and the pods.

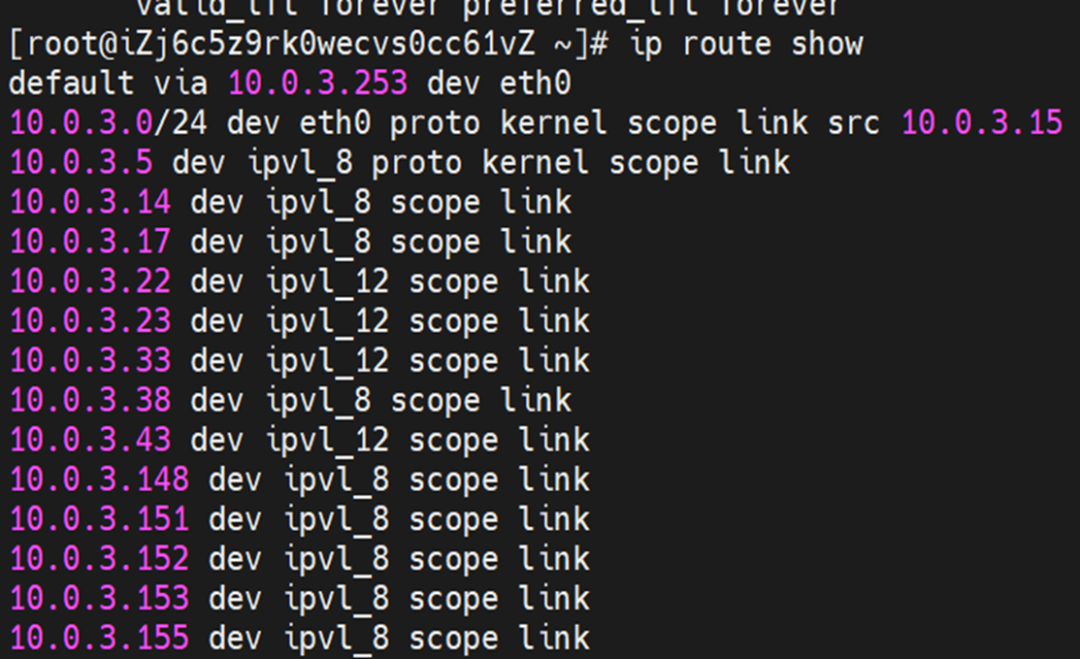

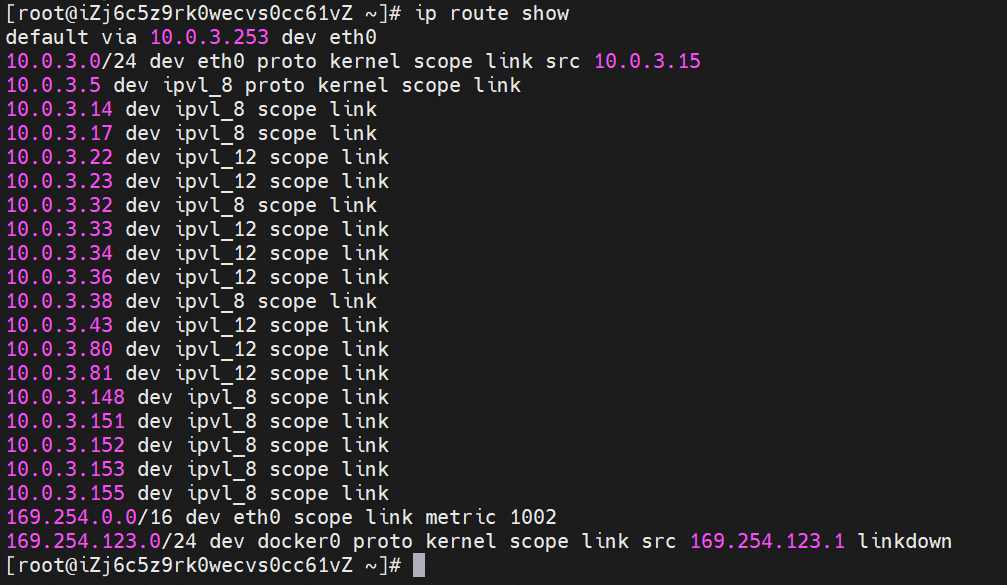

How does the ECS OS decide which container data traffic should go to? The Linux routing table of the OS shows that all traffic destined for a pod IP is forwarded to the ipvl_x virtual interface corresponding to that pod. At this point, the network namespaces of the ECS OS and the pod have a complete ingress and egress link configuration. This concludes the introduction to how IPVLAN is implemented in the network architecture.
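The routing decision described above can be illustrated with a longest-prefix-match lookup. The sketch below is only a model of how a routing table selects an output device. The table entries, the device name ipvl_8, and the 10.0.3.0/24 prefix are assumptions for illustration, not output copied from a real node.

```python
import ipaddress

# Hypothetical host routing table: (destination prefix, output device).
ROUTES = [
    ("0.0.0.0/0", "eth0"),
    ("10.0.3.0/24", "eth0"),
    ("10.0.3.38/32", "ipvl_8"),  # host route for one pod IP
]


def lookup(dst: str, routes=ROUTES) -> str:
    """Return the device of the longest-prefix route that matches dst."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, dev in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, dev)
    if best is None:
        raise LookupError(f"no route to {dst}")
    return best[1]


print(lookup("10.0.3.38"))  # ipvl_8
print(lookup("10.0.3.99"))  # eth0
```

Because the /32 route for the pod IP is more specific than any subnet or default route, traffic from the host to the pod is steered to the ipvl interface.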

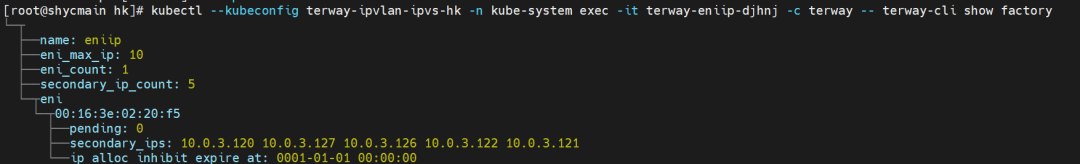

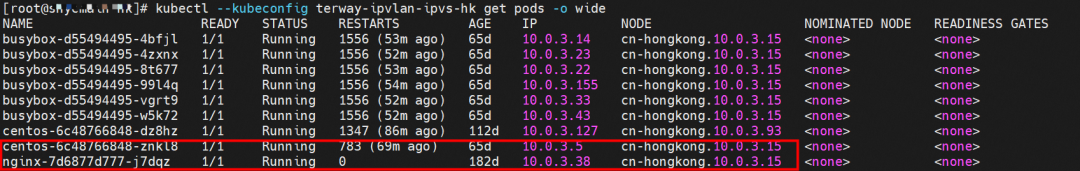

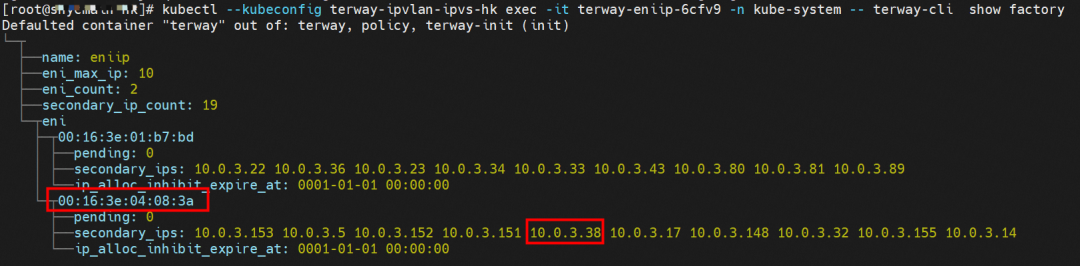

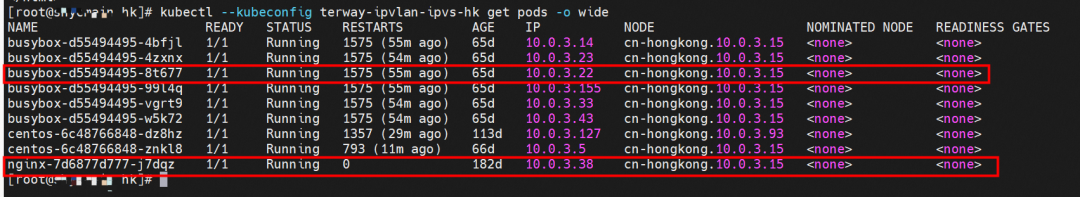

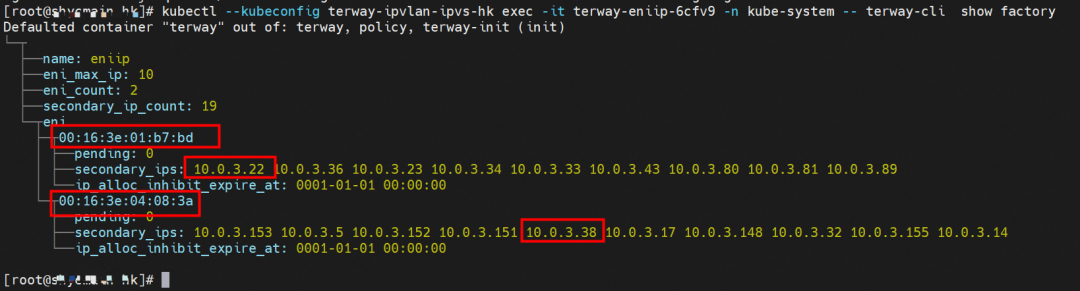

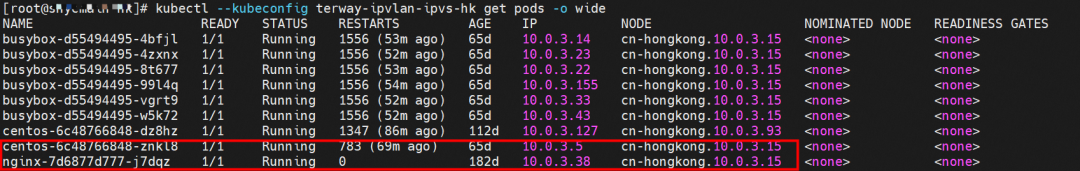

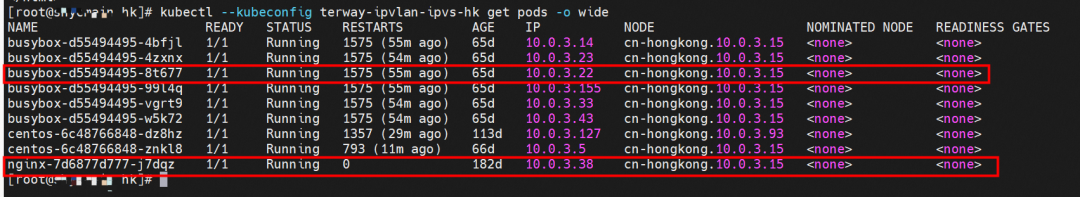

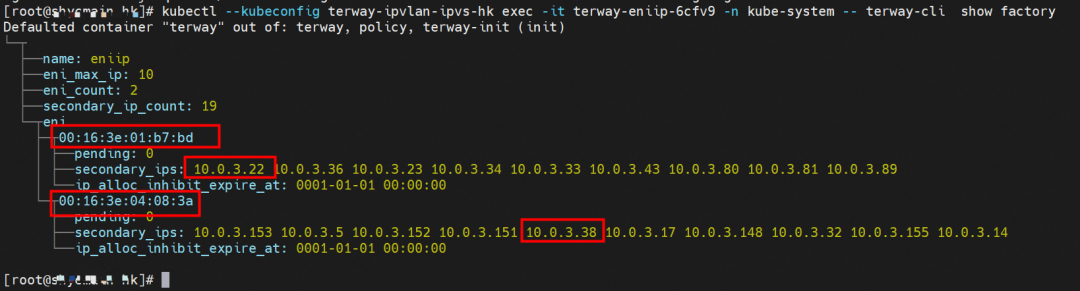

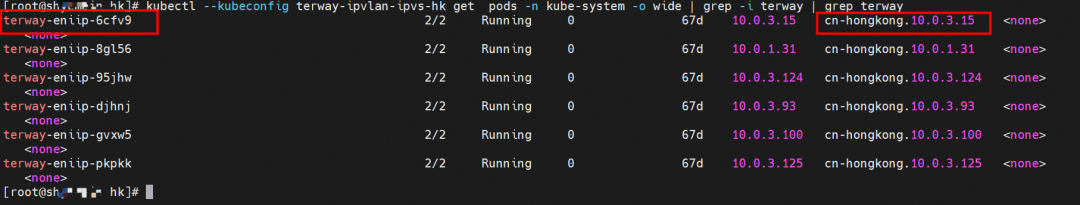

The implementation of ENI multi-IP follows a principle similar to the one described in Analysis of Alibaba Cloud Container Network Data Link (3): Terway ENIIP. Terway pods are deployed on each node as a DaemonSet. You can view the Terway pods on each node, and run the terway-cli show factory command to view the number of secondary ENIs on the node, along with the MAC address and IP addresses of each ENI.

How is SVC access implemented? Readers of the earlier parts of this series will know that when a pod accesses an SVC, the container forwards the request by one means or another to the ECS instance hosting the pod, and the netfilter module on the ECS instance resolves the SVC IP. This works, but because the data link has to switch from the pod's network namespace to the network namespace of the ECS OS, packets traverse two kernel protocol stacks, which costs performance. For workloads that demand high concurrency and low latency, this may not fully meet customer needs. Is there a way for the SVC backend to be resolved directly in the pod's network namespace, so that, combined with IPVLAN, only one kernel protocol stack is traversed? With kernel 4.19, eBPF makes this possible. eBPF is not explained in detail here; those interested can visit the link below. We only need to know that eBPF is a sandbox that runs programs safely inside the kernel: when a specified kernel event is triggered, the attached eBPF program is executed. Using this feature, we can rewrite packets destined for the SVC IP at the tc layer.

Official Link:

https://ebpf.io/what-is-ebpf/

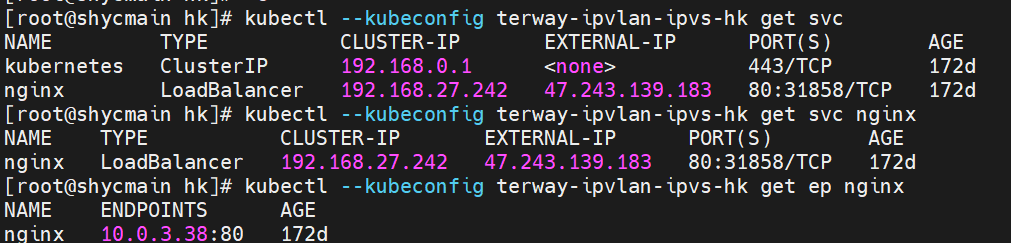

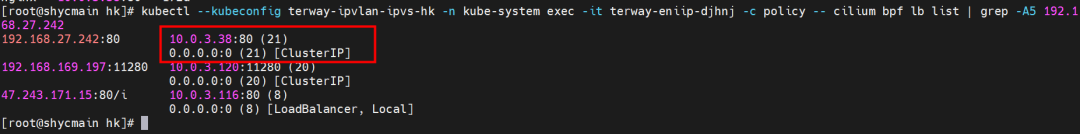

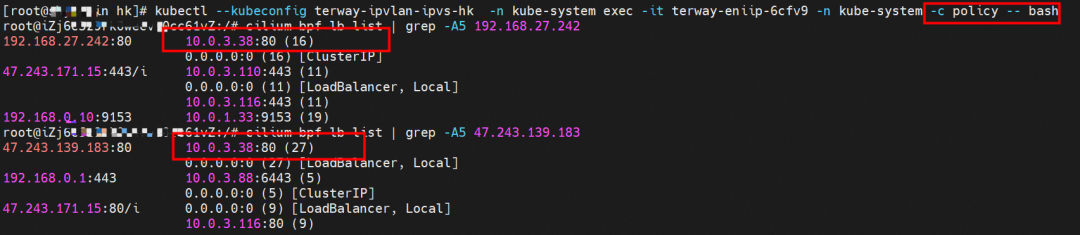

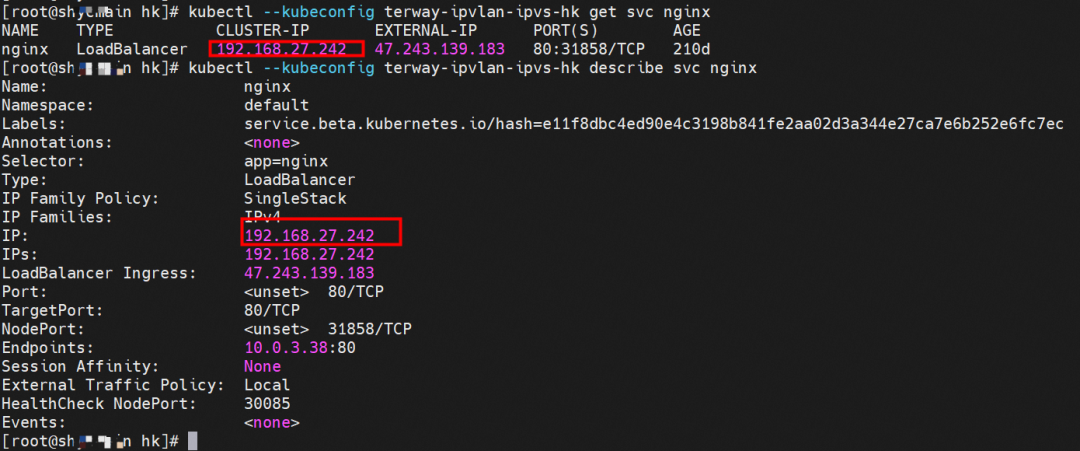

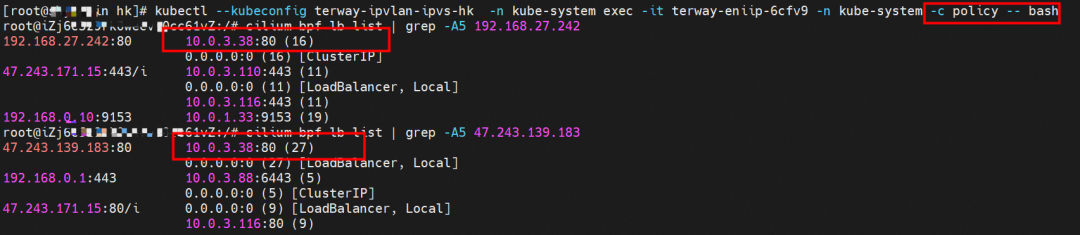

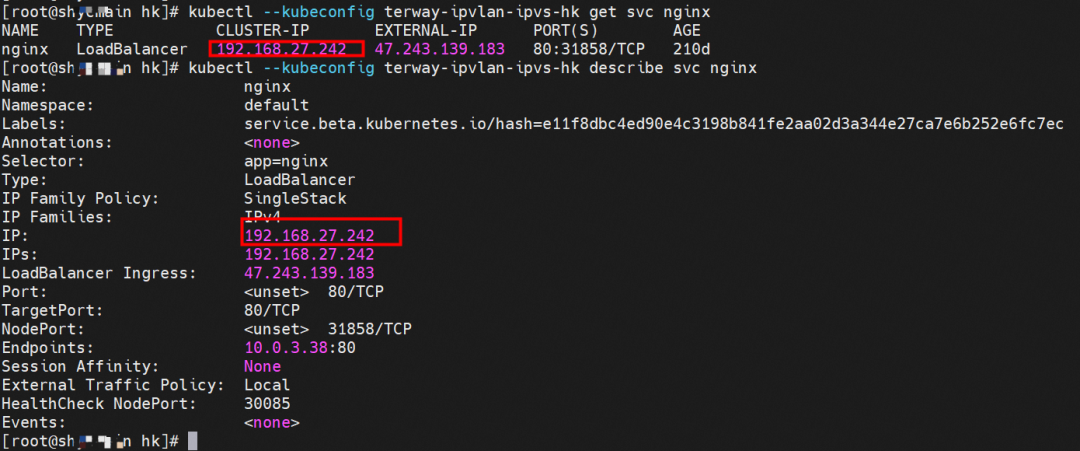

For example, as shown in the figure, the cluster has an SVC named nginx with ClusterIP 192.168.27.242 and backend pod IP 10.0.3.38. The output of cilium bpf lb list shows that, in the eBPF program, access to ClusterIP 192.168.27.242 is redirected to 10.0.3.38, while the pod itself has only a default route. In IPVLAN + EBPF mode, when a pod accesses the SVC IP, eBPF translates the SVC IP into the IP address of one of the SVC backend pods inside the pod's network namespace, and the packet is then sent toward that backend pod. In other words, the SVC IP exists only inside the source pod. It cannot be captured on the source ECS instance, the destination ECS instance, or the destination pod. If an SVC has over 100 backend pods and the SVC IP cannot be captured outside the source pod because of eBPF, which backend IP or backend pod should be captured when network jitter occurs? This looks like a complex, almost unsolvable situation. For this scenario, Container Service and AES have created ACK Net-Exporter, a container network observability tool that can be used for continuous observation and problem diagnosis.

ACK Net-Exporter Container Networks:

https://www.alibabacloud.com/help/en/container-service-for-kubernetes/latest/acknetexporternetwork

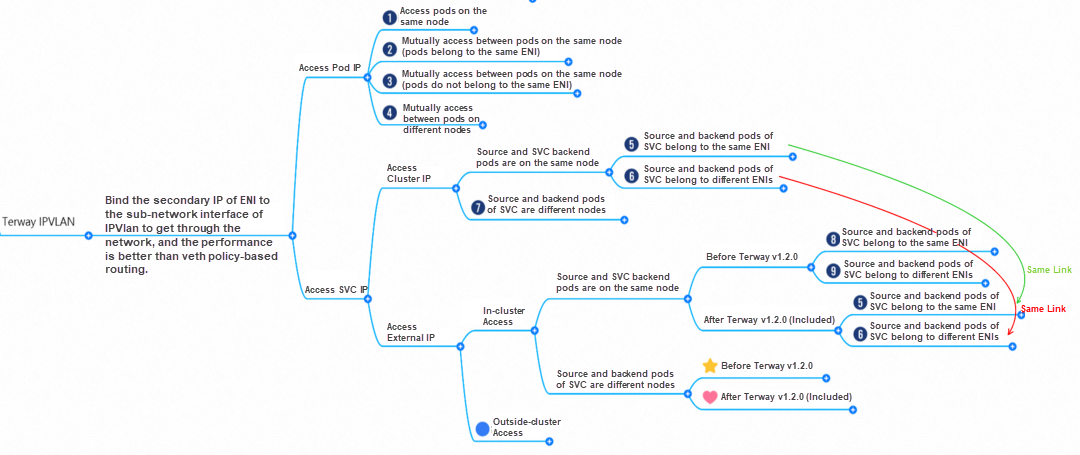

Therefore, the Terway IPVLAN + EBPF mode can be summarized as:

Terway IPVLAN + EBPF Mode Container Network Data Link Analysis

According to the characteristics of container networks, network links in Terway IPVLAN + EBPF mode can be roughly divided into two major SOP scenarios: Pod IP and SVC. Further subdivided, the data link access scenarios in this architecture can be summarized into 11 different small SOP scenarios.

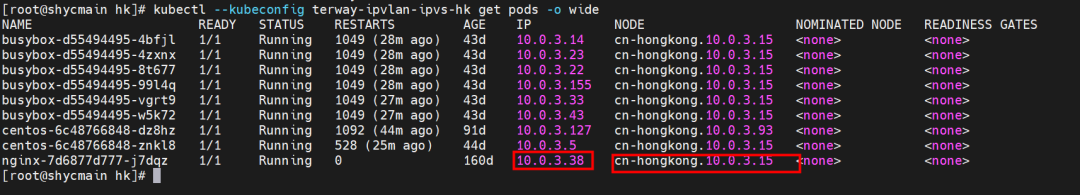

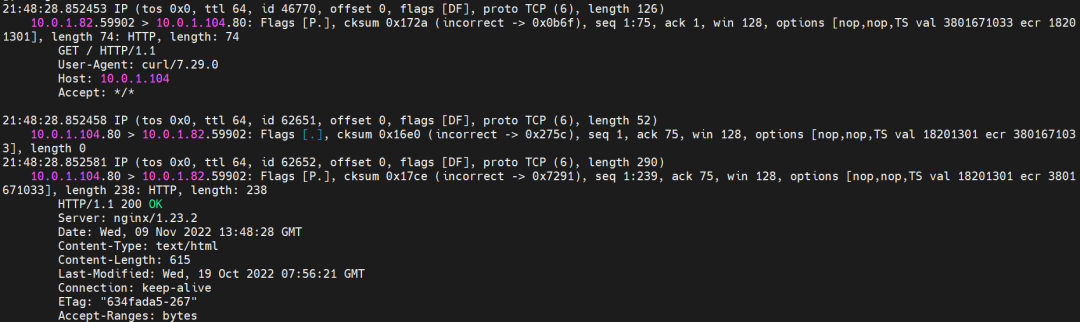

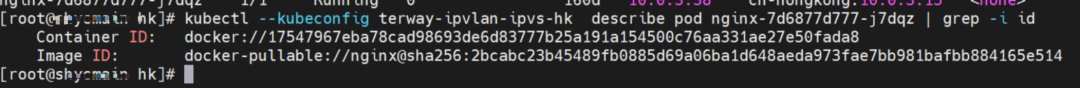

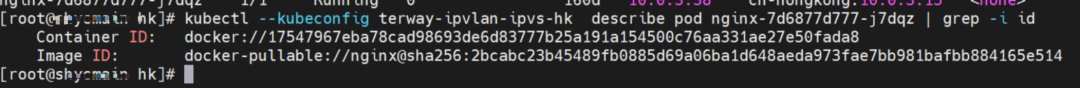

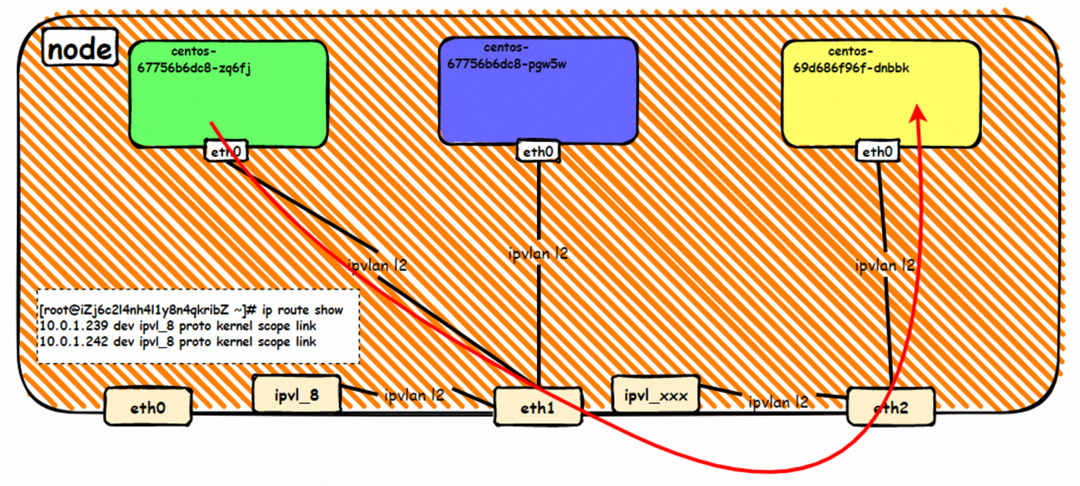

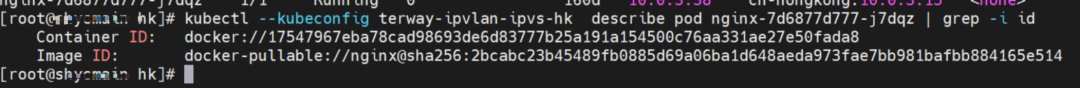

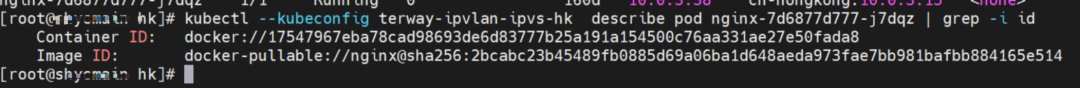

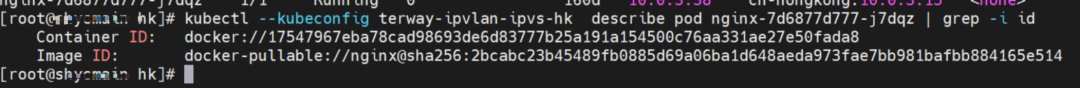

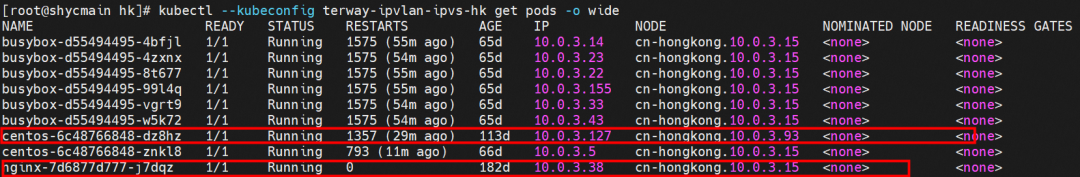

The nginx-7d6877d777-j7dqz pod, with the IP address 10.0.3.38, exists on the cn-hongkong.10.0.3.15 node.

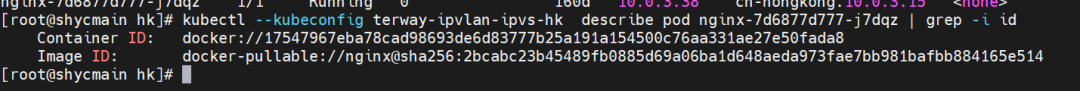

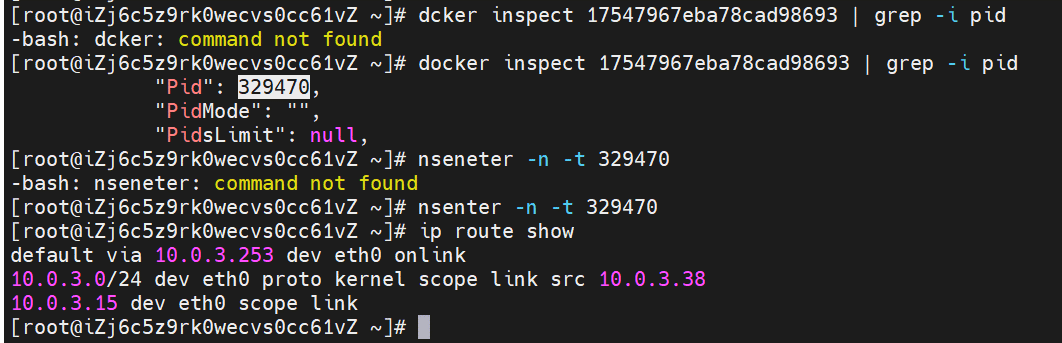

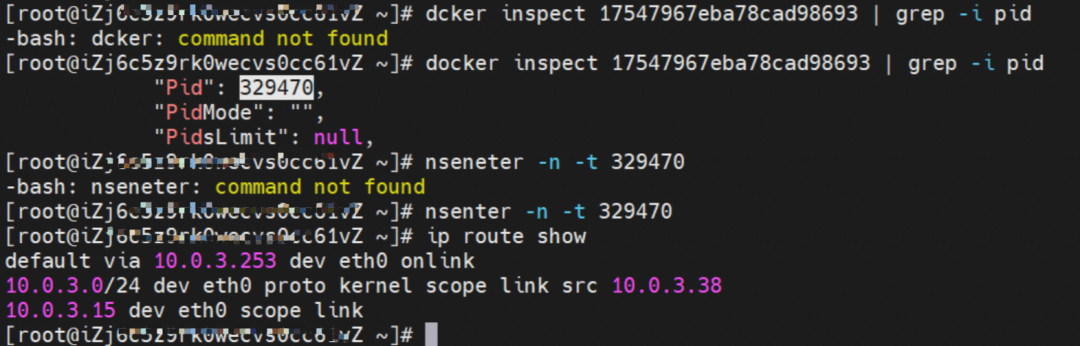

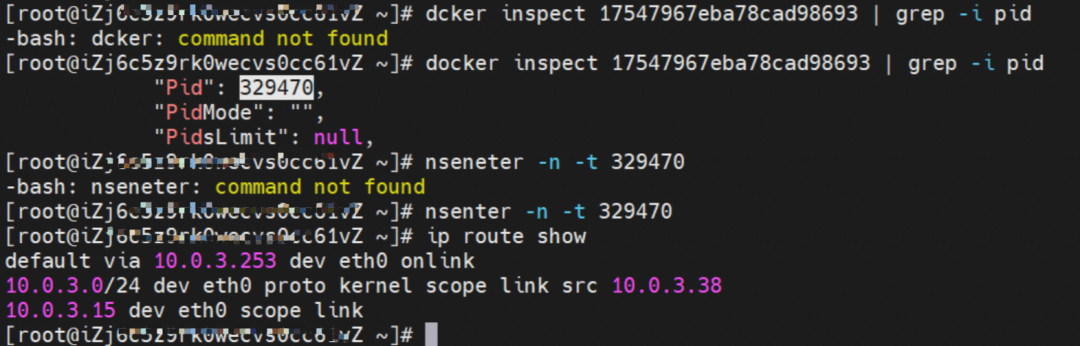

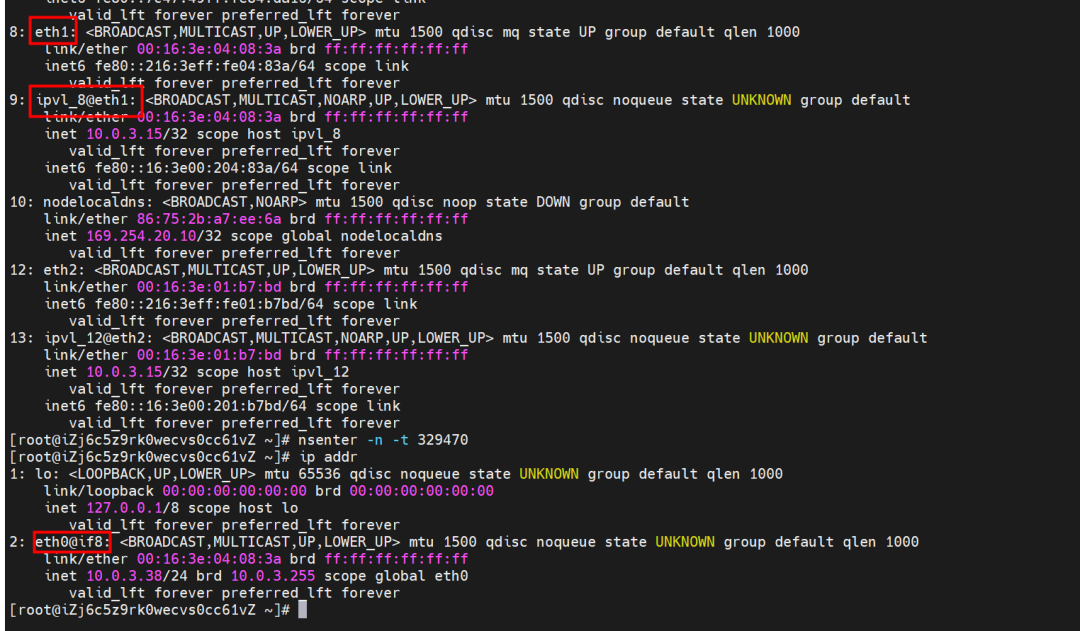

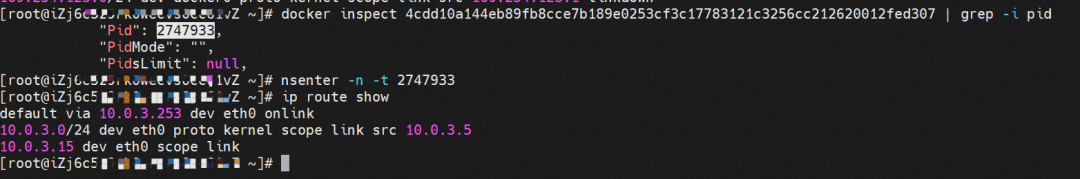

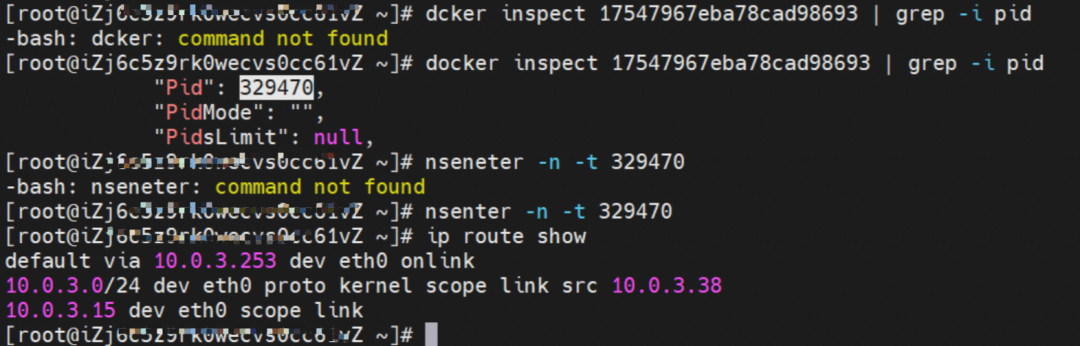

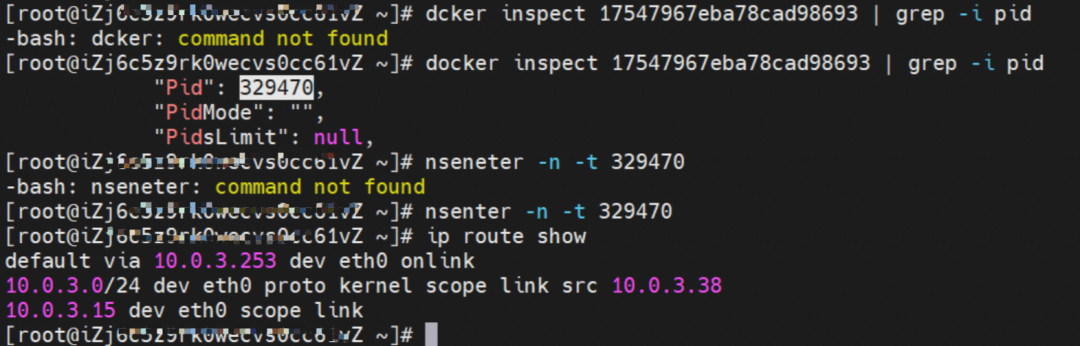

The nginx-7d6877d777-j7dqz IP address is 10.0.3.38, the PID of the container on the host is 329470, and the container network namespace has a default route pointing to container eth0.

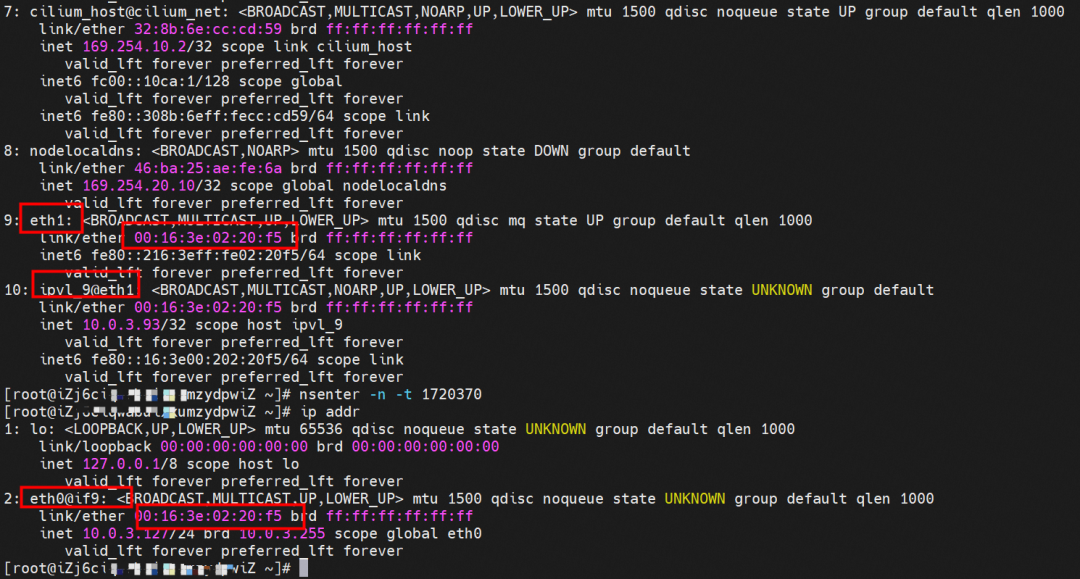

The container's eth0 is attached through IPVLAN, within the ECS OS, to eth1, the secondary ENI of the ECS instance, and eth1 also carries a virtual interface, ipvl_8@eth1.

The Linux routing table of the OS shows that all traffic destined for a pod IP is forwarded to the ipvl_x virtual interface corresponding to the pod, which establishes the connection tunnel between the ECS instance and the pod.

The destination can be accessed.

Packets can be captured on eth0 in the nginx-7d6877d777-zp5jg network namespace.

Packets can be captured on ipvl_8 of the ECS instance.

Data Link Forwarding Diagram

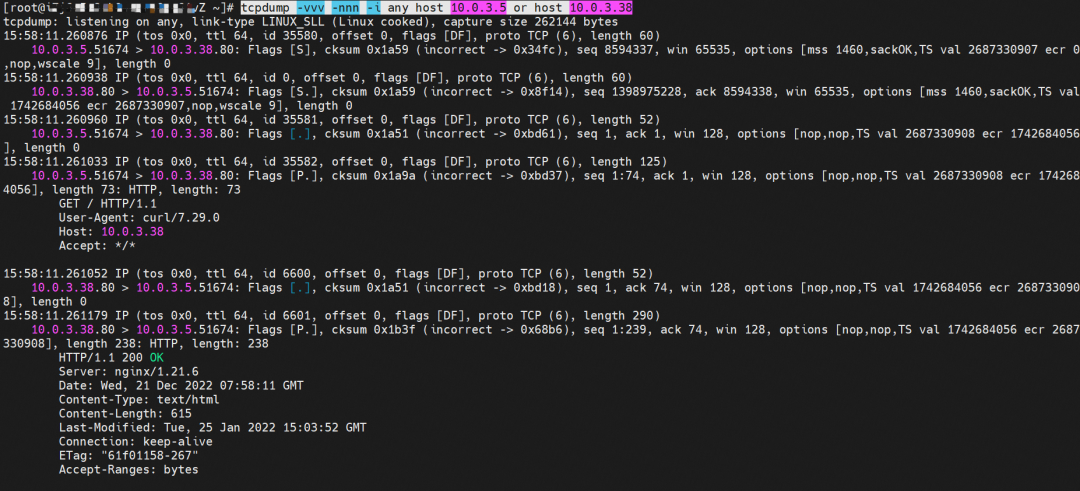

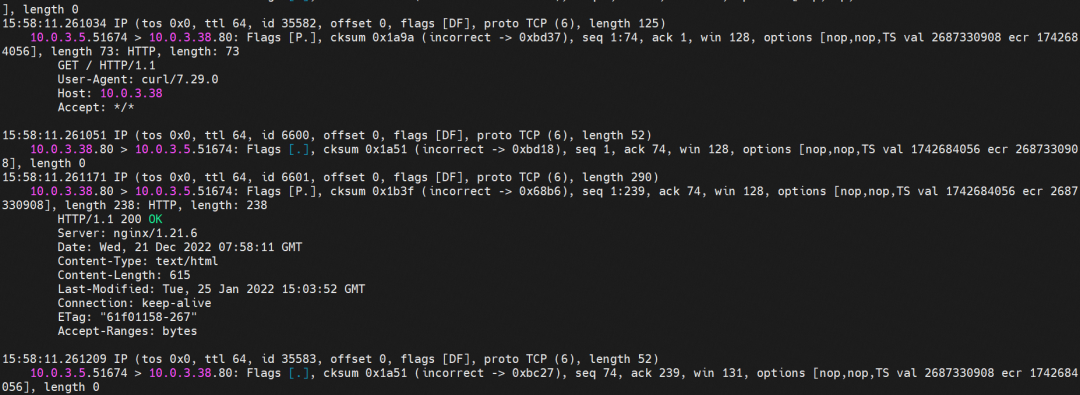

The nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8 pods with the IP addresses 10.0.3.38 and 10.0.3.5 respectively exist on cn-hongkong.10.0.3.15.

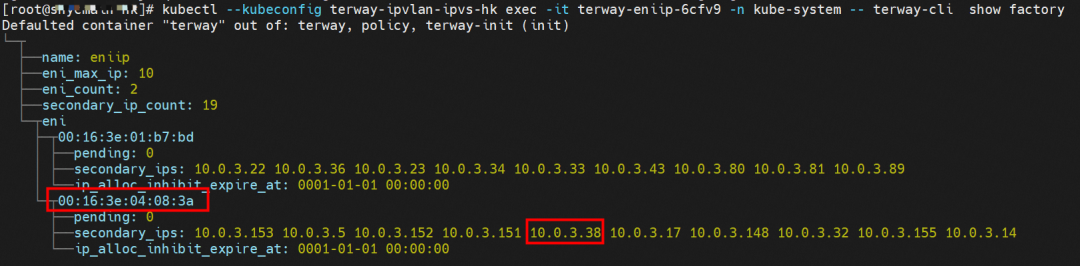

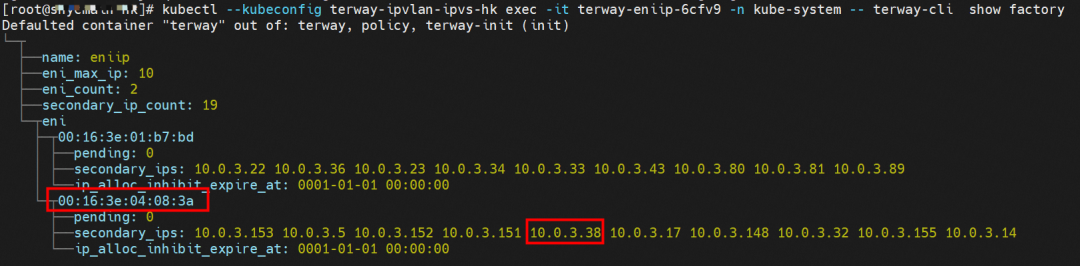

Through the Terway pod of this node, we can use the terway-cli show factory command to see that the two IPs (10.0.3.5 and 10.0.3.38) belong to the same MAC address, 00:16:3e:04:08:3a, which indicates that the two IPs belong to the same ENI. We can therefore infer that nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8 belong to the same ENI.
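The same-ENI inference above amounts to grouping pod IPs by the MAC address of the ENI that owns them. Here is a minimal sketch of that check. The dictionary is a hypothetical, simplified stand-in for the information shown by terway-cli show factory, not its actual output format. The addresses are the ones used in this article.

```python
# Hypothetical, simplified view: ENI MAC address -> pod IPs on that ENI.
ENI_IPS = {
    "00:16:3e:04:08:3a": ["10.0.3.5", "10.0.3.38"],
    "00:16:3e:01:b7:bd": ["10.0.3.22"],
}


def eni_of(ip, eni_ips=ENI_IPS):
    """Return the MAC address of the ENI that owns ip, or None if unknown."""
    for mac, ips in eni_ips.items():
        if ip in ips:
            return mac
    return None


def same_eni(ip_a, ip_b, eni_ips=ENI_IPS):
    """True only if both IPs are known and are owned by the same ENI."""
    mac_a = eni_of(ip_a, eni_ips)
    mac_b = eni_of(ip_b, eni_ips)
    return mac_a is not None and mac_a == mac_b


print(same_eni("10.0.3.5", "10.0.3.38"))   # True
print(same_eni("10.0.3.22", "10.0.3.38"))  # False
```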

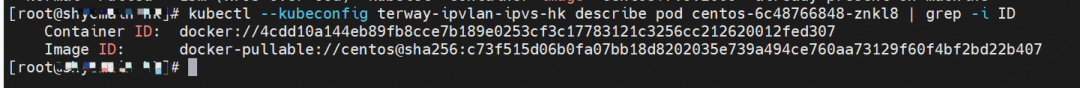

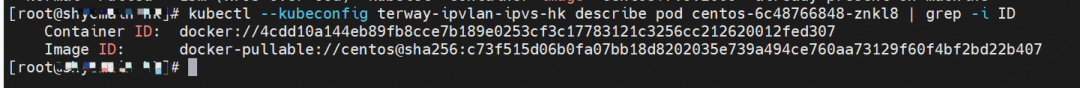

The centos-6c48766848-znkl8 IP address is 10.0.3.5, and the PID of the container on the host is 2747933. The container network namespace has a default route pointing to container eth0, and there is only one. This indicates that the pod needs to use this default route to access all addresses.

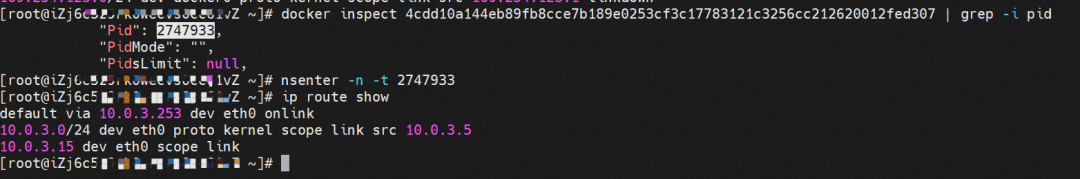

The nginx-7d6877d777-j7dqz IP address is 10.0.3.38, the PID of the container on the host is 329470, and the container network namespace has a default route pointing to container eth0.

The container's eth0 is attached through IPVLAN, within the ECS OS, to eth1, the secondary ENI of the ECS instance. The secondary ENI eth1 also carries a virtual interface, ipvl_8@eth1.

The destination can be accessed.

Packets can be captured on eth0 in the centos-6c48766848-znkl8 network namespace.

Packets can be captured on eth0 in the nginx-7d6877d777-zp5jg network namespace.

The relevant packets are not captured on the ipvl_8 interface.

Data Link Forwarding Diagram

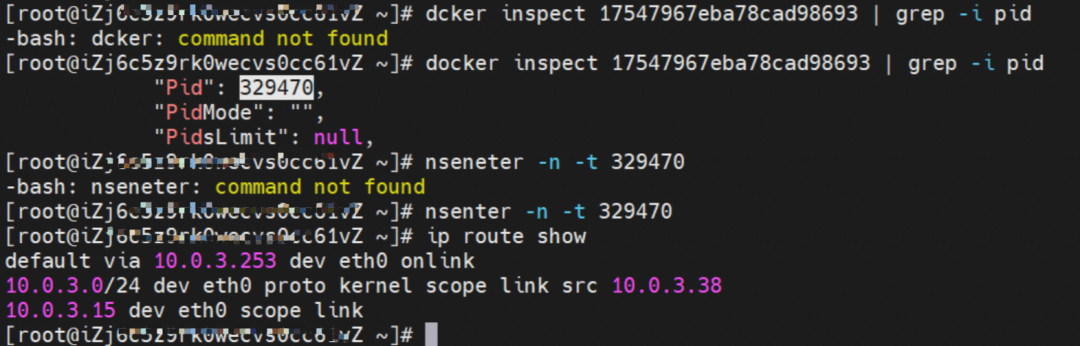

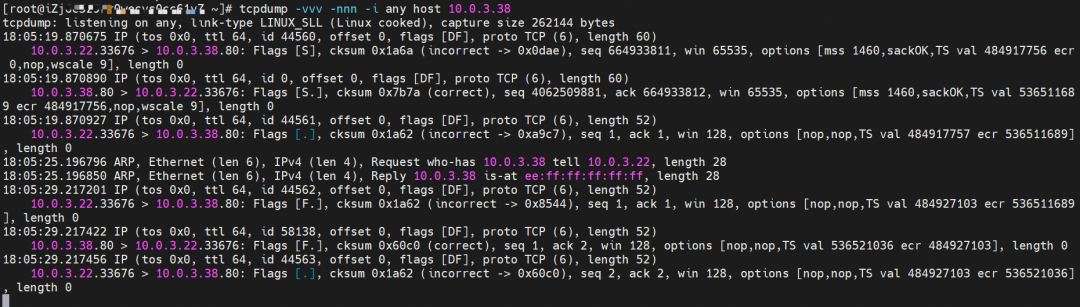

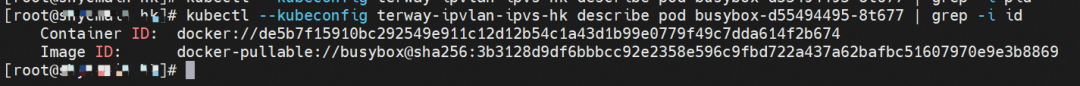

The nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 pods with the IP addresses 10.0.3.38 and 10.0.3.22 respectively, exist on cn-hongkong.10.0.3.15.

Through the Terway pod of this node, we can use the terway-cli show factory command to see that the two IPs (10.0.3.22 and 10.0.3.38) belong to different MAC addresses, 00:16:3e:01:b7:bd and 00:16:3e:04:08:3a respectively, which indicates that the two IPs belong to different ENIs. We can therefore infer that nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 belong to different ENIs.

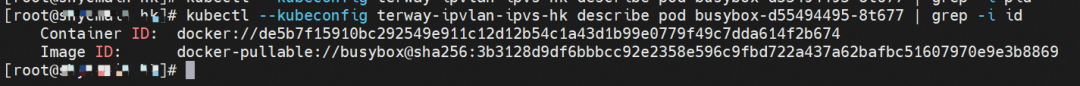

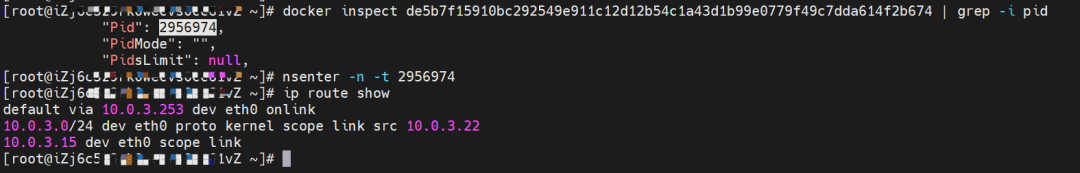

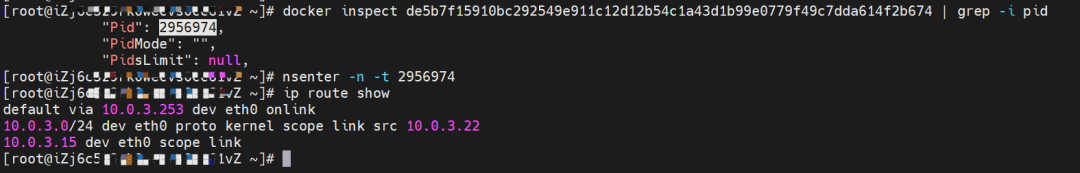

The busybox-d55494495-8t677 IP address is 10.0.3.22, and the PID of the container on the host is 2956974. The container network namespace has a default route pointing to container eth0, and there is only one, indicating the pod needs to use this default route to access all addresses.

The nginx-7d6877d777-j7dqz IP address is 10.0.3.38, the PID of the container on the host is 329470, and the container network namespace has a default route pointing to container eth0.

The container's eth0 is attached through IPVLAN, within the ECS OS, to a secondary ENI of the ECS instance. The MAC addresses show that nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 are assigned to eth1 and eth2, respectively.

The destination can be accessed.

Packets can be captured on eth0 in the busybox-d55494495-8t677 network namespace.

Packets can be captured on eth0 in the nginx-7d6877d777-zp5jg network namespace.

Data Link Forwarding Diagram

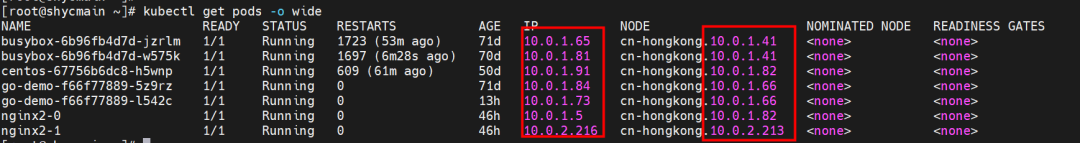

The nginx-7d6877d777-j7dqz pod exists on the cn-hongkong.10.0.3.15 node. Its IP address is 10.0.3.38.

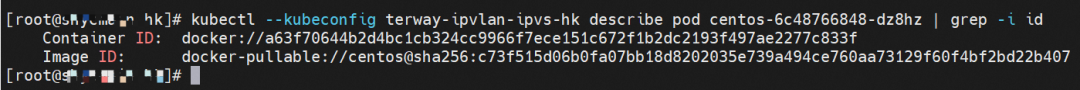

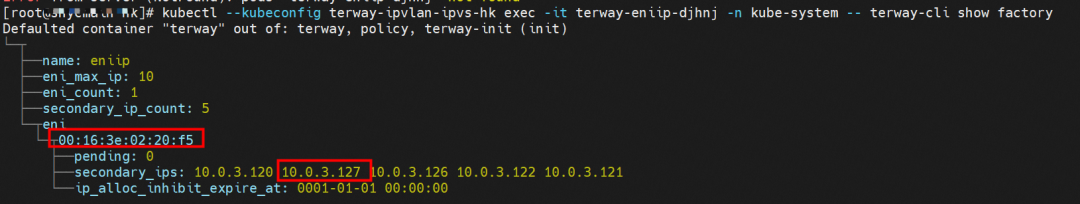

The centos-6c48766848-dz8hz exists on cn-hongkong.10.0.3.93. The IP address is 10.0.3.127.

Through the Terway pod of this node, we can use the terway-cli show factory command to see that the nginx-7d6877d777-j7dqz IP 10.0.3.38 belongs to the ENI on cn-hongkong.10.0.3.15 with the MAC address 00:16:3e:04:08:3a.

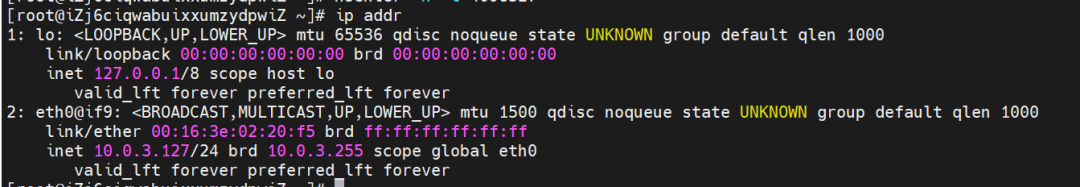

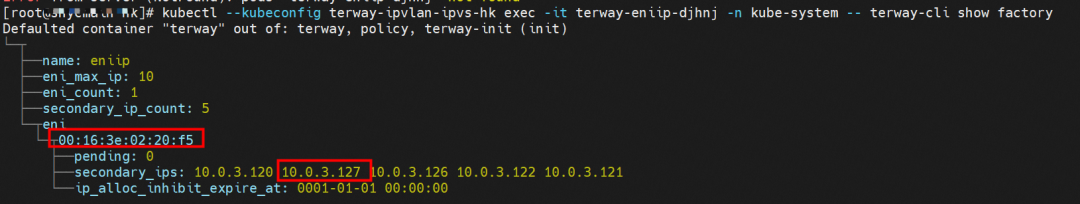

Through the Terway pod of this node, we can use the terway-cli show factory command to see that the centos-6c48766848-dz8hz IP 10.0.3.127 belongs to the ENI on cn-hongkong.10.0.3.93 with the MAC address 00:16:3e:02:20:f5.

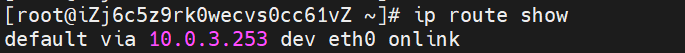

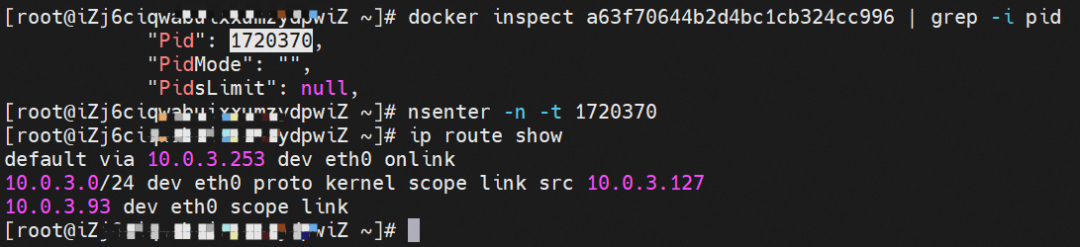

The centos-6c48766848-dz8hz IP address is 10.0.3.127, and the PID of the container on the host is 1720370. The container network namespace has a default route pointing to container eth0, and there is only one, indicating the pod needs to access all addresses through this default route.

The nginx-7d6877d777-j7dqz IP address is 10.0.3.38, the PID of the container on the host is 329470, and the container network namespace has a default route pointing to container eth0.

In the ECS OS, each container's eth0 is attached through IPVLAN to the secondary ENI eth1 of its ECS instance. The MAC address can be used to identify the ENI assigned to each of the two pods.

centos-6c48766848-dz8hz

nginx-7d6877d777-j7dqz

The destination can be accessed.

Packet capture is not shown here. From the client's perspective, the data stream can be captured on eth0 in the centos-6c48766848-dz8hz network namespace and on the ENI eth1 of the ECS instance where this pod is deployed. From the server's perspective, the data stream can be captured on eth0 in the nginx-7d6877d777-j7dqz network namespace and on the ENI eth1 of the ECS instance where this pod is deployed.

Data Link Forwarding Diagram

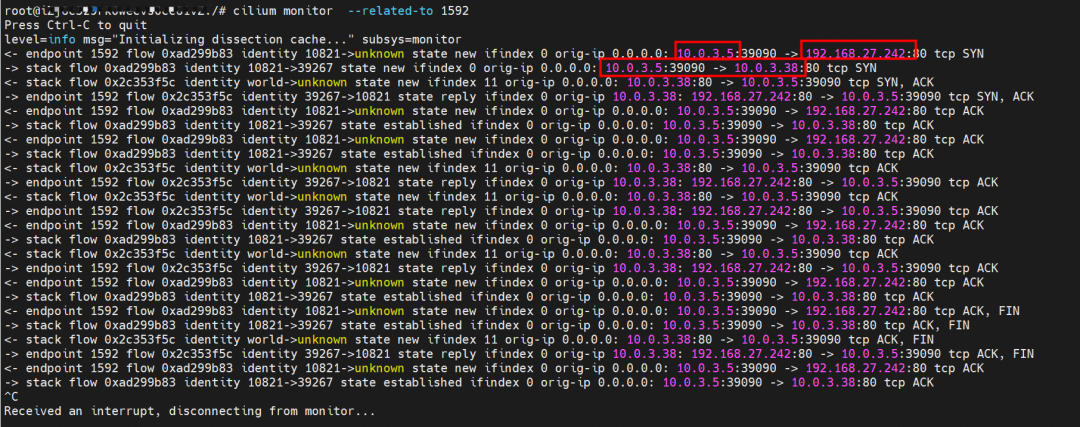

The nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8 pods with the IP addresses 10.0.3.38 and 10.0.3.5 respectively, exist on cn-hongkong.10.0.3.15.

Through the Terway pod of this node, we can use the terway-cli show factory command to see that the two IPs (10.0.3.5 and 10.0.3.38) belong to the same MAC address, 00:16:3e:04:08:3a, which indicates that the two IPs belong to the same ENI. We can therefore infer that nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8 belong to the same ENI.

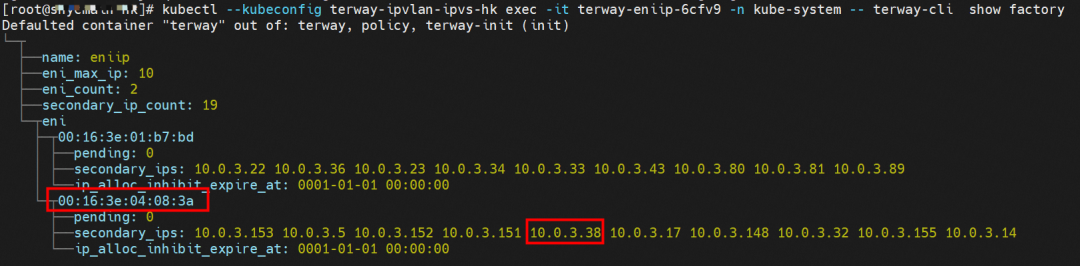

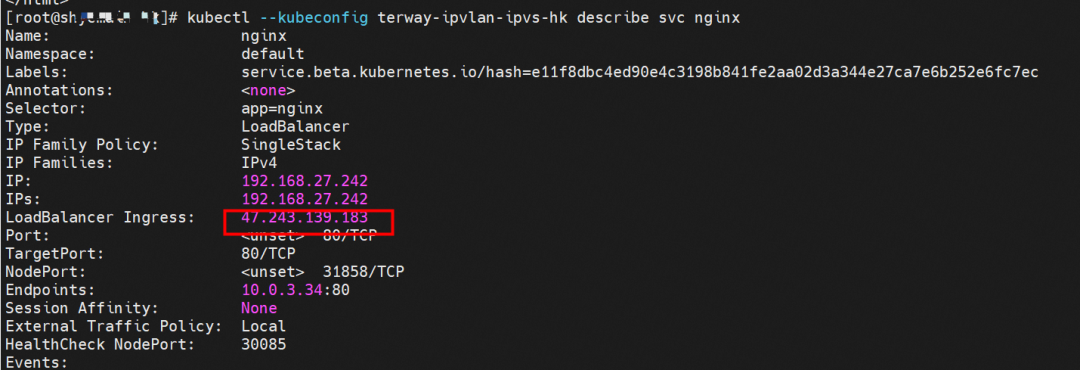

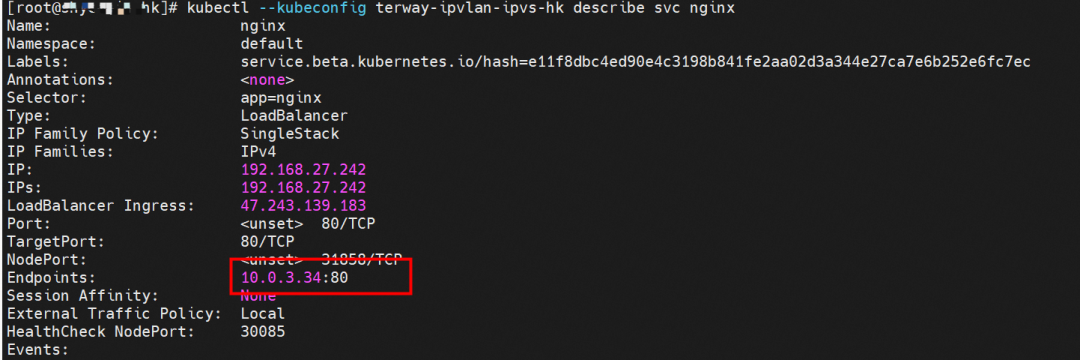

By describing the SVC, you can see that the nginx pod has been added to the backend of the SVC nginx. The ClusterIP of the SVC is 192.168.27.242. For Terway 1.2.0 and later, accessing the SVC's External IP from within the cluster uses the same architecture as accessing its ClusterIP, so External IP access is not described separately in this section and ClusterIP is used as the example. External IP access with Terway versions earlier than 1.2.0 is covered in later sections.

The centos-6c48766848-znkl8 IP address is 10.0.3.5, and the PID of the container on the host is 2747933. The container network namespace has a default route pointing to container eth0, and there is only one. This indicates that the pod needs to use this default route to access all addresses.

The nginx-7d6877d777-j7dqz IP address is 10.0.3.38, the PID of the container on the host is 329470, and the container network namespace has a default route pointing to container eth0.

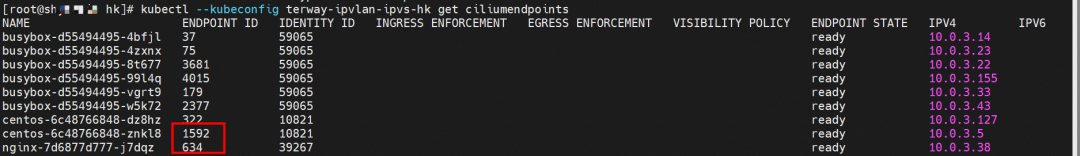

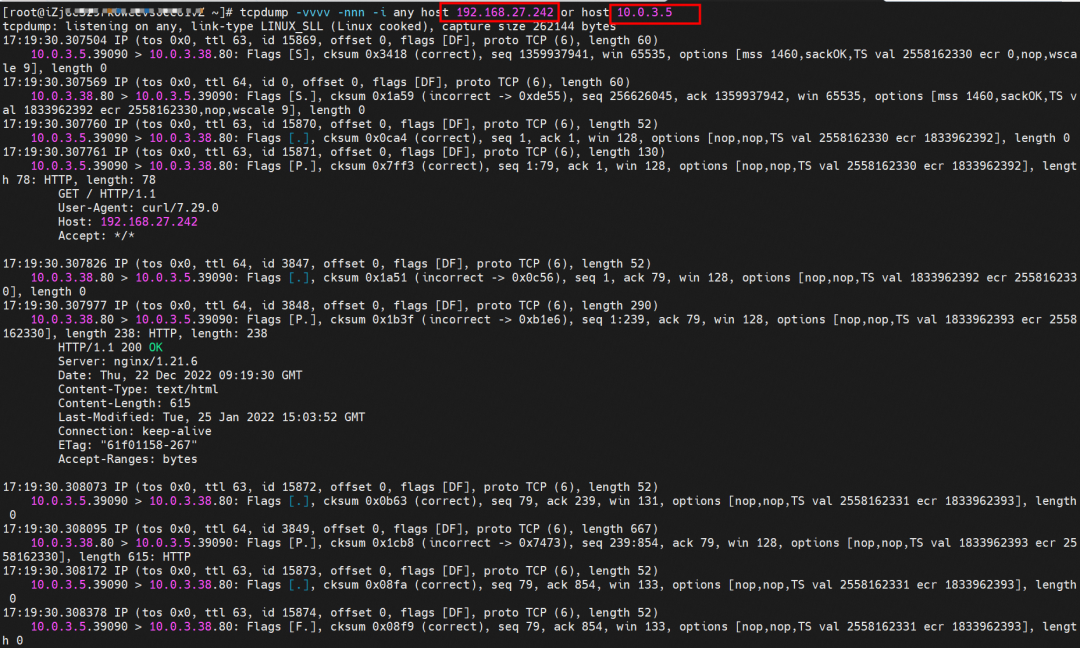

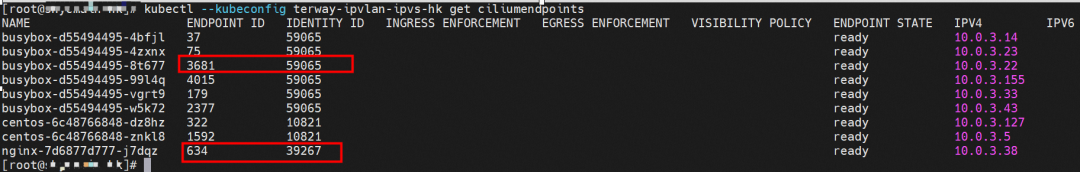

In ACK, Cilium is used to invoke eBPF. The following command shows that the identity IDs of nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8 are 634 and 1592, respectively.

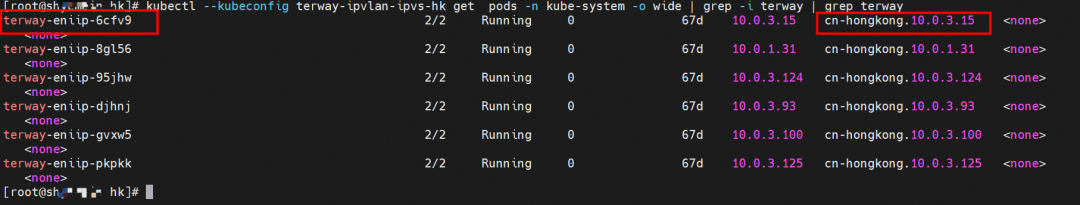

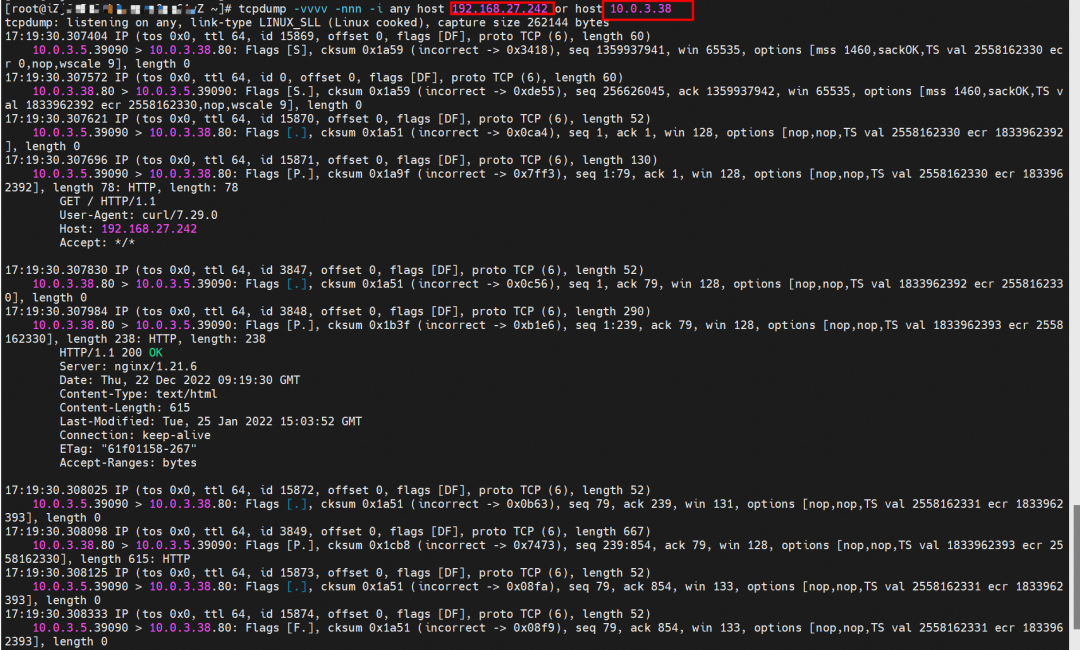

For the centos-6c48766848-znkl8 pod, you can find that the Terway pod on the ECS instance where the pod is located is terway-eniip-6cfv9. Run cilium bpf lb list | grep -A5 192.168.27.242 in the Terway pod. The backend recorded in eBPF for ClusterIP 192.168.27.242:80 is 10.0.3.38:80. This mapping is applied through eBPF at the tc layer of the source pod centos-6c48766848-znkl8.

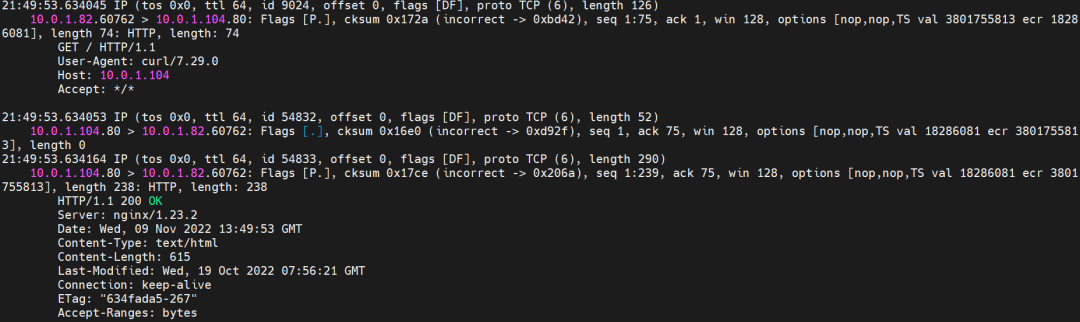

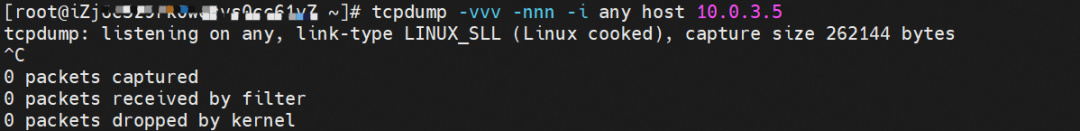

In theory, if a pod in the cluster accesses the ClusterIP or External IP of an SVC (Terway ≥ 1.2.0), the destination is translated into the IP of one of the SVC's backend pods inside the source pod's network namespace. The packet then leaves through eth0 of the pod's network namespace, enters the ECS instance where the pod is located, passes through the IPVLAN tunnel, and is forwarded through the corresponding ENI to a pod on the same ECS instance or out to another ECS instance. In other words, whether we capture packets in the pod or on the ECS instance, we cannot capture the SVC IP, only the pod IP.
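The translation step can be modeled in a few lines. The sketch below is not Cilium's implementation. It only mimics the observable behavior: a lookup keyed by service IP and port, followed by a rewrite of the destination to one backend. The Packet type, the LB_MAP dictionary, and the random backend choice are assumptions for illustration. The addresses are the ones shown by cilium bpf lb list in this article.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dport: int


# Hypothetical stand-in for the service map:
# (ClusterIP, port) -> list of (backend pod IP, port).
LB_MAP = {("192.168.27.242", 80): [("10.0.3.38", 80)]}


def translate(pkt: Packet, lb_map=LB_MAP, choose=random.choice) -> Packet:
    """Rewrite a service destination to one backend; pass other traffic through."""
    backends = lb_map.get((pkt.dst, pkt.dport))
    if not backends:
        return pkt  # not a service address, leave the packet unchanged
    ip, port = choose(backends)
    return Packet(src=pkt.src, dst=ip, dport=port)


out = translate(Packet("10.0.3.5", "192.168.27.242", 80))
print(out)  # Packet(src='10.0.3.5', dst='10.0.3.38', dport=80)
```

Since the rewrite happens before the packet reaches any interface where a capture is taken, only the backend address is visible afterwards.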

eBPF lets in-cluster access bypass the kernel protocol stack of the ECS OS and shortens the path through the pod's kernel protocol stack. This improves network performance and pod density, and delivers network performance no weaker than that of a dedicated ENI. However, it also has a major impact on observability. Imagine services in your cluster calling each other through SVC IPs, where each SVC is backed by dozens or hundreds of pods. When a call from a source pod fails, the error is typically something like "connect to failed". The traditional approach is to capture packets in the source pod, the destination pod, the ECS instances, and so on, and filter on the SVC IP to correlate the same data stream across the captures. With eBPF, the SVC IP cannot be captured because of how the technology is implemented. This poses a great challenge to observability when jitter is only occasional.

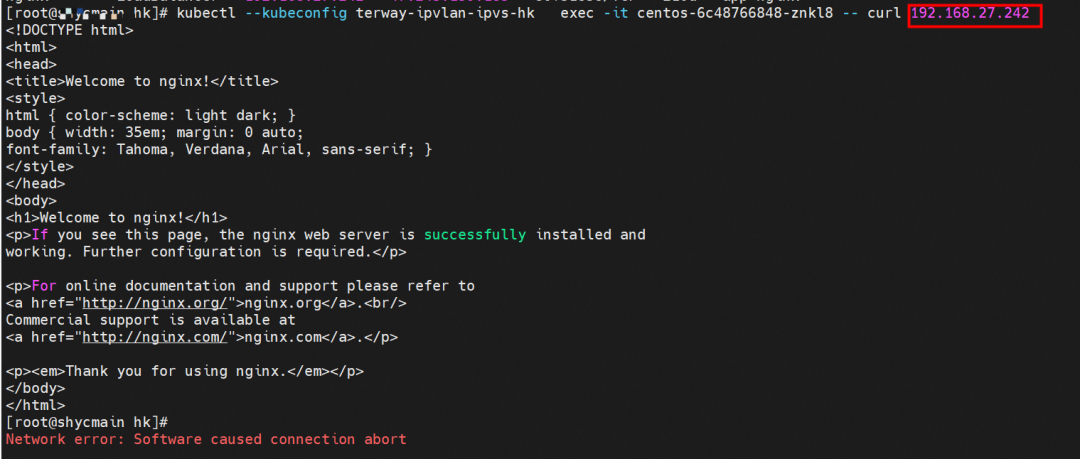

The destination can be accessed.

After accessing the SVC from the client Pod centos-6c48766848-znkl8, we can see the access is successful.

Packets are captured on eth0 in the network namespace of the client pod centos-6c48766848-znkl8, with a filter that matches both the destination SVC IP and the SVC backend pod IP. Only the IP address of the SVC backend pod appears in the capture. The SVC IP cannot be captured.

Packets are captured on eth0 in the network namespace of the SVC backend pod nginx-7d6877d777-zp5jg, with a filter that matches both the destination SVC IP and the client pod IP. Only the client pod IP appears in the capture.

Cilium provides a monitor function. Using cilium monitor --related-to, we can see that the source pod IP accesses the SVC IP 192.168.27.242, which is resolved to the SVC backend pod IP 10.0.3.38. This shows that the SVC IP is translated directly at the tc layer. It also explains why the SVC IP cannot be captured: packet capture happens on the netdev, and by that point the packet has already passed through the protocol stack and tc.
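Because the SVC IP never appears on a network device, a manual capture has to filter on the backend pod IPs instead. The helper below is a small sketch that builds a pcap filter expression from a list of backend IPs, such as the backends listed by cilium bpf lb list. The function name is hypothetical, and the expression uses only the standard host, tcp port, and, and or primitives of pcap filter syntax.

```python
def backend_capture_filter(backend_ips, port=None):
    """Build a pcap filter expression that matches any of the backend IPs."""
    unique_ips = list(dict.fromkeys(backend_ips))  # drop duplicates, keep order
    if not unique_ips:
        raise ValueError("at least one backend IP is required")
    expr = "(" + " or ".join(f"host {ip}" for ip in unique_ips) + ")"
    if port is not None:
        expr += f" and tcp port {port}"
    return expr


print(backend_capture_filter(["10.0.3.38"], port=80))
# (host 10.0.3.38) and tcp port 80
```

The resulting string can be passed as the filter argument of a capture tool such as tcpdump. With dozens or hundreds of backends the expression grows quickly, which is one reason continuous observation tooling is the more practical option.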

For the SVC IP access scenarios in the following sections, detailed packet captures are not shown.

Data Link Forwarding Diagram

The nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 pods with the IP addresses 10.0.3.38 and 10.0.3.22 respectively, exist on cn-hongkong.10.0.3.15.

Through the Terway pod of this node, we can use the terway-cli show factory command to see that the two IPs (10.0.3.22 and 10.0.3.38) belong to different MAC addresses, 00:16:3e:01:b7:bd and 00:16:3e:04:08:3a respectively, which indicates that the two IPs belong to different ENIs. We can therefore infer that nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 belong to different ENIs.

By describing the SVC, you can see that the nginx pod has been added to the backend of the SVC nginx. The ClusterIP of the SVC is 192.168.27.242. For Terway 1.2.0 and later, accessing the SVC's External IP from within the cluster uses the same architecture as accessing its ClusterIP, so External IP access is not described separately in this section and ClusterIP is used as the example. External IP access with Terway versions earlier than 1.2.0 is covered in later sections.

The busybox-d55494495-8t677 IP address is 10.0.3.22, and the PID of the container on the host is 2956974. The container network namespace has a default route pointing to container eth0, and there is only one, indicating the pod needs to use this default route to access all addresses.

The nginx-7d6877d777-j7dqz IP address is 10.0.3.38, the PID of the container on the host is 329470, and the container network namespace has a default route pointing to container eth0.

In ACK, Cilium is used to invoke eBPF. The following command shows that the identity IDs of nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 are 634 and 3681, respectively.

For the busybox-d55494495-8t677 pod, you can find that the Terway pod on the ECS instance where the pod is located is terway-eniip-6cfv9. Run cilium bpf lb list | grep -A5 192.168.27.242 in the Terway pod. The backend recorded in eBPF for ClusterIP 192.168.27.242:80 is 10.0.3.38:80. This mapping is applied through eBPF at the tc layer of the source pod busybox-d55494495-8t677.

The eBPF forwarding of the SVC ClusterIP is not described in detail here. Please refer to section 2.5 for more information. From that description, we know that the accessed SVC IP is translated by eBPF into the IP of an SVC backend pod inside the network namespace of the client busybox pod, and the SVC IP accessed by the client cannot be captured on any device. This scenario is therefore similar to the network architecture in section 2.3, except that the client-side forwarding is performed by Cilium eBPF.

Data Link Forwarding Diagram

The nginx-7d6877d777-j7dqz, IP address 10.0.3.38, exists on cn-hongkong.10.0.3.15.

The centos-6c48766848-dz8hz, IP address 10.0.3.127, exists on cn-hongkong.10.0.3.93.

Through the Terway pod of this node, we can use the terway-cli show factory command to see that the nginx-7d6877d777-j7dqz IP 10.0.3.38 belongs to the ENI on cn-hongkong.10.0.3.15 with the MAC address 00:16:3e:04:08:3a.

Through the Terway pod of this node, we can use the terway-cli show factory command to see that the centos-6c48766848-dz8hz IP 10.0.3.127 belongs to the ENI on cn-hongkong.10.0.3.93 with the MAC address 00:16:3e:02:20:f5.

By describing the SVC, you can see that the nginx pod has been added to the backend of the SVC nginx. The ClusterIP of the SVC is 192.168.27.242. For Terway 1.2.0 and later, accessing the SVC's External IP from within the cluster uses the same architecture as accessing its ClusterIP, so External IP access is not described separately in this section and ClusterIP is used as the example. External IP access with Terway versions earlier than 1.2.0 is covered in later sections.

The pod accesses the SVC's ClusterIP, and the SVC's backend pod and the client pod are deployed on different ECS instances. This architecture is similar to the mutual access between pods on different ECS nodes in section 2.4, except that here the pod accesses the SVC's ClusterIP. Note: this scenario covers ClusterIP access. Accessing the External IP is more complicated and is explained in detail in the following sections. For the eBPF forwarding of ClusterIP, see section 2.5. As in the previous sections, the SVC IP accessed by the client cannot be captured on any device.

Data Link Forwarding Diagram

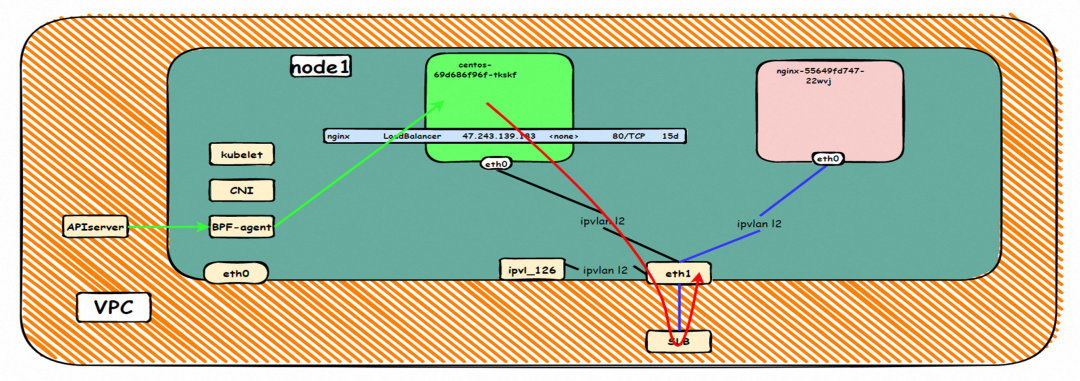

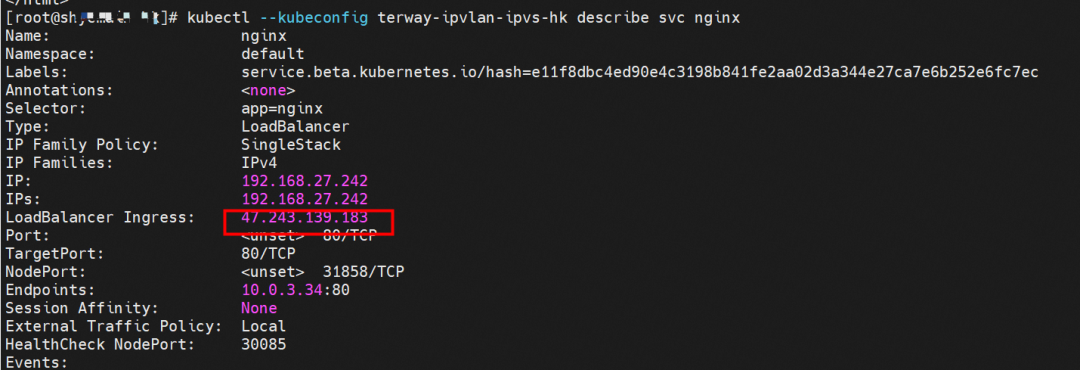

The environment here is similar to section 2.5 and will not be described too much, except that this section accesses the External IP 47.243.139.183 when the Terway version is less than 1.2.0.

Please refer to section 2.5.

The client pod and the backend pod of the SVC belong to the same ENI. With a Terway version earlier than 1.2.0, when the pod accesses the External IP, the data link leaves the ECS instance through the ENI, reaches the SLB instance behind the External IP, and is forwarded back to the same ENI. A Layer-4 SLB instance does not support the client and the server being on the same ENI, so a loop is formed. Please see the following links for more information:

Data Link Forwarding Diagram

If you access an external IP address or an SLB instance from within a cluster, kube-proxy directly routes the traffic to the endpoint of the backend service. In Terway IPVLAN mode, the traffic is handled by Cilium instead of kube-proxy. This feature is not supported in Terway versions earlier than 1.2.0. This feature is automatically enabled for clusters that use Terway 1.2.0 or later versions. You must manually enable this feature for clusters that use Terway versions earlier than 1.2.0. (This is the scenario described in section 2.5.)

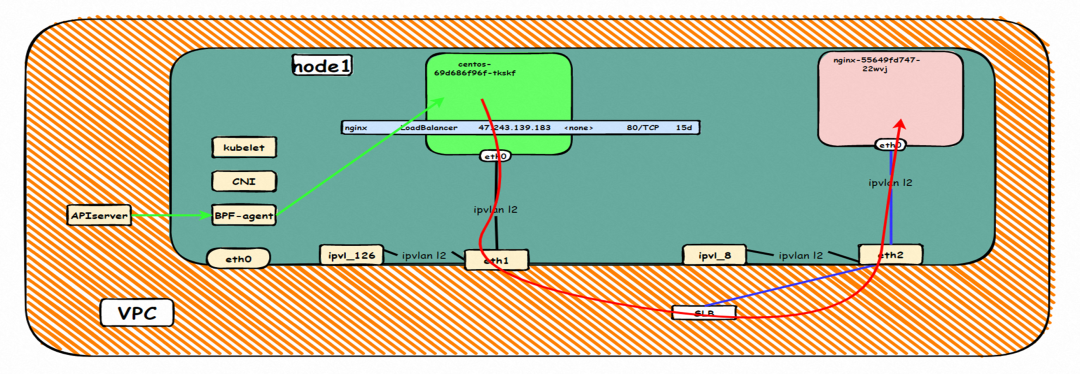

The environment here is similar to section 2.6 and will not be described too much, except that this section accesses the External IP 47.243.139.183 when the Terway version is less than 1.2.0.

Please refer to sections 2.6 and 2.8.

The client pod and the backend pod of the SVC are on the same ECS instance but belong to different ENIs. With a Terway version earlier than 1.2.0, traffic to the External IP leaves the ECS instance through the client pod's ENI, reaches the SLB instance behind the External IP, and is forwarded to the other ENI. Although the client pod and the SVC backend pod are on the same ECS instance, they belong to different ENIs, so no loopback is formed and the access succeeds. Note: the result here differs from section 2.8.

Data Link Forwarding Diagram

The environment here is similar to section 2.6 and will not be described too much, except that this section accesses the External IP 47.243.139.183 when the Terway version is less than 1.2.0.

Refer to the 2.7 and 2.9 sections.

This is similar to the architecture in section 2.7. The client pod and the backend pod of the SVC belong to different ECS nodes, and the client accesses the SVC's External IP. The only differences are the Terway version and the corresponding eniconfig: in section 2.7 the Terway version is 1.2.0 or later, and in this section it is earlier than 1.2.0. Note: of the two access links, one passes through SLB and the other does not. The reason is that in-cluster load balancing is enabled from Terway 1.2.0 onward, so access to the External IP is load-balanced to the Service CIDR block. Please see section 2.8 for more information.
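The outcomes of sections 2.8 to 2.10 can be condensed into a small decision function. This is only a summary sketch of the behavior described in this article. The function names and result labels are hypothetical, and the sketch assumes that in-cluster load balancing has not been enabled manually on versions earlier than 1.2.0.

```python
def parse_version(version: str) -> tuple:
    """Turn '1.2.0' (or 'v1.2') into a comparable tuple padded to three parts."""
    parts = [int(p) for p in version.lstrip("v").split(".")]
    return tuple(parts + [0] * (3 - len(parts)))


def external_ip_path(terway_version: str, same_node: bool, same_eni: bool) -> str:
    """Classify in-cluster access to an SVC External IP (hypothetical labels)."""
    if parse_version(terway_version) >= (1, 2, 0):
        # Translated by eBPF in the client pod's namespace; SLB is bypassed.
        return "in-cluster, no SLB"
    if same_node and same_eni:
        # Traffic returns from SLB to the ENI it left from: a loop.
        return "via SLB, loop"
    # Different ENI, on the same node or on another node: SLB forwards normally.
    return "via SLB, ok"


print(external_ip_path("1.1.0", same_node=True, same_eni=True))    # via SLB, loop
print(external_ip_path("1.1.0", same_node=True, same_eni=False))   # via SLB, ok
print(external_ip_path("1.2.0", same_node=False, same_eni=False))  # in-cluster, no SLB
```

The version strings in the usage lines are example inputs, not versions taken from the test environment.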

Data Link Forwarding Diagram

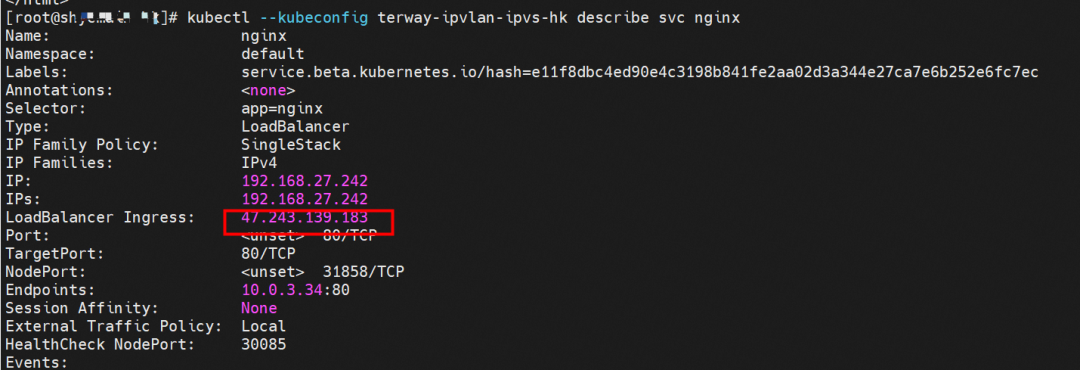

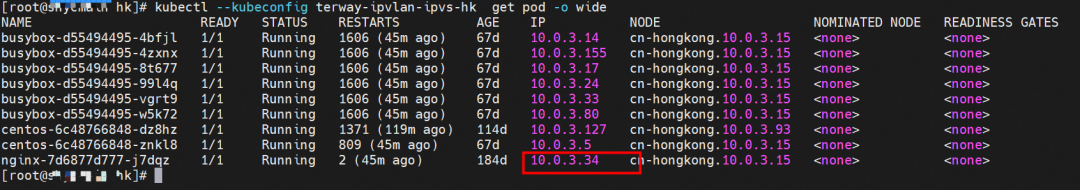

The nginx-7d6877d777-j7dqz, IP address 10.0.3.38, exists on cn-hongkong.10.0.3.15.

By describing SVC, you can see that the nginx pod is added to the backend of SVC nginx. The ClusterIP of the SVC is 192.168.27.242.

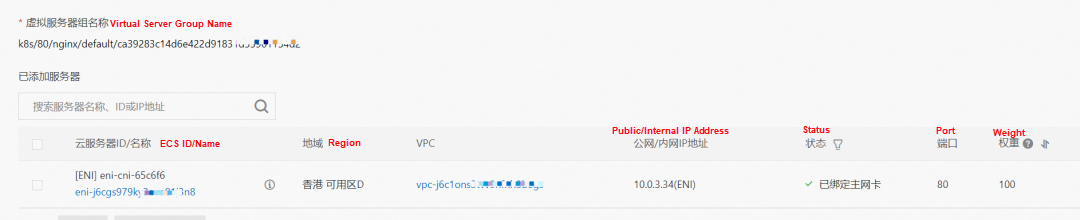

In the SLB console, you can see that the backend of the VServer group lb-j6cj07oi6uc705nsc1q4m is the ENI eni-j6cgs979ky3evp81j3n8 of the two backend nginx pods.

From outside the cluster, the backend VServer group of the SLB instance is the ENI to which the SVC's backend pods belong, and the internal IP address is the address of the pod.

Data Link Forwarding Diagram

This article focuses on ACK data link forwarding paths in different SOP scenarios in Terway IPVLAN + EBPF mode. To meet customer demand for extreme performance, Terway IPVLAN + EBPF offers higher pod density than Terway ENI and higher performance than Terway ENIIP, but it also increases the complexity of network links and changes how they can be observed. The mode can be divided into 11 SOP scenarios. For each of them, this article sorts out and summarizes the forwarding links, the technical implementation principles, and the cloud product configurations, which provides preliminary guidance for handling link jitter, choosing an optimal configuration, and understanding link principles under the Terway IPVLAN architecture. In Terway IPVLAN mode, eBPF and IPVLAN tunnels keep data links from being forwarded through the kernel protocol stack of the ECS OS, which brings higher performance. At the same time, multiple IP addresses share an ENI in the same way as in ENIIP mode, which preserves pod density. However, as business scenarios become more complex, access control increasingly needs to be managed at the pod level, for example by setting security ACL rules per pod.

Part five of this series analyzes the Terway ENI-Trunking mode: Analysis of Alibaba Cloud Container Network Data Link (5): Terway ENI-Trunking.

