Speech synthesis systems can be divided into concatenative (splicing) synthesis systems and parametric synthesis systems. Using a neural network as the model in a parametric synthesis system significantly improves its synthesis quality and naturalness. At the same time, the popularity of IoT devices (such as smart speakers and smart TVs) places limits on computing resources and imposes real-time factor requirements on parametric synthesis systems deployed on those devices. The Deep Feedforward Sequential Memory Network (DFSMN) introduced in this study maintains synthesis quality while effectively reducing computational cost and increasing synthesis speed.

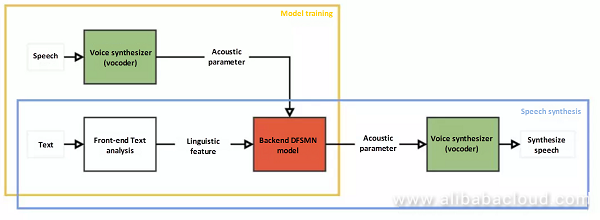

We use a statistical parametric speech synthesis system based on a Bidirectional Long Short-Term Memory (BLSTM) network as the baseline. Like other modern statistical parametric speech synthesis systems, the proposed DFSMN-based system consists of three main parts, as shown in the figure above: a vocoder, a front-end module, and a backend module. We use WORLD, an open-source vocoder, to extract spectral information, the logarithm of the fundamental frequency (log F0), band aperiodicity (BAP), and the voiced/unvoiced flag from the original speech waveform during training, and to convert acoustic parameters back into a speech waveform during synthesis.

The front-end module performs text normalization and lexical analysis on the input text and encodes the resulting linguistic features, which serve as inputs for neural network training. The backend module, here the DFSMN, learns the mapping from these linguistic features to acoustic parameters. The DFSMN builds on the Compact Feedforward Sequential Memory Network (cFSMN), an improved version of the standard FSMN that introduces low-rank matrix factorization into the network structure. This simplifies the FSMN, reduces the number of model parameters, and speeds up both training and prediction.

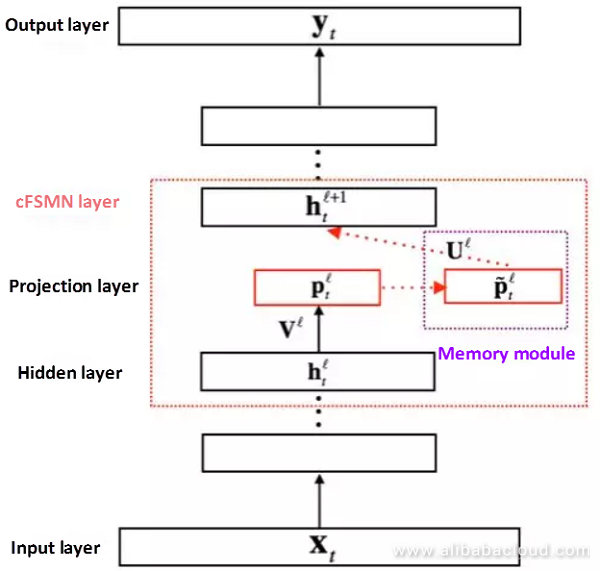

The figure above shows the structure of the cFSMN. The computation in each cFSMN layer proceeds in the following steps:

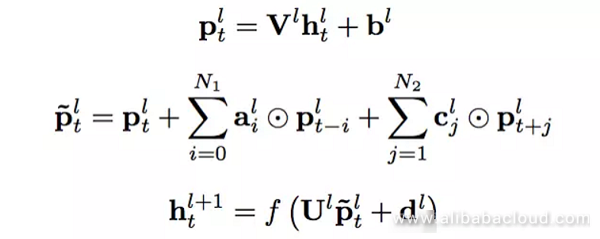

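In order, the three steps are a linear projection of the hidden layer output into a low-dimensional projection layer, a memory module that combines the projection of the current frame with those of neighboring past and future frames, and a nonlinear transformation that maps the result to the next hidden layer.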
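
As a sketch, the three steps can be written as below. The notation is assumed, following the usual cFSMN formulation rather than copied from the figure: $\mathbf{h}_t^{\ell}$ is the hidden output of layer $\ell$ at frame $t$, $\mathbf{V}^{\ell}$ and $\mathbf{U}^{\ell}$ are the two low-rank factors, $N_1$ and $N_2$ are the lookback and lookahead orders of the memory module, $\mathbf{a}_i^{\ell}$ and $\mathbf{c}_j^{\ell}$ are learned coefficient vectors, $\odot$ is element-wise multiplication, and $f$ is a nonlinearity such as ReLU.

$$
\begin{aligned}
\mathbf{p}_t^{\ell} &= \mathbf{V}^{\ell}\mathbf{h}_t^{\ell} + \mathbf{v}^{\ell} \\
\tilde{\mathbf{p}}_t^{\ell} &= \mathbf{p}_t^{\ell} + \sum_{i=0}^{N_1} \mathbf{a}_i^{\ell} \odot \mathbf{p}_{t-i}^{\ell} + \sum_{j=1}^{N_2} \mathbf{c}_j^{\ell} \odot \mathbf{p}_{t+j}^{\ell} \\
\mathbf{h}_t^{\ell+1} &= f\left(\mathbf{U}^{\ell}\tilde{\mathbf{p}}_t^{\ell} + \mathbf{b}^{\ell+1}\right)
\end{aligned}
$$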

Like recurrent neural networks (RNNs) such as the BLSTM, the cFSMN can capture long-range information in a sequence by adjusting the order of its memory modules. Unlike RNNs, however, the cFSMN has no recurrent connections and can be trained with standard backpropagation (BP). Compared with RNNs, which are trained with the Backpropagation Through Time (BPTT) algorithm, the cFSMN trains faster and is less susceptible to vanishing gradients.
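
To make the feedforward nature concrete, here is a minimal pure-Python sketch of one cFSMN layer's forward pass. It assumes the three-step form described above (projection, memory module, ReLU); the function names, the zero treatment of frames beyond the sequence boundary, and the use of plain lists instead of a tensor library are illustrative assumptions, not the authors' implementation. Each output frame depends only on a fixed window of projected frames, so there is no recurrence to unroll.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Vector, bias: Vector) -> Vector:
    """Return m @ x + bias using plain Python lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(m, bias)]


def memory_module(p: List[Vector], a: List[Vector], c: List[Vector]) -> List[Vector]:
    """cFSMN memory: p~_t = p_t + sum_{i=0}^{N1} a_i*p_{t-i} + sum_{j=1}^{N2} c_j*p_{t+j}.

    `a` holds N1+1 lookback coefficient vectors, `c` holds N2 lookahead vectors.
    Frames outside the sequence contribute nothing (zero padding, an assumption).
    """
    T = len(p)
    out = []
    for t in range(T):
        acc = list(p[t])
        for i, a_i in enumerate(a):  # i = 0..N1: current and past frames
            if t - i >= 0:
                acc = [s + w * x for s, w, x in zip(acc, a_i, p[t - i])]
        for j, c_j in enumerate(c, start=1):  # j = 1..N2: future frames
            if t + j < T:
                acc = [s + w * x for s, w, x in zip(acc, c_j, p[t + j])]
        out.append(acc)
    return out


def cfsmn_layer(h, V, v_bias, a, c, U, u_bias):
    """One cFSMN layer applied to a whole sequence h (list of frame vectors)."""
    p = [matvec(V, h_t, v_bias) for h_t in h]  # 1) low-rank linear projection
    p_tilde = memory_module(p, a, c)  # 2) memory module over neighboring frames
    # 3) nonlinear transformation (ReLU) back to the hidden dimension
    return [[max(0.0, z) for z in matvec(U, pt, u_bias)] for pt in p_tilde]
```

For example, with one-dimensional frames `h = [[1.0], [2.0], [3.0]]`, identity projections, `a = [[0.5], [0.25]]`, `c = [[1.0]]`, and an output bias of `-4.0`, the memory outputs are 3.5, 6.25, and 5.0, and the layer returns `[[0.0], [2.25], [1.0]]`.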

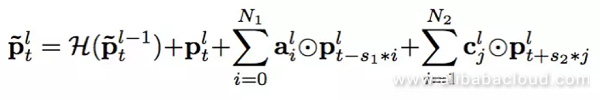

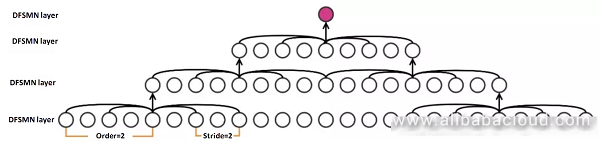

The DFSMN is obtained by further improving the cFSMN. It adds skip connections, a technique widely used in deep neural networks, so that gradients can bypass the nonlinear transformations during backpropagation. As a result, the network converges quickly and reliably even when many DFSMN layers are stacked. Greater depth benefits a DFSMN model in two ways. First, deeper networks generally have stronger representational power. Second, greater depth indirectly increases the context length the model uses when predicting the output for the current frame, which intuitively helps capture long-range information in a sequence. Specifically, we add skip connections between the memory modules of adjacent layers. Because the memory modules in all DFSMN layers have the same dimension, the skip connections can be implemented as identity transformations.
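
A sketch of a DFSMN memory module with an identity skip connection from the layer below, in assumed notation: $\mathbf{p}_t^{\ell}$ is the projection of layer $\ell$ at frame $t$, $\tilde{\mathbf{p}}_t^{\ell}$ is the output of that layer's memory module, $N_1$ and $N_2$ are the lookback and lookahead orders, and $\mathbf{a}_i^{\ell}$ and $\mathbf{c}_j^{\ell}$ are learned coefficient vectors.

$$
\tilde{\mathbf{p}}_t^{\ell} = \tilde{\mathbf{p}}_t^{\ell-1} + \mathbf{p}_t^{\ell} + \sum_{i=0}^{N_1} \mathbf{a}_i^{\ell} \odot \mathbf{p}_{t-i}^{\ell} + \sum_{j=1}^{N_2} \mathbf{c}_j^{\ell} \odot \mathbf{p}_{t+j}^{\ell}
$$

Because the first term is an identity mapping, the gradient with respect to $\tilde{\mathbf{p}}_t^{\ell-1}$ always contains a direct path that passes through no nonlinearity, which is what keeps deep stacks trainable.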

The DFSMN is therefore a flexible model. When input sequences are short, or when prediction latency must be low, we can use a small memory order, so that only frames close to the current frame are used to predict its output. When input sequences are long, or when prediction latency is not a concern, we can use a large memory order so that the model makes effective use of long-range information in the sequence to improve its performance.

In addition to the order, we add another hyperparameter to the DFSMN memory modules: the stride, which sets how many adjacent frames are skipped when a memory module extracts information from past or future frames. The motivation is that adjacent frames overlap much more in speech synthesis than in speech recognition, so skipping some of them loses little information while extending the span each memory module covers.
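
The following pure-Python sketch shows how a stride changes which frames a memory module reads, and how the identity skip connection enters. It assumes that lookback taps read frame `t - s1*i` (i = 0..N1) and lookahead taps read frame `t + s2*j` (j = 1..N2); the names `memory_taps` and `dfsmn_memory` are hypothetical, and out-of-range frames are treated as zero.

```python
from typing import List, Optional, Tuple

Vector = List[float]


def memory_taps(t: int, n1: int, n2: int, s1: int, s2: int) -> Tuple[List[int], List[int]]:
    """Frame indices read for frame t: t - s1*i (i = 0..N1) and t + s2*j (j = 1..N2)."""
    past = [t - s1 * i for i in range(n1 + 1)]
    future = [t + s2 * j for j in range(1, n2 + 1)]
    return past, future


def dfsmn_memory(p: List[Vector], prev: Optional[List[Vector]],
                 a: List[Vector], c: List[Vector], s1: int, s2: int) -> List[Vector]:
    """Strided DFSMN memory module with an identity skip connection.

    p:    projections p_t of this layer, one vector per frame
    prev: memory outputs of the layer below (None for the first DFSMN layer)
    a, c: N1+1 lookback and N2 lookahead coefficient vectors
    """
    T = len(p)
    out = []
    for t in range(T):
        acc = list(p[t])
        if prev is not None:  # identity skip connection: same dimension, no weights
            acc = [x + y for x, y in zip(acc, prev[t])]
        past, future = memory_taps(t, len(a) - 1, len(c), s1, s2)
        for coeff, k in zip(a + c, past + future):
            if 0 <= k < T:  # frames outside the sequence are skipped (zero padding)
                acc = [s + w * x for s, w, x in zip(acc, coeff, p[k])]
        out.append(acc)
    return out
```

With orders of 2 and a stride of 2, `memory_taps(20, 2, 2, 2, 2)` reads frames 20, 18, 16 from the past and 22, 24 from the future: the same number of coefficients covers twice the span that a stride of 1 would.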

As mentioned above, besides directly increasing the memory order of each layer, increasing depth also enlarges the context the model uses when predicting the output for the current frame. The figure above gives an example.
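
A back-of-the-envelope way to see this: if each layer's memory module reaches `s1*N1` frames back and `s2*N2` frames ahead, and every other operation is per-frame, the reachable spans simply add up across stacked layers. The sketch below assumes exactly that; `context_frames` and `frames_to_ms` are hypothetical helpers, and the 5 ms default is the frame shift used in the experiments.

```python
from typing import Tuple


def context_frames(num_layers: int, n1: int, n2: int, s1: int, s2: int) -> Tuple[int, int]:
    """(past, future) frames visible to the top DFSMN memory output.

    Assumes each layer's memory reaches back s1*n1 and ahead s2*n2 frames and
    that these spans add up across stacked layers (all other ops are per-frame).
    """
    return num_layers * n1 * s1, num_layers * n2 * s2


def frames_to_ms(frames: int, frame_shift_ms: int = 5) -> int:
    """Convert a frame count to milliseconds at the given frame shift."""
    return frames * frame_shift_ms
```

Under this assumption, orders of 10 with a stride of 2 add 20 frames of context per side for every layer, so three layers see 60 frames each way, and reaching 120 frames (600 ms) would take six such layers.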

In the experiments, we use a dataset of Chinese novels read by a male speaker, divided into a training set and a validation set. The training set contains 38,600 utterances (about 83 hours), and the validation set contains 1,400 utterances (about 3 hours). All speech data is sampled at 16 kHz and analyzed with a 25 ms frame length and a 5 ms frame shift. We use the WORLD vocoder to extract acoustic parameters frame by frame: 60-dimensional mel-cepstral coefficients, 3-dimensional log F0, 11-dimensional BAP features, and a 1-dimensional voiced/unvoiced flag. These four feature streams serve as the four targets of multi-target training. The linguistic features extracted by the front-end module have 754 dimensions in total and serve as the network inputs.
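
The numbers above fix the per-frame input and output sizes of the network. This small sketch just does the arithmetic; the stream labels (`mcc`, `lf0`, `bap`, `vuv`) are hypothetical names, and the frame count is an approximation that treats all 83 hours as continuous speech.

```python
SAMPLE_RATE_HZ = 16_000
FRAME_LENGTH_MS = 25
FRAME_SHIFT_MS = 5

# Per-frame target streams extracted with WORLD (dimensions from the text).
TARGET_DIMS = {"mcc": 60, "lf0": 3, "bap": 11, "vuv": 1}
LINGUISTIC_DIM = 754  # network input size per frame


def ms_to_samples(ms: int) -> int:
    """Number of waveform samples spanned by `ms` milliseconds."""
    return SAMPLE_RATE_HZ * ms // 1000


frame_length_samples = ms_to_samples(FRAME_LENGTH_MS)  # 400 samples
frame_shift_samples = ms_to_samples(FRAME_SHIFT_MS)  # 80 samples
frames_per_second = 1000 // FRAME_SHIFT_MS  # 200 frames per second
output_dim = sum(TARGET_DIMS.values())  # 75 target values per frame
approx_training_frames = 83 * 3600 * frames_per_second  # 59,760,000 frames
```

So the network maps a 754-dimensional input to a 75-dimensional output at 200 frames per second, over roughly 60 million training frames.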

The baseline system uses a strong BLSTM model consisting of one fully connected layer at the bottom and three BLSTM layers on top. The fully connected layer has 2,048 units, and each BLSTM layer has 2,048 memory cells. The BLSTM model is trained with the BPTT algorithm, while the DFSMN models are trained with standard BP. All models, including the baseline, are trained on two GPUs using the Blockwise Model-update Filtering (BMUF) algorithm, with multi-target frame-level Mean Squared Error (MSE) as the training criterion.
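
A minimal sketch of a multi-target frame-level MSE follows. The text does not say how the four streams are weighted, so this version assumes equal weights, averages over frames and dimensions within each stream, and sums the per-stream errors; `multi_target_mse` is a hypothetical name.

```python
from typing import Dict, List, Tuple


def multi_target_mse(pred: List[List[float]], target: List[List[float]],
                     dims: Dict[str, int]) -> Tuple[float, Dict[str, float]]:
    """Frame-level MSE per target stream, summed with equal weights (an assumption).

    Each frame vector is the concatenation of the streams in `dims` order
    (dicts preserve insertion order in Python 3.7+).
    """
    per_stream = {}
    offset = 0
    for name, d in dims.items():
        sq_err = 0.0
        for p_frame, t_frame in zip(pred, target):
            for k in range(offset, offset + d):
                sq_err += (p_frame[k] - t_frame[k]) ** 2
        per_stream[name] = sq_err / (len(pred) * d)  # mean over frames and dims
        offset += d
    return sum(per_stream.values()), per_stream
```

Keeping the per-stream values separate makes it easy to monitor, for example, F0 error independently of spectral error during training.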

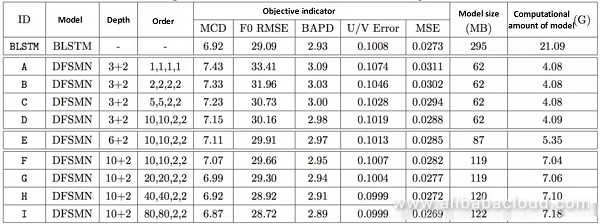

All DFSMN models consist of several DFSMN layers at the bottom and two fully connected layers on top. Each DFSMN layer has 2,048 hidden nodes and 512 projection nodes, and each fully connected layer has 2,048 nodes. In the table above, the third column gives the number of DFSMN layers and fully connected layers in each model, and the fourth column gives the order and stride of the memory modules in the DFSMN layers.
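
To illustrate why the low-rank factorization shrinks the model, here is a rough per-layer weight count for the sizes above. It assumes one DFSMN layer consists of a 2048-to-512 projection, one 512-dimensional coefficient vector per memory tap, and a 512-to-2048 output matrix, with biases ignored; it compares this against a hypothetical unfactorized 2048-by-2048 layer. It is not meant to reproduce the whole-model size comparison reported later.

```python
def factorized_layer_weights(hidden: int, proj: int, n1: int, n2: int) -> int:
    """Weights in one DFSMN layer: V (hidden->proj), U (proj->hidden), memory taps.

    Biases are ignored; memory coefficients are one proj-sized vector per tap
    (N1 + 1 lookback taps and N2 lookahead taps), as assumed in this sketch.
    """
    return hidden * proj + proj * hidden + proj * (n1 + 1 + n2)


def dense_layer_weights(hidden: int) -> int:
    """Weights in a hypothetical unfactorized hidden->hidden layer, biases ignored."""
    return hidden * hidden
```

With orders of 10, `factorized_layer_weights(2048, 512, 10, 10)` gives 2,107,904 weights versus 4,194,304 for the dense layer, so the factorized layer needs roughly half as many, and the memory taps add only about 0.5% on top of the two projection matrices.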

Since this is the first application of the FSMN model to speech synthesis, our experiments start from a shallow model with a small order, model A. (Only model A uses a stride of 1; the other models use a stride of 2, because models with a stride of 2 consistently performed slightly better than those with a stride of 1.) From system A to system D, the order increases while the number of DFSMN layers stays fixed at three. From system D to system F, the number of layers increases while the orders and strides (lookback order, lookahead order, lookback stride, lookahead stride) stay fixed at 10, 10, 2, 2. From system F to system I, the order increases again while the number of DFSMN layers stays fixed at 10. Across these experiments, the objective metric decreases steadily (lower is better) as the depth and order of the DFSMN model increase, and system H outperforms the BLSTM baseline on this metric.

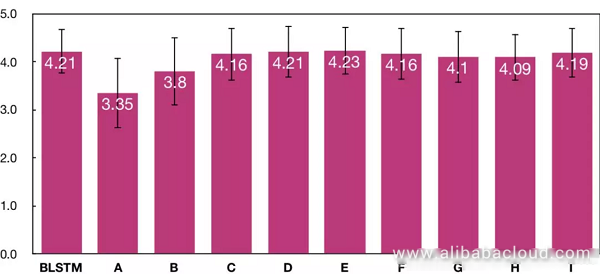

We also ran a Mean Opinion Score (MOS) test (higher is better); the results are shown in the figure above. Forty native Chinese speakers, paid through a crowdsourcing platform, took part. Each system synthesized 20 out-of-set utterances, and each utterance was rated independently by ten different listeners. The MOS results show that subjective naturalness increases steadily from system A to system E, and that system E reaches the level of the BLSTM baseline. However, although the objective metric keeps improving for the subsequent systems, their subjective scores fluctuate around that of system E without improving further.
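
With 20 utterances and ten listeners per utterance, each system's MOS is an average over 200 ratings. The sketch below computes that mean together with a normal-approximation 95% confidence interval; the interval is an addition for illustration (the article reports only mean scores), and `mos_with_ci` is a hypothetical helper.

```python
import math
import statistics
from typing import List, Tuple

RATINGS_PER_SYSTEM = 20 * 10  # 20 utterances x 10 listeners each = 200 ratings


def mos_with_ci(ratings: List[float], z: float = 1.96) -> Tuple[float, float]:
    """Mean opinion score and half-width of a normal-approximation 95% CI."""
    mean = statistics.mean(ratings)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mean, half_width
```

Overlapping intervals between two systems are one rough signal that their difference in naturalness may not be meaningful, which is relevant when comparing systems whose scores fluctuate around the same value.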

From the subjective and objective tests, we conclude that about 120 frames (600 ms) of past and future context is the upper limit of the context length needed for acoustic modeling in speech synthesis; additional context does not improve the synthesis result. Compared with the BLSTM baseline, the proposed DFSMN system achieves comparable subjective naturalness with a model one quarter the size and prediction four times as fast. This makes the DFSMN system well suited to end-user products with strict memory and computational-efficiency requirements, such as IoT devices.


References:

https://arxiv.org/abs/1802.09194

