
By Suchuan, from the Alibaba F(x) Team

In 2017, a paper titled "pix2code: Generating Code from a Graphical User Interface Screenshot" drew public attention. The paper applied deep learning to UI development: a model recognizes a UI screenshot, generates a description of the UI structure, and converts that description into HTML code. Some people think generating code directly from UI screenshots is of little significance. AI and Sketch software already save the structure description of design files in their own data structures, so machine learning is not needed to obtain it. Moreover, the certainty of the generated code cannot be guaranteed. Others agree with the idea and think deep learning models are capable of UI feature learning and intelligent UI design.

After the paper was released, Screenshot2Code, a project based on pix2code, ranked first on the GitHub lists. The project can automatically convert UI screenshots into HTML code. Its developer claimed that artificial intelligence would completely change frontend development within three years. This also raised doubts among users who don't believe HTML code alone can meet the requirements of complex frontend technologies and various frameworks.

In 2018, Microsoft AI Lab open-sourced the sketch-to-code tool, Sketch2Code. Some people considered the generated code unsatisfactory and not applicable to the production environment. Others thought the automatic generation of code was still in the beginning stages and had broader development prospects.

In 2019, Alibaba opened its intelligent code generation platform, imgcook, to the public. It recognizes design drafts, such as Sketch files, PSD files, and images, and intelligently generates different types of code, such as React, Vue, Flutter, and Mini Program code. It automatically generated 79.34% of the frontend code during the 2019 Double 11 Global Shopping Festival. This showed that intelligent code generation was no longer an offline experiment and that it could create real value.

Every time these new automatic code generation products are released, there will always be discussions and worries on the Internet. For example, questions and comments such as, "Will AI replace the frontend?" and "A large number of frontend programmers will lose their jobs" are commonplace.

For the former question, AI will not replace the frontend for a while, but it will bring changes. On the one hand, the explorers of frontend intelligence will change. Just as they can become Node.js server engineers, they can become machine learning engineers, creating more value and achievements. On the other hand, the developers who enjoy the fruits of frontend intelligence will be affected, because their ways of doing R&D will be updated. For example, intelligent code generation, code recommendation, error correction, and automated UI testing can take over large amounts of simple, repetitive work, so developers can focus on more valuable things.

This article discusses our ideas about AI in the frontend field and our efforts to implement intelligent code generation. As explorers in the frontend intelligence field, we, the Taobao F(x) Team, have ideas about the future development of AI in the frontend field, and we work to promote the implementation and iteration of AI in imgcook. As a result, AI supported the intelligent generation of code for 90.4% of new modules during the 2020 Double 11 Global Shopping Festival, and coding efficiency increased by 68%.

In 2020, the monthly average PV and UV of the official imgcook website were 6,519 and 3,059, which are 2.5 times higher than in 2019.

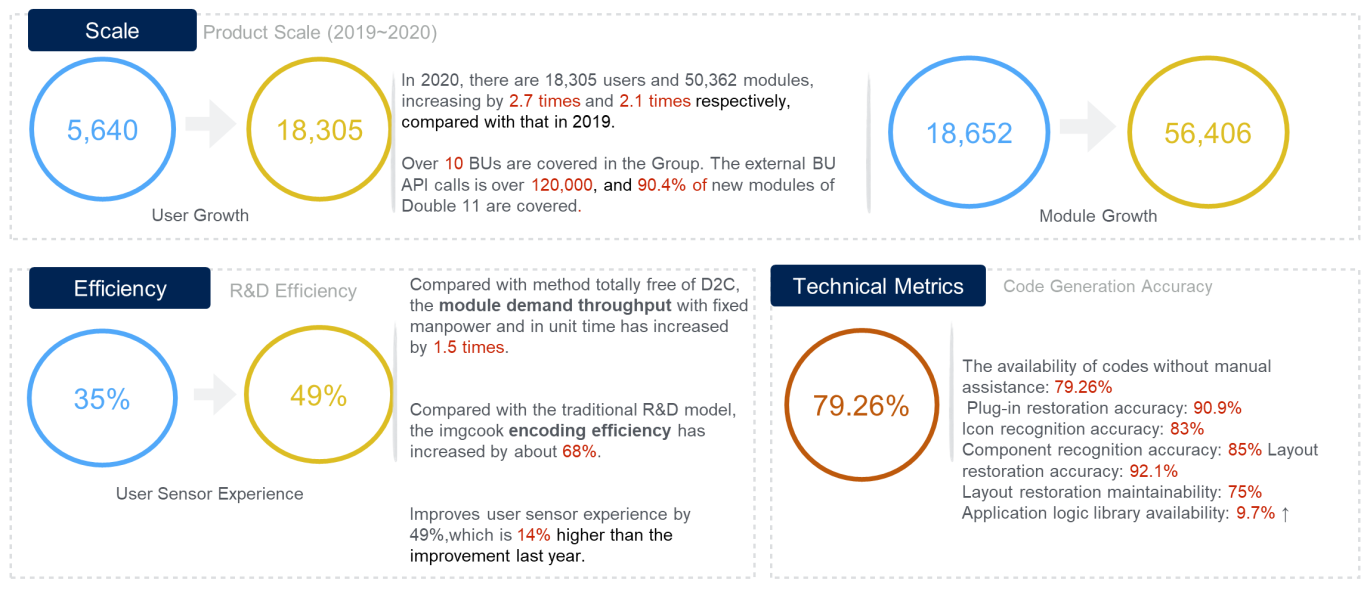

There are 18,305 imgcook users, among which 77% are community users, and 23% are internal users of Alibaba Group. There are 56,406 imgcook modules, among which 68% are external modules, and 32% are internal modules. The number of users and modules has increased by 2.7 times and 2.1 times in 2020, compared to 2019.

At least 150 companies are involved in the community, and more than ten Business Units (BUs) are covered in the Group. 90.4% of the new modules for Double 11 are covered. 79.26% of the intelligently generated code was retained in the released code without manual assistance, and the coding efficiency (ratio of module complexity to R&D time) has increased by 68%.

Compared with 2019, the user sensory experience has improved by 14%. Compared with the D2C-free operation, the throughput of module demands with fixed workloads and in unit time increased by about 1.5 times. Moreover, compared with traditional R&D models, imgcook has enhanced the coding efficiency by about 68%.

An Overview of Imgcook R&D Performance

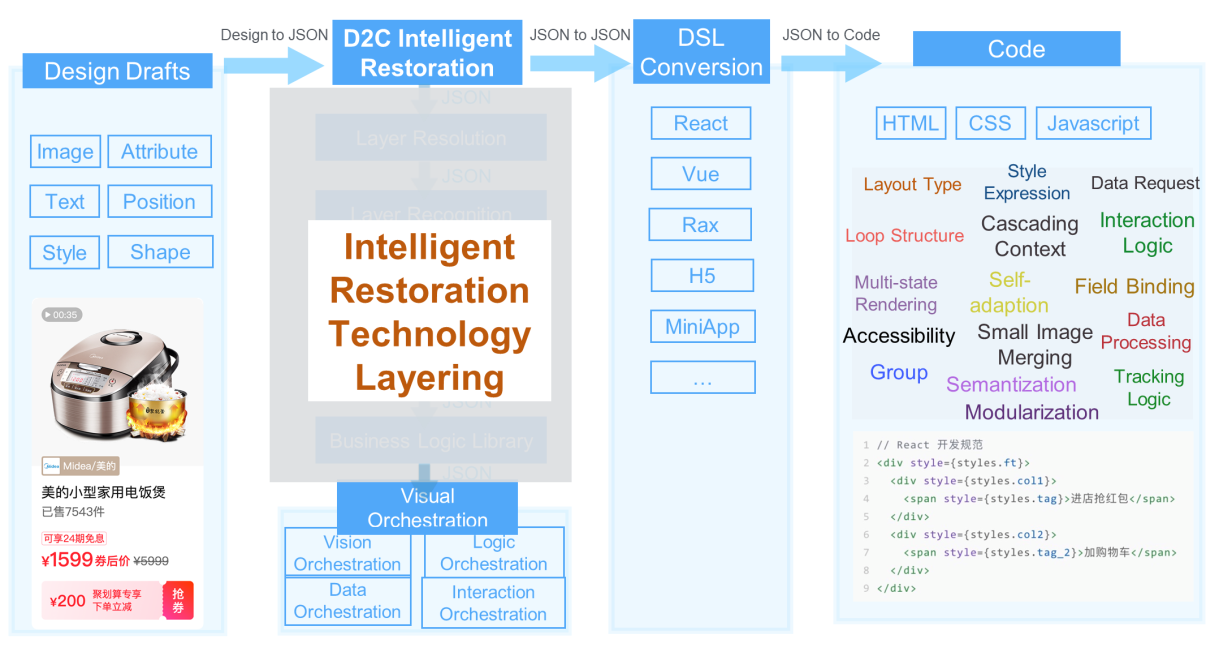

First, consider the principle behind imgcook's intelligent code generation. Imgcook identifies information in the visual draft and transforms that information into code, so that code is generated automatically.

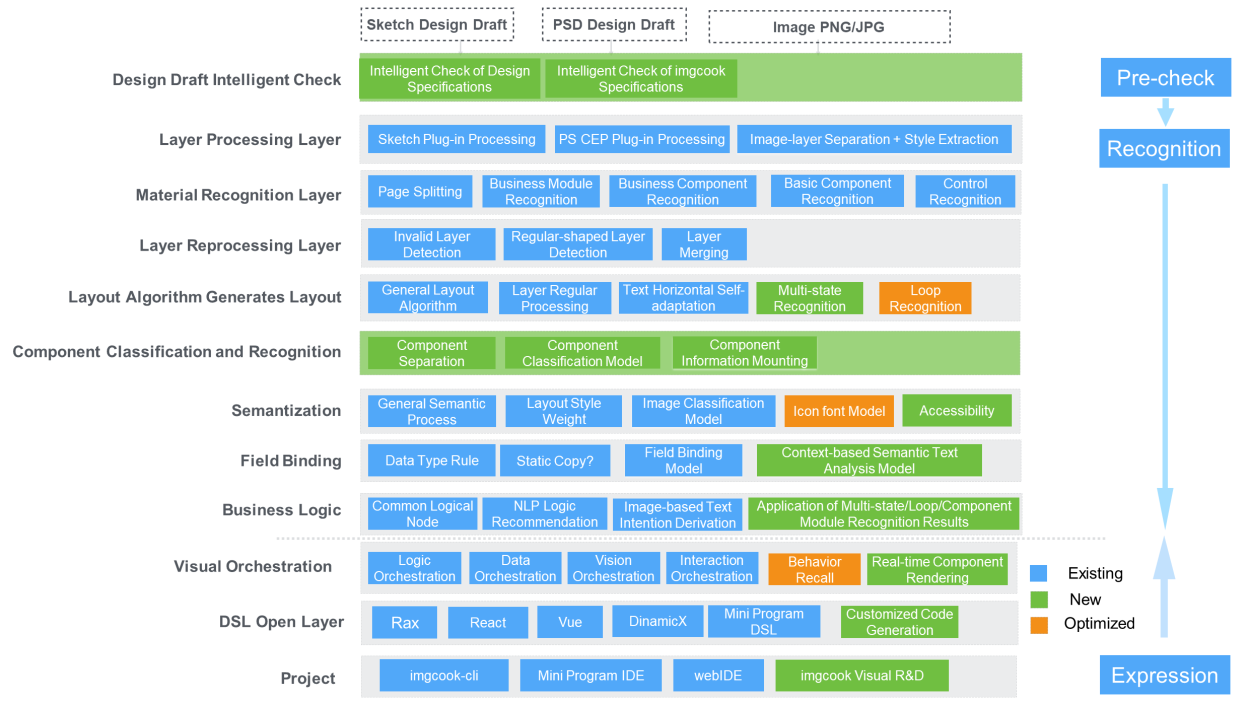

Essentially, imgcook uses plug-ins of design tools to extract JSON descriptive information from design drafts. Then, it processes and converts the information via intelligent restoration technologies, such as rule systems, computer vision, and machine learning. By doing so, a JSON file that conforms to the code structure and semantics is generated and converted to the frontend code with a DSL converter. Serving as a JS function, the DSL converter converts JSON to the necessary code. For example, the output of React DSL is React code that complies with React development specifications.
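The idea of a DSL converter as a function from JSON to code can be sketched as follows. This is a minimal illustration in Python rather than imgcook's actual JavaScript converter. The schema fields (`tag`, `props`, `children`, `text`) and the function names `render_node` and `react_dsl` are assumptions made for this example and are not imgcook's real schema or API.

```python
import json
from typing import Any, Dict


def render_node(node: Dict[str, Any], depth: int = 0) -> str:
    """Render one node of the hypothetical schema as JSX-like markup."""
    pad = "  " * depth
    tag = node["tag"]
    # Assumption: every prop value is a string, so it can be a quoted attribute.
    attrs = "".join(
        f" {name}={json.dumps(value)}" for name, value in node.get("props", {}).items()
    )
    children = node.get("children", [])
    text = node.get("text")
    if not children and text is None:
        return f"{pad}<{tag}{attrs} />"
    lines = [f"{pad}<{tag}{attrs}>"]
    if text is not None:
        lines.append(f"{pad}  {text}")
    lines.extend(render_node(child, depth + 1) for child in children)
    lines.append(f"{pad}</{tag}>")
    return "\n".join(lines)


def react_dsl(schema: Dict[str, Any]) -> str:
    """Wrap the rendered tree in a function component (illustrative output shape)."""
    body = render_node(schema, depth=2)
    return f"export default function Module() {{\n  return (\n{body}\n  );\n}}\n"


# Hypothetical JSON produced by the restoration stage.
schema = {
    "tag": "div",
    "props": {"className": "card"},
    "children": [
        {"tag": "img", "props": {"src": "a.png"}},
        {"tag": "span", "text": "$200"},
    ],
}
print(react_dsl(schema))
```

Because the converter only depends on the JSON, a different target (Vue, Flutter, and so on) would be a different function over the same input.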

Process of D2C Code Intelligent Generation

The core part is the conversion from JSON to JSON. The design draft only has metadata, such as images and texts, with absolute coordinates as the location. Besides, the styles in the design draft are different from those on the Web page. For example, the opacity attribute does not affect sub-nodes in Sketch but does on web pages. Manually written code, however, contains additional information, such as layout types, maintainable DOM structures, data semantics, components, and loops.
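To make the gap between absolute coordinates and layout types concrete, here is a small sketch that guesses a layout direction for sibling layers from their bounding boxes. It is a simple geometric heuristic written for illustration, not imgcook's layout algorithm. The `Box` type and the `infer_layout` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Box:
    """Absolute position and size of one layer, as a design draft would give it."""
    x: float
    y: float
    width: float
    height: float


def _non_overlapping(intervals: List[Tuple[float, float]]) -> bool:
    """True if the intervals, sorted by start, never overlap each other."""
    ordered = sorted(intervals)
    return all(prev[1] <= nxt[0] for prev, nxt in zip(ordered, ordered[1:]))


def infer_layout(boxes: List[Box]) -> str:
    """Guess how sibling layers are arranged: 'row', 'column', or 'absolute'."""
    if _non_overlapping([(b.x, b.x + b.width) for b in boxes]):
        return "row"  # layers sit side by side
    if _non_overlapping([(b.y, b.y + b.height) for b in boxes]):
        return "column"  # layers are stacked vertically
    return "absolute"  # layers overlap, so keep absolute positioning


print(infer_layout([Box(0, 0, 50, 50), Box(60, 0, 50, 50)]))
```

A real system has to handle nesting, alignment, and spacing as well. The sketch only shows that layout type is information that must be inferred, because the draft itself does not store it.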

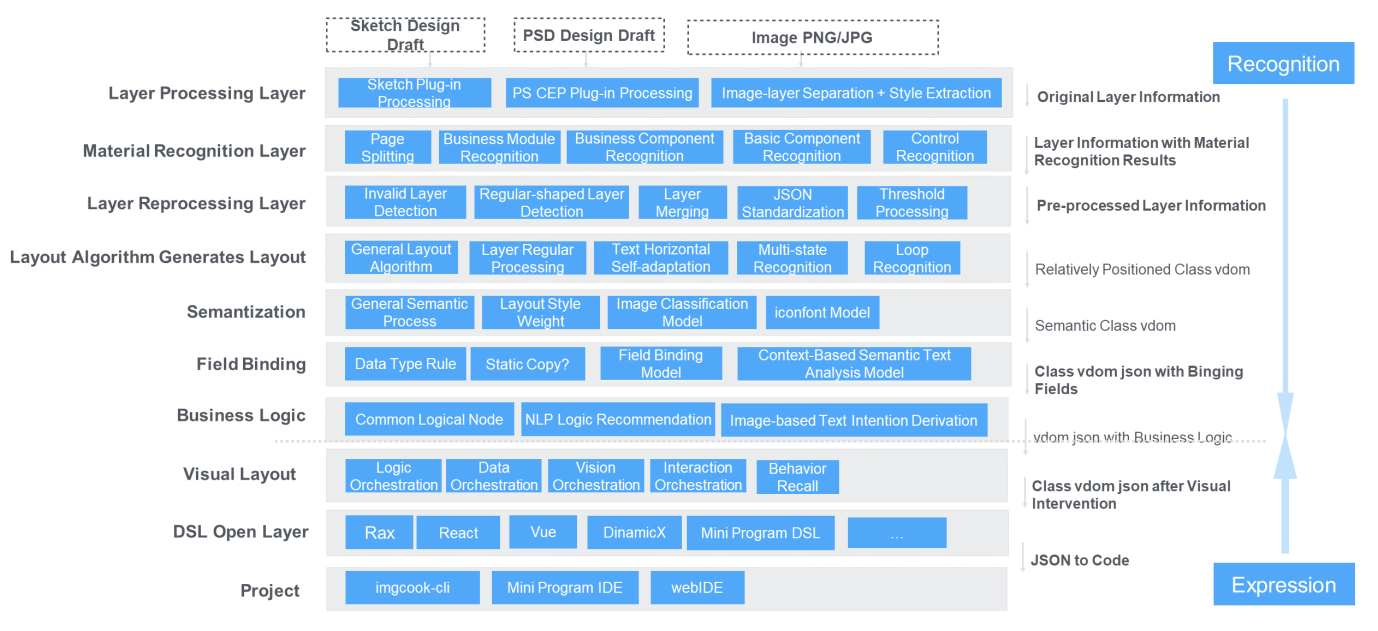

Intelligent restoration aims to generate code that resembles manually written code. The intelligent restoration of D2C is layered, and the input and output of each layer are in JSON format. In essence, intelligent restoration is JSON conversion, layer by layer. To modify the JSON, the imgcook editor can be used for visual intervention. Then, at the DSL layer, the JSON that conforms to the code structure and semantics is converted into code.
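The layer-by-layer JSON conversion described above can be sketched as a chain of functions that each take and return a JSON-like dictionary. The two layers here (`add_layout`, `add_semantics`) and the `intervene` hook that stands in for a manual edit between layers are invented for illustration. They are not imgcook's actual layers.

```python
import copy
from typing import Any, Callable, Dict, List, Optional

Schema = Dict[str, Any]
Layer = Callable[[Schema], Schema]


def add_layout(schema: Schema) -> Schema:
    """Hypothetical layout layer: attach a layout style to the node."""
    out = copy.deepcopy(schema)
    out.setdefault("props", {})["style"] = {"display": "flex"}
    return out


def add_semantics(schema: Schema) -> Schema:
    """Hypothetical semantics layer: pick a class name from the text content."""
    out = copy.deepcopy(schema)
    is_price = out.get("text", "").startswith("$")
    out.setdefault("props", {})["className"] = "price" if is_price else "text"
    return out


def restore(
    schema: Schema,
    layers: List[Layer],
    intervene: Optional[Callable[[str, Schema], Schema]] = None,
) -> Schema:
    """Run each layer in order; `intervene` may edit the JSON after any layer."""
    for layer in layers:
        schema = layer(schema)
        if intervene is not None:
            schema = intervene(layer.__name__, schema)
    return schema


draft = {"tag": "span", "text": "$200"}
print(restore(draft, [add_layout, add_semantics]))
```

Since every layer speaks JSON, a layer can be replaced or an intermediate result edited without touching the other layers.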

D2C Intelligent Restoration Technology Hierarchy

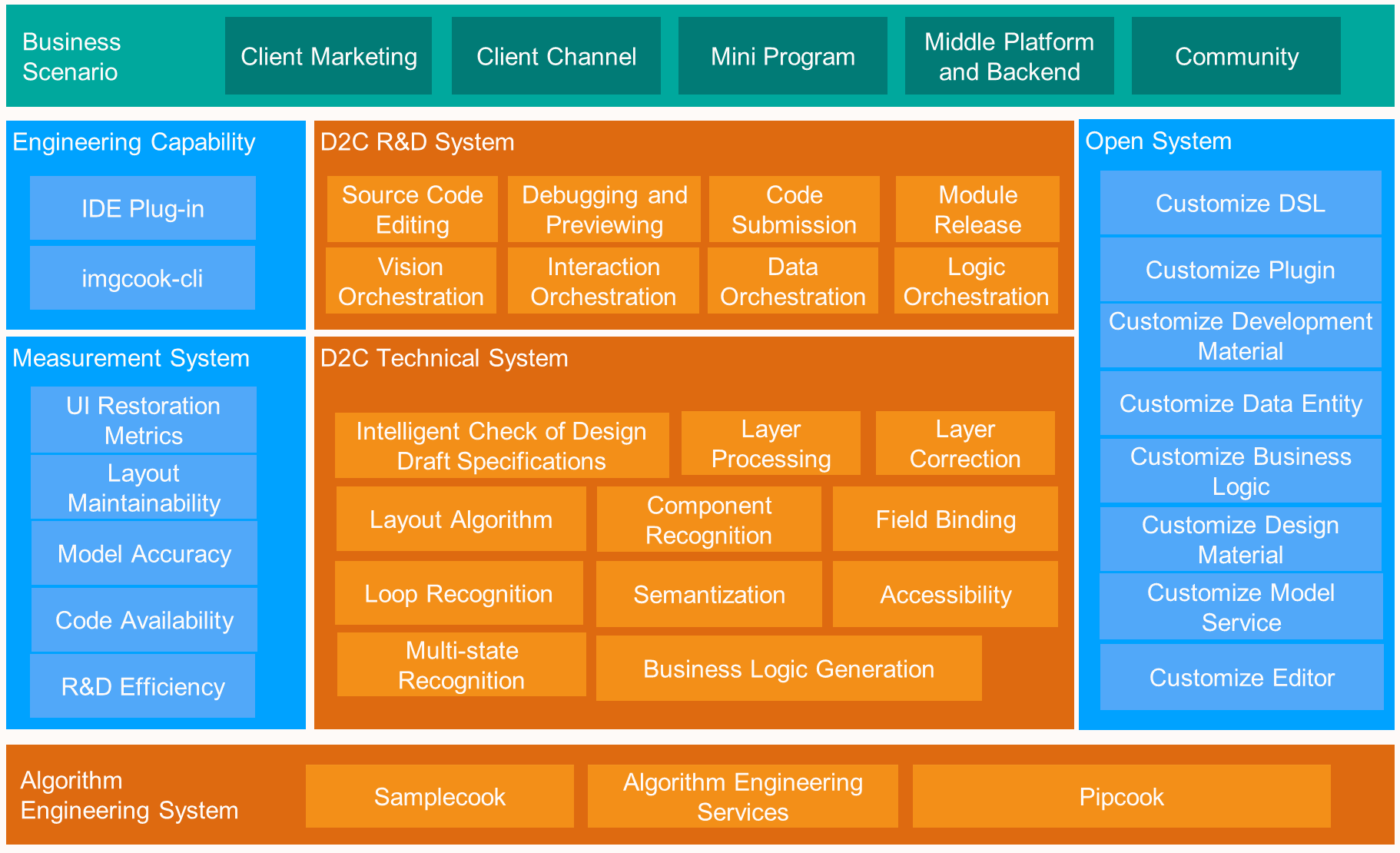

The core procedure of intelligent restoration constitutes the core technical system of D2C, and a measurement system tracks its restoration capability and R&D efficiency. The lower layer, the algorithm engineering system, provides the intelligent capabilities used by the core technical system. It includes Samplecook, algorithm engineering services, and the frontend algorithm engineering framework Pipcook. The upper layer, the D2C R&D system, handles the steps that follow intelligent restoration and meets users' requirements for secondary iteration by enabling visual intervention. Additionally, the engineering procedure is built into imgcook to support all-in-one development, debugging, previewing, and publishing, which improves overall engineering efficiency. Moreover, an open system is formed based on highly scalable customization capabilities, such as customized DSLs and development materials. Together, these form the D2C architecture, which supports various business scenarios, including Alibaba's internal clients, mini programs, middle platforms, backends, and external communities.

D2C Technical Architecture Diagram

With the intelligent frontend, this year, the full procedure of the D2C technical system was enhanced intelligently. Pipcook and Samplecook were provided. The former allows the frontend engineers to be machine learning engineers. The latter solves sample collection issues. The R&D procedure of the marketing module is also optimized to improve overall R&D efficiency.

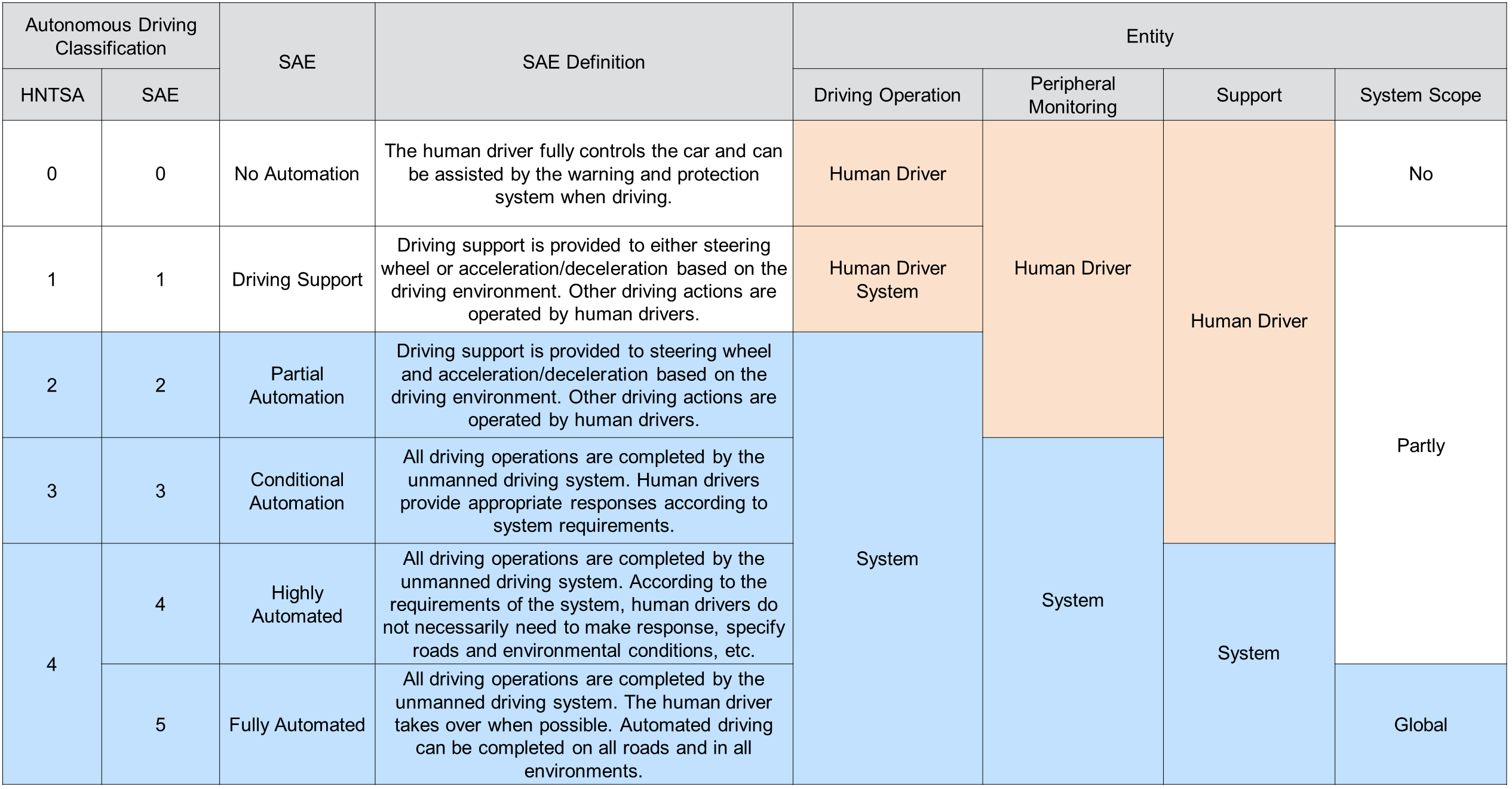

The Taxonomy of Driving Automation for Vehicles divides driving automation into six levels, from 0 to 5. The taxonomy is based on the extent to which the driving automation system can perform dynamic driving tasks and the role allocation and limitations when performing dynamic driving tasks. In high-level autonomous driving, the driver becomes a passenger. The taxonomy sets clear standards that guide various enterprises to carry out more targeted R&D and technology deployment works.

Autonomous Driving Taxonomy

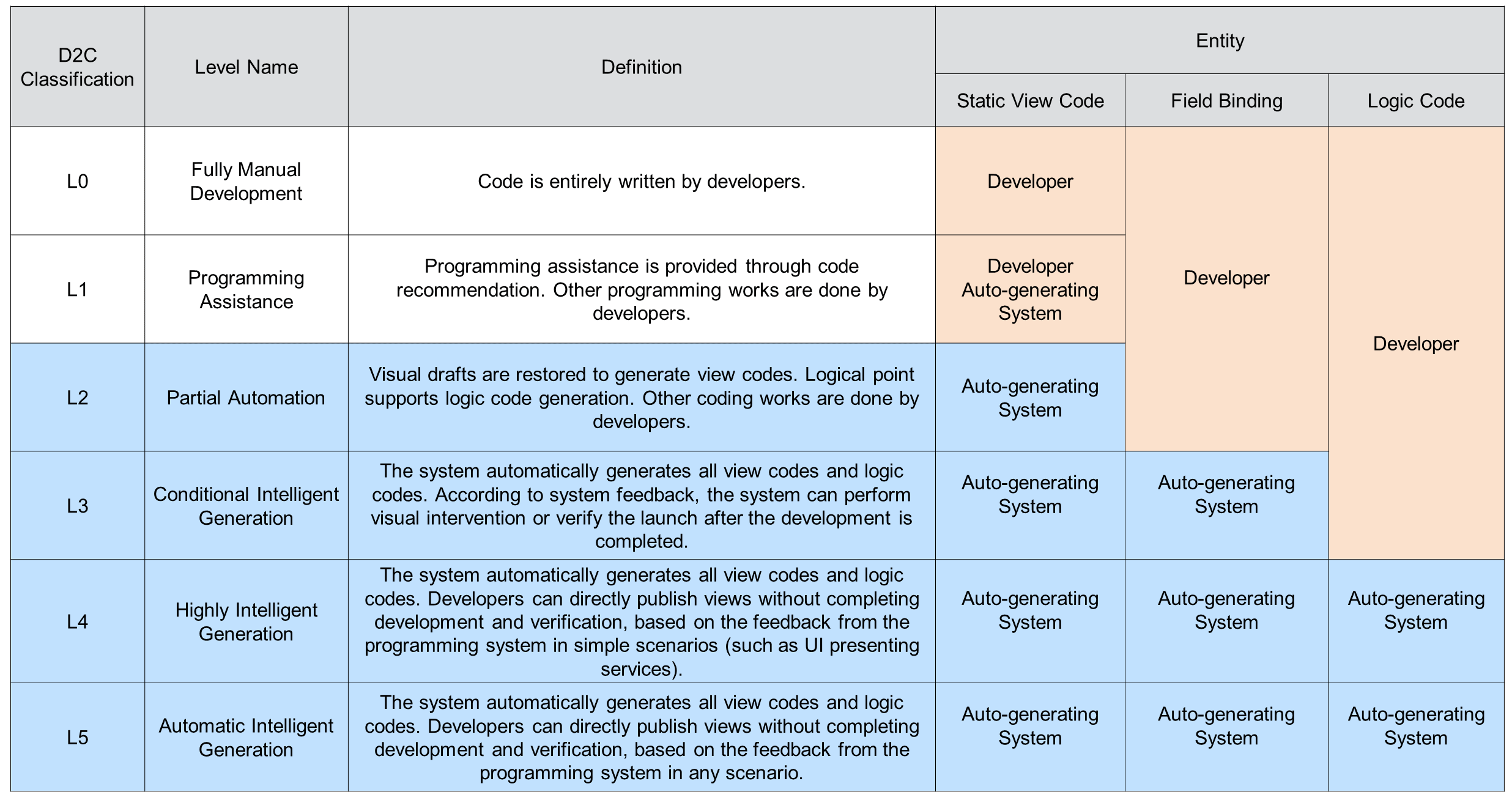

Referring to the taxonomy, we considered the extent to which the D2C system can automatically generate codes and made the role of codes and requirements for manual intervention and verification clear. Based on these works, a similar taxonomy of the D2C system delivery is defined to help understand the current level of the D2C system and the next development direction.

D2C System Delivery Taxonomy

Currently, imgcook is at L3 of D2C. The intelligently generated codes still need visual intervention or manual development before verification and release. It's required to split up the UI information architecture and subdivide intelligent capabilities of code automated generation from the UI information to enter L4.

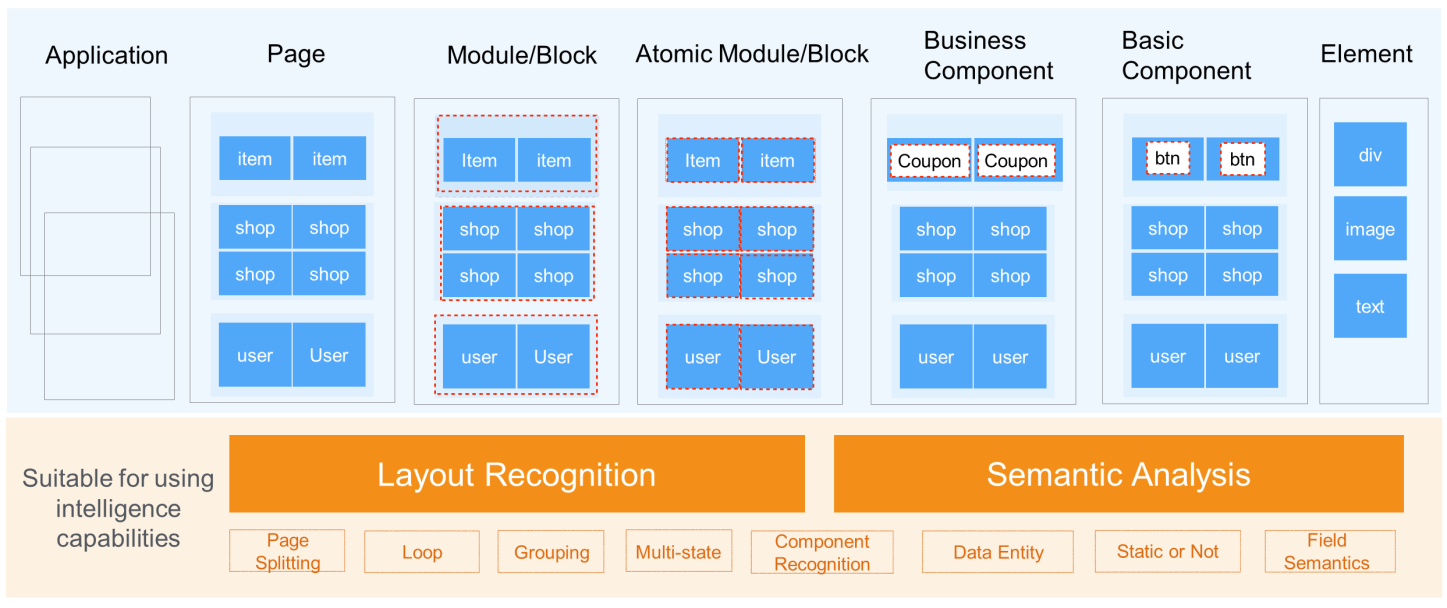

UI Information Architecture Splitting

An application consists of multiple pages. Each page is divided by UI granularity into modules or blocks, atomic modules or blocks, business and basic components, and elements. The layout structure and semantics of the UI at each granularity need to be defined so that modular, componentized, and semantic code can be generated, like code written manually.

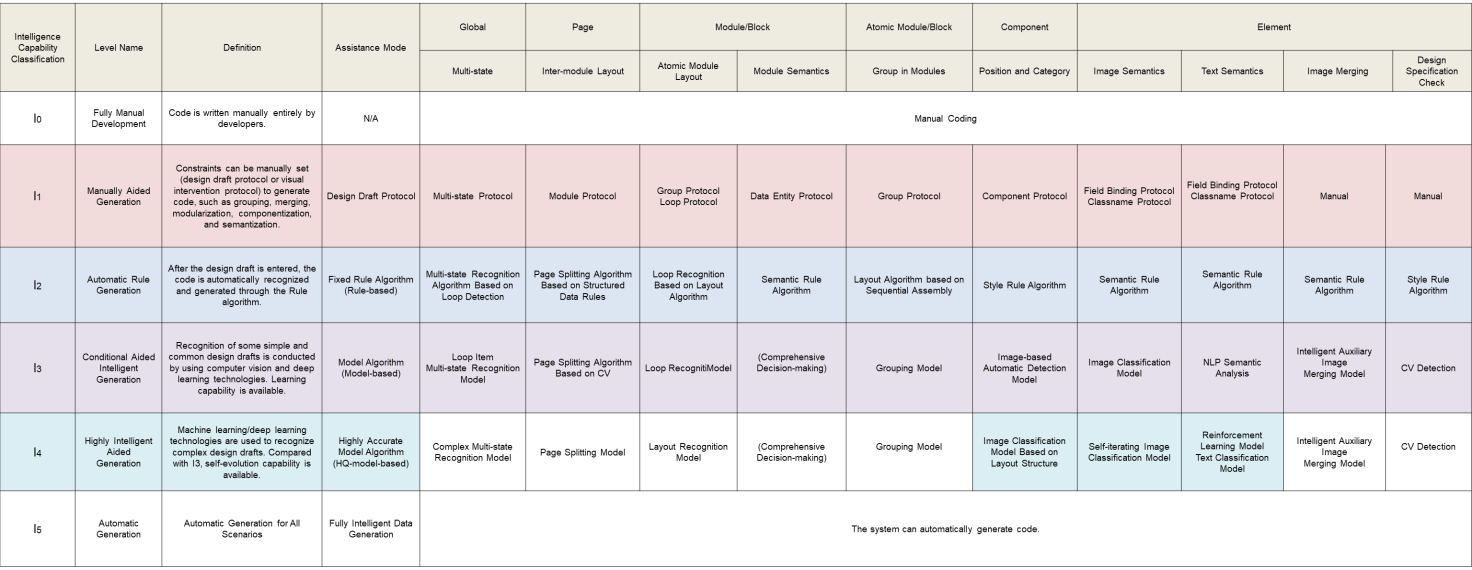

By splitting up the UI information architecture and combining it with imgcook's technical system, we classified intelligence capabilities at different granularities from I0 to I5. The I is short for intelligent.

D2C Intelligent Capability Classification

The colored parts refer to existing capabilities. Imgcook's intelligence capability is currently at I3 and I4 and works in collaboration with capability at I2 in the production environment. The intelligence capability at I3 needs to optimize and iterate the model continuously. The capability enters I4 when the accuracy rate of real online scenarios reaches 75%, or the model can self-iterate. If the capability enters I5, D2C system delivery can enter L4, according to D2C System Delivery Taxonomy. The generated code can be released without manual intervention and verification.

However, we may remain at L4 for a long time, that is, human-machine collaborative programming. The taxonomy aims to promote the implementation of intelligence capability, help improve the intelligence capabilities of D2C, and gain a deeper understanding of D2C intelligence.

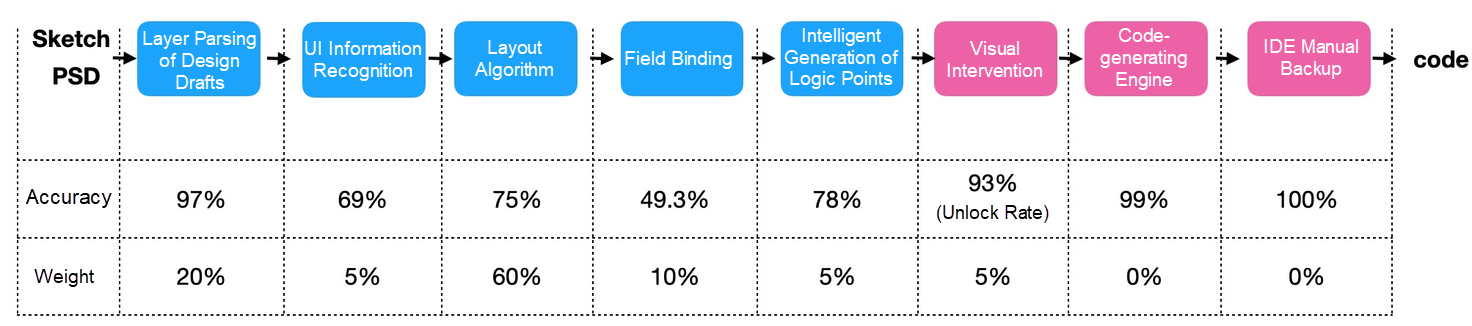

The availability of generated codes is taken as the overall technical indicator. The individual technical indicator and the impact weight on the code availability are defined according to the technical levels of the code generation procedure. The theoretical accuracy of generated code is the sum of the product of accuracy and weight in each stage. The code availability is equal to the proportion of imgcook-generated codes to the final released codes.
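The two indicators defined above are simple arithmetic and can be sketched as follows. The accuracy and weight values in the usage line are made-up numbers for illustration only, not imgcook's measured values. Requiring the weights to sum to 1 and measuring code in lines are assumptions of this sketch.

```python
import math
from typing import Iterable, Tuple


def theoretical_accuracy(stages: Iterable[Tuple[float, float]]) -> float:
    """Sum of accuracy * weight over all stages, given (accuracy, weight) pairs."""
    stages = list(stages)
    total_weight = sum(weight for _, weight in stages)
    # Assumption: the impact weights of all stages sum to 1.
    if not math.isclose(total_weight, 1.0):
        raise ValueError("stage weights should sum to 1")
    return sum(accuracy * weight for accuracy, weight in stages)


def code_availability(generated_lines_released: int, total_lines_released: int) -> float:
    """Share of the released code that came from generated code (counted in lines)."""
    if total_lines_released <= 0:
        raise ValueError("total_lines_released must be positive")
    return generated_lines_released / total_lines_released


# Illustrative numbers only: two stages with equal weight.
print(theoretical_accuracy([(0.9, 0.5), (0.8, 0.5)]))
print(code_availability(80, 100))
```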

Technical Indicator and Weight Definition of Each Level

The intelligence capabilities of D2C are distributed in all stages of the restoration procedure. The intelligence capabilities of the entire procedure have been upgraded to improve code availability. Besides, the technical solution is refined to the following aspects:

The application of intelligent capabilities also needs support from engineering procedures. The procedure involves the application of model recognition results, online users' behavior recall, and the acceptance of UI component recognition results by frontend development components. Moreover, the overall D2C technical system also needs simultaneous upgrades.

D2C Technical System Upgrade

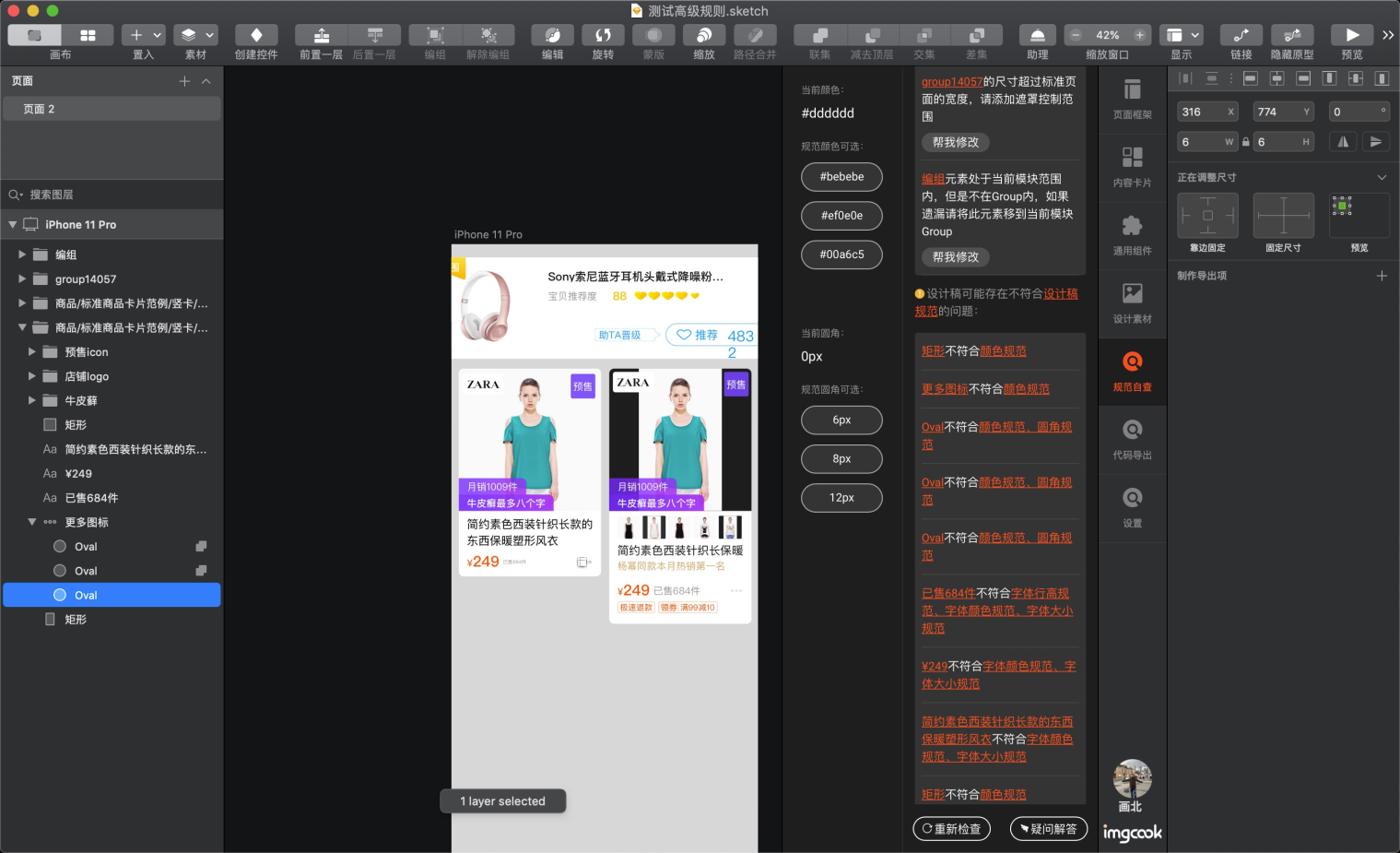

Imgcook resolves the layer information of design drafts and then identifies and generates code via a rule system and intelligent technology. However, there are differences in expression, structure, and specifications between the design domain and the R&D domain. So, the layer structure of design drafts has a great influence on the rationality of the generated code. Some unqualified design drafts need to be adjusted with the imgcook "group" and "picture merge" protocols.

In development, code often fails to conform to the specification. Examples include leftover console.log calls, missing comments, and repeated variable declarations. Tools such as ESLint and JSLint are used to guarantee the consistency of code specifications.

In the design domain, intelligent checking tools called Design Lint (DLint) are also developed to guarantee the design specifications. These tools can also intelligently review design drafts, prompt errors, and assist in modification.

Design Specification Check

UI materials can be divided into modules/blocks, components, and elements. The information directly extracted from Sketch/PSD is only about text, pictures, and locations. The directly generated codes are composed of div, img, and span. Componentized and semantic codes are essential for practical development. Intelligent technologies, such as NLP and deep learning, are tailored to extract component and semantic information from design drafts.

Last year, exploration and practices have been conducted in recognition of components, images, and text. The recognition results are finally applied in semantics and field binding. However, the recognition effect is greatly influenced by technical solutions. Therefore, the following improvements have been made this year:

Previously, an object detection solution was used to recognize UI components, which requires correctly recognizing both component categories and locations. In complete visual images, complex backgrounds can be recognized by mistake. Moreover, because the recognized locations are biased, it is difficult to mount the recognition results on the correct nodes, resulting in low accuracy when model recognition results are applied online.

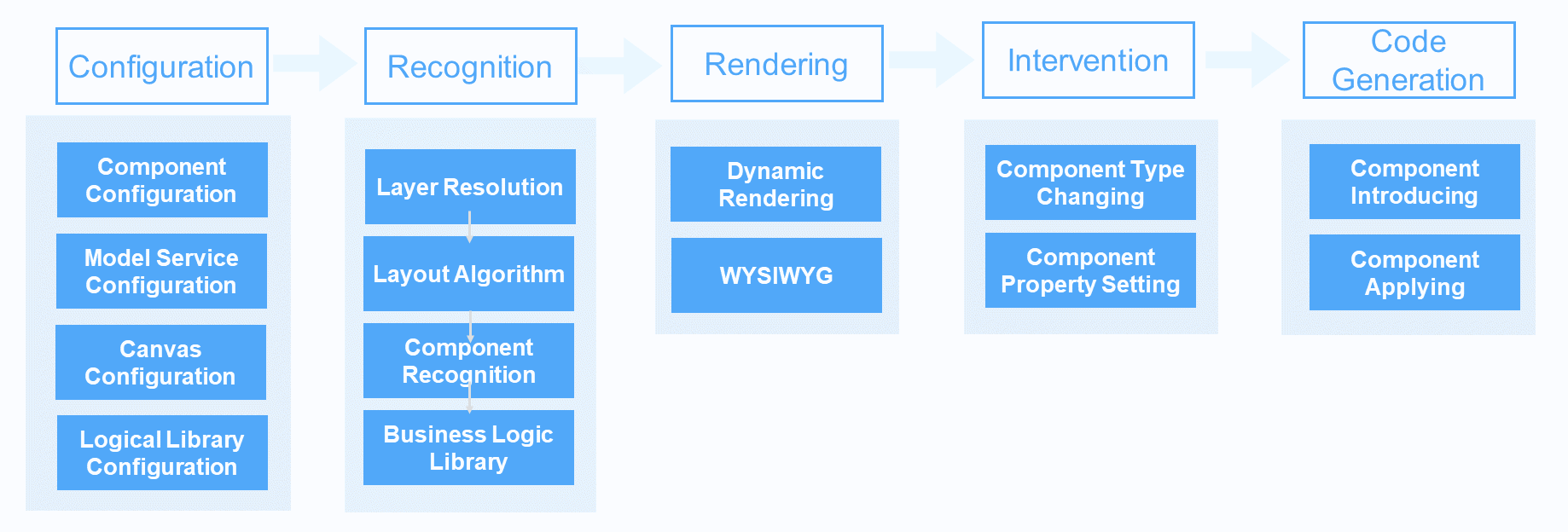

Unlike the original solution, which had to recognize locations, imgcook can obtain the position information of each layer directly from the visual draft. So, image classification is now preferred, and the recognition results can be applied online. This depends on an intelligent material system whose components can be configured, identified, rendered, intervened in, and used to generate code. For detailed implementation solutions, please see the following article about component checks.
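The switch from detection to classification can be sketched like this: since each layer's box is already known from the draft, each region is cropped and handed to a classifier, and the label is attached to the layer that owns the box. The pixel grid, the layer format, and `toy_classifier` (a brightness threshold standing in for a trained image classification model) are all assumptions for illustration.

```python
from typing import Any, Callable, Dict, List, Sequence

Pixels = Sequence[Sequence[int]]  # grayscale image as rows of 0-255 values
Layer = Dict[str, Any]


def crop(pixels: Pixels, box: Dict[str, int]) -> List[List[int]]:
    """Cut the region described by a layer's known box out of the image."""
    x, y, w, h = box["x"], box["y"], box["width"], box["height"]
    return [list(row[x:x + w]) for row in pixels[y:y + h]]


def recognise_layers(
    pixels: Pixels,
    layers: List[Layer],
    classify: Callable[[List[List[int]]], str],
) -> List[Layer]:
    """Classify each layer's region and attach the label to that same layer."""
    return [{**layer, "component": classify(crop(pixels, layer["box"]))} for layer in layers]


def toy_classifier(patch: List[List[int]]) -> str:
    """Stand-in for a trained model; assumes the patch is not empty."""
    values = [v for row in patch for v in row]
    return "video" if sum(values) / len(values) < 128 else "image"


# 4x4 image: dark left half, bright right half.
image = [[0, 0, 255, 255] for _ in range(4)]
layers = [
    {"id": "a", "box": {"x": 0, "y": 0, "width": 2, "height": 4}},
    {"id": "b", "box": {"x": 2, "y": 0, "width": 2, "height": 4}},
]
print(recognise_layers(image, layers, toy_classifier))
```

Because the label is written back onto the layer that supplied the box, the mounting problem of the detection approach does not arise in this scheme.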

Component Recognition Application Procedure

The demonstration of component recognition being applied online is listed below:

Component recognition recognizes the video information in visual drafts and uses rax-video to accept and generate code at component granularity. You must configure customized components (component library settings) and the component recognition model service (model service settings). Canvas resources for rendering video components (editor configuration > canvas resources) and business logic points for the component recognition results (business logic library settings) are also needed.

Demonstration of Component Recognition Procedure

![Demonstration of Component Recognition Procedure](https://p3-juejin.byteimg.com/tos-cn-i-k3u1fbpfcp/c45b9a36156047da953b8119dc88d90c~tplv-k3u1fbpfcp-zoom-1.gif)

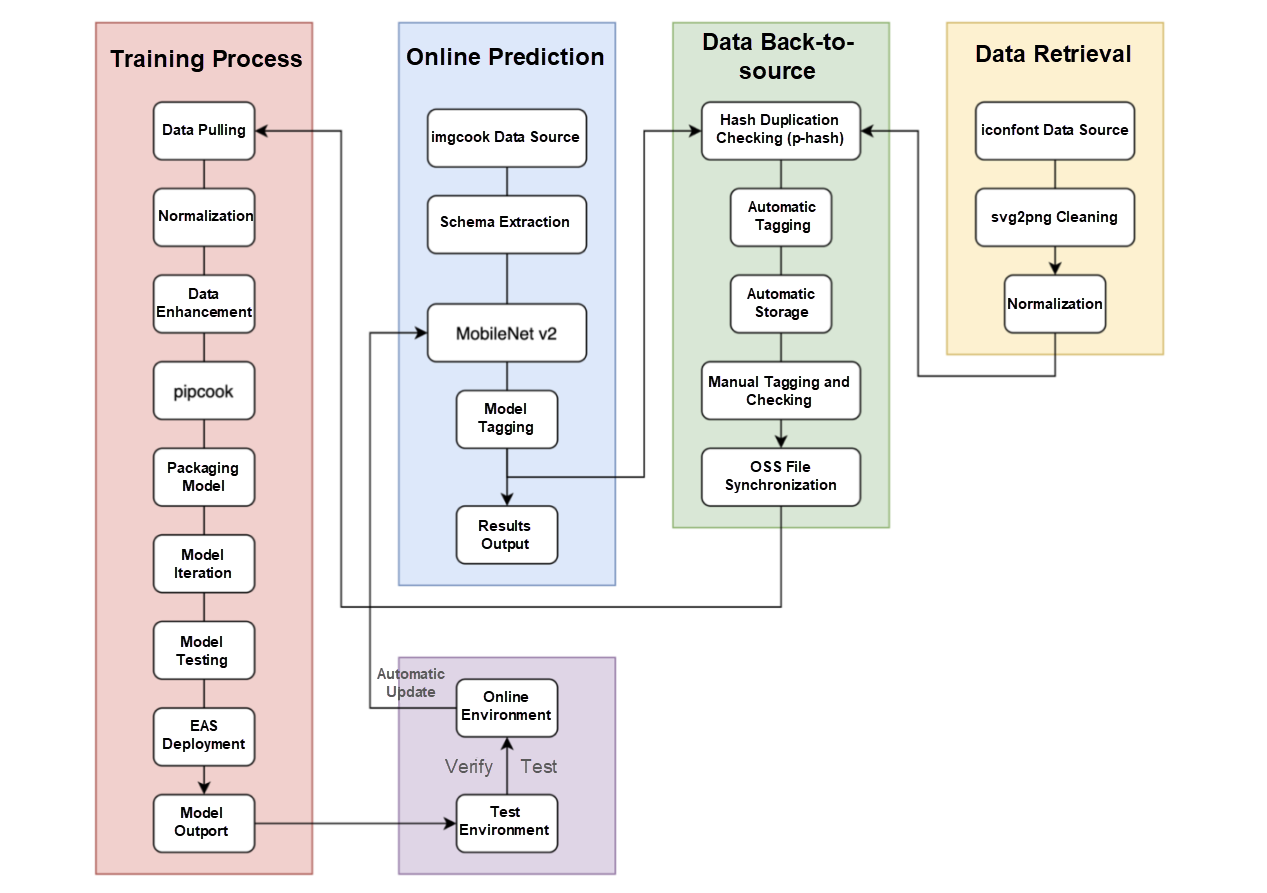

Icon recognition is an image classification problem, so it applies the image classification solution. A closed-loop procedure covering automatic icon sample collection, data processing, and automatic model training and release is designed to enable the self-enhancement of model generalization. As a result, the model can self-iterate and optimize.

Closed-Loop Procedure of Icon Recognition Model

The number of new training sets has reached 2,729 since the launch of the icon recognition model, with a monthly average of 909 valid data returned. The test set accuracy improved from 80% to 83% after several instances of self-iteration.

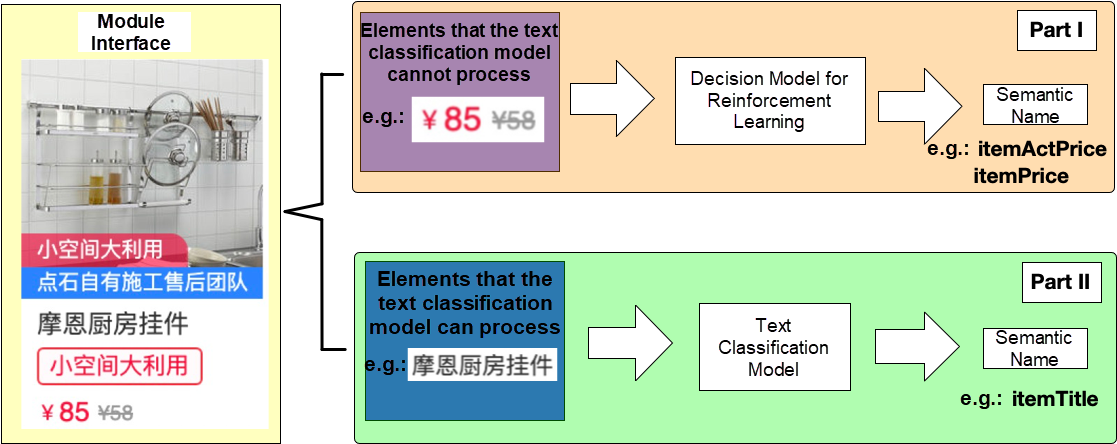

A key part of imgcook's intelligent system is how to bind semantic information with UI elements. The previous solution was to recognize semantics based on images and text classification models, which was limited. It only classifies texts, without considering the context semantics in the whole UI, resulting in a poor model effect.

For example, for the field $200, the text classification model cannot recognize its semantics since its meaning varies in different scenarios, such as activity price, original price, and coupon. Semantic analysis should be performed by referring to the relationship (the unique style) between the field and the UI interface.

Therefore, another solution is introduced for semantic recognition, which combines the context semantics in the UI. The solution of image element selection and text classification is adopted to solve the problem of interface element semantics. First, based on reinforcement learning, the interface elements are "filtered" by style to recognize non-plain texts with styles. Then, the plain texts are further classified. The specific framework is shown below:
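The two-stage idea (select styled elements first, then classify the remaining plain text) can be illustrated with a deliberately simplified sketch. Hand-written rules stand in for both the reinforcement learning selector and the text classification model, so this shows only the control flow, not the actual method. The style keys, label names, and function names are hypothetical.

```python
import re
from typing import Any, Dict, Optional

Element = Dict[str, Any]

PRICE_PATTERN = re.compile(r"[$¥]\d+(?:\.\d+)?")


def label_by_style(element: Element) -> Optional[str]:
    """Stage 1 stand-in: decide the semantics of styled text from its UI context."""
    style = element.get("style", {})
    if style.get("textDecoration") == "line-through":
        return "original_price"  # struck-through amounts read as the old price
    if style.get("backgroundColor"):
        return "coupon"  # text on a colored badge
    return None  # plain text: leave it to stage 2


def label_by_text(text: str) -> str:
    """Stage 2 stand-in: classify plain text by its content alone."""
    return "price" if PRICE_PATTERN.fullmatch(text) else "text"


def label(element: Element) -> str:
    """Style context first, then fall back to text classification."""
    return label_by_style(element) or label_by_text(element["text"])


print(label({"text": "$200", "style": {"textDecoration": "line-through"}}))
print(label({"text": "$200", "style": {}}))
```

The same string `$200` receives different labels depending on its style, which is the ambiguity that a text-only classifier cannot resolve.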

Reinforcement Learning + Text Classification

The model algorithm training results are shown below.

Context-Based Semantic Analysis

The layout displays the relationship between nodes in the code. In terms of granularity, the relationship includes the relationship between modules/blocks on a page, between atomic modules/blocks in a module/block, and between components/elements in an atomic module/block.

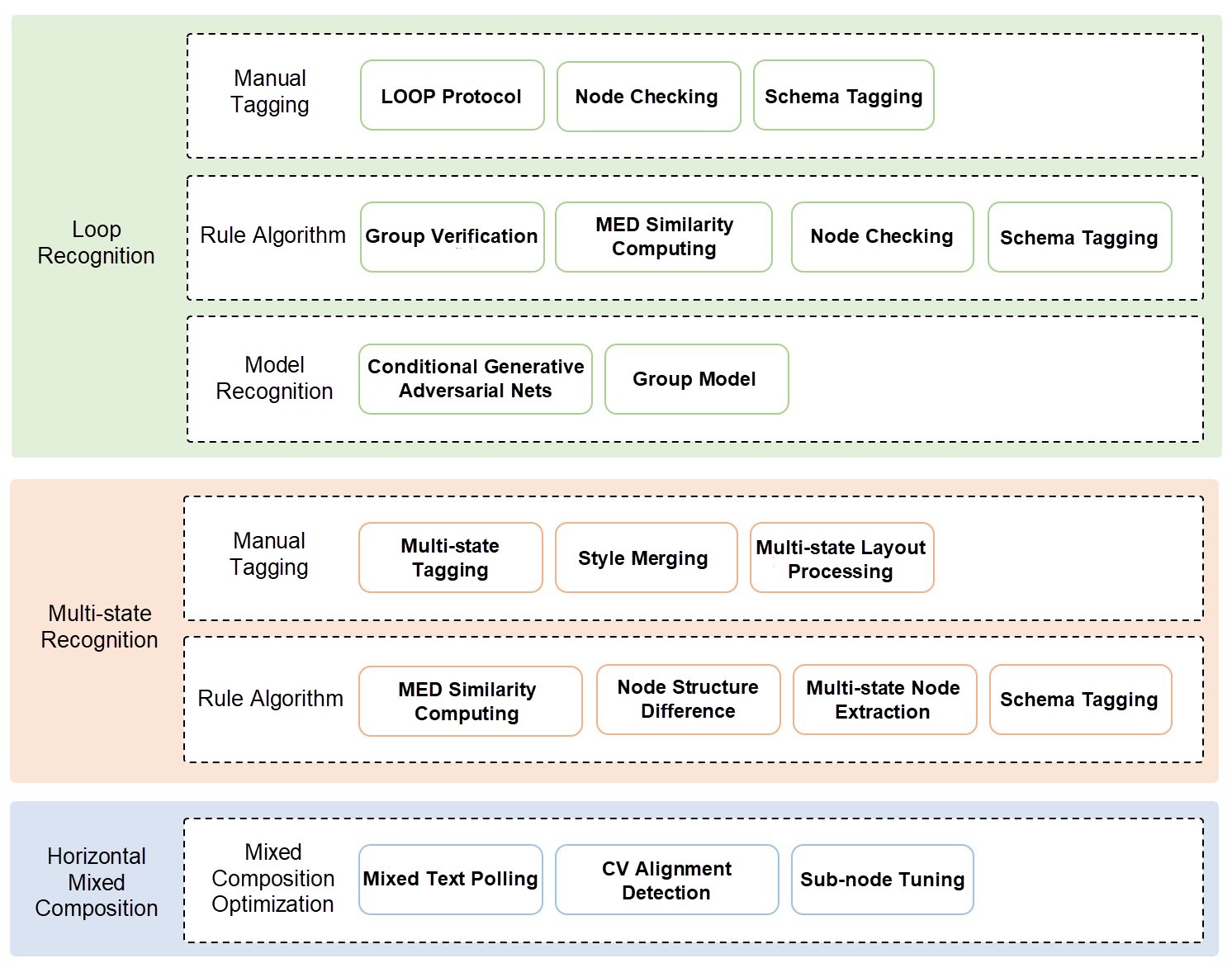

Today, imgcook is capable of loop recognition and multi-state recognition. It recognizes the loop body in design drafts to generate loop codes and the multi-state node to generate multi-state UI codes. It also defines the measurement of layout maintainability to measure the accuracy of the restoration stage.
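One simple way to picture loop recognition is to look for runs of consecutive sibling nodes that share the same structure. The sketch below does exactly that with a structural signature. It is a plain heuristic for illustration and not imgcook's recognition model. The node format and the function names are assumptions.

```python
from typing import Any, Dict, List, Tuple

Node = Dict[str, Any]


def signature(node: Node) -> Tuple:
    """Structural fingerprint: the node's tag plus its children's fingerprints."""
    return (node["tag"], tuple(signature(child) for child in node.get("children", [])))


def find_loops(children: List[Node], min_repeat: int = 2) -> List[Tuple[int, int]]:
    """Return (start_index, length) for each run of structurally identical siblings."""
    runs = []
    start = 0
    while start < len(children):
        end = start + 1
        while end < len(children) and signature(children[end]) == signature(children[start]):
            end += 1
        if end - start >= min_repeat:
            runs.append((start, end - start))
        start = end
    return runs


item = {"tag": "div", "children": [{"tag": "img"}, {"tag": "span"}]}
siblings = [{"tag": "span"}, item, item, item, {"tag": "img"}]
print(find_loops(siblings))
```

Each detected run could then be emitted as a single loop body rendered over a data array instead of repeated markup.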

Improved Recognition Capability in Layout Restoration

In the business logic generation stage, the original configuration procedure is optimized. Thus, the business logic library is decoupled from the algorithm engineering procedure, and applications and expressions of all recognition results are accepted. The material recognition stage only focuses on the materials in the UI rather than how the recognition results are used to generate code. The same is true for loop recognition and multi-state recognition in the layout restoration stage. This way enables the customization of the recognition result expression and allows users to perceive intelligent recognition results and choose whether to use them.
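The decoupling described above can be sketched as a library of logic points, each pairing a recognizer (when does this apply?) with an expression (how is the result written into the node?). The `LogicPoint` type, the `recognition` and `componentName` fields, and the example point are hypothetical. Only the component name rax-video comes from the article.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Node = Dict[str, Any]


@dataclass
class LogicPoint:
    """One entry of a hypothetical business logic library."""
    name: str
    recognise: Callable[[Node], bool]  # decides whether the point applies
    express: Callable[[Node], Node]    # decides how the result shows up in code


def apply_logic(node: Node, library: List[LogicPoint]) -> Node:
    """Apply every matching logic point; recognition itself stays untouched."""
    for point in library:
        if point.recognise(node):
            node = point.express(node)
    return node


# Example point: nodes recognised as video are expressed with rax-video.
video_point = LogicPoint(
    name="video-component",
    recognise=lambda n: n.get("recognition") == "video",
    express=lambda n: {**n, "componentName": "rax-video"},
)

print(apply_logic({"tag": "div", "recognition": "video"}, [video_point]))
```

Users who do not want a recognition result applied would leave the corresponding point out of the library, and the recognition stage would not need to change.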

In addition, logic code can be generated partly from demand drafts, code snippet recommendations, or intelligent code recommendations (also called Code to Code or C2C).

Configuration of General Business Logic Library

The algorithm engineering services provide model training products based on UI feature recognition. These products offer a way to adopt machine learning for those willing to use business components recognition. Samplecook was developed to solve the major problems of algorithm engineering services. It is a new derivative product that provides a shortcut of sample generation for frontend UI recognition models.

Some may worry that it would be difficult for frontend engineers to solve problems in the frontend field through machine learning. Pipcook, a frontend algorithm engineering framework, was developed to lower the threshold. It allows frontend engineers to complete machine learning tasks through JavaScript.

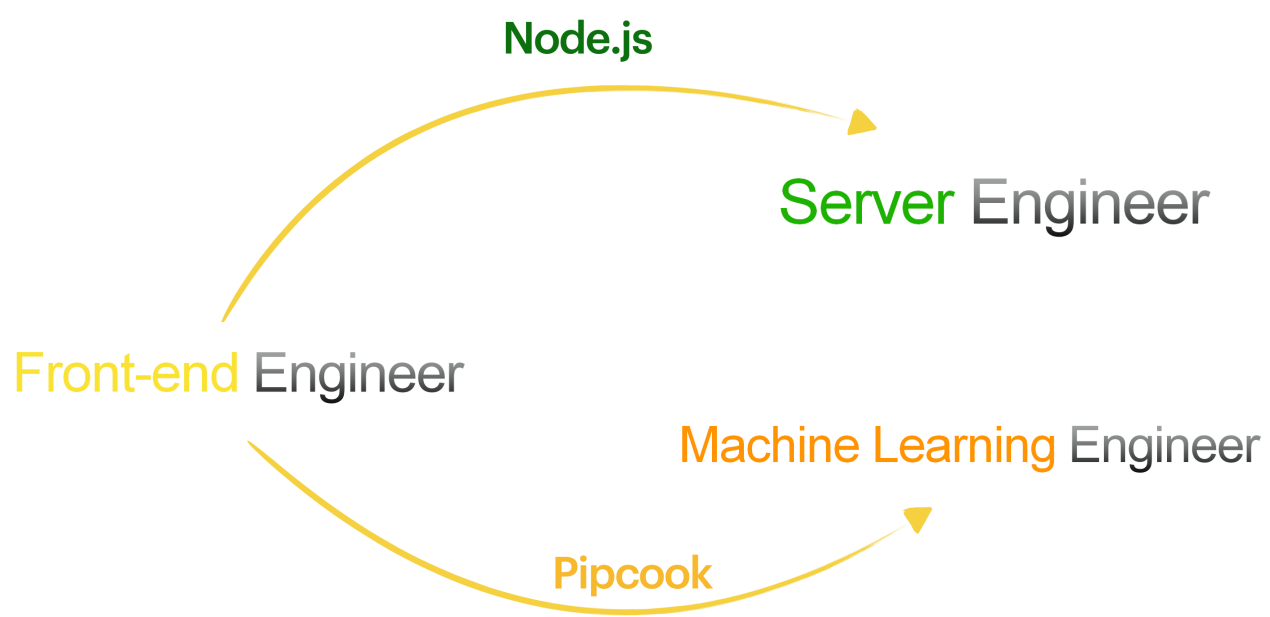

Node.js can turn frontend engineers into server-side engineers, while Pipcook can turn frontend engineers into machine learning engineers.

Frontend and Machine Learning

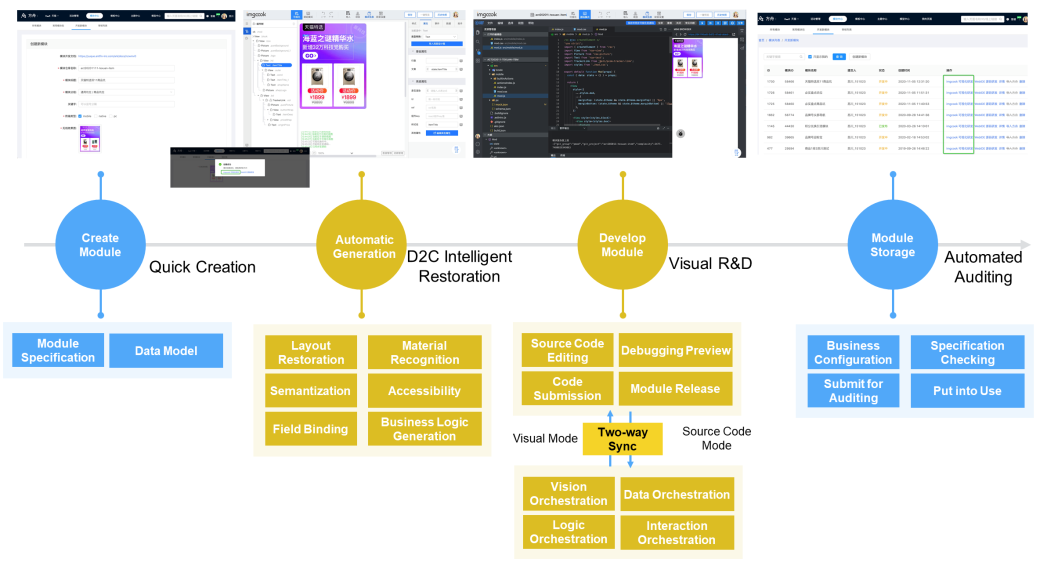

Taobao marketing focuses on module development. The complete module development process is listed below:

The entire R&D process requires switching among multiple platforms, so the development procedure experience and engineering efficiency need to be improved.

In the second phase, it would be more convenient if development, debugging, previewing, and publishing could be performed on the imgcook platform. The one-stop D2C R&D mode is a good choice to improve overall R&D efficiency and experience.

Therefore, the imgcook visual editor for marketing has been customized with visual and source code modes. It enables intelligent code generation and visual R&D in visual mode. The generated code can be synchronized to WebIDE in source code mode with one click. WebIDE supports interface-based debugging, previewing, and publishing.

The Imgcook Visual R&D Process for Taobao Modules

Compared with traditional modes, the coding efficiency (ratio of complexity to R&D time) in imgcook visual R&D mode is 68% higher.

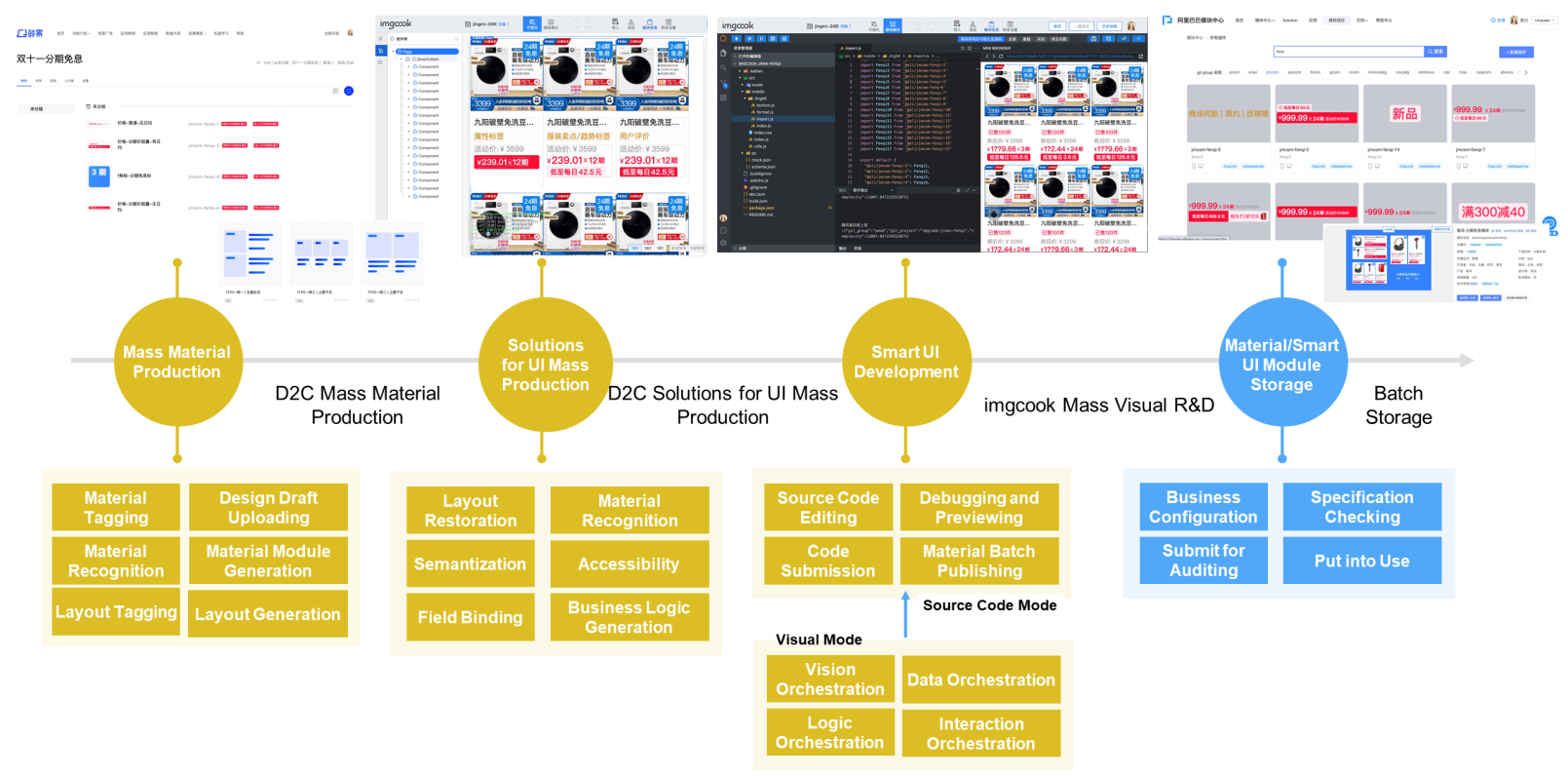

Smart UI is a solution that provides users with personalized UI by analyzing their characteristics, so it requires developing a large number of UIs. In the original development procedure of Taobao's Jingmi platform, imgcook modules were created in batches after visual drafts were uploaded and materials were parsed. However, each material required independent development, its own warehouse, and separate publishing in the corresponding imgcook visual interface. Also, the overall visual effect of the final Smart UI could not be seen. As a result, both the material development costs required for Smart UI and the cost of business access to Smart UI were very high.

The Imgcook Visual R&D Process for Smart UI

During this period, the Smart UI R&D procedure was updated, and the D2C visual R&D procedure now supports the mass production of Smart UI. After design drafts are uploaded and UI materials are parsed, imgcook modules are created to generate material UI code in batches. Relationships between the code warehouse and imgcook modules are also established. Moreover, created materials can be imported into imgcook in batches to generate different types of UI solutions. In the generated UI solutions, centralized development on materials can be performed. In addition, the mass release of materials is supported. The entire procedure is more centralized and efficient.

This year, the frontend intelligence contributed to the upgrade of the frontend R&D mode. Several BUs built datasets and algorithm models for frontend design draft recognition jointly, which were widely applied during Double 11.

Comprehensive technical system upgrades of the D2C mode have affected the R&D procedure of marketing modules. These upgrades include UI multi-state recognition, live video components, loop intelligent recognition enhancement, and many other aspects. 90.4% of codes for new modules have been intelligently generated during Double 11, which is higher than last year. The intelligent review capability of design drafts was also upgraded. According to statistics, intelligently generated codes (without human assistance) accounted for 79.26% of publishing.

Compared with the traditional module development mode, the D2C mode increases the coding efficiency (ratio of module complexity to R&D time) by 68%. The throughput of module demands with a fixed workload and in unit time increased by about 1.5 times.

Currently, manual code modifications are required for the following reasons:

These issues need to be gradually addressed later.

User Modifications in D2C Mode

At the same time, imgcook supports Smart UI mass production in Tmall's main venue and industry venues, enhancing production efficiency.

Smart UI Mass Production

Whether computer vision or deep learning technology is used, accuracy issues are inevitable in intelligent generation. Low accuracy may be unacceptable in online environments. To improve the accuracy of online model recognition, a closed loop of the algorithm engineering procedure needs to be established with data from online users. In the imgcook system, icon recognition has formed a complete closed-loop procedure from sample collection to the application of model recognition results. Therefore, developers do not need to spend much time on model optimization. In the future, other models will also be able to self-iterate.

Another difficulty in the intelligent D2C mode is how to apply model recognition results to generate code. For example, the component recognition model can identify the category of the component. However, it is unclear which component library is involved and how to recognize the attribute value of components in UI during code generation. Capabilities and the layered architecture of intelligent restoration technology of the imgcook platform can solve the issues listed above. More intelligent solutions will be available for the production environment in the future.

In the future, the D2C intelligence capabilities of imgcook will be split up further. More intelligent solutions will be explored, and existing model capabilities will be optimized. Moreover, a closed-loop mechanism of algorithm engineering that enables self-iteration will be built. Frontend codes with higher maintainability and stronger semantics can be generated by continuously improving model generalization capabilities and online recognition accuracy.
