By Suchuan, from F(x) Team

This article is based on a presentation from GMTC Beijing 2021.

Taobao and Tmall run many business products and hold many big promotional campaigns every year. Product pages with different positioning lead to diverse visual designs and different business logic. Many of these UIs are hard to reuse, so building them takes a large amount of frontend manpower, and frontend developers are under heavy business pressure. We developed imgcook, an automated code generation platform, to solve this dilemma and help improve frontend R&D efficiency.

We have gradually introduced AI technologies, such as CV and NLP, into imgcook to help identify design draft information and intelligently generate code with high readability and maintainability. During the Double 11 Global Shopping Festival, imgcook automatically generated 79.34% of the frontend code, improving frontend R&D efficiency by 68%. imgcook was officially opened to the public in 2019 and now serves more than 25,000 users.

This article introduces the core idea of imgcook, a platform that generates code from design drafts, along with the application scenarios and implementation practices of AI technology in imgcook.

It focuses on which problems in generating code from design drafts can be solved with machine learning and how to solve them. We hope to explain the whole practical process of solving code generation problems with machine learning clearly from a frontend perspective.

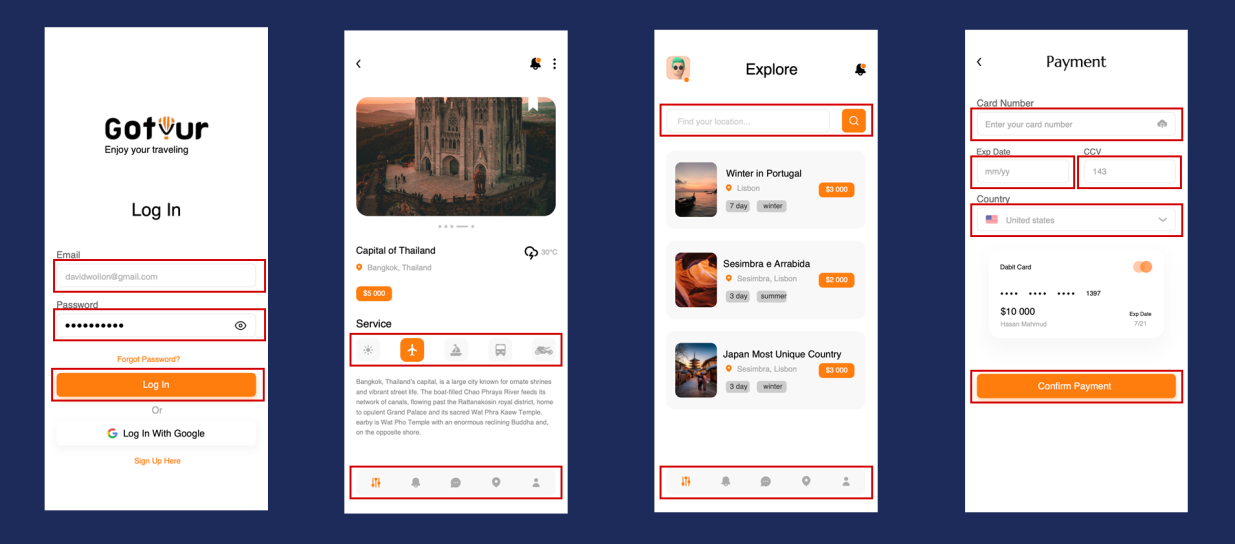

imgcook is an intelligent platform that generates code from design drafts. With one click, it can turn Sketch, PSD, and Figma files or images into maintainable frontend code, such as React, Vue, and mini programs.

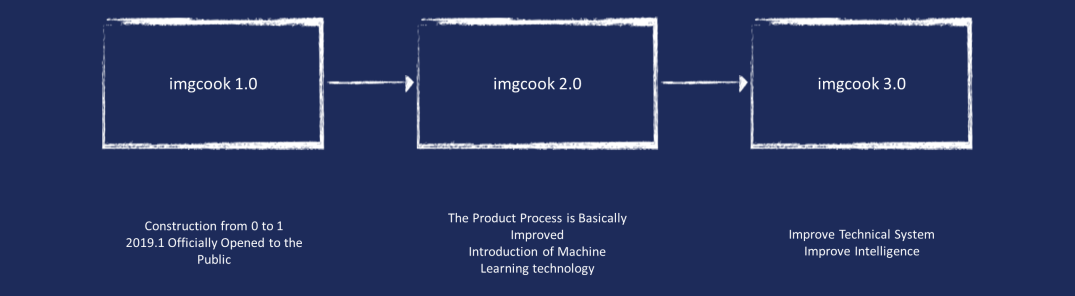

imgcook has gone through three stages so far. In January 2019, we officially released imgcook 1.0 to the public. Over more than a year of development, we moved into the 2.0 stage by gradually introducing computer vision and machine learning technology to solve some of the problems encountered when generating code from design drafts. By the 3.0 stage, machine learning technology was applied to multiple scenarios in imgcook.

On last year's Double 11 activity pages, 90.4% of new modules used imgcook 3.0 to generate code. The availability rate of the generated code was 79%, which improved frontend coding efficiency by 68%.

How does imgcook generate the code?

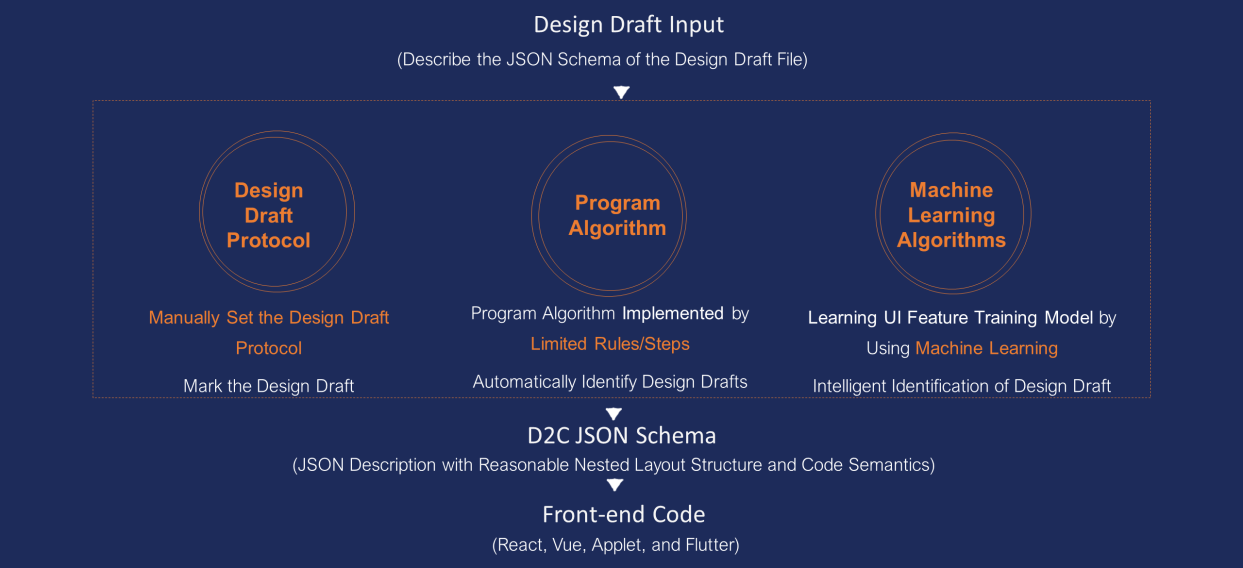

We can extract a JSON Schema from the design document. If you have a Sketch file, you can decompress it with the unzip command to see this JSON Schema.
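As a rough illustration of the unzip step, a Sketch file can be opened like any ZIP archive and its JSON entries parsed. This is a minimal Python sketch, not imgcook's own extraction code; the function names and the tiny in-memory demo archive are made up for the example.

```python
import io
import json
import zipfile


def read_sketch_json(sketch_file):
    """Return {entry name: parsed JSON} for every .json entry in the archive.

    `sketch_file` may be a file path or a binary file-like object.
    """
    documents = {}
    with zipfile.ZipFile(sketch_file) as archive:
        for name in archive.namelist():
            if name.endswith(".json"):
                documents[name] = json.loads(archive.read(name).decode("utf-8"))
    return documents


def make_demo_archive():
    """Build a small stand-in archive in memory (hypothetical content, not a real design)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("meta.json", json.dumps({"app": "demo"}))
        archive.writestr("pages/page-1.json", json.dumps({"layers": []}))
        archive.writestr("previews/preview.png", b"not-json")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    for name, content in read_sketch_json(make_demo_archive()).items():
        print(name, content)
```

With a real design file, you would pass its path to `read_sketch_json` instead of the demo archive.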

After getting this JSON Schema, we can use the design protocol, program algorithm, and model algorithm to calculate, analyze and identify, and convert this JSON Schema into a D2C JSON Schema with reasonable nested layout structure and code semantics.

If you have used imgcook, you can see this D2C schema in the imgcook editor. Then, we can convert the D2C schema into different types of frontend code, such as React and Vue, using various DSL conversion functions.

Principle of imgcook 3.0 Technology

This is the general process by which imgcook generates code. Here, the program algorithm refers to automatically identifying the design draft with a limited set of rules. For example, we can calculate whether a group of layers forms a loop structure from the coordinates, width, and height of each layer, and judge whether a piece of text is a title or prompt information from its font size and word count.

However, such rules are limited and cannot cover the problems found in millions of design drafts. Intelligent identification using machine learning models can solve some problems that program algorithms cannot cover. For problems that neither program algorithms nor model algorithms can solve, we also provide a way to manually mark the layers of design drafts.
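To make the idea of rule-based identification concrete, here is a minimal Python sketch of two such rules. It is not imgcook's actual algorithm: the `Layer` type, the tolerance, and the font-size and length thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    x: float
    y: float
    width: float
    height: float


def is_horizontal_loop(layers, tolerance=1.0):
    """Guess whether layers form a repeated row: same size, same top edge, even spacing."""
    if len(layers) < 2:
        return False
    ordered = sorted(layers, key=lambda layer: layer.x)
    first = ordered[0]
    same_box = all(
        abs(layer.width - first.width) <= tolerance
        and abs(layer.height - first.height) <= tolerance
        and abs(layer.y - first.y) <= tolerance
        for layer in ordered
    )
    if not same_box:
        return False
    # Horizontal gap between each layer and the next one to its right.
    gaps = [b.x - (a.x + a.width) for a, b in zip(ordered, ordered[1:])]
    return all(gap >= 0 and abs(gap - gaps[0]) <= tolerance for gap in gaps)


def classify_text(font_size, char_count, title_min_size=16, title_max_chars=20):
    """Toy rule: large, short text is a title; everything else is prompt text."""
    if font_size >= title_min_size and char_count <= title_max_chars:
        return "title"
    return "prompt"
```

Rules like these are cheap to write, but every new layout needs another rule or another threshold, which is why they cannot cover every design draft.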

Which problems can be solved by using the machine learning model to identify the design draft? Before introducing which code generation problems machine learning can solve, we need to learn what machine learning can do and what types of problems it can solve.

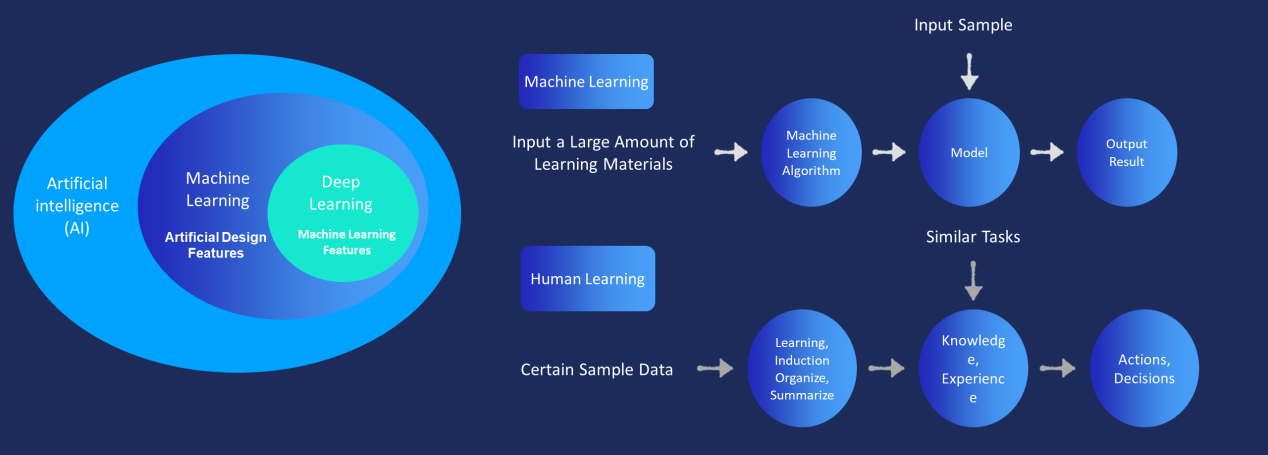

Today's topic is the applications and practices of AI in imgcook. AI is an abbreviation for artificial intelligence. Artificial intelligence can provide machines with adaptability and reasoning ability, which can help reduce labor costs.

Machine learning is a technology to achieve artificial intelligence. We feed a large number of samples into a machine learning algorithm. After the algorithm learns the characteristics of these samples, the resulting model can predict the characteristics of similar samples.

The process of machine learning is very similar to the process of human learning. Knowledge and experience are obtained through learning. When similar tasks come up, decisions or actions can be made based on existing experience.

One difference is that the human brain needs very little information to sum up knowledge or experience with very strong applicability. For example, people can correctly distinguish between cats and dogs after seeing only a few of them, but machines need a lot of learning materials.

Deep learning is a branch of machine learning. The main difference between deep learning and traditional machine learning algorithms lies in the way features are processed. I won't go into details here. Most of the algorithms used in imgcook are deep learning algorithms, along with some traditional machine learning algorithms. Later, when we discuss them, we will call all of them machine learning algorithms.

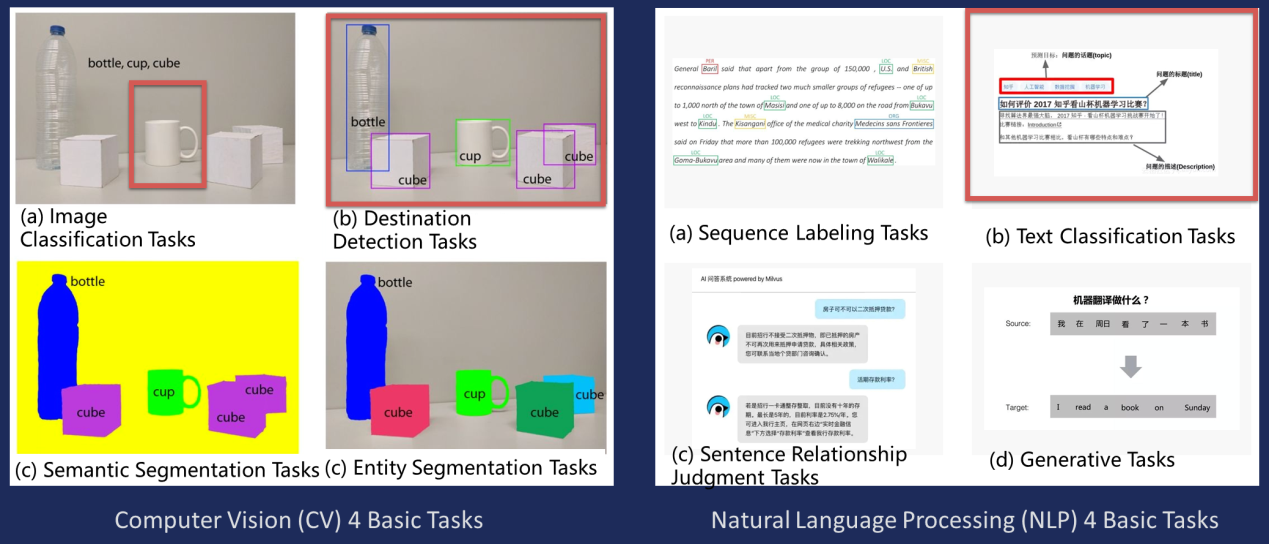

After understanding these concepts, we will look at what types of problems machine learning can solve. Here are four basic tasks for processing images in the field of computer vision and four basic tasks for processing words in the field of NLP.

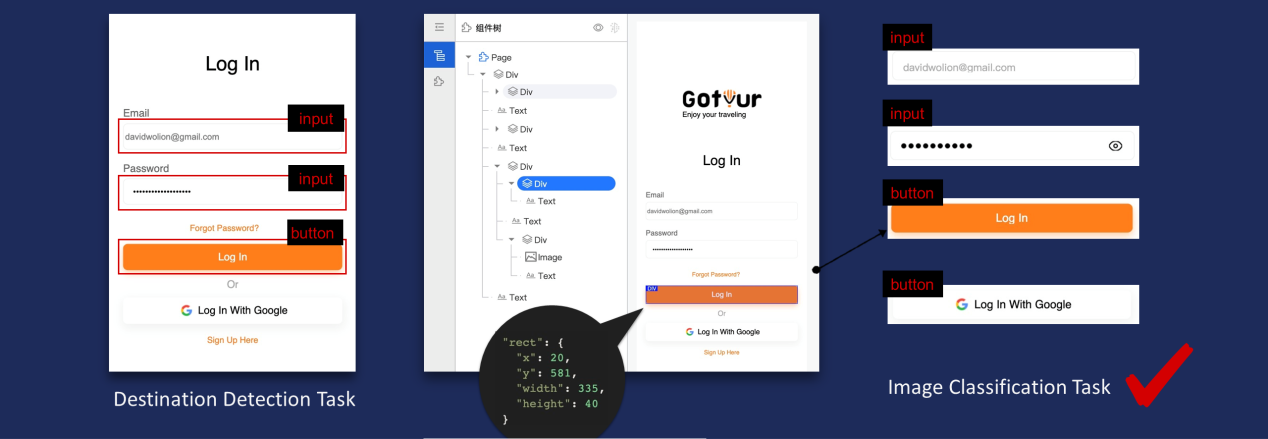

For image recognition, if you are given an image and only want to identify what object it shows, that is an image classification task. If you want to identify the target objects in the image and also need to know their positions, it is an object detection task. More fine-grained image recognition includes semantic segmentation and instance segmentation.

The same is true for text recognition, such as the Zhihu question shown here. If you want to identify whether a piece of text is a question title or a question description, this is a text classification task. There are also sequence labeling tasks (such as labeling place names and person names), sentence relationship judgment tasks (such as intelligent customer service question answering), and text generation tasks (such as machine translation).

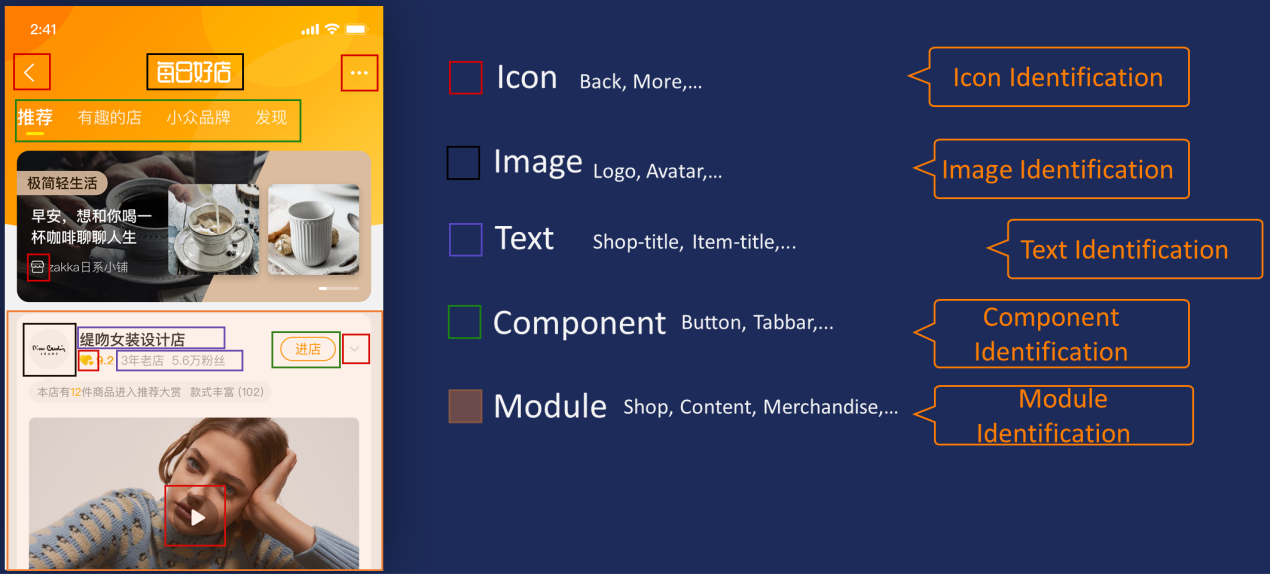

In imgcook, the problem of identifying UI information on a page is generally defined as an image classification, object detection, or text classification task.

There are many different dimensions of UI information in the design draft. For example, there are icons in this design draft, and we can train an icon recognition model to identify which icons are on the page, such as the page-return icon here and the three-dot icon that stands for "more".

There are also pictures on the page, and their semantics can be obtained using picture recognition. The same goes for text recognition, component recognition, and module recognition, all of which can be handled by training machine learning models.

What can these identification results be used to accomplish? You can think about what we write when we write code.

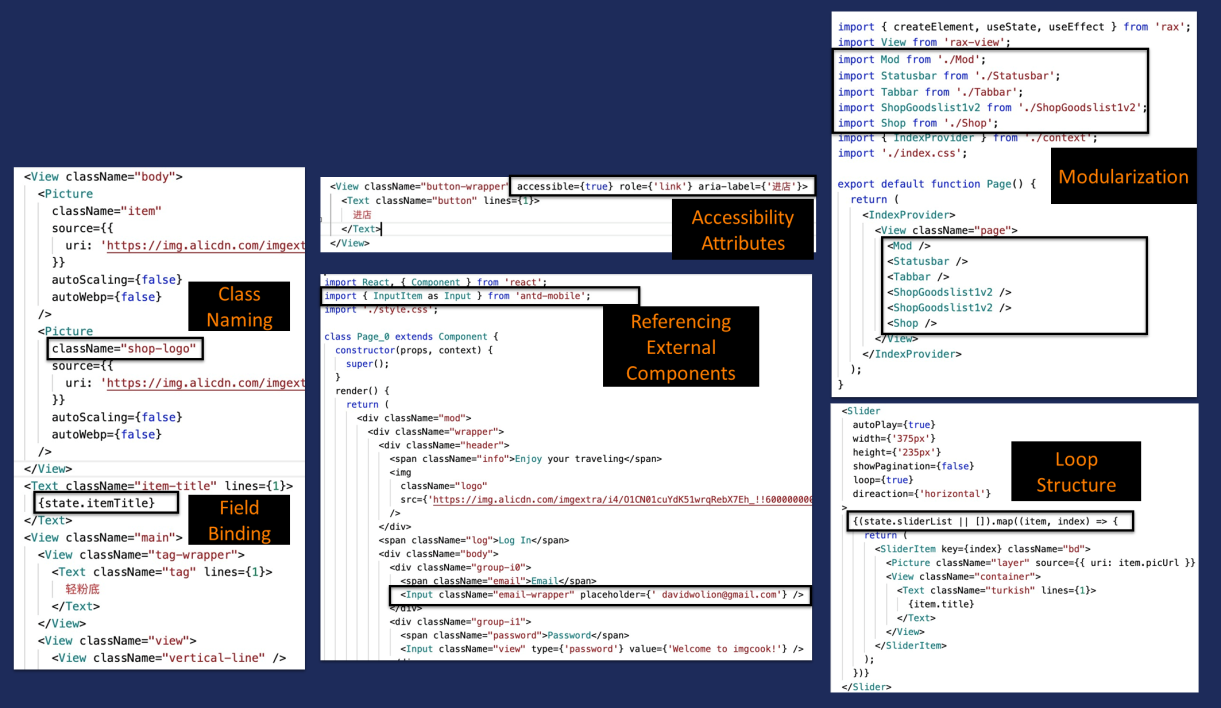

The results of model recognition can be used to give class names that are easy to understand or to generate field binding code automatically. If a node is identified as a button component, accessibility attribute code can be generated, or an external component can be introduced when generating code automatically. If it is identified as a module, modular code can be generated.

How do you use a machine learning model to identify this UI information and apply it to the code generation process?

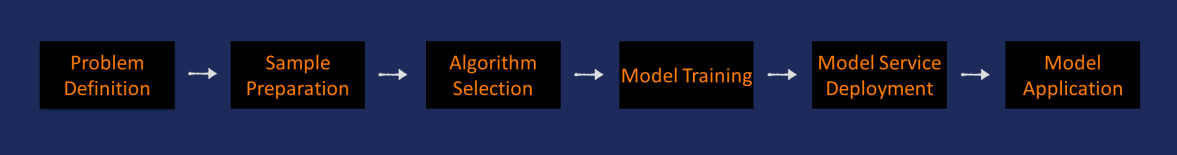

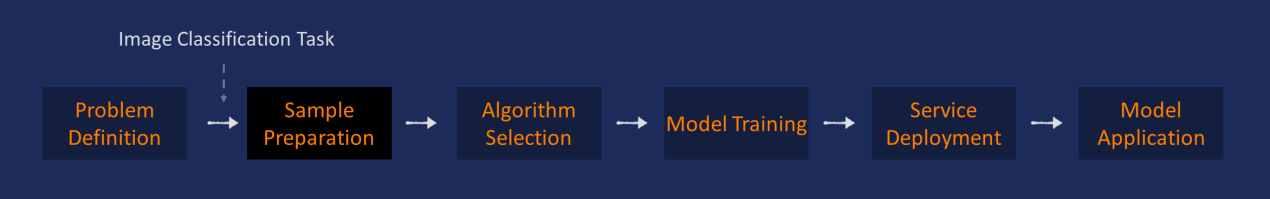

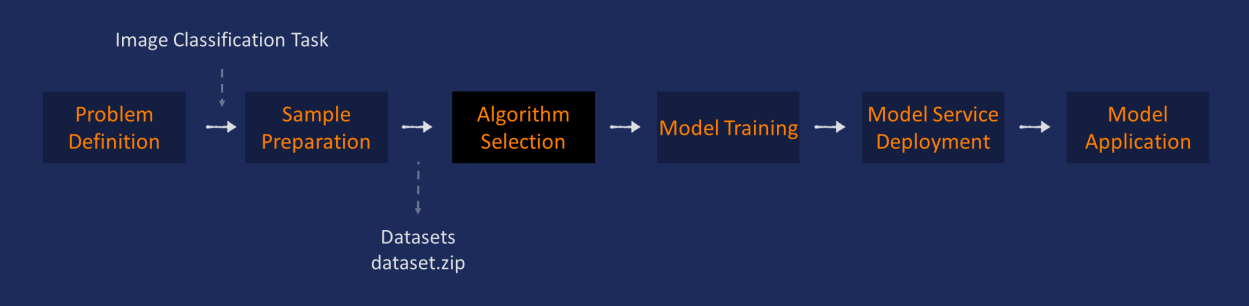

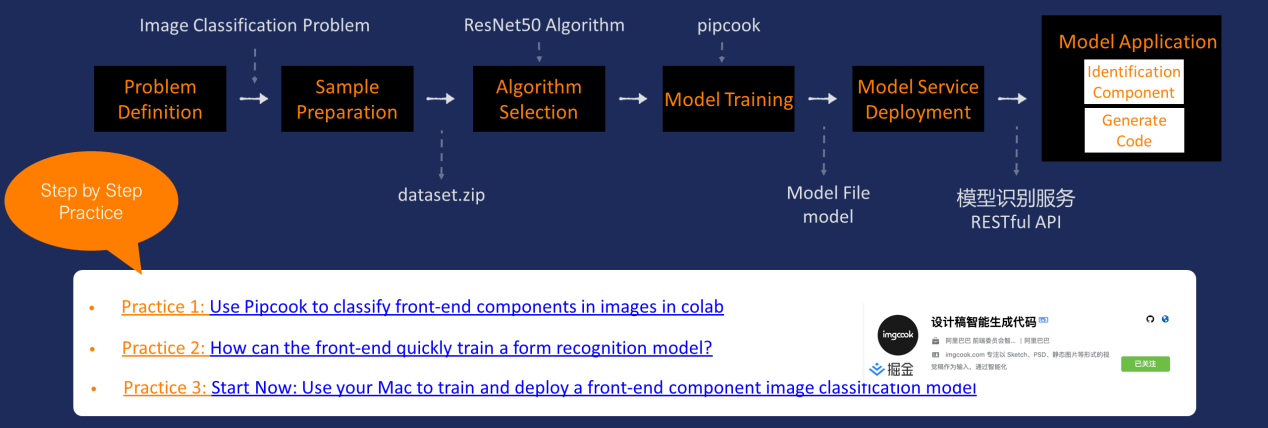

The general steps to solving problems using machine learning are listed below:

First, we must determine the business problem we want to solve. Then, we must determine whether the problem is suitable for machine learning. Not all problems are. For example, if a program algorithm can solve more than 90% of the cases with very high accuracy, there is no need to use machine learning.

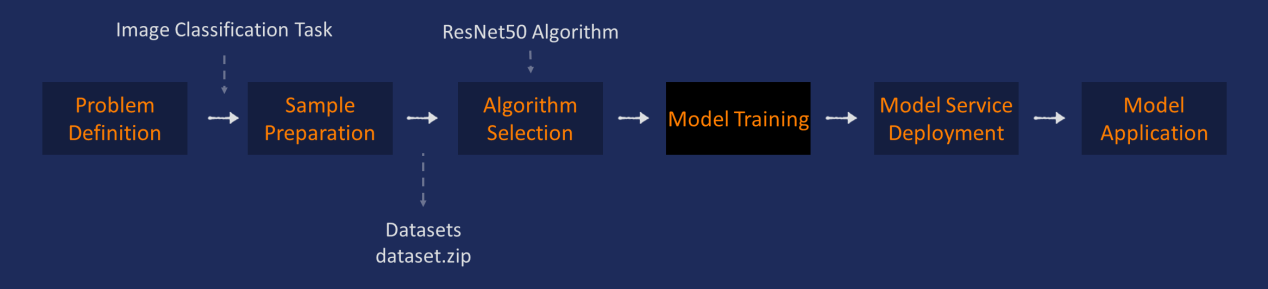

If we decide to use machine learning, we also need to determine what type of machine learning task the problem belongs to, such as an image classification task or an object detection task. Once the task type is determined, we can start preparing samples, because the data set requirements for model training differ across task types.

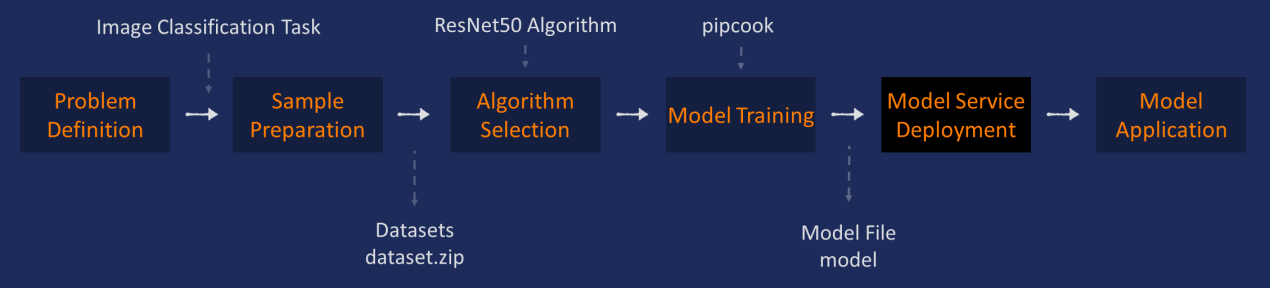

After the training data is ready, it is necessary to determine which machine learning algorithm to use. Once the training data is available and the algorithm is determined, a large amount of training data can be input into the algorithm so that it learns the sample features. This is model training. After the model is obtained, the model service is deployed. Finally, the model service is called in the engineering project to identify UI information.

This is what I want to share today: how to use machine learning to solve business problems.

Here, I will not discuss how to write machine learning algorithms, the principles behind them, or how to adjust parameters when training models. I am a frontend engineer, so I am more concerned about how to use machine learning technology to solve problems. I want to share the practical process of using machine learning technology to solve code generation problems and some of the experience gained along the way.

This article uses the problem of introducing external components during code generation as an example to walk through the machine learning practice in detail.

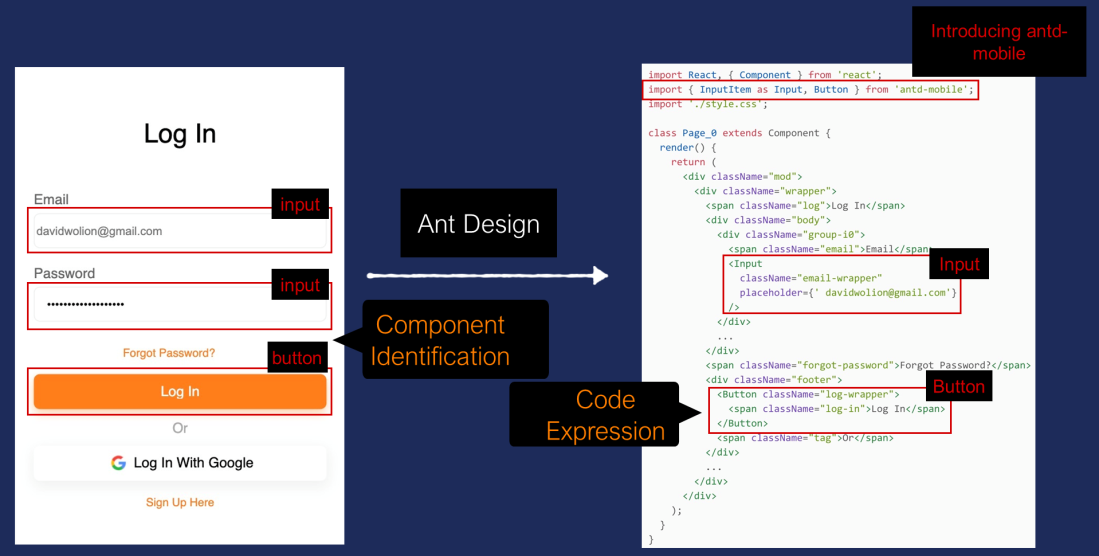

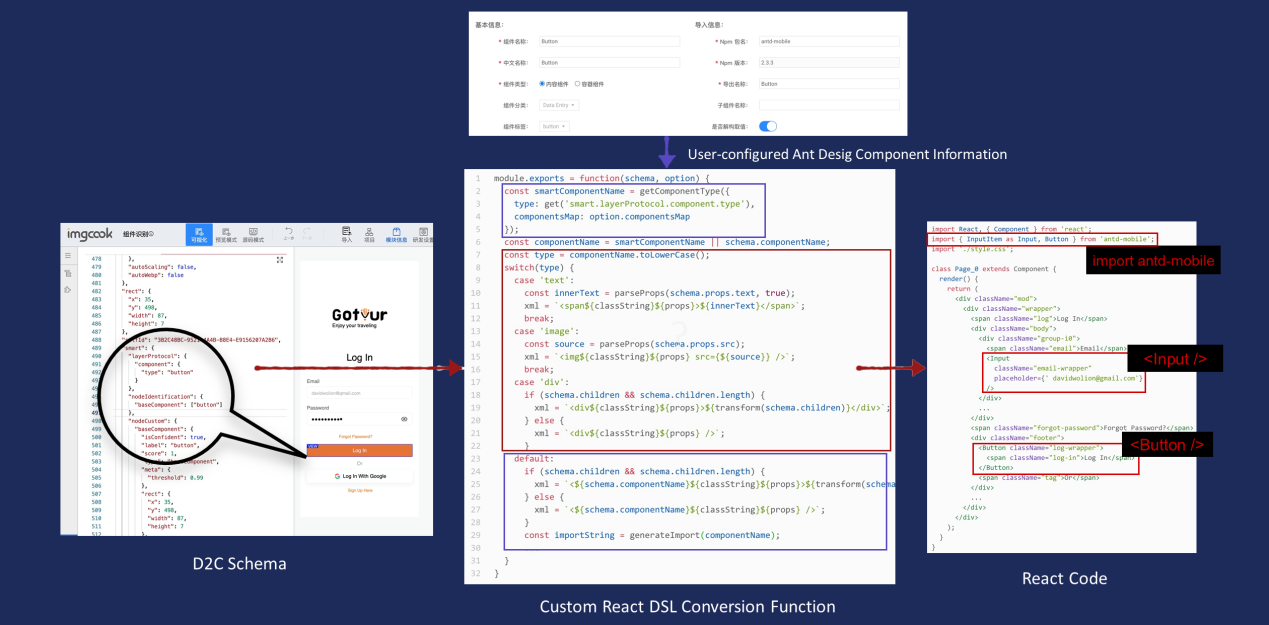

For example, this page contains an input box and a button, and you want to implement it with Ant Design components. If you write the code by hand, you need to introduce the Ant Design component library and use the Input and Button components in Antd.

If we want to generate the code automatically, we need to identify the components on the page and replace them with the introduced Antd components. All we can extract from the design is rectangular boxes, text, images, and similar information, so we don't know which of them are components.

Therefore, there are two things to do - component identification and code expression.

We now need to identify which components are on this page. If the input of the machine learning model is the whole page, the model needs to identify which components are on the page, where they are, and which category each belongs to. This is an object detection task in machine learning.

However, we can get the D2C JSON description of this page, in which the location of each node is known. For example, the root node of this button has a rect attribute describing its position and size in the D2C JSON description. Since the location of each node is known, I only need to know whether a node is a component and what type of component it is.

In machine learning, this is an image classification task. We input screenshots of these nodes into the model, and the model tells us what type of component each one is.

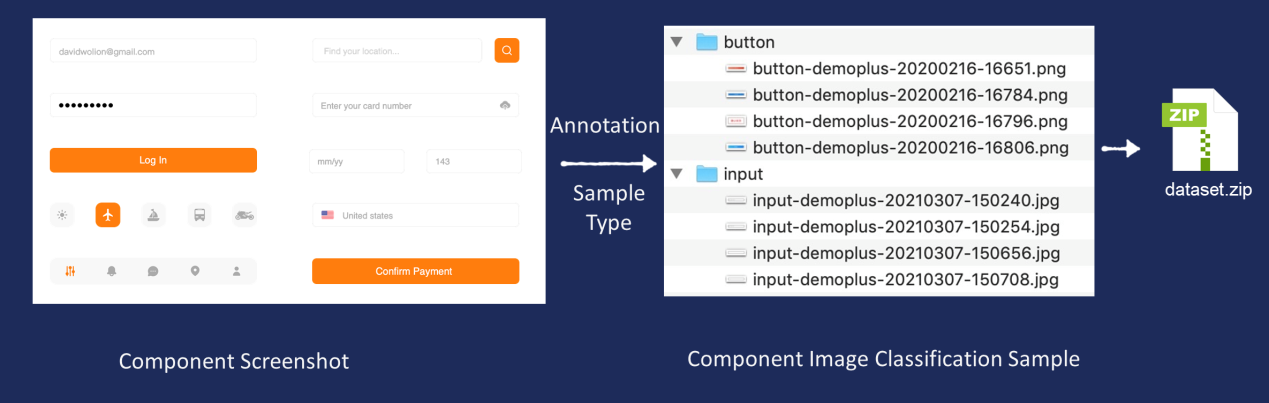

Since it is an image classification task, you can prepare samples for model training. The preparation of sample data is the most time-consuming and laborious thing in the whole stage. A large number of samples need to be collected, and the sample needs to be labeled by type.

When we have a machine learning algorithm learn sample features, we need to give it not only a picture of each sample but also a label saying what the picture is. This way, the algorithm learns that all samples of a given type share certain characteristics. The next time a sample with similar characteristics appears, the model can identify its type.

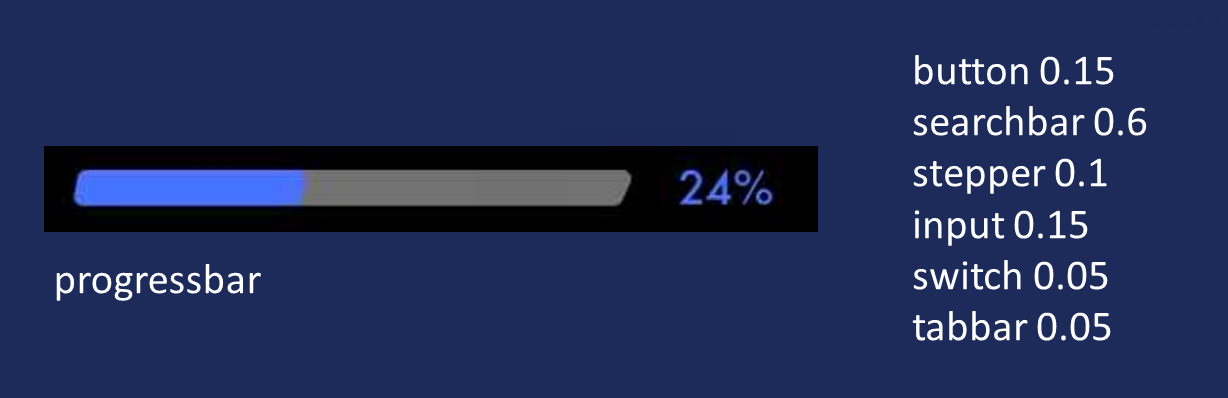

Before collecting samples, it is necessary to determine which types of components the model needs to identify. If the samples you give the algorithm only cover button, searchbar, stepper, input, switch, and tabbar, the trained model can only identify these types of components. If you give the model a screenshot of a progress bar, it will not recognize it as a progress bar. It will only report the probability of the screenshot falling into each of these categories.

Here, we have identified six types that the model needs to identify. Then, we can start collecting samples. Each sample includes an image of a component and the category of the component image.

The most direct way to collect images of these basic components is to extract screenshots of components from online pages or design drafts. For example, these pages are collected first, and the screenshots of components in these pages are saved using some tools or scripts.

Screenshots of these components also need to be labeled with the component type and stored in the following file structure. Component images of the same type are placed in the same folder, and the name of the folder is the type of the component. Then, the folders are compressed into a zip file, which is the component image classification data set we need.

I extracted pages from more than 20,000 design drafts in Alibaba and manually selected and cropped the components from them. According to my records, it took 11 hours to extract screenshots of just two categories of components from more than 14,000 pages. On top of that, there was the earlier time spent extracting page images from more than 20,000 Sketch files and filtering and deduplicating them. The whole process of collecting samples is laborious.
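The folder-per-category layout described above can be sketched in Python as follows. This is only an illustration of the idea: the helper names are ours, the demo files are empty placeholders rather than real screenshots, and the exact archive layout a given training tool expects may differ.

```python
import tempfile
import zipfile
from pathlib import Path


def list_labeled_samples(dataset_dir):
    """Return sorted (relative image path, label) pairs; the label is the parent folder name."""
    root = Path(dataset_dir)
    samples = []
    for image in root.glob("*/*.png"):
        samples.append((image.relative_to(root).as_posix(), image.parent.name))
    return sorted(samples)


def zip_dataset(dataset_dir, zip_path):
    """Compress the labeled folders into one archive, keeping the folder names."""
    root = Path(dataset_dir)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for relative_path, _label in list_labeled_samples(root):
            archive.write(root / relative_path, arcname=relative_path)
    return zip_path


def make_demo_dataset(root):
    """Create placeholder files (empty bytes, not real screenshots) for the demo."""
    for label, count in {"button": 2, "switch": 1}.items():
        folder = Path(root) / label
        folder.mkdir(parents=True)
        for index in range(count):
            (folder / f"{label}-{index}.png").write_bytes(b"")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        make_demo_dataset(Path(tmp) / "data")
        print(list_labeled_samples(Path(tmp) / "data"))
```

Because the label lives in the folder name, no separate annotation file is needed for an image classification data set of this kind.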

Moreover, there are generally many buttons on the page. However, there are few other types of components, such as switch and stepper, which will lead to unbalanced samples. We need a similar number of samples for each classification we input into the algorithm.

> sample labeling time: 14,000 pages * 2 categories == 11 hours
>
> sample imbalance: 25,647 pages == 7,757 buttons + 1,177 switches +...

We can use our familiar skills at the front end to solve such problems, such as using the headless browser puppeteer to generate them automatically.

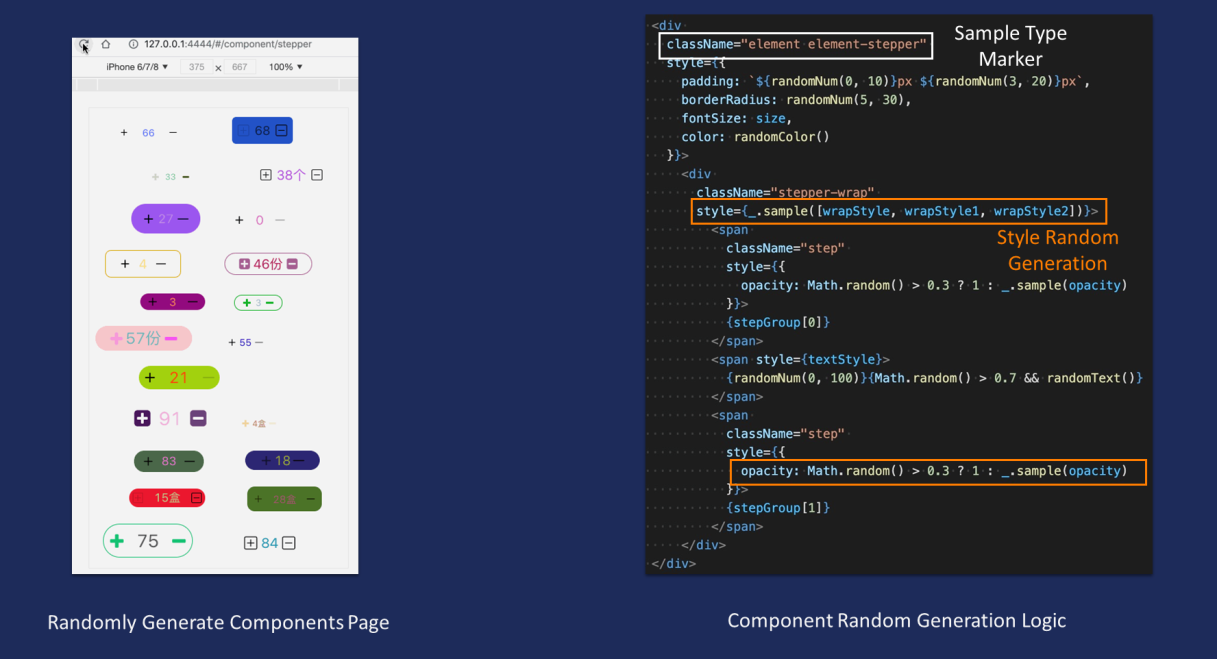

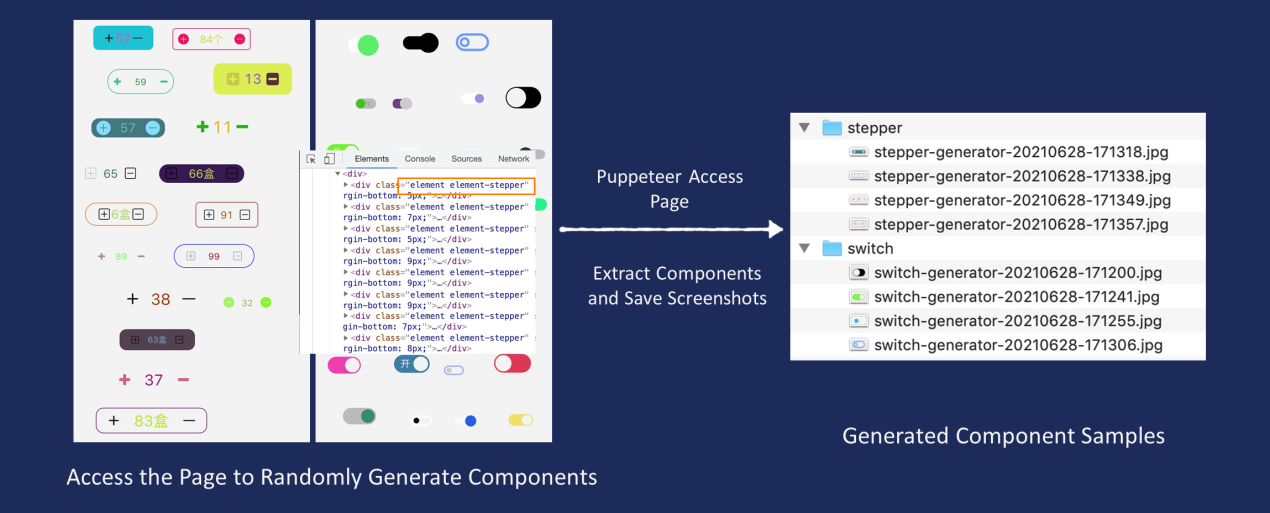

Specifically, we can write a page that renders different types and styles of components every time it is refreshed. Components are randomly generated, and when implementing component styles, the padding, margin, text content, and text size are randomized as well. The only fixed part is the type mark added to each component. For example, if it is a stepper component, you need to add a class name of element-stepper to the node of this component.

Then, we use the headless browser puppeteer to access this page automatically. On each visit, we find all component nodes and their types on the page according to the class names we set and save their screenshots. Finally, we get the data set we need.

Some may think that this way we can generate as many samples as we want.

This method saves a lot of effort, but the samples are still generated from a limited set of rules. Their characteristics are not diverse enough, and they differ visually from real samples.
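The random page described above could be generated along these lines. The sketch is written in Python and only builds the HTML; the puppeteer screenshot step is not shown. The value ranges and sample texts are made up, and a real generator would render a proper structure for each component type rather than a single styled div.

```python
import random

COMPONENT_TYPES = ["button", "searchbar", "stepper", "input", "switch", "tabbar"]
SAMPLE_TEXTS = ["OK", "Submit", "Buy now", "Search", "Next"]  # made-up labels


def random_component_html(component_type, rng):
    """Render one component with randomized style and a fixed type marker class."""
    style = (
        f"padding:{rng.randint(4, 24)}px;"
        f"margin:{rng.randint(0, 32)}px;"
        f"font-size:{rng.randint(12, 28)}px;"
    )
    text = rng.choice(SAMPLE_TEXTS)
    # The class name is the only deterministic part: it tells the screenshot
    # script which category the node belongs to.
    return f'<div class="element-{component_type}" style="{style}">{text}</div>'


def random_page_html(rng, count=6):
    """Build a page body with `count` randomly chosen components."""
    parts = [random_component_html(rng.choice(COMPONENT_TYPES), rng) for _ in range(count)]
    return "<body>" + "".join(parts) + "</body>"


if __name__ == "__main__":
    print(random_page_html(random.Random()))
```

A screenshot script can then select nodes by the `element-` class prefix and save each screenshot into the folder named after the component type.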

If most of the samples input into the algorithm are created images that are different from the real samples, the model learns the characteristics of the artificial samples, and the accuracy of identifying the real samples with the model will be poor.

Therefore, automatically generated samples are only used as a supplement to the relatively small number of real samples.

Now that the dataset is ready, the next step is to determine which machine learning algorithm to choose to learn the characteristics of these samples.

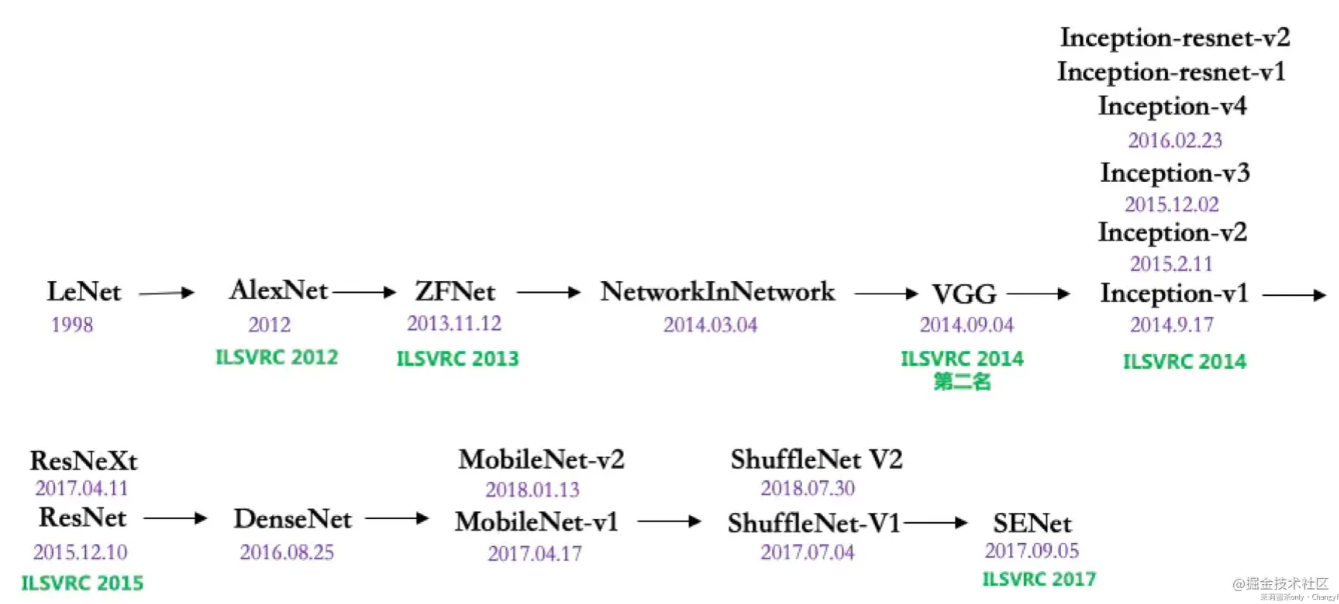

Since this is an image classification task, we can collect the development history of image classification algorithms and see which classical algorithms are available.

Classical Image Classification Algorithm

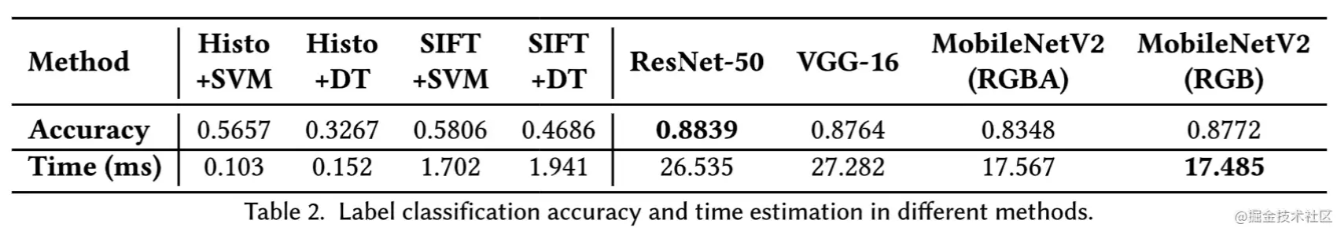

Then, we select a few of the algorithms most widely used in the industry and compare them in terms of model accuracy and prediction time. For component recognition, the component images are relatively complex and the accuracy requirements are relatively high, so ResNet50 was finally selected.

By contrast, take the icon recognition model. Icons are relatively simple, and the recognition result is mainly used to name the class, so MobileNetV2 was selected for icon classification.

Comparison of Model Effects of Several Image Classification Algorithms

Now that we have determined the algorithm, the next step is to train the model.

The workflow of model training is listed below:

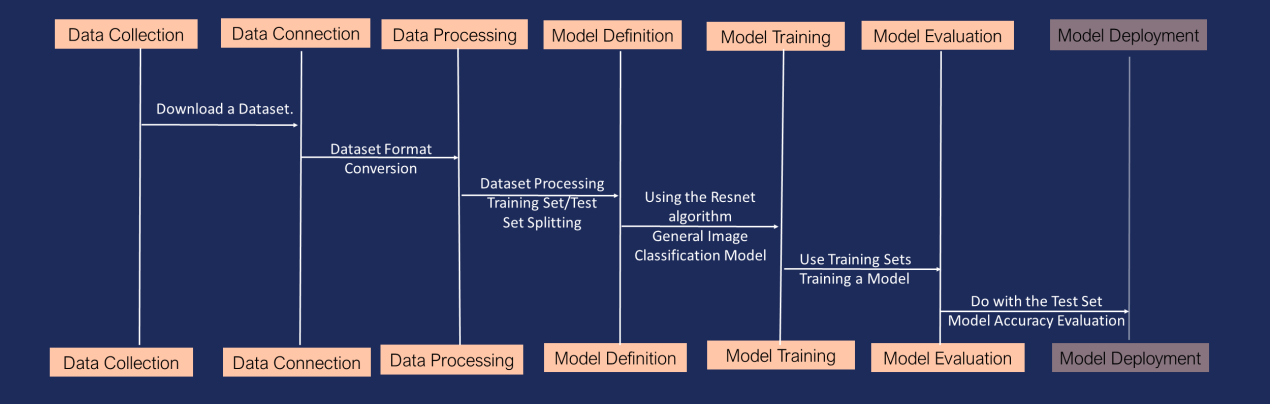

You need to download the dataset first, convert the dataset format, and process the dataset. For example, you can split the prepared datasets into a training set and a test set according to a certain ratio. Then, you can introduce an algorithm to define the model. Then, you can use the training set to train the model. After the model is trained, you can use the test set to evaluate the accuracy of the model. This way, the process of model training is completed, and the trained output model is deployed to the remote machine.
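Two pieces of this workflow, splitting the data set by a ratio and evaluating accuracy on the test set, can be sketched in plain Python. The 20% test ratio, the fixed seed, and the function names are assumptions for illustration; model definition and training are left out.

```python
import random
from collections import defaultdict


def split_dataset(samples, test_ratio=0.2, seed=42):
    """Split (path, label) pairs label by label so the test set covers every category."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        # Hold out at least one sample per category for evaluation.
        test_count = max(1, round(len(items) * test_ratio))
        test.extend(items[:test_count])
        train.extend(items[test_count:])
    return train, test


def accuracy(predicted_labels, true_labels):
    """Share of test samples whose predicted label matches the true label."""
    correct = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return correct / len(true_labels)
```

Splitting per category keeps rare components, such as switch or stepper, represented in the test set even when buttons dominate the data.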

If you are a machine learning engineer and familiar with machine learning and Python, you will write your script for the model training workflow to train the model.

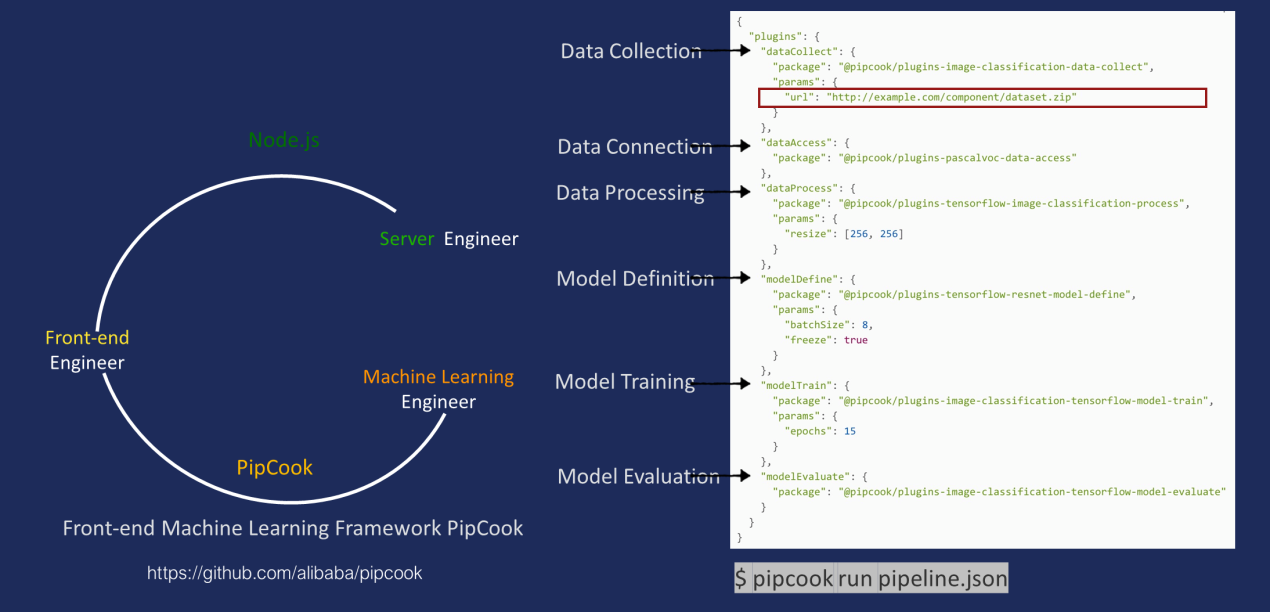

Frontend engineers can use Pipcook, a frontend algorithm engineering framework, to train the model. We can understand Pipcook this way: just as Node.js lets frontend engineers do server-side work, Pipcook lets frontend engineers do machine learning tasks.

Pipcook encapsulates and integrates these processes, which are represented by a JSON file. Each stage in the training workflow is defined by a plug-in that supports the configuration of parameters for each stage. We only need to replace the input value of the URL parameter of the data collection plug-in with our dataset link.

Then, executing pipcook run pipeline.json starts training the model.

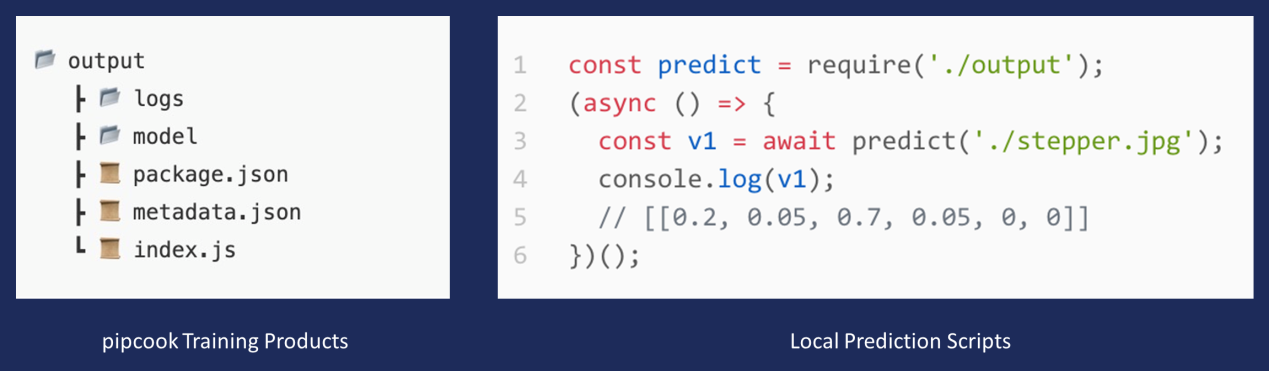

After the training process is completed, a model file will be generated. Pipcook encapsulates the file into an npm package. We can directly write a js script locally to test it. The predicted result is the probability that this test sample falls into each category. We can set a threshold, and when the probability is greater than this threshold, the result of recognition is considered reliable.
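The threshold check on the prediction result can be sketched as follows. The 0.8 threshold and the function name are assumptions; the right threshold depends on the model and on how costly a wrong recognition is.

```python
def pick_reliable_label(probabilities, threshold=0.8):
    """Return (label, probability) for the top category, or None below the threshold."""
    label = max(probabilities, key=probabilities.get)
    probability = probabilities[label]
    if probability > threshold:
        return label, probability
    return None


if __name__ == "__main__":
    print(pick_reliable_label({"button": 0.93, "input": 0.05, "switch": 0.02}))
    print(pick_reliable_label({"button": 0.40, "input": 0.35, "switch": 0.25}))
```

This is also how an unseen type, such as the progress bar mentioned earlier, can be filtered out: its probabilities tend to be spread across the known categories, so none of them passes the threshold.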

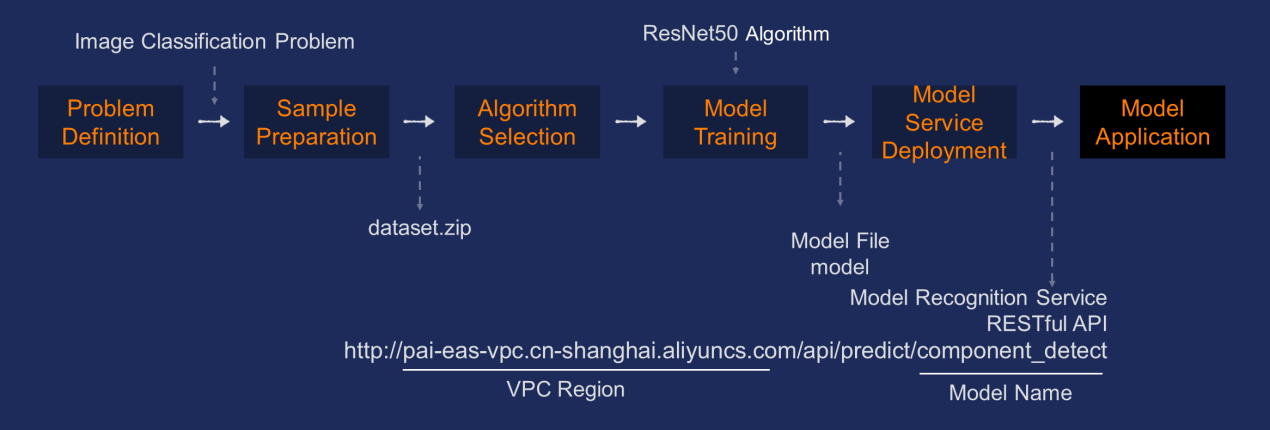

If you want to apply the model recognition service in an engineering project, you also need to deploy the model to a remote machine.

There are many ways to deploy the models. For example, we can deploy a model to Alibaba Cloud EAS by selecting the model online service in the Alibaba Cloud console and uploading the model file for deployment. After the deployment is completed, you can use the region where the virtual machine is located and the specified model name to construct the API for accessing the model service.

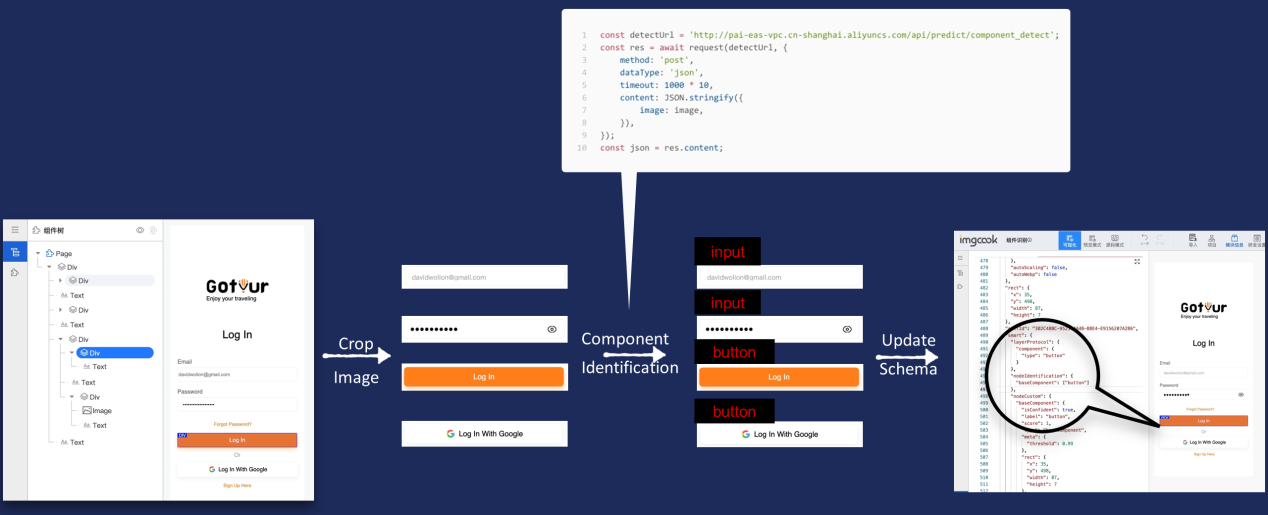

In other words, you can obtain such an accessible RESTful API after the model is deployed. Now, we can call this API in the D2C engineering process to identify components, which is the final model application stage.

The application process of a model differs across application scenarios. In the D2C component identification scenario, after the layout algorithm generates a D2C Schema with a relatively reasonable nested layout structure, screenshots are cropped from the page according to the positions of the div container nodes, and the component identification model service is called to identify them. Then, the identification result is written to the smart field of the corresponding node in the D2C Schema.

Is the model application completed? Not yet. This is only the process of component identification. As we said earlier, there are two things to do. The first is component identification, and the second is code expression. We still need to express the identification results in code.
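The identification step above can be sketched as a recursive walk over the schema. This is a simplified Python illustration, not imgcook's implementation: the field names (`componentName`, `rect`, `children`, and the `component` key under `smart`) are assumptions about the schema shape, and `fake_classifier` stands in for cropping a screenshot and calling the remote model service.

```python
def annotate_components(node, classify, threshold=0.8):
    """Walk the schema and store reliable component labels on each Div node's smart field."""
    if node.get("componentName") == "Div":
        label, probability = classify(node["rect"])
        if probability > threshold:
            node.setdefault("smart", {})["component"] = label
    for child in node.get("children", []):
        annotate_components(child, classify, threshold)
    return node


def fake_classifier(rect):
    """Stand-in for the remote model service: wide, short boxes count as 'button' here."""
    if rect["width"] >= 2 * rect["height"]:
        return "button", 0.95
    return "button", 0.30


if __name__ == "__main__":
    page = {
        "componentName": "Page",
        "children": [
            {"componentName": "Div", "rect": {"x": 0, "y": 0, "width": 200, "height": 40}},
        ],
    }
    print(annotate_components(page, fake_classifier))
```

Passing the classifier in as a function keeps the traversal independent of how the model is served, so the stub can be swapped for a real service call.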

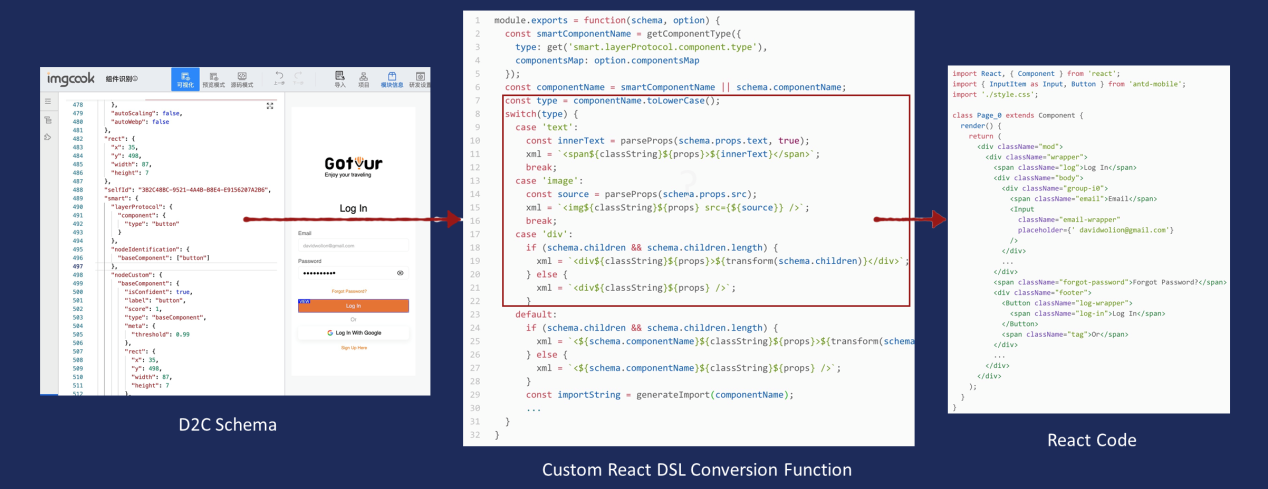

imgcook supports user-defined DSL conversion functions that convert D2C schemas into different types of frontend code. The general logic is to recursively traverse the nodes in the D2C schema, determine the node type, and convert each node into the corresponding tag.

If the result of component recognition needs to be converted into an external component during the conversion process, you also need to pass the components registered by the user into the DSL function. Based on the component type and the model identification results, the registered external components are introduced during code generation.

This completes the practical process of using machine learning to solve the problem of introducing external components.
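A toy version of such a conversion function is shown below. It is written in Python for illustration only (the DSL functions themselves are not shown in this article), the schema field names and the `EXTERNAL_COMPONENTS` mapping are assumptions, and the output is simplified JSX-like markup.

```python
TAGS = {"Page": "div", "Div": "div", "Text": "span"}
# Hypothetical user-registered mapping: recognized type -> (component, package).
EXTERNAL_COMPONENTS = {"button": ("Button", "antd"), "input": ("Input", "antd")}


def collect_text(node):
    """Concatenate the text of a node and its descendants."""
    own = node.get("props", {}).get("text", "")
    return own + "".join(collect_text(child) for child in node.get("children", []))


def render(node, imports, depth=0):
    """Recursively convert one schema node into JSX-like markup lines."""
    indent = "  " * depth
    recognized = node.get("smart", {}).get("component")
    if recognized in EXTERNAL_COMPONENTS:
        # A recognized component replaces the whole subtree with one external tag.
        name, package = EXTERNAL_COMPONENTS[recognized]
        imports.add((package, name))
        return [f"{indent}<{name}>{collect_text(node)}</{name}>"]
    tag = TAGS.get(node.get("componentName"), "div")
    children = node.get("children", [])
    if not children:
        text = node.get("props", {}).get("text", "")
        return [f"{indent}<{tag}>{text}</{tag}>"]
    lines = [f"{indent}<{tag}>"]
    for child in children:
        lines.extend(render(child, imports, depth + 1))
    lines.append(f"{indent}</{tag}>")
    return lines


def generate_code(schema):
    """Return import statements followed by the rendered markup."""
    imports = set()
    body = render(schema, imports)
    header = [f'import {{ {name} }} from "{package}";' for package, name in sorted(imports)]
    return "\n".join(header + body)
```

For a page with a text node and a div recognized as a button, `generate_code` emits an `import { Button } from "antd";` line followed by markup in which the div subtree is replaced by a `<Button>` tag.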

We can recall that to solve the problem of automatically generating code for scenarios where external components are introduced, we choose to train a machine learning image classification model to identify components on the page.

First of all, we need to prepare samples. We can collect real pages or design drafts, extract components, and label samples to generate data sets. We can also use puppeteer to generate data sets automatically. After the data sets are prepared, the Resnet algorithm is selected according to the trade-off between model recognition accuracy and recognition speed.

Then, we use the frontend machine learning framework Pipcook to train the model. Pipcook encapsulates the whole pipeline for us, from loading the data set and processing the data to defining, training, and evaluating the model. We only need to execute pipcook run to start training.

After the training is completed, a model file is obtained. Deploy this model file to Alibaba Cloud EAS, and you get an accessible RESTful API for the model recognition service. Then, call this API in the code generation process to identify components in the UI. The component recognition result is finally used to generate code.

Here are several step-by-step practical cases. The first uses Pipcook for training and runs in Colab, so you can simply click through it without worrying about environment setup. The second also uses Pipcook but trains on your own computer, so you need to install the environment locally. The third is a machine learning task implemented in Python, where you can see how Python implements data set loading, model definition, and model training.

These practical cases provide code and data sets. If you are interested, you can use them to try out the machine learning practice introduced today.

Just now, we took component identification as an example to introduce the whole process of machine learning practice and application. In addition to component recognition, there are text recognition, icon recognition, and more. The practical methods basically follow the same steps.

These model recognition results can be used in UI code generation and business logic code generation. On the Double 11 pages, there were some modules whose business logic was not very complex, and the availability rate of the generated code could reach more than 90%, achieving zero R&D investment.

For example, if we hand-write the code for a module on such a Double 11 activity page, what business logic is involved?

First, there is a loop here. We only need to implement the UI of one commodity and use a map loop. Second, the title, price, and commodity picture need to be bound to dynamic data. Third, clicking a commodity needs to jump to another page, and a tracking log should be sent when the user clicks. Apart from these, there seems to be no other business logic.

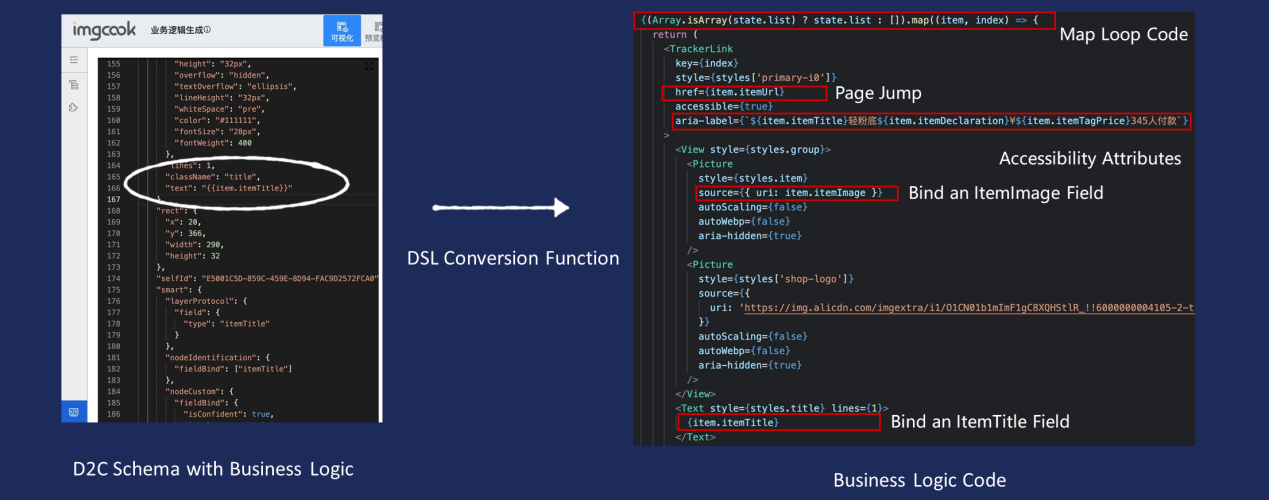

Then, we can automatically generate this business logic according to the results of model recognition. Here, the model can recognize a loop with two items per row, a commodity title, and a commodity picture. These recognition results can be applied to the generation of business logic code, such as field binding and page jumps.

As mentioned earlier, the recognition result is attached to the smart field of each node. For example, the content of this text node is recognized as an itemTitle. If the recognition result is to be used for field binding, we can replace the content of the text node with a field binding expression based on the recognition result.

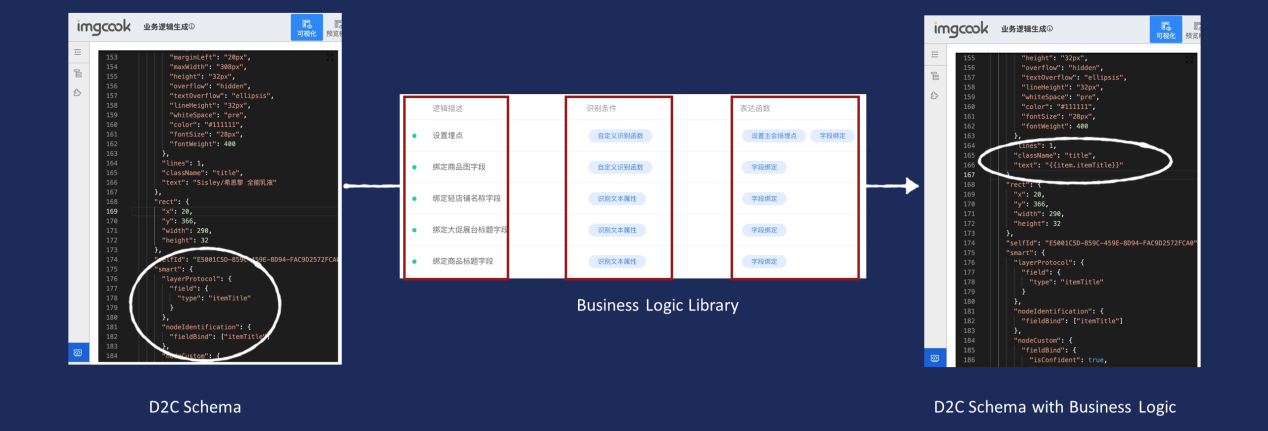

Where is this replacement operation done? imgcook allows users to enter their own business logic, each piece consisting of a recognizer and an expressioner.

Each node in the D2C schema goes through these logic recognizers to see whether it contains a business logic point. If so, the logic in the corresponding expressioner is executed.

Take the business logic of binding the product title field as an example. Its recognizer checks whether the fieldbind field on the smart field of the text node is itemTitle. If so, the logic in the expressioner is executed to bind this node to the itemTitle field automatically.

This way, we can update the D2C schema according to the user-defined business logic and convert the D2C schema with the business logic into code using the DSL conversion function.

This is how model identification results are applied to the business logic code generation process. Other business logic is generated the same way: a business logic library picks up the model identification results and applies them to code generation.
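The recognizer and expressioner pair for the itemTitle example can be sketched like this. It is a Python illustration of the pattern, not imgcook's business logic library: the schema field names, the `{{item.itemTitle}}` binding syntax, and the `LOGIC_LIBRARY` list are assumptions.

```python
def is_item_title(node):
    """Recognizer: does the model say this text node is an item title?"""
    return (
        node.get("componentName") == "Text"
        and node.get("smart", {}).get("fieldbind") == "itemTitle"
    )


def bind_item_title(node):
    """Expressioner: replace the static text with a field-binding expression."""
    node.setdefault("props", {})["text"] = "{{item.itemTitle}}"


# Each business logic point is a (recognizer, expressioner) pair.
LOGIC_LIBRARY = [(is_item_title, bind_item_title)]


def apply_business_logic(node, library=LOGIC_LIBRARY):
    """Run every recognizer on every node and apply the matching expressioners."""
    for recognize, express in library:
        if recognize(node):
            express(node)
    for child in node.get("children", []):
        apply_business_logic(child, library)
    return node
```

New business logic, such as binding the price or adding a click jump, would be added as another pair in the library without touching the traversal.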

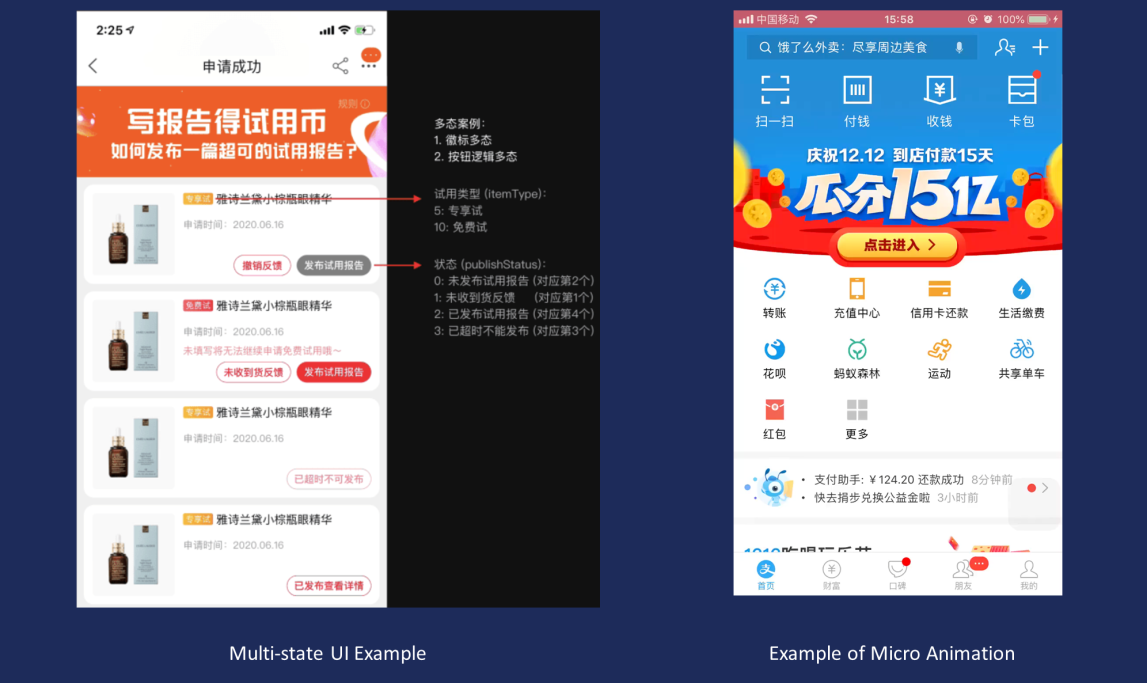

In the future, we will continue to improve the quantity and quality of generated code with intelligent technology and explore new code generation issues, such as multi-state UI and complex UI with micro-animations. For example, the release trial report button here has a variety of states and displays a different UI according to the data returned by the server. As another example, clicking the enter button on this page triggers this micro-animation:

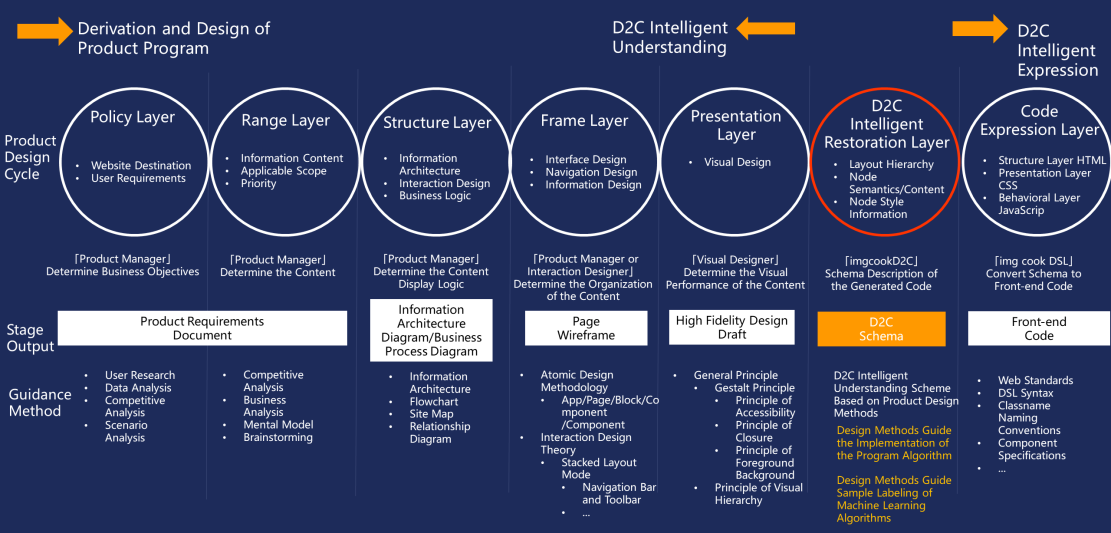

In addition, the essence of D2C (generating code from design drafts) is to intelligently understand the output of product design, namely the high-fidelity design draft. This draft is produced in two steps. The product manager applies certain methods and theories during the product design cycle to determine the business objectives and page structure, and then the visual designer combines product features, functional understanding, and visual design specifications to create the draft.

We expect to build such a theoretical system of D2C underlying cognition, integrate product design and visual design methods into D2C practice, and intelligently understand design output based on product design methods and visual design principles. This can help us understand design drafts more comprehensively and deeply and help generate more and higher quality code.

D2C Practical Theory System

imgcook is an open platform. You are welcome to try generating code from design drafts with imgcook.

If you have learned something from this article, you can start thinking about which problems in the frontend field can be solved with machine learning, such as AI design, code recommendation, intelligent UI, and UI automated testing. If you are interested, you can use the practical process introduced today to write a demo project and experience it yourself.

In the future, I also hope more people can participate in building frontend intelligence and use intelligent technology to solve more problems in the frontend field.
