In an era marked by rapid breakthroughs in artificial intelligence, Alibaba’s Qwen 2.5 series represents a transformative step forward in the evolution of language models. By integrating multi-modal functionalities with innovative transformer architectures, Alibaba has positioned Qwen 2.5 as a formidable contender in the global AI landscape. This article provides a comprehensive strategic review of Qwen 2.5, outlining its technical foundations, unique capabilities, and the implications of its deployment on both industry practices and global competition.

Historically, AI language models have evolved from rule-based and statistical approaches to the deep learning revolution ignited by transformer architectures. The seminal work by Vaswani et al. in 2017 laid the groundwork for models capable of understanding long-range dependencies in text. Successive advancements—exemplified by GPT, BERT, and their successors—have driven exponential increases in model complexity and parameter counts. In this context, the Qwen 2.5 series builds on these developments by not only expanding general language understanding but also addressing niche applications through specialized variants.

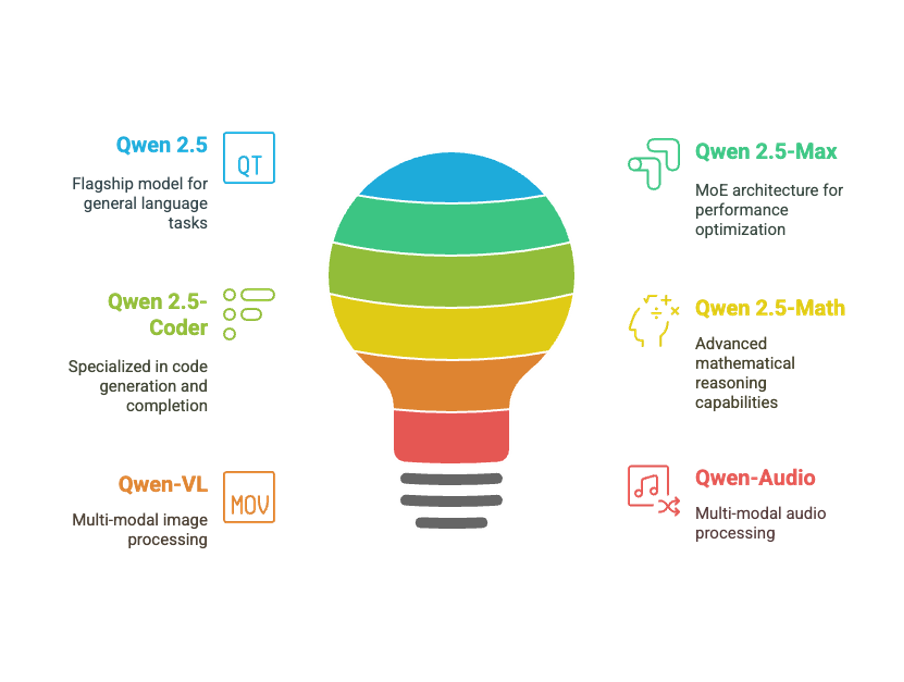

Alibaba’s Qwen 2.5 series is designed to serve a wide range of applications, ensuring high performance across general language tasks and specialized domains. The suite includes:

• Qwen 2.5 (Flagship Model): Pre-trained on up to 18 trillion tokens, this variant excels in general language processing, creative content generation, and robust instruction following.

• Qwen 2.5-Max: Built on a Mixture-of-Experts (MoE) architecture and pre-trained on over 20 trillion tokens, this model activates only a subset of its parameters for each input, which lets it handle demanding tasks without running the full network every time.

• Qwen 2.5-Coder: Optimized for code generation and completion, it is trained on 5.5 trillion tokens of code data and supports 92 programming languages.

• Qwen 2.5-Math: Focused on advanced mathematical reasoning, this model leverages techniques like Chain-of-Thought (CoT) and Tool-Integrated Reasoning (TIR) to tackle complex, multi-step problems.

• Qwen-VL and Qwen-Audio: Extending the series into multi-modal domains, these variants handle image and audio processing, respectively, opening new avenues for applications in visual and auditory analysis.
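To make the Tool-Integrated Reasoning idea mentioned for Qwen 2.5-Math more concrete, here is a minimal sketch of the general pattern: reasoning text is interleaved with steps that are handed to an external tool, so the arithmetic is computed rather than guessed. Everything in it is an assumption made for illustration. The `("think", ...)` and `("tool", ...)` step format, `calculator_tool`, and `run_with_tool` are hypothetical names, and the replayed steps stand in for real model output. It does not describe how Qwen 2.5-Math is actually implemented.

```python
import ast
import operator

# Arithmetic operators the toy "tool" is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}

def calculator_tool(expression):
    """Safely evaluate a plain arithmetic expression without using eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)

def run_with_tool(model_steps):
    """Replay reasoning steps, executing any step marked as a tool call."""
    transcript = []
    for kind, content in model_steps:
        if kind == "tool":
            transcript.append(f"{content} = {calculator_tool(content)}")
        else:
            transcript.append(content)
    return transcript

# Hypothetical model output: reasoning text interleaved with one tool call.
steps = [
    ("think", "Compound 1500 at 4% for 3 years."),
    ("tool", "1500 * (1 + 0.04) ** 3"),
    ("think", "Round the result to two decimals."),
]
transcript = run_with_tool(steps)
```

The design point is the division of labor: the model decides what to compute, and a deterministic tool performs the computation, which is why this style of reasoning suits multi-step numerical problems.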

At the core of Qwen 2.5 is the transformer architecture, a framework that has become synonymous with modern AI. In its standard dense form, the model applies the same full set of parameters to every input, which keeps behavior stable and consistent. In contrast, Qwen 2.5-Max employs the Mixture-of-Experts (MoE) approach, wherein a routing mechanism activates only a few specialized “experts” for each input. This sparse strategy offers two key advantages:

• Scalability: Because only a fraction of the experts run for any given input, total model capacity can grow without a proportional increase in computational cost.

• Specialization: It allows the model to excel in niche areas such as complex reasoning, code generation, and mathematical problem-solving.
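The routing idea behind MoE can be sketched in a few lines. The example below is a toy illustration of generic top-k expert routing, not Qwen 2.5-Max's actual implementation. The number of experts, the value of `k`, the scalar "experts", and the random router scores are all assumptions. In a real model the router scores would typically come from a learned layer applied to each token's hidden state.

```python
import math
import random

def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}

def moe_layer(token, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    weights = top_k_route(router_scores, k)
    return sum(w * experts[i](token) for i, w in weights.items())

# Hypothetical toy experts: each one is just a scalar function of the input.
NUM_EXPERTS = 8
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

random.seed(0)
router_scores = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
weights = top_k_route(router_scores, k=2)
output = moe_layer(3.0, experts, router_scores, k=2)

# Only 2 of the 8 experts run for this input, so 25% of expert compute is used.
active_fraction = len(weights) / NUM_EXPERTS
```

With these toy settings, adding more experts increases total capacity while the per-input cost stays tied to `k`, which is the scalability argument made above.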

A defining feature of the Qwen 2.5 series is its extensive training process:

• General Pre-Training: The flagship model is pre-trained on an immense dataset of up to 18 trillion tokens, spanning literature, science, and everyday language.

• Enhanced Specialized Training: Variants such as Qwen 2.5-Max, Coder, and Math benefit from targeted datasets that fine-tune performance for their respective domains.

• Fine-Tuning Techniques: Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF) further refine the models, aligning them with real-world tasks and ensuring they adhere to complex instructions.

One of Qwen 2.5’s standout features is its ability to process extended contexts—up to 128,000 tokens. This enables applications that require deep contextual understanding, such as:

• Legal and academic document processing

• Long-form narrative generation

• Complex customer service interactions
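As a rough illustration of what a 128,000-token window means in practice, the sketch below checks whether a document fits and splits it into chunks when it does not. It rests on loud assumptions: `rough_token_count` treats each whitespace-separated word as one token, which real tokenizers do not do, and the 4,000 tokens reserved for the reply is an arbitrary placeholder. A real pipeline would count tokens with the model's own tokenizer.

```python
CONTEXT_WINDOW = 128_000  # token limit cited in the article

def rough_token_count(text):
    """Hypothetical estimate: treat each whitespace-separated word as one token."""
    return len(text.split())

def split_to_fit(text, budget):
    """Split text into consecutive chunks of at most `budget` estimated tokens."""
    words = text.split()
    return [" ".join(words[i:i + budget]) for i in range(0, len(words), budget)]

def plan_request(document, reserved_for_output=4_000):
    """Return the chunks needed so each prompt plus its reply fits the window."""
    budget = CONTEXT_WINDOW - reserved_for_output
    if rough_token_count(document) <= budget:
        return [document]
    return split_to_fit(document, budget)

# A short contract fits in one request; a very long one must be split.
short_chunks = plan_request("clause " * 50_000)
long_chunks = plan_request("clause " * 300_000)
```

When the whole document fits in a single chunk, the model can relate any passage to any other directly, which is the main benefit of a long context for legal or academic material.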

Supporting over 29 languages, Qwen 2.5 ensures that its powerful capabilities are accessible on a global scale. This multilingual prowess allows for:

• Cross-cultural communication

• Localized content generation

• Enhanced global collaboration

The series’ strategic segmentation into specialized variants—such as Qwen 2.5-Coder for programming tasks and Qwen 2.5-Math for advanced mathematical reasoning—illustrates a deliberate move toward targeted problem-solving. This differentiation:

• Enhances efficiency: By fine-tuning models for specific tasks, Qwen 2.5 delivers more precise outputs.

• Optimizes resource allocation: Specialized models lower operational costs while maintaining high performance.

• Drives industry-specific innovation: Tailored applications in sectors like finance, healthcare, and legal services empower organizations to integrate AI more seamlessly into their workflows.

Rigorous testing across benchmarks such as MMLU (broad multi-subject knowledge), HumanEval (code generation), and GSM8K (grade-school math word problems) has demonstrated Qwen 2.5’s superiority in both general and specialized tasks. When compared to leading models like GPT-4o, DeepSeek-V3, and Llama 3.1, Qwen 2.5 distinguishes itself through:

• Higher performance scores in multi-domain tasks

• Cost-effective operational models that reduce computational overhead

• Enhanced long-context and instruction-following capabilities

Qwen 2.5 challenges the longstanding dominance of Silicon Valley’s AI models by proving that high performance does not necessarily entail high operational costs. This paradigm shift is influencing:

• Investor sentiment: Capital allocation is increasingly directed toward models that offer both efficiency and scalability.

• Competitive strategy: Established players are prompted to innovate and refine their own models in response to Qwen 2.5’s success.

• Global innovation: The ripple effects of Qwen 2.5’s introduction have spurred rapid AI advancements across both domestic and international markets.

By offering scalable deployment options via Alibaba’s PAI-EAS platform (Platform for AI, Elastic Algorithm Service), Qwen 2.5 democratizes access to advanced AI:

• User-Friendly Integration: Organizations can seamlessly incorporate Qwen models into their existing workflows with minimal technical overhead.

• Flexible Deployment: Options for both cloud-based and on-premises deployment address diverse security and cost considerations.

• Tailored Fine-Tuning: Industry-specific customization allows businesses to align AI outputs with proprietary data and regulatory requirements.

Looking ahead, the strategic innovations embodied by the Qwen 2.5 series are likely to shape the future of AI in several ways:

• Convergence of Multi-Modal AI: Future models will integrate text, visual, and auditory data more seamlessly, enhancing user experiences.

• Increased Specialization: A growing trend toward domain-specific models will drive further customization and improved performance in targeted applications.

• Ethical and Regulatory Evolution: As AI becomes more pervasive, discussions on data privacy, bias mitigation, and ethical AI use will gain prominence.

• Global Competitive Realignment: With Qwen 2.5 setting new performance and efficiency benchmarks, the global AI ecosystem is poised for rapid, transformative change.

Alibaba’s Qwen 2.5 series is not just an incremental improvement in language modeling—it represents a strategic leap that redefines the role of AI in modern society. By combining expansive pre-training, innovative architectures like MoE, and targeted fine-tuning strategies, Qwen 2.5 sets new industry standards. Its ability to process extended contexts, support multiple languages, and deliver specialized outputs positions it as a critical tool for driving efficiency, innovation, and global competitiveness in the AI domain. As the industry continues to evolve, the legacy of Qwen 2.5 will likely be seen as a catalyst for a new generation of intelligent, adaptable, and cost-effective AI solutions.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
