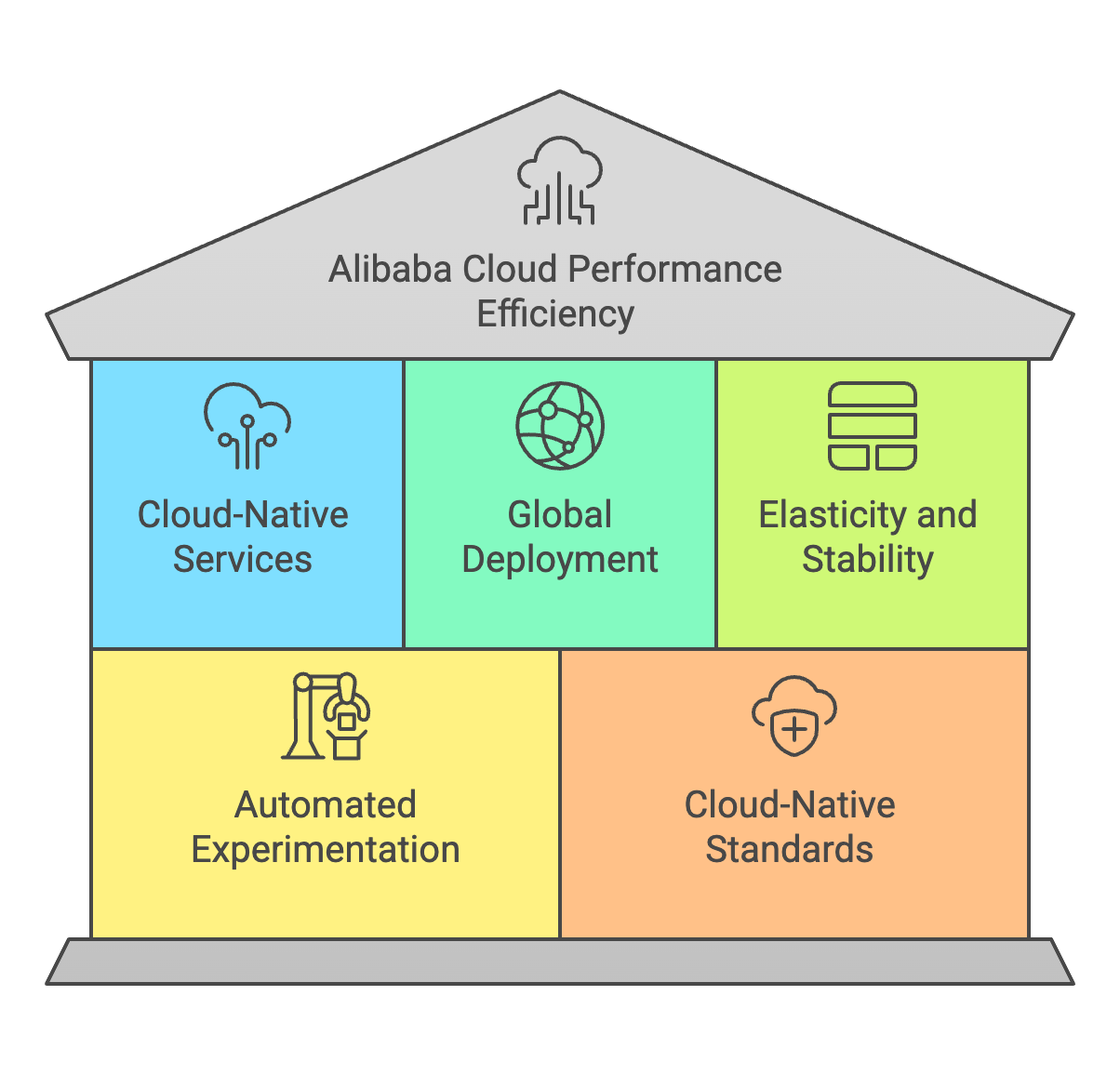

Achieving peak performance in the cloud requires a combination of architectural foresight, service optimization, and continuous refinement. The Performance Efficiency Pillar of Alibaba Cloud’s Well-Architected Framework provides a structured methodology for this, emphasizing cloud-native capabilities, adaptive resource management, and data-driven decision-making. This article outlines a strategy for designing and maintaining high-performance architectures on Alibaba Cloud, covering design principles, service selection, measurement, monitoring, and trade-offs.

To build a performance-optimized architecture, Alibaba Cloud advocates adhering to five core principles:

1. Adopt Cloud-Native Services

Prioritize managed services (e.g., Elastic Container Instance, Function Compute) over custom-built solutions to reduce operational overhead and benefit from Alibaba Cloud’s built-in optimizations. For example, serverless architectures take server provisioning and maintenance off the team's plate, so it can focus on code while the platform handles scaling and resource allocation.

2. Leverage Global Deployment

Utilize Alibaba Cloud’s global infrastructure to deploy resources across regions, reducing latency for geographically distributed users. Services like Global Accelerator (GA) and Content Delivery Network (CDN) optimize traffic routing and caching, ensuring low-latency access to applications.

3. Maximize Elasticity and Stability

Design applications to dynamically scale with demand using auto-scaling groups, ECS spot instances, and serverless resources. For stateful workloads, combine Elastic Compute Service (ECS) with high-performance storage (e.g., ESSD PL3 cloud disks) to maintain stability under fluctuating loads.

4. Automate Experimentation

Use Infrastructure as Code (IaC) tools such as Alibaba Cloud Resource Orchestration Service (ROS) to rapidly test configurations. Automated deployment pipelines enable A/B testing of instance types, storage tiers, and database engines to identify optimal setups.

5. Align with Cloud-Native Standards

Ensure compatibility with Kubernetes (via ACK), serverless frameworks, and open APIs to simplify integration with Alibaba Cloud’s service ecosystem and future-proof architectures.
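To make the elasticity principle more concrete, here is a minimal sketch of the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler, which is relevant when workloads run on Kubernetes through ACK. It is a simplified illustration, not Alibaba Cloud Auto Scaling's own algorithm; the function name, the default bounds, and the example metric values are assumptions chosen for this sketch.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified form of the Kubernetes HPA rule:
    desired = ceil(current_replicas * current_metric / target_metric).

    The name, bounds, and defaults are illustrative assumptions.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    ratio = current_metric / target_metric

    # Skip scaling when the metric is already close to the target,
    # which avoids flapping on small fluctuations.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    desired = math.ceil(current_replicas * ratio)

    # Clamp to the configured bounds of the scaling group.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical numbers: 4 replicas at 90% average CPU, target 60%.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

The upper bound matters as much as the formula: it caps cost during a traffic spike, while the lower bound keeps enough capacity for a stable baseline.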

Elastic Compute Service (ECS):

Serverless Compute:

Block Storage:

File Storage:

Object Storage Service (OSS):

ApsaraDB for PolarDB:

Lindorm:

ApsaraDB for Redis:

Server Load Balancer (SLB):

Cloud Enterprise Network (CEN):

Express Connect:

1. Define Metrics:

Track technical indicators (e.g., latency, CPU utilization) alongside business KPIs (e.g., transaction completion rate).

2. Automated Testing:

Use Performance Testing Service (PTS) to simulate traffic spikes, validate SLAs, and identify bottlenecks.

3. Visualize Data:

Integrate PTS with DataV to create dashboards showing response times, error rates, and resource consumption trends.
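The three steps above can be sketched as a small post-processing routine: summarize load-test samples into one technical metric set and one business KPI, then compare them with SLA thresholds. This does not use the PTS API; the `Sample` record, the metric names, and the thresholds are hypothetical and stand in for whatever a test run would actually export.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One request observed during a load test (hypothetical schema)."""
    latency_ms: float
    ok: bool          # technical success, e.g. no 5xx response
    completed: bool   # business outcome, e.g. the transaction finished


def percentile(values, pct):
    """Nearest-rank percentile; pct is an integer from 1 to 100."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(samples):
    """Combine technical indicators with a business KPI."""
    n = len(samples)
    return {
        "p95_latency_ms": percentile([s.latency_ms for s in samples], 95),
        "error_rate": sum(not s.ok for s in samples) / n,
        "completion_rate": sum(s.completed for s in samples) / n,
    }


def sla_violations(summary, max_p95_ms, max_error_rate, min_completion_rate):
    """Return the names of the metrics that miss their threshold."""
    violations = []
    if summary["p95_latency_ms"] > max_p95_ms:
        violations.append("p95_latency_ms")
    if summary["error_rate"] > max_error_rate:
        violations.append("error_rate")
    if summary["completion_rate"] < min_completion_rate:
        violations.append("completion_rate")
    return violations


if __name__ == "__main__":
    # Synthetic data: 20 requests, one failure, two abandoned transactions.
    samples = [Sample(10.0 * i, ok=(i != 20), completed=(i <= 18))
               for i in range(1, 21)]
    summary = summarize(samples)
    print(summary)
    print(sla_violations(summary, max_p95_ms=200, max_error_rate=0.01,
                         min_completion_rate=0.85))
```

Reporting a tail percentile rather than the mean keeps slow outliers visible, and pairing it with the completion rate shows whether a technical regression actually affects the business outcome.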

1. Infrastructure Monitoring with CloudMonitor:

2. Application Monitoring with ARMS:

1. Caching:

2. Asynchronous Processing:

3. Database Sharding:

4. Geographical Redundancy:

Achieving optimal performance on Alibaba Cloud demands a holistic approach that combines service selection rigor, automated testing, real-time monitoring, and strategic architectural compromises. By adopting cloud-native services like PolarDB, ECI, and GA, organizations can reduce latency, scale dynamically, and maintain resilience. Continuous measurement via PTS and ARMS ensures alignment with performance goals, while trade-offs such as caching and asynchronous processing mitigate bottlenecks. Ultimately, aligning with Alibaba Cloud’s Well-Architected Framework empowers businesses to deliver fast, reliable, and cost-efficient applications in a competitive digital landscape.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
