The authors are two core developers of OpenAnolis Kernel-SIG, who are experts in the Linux kernel scheduler.

Benchmarking has become something of a joke in the mobile phone industry. In the field of operating systems, however, benchmarks remain one of the most important evaluation methods. The Linux kernel community, for example, often judges the value of an optimization patch by its benchmark results, and media outlets such as Phoronix focus on Linux benchmarks. A high benchmark score is a mark of excellence, and achieving one requires a deep understanding of the kernel. This article grew out of a routine performance analysis. While evaluating Tuned, an automated performance tuning tool, we found that in the server scenario it makes some minor changes to parameters of the Linux kernel scheduler, and that these changes improve Hackbench performance. Isn't that interesting? Let's dive in!


Most threads/processes in Linux are scheduled by the Completely Fair Scheduler (CFS), one of the core components of Linux. (In Linux there are only subtle differences between threads and processes, so the rest of this article refers to both as processes.) At the core of CFS is a red-black tree that keeps the runnable processes ordered by how long they have run, which is the basis for selecting the next process to run. In addition, CFS supports priorities, group scheduling (based on the well-known cgroup), throttling, and other features that meet various advanced requirements.

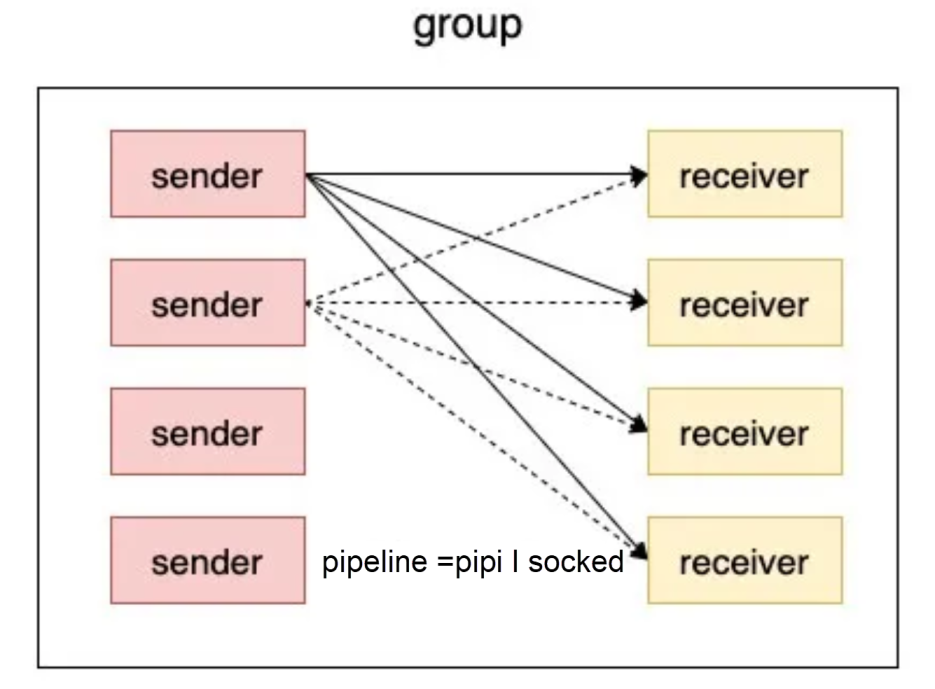
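The selection rule described above can be sketched in a few lines. This is a toy model, not kernel code: a min-heap stands in for the red-black tree, every task is assumed to have the same priority, and the names `Task`, `RunQueue`, and `simulate` are hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    # Tasks are ordered only by accumulated running time.
    vruntime: int
    name: str = field(compare=False)


class RunQueue:
    """Toy run queue: a min-heap stands in for the kernel's red-black tree."""

    def __init__(self):
        self._heap = []

    def enqueue(self, task):
        heapq.heappush(self._heap, task)

    def pick_next(self):
        # The task that has run the least is selected next.
        return heapq.heappop(self._heap)


def simulate(tasks, slice_ns, steps):
    """Run `steps` scheduling decisions and return the order of task names."""
    rq = RunQueue()
    for task in tasks:
        rq.enqueue(task)
    order = []
    for _ in range(steps):
        task = rq.pick_next()
        order.append(task.name)
        task.vruntime += slice_ns  # charge the task for the time it ran
        rq.enqueue(task)
    return order


if __name__ == "__main__":
    print(simulate([Task(0, "A"), Task(3, "B"), Task(7, "C")], slice_ns=10, steps=4))
```

Because each task is charged for the time it ran and re-inserted, the task that has run the least always moves to the front, which is what makes the scheduling fair over time.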

Hackbench is a stress testing tool for the Linux kernel scheduler. Its main job is to create a specified number of scheduling entity pairs (threads/processes), have them exchange data through sockets or pipes, and measure how long the whole run takes.
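To make the workload concrete, here is a heavily simplified sketch of the same idea: sender/receiver pairs exchange fixed-size messages over socket pairs, and the total elapsed time is measured. It is not Hackbench's implementation; the function name `run_pairs` and its parameters are hypothetical, and it uses one-to-one pairs of Python threads only.

```python
import socket
import threading
import time


def run_pairs(num_pairs, messages, size):
    """Start sender/receiver thread pairs; return (elapsed_seconds, bytes_received)."""
    received = [0] * num_pairs
    threads = []

    def sender(sock):
        payload = b"x" * size
        with sock:  # closing the socket signals end-of-stream to the receiver
            for _ in range(messages):
                sock.sendall(payload)

    def receiver(sock, idx):
        with sock:
            while True:
                chunk = sock.recv(65536)
                if not chunk:  # the sender closed its end
                    break
                received[idx] += len(chunk)

    for i in range(num_pairs):
        a, b = socket.socketpair()
        threads.append(threading.Thread(target=sender, args=(a,)))
        threads.append(threading.Thread(target=receiver, args=(b, i)))

    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start, sum(received)


if __name__ == "__main__":
    elapsed, total = run_pairs(num_pairs=4, messages=100, size=100)
    print(f"{total} bytes in {elapsed:.4f}s")
```

Each receiver blocks until data arrives, and each sender blocks when the socket buffer is full, so the runtime is dominated by how quickly the scheduler hands the CPU back and forth between the two sides.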

This article focuses on the following two parameters. They are important factors that affect the performance of Hackbench. The system administrator can use the sysctl command for settings.

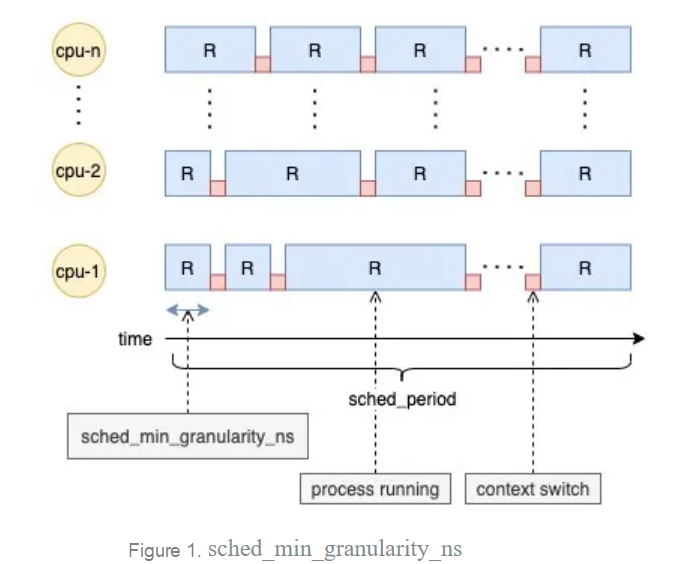
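Besides the sysctl command, a sysctl key can be read through its file under `/proc/sys`, where the dots in the key become path separators. The helper below is a minimal sketch with hypothetical names (`sysctl_path`, `read_sysctl_ns`). It returns `None` when the file is missing, since not every kernel version exposes these two keys this way.

```python
from pathlib import Path


def sysctl_path(key, root="/proc/sys"):
    """Map a sysctl key such as 'kernel.sched_min_granularity_ns' to its file."""
    return Path(root).joinpath(*key.split("."))


def read_sysctl_ns(key, root="/proc/sys"):
    """Return the integer value of a sysctl key, or None if it is unavailable."""
    try:
        return int(sysctl_path(key, root).read_text().strip())
    except (OSError, ValueError):
        # Key not exposed on this kernel, not readable, or not an integer.
        return None


if __name__ == "__main__":
    for key in ("kernel.sched_min_granularity_ns",
                "kernel.sched_wakeup_granularity_ns"):
        print(key, "=", read_sysctl_ns(key))
```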

Modifying kernel.sched_min_granularity_ns affects the length of the CFS scheduling period. Suppose kernel.sched_min_granularity_ns = m. When the system has a large number of runnable processes, the larger m is, the longer the CFS scheduling period.

As shown in figure 1, each process gets to run on the CPU for a different length of time. sched_min_granularity_ns guarantees a minimum running time for each process (for processes of equal priority). The larger sched_min_granularity_ns is, the longer each process can run at a time.
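The relationship between m, the scheduling period, and the per-process time slice can be sketched as follows. This is our simplified reading of the CFS period rule, not kernel code. The target latency `latency_ns` (the sched_latency_ns tunable) is not discussed in this article and the 24ms used below is only an assumed example value; all processes are assumed to have equal priority.

```python
def sched_period_ns(nr_running, latency_ns, min_granularity_ns):
    """Length of one scheduling period for `nr_running` runnable processes."""
    # Number of processes that fit into the target latency at m each (rounded up).
    nr_latency = -(-latency_ns // min_granularity_ns)
    if nr_running > nr_latency:
        # Too many processes: stretch the period so each still gets m.
        return nr_running * min_granularity_ns
    return latency_ns


def equal_share_slice_ns(nr_running, latency_ns, min_granularity_ns):
    """Time slice of one process when all processes have the same priority."""
    return sched_period_ns(nr_running, latency_ns, min_granularity_ns) // nr_running


if __name__ == "__main__":
    latency = 24_000_000  # assumed example value, in nanoseconds
    for m in (3_000_000, 10_000_000):
        period = sched_period_ns(16, latency, m)
        piece = equal_share_slice_ns(16, latency, m)
        print(f"m={m}ns period={period}ns slice={piece}ns")
```

With only a few runnable processes the period stays at the target latency. Once the process count exceeds what fits into it, the period grows in proportion to m, so each process keeps at least m of running time.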

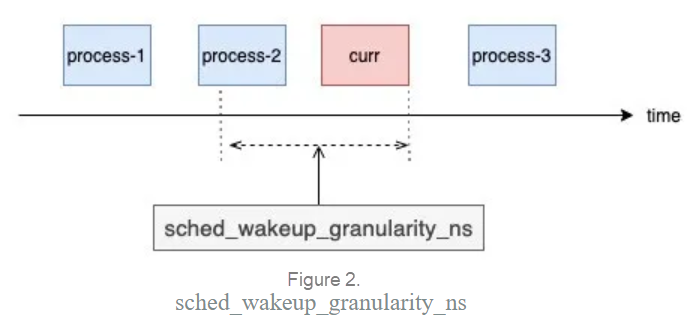

kernel.sched_wakeup_granularity_ns ensures that a newly woken process does not preempt the running process too frequently. The larger kernel.sched_wakeup_granularity_ns is, the lower the preemption frequency.

As shown in figure 2, process-{1,2,3} are about to be woken up. The running time of process-3 is greater than that of curr (the process running on the CPU), so it fails to preempt. The running time of process-2 is less than that of curr, but the difference between them is less than sched_wakeup_granularity_ns, so it also fails to preempt. Only process-1 can preempt curr. Therefore, the smaller sched_wakeup_granularity_ns is, the faster a process responds after being woken up (the shorter its waiting time).
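The three cases can be written as a single comparison. The sketch below assumes processes of equal priority and uses made-up running times in milliseconds; `should_preempt` is a hypothetical name, not a kernel function.

```python
def should_preempt(curr_runtime, woken_runtime, wakeup_granularity):
    """Return True if the woken process may preempt the running one.

    The woken process must be behind curr by more than the wakeup granularity.
    """
    return curr_runtime - woken_runtime > wakeup_granularity


if __name__ == "__main__":
    curr = 100  # running time of curr, in ms (made-up value)
    w = 15      # wakeup granularity, in ms
    for name, runtime in (("process-1", 80), ("process-2", 95), ("process-3", 110)):
        print(name, "preempts" if should_preempt(curr, runtime, w) else "waits")
```

Lowering w widens the set of woken processes that can preempt immediately, which shortens wakeup latency at the cost of more involuntary context switches for the running process.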

Hackbench has two working modes, process mode and thread mode. The main difference is whether processes or threads are created as the basis for testing. The following is an introduction to thread mode:

From the preceding analysis of the Hackbench model, we can see that threads/processes within the same group are mainly I/O-intensive, while threads/processes in different groups are mainly CPU-intensive.

Figure 3: Hackbench Working Mode

Voluntary Context Switching:

Therefore, there are many voluntary context switches in the system, but there are also involuntary context switches. The latter will be affected by the parameters we will introduce below.
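The two kinds of context switches can be observed per process: `/proc/<pid>/status` reports them in the `voluntary_ctxt_switches` and `nonvoluntary_ctxt_switches` fields. The helper names below are hypothetical, and the sketch only reads the counters without interpreting them.

```python
def parse_ctxt_switches(status_text):
    """Extract the context switch counters from the text of /proc/<pid>/status."""
    wanted = ("voluntary_ctxt_switches", "nonvoluntary_ctxt_switches")
    counters = {}
    for line in status_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key in wanted:
            counters[key] = int(value.strip())
    return counters


def read_ctxt_switches(pid="self"):
    """Read the counters of a process on a Linux system."""
    with open(f"/proc/{pid}/status") as f:
        return parse_ctxt_switches(f.read())


if __name__ == "__main__":
    print(read_ctxt_switches())
```

Sampling these counters for a Hackbench task before and after a run gives a rough view of how many of its switches were forced by the scheduler rather than caused by blocking on I/O.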

In the hackbench-socket test, Tuned modified the CFS parameters sched_min_granularity_ns and sched_wakeup_granularity_ns, which led to a performance difference. The details are as follows:

| Tuned state | sched_min_granularity_ns | sched_wakeup_granularity_ns | Performance |
| --- | --- | --- | --- |
| Tuned off | 2.25ms | 3ms | Poor |
| Tuned on | 10ms | 15ms | Good |

Next, we adjust these two scheduling parameters for further analysis.

Note: For brevity, m refers to kernel.sched_min_granularity_ns and w refers to kernel.sched_wakeup_granularity_ns.

To explore how the two parameters influence the scheduler, we fix one parameter at a time, investigate how the other affects performance, and use system knowledge to explain the principle behind what we observe.
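The experiment design amounts to a nested sweep. The sketch below shows only its structure: `measure` is a hypothetical callable that would apply the given m and w and return one benchmark result, and the values in the demo are placeholders, not experimental data.

```python
def sweep(fixed_name, fixed_values, varied_values, measure):
    """Fix one parameter ('m' or 'w'), vary the other, and collect results.

    Returns {fixed_value: [(varied_value, result), ...]}, one curve per fixed value.
    """
    if fixed_name not in ("m", "w"):
        raise ValueError("fixed_name must be 'm' or 'w'")
    results = {}
    for fixed in fixed_values:
        curve = []
        for varied in varied_values:
            m, w = (varied, fixed) if fixed_name == "w" else (fixed, varied)
            curve.append((varied, measure(m, w)))
        results[fixed] = curve
    return results


if __name__ == "__main__":
    # Placeholder measurement for demonstration; a real run would set the
    # two parameters and time the benchmark instead.
    fake_measure = lambda m, w: m + 10 * w
    print(sweep("w", [1, 2], [3, 4], fake_measure))
```

Each fixed value yields one curve, which matches how the figures in this section plot several curves against the varied parameter.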

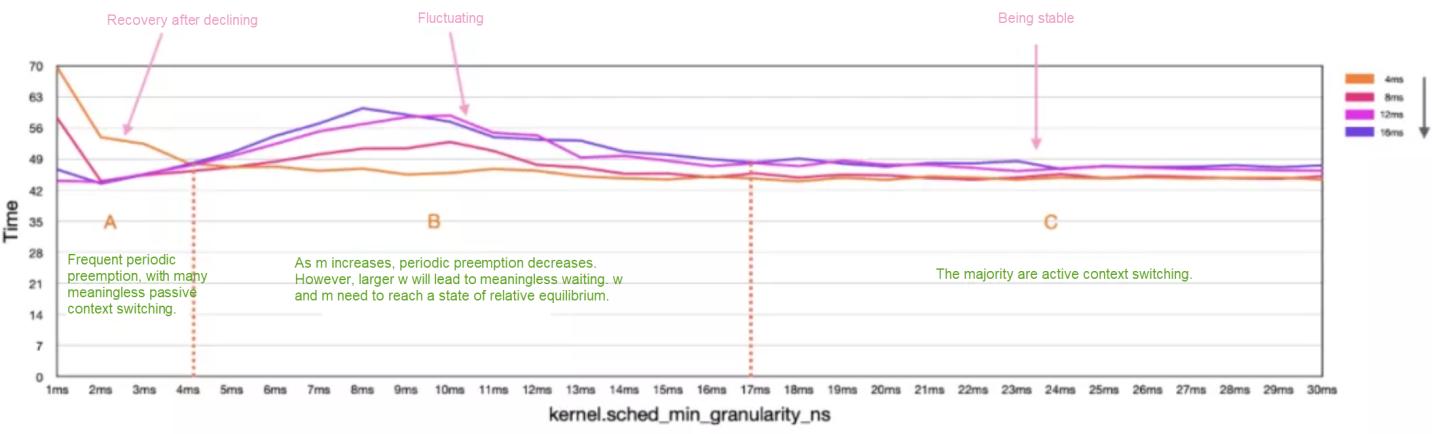

Figure 4: Fix w and adjust m

In the preceding figure, we fixed the parameter w and divided the range of m into three regions according to the trend of the curves: region A (1ms~4ms), region B (4ms~17ms), and region C (17ms~30ms). In region A, all four curves fall quickly. In region B, all four curves fluctuate widely. In region C, the curves tend to be stable.

From the background knowledge introduced earlier, we know that m affects how long a process runs at a time, which means it affects the involuntary context switching of the process.

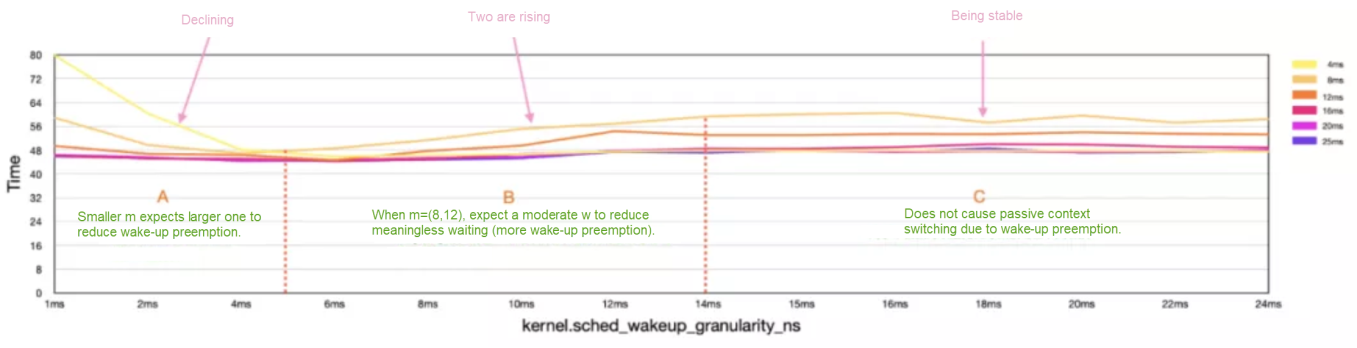

Figure 5: Fix m and adjust w

In the preceding figure, we fixed the parameter m and divided the range of w into three regions:

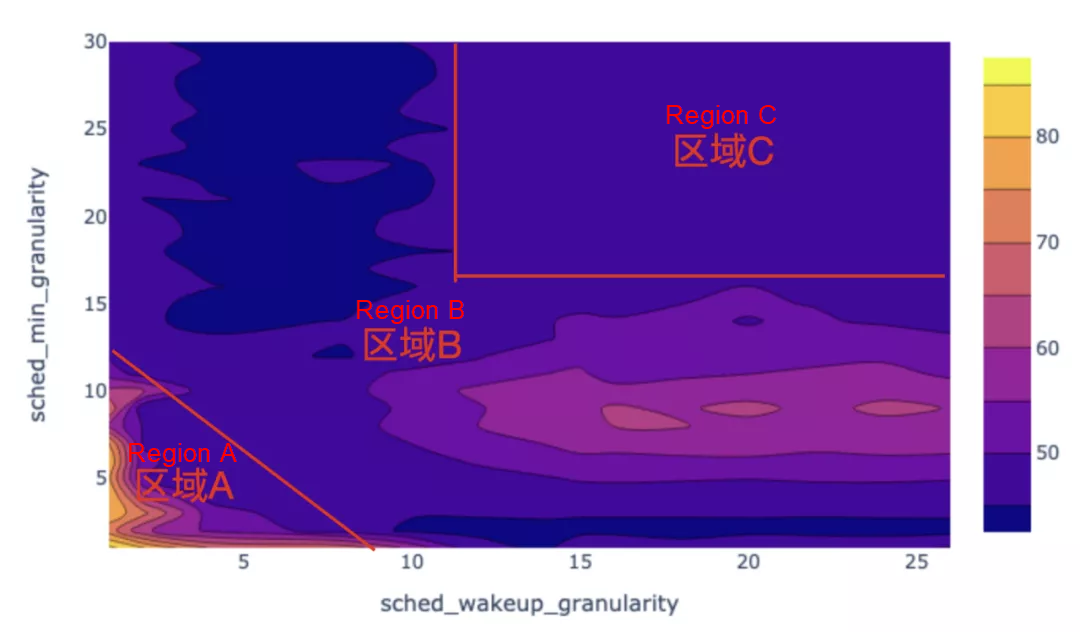

The following heat map of the experimental data shows the constraint relationship between m and w. Its three regions are different from those in Figures 4 and 5.

Figure 6: Overview
