Real-time monitoring of operational metrics and health status is an indispensable part of any microservice system. Metrics, as an important component of microservices, provide a comprehensive data foundation for service monitoring. Dubbo Metrics 2.0.1 was released recently. This article explains the origins of Dubbo Metrics and its 7 major improvements.

Dubbo Metrics (formerly Alibaba Metrics) is a basic class library for metric tracking that is widely used within Alibaba Group. It has two versions, Java and Node.js, and the Java version is currently the open-source one. Built in 2016, the internal version has gone through nearly three years of development and the test of the "Singles' Day" shopping carnival. It has become the de facto standard for microservice monitoring metrics within Alibaba Group, covering metrics at all layers from the system, JVM, and middleware to the application, and it has formed a unified set of specifications for naming rules, data formats, tracking methods, and computing rules.

The Dubbo Metrics code is derived from version 3.1.0 of Dropwizard Metrics. At that time, two main reasons led to the decision to fork it internally for customized development.

One reason is that the community was developing very slowly: the next version after 3.1.0 was not released until the third year. We were worried that the community could not respond to business needs in time. Another, more important reason is that 3.1.0 did not support multi-dimensional tags at that time and relied on traditional metric naming such as a.b.c. This means that Dropwizard Metrics could only measure in a single dimension. In actual business processes, many dimensions cannot be determined in advance. Moreover, in a large-scale distributed system, once statistics are collected, the data needs to be aggregated along various business dimensions, such as by data center or by group, which Dropwizard could not support at that time either. For these reasons, we decided to fork a branch for internal development.

Compared with Dropwizard Metrics, Dubbo Metrics has the following improvements:

As described above, multi-dimensional tags have a natural advantage over the traditional metric naming method in terms of dynamic tracking, data aggregation, and the like. For example, to compute the number of calls and the RT (response time) of a Dubbo service named DemoService, the metrics are usually named dubbo.provider.DemoService.qps and dubbo.provider.DemoService.rt under the traditional naming method. With only one service, this works well enough. However, if a microservice application provides multiple Dubbo services at the same time, it is difficult to aggregate the QPS and RT across all services. Metric data is inherently time series data, so it is naturally stored in a time series database. To compute the QPS of all Dubbo services, you need to find all metrics whose names match dubbo.provider.*.qps and then sum them up. This involves a fuzzy search, which is time-consuming in most databases. If the QPS and RT need to be computed in more detail, at the level of individual Dubbo methods, the implementation becomes even more complicated.

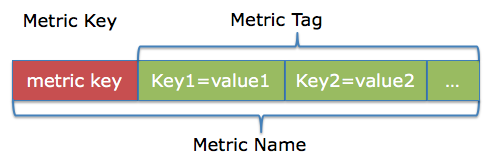

Considering that all metrics produced by all microservices of a company are summarized on the same platform for processing, the Metric Key should be named according to one set of rules to avoid naming conflicts. The format is appname.category[.subcategory]*.suffix.

In the preceding example, the same metric can be named dubbo.provider.service.qps{service="DemoService"}, where the name in front is fixed and remains unchanged, while the tags in braces can change dynamically and can be extended with more dimensions. For example, after adding a method dimension, the name becomes dubbo.provider.service.qps{service="DemoService",method="sayHello"}, and the IP and data center information of the machine can also be added. This kind of data storage is compatible with time series databases. Based on this data, aggregation, filtering, and other operations in any dimension can be easily implemented.
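The benefit of a fixed key plus tags can be illustrated with a small, self-contained sketch. The classes `TaggedPoint` and `TagAggregation` and the sample values are hypothetical and are not part of the Dubbo Metrics API. They only show that summing across services becomes an exact key match plus a group-by on a tag, rather than a wildcard search over metric names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical data point: a fixed metric key plus dynamic tags. */
final class TaggedPoint {
    final String key;
    final Map<String, String> tags;
    final long value;

    TaggedPoint(String key, Map<String, String> tags, long value) {
        this.key = key;
        this.tags = tags;
        this.value = value;
    }
}

final class TagAggregation {
    /** Sums every point with exactly this key; no wildcard scan over names is needed. */
    static long sumByKey(List<TaggedPoint> points, String key) {
        long sum = 0;
        for (TaggedPoint p : points) {
            if (p.key.equals(key)) {
                sum += p.value;
            }
        }
        return sum;
    }

    /** Groups the points with this key by one tag dimension and sums each group. */
    static Map<String, Long> sumByTag(List<TaggedPoint> points, String key, String tag) {
        Map<String, Long> result = new TreeMap<>();
        for (TaggedPoint p : points) {
            String tagValue = p.tags.get(tag);
            if (p.key.equals(key) && tagValue != null) {
                result.merge(tagValue, p.value, Long::sum);
            }
        }
        return result;
    }

    /** Made-up sample data for illustration only. */
    static List<TaggedPoint> sample() {
        String key = "dubbo.provider.service.qps";
        List<TaggedPoint> points = new ArrayList<>();
        points.add(new TaggedPoint(key, Map.of("service", "DemoService", "method", "sayHello"), 120));
        points.add(new TaggedPoint(key, Map.of("service", "DemoService", "method", "sayBye"), 30));
        points.add(new TaggedPoint(key, Map.of("service", "OrderService", "method", "create"), 50));
        return points;
    }
}
```

With the sample data, `sumByKey` yields the QPS across all services, and `sumByTag(..., "service")` yields one total per service. Switching the tag argument to `"method"` changes the aggregation dimension without touching the metric name.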

Note: At the end of December 2017, Dropwizard Metrics 4.0 began to support tags, and the implementation of tags in Dubbo Metrics is based on the implementation in Dropwizard. Micrometer, provided in Spring Boot 2.0, and Prometheus have also adopted the concept of tags.

The precise statistics function of Dubbo Metrics differs from that of Dropwizard and other open-source metric libraries. The difference is explained along two dimensions: the selection of the time window and the statistical method for throughput.

For throughput statistics (such as QPS), Dropwizard uses a sliding window combined with an exponentially weighted moving average (EWMA), and offers only 1-minute, 5-minute, and 15-minute time windows.
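For readers unfamiliar with EWMA, the following is a generic sketch of an exponentially weighted moving average over a rate. The class `EwmaRate` and its parameters are illustrative assumptions, and the code is not taken from Dropwizard, whose implementation differs in details such as thread safety and time units.

```java
/** Generic EWMA of an event rate, updated once per fixed tick interval. Not thread-safe. */
final class EwmaRate {
    private final double alpha;           // smoothing factor derived from window and tick interval
    private final double intervalSeconds; // how often tick() is expected to be called
    private double rate;                  // smoothed events per second
    private boolean initialized;
    private long uncounted;               // events seen since the last tick

    EwmaRate(double windowSeconds, double intervalSeconds) {
        this.intervalSeconds = intervalSeconds;
        this.alpha = 1.0 - Math.exp(-intervalSeconds / windowSeconds);
    }

    /** Records n events. */
    void mark(long n) {
        uncounted += n;
    }

    /** Folds the events of the last interval into the moving average. */
    void tick() {
        double instantRate = uncounted / intervalSeconds;
        uncounted = 0;
        if (initialized) {
            // Recent intervals weigh more; older ones decay exponentially.
            rate += alpha * (instantRate - rate);
        } else {
            rate = instantRate;
            initialized = true;
        }
    }

    double rate() {
        return rate;
    }
}
```

With a 60-second window and a 5-second tick, marking 50 events and ticking once gives a rate of 10 events per second, and each further tick without events multiplies the rate by exp(-5/60). The reported value therefore never equals an exact count for any particular window, which is the property the rest of this section contrasts with precise statistics.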

In the case of data statistics, we need to define the statistical time window in advance. Generally, two methods can be used to establish a time window, namely a fixed window and a sliding window.

The fixed time window takes absolute time as the reference frame and collects statistics within an absolute time window. The window stays the same no matter when the statistical data is accessed. The sliding window, by contrast, uses the current time as the reference frame: a window of the specified size is counted back from the current time. The window changes as time passes and data arrives.

The fixed window has the following advantages: First, the window does not need to be moved, and the implementation is relatively simple. Second, different machines are all based on the same time window, so you only need to aggregate clusters according to the same time window, which is relatively easy. The disadvantage is that if the window size is large, the real-time performance will be affected and the statistical information of the current window cannot be obtained immediately. For example, if the window is 1 minute, you must wait until the end of the current 1 minute to obtain the statistics for this 1 minute.

The advantage of the sliding window is better real-time performance: the statistics for a window counted back from the current time are available at any moment. The disadvantage is that different machines collect data at different times, so data from different machines must be aggregated through so-called down-sampling. That is, an aggregate function is applied to the data collected within a fixed time window. For example, suppose the cluster has 5 machines and down-sampling is carried out by average value at a frequency of 15 seconds. If metric data points are collected at 00:01, 00:03, 00:06, 00:09, and 00:11 within the time window of 00:00~00:15, the weighted average of these 5 points is taken as the down-sampled average at 00:00.
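The alignment of samples to a fixed window and the down-sampling step can be sketched as follows. `DownSampler` is a hypothetical helper, and it uses a plain arithmetic mean as the aggregate function, which is a simplifying assumption. The text mentions a weighted average without specifying the weights.

```java
import java.util.Map;
import java.util.TreeMap;

final class DownSampler {
    /** Aligns a timestamp to the start of the fixed window that contains it. */
    static long windowStart(long timestampSeconds, long windowSeconds) {
        return timestampSeconds - (timestampSeconds % windowSeconds);
    }

    /** Averages all samples that fall into the same fixed window. */
    static Map<Long, Double> average(long[] timestamps, double[] values, long windowSeconds) {
        Map<Long, double[]> sums = new TreeMap<>(); // window start -> {sum, count}
        for (int i = 0; i < timestamps.length; i++) {
            long start = windowStart(timestamps[i], windowSeconds);
            double[] acc = sums.computeIfAbsent(start, k -> new double[2]);
            acc[0] += values[i];
            acc[1] += 1;
        }
        Map<Long, Double> result = new TreeMap<>();
        for (Map.Entry<Long, double[]> e : sums.entrySet()) {
            result.put(e.getKey(), e.getValue()[0] / e.getValue()[1]);
        }
        return result;
    }
}
```

For samples taken at seconds 1, 3, 6, 9, and 11 with a 15-second window, all five points land in the window starting at 0 and are reduced to a single value reported at 00:00.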

In practice, however, sliding windows still encounter the following problems:

To solve these problems, Dubbo Metrics provides the BucketCounter metric, which can accurately compute the data at minute-level and second-level, and the time window can be accurate to 1 second. The results after cluster aggregation can be ensured to be accurate, provided that the time on each machine is synchronized. It also supports statistics based on sliding windows.

After years of practice, we have gradually discovered that when users observe and monitor, they first focus on the cluster data, and then the stand-alone data. However, the throughput rate on a single machine does not really make much sense. But what does this mean?

For example, suppose you have a microservice running on two machines, and a method receives 5 calls within a certain period of time. The response times of these calls are [5,17] on Machine 1 and [6,8,8] on Machine 2 (assuming the unit is milliseconds). If you want to compute the average RT of the cluster, you can first compute the average RT on each machine and then average those values. According to this method, the average RT of Machine 1 is 11 ms, and the average RT of Machine 2 is 7.33 ms. After the two are averaged again, the average RT of the cluster is 9.17 ms. But is this the actual result?

If the data on Machine 1 and Machine 2 is pooled and computed together, the actual average RT is (5+17+6+8+8)/5 = 8.8 ms, which is clearly different from the result above. With the loss of precision in floating-point computation and the growth of the cluster, this error becomes more apparent. Therefore, we conclude that the throughput rate on a single machine is meaningless for computing the cluster throughput, and is only meaningful in the dimension of a single machine.
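The arithmetic above can be reproduced with a short sketch. `ClusterAverage` is a hypothetical helper written for this example. It compares the average of per-machine averages with the average obtained from pooled totals and counts.

```java
final class ClusterAverage {
    /** Mean of the response times recorded on one machine. */
    static double mean(long[] values) {
        long sum = 0;
        for (long v : values) {
            sum += v;
        }
        return (double) sum / values.length;
    }

    /** Averages the per-machine averages; ignores how many calls each machine served. */
    static double averageOfAverages(long[][] perMachine) {
        double sum = 0;
        for (long[] machine : perMachine) {
            sum += mean(machine);
        }
        return sum / perMachine.length;
    }

    /** Sums total RT and total count across machines first, then divides once. */
    static double pooledAverage(long[][] perMachine) {
        long sum = 0;
        long count = 0;
        for (long[] machine : perMachine) {
            for (long v : machine) {
                sum += v;
            }
            count += machine.length;
        }
        return (double) sum / count;
    }
}
```

For the input `{{5, 17}, {6, 8, 8}}`, `averageOfAverages` gives about 9.17 ms while `pooledAverage` gives 8.8 ms. The two agree only when every machine serves the same number of calls, which is why reporting raw totals and counts per machine lets the cluster side aggregate precisely.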

The EWMA provided by Dropwizard is also an average, but it also takes into account the time factor. The closer the data is to the current time, the higher the weight of the data will be. In case the time is long enough, for example, 15 minutes, this method makes sense. However, it has been observed that it is not significant to consider the weight of the time dimension in a short time window, such as 1s and 5s. Therefore, during the internal renovation process, Dubbo Metrics has been improved as follows:

In the scenario of a big promotion, improving statistical performance is an important topic for Dubbo Metrics. In Alibaba business scenarios, the QPS of a statistical interface may reach tens of thousands, for example, the scenario of accessing the cache. Therefore, in this case, the statistical logic of metrics may become a hot spot. We have made some targeted optimizations:

In high concurrency scenarios, java.util.concurrent.atomic.LongAdder performs best in data accumulation, so almost all operations are ultimately attributed to operations on this class.

When data expires, it needs to be cleaned up. In the previous implementation, LongAdder#reset was used to clean up the object for reuse. However, testing shows that LongAdder#reset is a CPU-consuming operation. Therefore, we trade memory for CPU: when cleaning up, a new LongAdder object is created instead of calling LongAdder#reset.

Some metric implementations track several statistical dimensions, so multiple LongAdder objects need to be updated at the same time. For example, the meter implementation in Dropwizard Metrics computes the moving averages for 1 minute, 5 minutes, and 15 minutes, and 3 LongAdder objects need to be updated each time. In fact, these 3 update operations are redundant, and only one update is needed.

In most scenarios, RT is measured in milliseconds. When the measured RT is less than 1 ms, the RT passed to Metrics is 0. However, the JDK's native LongAdder does not optimize the add(0) operation. Even if the input is 0, the full logic still runs, which essentially calls sun.misc.Unsafe.UNSAFE.compareAndSwapLong. If Metrics skips the add operation on the counter when RT is 0, one update is saved. This is very helpful for middleware with high concurrency, such as a distributed cache. In an internal application test, we found that in 30% of the cases, the RT for accessing the distributed cache is 0 ms. This optimization eliminates a large number of meaningless update operations.
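Two of the LongAdder optimizations described above, replacing the adder instead of resetting it and skipping add(0), can be sketched together. `RtAccumulator` is a hypothetical class and not the Dubbo Metrics implementation. For brevity it accepts that an update racing with `rotate()` may still land in the expired adder.

```java
import java.util.concurrent.atomic.LongAdder;

final class RtAccumulator {
    // volatile so that writers see the fresh adder after a rotation
    private volatile LongAdder totalRt = new LongAdder();

    /** Accumulates one response time in milliseconds. */
    void record(long rtMillis) {
        if (rtMillis != 0) {
            // Adding 0 cannot change the sum, so the atomic update is skipped entirely.
            totalRt.add(rtMillis);
        }
    }

    long sum() {
        return totalRt.sum();
    }

    /** Ends the current period: swaps in a new adder rather than calling reset(). */
    long rotate() {
        LongAdder expired = totalRt;
        totalRt = new LongAdder(); // costs an allocation, saves the CPU work of reset()
        return expired.sum();
    }
}
```

Whether allocating is cheaper than resetting depends on the JVM and the contention level, so the trade-off is worth measuring in the target workload.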

It is only necessary to update a Long object to compute the number of calls and the time at the same time, which is approaching the theoretical limit.

After observation, it is found that the success rate for some metrics of middleware is usually very high, which is 100% under normal circumstances. To compute the success rate, the number of successes and the total number of calls need to be computed. The two are almost the same in this case, which leads to waste as an addition operation is done in vain. If, in turn, only the number of failures is computed, then the counter is only updated if a failure occurs, thus greatly reducing the addition operations.

In fact, if we perform orthogonal splitting for each case, such as success and failure, then the total number can be computed by summing up the number in various cases. This further ensures that the count is updated only once per call.
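A minimal sketch of this orthogonal splitting, using a hypothetical `CallStats` class, keeps one counter per outcome and derives the total on read, so each call performs exactly one counter update.

```java
import java.util.concurrent.atomic.LongAdder;

final class CallStats {
    private final LongAdder success = new LongAdder();
    private final LongAdder failure = new LongAdder();

    /** One update per call: only the counter for the actual outcome is touched. */
    void record(boolean ok) {
        if (ok) {
            success.increment();
        } else {
            failure.increment();
        }
    }

    /** The total is never stored; it is the sum of the disjoint cases. */
    long total() {
        return success.sum() + failure.sum();
    }

    double successRate() {
        long total = total();
        return total == 0 ? 1.0 : (double) success.sum() / total;
    }
}
```

Returning 1.0 for an empty period is an arbitrary choice made for this sketch.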

But do not forget that, in addition to the number of calls, the RT of the method execution also needs to be computed. Can it be further reduced?

The answer is yes! Assuming that RT is computed in milliseconds, we know that 1 Long object has 64 bits (in fact, the Long object in Java is signed, so theoretically only 63 bits are available), while a statistical cycle of metrics only computes 60s of data at most, so these 64 bits cannot be used up anyway. Can we split these 63 bits and compute the count and RT at the same time? In fact, this is feasible.

We use the high 25 bits of the 63 bits of a Long object to represent the total count in a statistical cycle, and the low 38 bits to represent the total RT.

    ------------------------------------------
    | 1 bit    | 25 bits     | 38 bits  |
    | sign bit | total count | total RT |
    ------------------------------------------

When a call comes in, assuming that the RT passed in is n, then the number accumulated each time is not 1 or n, but

    1 * 2^38 + n

This design mainly has the following considerations:

What if it does overflow?

Based on the previous analysis, overflow is almost impossible, so this problem has not been solved for the time being and will be addressed later.
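The packing scheme described above can be sketched as follows. `PackedCountAndRt` is a hypothetical class that follows the 25-bit count and 38-bit RT layout from the text. Like the approach described, it does not guard against overflow, and it assumes non-negative RT values.

```java
import java.util.concurrent.atomic.LongAdder;

final class PackedCountAndRt {
    static final int RT_BITS = 38;                   // low 38 bits hold the total RT
    static final long RT_MASK = (1L << RT_BITS) - 1; // mask that selects the RT part
    static final long ONE_CALL = 1L << RT_BITS;      // adds 1 to the count in the high bits

    private final LongAdder packed = new LongAdder();

    /** A single add updates the call count and the total RT at the same time. */
    void record(long rtMillis) {
        packed.add(ONE_CALL + rtMillis);
    }

    /** Reads the packed value once so that count and RT come from the same snapshot. */
    long snapshot() {
        return packed.sum();
    }

    static long countOf(long snapshot) {
        return snapshot >>> RT_BITS;
    }

    static long totalRtOf(long snapshot) {
        return snapshot & RT_MASK;
    }
}
```

With this layout, one statistical cycle can hold up to 2^25 - 1 = 33,554,431 calls and up to 2^38 - 1 = 274,877,906,943 ms of accumulated RT before the fields interfere with each other.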

In the previous code, BucketCounter needs to ensure that only one thread updates the Bucket under multi-thread concurrent access, so it uses an object lock. In the latest version, BucketCounter has been reimplemented, removing the lock from the original implementation and operating only with AtomicReference and CAS. In this way, local testing has found that the performance increased by about 15%.
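The idea of rotating buckets with AtomicReference and CAS instead of a lock can be sketched as below. `CasBucketCounter` is a hypothetical, heavily simplified class and not the actual BucketCounter code. It keeps only the current bucket, whereas a real implementation would retain a series of recent buckets.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.atomic.LongAdder;

final class CasBucketCounter {
    /** One fixed time window and the count accumulated in it. */
    private static final class Bucket {
        final long windowStart;
        final LongAdder count = new LongAdder();

        Bucket(long windowStart) {
            this.windowStart = windowStart;
        }
    }

    private final long windowMillis;
    private final AtomicReference<Bucket> current =
            new AtomicReference<>(new Bucket(Long.MIN_VALUE));

    CasBucketCounter(long windowMillis) {
        this.windowMillis = windowMillis;
    }

    /** Counts one event at the given (non-negative) time without taking a lock. */
    void increment(long nowMillis) {
        long start = nowMillis - (nowMillis % windowMillis);
        while (true) {
            Bucket bucket = current.get();
            if (bucket.windowStart >= start) {
                // Bucket is current. A late event is folded into the newer bucket (simplification).
                bucket.count.increment();
                return;
            }
            Bucket fresh = new Bucket(start);
            if (current.compareAndSet(bucket, fresh)) {
                // This thread won the race and installed the bucket for the new window.
                fresh.count.increment();
                return;
            }
            // Another thread installed a bucket first; loop and use that one.
        }
    }

    /** Returns the count of the window containing nowMillis, or 0 if nothing was recorded in it. */
    long count(long nowMillis) {
        long start = nowMillis - (nowMillis % windowMillis);
        Bucket bucket = current.get();
        return bucket.windowStart == start ? bucket.count.sum() : 0;
    }
}
```

Only the thread whose `compareAndSet` succeeds installs the bucket for a new window. The losers simply retry and increment the winner's bucket, so no thread ever blocks.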

Dubbo Metrics fully supports metrics at all layers, from the operating system, JVM, and middleware to the application. It unifies metric naming so that metrics can be used out of the box, and the collection of individual metrics can be turned on and off at any time through configuration. Currently supported metrics mainly include:

It supports Linux, Windows, and Mac, including CPU, Load, Disk, Net Traffic, and TCP.

It supports class loading, GC count and time, file handles, Young/Old area occupation, thread status, off-heap memory, and compiling time. Some metrics support automatic difference computation.

Tomcat: number of requests, number of failures, processing time, number of bytes sent and received, and number of active threads in thread pool;

Druid: number of SQL executions, number of errors, execution time, and number of rows affected;

Nginx: accept, active connections, number of read and write requests, number of queues, QPS of the request, and average RT.

For more detailed metrics, please refer to here. Support for Dubbo, NACOS, Sentinel, and Fescar will be added later.

Dubbo Metrics provides the JAX-RS based REST interface exposure that makes it easy to query various internal metrics. It can start the HTTP server to provide services independently (by default, a simple implementation based on Jersey + sun Http server is provided), or embed the existing HTTP Server to expose metrics. For specific interfaces, please see:

https://github.com/dubbo/metrics/wiki/query-from-http

If all the data is stored in memory, data loss may occur due to pull failure or application jitter. To solve this problem, Metrics has introduced a module that flushes data to disk in one of two modes: the log mode and the binary mode.

The way to use it is simple, like getting the Logger for a logging framework.

    Counter hello = MetricManager.getCounter("test", MetricName.build("test.my.counter"));
    hello.inc();

Supported metrics include:

Dubbo Metrics @Github:

https://github.com/dubbo/metrics

Wiki:

https://github.com/dubbo/metrics/wiki (continuously updated)

Wang Tao (GitHub ID @ralf0131) is an Apache Dubbo PPMC Member, an Apache Tomcat PMC Member, and a technical expert at Alibaba.

Zi Guan (GitHub ID @min) is an Apache Dubbo Committer and a senior development engineer at Alibaba, who is responsible for the development of the Dubbo Admin and Dubbo Metrics projects, and for community maintenance.