By System O&M SIG

Containerization is widely regarded as a best practice for building enterprise IT architectures. Compared with traditional IDC deployment, cloud-native containerized deployment has become the industry standard because of its efficient operations and maintenance (O&M) and its cost control.

However, containerization also presents challenges. Besides hiding the IDC infrastructure and cloud resources from the user, it makes the container engine layer opaque, and existing cloud-native observability systems do not cover that layer.

Many users running production Java workloads on Kubernetes clusters with more than 1,000 nodes have encountered out-of-memory (OOM) problems caused by memory "black holes". Others are deterred from containerization altogether by CGroup issues that appear at the container engine layer in large-scale clusters.

The O&M SIG (Special Interest Group) of the OpenAnolis community works with the Alibaba Cloud Container Service for Kubernetes (ACK) team, the leading unit of the OpenAnolis community. By providing Kubernetes clusters with millions of cores to customers across industries, they have accumulated extensive experience in containerization migration. The SIG also works with Alinux to enhance the kernel layer of the operating system, eliminating the "black boxes" at the container engine layer so that users of cloud-native container services are spared these containerization-related problems.

Kubernetes uses the memory workingset to monitor and manage the memory usage of containers. When the memory usage of a container exceeds its limit, or when the node is under memory pressure, Kubernetes decides whether to evict or terminate the container based on the workingset.

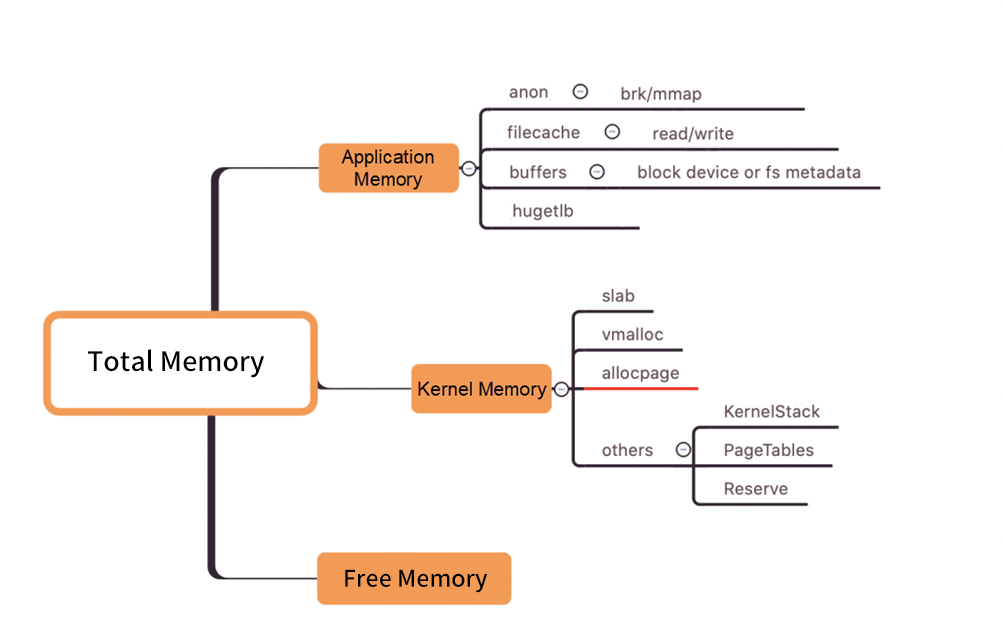

• Memory workingset calculation formula:

Workingset = inactive_anon + active_anon + active_file. In this formula, inactive_anon and active_anon together make up the total anonymous memory of the program, and active_file is the size of the active file cache.

• Anonymous memory

Anonymous memory refers to memory that is not associated with a file, such as the program's heap, stack, and data segment. It consists of the following components:

Anonymous mapping: Memory mapping created by a program through the mmap system call without any associated files.

Heap: Dynamic memory that a program allocates with malloc/new, which is typically backed by the brk system call.

Stack: Memory used to store function parameters and local variables.

Data segment: Memory used to store initialized and uninitialized global and static variables.

• Active file cache

When a program reads and writes files, a file cache is generated. The active file cache refers to the cache that has been recently used multiple times and is not easily reclaimed by the system.

(Figure/ Kernel Level Memory Distribution)
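The workingset formula above can be sketched in a few lines of Python. This is only an illustration: it assumes the counters are available as `key value` lines in the style of a cgroup `memory.stat` file, and the sample numbers and the function names (`parse_memory_stat`, `workingset_bytes`, `dominant_component`) are made up for the example rather than taken from Kubernetes or SysOM.

```python
# Hypothetical sample in the "key value" layout of a cgroup memory.stat file.
# The byte counts are invented for illustration.
SAMPLE_MEMORY_STAT = """\
inactive_anon 104857600
active_anon 209715200
inactive_file 524288000
active_file 734003200
"""

WORKINGSET_KEYS = ("inactive_anon", "active_anon", "active_file")


def parse_memory_stat(text):
    """Turn 'key value' lines into a dict of integer byte counts."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def workingset_bytes(stats):
    """Workingset = inactive_anon + active_anon + active_file."""
    return sum(stats[key] for key in WORKINGSET_KEYS)


def dominant_component(stats):
    """Return the workingset component that holds the most memory."""
    return max(WORKINGSET_KEYS, key=lambda key: stats[key])


stats = parse_memory_stat(SAMPLE_MEMORY_STAT)
print(workingset_bytes(stats) // (1024 * 1024), "MiB")
print(dominant_component(stats))
```

Note that inactive_file is parsed but deliberately left out of the sum, which is why a large but inactive file cache does not inflate the workingset while an active one does.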

The following section explains how to troubleshoot the issue of high pod workingset using SysOM monitoring.

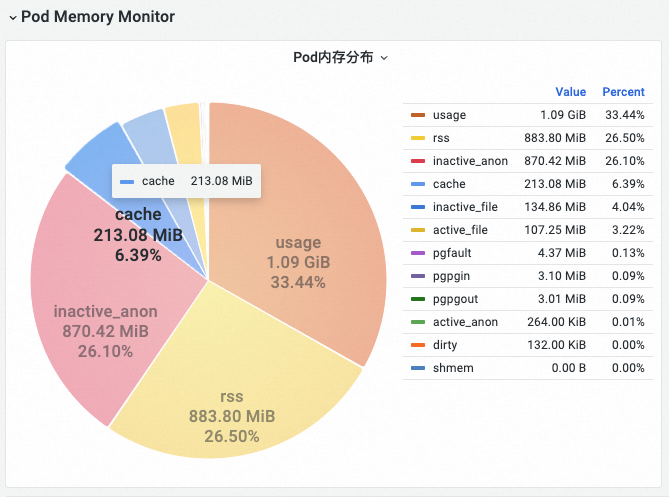

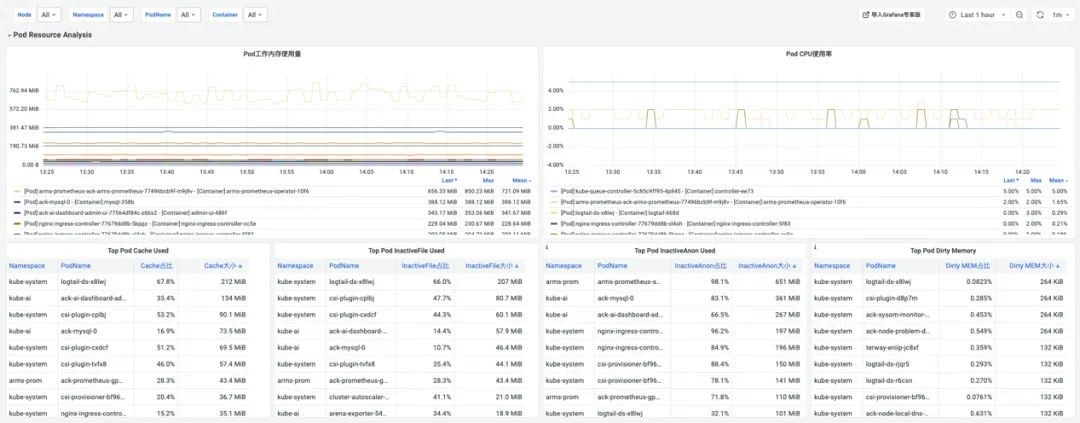

Based on the workingset calculation formula (workingset = inactive_anon + active_anon + active_file), check the workingset monitoring data on the PodMonitor dashboard and identify the type of memory that accounts for the highest proportion. In this case, the active file cache accounts for the largest share.

(Figure/ SysOM monitoring provides pod-level monitoring of memory compositions at the kernel layer of the operating system)

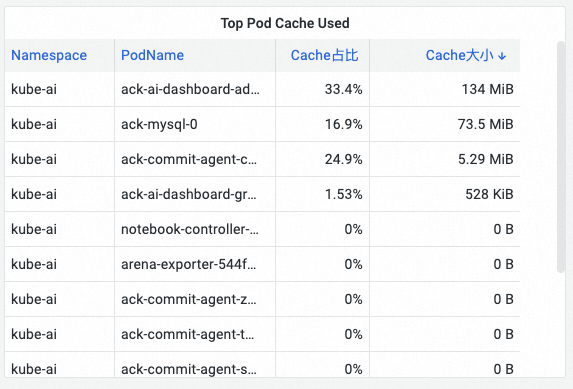

During the problem detection step, SysOM utilizes top analysis to quickly identify the pods in the cluster that consume the most active file cache memory.

By monitoring Pod Cache (cache memory), InactiveFile (inactive file memory usage), InactiveAnon (inactive anonymous memory usage), Dirty Memory (system dirty memory usage), and other memory compositions, you can detect common pod memory black hole issues.
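The idea behind the top analysis can be sketched as a simple ranking over per-pod memory compositions. This is not how SysOM is implemented; the pod names, the numbers, and the helper `top_pods_by` are hypothetical and only show the kind of comparison the dashboard performs.

```python
# Hypothetical per-pod memory compositions in bytes (invented values).
POD_STATS = {
    "pod-a": {"active_file": 900_000_000, "inactive_file": 50_000_000, "active_anon": 100_000_000},
    "pod-b": {"active_file": 20_000_000, "inactive_file": 700_000_000, "active_anon": 300_000_000},
    "pod-c": {"active_file": 400_000_000, "inactive_file": 10_000_000, "active_anon": 800_000_000},
}


def top_pods_by(metric, pod_stats, n=3):
    """Return the n pods with the largest value of `metric`, largest first."""
    ranked = sorted(
        pod_stats.items(),
        key=lambda item: item[1].get(metric, 0),
        reverse=True,
    )
    return [(name, stats.get(metric, 0)) for name, stats in ranked[:n]]


for name, value in top_pods_by("active_file", POD_STATS, n=2):
    print(name, value)
```

Ranking by a different key, such as inactive_file, uses the same function, which mirrors how the dashboard lets you switch between memory compositions.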

(Figure/ Find the pod with the largest active file cache memory consumption in the cluster through top analysis)

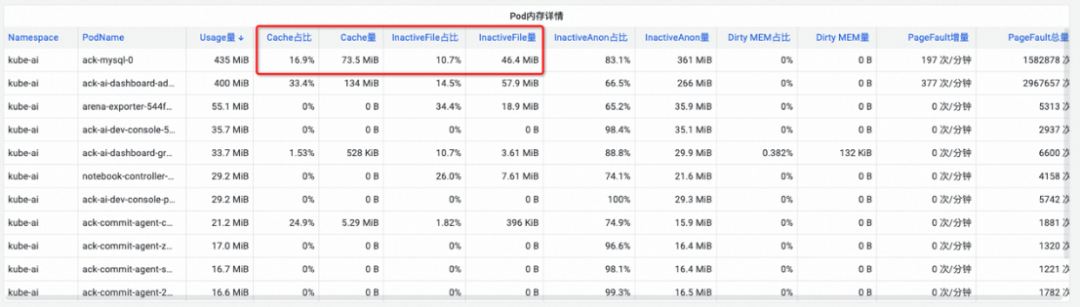

(Figure/ SysOM provides detailed monitoring statistics on each composition of memory at the pod level)

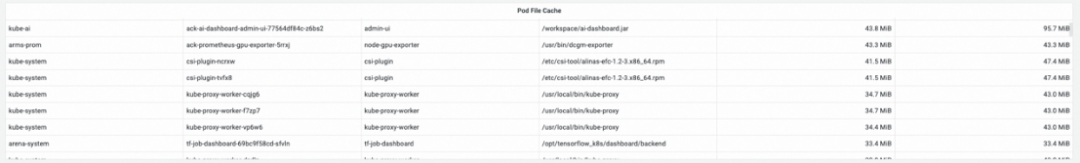

Check the file cache monitoring on the PodMonitor dashboard. It is observed that the ack-ai-dashboard-admin-ui-77564df84c-z6bs2 container generates a large memory cache when performing I/O reads and writes on the /workspace/ai-dashboard.jar file.

When a pod holds a large memory cache, its workingset grows accordingly, and this cached memory becomes a "black hole" in the pod's workingset that is difficult to locate. The common result is OOM and eviction caused by pod memory black holes, which degrades the service running in the affected pod.

SysOM is an all-in-one operating system O&M platform developed by the OpenAnolis community O&M SIG. It enables users to perform complex operating system administration tasks such as host management, operating system migration, downtime analysis, system monitoring, exception diagnosis, log auditing, and security control on a unified platform.

With SysOM, ACK has the unique capability to monitor containers at the OS kernel level. SysOM can be used to observe, alert on, and diagnose memory black holes, storage black holes, and other containerization migration issues that the community and other cloud service providers do not address effectively.

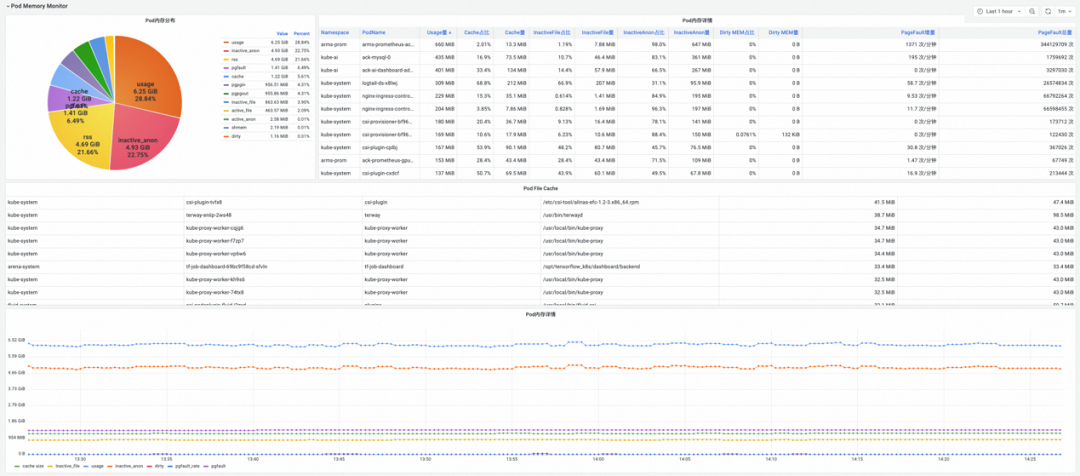

SysOM provides a monitoring dashboard at the Pod and Node levels in the OS kernel layer to monitor real-time system metrics such as memory, network, and storage.

(Figure/ SysOM Pod-level monitoring dashboard)

(Figure/ SysOM Node-level monitoring dashboard)

For more information about SysOM features and metrics, see the following documentation: https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/sysom-kernel-level-container-monitoring

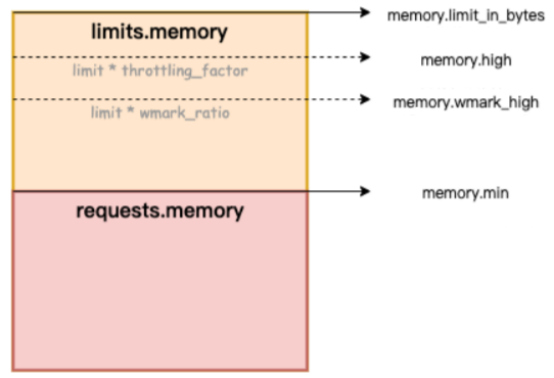

How can the memory black hole problem be fixed? Alibaba Cloud Container Service uses its fine-grained scheduling feature and relies on ack-koordinator, a component based on the open-source Koordinator project, to provide memory QoS (Quality of Service) capability for containers. This improves the memory performance of applications at runtime while ensuring that memory resources are shared fairly. This article introduces the container memory QoS feature. For more information, refer to Container memory QoS [1].

The following memory constraints apply to containers:

• Container memory limit: When the memory of a container (including the page cache) approaches its upper limit, container-level memory reclamation is triggered. This process affects the performance of memory allocation and release for applications within the container. If an allocation still cannot be satisfied, a container OOM is triggered.

• Node memory limit: If container memory is overcommitted (Memory Limit > Request) and the overall memory of the host machine is insufficient, node-level global memory reclamation is triggered. This process has a significant impact on performance and may lead to abnormal behavior in extreme cases. If reclamation does not free enough memory, a container is selected for OOM Kill.

To address the preceding typical container memory issues, ack-koordinator provides the following enhanced features:

• Background memory reclamation watermark: When the memory usage of a pod approaches the limit, a portion of the memory is asynchronously reclaimed in the background. This helps mitigate the performance degradation caused by direct memory reclamation.

• Container memory lock reclamation/throttling watermark: Fair memory reclamation is implemented between pods. When the memory resources of the whole machine are insufficient, memory is preferentially reclaimed from pods that use more than they requested (Memory Usage > Request), so that individual pods do not degrade the quality of memory resources for the entire machine.

• Differentiated memory reclamation: In BestEffort memory overcommitment scenarios, the memory quality of Guaranteed and Burstable pods is guaranteed first.

For more information about the kernel features enabled by ACK container memory QoS, refer to the Overview of Alibaba Cloud Linux kernel features and interfaces [2].

(Figure/ ack-koordinator provides memory QoS (Quality of Service) capability for containers)
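The fair reclamation rule described above (reclaim first from pods whose usage exceeds their request) can be illustrated with a short sketch. It models only the selection policy, not the kernel mechanism that ack-koordinator enables; the `PodMemory` type, the helper names, and all values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PodMemory:
    """Hypothetical per-pod memory figures in MiB."""
    name: str
    request: int
    limit: int
    usage: int


def is_overcommitted(pod):
    """A pod is overcommitted when Memory Limit > Request."""
    return pod.limit > pod.request


def reclaim_order(pods):
    """Pods with Memory Usage > Request, largest excess first."""
    over_request = [pod for pod in pods if pod.usage > pod.request]
    return sorted(over_request, key=lambda pod: pod.usage - pod.request, reverse=True)


pods = [
    PodMemory("guaranteed", request=1024, limit=1024, usage=900),
    PodMemory("burstable-1", request=512, limit=2048, usage=1500),
    PodMemory("burstable-2", request=512, limit=1024, usage=700),
]

print([pod.name for pod in reclaim_order(pods)])
```

In this sample, the pod that stays within its request is never a reclamation candidate, which is the fairness property the feature aims for.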

After detecting a memory black hole problem in containers, you can use the fine-grained scheduling feature of ACK to select memory-sensitive pods and enable the memory QoS feature for them. This closes the loop from detection to fix.
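As a rough sketch of what enabling memory QoS for a selected pod might look like, the snippet below adds an annotation to a pod manifest held as a Python dict. The annotation key `koordinator.sh/memoryQOS` and the `{"policy": "auto"}` value are assumptions based on the ack-koordinator documentation and should be verified against Container memory QoS [1]; the pod name, image, and the helper `with_memory_qos` are hypothetical.

```python
import copy
import json

# Assumed annotation key; verify against the ack-koordinator documentation [1].
MEMORY_QOS_ANNOTATION = "koordinator.sh/memoryQOS"


def with_memory_qos(pod_manifest, policy="auto"):
    """Return a copy of the manifest with the memory QoS annotation set."""
    manifest = copy.deepcopy(pod_manifest)
    annotations = manifest.setdefault("metadata", {}).setdefault("annotations", {})
    # The annotation value is a JSON string, for example '{"policy": "auto"}'.
    annotations[MEMORY_QOS_ANNOTATION] = json.dumps({"policy": policy})
    return manifest


# Hypothetical memory-sensitive pod.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "memory-sensitive-app"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "example/app:latest",
                "resources": {
                    "requests": {"memory": "1Gi"},
                    "limits": {"memory": "2Gi"},
                },
            }
        ]
    },
}

print(json.dumps(with_memory_qos(pod)["metadata"], indent=2))
```

The helper returns a modified copy and leaves the input untouched, so the same base manifest can be reused for pods that should not have memory QoS enabled.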
