A replay of the Apsara Conference 2021 is available online.

"Even though cloud computing has emerged, our work is still centering on servers. However, this will not last long because cloud applications are developing in the direction of serverless."

This is how Ken Fromm described the future of cloud computing in his 2012 article, Why the Future of Software and Apps Is Serverless.

The inherent elasticity and fault tolerance of Serverless match enterprises' dual demands for elasticity and stability in their online businesses, making Serverless a new direction in the evolution of enterprise cloud architectures.

Today, more and more medium and large enterprises are splitting the execution units that need flexible scale-out away from their traditional backends and running them on a Serverless architecture. Startup teams that prioritize R&D and delivery efficiency have made their businesses fully serverless. As the Serverless First concept gains popularity, more cloud workloads are running in a serverless manner.

The numbers reflect the technology's growing market maturity.

According to a Datadog report, half of Datadog's AWS customers use Lambda, as do 80% of its AWS container customers. These users invoke functions 3.5 times more often per day than they did two years ago, with run time reaching 900 hours per day. In China, according to the CNCF Cloud Native Survey China 2020, 31% of enterprises use Serverless technology in production, 41% are evaluating it, and 12% plan to adopt it in the next 12 months.

On October 21, at the Cloud-Native Summit of the Apsara Conference, Alibaba Cloud Serverless announced a series of technological breakthroughs aimed at the difficulties and pain points the industry faces. Major enterprises also shared their large-scale Serverless practices. For example, NetEase Cloud Music used Serverless technology to build an offline audio and video processing platform, and Pumpkin Film completed a fully serverless transformation in seven days, building an elastic system for business monitoring and release on top of it.

The essence of Serverless is to give business-layer development focus and freedom by hiding the underlying computing resources. However, the more abstract the upper layers become, the more complex the underlying implementation is for cloud vendors. Function Compute (FC) splits services down to the granularity of individual functions, which inevitably brings new challenges to development, O&M, and delivery. For example, how can developers perform end-cloud joint debugging of functions? How can functions be made observable and debuggable? How can the cold start of GB-level images be optimized? Issues that never arose at the granularity of services have become obstacles to running enterprises' core production businesses on Serverless at scale.

Since Alibaba Cloud FC was named a Leader by Forrester last year, the FC team has continued to tackle these industry-wide technical challenges, and it announced seven major technological innovations and breakthroughs at this year's Apsara Conference.

Serverless Devs, the Serverless developer platform, has officially released version 2.0, one year after it was open-sourced. Compared with version 1.0, Serverless Devs 2.0 improves performance and user experience across the board. It also introduces Serverless Desktop, the industry's first desktop client for Serverless development, which is carefully designed, visually polished, and practical, and offers stronger enterprise-grade service capabilities.

Serverless Devs is the industry's first cloud-native full-lifecycle management platform that supports mainstream Serverless services and frameworks. It aims to give developers end-to-end support for building Serverless applications. Serverless Devs 2.0 provides a multi-mode debugging scheme that connects online and offline environments: end-cloud joint debugging, which connects a local environment to the production environment; local debugging, for debugging during development; and online/remote debugging, for debugging during cloud O&M. The new version also adds multi-environment deployment and supports all-in-one deployment of more than 30 frameworks, including Django, Express, Koa, Egg, Flask, Zblog, and WordPress.

An instance is the smallest atomic unit of function resources that can be scheduled, similar to a Pod in a container environment. Because Serverless abstracts away heterogeneous underlying resources, the resulting black-box problem is a major pain point in large-scale Serverless adoption. Similar products in the industry expose neither the concept of an instance nor instance-level metrics, such as CPU and memory usage, in their observability features. Yet observability is the developer's eyes; without it, high availability is impossible.

FC now offers instance-level observability. This feature monitors function instances and collects their performance data in real time, then visualizes the data, giving developers an end-to-end path for monitoring and troubleshooting function instances. Instance-level metrics cover core indicators such as CPU and memory usage, instance network connections, and the number of requests within an instance, which makes the black box far less opaque. FC also supports observability and debugging by allowing users to log in to some instances.
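To make the value of instance-level metrics concrete, here is a minimal Python sketch. It is not FC's API: the `InstanceSample` schema, the thresholds, and the helper names are hypothetical. It shows how a single saturated instance can hide behind a healthy function-wide average but stands out once metrics are grouped by instance.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class InstanceSample:
    """One metrics sample for a single function instance (hypothetical schema)."""
    instance_id: str
    cpu_percent: float       # CPU usage of the instance, 0-100
    memory_mb: float         # memory currently in use
    memory_limit_mb: float   # memory configured for the instance
    in_flight_requests: int  # concurrent requests being handled


def summarize(samples: List[InstanceSample]) -> Dict[str, Dict[str, float]]:
    """Group samples by instance and compute per-instance averages and peaks."""
    by_instance: Dict[str, List[InstanceSample]] = {}
    for s in samples:
        by_instance.setdefault(s.instance_id, []).append(s)
    return {
        iid: {
            "cpu_avg": mean(s.cpu_percent for s in group),
            "mem_util_avg": mean(s.memory_mb / s.memory_limit_mb for s in group),
            "peak_requests": max(s.in_flight_requests for s in group),
        }
        for iid, group in by_instance.items()
    }


def find_hot_instances(samples: List[InstanceSample],
                       cpu_threshold: float = 80.0,
                       mem_threshold: float = 0.9) -> List[str]:
    """Return IDs of instances whose average CPU or memory utilization is too high."""
    return sorted(
        iid
        for iid, m in summarize(samples).items()
        if m["cpu_avg"] > cpu_threshold or m["mem_util_avg"] > mem_threshold
    )


samples = [
    InstanceSample("i-a", 95.0, 900, 1024, 4),
    InstanceSample("i-a", 90.0, 950, 1024, 5),
    InstanceSample("i-b", 20.0, 200, 1024, 1),
]
print(find_hot_instances(samples))  # ['i-a']
print(round(mean(s.cpu_percent for s in samples), 1))  # 68.3
```

In the sample data, the function-wide CPU average is about 68%, below the 80% threshold, while instance `i-a` averages 92.5%.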

The cold start of FC is affected by multiple factors: the size of the code package or image, container startup, language runtime initialization, process initialization, and execution logic. Reducing it requires optimization by both users and cloud service providers. Cloud service providers assign the most appropriate number of instances to each function and optimize cold start on the platform side. However, some online businesses are very sensitive to latency, and cloud service providers cannot take over the deeper business-level optimizations that only users can make, such as streamlining code or dependencies, choosing the programming language, optimizing process initialization, and improving algorithms.

Similar products in the industry generally adopt a policy of reserving a fixed number of instances: users configure a concurrency value N, and once N instances are allocated, they do not scale unless adjusted manually. This only removes cold start latency for some business peaks while substantially increasing O&M and resource costs. It is also poorly suited to businesses whose page view (PV) peaks are irregular, such as red envelope promotions.

FC is therefore the first to give users scheduling control over part of their instance resources. Users can reserve an appropriate number of function instances through multi-dimensional reservation policies: a fixed quantity, scheduled scaling, scaling based on resource usage, and hybrid scaling. These policies cover different scenarios: businesses with relatively stable traffic curves (AI/ML), businesses with specific PV peak periods (gaming and interactive entertainment, online education, and new retail), unpredictable traffic bursts (e-commerce promotions and advertising), and mixed traffic (Web backends and data processing). This reduces the impact of cold start on latency-sensitive businesses and balances elasticity with performance.
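The decision logic behind these four reservation policies can be sketched in Python as follows. This is a hypothetical model, not FC's configuration API: the class names, the hour-based windows, the target-tracking formula for usage-based scaling, and the choice to combine scheduled and usage-based targets with `max` in the hybrid policy are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Tuple


@dataclass
class FixedPolicy:
    """Always keep the same number of reserved instances."""
    count: int

    def target(self, now: datetime, utilization_pct: int, current: int) -> int:
        return self.count


@dataclass
class ScheduledPolicy:
    """Reserve a different count during known peak windows (hours are [start, end))."""
    windows: List[Tuple[int, int, int]]  # (start_hour, end_hour, count)
    default: int

    def target(self, now: datetime, utilization_pct: int, current: int) -> int:
        for start, end, count in self.windows:
            if start <= now.hour < end:
                return count
        return self.default


@dataclass
class UtilizationPolicy:
    """Target tracking: size the pool so utilization approaches target_pct."""
    target_pct: int
    min_count: int
    max_count: int

    def target(self, now: datetime, utilization_pct: int, current: int) -> int:
        # ceil(current * utilization / target) using integer arithmetic
        desired = (current * utilization_pct + self.target_pct - 1) // self.target_pct
        return max(self.min_count, min(self.max_count, desired))


@dataclass
class HybridPolicy:
    """Scheduled floor for known peaks, with utilization scaling on top for bursts."""
    scheduled: ScheduledPolicy
    utilization: UtilizationPolicy

    def target(self, now: datetime, utilization_pct: int, current: int) -> int:
        return max(self.scheduled.target(now, utilization_pct, current),
                   self.utilization.target(now, utilization_pct, current))


evening_peak = ScheduledPolicy(windows=[(19, 23, 50)], default=5)
by_usage = UtilizationPolicy(target_pct=60, min_count=2, max_count=100)
hybrid = HybridPolicy(evening_peak, by_usage)

print(hybrid.target(datetime(2021, 10, 21, 20, 0), utilization_pct=90, current=60))  # 90
print(hybrid.target(datetime(2021, 10, 21, 3, 0), utilization_pct=20, current=10))   # 5
```

Taking the maximum lets the scheduled window guarantee a floor for known peaks while usage-based scaling absorbs bursts above it; a fixed policy, by contrast, ignores both time and load.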

FC provides two types of instances: elastic instances and performance instances. Elastic instances range from 128 MB to 3 GB. Their isolation granularity is the finest in the entire cloud ecosystem, which allows resource utilization to reach 100% in all scenarios. Performance instances come in 4 GB, 8 GB, 16 GB, and 32 GB specifications. With their higher resource ceiling, they mainly suit compute-intensive scenarios such as audio and video processing, AI modeling, and enterprise Java applications.
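As a rough illustration of how the two instance types divide the memory range, the following Python sketch picks the smallest listed specification that fits a function's memory requirement. The helper is hypothetical, and treating elastic sizes as freely adjustable between 128 MB and 3 GB is an assumption; the platform may accept only certain increments.

```python
from typing import Tuple

ELASTIC_MIN_MB = 128
ELASTIC_MAX_MB = 3 * 1024
# Performance instance sizes listed in the text: 4, 8, 16, and 32 GB
PERFORMANCE_SPECS_MB = [4 * 1024, 8 * 1024, 16 * 1024, 32 * 1024]


def choose_instance(required_mb: int) -> Tuple[str, int]:
    """Pick an instance type and memory size for a function's memory need.

    Assumption: elastic sizes are used as requested (clamped to the 128 MB floor);
    the real platform may only accept certain increments.
    """
    if required_mb <= 0:
        raise ValueError("required_mb must be positive")
    if required_mb <= ELASTIC_MAX_MB:
        return "elastic", max(required_mb, ELASTIC_MIN_MB)
    for spec in PERFORMANCE_SPECS_MB:
        if required_mb <= spec:
            return "performance", spec
    raise ValueError("exceeds the largest performance instance (32 GB)")


print(choose_instance(512))   # ('elastic', 512)
print(choose_instance(5000))  # ('performance', 8192)
```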

With the accelerated development of domain-specific hardware, GPU manufacturers have launched dedicated ASICs for video encoding and decoding. For example, NVIDIA has integrated a dedicated video encoding circuit since the Kepler architecture and a dedicated video decoding circuit since the Fermi architecture.

FC has officially launched GPU instances based on the Turing architecture, allowing Serverless developers to offload video encoding and decoding workloads to GPU hardware for acceleration. This significantly improves the efficiency of video production and transcoding.
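A transcoding function that offloads work to the GPU might build an FFmpeg command like the one below. This Python sketch assumes the function's runtime includes an FFmpeg build compiled with CUDA and NVENC support, which the text does not state; the helper names and the default bitrate are hypothetical. The `-hwaccel cuda`, `-hwaccel_output_format cuda`, and `-c:v h264_nvenc` options keep decoding and encoding on the GPU.

```python
import shlex
import subprocess
from typing import List


def build_gpu_transcode_cmd(src: str, dst: str, bitrate: str = "5M") -> List[str]:
    """Build an ffmpeg command that decodes on the GPU and encodes with NVENC.

    Assumes the function's runtime ships an ffmpeg build with CUDA and NVENC support.
    """
    return [
        "ffmpeg", "-y",                     # overwrite the output file without prompting
        "-hwaccel", "cuda",                 # decode on the GPU
        "-hwaccel_output_format", "cuda",   # keep decoded frames in GPU memory
        "-i", src,
        "-c:v", "h264_nvenc",               # encode H.264 on the NVENC circuit
        "-b:v", bitrate,
        "-c:a", "copy",                     # pass the audio stream through unchanged
        dst,
    ]


def transcode(src: str, dst: str) -> None:
    """Run the command; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(build_gpu_transcode_cmd(src, dst), check=True)


print(shlex.join(build_gpu_transcode_cmd("input.mp4", "output.mp4")))
```

The sketch only prints the command; calling `transcode()` runs it and requires the FFmpeg binary and a GPU to be present.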

Serverless does not mean that software applications run without servers. It means that users do not need to care about the status, resources (such as CPU, memory, disk, and network), or number of the underlying servers while their applications run. Cloud service providers dynamically provide the computing resources that applications need. However, users still care about how well providers can deliver resources, and whether they can absorb the access fluctuations caused by resource shortages during traffic bursts.

FC relies on the powerful cloud infrastructure of Alibaba Cloud. Drawing on the resource pools of X-Dragon and ECS, FC can deliver up to 20,000 instances per minute during business peaks, strengthening its delivery capability for customers' core businesses.

When users need to access resources in their VPCs, such as RDS or NAS, the VPC networks must be connected. FaaS products in the industry generally achieve this by dynamically attaching an elastic network interface (ENI): they create an ENI in the user's VPC and attach it to the machine that executes the function. This lets users reach backend cloud services easily. However, attaching an ENI generally takes more than 10 seconds, which is a large performance overhead for latency-sensitive businesses.

FC decouples computing from networking by turning the VPC gateway into a standalone service, so the scaling of compute nodes is no longer limited by how fast ENIs can be attached. In this design, the gateway service handles ENI attachment and the high availability and auto scaling of gateway nodes, while FC focuses on scheduling compute nodes. As a result, the cold start time of a function connected to a VPC is reduced to 200 milliseconds.
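The latency difference between the two designs can be captured in a toy Python model. The 10-second ENI attach time and the roughly 200 ms cold start come from the text; treating the 200 ms as the entire non-network cold start cost, and the class names, are assumptions. The point of the model is where ENI attachment happens: on the request path in the coupled design, and once, ahead of time, in the gateway design.

```python
ENI_ATTACH_S = 10.0       # the text: attaching an ENI "generally takes more than 10 seconds"
BASE_COLD_START_S = 0.2   # assumption: non-network cold start cost, matching the ~200 ms figure


class CoupledVpcAccess:
    """Coupled design: each new compute node attaches its own ENI before serving."""

    def cold_start_latency(self, vpc_id: str) -> float:
        return BASE_COLD_START_S + ENI_ATTACH_S


class GatewayVpcAccess:
    """Decoupled design: a gateway service owns the ENIs; compute nodes route through it."""

    def __init__(self) -> None:
        self.attached_vpcs = set()

    def register_vpc(self, vpc_id: str) -> None:
        # Done by the gateway service when the VPC is configured, off the request path.
        self.attached_vpcs.add(vpc_id)

    def cold_start_latency(self, vpc_id: str) -> float:
        if vpc_id not in self.attached_vpcs:
            raise LookupError(f"gateway has no ENI in {vpc_id}")
        # New compute nodes reuse the gateway's ENI, so no attach time is paid here.
        return BASE_COLD_START_S


coupled = CoupledVpcAccess()
gateway = GatewayVpcAccess()
gateway.register_vpc("vpc-demo")
print(coupled.cold_start_latency("vpc-demo"), gateway.cold_start_latency("vpc-demo"))
```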

FC introduced deployment of functions as container images in August 2020. AWS Lambda announced the same capability at re:Invent in December 2020, and some companies in China announced in June 2021 that their FaaS services would support containers. Cold start has always been a pain point for FaaS, and container images, which can be dozens of times larger than compressed code packages, make cold start latency even worse.

To address this, FC developed Serverless Caching. It co-optimizes software and hardware and builds a data-driven, intelligent, and efficient cache system tailored to the characteristics of different storage services, improving the experience of using Custom Container. FC has brought image acceleration to a high level. We selected four typical public FC use cases (see https://github.com/awesome-fc) and adapted them to several large cloud service providers in and outside China for a side-by-side comparison. Each image was invoked every three hours, and the test was repeated several times.

The experiments showed that FC reduced the cold start time of GB-level images from minutes to seconds.
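A benchmark in the spirit of this comparison could be scripted as follows. This Python sketch is not the harness the FC team used. It assumes that a long idle gap between calls (three hours in the text) lets the platform reclaim idle instances so that each call is a cold start, and it measures latency from the client side, so network time is included. `invoke` is any zero-argument callable, for example one that wraps `urllib.request.urlopen` around a function endpoint (a hypothetical URL).

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_cold_starts(invoke: Callable[[], object], rounds: int, interval_s: float,
                        sleep: Callable[[float], None] = time.sleep) -> List[float]:
    """Time one invocation per round, idling `interval_s` seconds between rounds.

    Assumption: the gap is long enough for the platform to reclaim idle instances,
    so each timed call includes a cold start. Latency is measured client-side.
    """
    latencies = []
    for i in range(rounds):
        if i > 0:
            sleep(interval_s)
        start = time.perf_counter()
        invoke()
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies: List[float]) -> Dict[str, float]:
    """Report the median and worst case, which are less noisy than a single run."""
    return {
        "rounds": len(latencies),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
    }
```

Passing `sleep` as a parameter keeps the harness testable without waiting hours between calls.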

Implementing FaaS at scale in enterprises' core production businesses depends on solving these technical challenges. Serverless PaaS services, such as Serverless Application Engine (SAE), focus more on breakthroughs in product usability and scenario coverage.

Unlike Serverless FaaS services, SAE is application-centric and provides an application-oriented UI and API. It preserves the user experience of servers and classic PaaS, so applications remain visible and accessible. This avoids rewriting applications for FaaS, along with the weak observability and debugging experience that comes with it, and makes zero-code-change transformation and smooth migration of online applications possible.

SAE pushes the boundary of Serverless beyond frontend full-stack development and mini programs: backend microservices, SaaS services, and IoT applications can also be built on Serverless. This makes SAE naturally suited to large-scale adoption in enterprise core businesses. SAE also supports deploying source code packages in multiple languages, such as PHP and Python, as well as multiple runtime environments and user-defined extensions, making Serverless general-purpose.

Traditional PaaS is criticized for being complex to use, difficult to migrate to, and troublesome to extend. At the underlying layer, SAE replaces virtualization with container technology, using container isolation to shorten startup time, improve resource utilization, and containerize applications quickly. At the application management layer, SAE retains the familiar management paradigm of microservice frameworks such as Spring Cloud and Dubbo, so users do not need heavyweight, complex Kubernetes to manage their applications.

In addition, because the underlying computing resources are pooled, SAE's inherently serverless nature lets users configure exactly the CPU and memory they need instead of purchasing and holding separate servers. Combined with microservice governance capabilities proven over many years of the Double 11 Shopping Festival, SAE brings containers, Serverless, and PaaS together, integrating advanced technology, optimized resource utilization, and an efficient development and O&M experience. This makes adopting new technologies easier and smoother.

SAE covers almost all scenarios of cloud migration for applications. It is the best choice for application cloud migration and a model of All on Serverless.

Technological leadership alone cannot move an industry forward. Serverless also brings immediate changes to enterprise customers and developers, and these two forces together drive market maturity. The technology keeps evolving, and customers feed experience back as they adopt it. This is the healthy path for any new technology to keep developing.

A full-stack engineer at a startup says, "I no longer have to deal with cold, tedious servers, and I have said goodbye to spending more time on servers than on writing code. I can spend more time on the business and use the code I know best to keep the application running stably."

The daily work of a frontend-focused full-stack engineer may include mastering at least one frontend language such as JavaScript (along with tools such as Puppeteer), writing APIs, fixing backend bugs, and spending a lot of energy on server O&M. The larger the company's business volume, the more time the engineer spends on O&M.

FC lowers the barrier to server maintenance for developers who work in frontend languages such as JavaScript. As long as you can write JavaScript, you can maintain Node.js services without learning DevOps.

A Java engineer working on algorithms says, "I no longer worry about the many server specifications, complicated procurement, and difficult O&M that come with the growing number and complexity of algorithms. Instead, I use unlimited resource pools, fast cold starts, and reserved instances to gain elasticity and freedom."

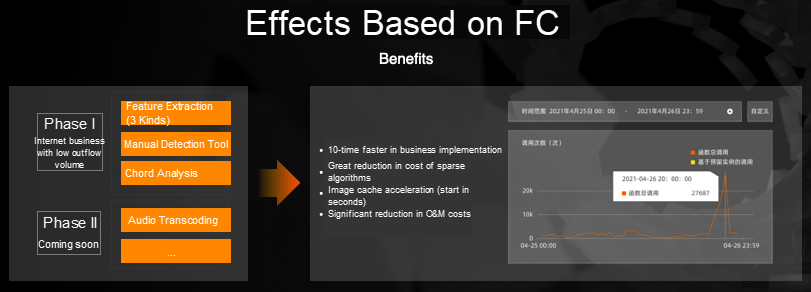

NetEase Cloud Music runs more than 60 audio and video algorithms across more than 100 business scenarios on over 1,000 ECS instances and physical machines of different specifications. Although there are many ways to simplify connecting internal business scenarios to algorithms, more and more algorithms must process both existing data and incremental data. Business scenarios carry traffic at different scales, and different scenarios may reuse the same algorithm, leaving engineers less time for the business itself.

After NetEase Cloud Music upgraded its offline audio and video processing platform on FC and applied it to music listening, karaoke, music recognition, and other business scenarios, it sped up business rollout tenfold and significantly reduced both the computing cost of sparsely used algorithms and O&M costs.

A lead game programmer says, "I no longer worry about the round-robin mechanism of SLB, which cannot sense the actual load of pods and leads to uneven load. The FC scheduling system places each request sensibly, meeting the high CPU consumption and high elasticity demands of combat verification."

Combat verification is a necessary business scenario for many of Lilith's combat games. It checks whether battles uploaded by players involve cheating. Combat verification generally requires frame-by-frame calculation, so CPU consumption is very high. If verifying a battle between two players takes N milliseconds, a 5v5 battle takes 5N milliseconds, so the workload demands high elasticity. In addition, in the container architecture, the SLB in front of the Pods uses round-robin and cannot sense their actual load, resulting in uneven load, infinite loops, and stability risks.

The FC scheduling system helps Lilith place each request sensibly, and for the infinite loop problem, it provides a mechanism that kills processes that time out. FC pushes the complexity of scheduling down into the infrastructure, and after the provider's deep optimization, cold start latency has dropped substantially: scheduling, obtaining computing resources, and starting the service take roughly one second in total.
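The difference between round-robin dispatch and load-aware scheduling can be sketched in Python with the N and 5N verification costs described above. The greedy least-loaded dispatcher is a stand-in technique, not FC's actual scheduling algorithm, and it assumes each job's cost is known at dispatch time; N = 100 ms is a hypothetical value.

```python
import heapq
import itertools
from typing import List


def round_robin(jobs_ms: List[int], workers: int) -> List[int]:
    """Assign jobs in turn regardless of load, like a round-robin SLB.
    Returns the total busy time per worker."""
    load = [0] * workers
    for job, w in zip(jobs_ms, itertools.cycle(range(workers))):
        load[w] += job
    return load


def least_loaded(jobs_ms: List[int], workers: int) -> List[int]:
    """Send each job to the worker with the least accumulated work so far."""
    heap = [(0, w) for w in range(workers)]  # (busy_ms, worker_id), already a valid heap
    load = [0] * workers
    for job in jobs_ms:
        busy, w = heapq.heappop(heap)
        load[w] = busy + job
        heapq.heappush(heap, (load[w], w))
    return load


N = 100  # hypothetical: ms to verify a 1v1 battle; a 5v5 battle takes 5N
jobs = [5 * N, N] * 3
print(round_robin(jobs, 2))   # [1500, 300]
print(least_loaded(jobs, 2))  # [1100, 700]
```

With two workers, round-robin sends every 5v5 job to the same worker and keeps it busy for 1,500 ms while the other finishes after 300 ms; least-loaded dispatch caps the busiest worker at 1,100 ms.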

An O&M engineer in the interactive entertainment industry says, "I no longer worry about the slow, error-prone releases, hard-to-guarantee environment consistency, cumbersome permission assignment, and troublesome rollbacks of the traditional server model. The service governance capabilities of SAE improve development and O&M efficiency by 70%, while elastic resource pools shorten business scale-out time by 70%."

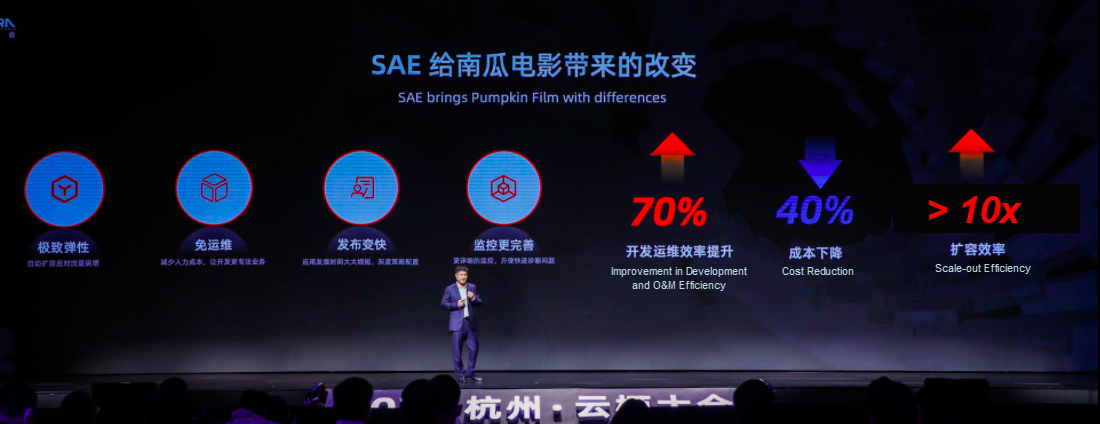

After a popular movie was released on Pumpkin Film, daily new user registrations exceeded 800,000, which brought down the API gateway and threatened the stability of all backend services. The team started an emergency scale-out: purchasing ECS instances, uploading scripts to the servers, running them, and scaling out the database. The whole process took four hours. Because hit releases routinely bring such bursts, the incident pushed Pumpkin Film to upgrade its technology.

With the help of SAE, Pumpkin Film made its services fully serverless in seven days and adopted Kubernetes with no learning barrier to cope with traffic bursts from popular movies. Compared with the traditional server O&M model, development and O&M efficiency improved by 70%, costs dropped by 40%, and scale-out efficiency improved more than tenfold.

In 2009, Berkeley made six predictions about cloud computing, which was then on the rise, including pay-as-you-go billing for services and higher utilization of physical hardware. Over the past 12 years, all of those predictions have come true. In 2019, Berkeley predicted that Serverless computing would become the default computing paradigm of the cloud era and replace the Serverful (traditional cloud) computing model.

Serverless is now in the third year since Berkeley's prediction, just over a quarter of the way along. Over these three years, the industry has shared a bright vision of the cloud's future. Cloud service providers have advocated Serverless First and invested heavily. Enterprise users have used Serverless to optimize their existing architectures while objectively confronting the barriers to running core businesses on Serverless at scale. Today, technological innovations and breakthroughs are resolving these common industry pain points.

Special thanks to Moyang, Daixin, Xiaojiang, and Liu Yu for their contributions to this article.
