Highlights from Apsara Conference 2025

At Apsara Conference 2025, Alibaba Cloud unveiled a series of innovations that address the toughest challenges enterprises face in scaling AI. These announcements were not about technology for its own sake. They were about solving real problems (latency, inefficiency, complexity, and cost) and delivering measurable customer benefits.

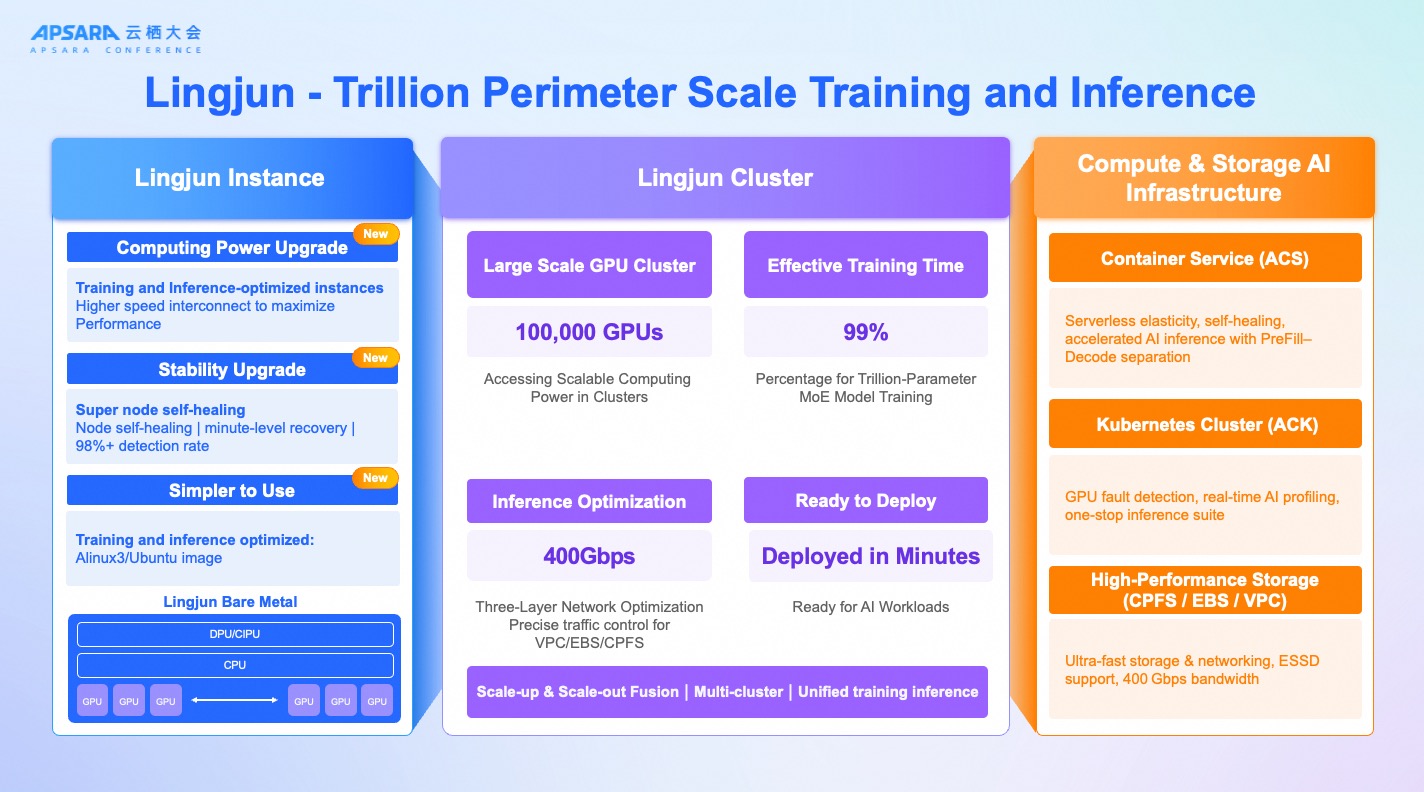

Supercomputing for Trillion‑Parameter Models

As models grow to the trillion‑parameter scale, enterprises face spiraling costs and wasted GPU cycles. Lingjun, our AI supercomputing platform, was designed to solve this. It brings together 100,000 GPUs and optimized interconnects, sustaining 99 percent effective training time and 400 gigabits per second of inference bandwidth. Serverless elasticity and minute‑level recovery let workloads scale smoothly and recover quickly from failures. For customers, Lingjun translates into shorter training times, higher utilization of expensive GPU resources, and the ability to deploy massive models that were previously out of reach.
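To put the effective training time figure in perspective, here is a rough back-of-the-envelope sketch. It assumes that "effective training time" means the fraction of wall-clock GPU time spent on useful training. The 90 percent baseline and the 24-hour window are illustrative assumptions, not figures from the announcement.

```python
def lost_gpu_hours(gpu_count: int, wall_clock_hours: float, effective_fraction: float) -> float:
    """GPU-hours not spent on useful training at a given effective-time fraction."""
    if not 0.0 <= effective_fraction <= 1.0:
        raise ValueError("effective_fraction must be between 0 and 1")
    return gpu_count * wall_clock_hours * (1.0 - effective_fraction)


GPUS = 100_000   # cluster size quoted in the text
HOURS = 24.0     # one day of wall-clock time (illustrative assumption)

at_99 = lost_gpu_hours(GPUS, HOURS, 0.99)  # effective training time quoted in the text
at_90 = lost_gpu_hours(GPUS, HOURS, 0.90)  # hypothetical baseline, not from the source

print(f"Lost GPU-hours per day at 99%: {at_99:,.0f}")
print(f"Lost GPU-hours per day at 90%: {at_90:,.0f}")
```

Under these assumptions, the gap between the two settings is roughly a tenfold difference in idle GPU-hours per day, which is where the utilization benefit comes from.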

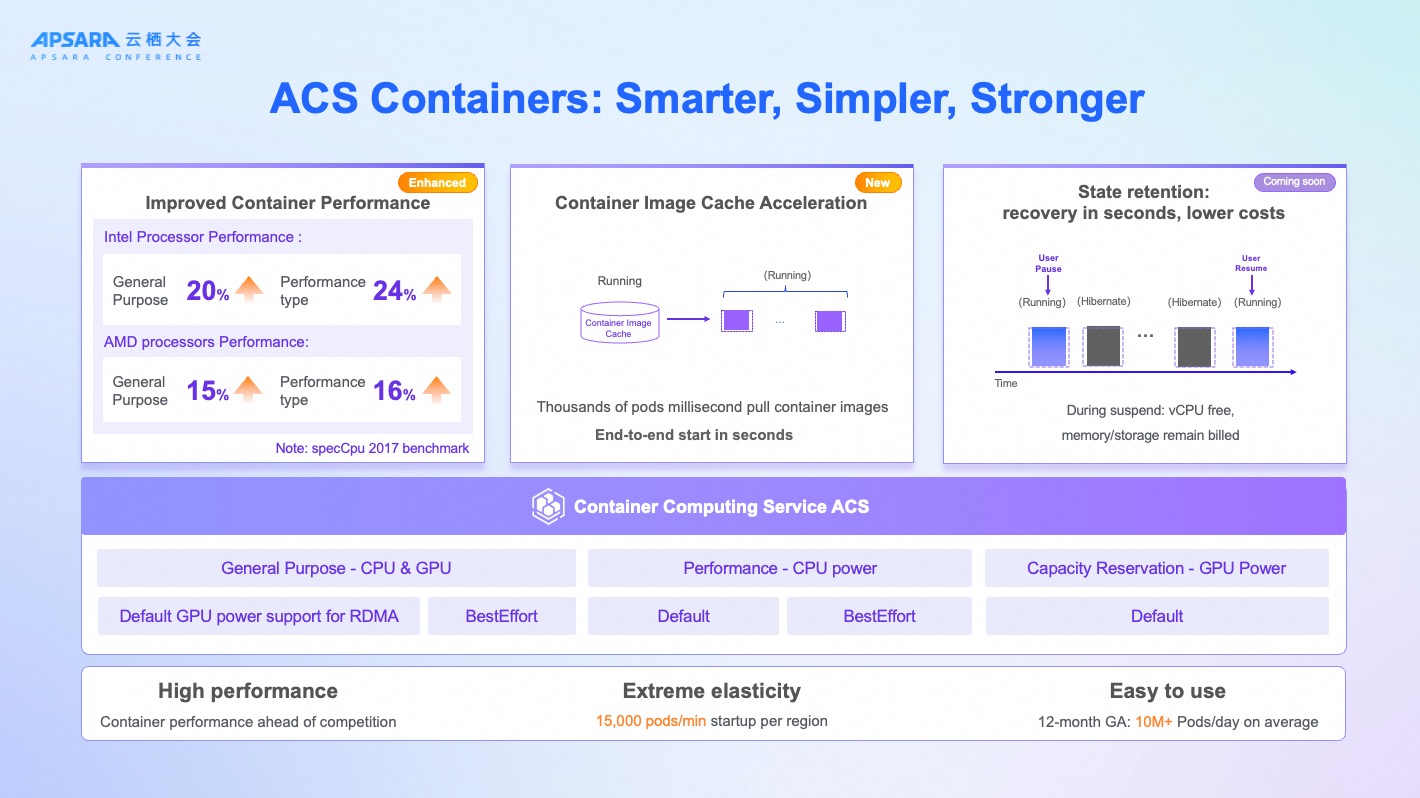

Containers Built for AI Speed

AI workloads demand elasticity, but conventional container platforms often struggle with startup delays and resource waste. Alibaba Cloud’s ACS container service addresses this by scaling at up to 15,000 pods per minute per region. Container image cache acceleration enables millisecond pulls, while hibernate and resume functions reduce costs by pausing workloads without losing state. For customers, this means AI applications can scale up quickly when demand spikes, and scale down without incurring unnecessary costs.

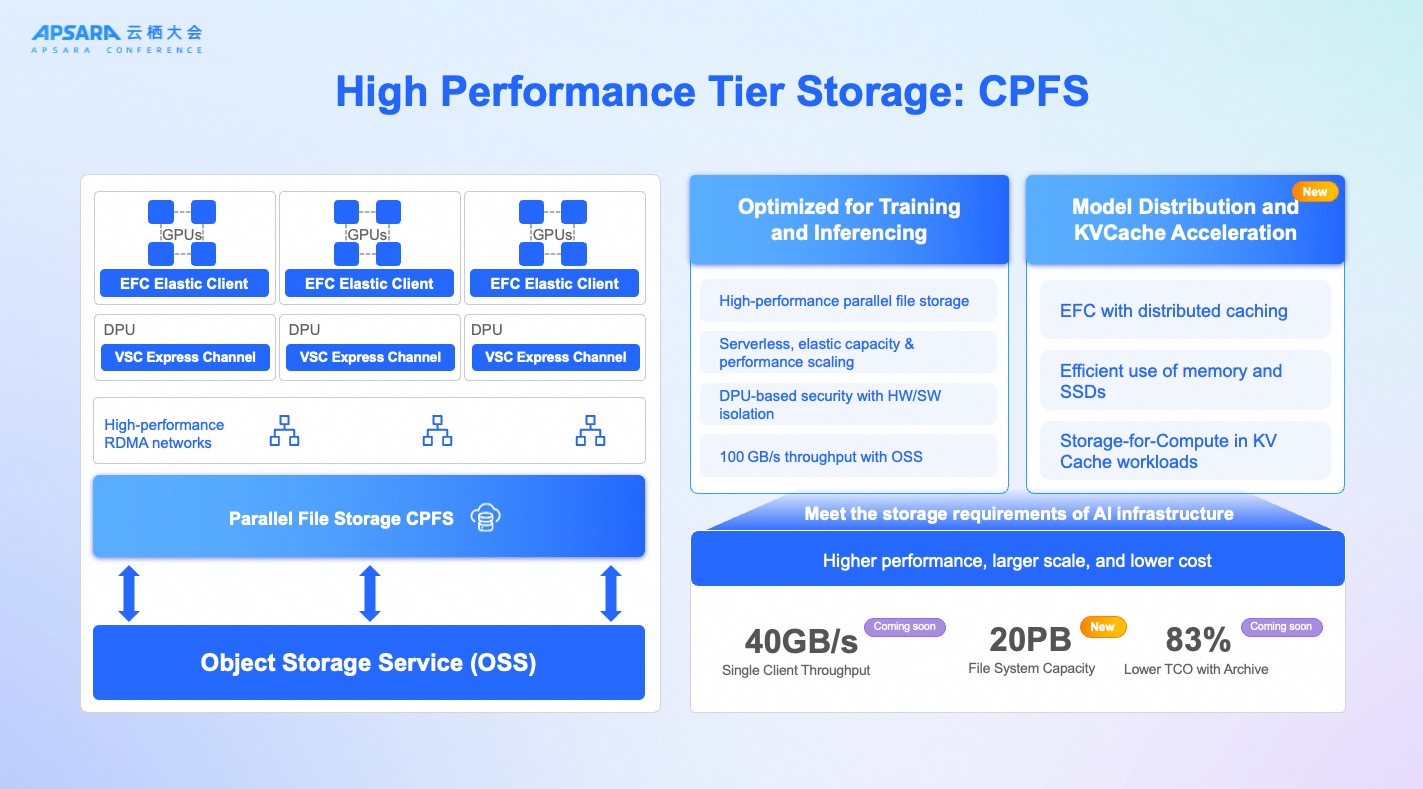

High‑Performance Storage for AI Training and Inference

One of the most persistent bottlenecks in AI infrastructure is storage throughput. Training large models requires moving massive datasets between compute and storage at extreme speeds. Traditional file systems often become the choke point, unable to keep up with the parallel I/O demands of thousands of GPUs working simultaneously. The result is wasted compute cycles, slower training times, and higher costs.

Alibaba Cloud’s Cloud Parallel File System (CPFS) was designed to eliminate this bottleneck. Optimized specifically for AI training and inference, CPFS delivers 40 gigabytes per second of single‑client throughput and scales up to 20 petabytes of capacity per file system. It supports high‑performance parallel file access, ensuring that GPUs are never starved of data.
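As a rough illustration of what the single-client throughput figure implies, the sketch below computes a best-case lower bound on the time to stream a dataset to one client. The 2 TB dataset size is a hypothetical example, decimal units (1 GB = 10^9 bytes) are assumed, and real jobs will see additional overhead from metadata operations, small files, and network contention.

```python
GB = 10**9   # decimal gigabyte (assumption; the text does not say GB vs GiB)
TB = 10**12  # decimal terabyte


def min_read_seconds(dataset_bytes: float, throughput_bytes_per_s: float) -> float:
    """Lower bound on read time: dataset size divided by sustained throughput."""
    if throughput_bytes_per_s <= 0:
        raise ValueError("throughput must be positive")
    return dataset_bytes / throughput_bytes_per_s


CPFS_SINGLE_CLIENT = 40 * GB  # single-client throughput quoted in the text
dataset = 2 * TB              # hypothetical dataset size, for illustration only

seconds = min_read_seconds(dataset, CPFS_SINGLE_CLIENT)
print(f"Best-case read time for one client: {seconds:.0f} s")
```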

For customers, the benefits are clear. Training jobs that once stalled due to I/O limits can now run at full GPU utilization, reducing time‑to‑results and lowering overall costs. Model distribution and KVCache acceleration ensure that inference workloads are equally efficient, enabling real‑time AI applications to respond faster. And because CPFS is serverless and elastic, customers don’t need to over‑provision storage; capacity and performance scale automatically with demand. In short, CPFS turns storage from a bottleneck into a performance multiplier.

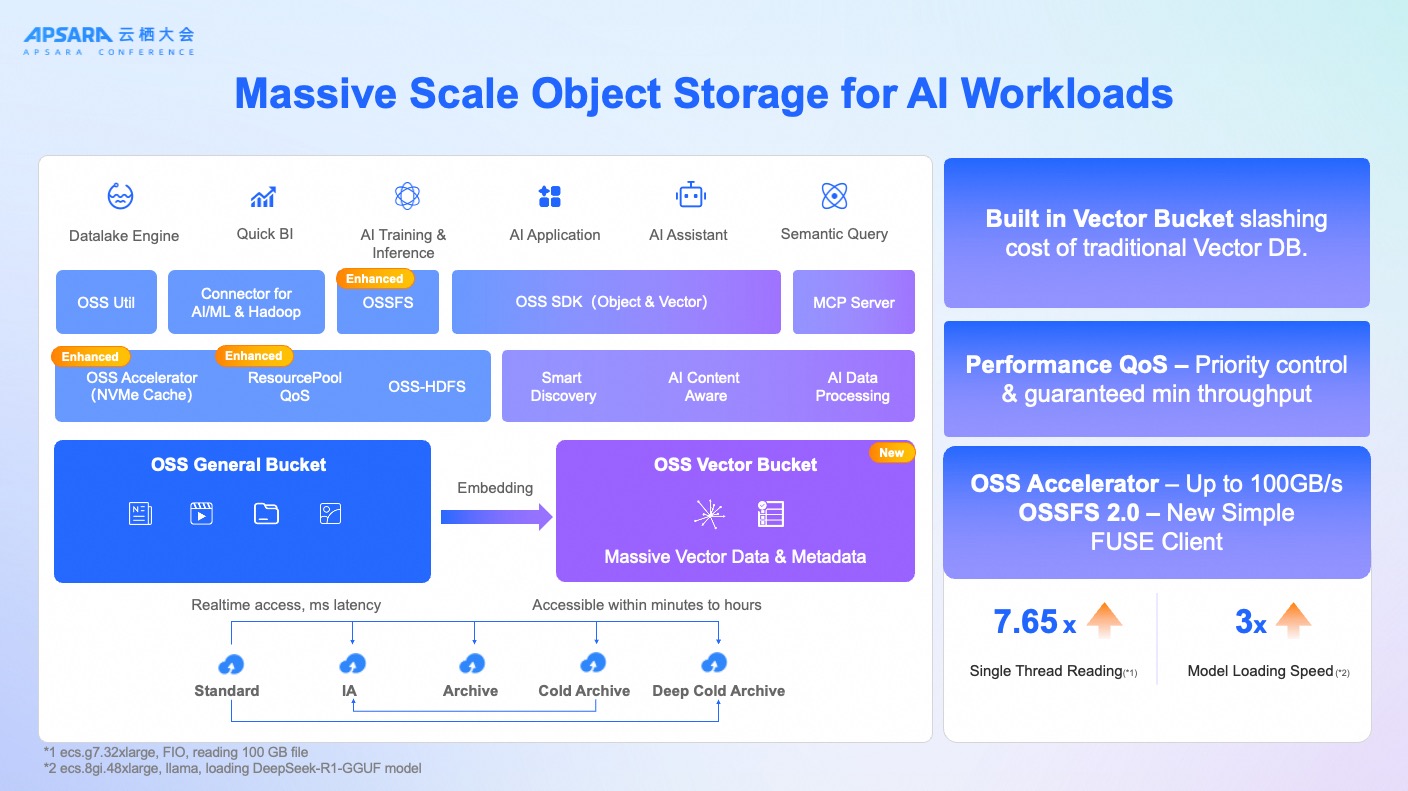

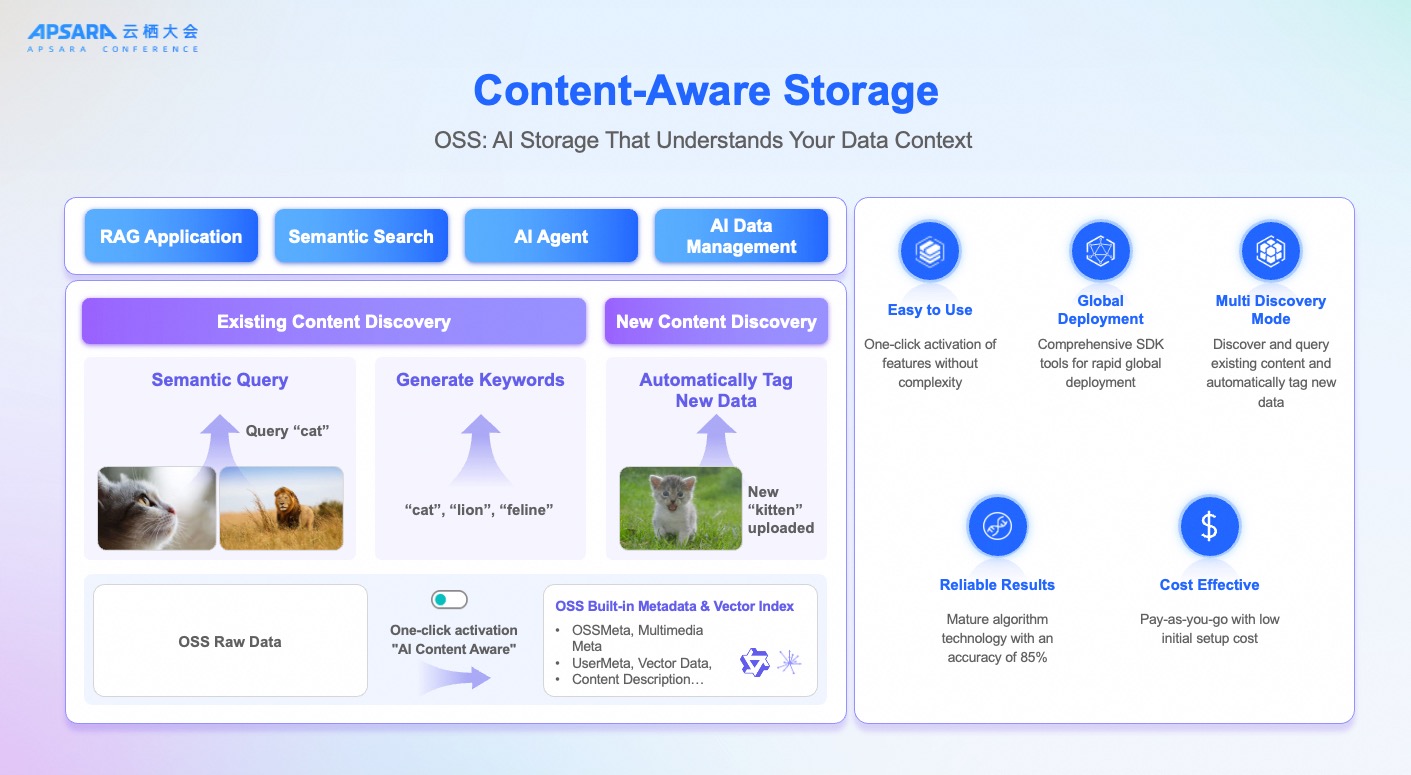

Intelligent, AI‑Aware Data Management in Object Storage

If CPFS is about raw speed, Object Storage Service (OSS) is about intelligence and scale. Enterprises today are drowning in unstructured data — images, videos, logs, documents — and AI workloads depend on making sense of this ocean of information. Traditional object storage can hold the data, but it cannot interpret it, index it, or accelerate access in ways that AI requires.

Alibaba Cloud’s OSS has been re‑engineered for the AI era. With accelerators delivering up to 100 gigabytes per second of throughput, OSS ensures that even the largest models can be loaded quickly. The introduction of vector buckets cuts costs compared with running a traditional vector database, because vector indexing is embedded directly into storage. This allows semantic queries, similarity searches, and content discovery to happen natively, without the need for a separate database layer.
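For readers new to vector search, the following minimal sketch shows the general idea behind a similarity query: objects are represented as embedding vectors, and a query returns the stored items whose vectors are closest to the query vector. This is plain Python for illustration only. It is not the OSS vector bucket API, and the object names, three-dimensional embeddings, and brute-force scan are all hypothetical simplifications.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the keys of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [key for _, key in scored[:k]]


# Hypothetical object keys with made-up embeddings.
index = {
    "cat.jpg": [0.9, 0.1, 0.0],
    "kitten.jpg": [0.8, 0.2, 0.1],
    "invoice.pdf": [0.0, 0.1, 0.9],
}

query = [1.0, 0.0, 0.0]  # stands in for the embedding of a query such as "feline images"
results = top_k(query, index, k=2)
print(results)
```

A production index would use an approximate nearest-neighbor structure rather than a full scan, but the query semantics are the same.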

Perhaps most transformative is AI content‑aware storage. OSS can automatically tag new data, generate metadata, and support semantic queries such as “find all feline images” or “locate documents about renewable energy.” This feature turns raw data into structured, searchable knowledge. Combined with lifecycle management tiers — from real‑time access to deep cold archive — OSS balances performance with cost efficiency.

For customers, OSS means faster model loading, lower infrastructure costs, and smarter data management. A media company can instantly search millions of images by concept rather than filename. A research lab can load massive models in minutes instead of hours. An enterprise can reduce storage costs by archiving rarely used data while keeping it accessible when needed. OSS is no longer just a place to store data; it is a data platform that understands and accelerates AI workloads.

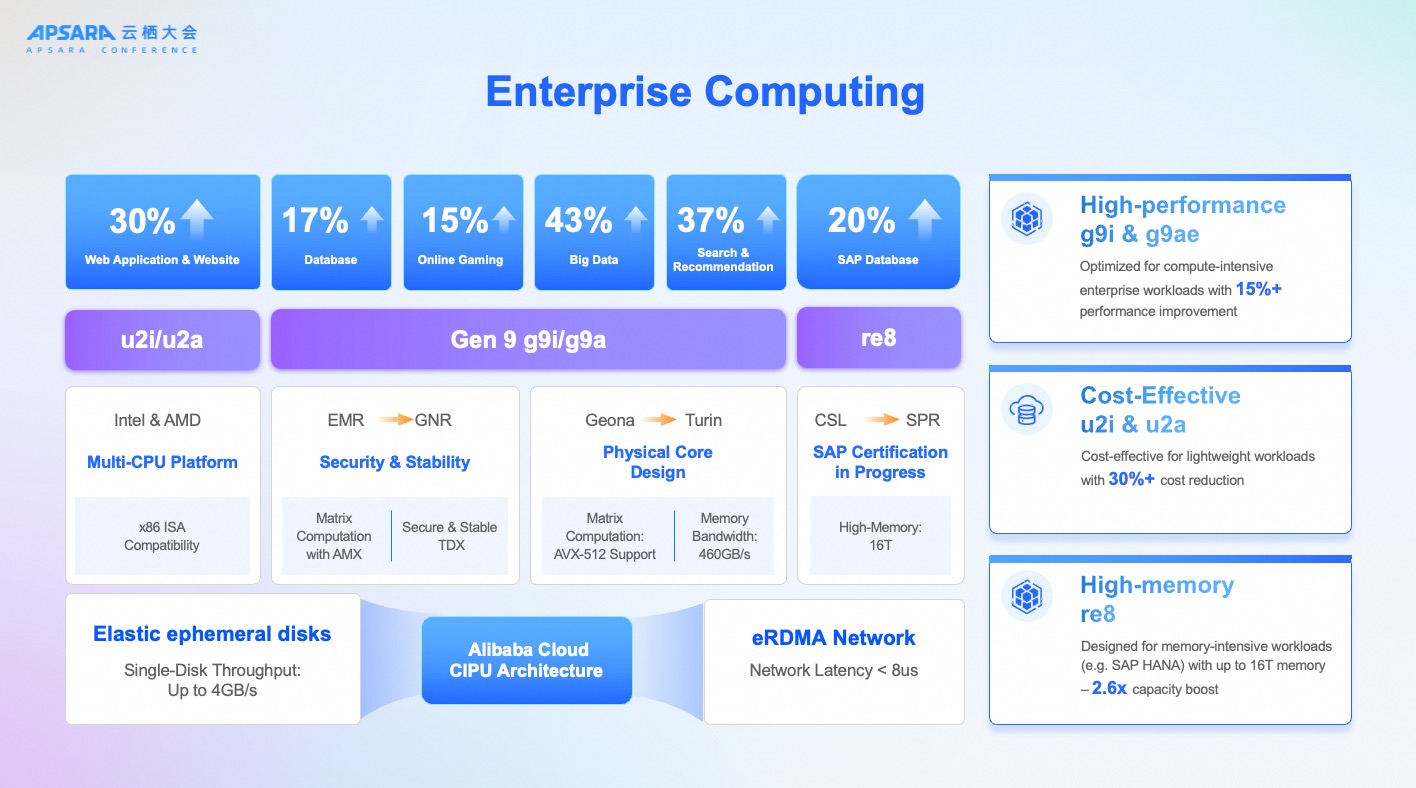

Enterprise Compute: Optimized for AI Workloads

Enterprises today face a dual challenge. On one hand, traditional workloads — databases, ERP systems, online gaming, and web applications — demand predictable performance and stability. On the other, AI workloads introduce entirely new requirements: massive memory footprints for training large models, ultra‑low latency for inference, and cost efficiency at scale. Conventional compute architectures often force customers to compromise, either over‑provisioning resources or accepting bottlenecks that slow innovation.

At Apsara Conference 2025, Alibaba Cloud showcased how its ninth‑generation compute services are designed to bridge this gap. The new g9i and g9ae instances deliver a performance improvement of more than 15 percent for compute‑intensive tasks, while supporting advanced matrix computation with AMX and AVX‑512 instructions. This makes them well suited to AI workloads that rely on dense linear algebra operations. Memory bandwidth has been expanded to 460 GB/s, reducing the time processors spend waiting on data.

For memory‑intensive AI applications, such as training large language models or running SAP HANA databases with embedded AI features, Alibaba Cloud now offers up to 16 terabytes of memory per instance — a 2.6x capacity boost compared to previous generations. This allows enterprises to train larger models in‑memory, reducing the need for complex sharding strategies and accelerating convergence.
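The arithmetic behind these figures can be sketched as follows. The code derives the previous-generation capacity implied by the 2.6x figure and estimates how much memory model weights alone would need. The parameter count and the 2 bytes per parameter (16-bit weights) are illustrative assumptions, decimal terabytes are assumed, and training typically needs several times more memory than the weights for gradients, optimizer state, and activations.

```python
TB = 10**12  # decimal terabyte (assumption; the text does not say TB vs TiB)

INSTANCE_MEMORY = 16 * TB  # maximum memory per instance quoted in the text
CAPACITY_BOOST = 2.6       # generation-over-generation boost quoted in the text

# Previous-generation capacity implied by the two quoted figures.
implied_previous_tb = (INSTANCE_MEMORY / TB) / CAPACITY_BOOST


def weights_bytes(param_count: int, bytes_per_param: int) -> int:
    """Memory needed to hold the model weights only."""
    return param_count * bytes_per_param


params = 10**12                        # hypothetical trillion-parameter model
weights_16bit = weights_bytes(params, 2)  # 2 bytes per parameter (assumption)
fits_in_memory = weights_16bit <= INSTANCE_MEMORY

print(f"Implied previous maximum: {implied_previous_tb:.2f} TB")
print(f"16-bit weights for 10^12 parameters: {weights_16bit / TB:.0f} TB")
print(f"Weights fit in one instance: {fits_in_memory}")
```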

Cost efficiency remains a critical concern. The new u2i and u2a instances are optimized for lightweight workloads, delivering a cost reduction of more than 30 percent. This is particularly valuable for AI pipelines that mix heavy training with lighter preprocessing or inference tasks. Enterprises can allocate the right compute tier for each stage of the AI lifecycle, balancing performance with budget.

In short, Alibaba Cloud’s enterprise compute portfolio is no longer just about supporting traditional IT. It has been re‑architected for the AI era, giving enterprises the performance, memory, networking, and cost efficiency they need to bring AI workloads into production at scale.

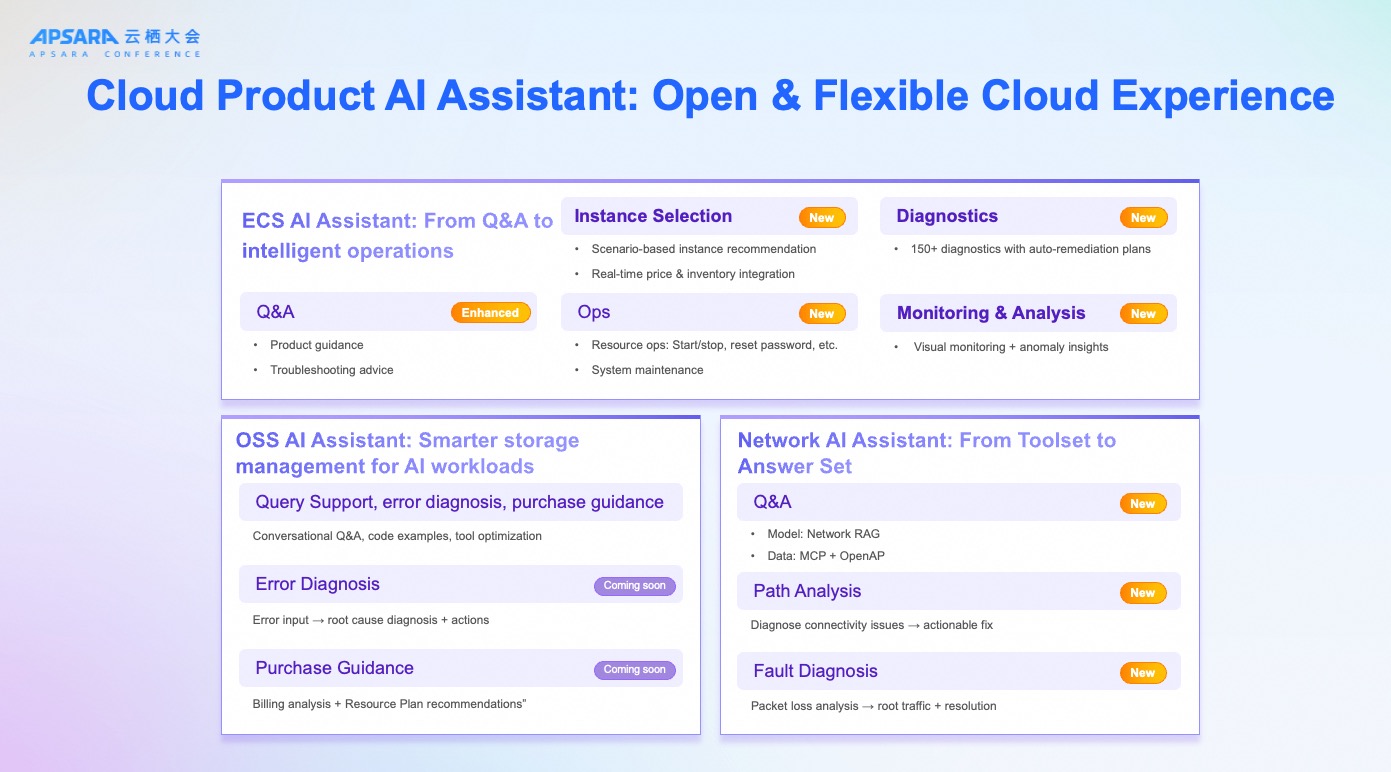

Simplifying Operations with AI Assistants

Even with powerful infrastructure, cloud operations can be complex. Alibaba Cloud is simplifying this with AI‑powered assistants. ECS AI Assistant provides intelligent Q&A, troubleshooting, and product guidance. OSS AI Assistant helps customers manage storage more effectively, while Network AI Assistant diagnoses connectivity issues and prescribes actionable fixes. For customers, this reduces operational overhead, shortens resolution times, and frees teams to focus on innovation rather than firefighting.

The Customer Impact

These are just some of the highlights at Apsara Conference 2025. Taken together, these innovations represent more than technical milestones. They are answers to the real challenges enterprises face in bringing AI to production. Higher performance means faster insights. Higher GPU utilization means lower costs. Smarter storage means easier data management. Built‑in redundancy means greater resilience. And AI‑powered operations mean simpler, more efficient cloud management.

At Apsara Conference 2025, Alibaba Cloud made one thing clear: we are not just scaling infrastructure, we are solving the problems that hold enterprises back from realizing the full potential of AI. The result is a cloud that is faster, smarter, more reliable, and more cost‑effective — a cloud built for the AI era.

To learn more, contact us.
