The network features in Kubernetes mainly include the Pod network, the Service network, and network policies. For the Pod network and network policies, Kubernetes specifies the model but does not provide a built-in implementation. Service, by contrast, is a built-in feature of Kubernetes, and the official release has shipped multiple implementations:

| Service mode | Description |
| --- | --- |
| userspace proxy mode | kube-proxy is responsible for list/watch, rule settings, and user-space forwarding. |
| iptables proxy mode | kube-proxy is responsible for list/watch and rule settings. The iptables-related kernel modules are responsible for forwarding. |
| IPVS proxy mode | kube-proxy is responsible for watching Kubernetes resources and setting rules. IPVS in the Linux kernel is responsible for forwarding. |

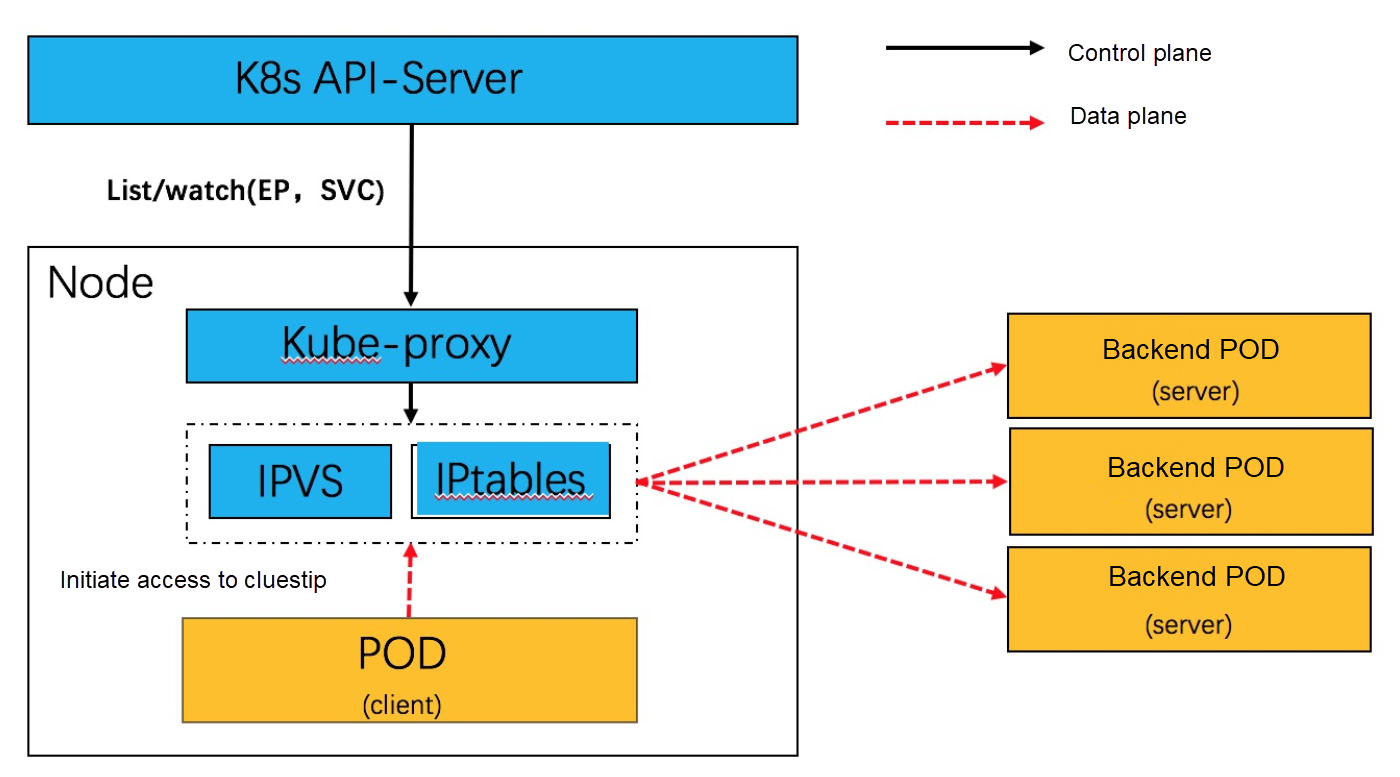

Across the successive Service implementations in Kubernetes, the overall goal has been higher performance and better extensibility.

The Service network is a distributed load balancer. kube-proxy is deployed as a DaemonSet, watches Endpoints (or EndpointSlice) and Service objects, and generates forwarding table entries locally on each node. Currently, the iptables and IPVS modes are used in production environments. The following is the principle:
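The principle can be modeled in a few lines of Python. This is only a user-space sketch, not kube-proxy code: the dictionary layouts, the `Backend` type, and the `build_forwarding_table` helper are assumptions made for illustration. It joins Service and Endpoints data into a node-local forwarding table keyed by cluster IP, port, and protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    ip: str
    port: int


def build_forwarding_table(services, endpoints):
    """Join Services with their endpoints into a node-local forwarding table.

    Returns {(cluster_ip, port, protocol): [Backend, ...]}.
    The input layouts are hypothetical and chosen only for this sketch.
    """
    table = {}
    for name, svc in services.items():
        backends = [Backend(ip, svc["target_port"]) for ip in endpoints.get(name, [])]
        table[(svc["cluster_ip"], svc["port"], svc["protocol"])] = backends
    return table


# Hypothetical objects as a node-local agent might see them after list/watch.
services = {
    "web": {"cluster_ip": "10.96.0.10", "port": 80, "target_port": 8080, "protocol": "TCP"},
}
endpoints = {"web": ["172.16.1.5", "172.16.2.7"]}

table = build_forwarding_table(services, endpoints)
```

In a real deployment the table would be rebuilt or patched on every watch event, and the data plane (iptables, IPVS, or eBPF maps) would be programmed from it.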

This article introduces how socket eBPF can perform load balancing at the socket level. This eliminates per-packet NAT processing and improves the performance of the Service network.

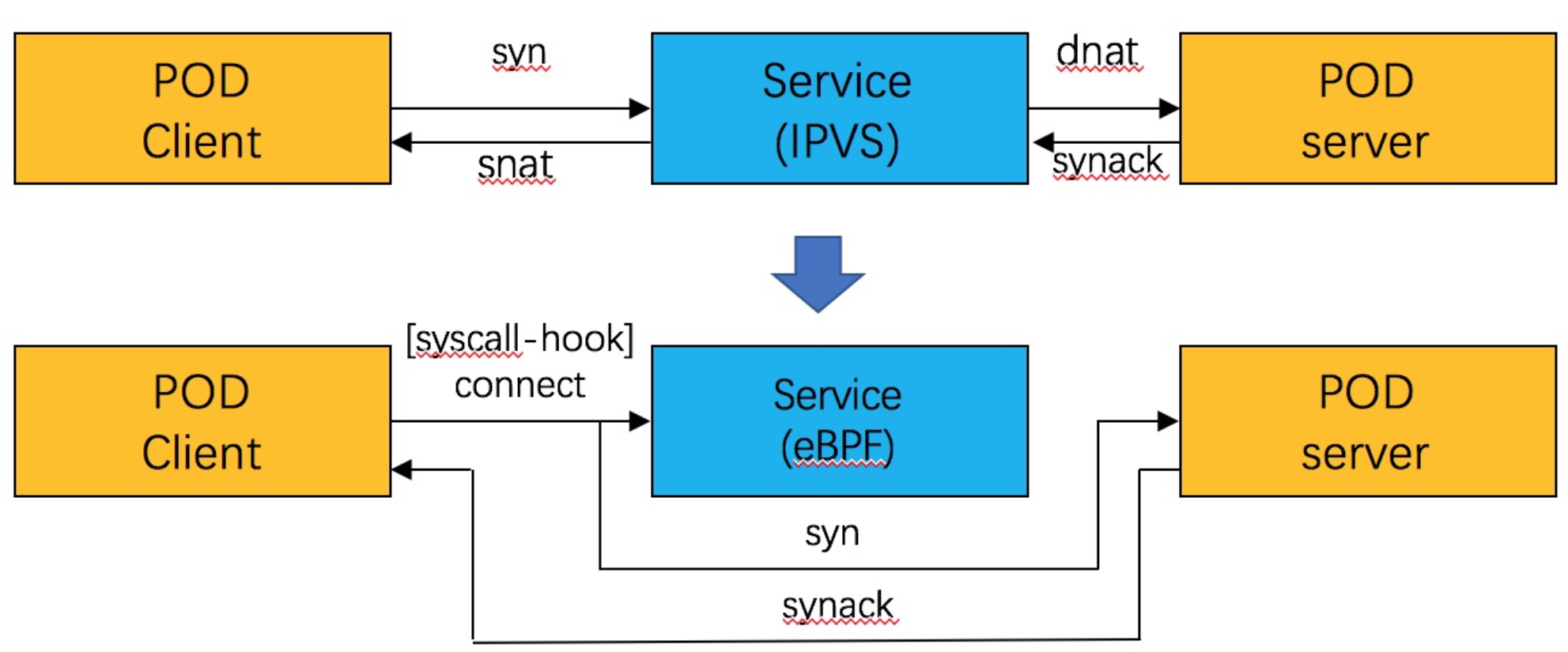

Whether the Service network is implemented by kube-proxy in IPVS mode or accelerated with tc eBPF, every network request from a Pod must pass through IPVS or tc eBPF (Pod <--> Service <--> Pod). As traffic increases, so does the performance overhead. So, can we replace the cluster IP address of the Service with the corresponding Pod IP directly at connection time? Service implementations based on kube-proxy and IPVS rely on per-packet processing plus session (connection) tracking.

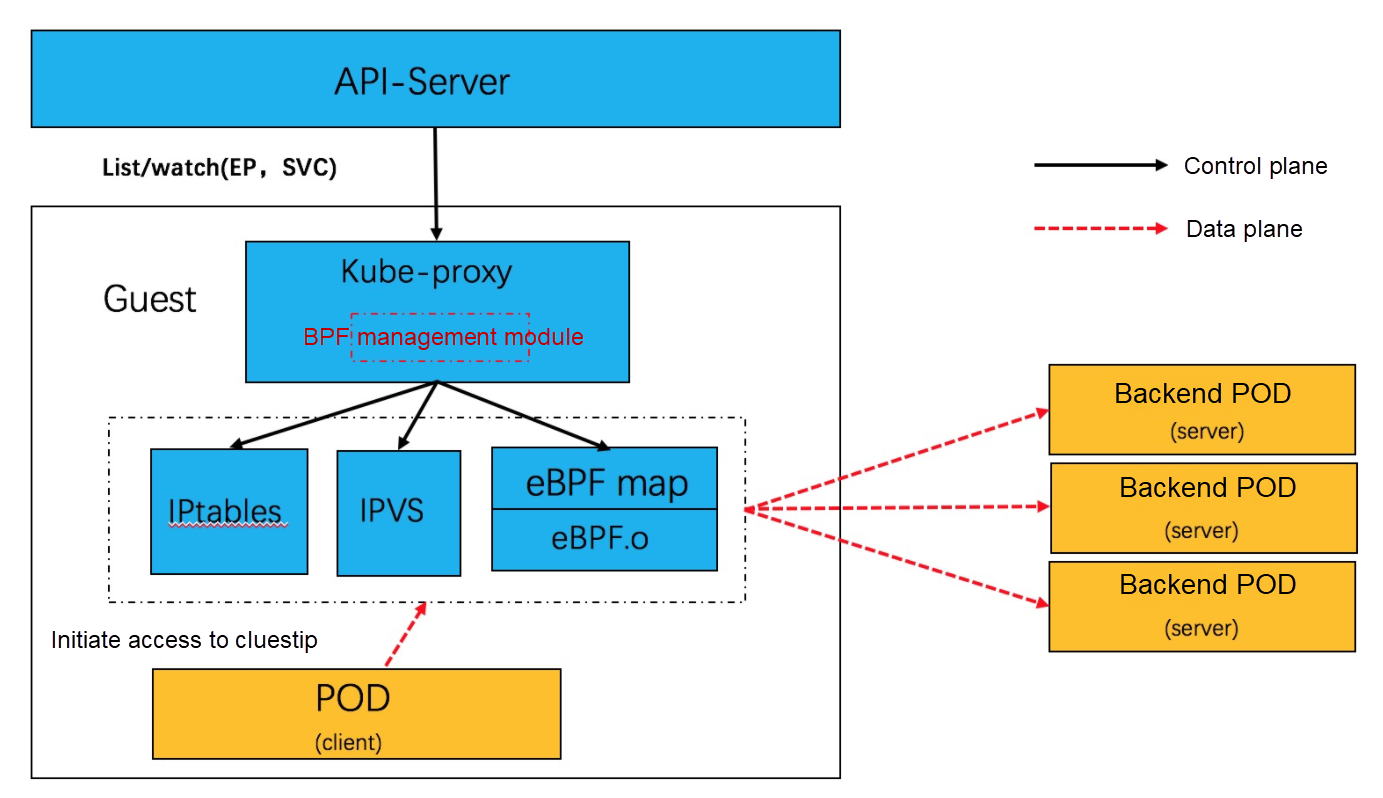

With socket eBPF, we can implement the load-balancing logic without per-packet processing or NAT. At the same time, the Service path is reduced to Pod <--> Pod, so that Service network performance is equivalent to that of the Pod network. The following is the software structure:

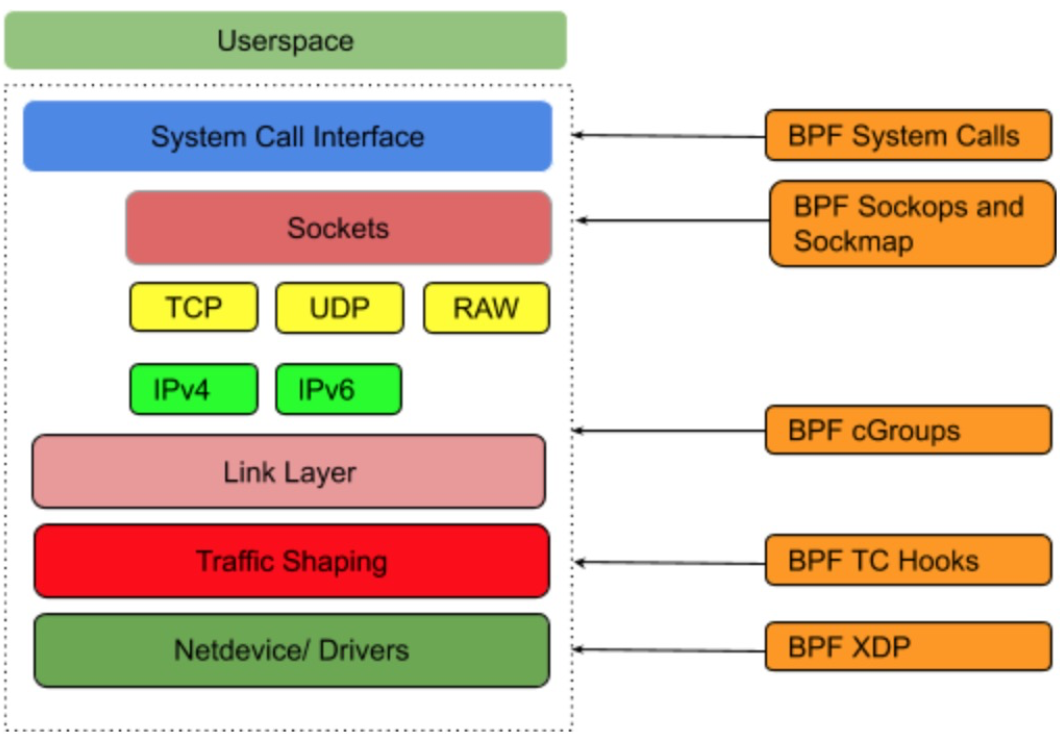
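To make the cost argument concrete, the following back-of-the-envelope model counts address translations under the two approaches. It is a simplification under stated assumptions: the function names and the workload numbers are hypothetical, and real per-packet cost also includes connection tracking lookups that are not modeled here.

```python
def per_packet_translations(connections: int, packets_per_connection: int) -> int:
    """Per-packet NAT: every packet of every connection is rewritten once."""
    return connections * packets_per_connection


def connect_time_translations(connections: int, packets_per_connection: int) -> int:
    """Socket-level translation: one rewrite per connect(), regardless of packet count."""
    return connections


# Hypothetical workload: 1,000 connections of 50 packets each.
conns, pkts = 1_000, 50
print(per_packet_translations(conns, pkts))    # 50000
print(connect_time_translations(conns, pkts))  # 1000
```

The gap grows with the number of packets per connection, which is why the saving is structural rather than a constant factor.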

In the Linux kernel, cgroup-attached eBPF program types such as BPF_PROG_TYPE_CGROUP_SOCK and BPF_PROG_TYPE_CGROUP_SOCK_ADDR allow eBPF programs to be hooked into socket system calls.

The Linux kernel keeps extending these hooks to support more bpf_attach_type values. The following are some examples:

- BPF_CGROUP_INET_SOCK_CREATE
- BPF_CGROUP_INET4_BIND
- BPF_CGROUP_INET4_CONNECT
- BPF_CGROUP_UDP4_SENDMSG
- BPF_CGROUP_UDP4_RECVMSG
- BPF_CGROUP_GETSOCKOPT
- BPF_CGROUP_INET4_GETPEERNAME
- BPF_CGROUP_INET_SOCK_RELEASE

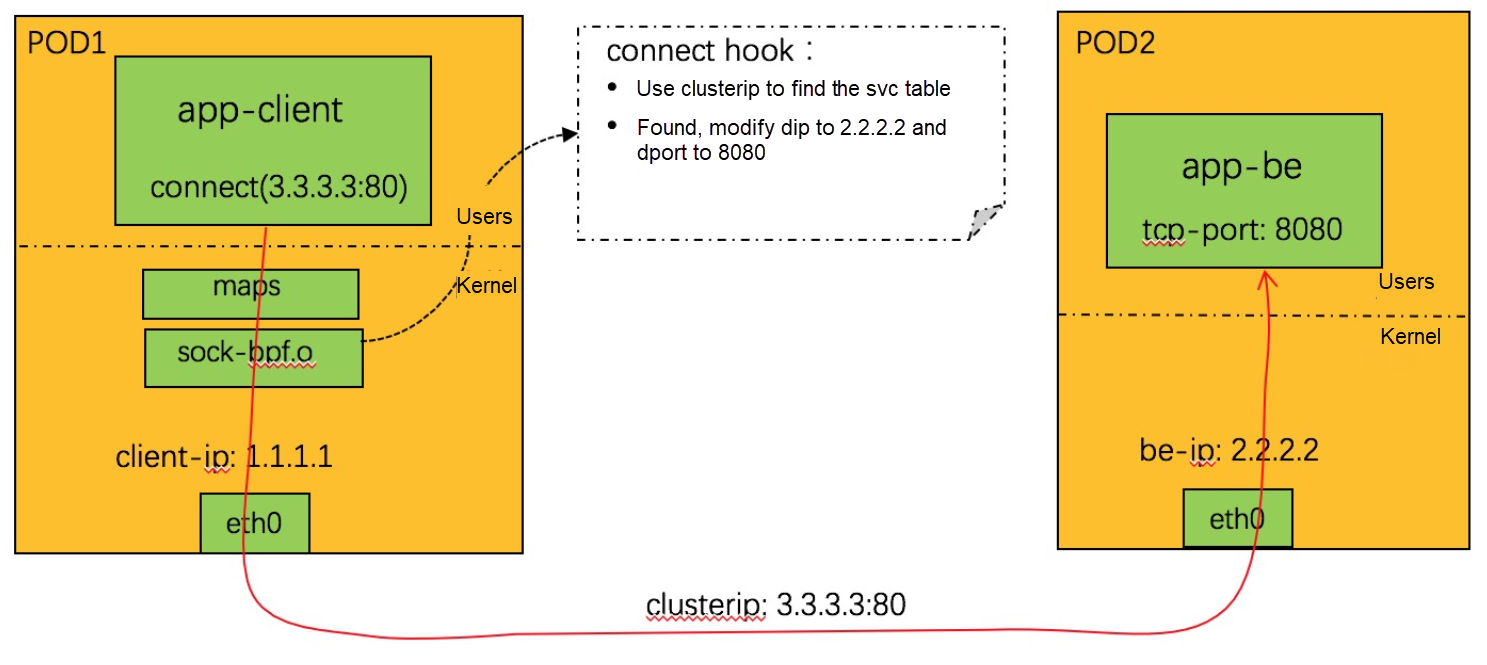

TCP is connection-oriented, so the implementation is simple. You only need to hook the connect system call, as shown in the following:

Address and port translation is completed at the socket level. For TCP access to a ClusterIP, this is equivalent to east-west communication between Pods, which minimizes the overhead of the ClusterIP.
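The connect-time translation can be modeled in user space as follows. This is a Python sketch of the logic only, not eBPF code: `SERVICE_MAP`, `on_connect`, and the addresses are hypothetical, and random selection stands in for whatever backend selection policy a real implementation uses.

```python
import random
from typing import Dict, List, Tuple

Addr = Tuple[str, int]

# Hypothetical service map: ClusterIP:port -> backend Pod addresses.
SERVICE_MAP: Dict[Addr, List[Addr]] = {
    ("10.96.0.10", 80): [("172.16.1.5", 8080), ("172.16.2.7", 8080)],
}


def on_connect(dst: Addr, rng: random.Random) -> Addr:
    """Model of a connect hook: return the address the socket should really use."""
    backends = SERVICE_MAP.get(dst)
    if not backends:
        # Not a Service address, or no backends: leave the destination untouched.
        return dst
    # Pick one backend; all later packets of this connection go Pod to Pod.
    return rng.choice(backends)
```

Because the destination is rewritten before the TCP handshake starts, no packet on the wire ever carries the ClusterIP, so there is nothing left to NAT.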

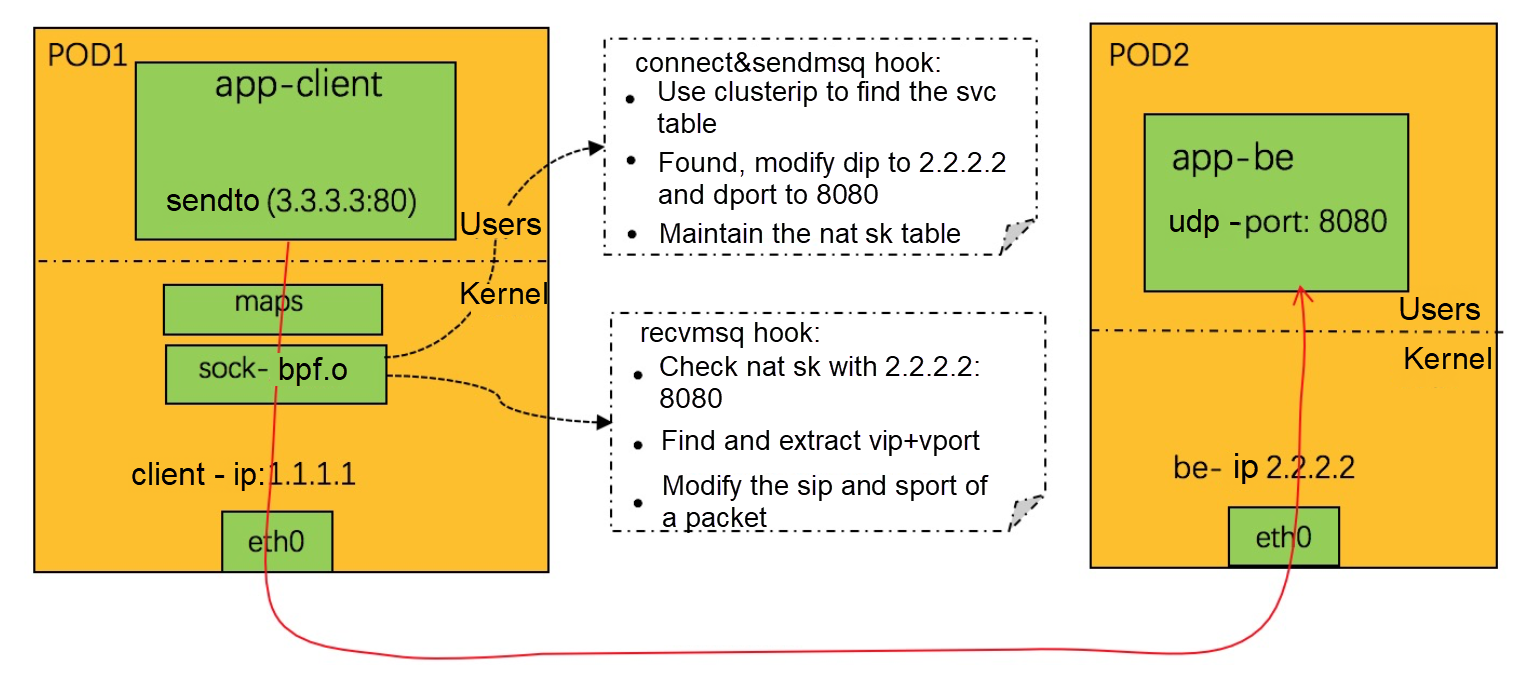

UDP is connectionless, so the implementation is more complex, as shown in the following figure:

For the definition of the nat_sk table, see LB4_REVERSE_NAT_SK_MAP.
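A rough user-space model of the UDP path looks like this. It assumes, for illustration only, that the nat_sk table is keyed by a per-socket cookie plus the backend address and stores the original Service address; `SERVICE_MAP`, `REVERSE_NAT_SK`, `on_sendmsg`, and `on_recvmsg` are hypothetical names, not the real map layout or hook signatures.

```python
import random
from typing import Dict, List, Tuple

Addr = Tuple[str, int]

# Hypothetical service map: ClusterIP:port -> backend Pod addresses.
SERVICE_MAP: Dict[Addr, List[Addr]] = {
    ("10.96.0.10", 53): [("172.16.1.5", 5353), ("172.16.2.7", 5353)],
}

# Assumed reverse NAT table: (socket cookie, backend address) -> Service address.
REVERSE_NAT_SK: Dict[Tuple[int, Addr], Addr] = {}


def on_sendmsg(cookie: int, dst: Addr, rng: random.Random) -> Addr:
    """Model of a sendmsg hook: translate the destination and remember the mapping."""
    backends = SERVICE_MAP.get(dst)
    if not backends:
        return dst
    backend = rng.choice(backends)
    REVERSE_NAT_SK[(cookie, backend)] = dst
    return backend


def on_recvmsg(cookie: int, src: Addr) -> Addr:
    """Model of a recvmsg hook: restore the Service address the application sent to."""
    return REVERSE_NAT_SK.get((cookie, src), src)
```

The reverse table is what makes UDP harder than TCP: a reply must appear to come from the address the application sent to, so the send-side translation has to be recorded per socket and undone on receive.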

The ClusterIP implementation is based on socket eBPF. Beyond the basic forwarding principles above, there are some special details to consider. One of them is the peer address. Unlike implementations such as IPVS, with a socket eBPF ClusterIP the client communicates directly with the backend, and the intermediate Service is bypassed. The following is the forwarding path:

If the application on the client queries the peer address through an interface such as getpeername, the address returned differs from the address passed to connect. If the application checks the peer address or uses it for a special purpose, unexpected behavior may occur.

To handle this, we can correct the peer address at the socket level with eBPF:
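A minimal model of that correction, written in Python rather than eBPF, is sketched below. The `Socket` class, the `connect` and `getpeername` helpers, and the `REVERSE_NAT_SK` layout are assumptions for illustration: the connect step records which Service address was translated, and the getpeername step reports that Service address instead of the backend.

```python
import random
from typing import Dict, List, Optional, Tuple

Addr = Tuple[str, int]

# Hypothetical service map: ClusterIP:port -> backend Pod addresses.
SERVICE_MAP: Dict[Addr, List[Addr]] = {
    ("10.96.0.10", 80): [("172.16.1.5", 8080), ("172.16.2.7", 8080)],
}

# Assumed reverse table: (socket cookie, backend address) -> Service address.
REVERSE_NAT_SK: Dict[Tuple[int, Addr], Addr] = {}


class Socket:
    """Toy socket: a cookie plus the address the kernel actually connected to."""

    def __init__(self, cookie: int) -> None:
        self.cookie = cookie
        self.peer: Optional[Addr] = None


def connect(sock: Socket, dst: Addr, rng: random.Random) -> None:
    """Connect hook model: translate the destination and record the original."""
    backends = SERVICE_MAP.get(dst)
    if backends:
        backend = rng.choice(backends)
        REVERSE_NAT_SK[(sock.cookie, backend)] = dst
        dst = backend
    sock.peer = dst


def getpeername(sock: Socket) -> Optional[Addr]:
    """getpeername hook model: report the Service address the application used."""
    return REVERSE_NAT_SK.get((sock.cookie, sock.peer), sock.peer)
```

With this in place, an application that compares the result of getpeername with the address it passed to connect sees the same value, even though the traffic goes straight to the backend.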

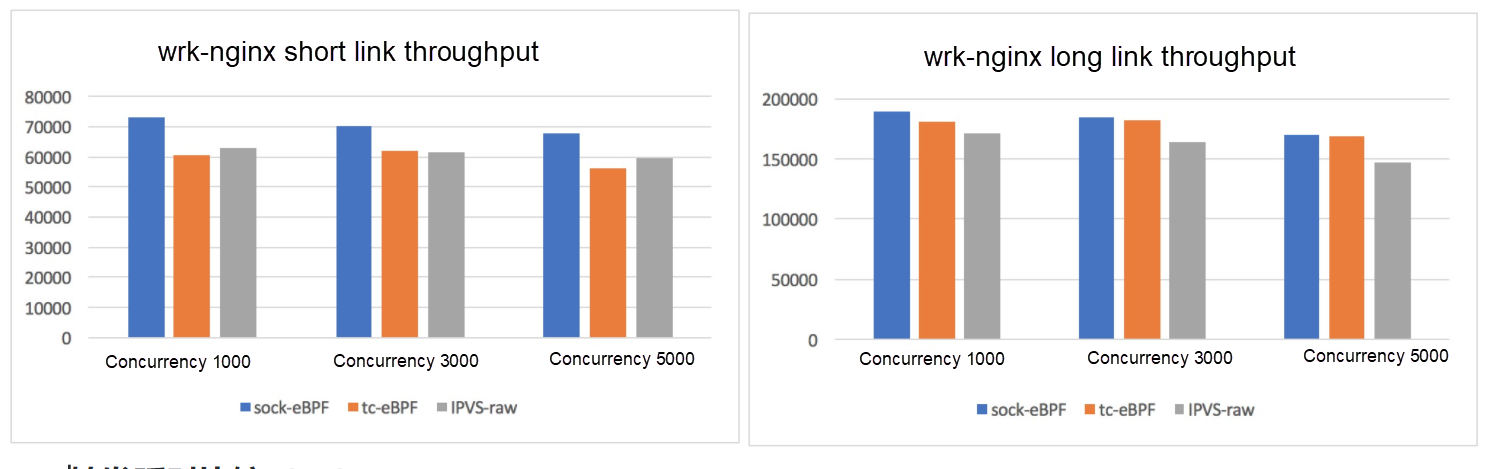

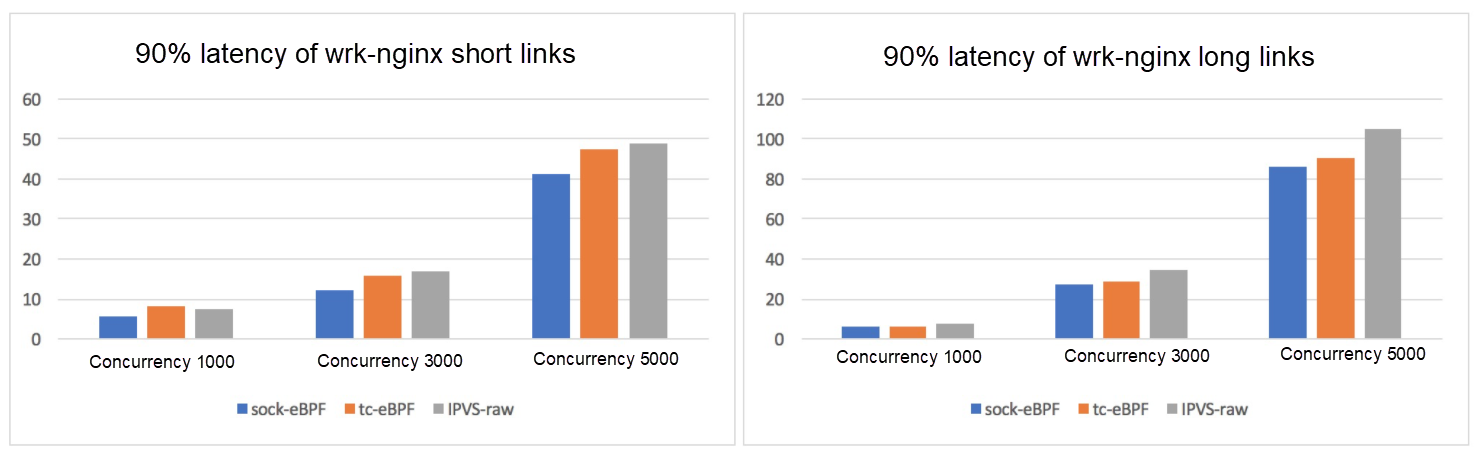

Test environment: secure container instances with 4 vCPUs and 8 GB of memory; a single client, a single ClusterIP, and 12 backends.

- socket BPF: a Service implementation based on socket eBPF.
- tc eBPF: a cls-bpf-based Service implementation, which has been applied in the ACK service.
- IPVS-raw: all security group rules and overhead such as veth are removed, and only the Service IPVS forwarding logic is implemented.

Socket BPF improves all performance metrics to varying degrees. For a large number of concurrent short connections, throughput improves by 15% and latency drops by 20%.

Comparison of forwarding performance (QPS)

Comparison of 90% Forwarding Latency (ms)

The Service implementation based on socket eBPF simplifies the load-balancing logic and reflects the flexible and compact nature of eBPF. These features of eBPF fit cloud-native scenarios well. Currently, this technology has been implemented in Alibaba Cloud to accelerate the Service network of Kubernetes.
