This article is compiled from a speech delivered by Teng Yu (Founder of the Pravega China Community and Director of Software Engineering and Technology at Dell Technologies) at the main conference of Flink Forward Asia 2021. The main contents include:

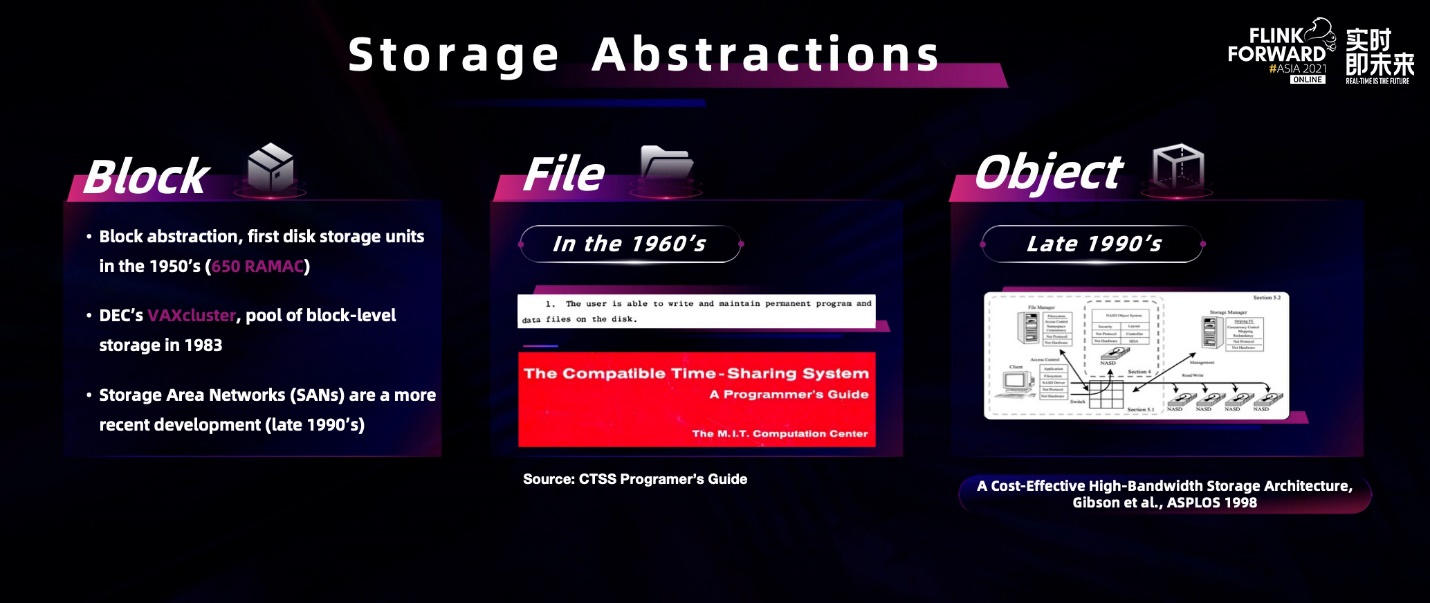

There is a well-known principle in software design: any new problem in computing can be solved by adding another layer of abstraction. The same is true for storage. The preceding figure lists the three storage abstractions in mainstream use: block storage, file storage, and object storage. Block storage emerged alongside the modern computer industry. File storage appeared later and became the mainstream storage abstraction. Object storage was born in the 1990s. With the development of real-time computing and the cloud era, streaming data has become more widely used, and a new storage abstraction is needed for this kind of data, which emphasizes low latency and highly concurrent traffic. Appending a byte stream to a file may be the most intuitive idea, but it faces a series of challenges in practice, and the implementation becomes complicated, as shown in the following figure:

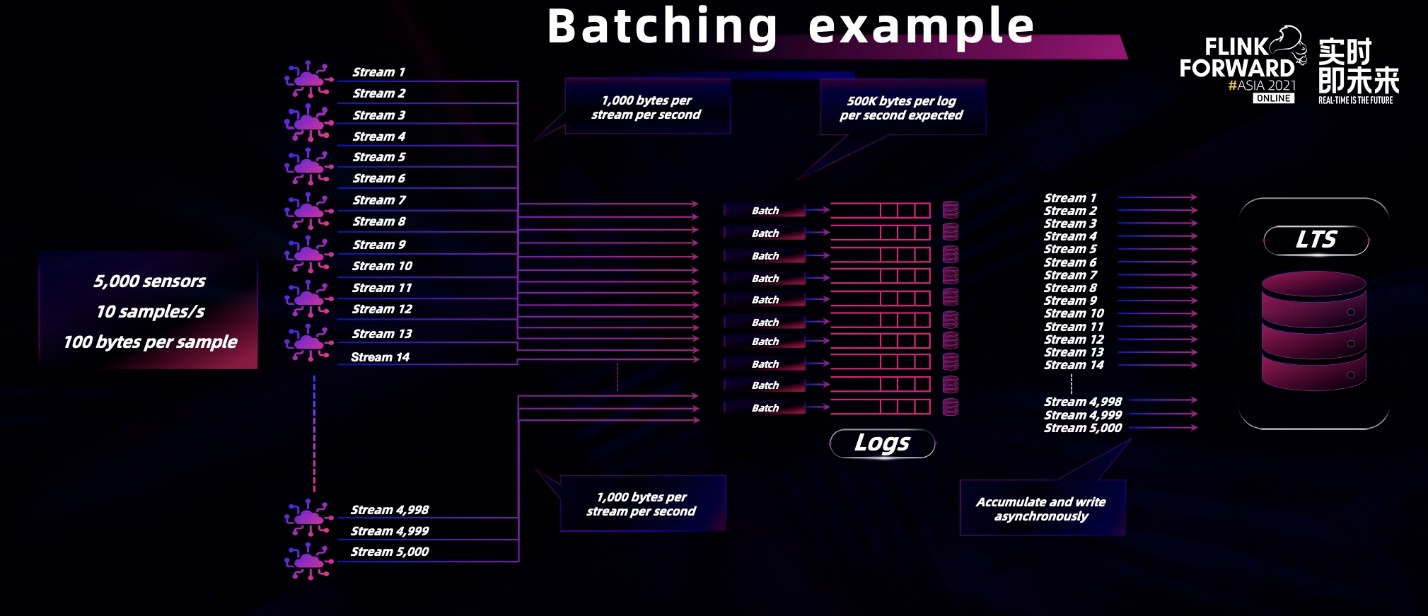

Write size has a critical impact on throughput. Batching needs to be introduced to balance latency and throughput, and space needs to be pre-allocated to avoid the performance loss caused by block allocation. Finally, as with any storage, persistence must be guaranteed.
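As a minimal sketch of the batching trade-off described above (not any particular system's implementation), the following Python class buffers small writes and flushes them when either a size threshold or a delay threshold is reached. The class name `Batcher` and the parameters `max_bytes`, `max_delay_s`, and `clock` are hypothetical names chosen for illustration.

```python
import time
from typing import Callable, List, Optional


class Batcher:
    """Buffers small writes and hands them to `flush` as one larger write."""

    def __init__(self, flush: Callable[[bytes], None], max_bytes: int = 4096,
                 max_delay_s: float = 0.005,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._flush = flush              # sink for a combined write (hypothetical)
        self._max_bytes = max_bytes      # size threshold: favors throughput
        self._max_delay_s = max_delay_s  # delay threshold: bounds latency
        self._clock = clock
        self._buf: List[bytes] = []
        self._size = 0
        self._first_at: Optional[float] = None

    def append(self, payload: bytes) -> None:
        if not self._buf:
            # Remember when the oldest buffered write arrived.
            self._first_at = self._clock()
        self._buf.append(payload)
        self._size += len(payload)
        if self._size >= self._max_bytes:
            self.flush()

    def poll(self) -> None:
        """Call periodically: flush if the oldest write has waited too long."""
        if self._buf and self._clock() - self._first_at >= self._max_delay_s:
            self.flush()

    def flush(self) -> None:
        if not self._buf:
            return
        self._flush(b"".join(self._buf))
        self._buf.clear()
        self._size = 0
        self._first_at = None
```

A larger `max_bytes` produces fewer, bigger writes and favors throughput, while a smaller `max_delay_s` caps how long any single write can wait and favors latency.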

A local file system is inherently unreliable. In the real world, hardware nodes and disk media can fail at any time. Distributed storage systems, such as the common multi-replica systems, have to be introduced to solve these problems. However, copying replicas costs time and space, and guaranteeing data safety under distributed consistency protocols becomes a new problem.

If the design is based on a shared-nothing architecture, parallel nodes share no resources. For example, in the preceding figure, three replicas are stored on three independent physical nodes. The size of a single stream is then limited by the storage capacity of a single physical node, so this is still not a complete solution.

Because of this series of problems, building stream storage on a file system is very complicated.

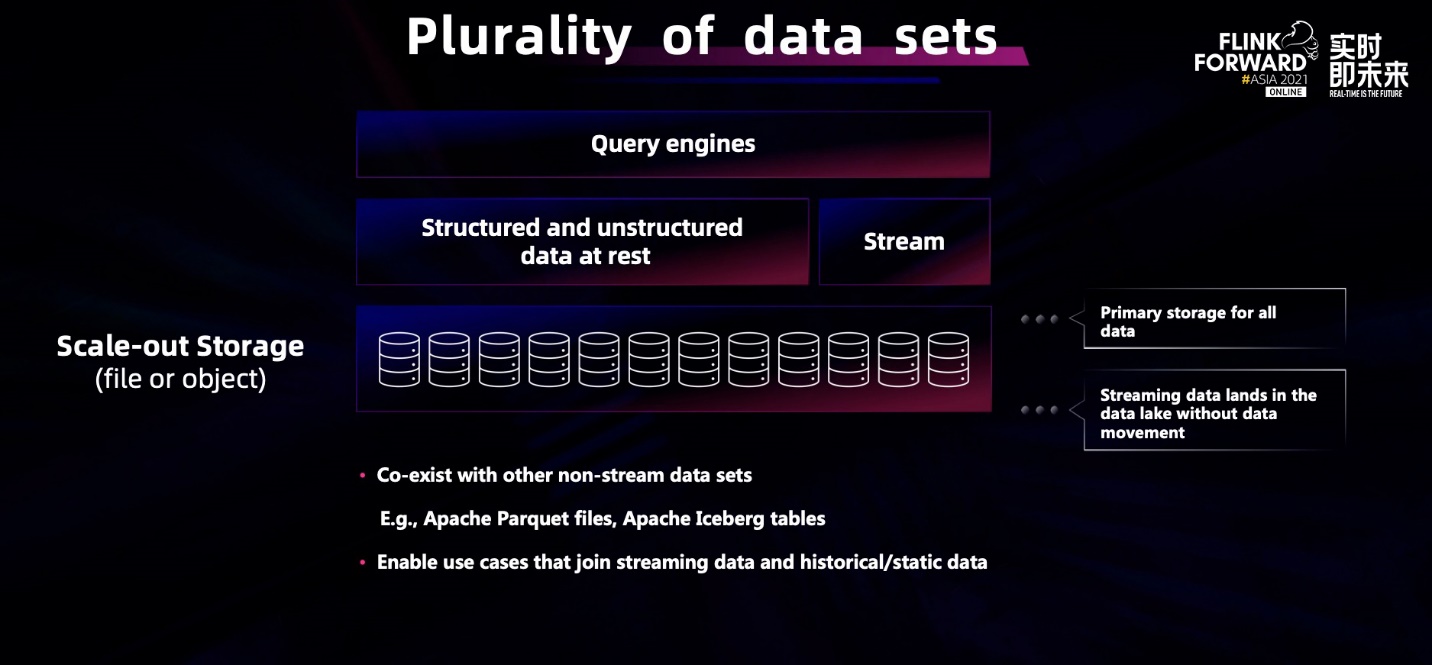

Inspired by the principle stated at the beginning, we tried to solve this problem from another angle: a tiered architecture built on distributed file and object storage. The underlying scalable storage can serve as a data lake, where the historical data of a stream coexists with other non-streaming data sets. This saves the overhead of data migration, storage, and O&M, solves the problem of data silos, and makes it more convenient to combine batch and stream computing.

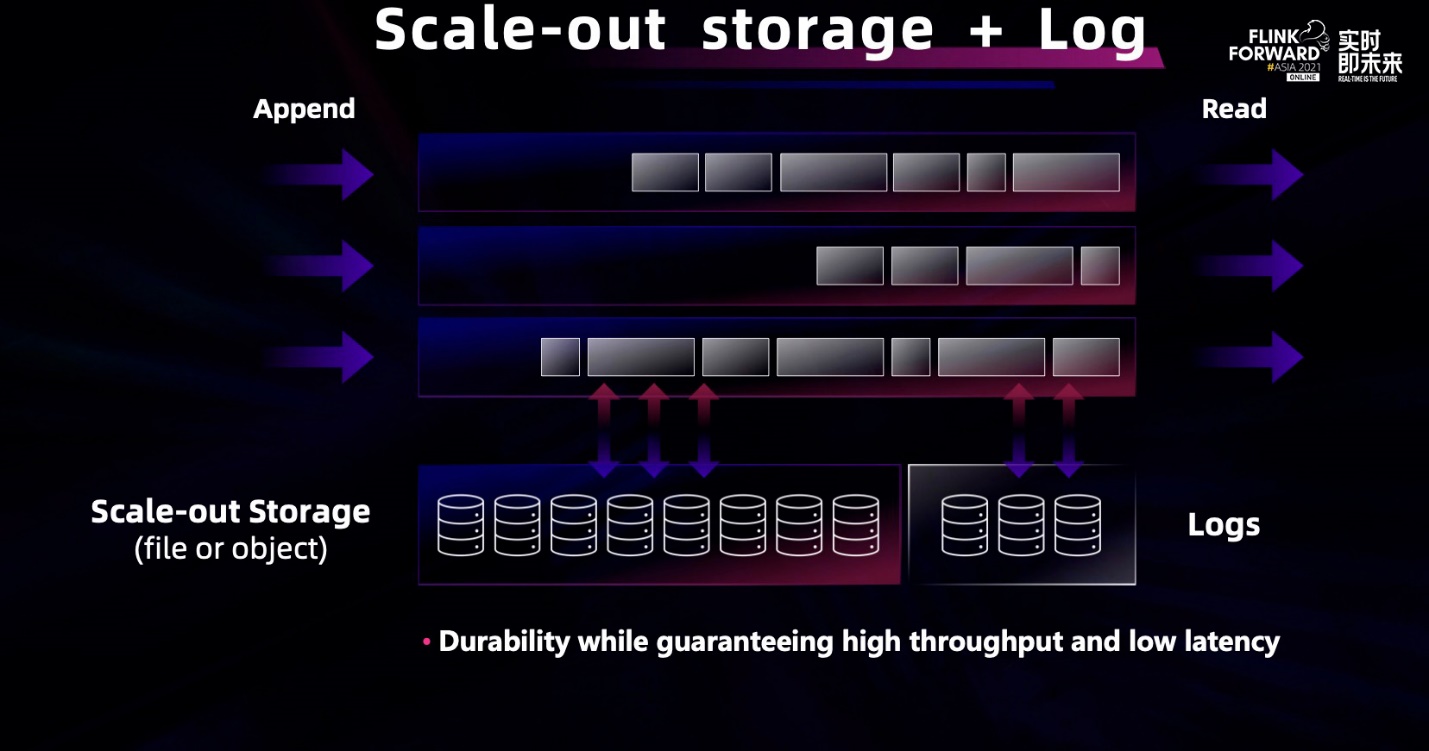

A tiered architecture can also handle multiple decentralized clients. We added distributed log storage to the stream storage to handle fault tolerance and recovery.
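The role of a log in fault tolerance and recovery can be illustrated with a small sketch. This is a generic write-ahead-log pattern under simplifying assumptions, not Pravega's actual code: `DurableLog` is a hypothetical in-memory stand-in for a replicated, durable log, and `StreamStore` is a hypothetical store that rebuilds its state by replaying that log.

```python
from typing import Dict, List, Tuple


class DurableLog:
    """Hypothetical stand-in for a replicated log that outlives the store."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, bytes]] = []

    def append(self, segment: str, data: bytes) -> int:
        self.entries.append((segment, data))
        return len(self.entries) - 1  # position of the entry in the log


class StreamStore:
    """Keeps segment contents in memory and rebuilds them from the log."""

    def __init__(self, log: DurableLog) -> None:
        self._log = log
        self.segments: Dict[str, bytearray] = {}
        # Recovery: replay every logged write in order.
        for segment, data in log.entries:
            self._apply(segment, data)

    def _apply(self, segment: str, data: bytes) -> None:
        self.segments.setdefault(segment, bytearray()).extend(data)

    def write(self, segment: str, data: bytes) -> int:
        # Log first, then update in-memory state, so a crash between the
        # two steps never loses an acknowledged write.
        position = self._log.append(segment, data)
        self._apply(segment, data)
        return position
```

Creating a new `StreamStore` over the same `DurableLog` simulates a restart after a crash: the new instance ends up with the same segment contents as the one that failed.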

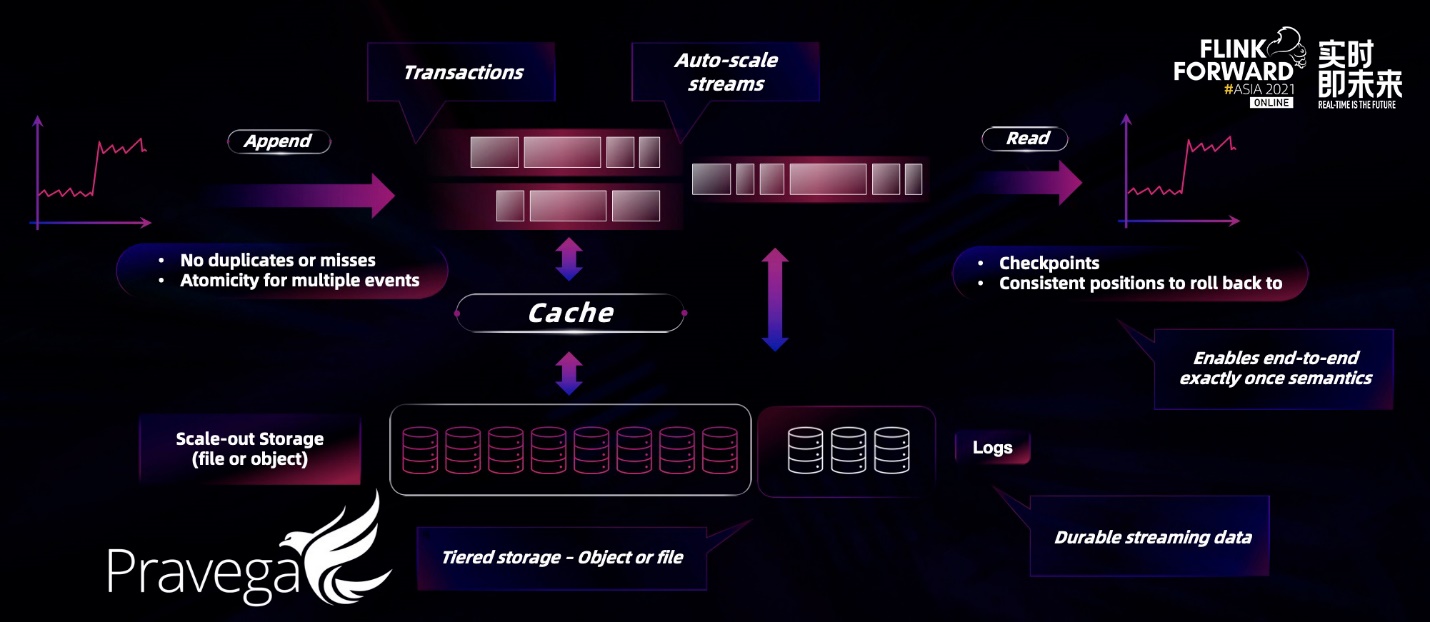

In real applications, the traffic of real-time workloads fluctuates over time. Dynamic scaling was introduced to cope with the peaks and valleys of data.

In the chart in the upper left corner, the horizontal axis represents time, and the vertical axis represents the application's data volume. The waveform is stable at first, and then the amount of data suddenly increases at a certain point. This is characteristic of time-dependent applications, such as morning and evening rush hours or the Double 11 shopping festival, when traffic floods in.

A complete stream storage system should be able to perceive changes in real-time traffic and allocate resources accordingly. When the amount of data increases, the underlying layer dynamically allocates more storage units to process it. In the cloud-native era, dynamic scaling is also very important for general-purpose storage systems.
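One way to picture rate-driven scaling is a policy that splits hot storage units and merges cold neighbors. The sketch below is an illustration under assumptions, not the policy of any specific product: it assumes each segment owns a routing-key range in [0, 1) and reports an observed event rate, and the names `Segment`, `rescale`, and `target_rate` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Segment:
    low: float    # inclusive start of the routing-key range
    high: float   # exclusive end of the routing-key range
    rate: float   # observed events per second


def rescale(segments: List[Segment], target_rate: float) -> List[Segment]:
    """Run one scaling round: split hot segments, merge cold neighbors."""
    out: List[Segment] = []
    for seg in sorted(segments, key=lambda s: s.low):
        if seg.rate > target_rate:
            # Hot: split the key range in half, assuming the load is even.
            mid = (seg.low + seg.high) / 2
            out.append(Segment(seg.low, mid, seg.rate / 2))
            out.append(Segment(mid, seg.high, seg.rate / 2))
        elif (out and out[-1].high == seg.low
              and out[-1].rate + seg.rate <= target_rate / 2):
            # Cold: merge with the adjacent cold segment on the left.
            prev = out.pop()
            out.append(Segment(prev.low, seg.high, prev.rate + seg.rate))
        else:
            out.append(seg)
    return out
```

A single round may leave a segment above `target_rate`. Calling `rescale` again after new rates are observed continues the process, which mirrors how a system would react gradually to a traffic peak.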

Pravega is designed to be a real-time storage solution for streams:

Pravega's independent scaling mechanism allows producers and consumers to scale without affecting each other. The data processing pipeline becomes elastic and can respond to changes in data in a timely manner.

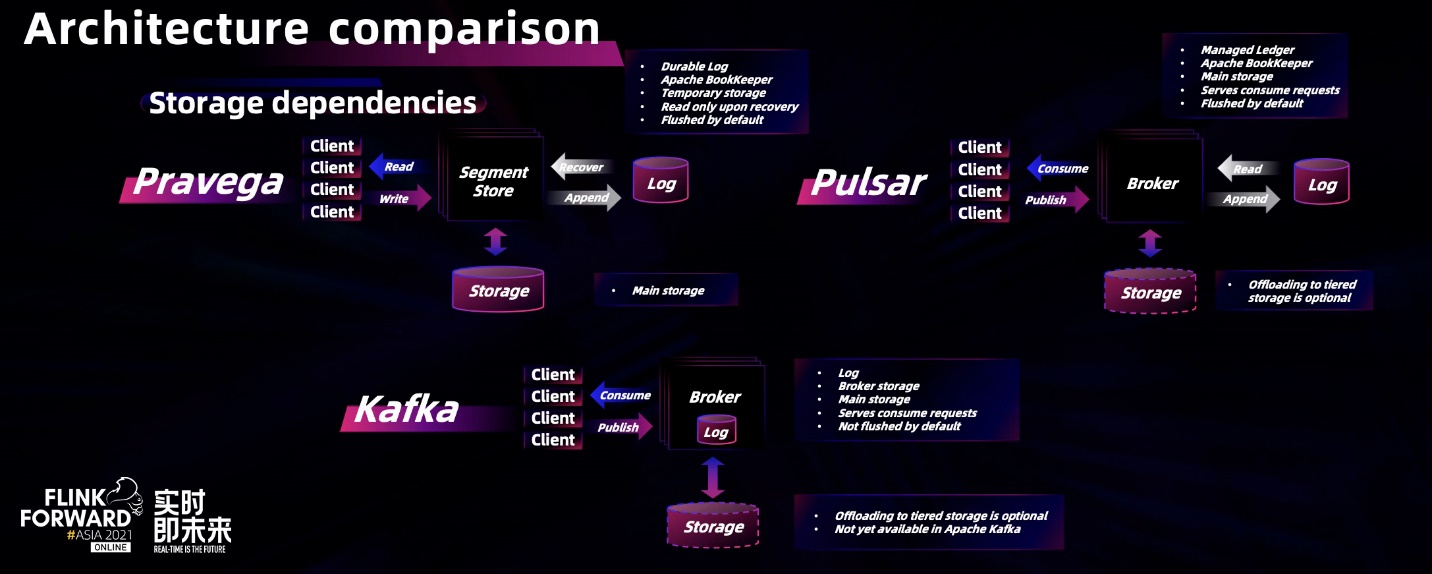

As shown in the figure, Pravega looks at streaming data from a storage perspective, while Kafka, positioned as a messaging system, looks at it from a message perspective. The two positions differ. A messaging system focuses on data transmission and on the production and consumption process. Pravega is an enterprise-grade distributed stream storage product. It satisfies the properties of streams and also supports the properties of data storage, such as persistence, security, reliability, and isolation.

Different perspectives lead to different architectures. Since messaging systems are not designed for long-term data storage, they need additional tasks and components to move data elsewhere for persistence. Pravega, positioned as stream storage, solves the data retention problem directly in primary storage. The core engine of Pravega's data storage is called the segment store. It connects directly to distributed storage components such as HDFS or S3 for long-term, persistent storage. By relying on the mature architecture and scalability of the underlying storage components, Pravega naturally gains large-scale storage and scalability on the storage side.
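The idea of keeping retention inside the storage system can be sketched as a segment with two tiers behind a single read interface. This is a simplified illustration, not the segment store's real design: `TieredSegment`, `tier_down`, and the two in-memory buffers (standing in for a low-latency tier and for long-term storage such as HDFS or S3) are assumptions made for the example.

```python
class TieredSegment:
    """One logical byte sequence backed by a recent tier and a long-term tier."""

    def __init__(self) -> None:
        self._long_term = bytearray()  # stand-in for HDFS/S3 (assumption)
        self._recent = bytearray()     # stand-in for the low-latency tier

    def append(self, data: bytes) -> int:
        """Append to the recent tier and return the logical start offset."""
        offset = len(self._long_term) + len(self._recent)
        self._recent.extend(data)
        return offset

    def tier_down(self) -> None:
        """Move everything in the recent tier to long-term storage."""
        self._long_term.extend(self._recent)
        self._recent.clear()

    def read(self, offset: int, length: int) -> bytes:
        """Read by logical offset (>= 0); the caller never sees the boundary."""
        end = offset + length
        boundary = len(self._long_term)
        old_part = bytes(self._long_term[offset:end])
        new_part = bytes(self._recent[max(offset - boundary, 0):
                                      max(end - boundary, 0)])
        return old_part + new_part
```

Because offsets are logical, a reader that starts in historical data simply keeps reading into the recent tail, which is the property that makes consistent access to historical and real-time data possible.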

Another architectural difference comes from client-side optimization. In a typical IoT scenario, the number of writers at the edge is usually large, while each write may carry only a small amount of data. Pravega's design takes this scenario fully into account. It batches twice, once on the client and once in the segment store, merging small writes from multiple clients into batched writes that are friendly to the underlying disk. This significantly improves the performance of highly concurrent writes while keeping latency low.
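The server-side half of this idea, merging small writes from many writers into one disk-friendly write, can be sketched as a simple framing scheme. The layout below (a 2-byte writer ID and a 4-byte length before each payload) and the names `pack_frame` and `unpack_frame` are assumptions for illustration and do not describe Pravega's wire or storage format.

```python
import struct
from typing import Iterable, List, Tuple

# Per-entry header: writer ID (unsigned 16-bit) and payload length
# (unsigned 32-bit), big-endian with no padding, 6 bytes in total.
HEADER = struct.Struct(">HI")


def pack_frame(appends: Iterable[Tuple[int, bytes]]) -> bytes:
    """Combine many small (writer_id, payload) appends into one buffer."""
    parts: List[bytes] = []
    for writer_id, payload in appends:
        parts.append(HEADER.pack(writer_id, len(payload)))
        parts.append(payload)
    return b"".join(parts)


def unpack_frame(frame: bytes) -> List[Tuple[int, bytes]]:
    """Recover the individual appends, in their original order."""
    out: List[Tuple[int, bytes]] = []
    pos = 0
    while pos < len(frame):
        writer_id, length = HEADER.unpack_from(frame, pos)
        pos += HEADER.size
        out.append((writer_id, frame[pos:pos + length]))
        pos += length
    return out
```

The storage layer then issues a single write for the whole frame instead of one write per client, and the header keeps enough information to attribute each payload to its writer afterwards.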

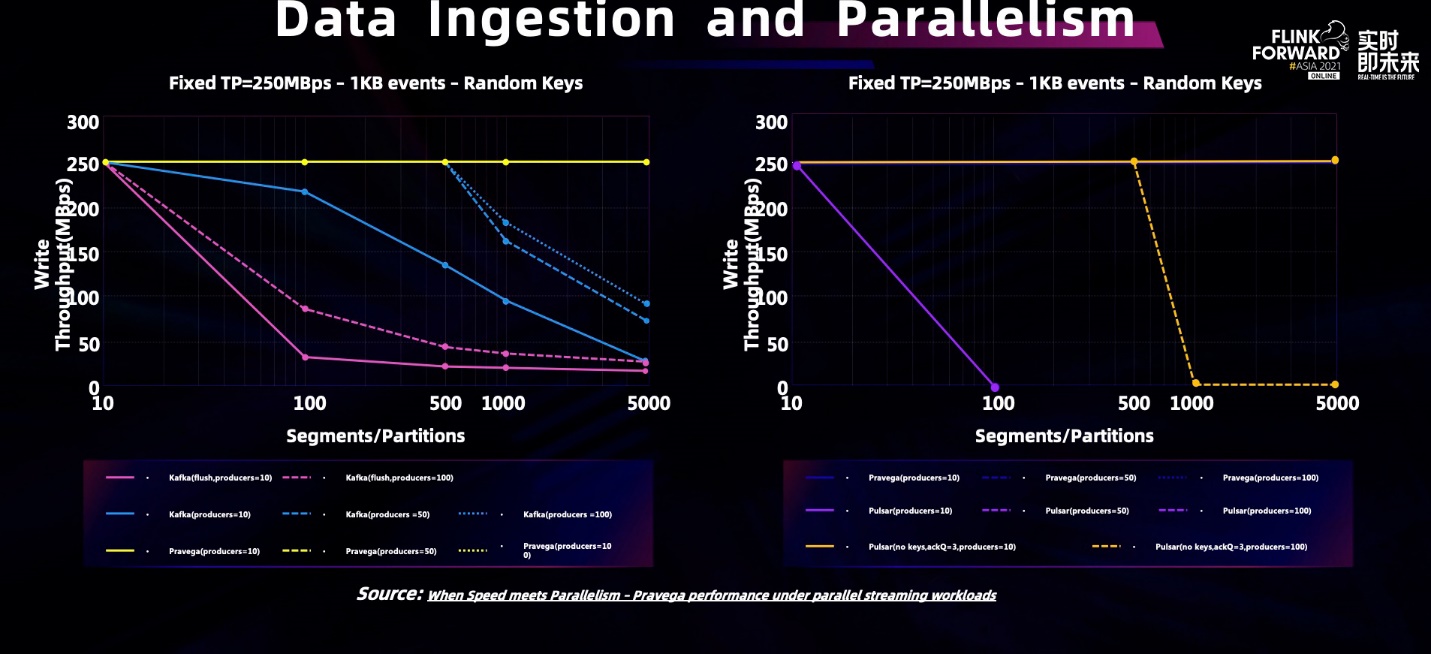

This design has a corresponding impact on performance. The Pravega team tested Pravega against Kafka and Pulsar under the same highly concurrent load. The following figure shows the experimental results. The tests used a highly parallel load, with up to 100 writers and 5,000 segments per stream/topic. Pravega maintains a rate of 250 MB/s in all cases, which is also the maximum disk write rate in the test cloud environment. As shown in the left and right charts, the performance of Kafka and Pulsar drops significantly as the number of partitions and producers increases, degrading progressively under large-scale concurrency.

The experimental process and environment are fully disclosed in this blog. The experimental code is also fully open source and has been contributed to openmessaging-benchmark. If you are interested, you can try to reproduce the results.

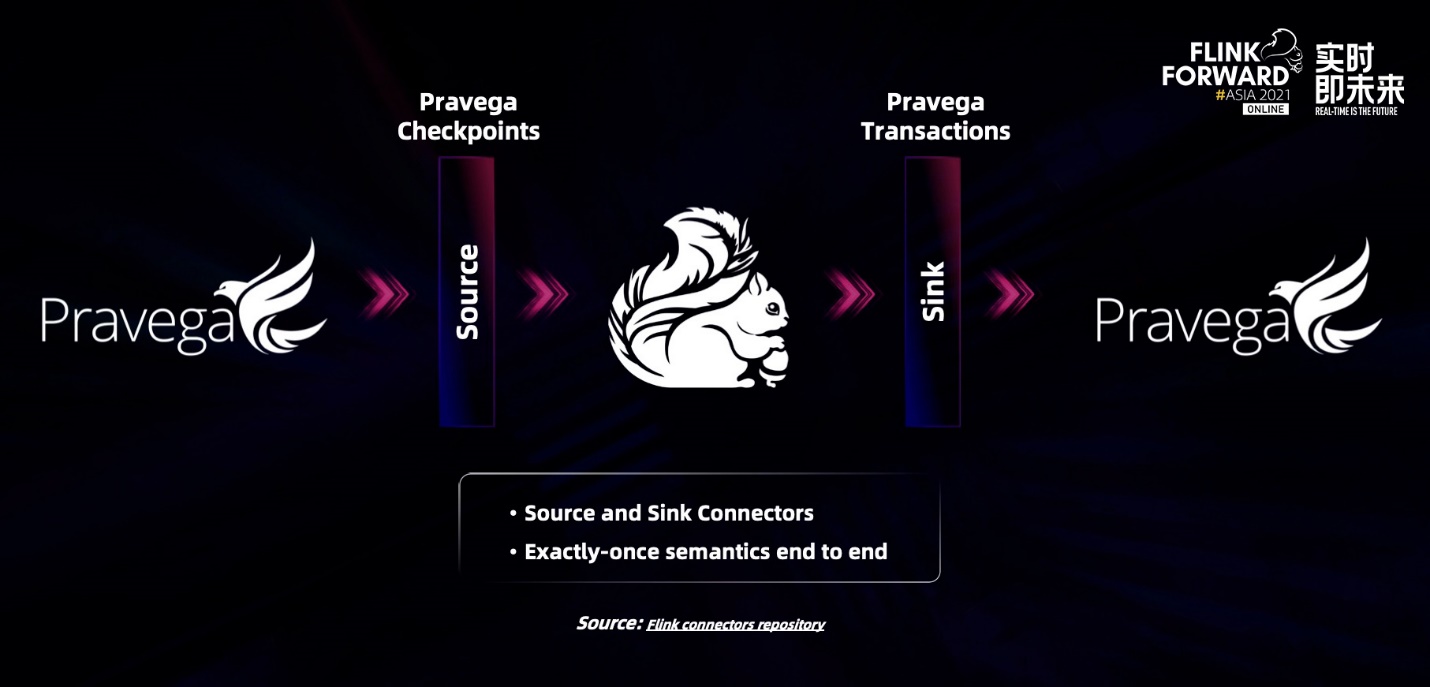

The preceding sections introduced the changes and architectural features of Pravega's stream storage from the storage perspective. Next, let's look at how to consume the data in stream storage: how does Pravega work with Flink to build an end-to-end big data pipeline?

This big data architecture uses Apache Flink as the computing engine, unifying batch and stream processing through a single model and API. Pravega, as the storage engine, provides a unified abstraction for streaming data storage, enabling consistent access to historical and real-time data. Together they form a closed loop from storage to computing that can handle both high-throughput historical data and low-latency real-time data.

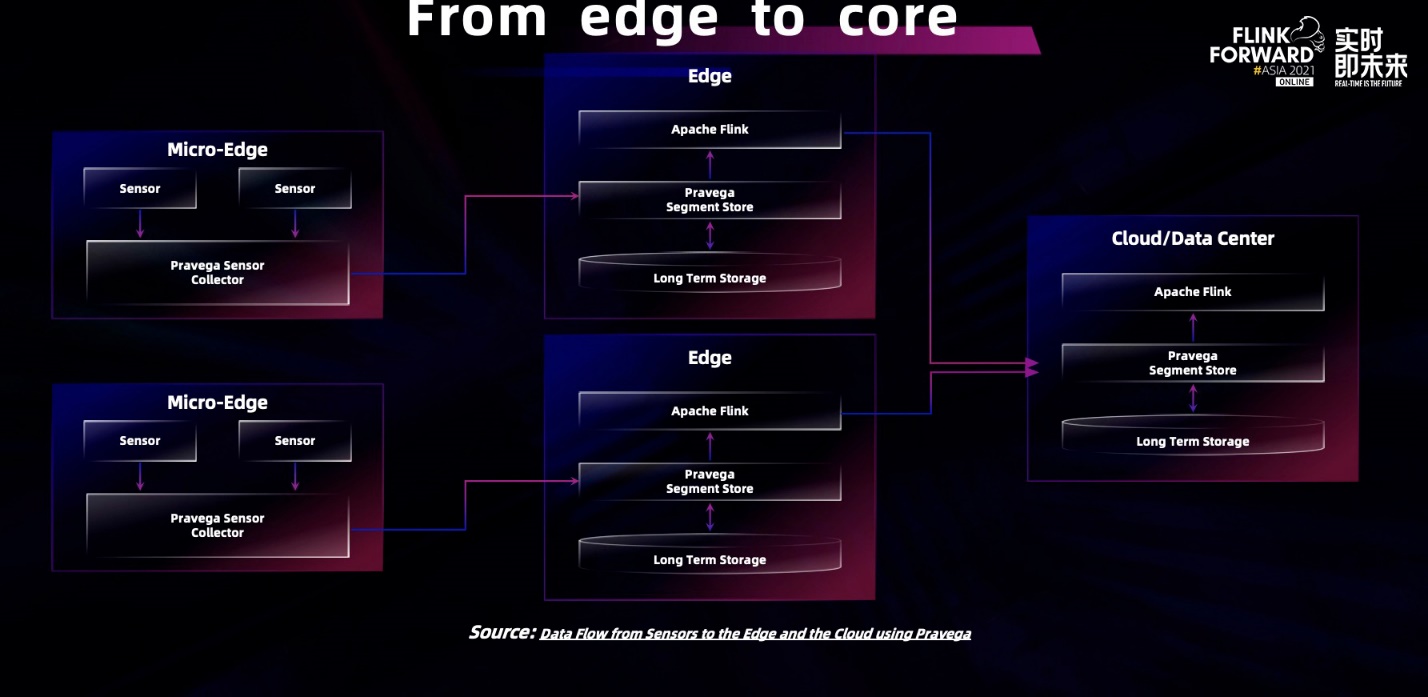

Furthermore, for edge computing scenarios, Pravega is compatible with commonly used messaging protocols and implements the corresponding data receivers. It can serve as the conduit of a big data stream processing pipeline, collecting data and sending it to downstream computing engines to complete the data processing pipeline from the edge to the data center. In this way, enterprise users can easily build a big data processing pipeline, which is the purpose behind our development of stream storage products.

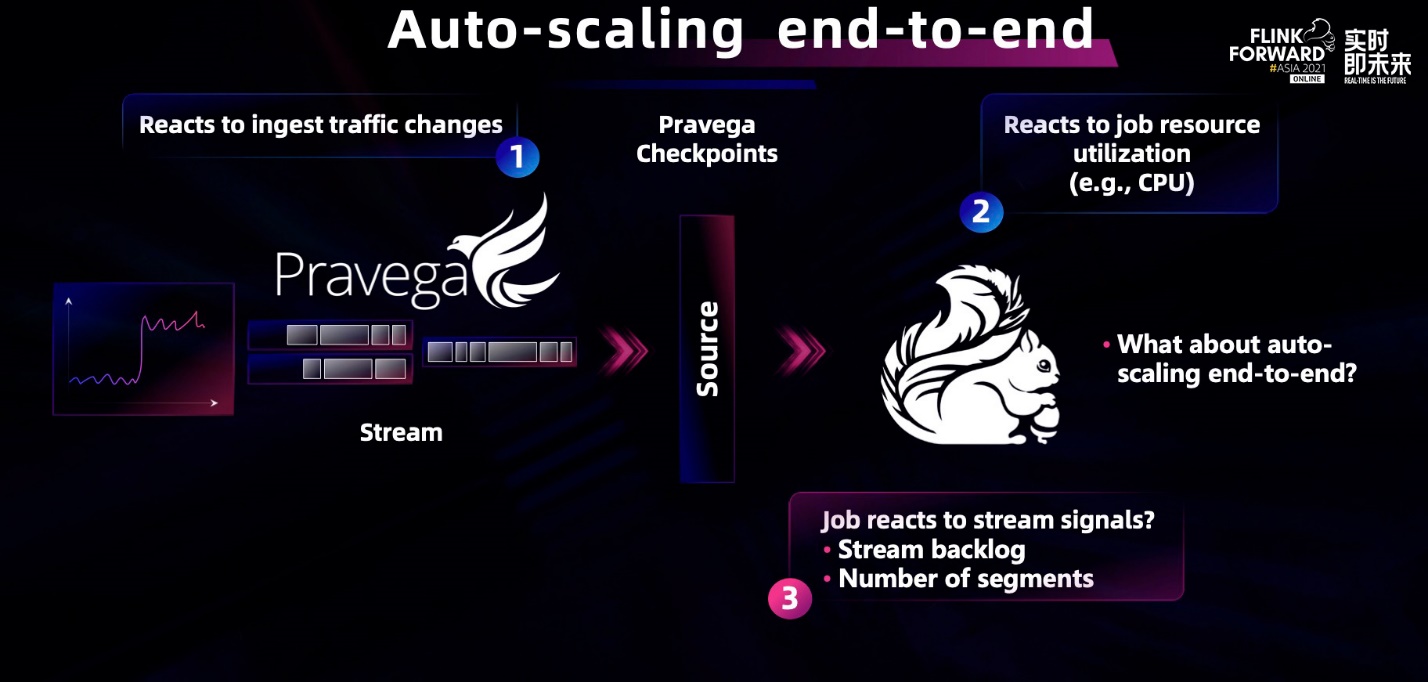

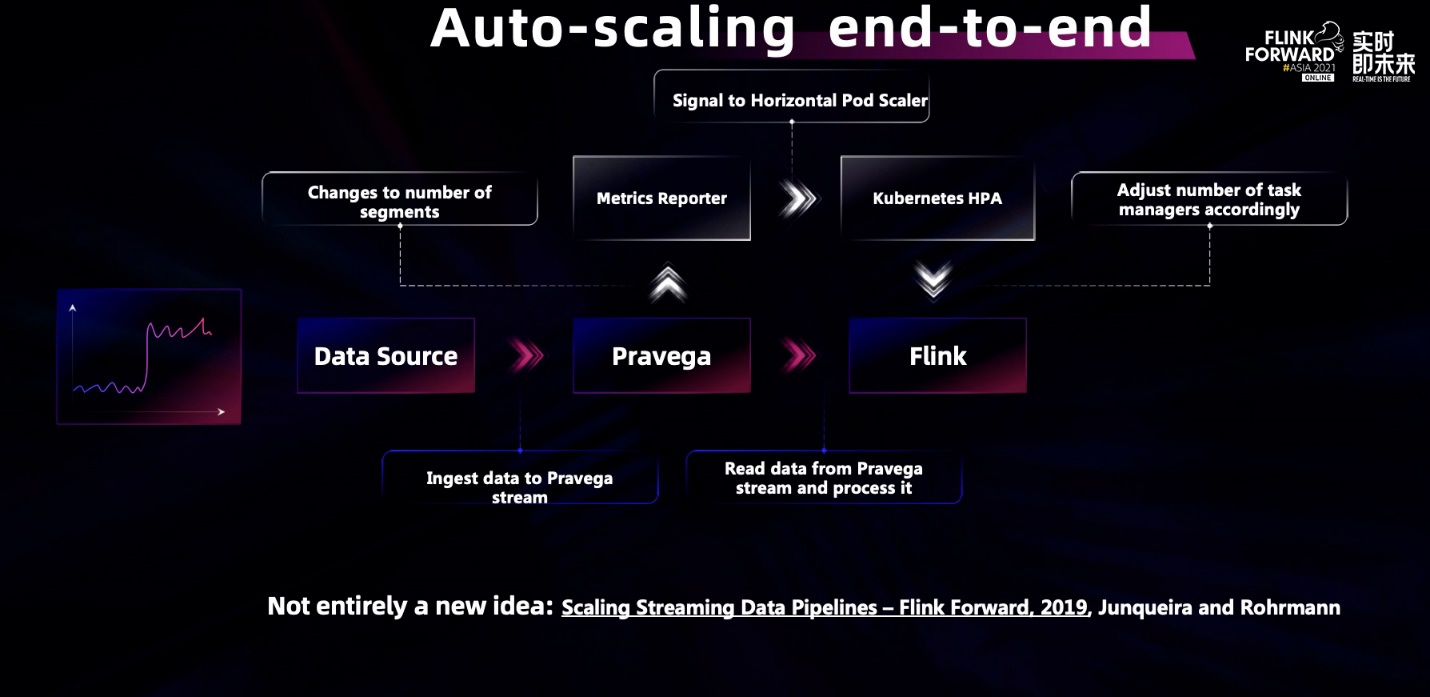

We believe dynamic scaling is very important in the cloud-native era. It reduces the difficulty of application development and makes more efficient use of the underlying hardware resources. Pravega's dynamic scaling was introduced earlier, and the new version of Flink also supports dynamic scaling. So, what if we link the two independent scaling mechanisms and extend dynamic scaling to the entire pipeline?

We worked with the community to complete the full scaling link and bring end-to-end auto-scaling to all customers. The preceding figure is a schematic diagram of the end-to-end auto-scaling concept. When data ingestion increases, Pravega auto-scales and allocates more segments to handle the pressure on the storage side. Flink, using metrics and the Kubernetes HPA, can likewise allocate more parallel computing nodes to the corresponding tasks to cope with changes in data traffic. This new generation of cloud-native capability is critical to enterprise users.
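For the compute side, the Kubernetes Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance of 1. The sketch below reproduces only that arithmetic. Which metric drives the joint Pravega and Flink solution is not stated in the talk, so the per-replica event rate used in the example values is an assumption, as are the function name and the default bounds.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule, clamped to bounds."""
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, with 2 replicas each observing 200 events per second against a target of 100, the rule asks for 4 replicas. When the observed value falls back to 20 with 4 replicas running, it asks for 1.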

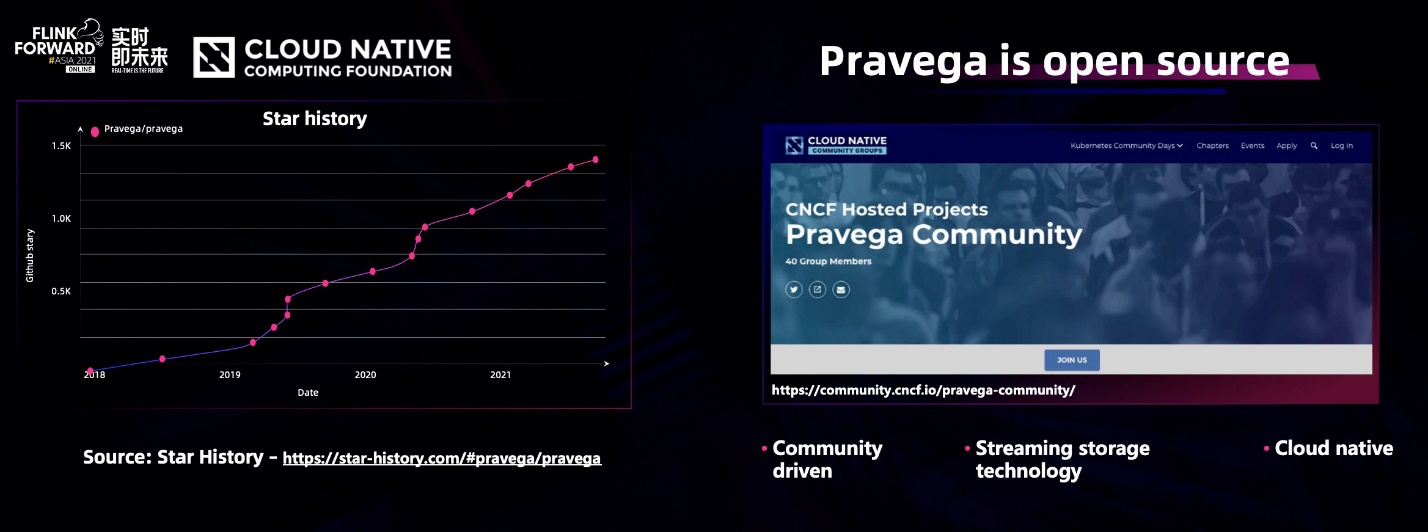

At Flink Forward Asia 2021, the Pravega and Flink communities also jointly released a whitepaper on a database synchronization solution. Pravega, as a CNCF project, is also evolving and will become more open.

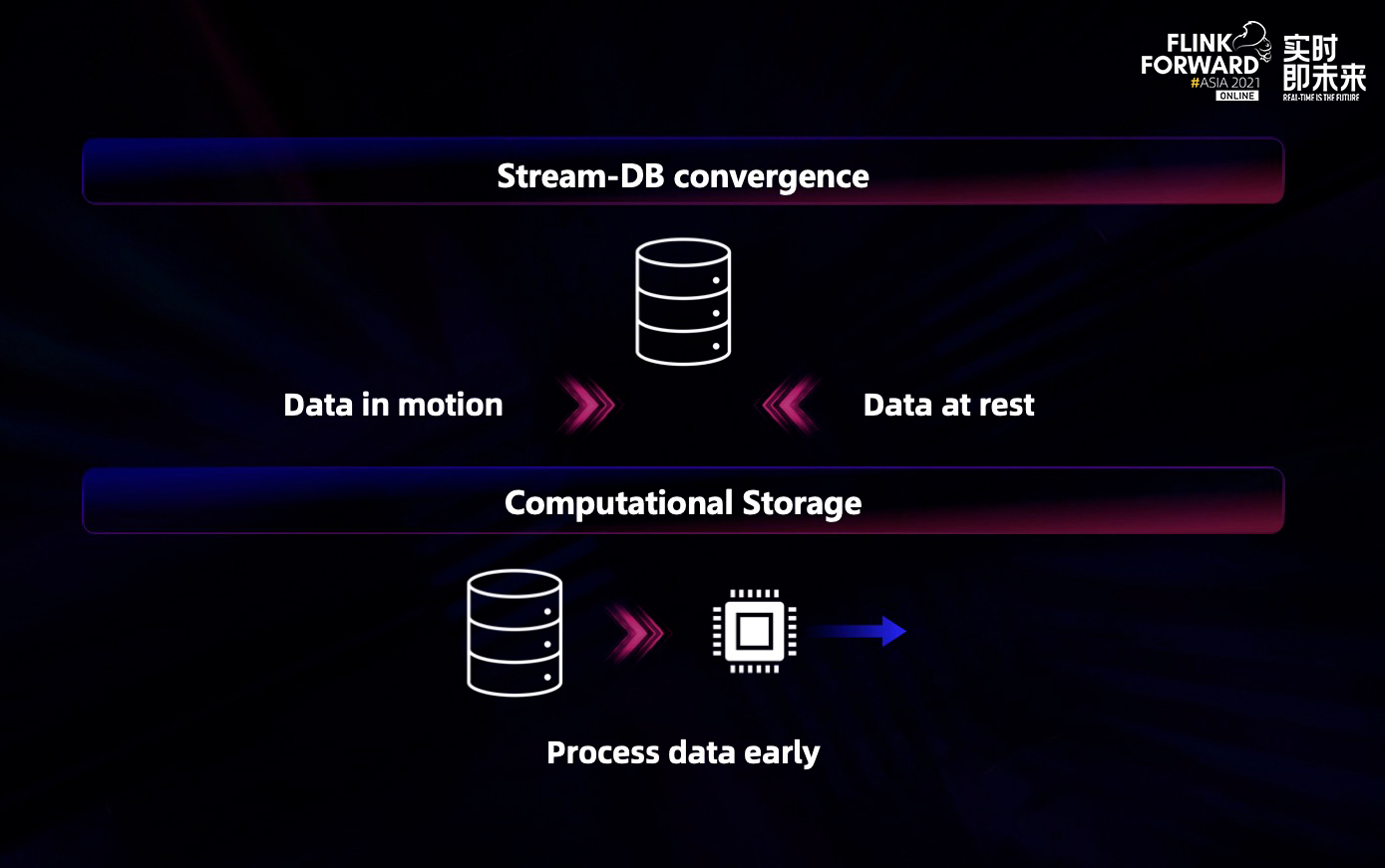

As technology continues to develop, more streaming engines and database engines are converging. Looking ahead, the boundaries between storage and computing and between streams and tables are gradually blurring. Pravega's storage design, which integrates batch and stream, also coincides with a very important future development direction for Flink. Pravega will actively communicate and cooperate with open-source communities related to data lakes, including Flink, to build a friendlier and more powerful next-generation data pipeline for enterprise users.
