The following content is based on a speech transcript. This article covers the Alibaba Risk Control Brain, the nearline engine, and the offline engine.

Alibaba's risk control brain covers both finance, where the main business is Alipay, and several other industries, such as new retail, mobile navigation and directions, and mass entertainment. Our team is responsible for these other industries. The Alibaba risk control brain is complex and can be interpreted in many different ways, but in essence it represents several different efforts at Alibaba Group.

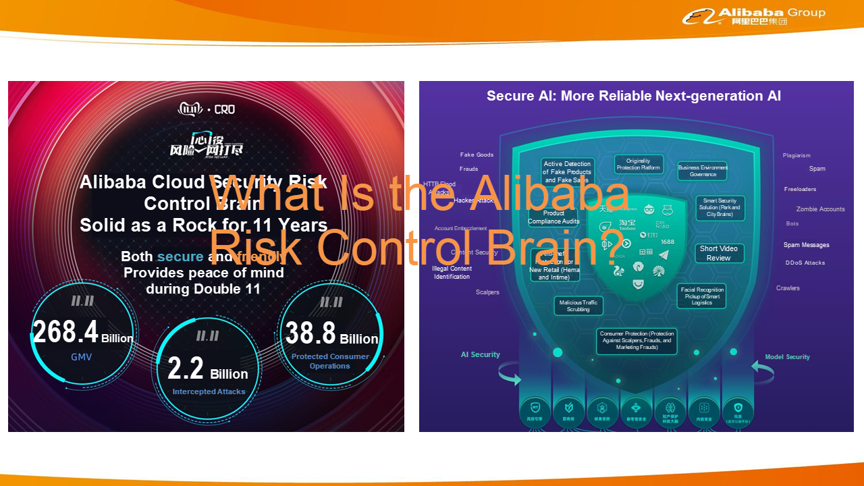

First, the Alibaba risk control brain adopts a "Rich Mid-end, Light Front-end" strategy. Because Alibaba risk control manages a large number of risk businesses across complex fields, different fields and risk control scenarios have their own interactions and consoles. However, the underlying engine must be centralized: the risk control engine performs unified computing and processing. Second, the Alibaba risk control brain represents a high level of intelligence. Large numbers of deep learning and unsupervised learning models will be launched in the future, and prevention and control policies and methods will become more intelligent. In the following figure, the section on the right shows the main businesses and risk control scenarios covered by Alibaba risk control, such as hacker attacks, consumer protection, and merchant protection.

The section on the left of the figure below shows Alibaba risk control data from last year's Double 11 Shopping Festival, during which it protected about 38.8 billion consumer operations and blocked about 2.2 billion malicious attacks.

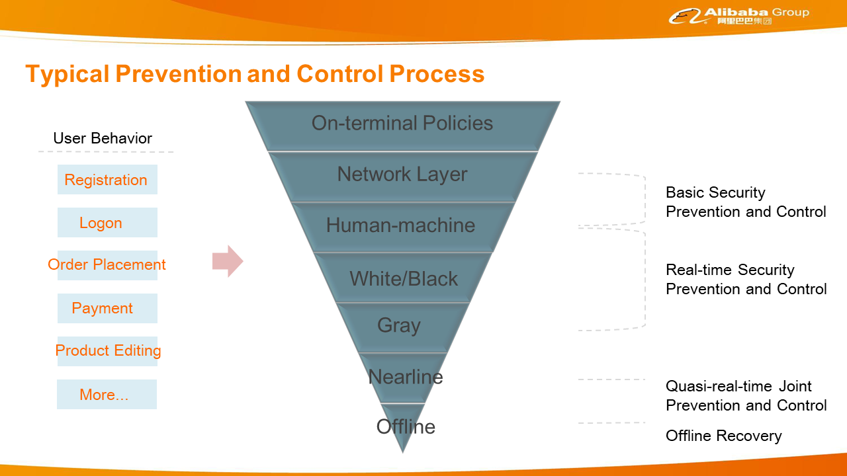

A large number of operations take place in the background when a user accesses Alibaba businesses through an Alibaba mobile app or website. These operations may pass through a seven-layer prevention and control process, as shown in the following figure. The first layer is on-terminal prevention and control, mainly at the application layer. Application hardening and code obfuscation happen at this layer, which also applies on-terminal security policies. The second layer is the network layer, where traffic scrubbing and traffic protection are performed.

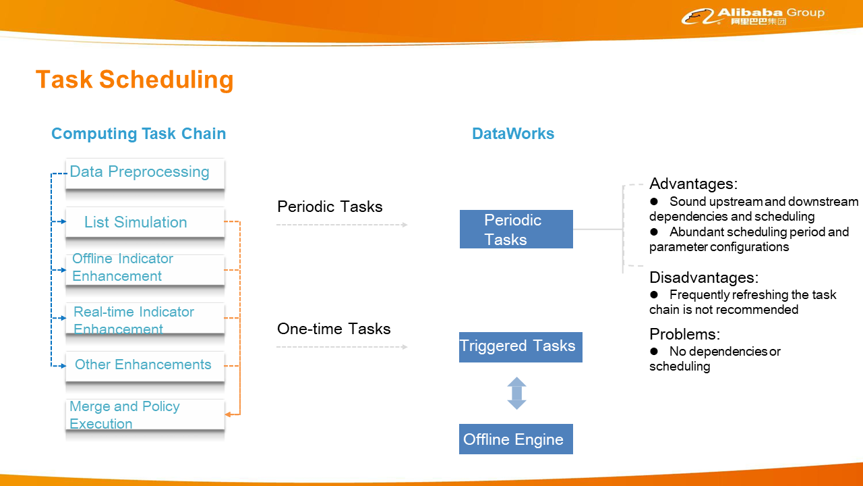

Nearline and offline operations are not mandatory for all businesses. This largely depends on the exact business requirements.

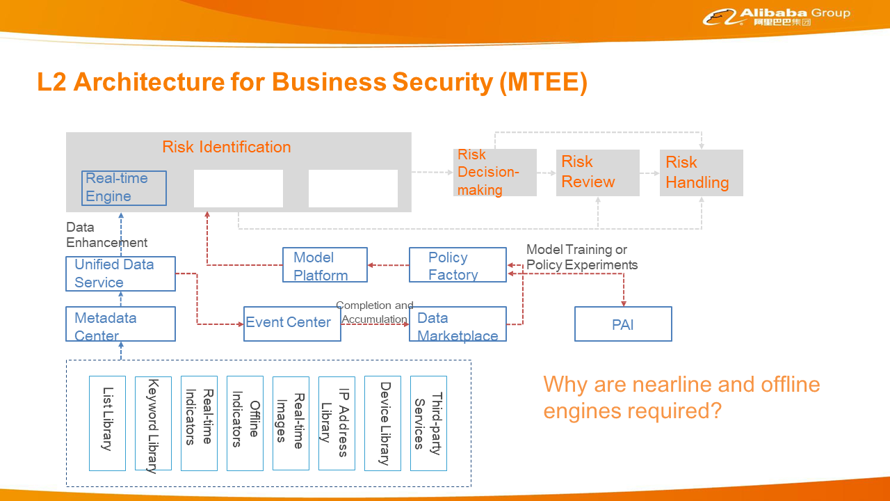

As shown below, business security prevention and control can be divided into risk identification, risk decision-making, risk review, and risk handling. Risk identification determines whether an operation is risky. When a risky user operation is detected, and the business is simple and the detection accuracy is high, the operation can go directly to the risk handling node. If the identification result is inconclusive or its accuracy is not high, the operation goes to the risk review node for further machine or manual review, followed by risk handling. Some complex businesses require a further comprehensive determination and go to the risk decision-making node. For example, certain risks should be penalized only once within a period, no matter how many times they are triggered. Because the same risk may be identified many times on different nodes or in different operations, such results need to be deduplicated centrally during risk decision-making.
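The routing and deduplication rules described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Alibaba's implementation: the `Detection` record, the 0.95 confidence threshold, and the `complex_business` flag are hypothetical stand-ins for whatever signals the real nodes use.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical output of the risk identification node."""
    user_id: str
    risk_type: str
    confidence: float  # estimated identification accuracy, 0.0 to 1.0
    timestamp: int     # seconds since epoch


HANDLE_THRESHOLD = 0.95  # hypothetical cut-off for "accuracy is high"


def route(det: Detection, complex_business: bool) -> str:
    """Choose the next node for an identified risky operation."""
    if complex_business:
        return "decision"   # needs a comprehensive determination first
    if det.confidence >= HANDLE_THRESHOLD:
        return "handling"   # simple business, accurate detection
    return "review"         # inconclusive: machine or manual review


class DecisionNode:
    """Penalize each (user, risk type) pair at most once per period."""

    def __init__(self, period_seconds: int):
        self.period = period_seconds
        self.last_penalized: dict[tuple[str, str], int] = {}

    def decide(self, det: Detection) -> bool:
        """Return True if this detection should be penalized."""
        key = (det.user_id, det.risk_type)
        last = self.last_penalized.get(key)
        if last is not None and det.timestamp - last < self.period:
            return False  # already penalized in this period: deduplicate
        self.last_penalized[key] = det.timestamp
        return True
```

For example, with `DecisionNode(period_seconds=86400)`, three detections of the same risk for the same user within one day produce a single penalty, regardless of which node or operation triggered them.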

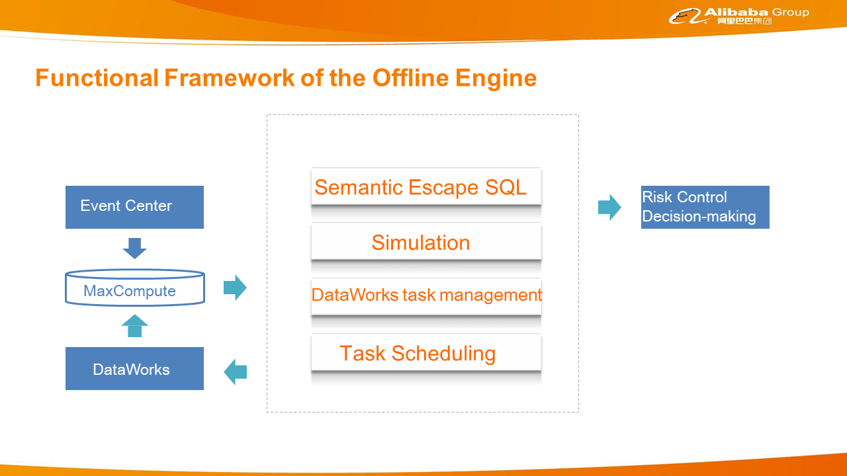

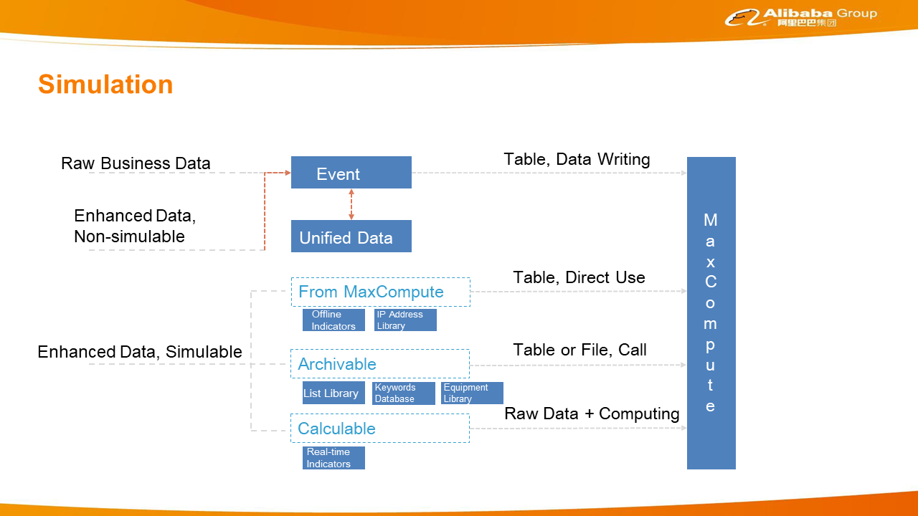

Risk identification is the most complex process. This node uses business data transmitted from front-end business systems, such as the Taobao and Tmall apps. However, business data alone is far from enough for risk prevention and control. Alibaba has also developed many big data applications, such as the list library, keyword library, indicators, real-time profiles, and IP library. This data is defined and managed centrally by the metadata center and ultimately enhances risk identification through a unified data service. The data also supports a fast experiment-and-launch process for policies and models through the event center, policy factory, and model platform. The event center performs data completion in the real-time or nearline engine and writes the results to MaxCompute. The policy factory connects to PAI: data prepared in the policy factory is sent to PAI for model training, and the resulting policies and models are then deployed online through the policy factory. Together, these form a fast loop of data preparation, training, and online deployment.
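The idea that each piece of data is declared once in the metadata center and served through a unified data service can be illustrated with a minimal Python sketch. Everything here is hypothetical: the field names, the in-memory `BLOCK_LIST` and `IP_REGION` tables, and the `enrich` function stand in for the real list library, IP library, and data service, whose interfaces the article does not describe.

```python
from typing import Any, Callable

# Hypothetical in-memory stand-ins for the list library and the IP library.
BLOCK_LIST = {"user-123"}
IP_REGION = {"203.0.113.7": "region-a"}

# Hypothetical metadata center: every derived field is declared once,
# together with the raw event field it reads and the lookup that computes it.
METADATA: dict[str, tuple[str, Callable[[Any], Any]]] = {
    "user_in_block_list": ("user_id", lambda uid: uid in BLOCK_LIST),
    "ip_region": ("ip", lambda ip: IP_REGION.get(ip, "unknown")),
}


def enrich(event: dict[str, Any], fields: list[str]) -> dict[str, Any]:
    """Unified data service sketch: add the requested derived fields to an event."""
    enriched = dict(event)  # copy so the caller's event is not modified
    for name in fields:
        source_field, lookup = METADATA[name]  # KeyError if the field is undeclared
        enriched[name] = lookup(event.get(source_field))
    return enriched
```

Declaring each derived field in one place means every policy or model that requests `ip_region` receives the same definition, which is what makes centrally managed metadata useful across experiments and launches.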

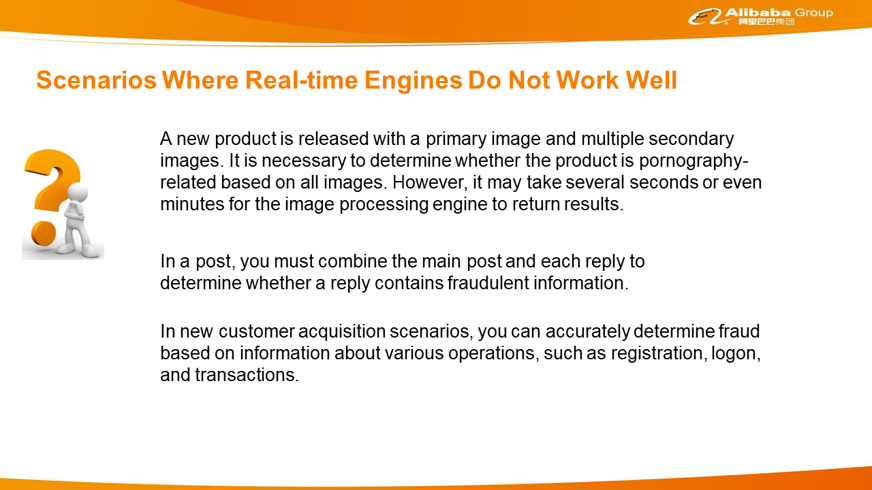

Before building the nearline engine, Alibaba deliberated for a long time. The boundary between the nearline engine and the real-time engine is blurry, and the two are sometimes hard to distinguish. In most cases, anything you can do with the nearline engine can also be done with the real-time engine. So why does Alibaba need a nearline engine? Alibaba found that many scenarios require a great deal of customized development on the real-time engine, and dedicated developers must be assigned to implement them. As models are adopted at scale, such scenarios are becoming more common, so better methods are needed.

For example, when a new product is released, it is necessary to determine comprehensively, based on the product images and other product information, whether the product contains illicit or inappropriate content. Most companies process images with an image recognition engine, but its response time is inconsistent: sometimes fast and sometimes slow. The real-time engine cannot afford to wait for these responses, so a lot of customized development is required to make the whole process asynchronous. Other scenarios are difficult as well. Take a post with many replies, some of which may be junk or contain fraudulent information. In most cases, fraud cannot be determined from a single message or reply; instead, the entire thread from the post to its replies must be analyzed. This means that many messages spanning a long period need to be processed together, which is difficult for a real-time engine because it is triggered by individual event messages. There are also some very complex business scenarios. For example, in new customer acquisition scenarios, you need to detect fraud such as bonus hunting based on registration, logon, and transaction behaviors. Many operations need to be combined for a comprehensive determination, which is not easy for a real-time engine. In the end, Alibaba decided to develop a nearline engine to abstract and handle such problems better.
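The image recognition example shows why asynchronous processing matters. The following Python sketch uses `asyncio`: all recognition requests are issued concurrently and each verdict is recorded as soon as its response arrives, so a slow response does not hold up fast ones. `recognize_image` is a hypothetical stand-in that simulates variable latency with `asyncio.sleep`; it is not a real recognition API, and its string-prefix rule merely stands in for a model.

```python
import asyncio


async def recognize_image(product_id: str, latency: float) -> bool:
    """Hypothetical image recognition call whose latency varies per request."""
    await asyncio.sleep(latency)
    return product_id.startswith("illicit")  # toy rule standing in for a model


async def check_product(product_id: str, latency: float) -> tuple[str, str]:
    flagged = await recognize_image(product_id, latency)
    return product_id, ("reject" if flagged else "pass")


async def nearline_review(products: list[tuple[str, float]]) -> list[tuple[str, str]]:
    """Issue all requests concurrently and record each verdict as soon as it arrives."""
    pending = [check_product(pid, latency) for pid, latency in products]
    verdicts = []
    for next_done in asyncio.as_completed(pending):
        verdicts.append(await next_done)  # fast responses are not held up by slow ones
    return verdicts


if __name__ == "__main__":
    print(asyncio.run(nearline_review([("illicit-1", 0.2), ("ok-2", 0.01)])))
```

The design choice being illustrated is that the caller never blocks on the slowest dependency; results are consumed in completion order rather than request order.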

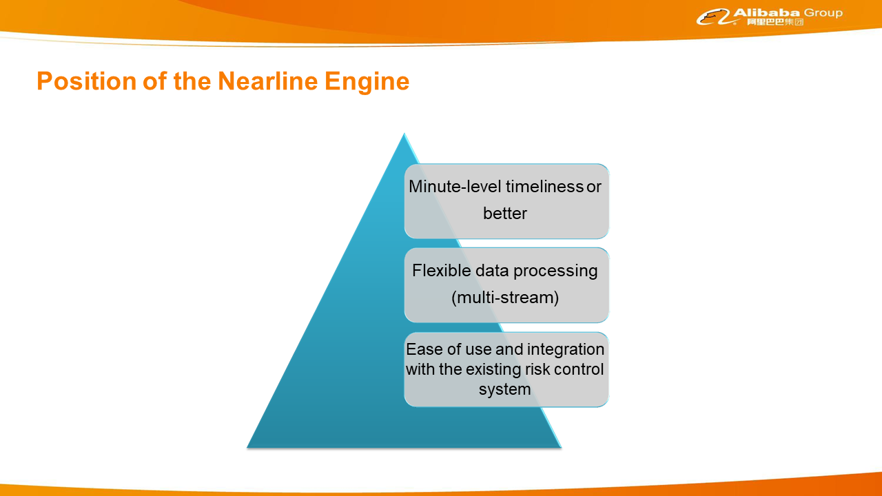

Based on Alibaba's scenarios, the nearline engine must meet at least three requirements, as shown in the following figure. First, it must be timely enough: the allowed latency cannot be too long. If no risk result is returned within the expected period, the business side treats the operation as normal, so abnormal operations are easily missed. Second, it must support comprehensive processing of multiple events, which is called multi-stream joint processing in stream computing. It also requires highly flexible data processing capabilities, because most algorithms involve a lot of custom data processing that the algorithm staff must implement themselves. Third, the nearline engine must integrate seamlessly with Alibaba's existing risk control system. That system is very large and involves many upstream and downstream nodes; if the nearline engine were isolated from it, the same work would inevitably be done multiple times.
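Multi-stream joint processing and the latency requirement can be combined in one small sketch. The Python class below buffers registration, logon, and transaction events per user, returns a verdict once all three have arrived, and forces a verdict when a time window expires so that no result arrives later than the business expects. The class name, the 600-second window, and the 60-second coupon rule are hypothetical illustrations of a bonus-hunting check, not Alibaba's actual logic; on a real stream computing platform this state would be managed by the platform rather than kept in Python dictionaries.

```python
from collections import defaultdict
from typing import Optional


class BonusHuntingJoin:
    """Toy join of registration, logon, and transaction streams by user ID."""

    REQUIRED = {"register", "logon", "transaction"}

    def __init__(self, window_seconds: int = 600):
        self.window = window_seconds  # hypothetical upper bound on result latency
        self.events: dict[str, dict[str, dict]] = defaultdict(dict)
        self.opened_at: dict[str, int] = {}

    def on_event(self, user_id: str, kind: str, ts: int, **attrs) -> Optional[str]:
        """Buffer one event; return a verdict once all three streams have arrived."""
        self.opened_at.setdefault(user_id, ts)
        self.events[user_id][kind] = {"ts": ts, **attrs}
        if self.REQUIRED.issubset(self.events[user_id]):
            return self._close(user_id)
        return None

    def expire(self, now: int) -> dict[str, str]:
        """Force verdicts for users whose window has elapsed, using partial data."""
        due = [u for u, start in self.opened_at.items() if now - start >= self.window]
        return {u: self._close(u) for u in due}

    def _close(self, user_id: str) -> str:
        seen = self.events.pop(user_id)
        del self.opened_at[user_id]
        reg, txn = seen.get("register"), seen.get("transaction")
        # Hypothetical rule: a new-user coupon redeemed within 60 s of
        # registration is treated as suspected bonus hunting.
        if reg and txn and txn.get("coupon") and txn["ts"] - reg["ts"] < 60:
            return "risky"
        return "normal"
```

The `expire` method is what addresses the timeliness requirement: a user whose streams never complete still receives a verdict once the window closes, instead of silently being treated as normal.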

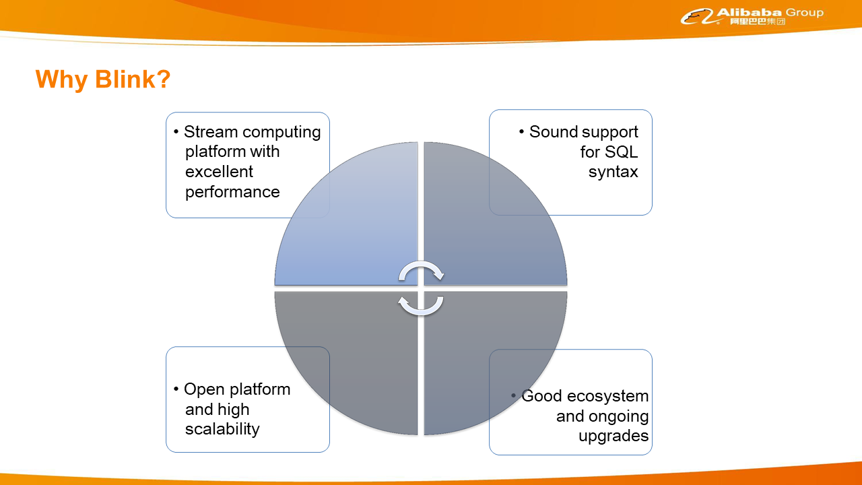

Due to the rapid development of stream computing, Alibaba chose to use a stream-computing platform as the underlying core of the nearline engine. After comparing several popular stream computing platforms on the market, Alibaba finally chose Blink. The following figure shows why Alibaba chose Blink. Blink is the customized Flink version used within Alibaba. Alibaba has already built this stream computing platform, which provides excellent performance. The platform is open, scalable, and cost-effective. In addition, Blink supports a complete set of SQL semantics, which is extremely important. Alibaba advises business units to use SQL as much as possible because it is easy to use and cost-effective. The Blink team provides continuous SQL performance optimization, which also benefits SQL users.

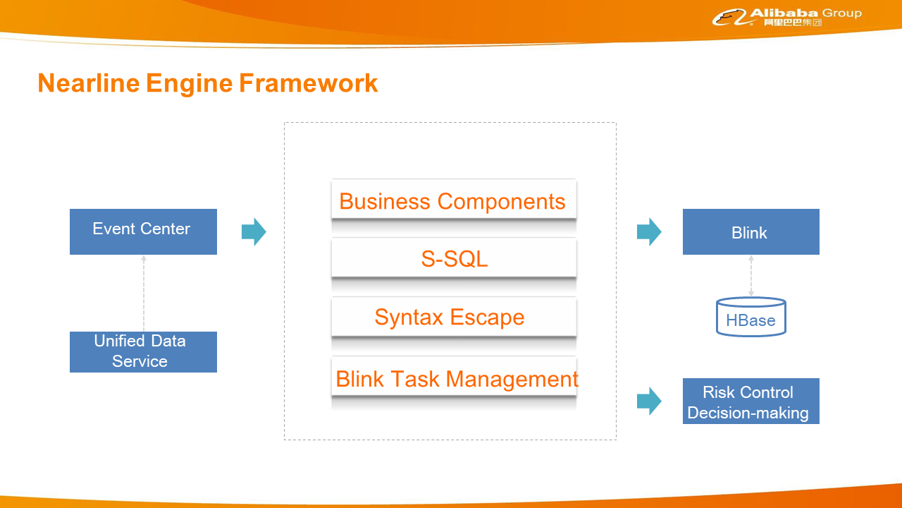

The nearline engine mainly converts risk control logic into tasks that Blink can run. The nearline engine requests data from the event center, which performs data enhancement through the unified data service; the enhanced data then flows to Blink for computing. Why does data completion happen first? Blink computes on a per-task basis, so the same event or traffic may be computed multiple times by different tasks. If data enhancement were implemented in Blink, the same completion could be performed multiple times. Data completion also involves large volumes and high costs; implementing it in Blink would put heavy pressure on Blink and make performance optimization difficult.
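The argument for running data completion before Blink comes down to counting lookups. In this minimal Python sketch, `CountingDataService` is a hypothetical stand-in for the unified data service; with E events and T tasks, enriching inside every task costs E × T lookups, while enriching once and fanning the record out to every task costs only E.

```python
from typing import Callable

Task = Callable[[dict], bool]  # a downstream risk task: enriched event -> hit?


class CountingDataService:
    """Hypothetical unified data service that counts how many lookups it serves."""

    def __init__(self) -> None:
        self.calls = 0

    def enrich(self, event: dict) -> dict:
        self.calls += 1  # each call stands for an expensive remote lookup
        return {**event, "ip_flagged": event.get("ip", "").startswith("192.0.2.")}


def enrich_in_every_task(
    events: list[dict], tasks: list[Task], svc: CountingDataService
) -> list[list[bool]]:
    """Completion inside each task: the same event is enriched once per task."""
    return [[task(svc.enrich(e)) for task in tasks] for e in events]


def enrich_then_fan_out(
    events: list[dict], tasks: list[Task], svc: CountingDataService
) -> list[list[bool]]:
    """Completion before the tasks: enrich once, then share the record."""
    results = []
    for e in events:
        enriched = svc.enrich(e)
        results.append([task(enriched) for task in tasks])
    return results
```

Both functions produce identical task results; only the number of lookups differs, which is the cost the event center avoids by doing completion upstream.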

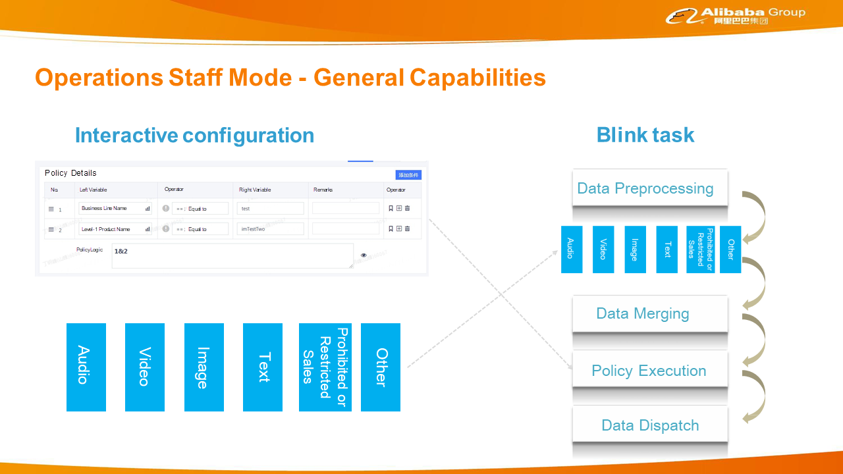

The nearline engine is divided into four functional modules.

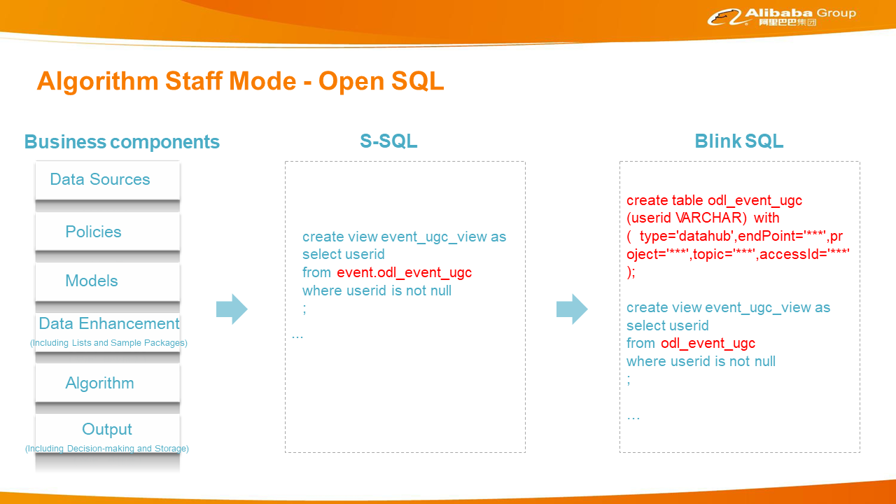

1. Business component: encapsulates risk control elements. The nearline risk control process involves many risk control elements, such as event center data sources and the models, algorithms, and policies required by downstream risk control decision-making and processes. These elements are encapsulated in components so that you can reuse them quickly when working with the nearline engine.

2. Security-SQL (S-SQL): its syntax is similar to Blink SQL, but Blink SQL requires writing specific physical implementation details. Alibaba designed S-SQL so that users can focus on business logic instead of implementation details.

3. Syntax escape: translates S-SQL into Blink SQL.

4. Blink task management: includes bringing tasks online and offline, production safety, phased release, and testing.

In S-SQL, a data source is referenced in a form such as `event.odl_event_ugc`. Here, `event` is a reserved keyword that denotes the data source. When you use S-SQL, you do not need to track details that slow down development, such as where `event` actually comes from; this is resolved by a set of conventions in the system and business components.
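Syntax escape can be pictured as a rewrite that replaces the logical source `event.odl_event_ugc` with a physical table that Blink SQL can read. The Python sketch below is a toy under explicit assumptions: the `SOURCE_CATALOG` mapping and the physical table name `metaq_source_odl_event_ugc` are hypothetical, and a real translator would parse the full S-SQL grammar rather than rely on a regular expression.

```python
import re

# Hypothetical catalog maintained by system and business components: maps a
# logical S-SQL data source to the physical Blink table it is read from.
SOURCE_CATALOG = {
    "odl_event_ugc": "metaq_source_odl_event_ugc",
}

_EVENT_SOURCE = re.compile(r"\bFROM\s+event\.(\w+)", re.IGNORECASE)


def escape_s_sql(s_sql: str) -> str:
    """Rewrite 'FROM event.<name>' into the physical table name for Blink SQL."""

    def resolve(match: re.Match) -> str:
        logical = match.group(1)
        if logical not in SOURCE_CATALOG:
            raise KeyError(f"unknown event source: {logical}")
        return f"FROM {SOURCE_CATALOG[logical]}"

    return _EVENT_SOURCE.sub(resolve, s_sql)
```

Keeping the mapping in a catalog rather than in each query is what lets S-SQL authors write `event.odl_event_ugc` without knowing where the data physically comes from.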

In addition, data is transmitted between tasks in two ways. The first is MetaQ: upstream tasks deliver messages to downstream tasks through MetaQ. The second is HBase: its multi-column feature and HLog make it easy to merge multiple messages into one, so data merging is mostly done with HBase.
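The HBase merge pattern relies on the fact that writes to different columns of the same row key accumulate into one row. The Python sketch below only simulates that behavior in memory; it does not use the HBase client API, and the row keys and column names (`img:verdict`, `text:verdict`) are hypothetical.

```python
from collections import defaultdict


class WideRowStore:
    """In-memory imitation of an HBase-style table: row key -> {column: value}.

    Not an HBase client. It only mimics how writes from different upstream
    tasks to different columns of the same row key merge into one record.
    """

    def __init__(self) -> None:
        self.rows: dict[str, dict[str, str]] = defaultdict(dict)

    def put(self, row_key: str, column: str, value: str) -> None:
        # A later write to the same column replaces the visible value,
        # similar to reading only the newest version of an HBase cell.
        self.rows[row_key][column] = value

    def get(self, row_key: str) -> dict[str, str]:
        return dict(self.rows.get(row_key, {}))

    def is_complete(self, row_key: str, columns: set[str]) -> bool:
        """True once every expected upstream result has been written."""
        return columns.issubset(self.rows.get(row_key, {}))
```

For example, an image task and a text task can each write their verdict for `product-1` independently, and a downstream task reads one merged row once `is_complete` reports both columns present.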

Currently, the nearline engine uses about 2,000 Compute Units (CUs) and handles about 30 billion events per day on average. The main scenarios covered are products, content, live broadcasts, and innovative businesses. The nearline engine currently accounts for about 10% of risk identification in the risk control field. As models and algorithms develop further and the product matures, this proportion will increase significantly.
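For a sense of scale, the figures above imply the following average throughput, assuming for simplicity that the 30 billion daily events are spread evenly over the day and across all CUs; real traffic peaks, such as during Double 11, would be far higher than this average.

$$
\frac{30 \times 10^{9}\ \text{events/day}}{86\,400\ \text{s/day}} \approx 3.47 \times 10^{5}\ \text{events/s},
\qquad
\frac{3.47 \times 10^{5}\ \text{events/s}}{2000\ \text{CUs}} \approx 174\ \text{events/s per CU}
$$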

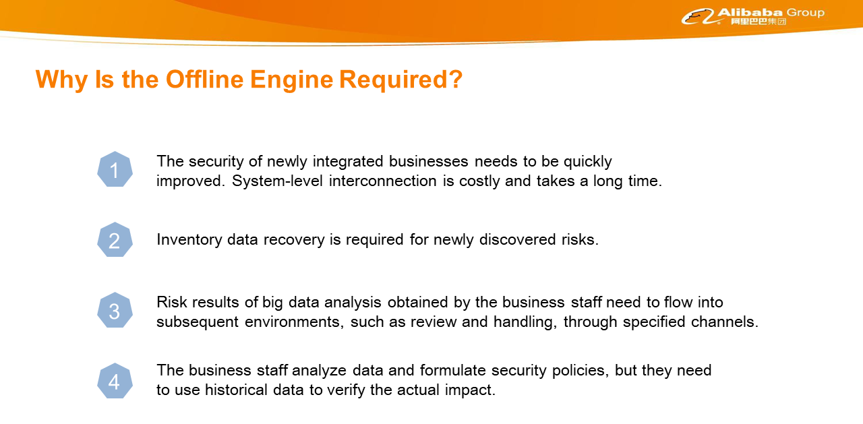

The offline engine was considered at almost the same time as the nearline engine. At first, we found that a lot of offline data was being imported into the real-time engine in batches, which hurt the real-time engine's stability. After in-depth research, we found that many scenarios required batch processing capabilities for risk identification. The offline engine was initially designed to solve the following problems. The first is onboarding new businesses. In recent years, Alibaba Group has grown rapidly and now covers a large number of business fields. Most new business units have relatively weak security and therefore need to be connected to the risk control system. Originally, each new business was connected to the real-time engine, which was costly and slow, so both the new businesses and the security teams wanted a more convenient and faster way to integrate. The second is the large number of newly discovered or not-yet-detected risks: once a risk is discovered, historical data must be retrieved, and demand for this is high. Third, results of offline big data risk analysis obtained by business staff need to flow into downstream stages, such as review and handling, through specified channels. Fourth, when business staff change policies, they need historical data to verify the actual impact; otherwise, the launch process becomes very slow.
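The fourth problem, verifying a policy change against history, is essentially a backtest. The Python sketch below replays a current and a proposed policy over labeled historical events and compares hit count, precision, and recall. The `HistoricalEvent` fields, the two example policies, and the choice of precision and recall as comparison metrics are assumptions for illustration; the article does not say which metrics Alibaba uses.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class HistoricalEvent:
    """Hypothetical historical record with a label obtained after the fact."""
    amount: float
    account_age_days: int
    confirmed_fraud: bool


Policy = Callable[[HistoricalEvent], bool]


def backtest(policy: Policy, history: list[HistoricalEvent]) -> dict[str, float]:
    """Replay a policy over historical events and summarize its hits."""
    hits = [e for e in history if policy(e)]
    true_hits = sum(e.confirmed_fraud for e in hits)
    total_fraud = sum(e.confirmed_fraud for e in history)
    return {
        "hits": len(hits),
        "precision": true_hits / len(hits) if hits else 0.0,
        "recall": true_hits / total_fraud if total_fraud else 0.0,
    }


def current_policy(e: HistoricalEvent) -> bool:
    return e.amount > 1000  # hypothetical existing rule


def proposed_policy(e: HistoricalEvent) -> bool:
    # Hypothetical change: also flag accounts younger than one day.
    return e.amount > 1000 or e.account_age_days < 1
```

Running both policies over the same history shows how many additional risks a change would have caught, and how many extra alerts it would have raised, before it goes live.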

Currently, Alibaba provides three engines: a real-time engine, a nearline engine, and an offline engine. Together, these engines let users perform more kinds of tasks with stronger capabilities. However, more engines and concepts mean more to learn and higher usage costs. Therefore, Alibaba's aim from the very beginning has been to use the same set of metadata for all the engines so as to create a more unified system, and Alibaba has long tried to have all engines use the same data sets as far as possible. In the future, Alibaba hopes that all engines will use the same risk control language, such as S-SQL or a more mature and abstract language, that can express the semantics of risk control scenarios. With such a language, risk control users will be able to describe risks more clearly.
