By Long Qingyun

This article explains how Amazon Redshift can migrate to Alibaba Cloud MaxCompute, including the aspects of syntax comparison and data migration.

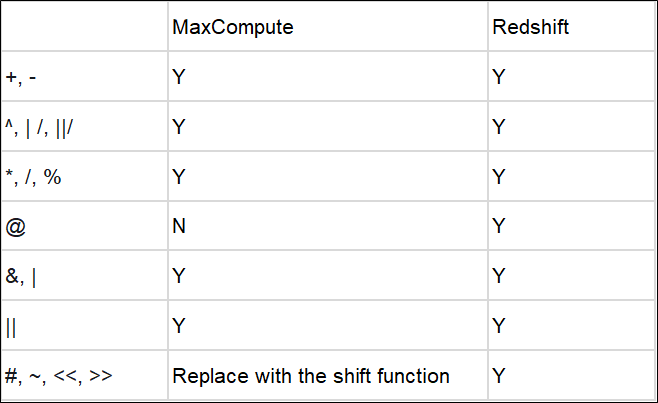

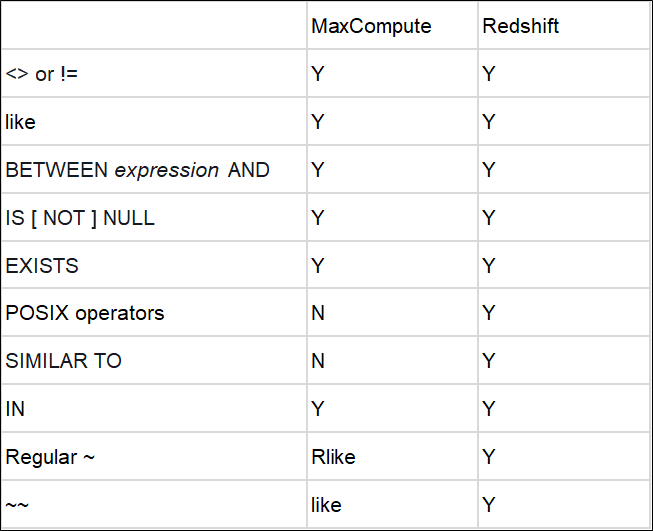

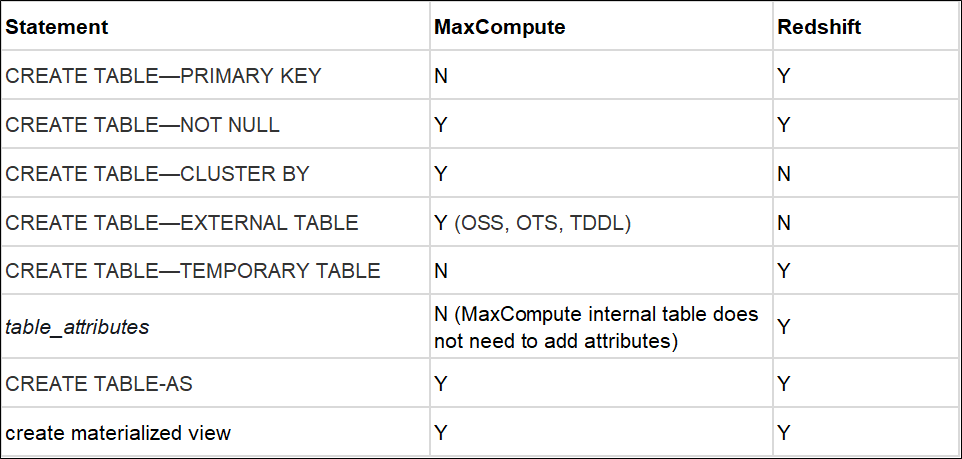

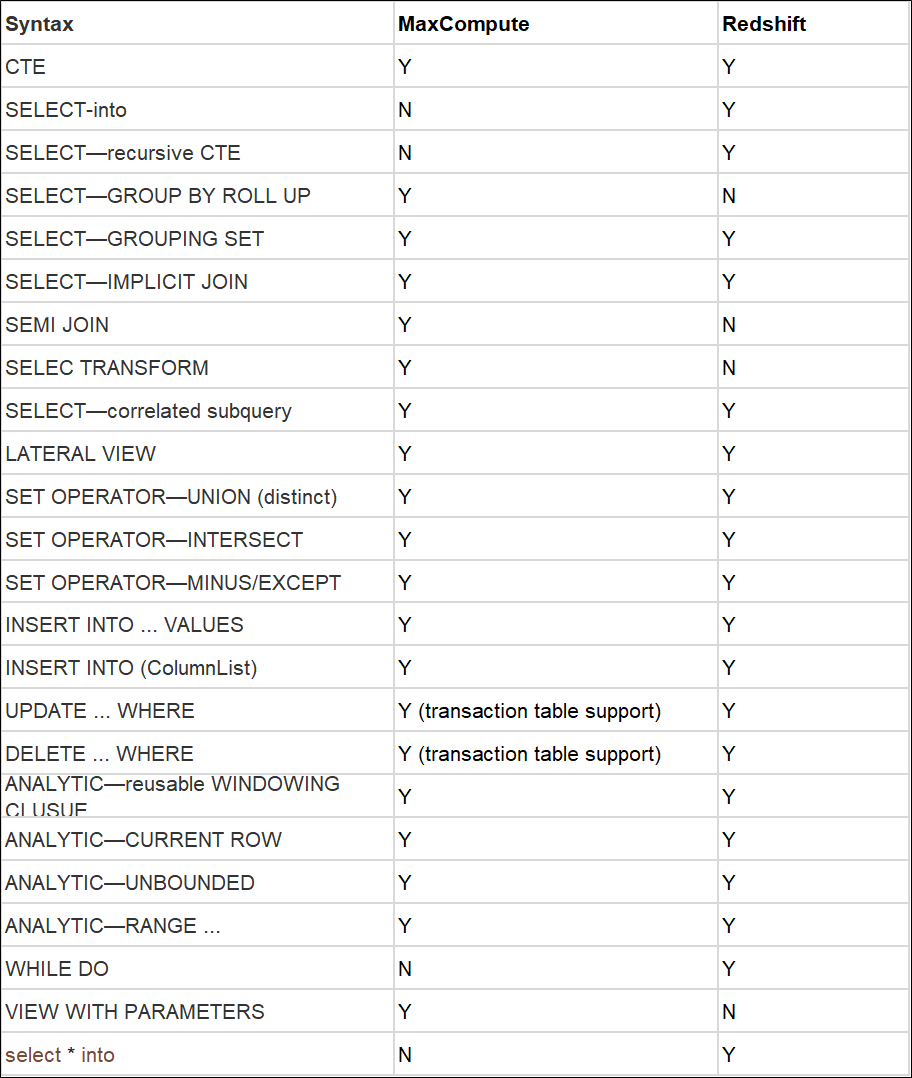

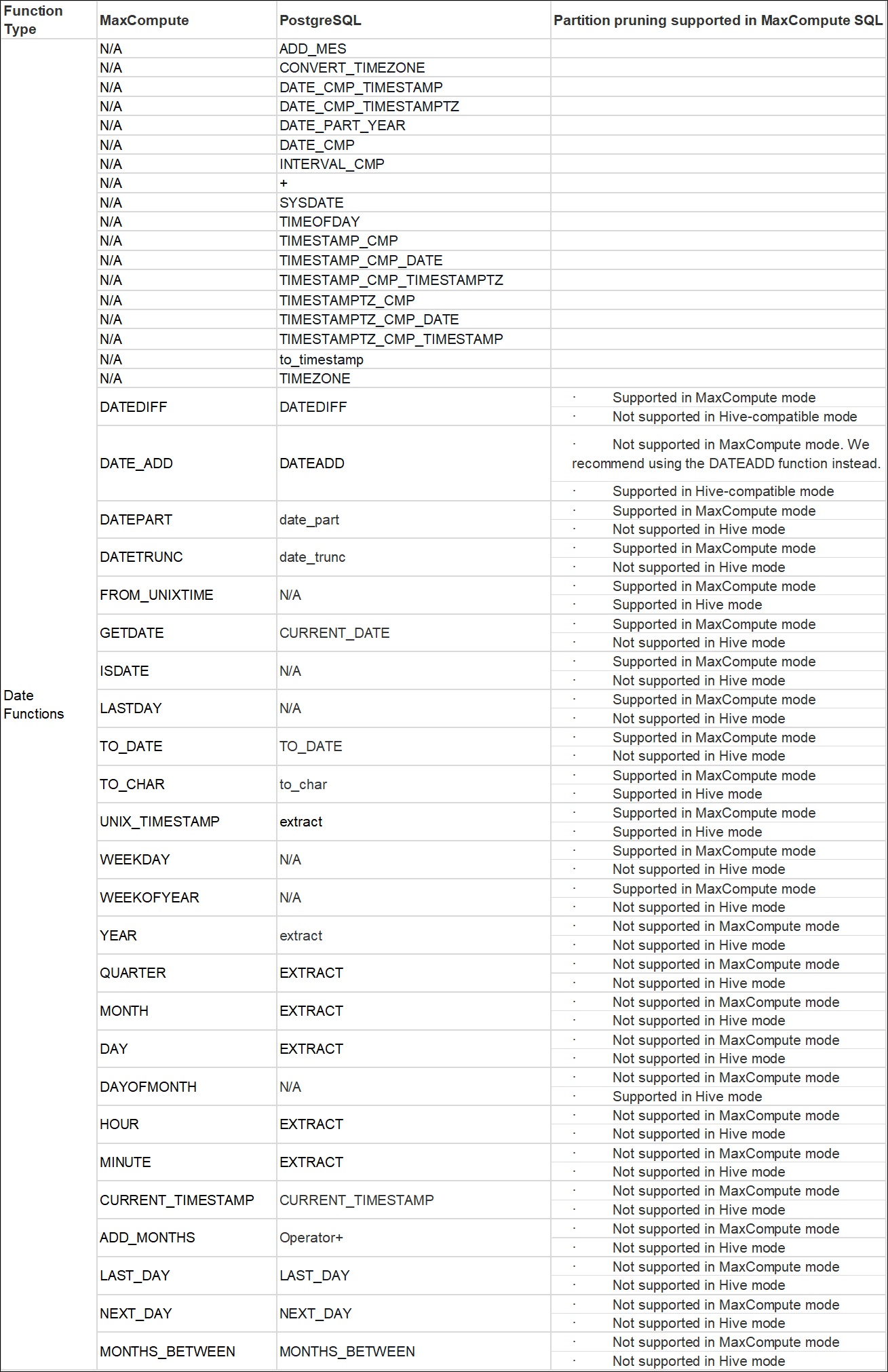

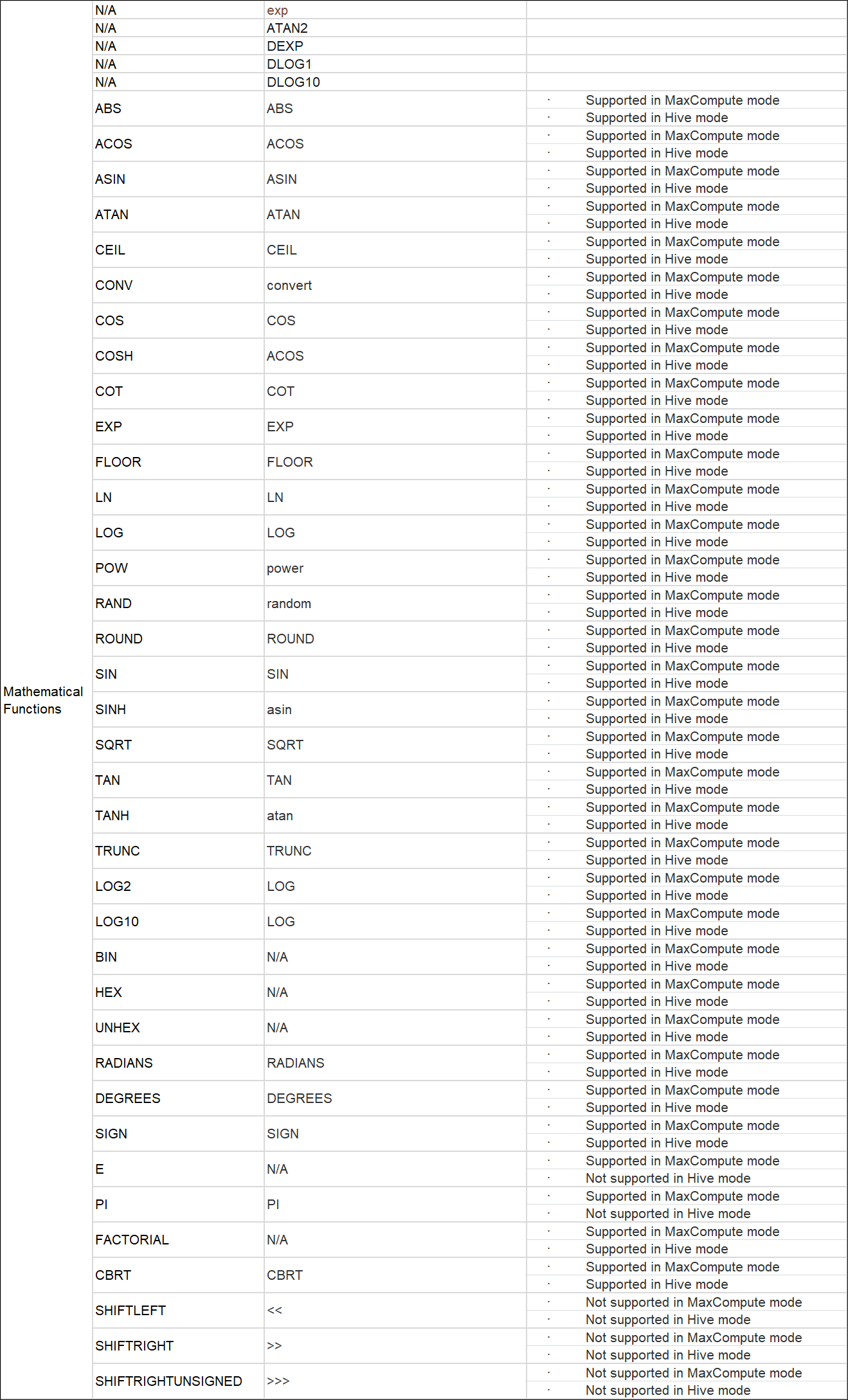

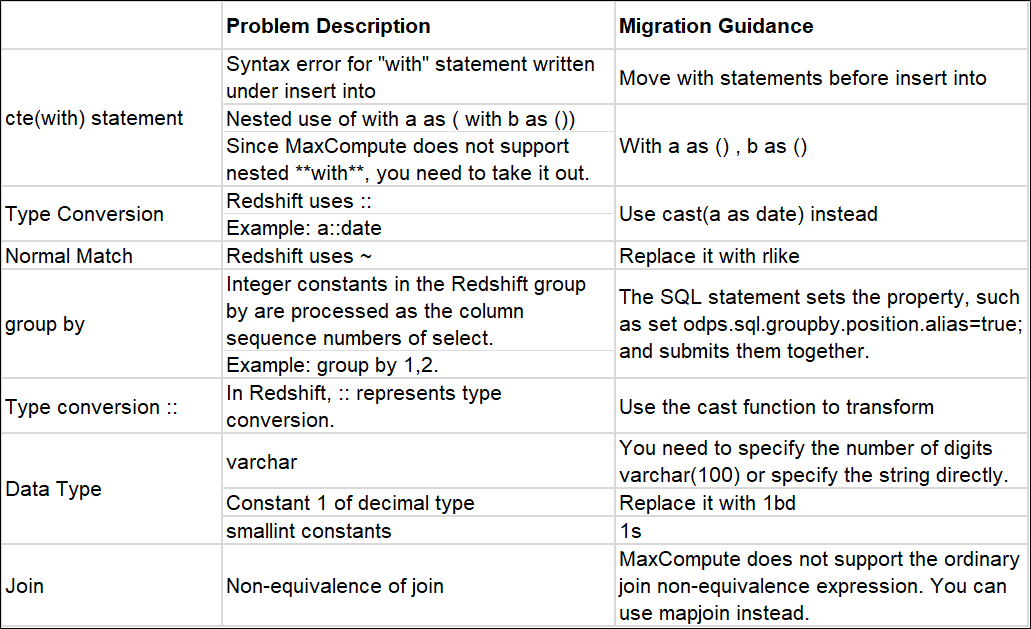

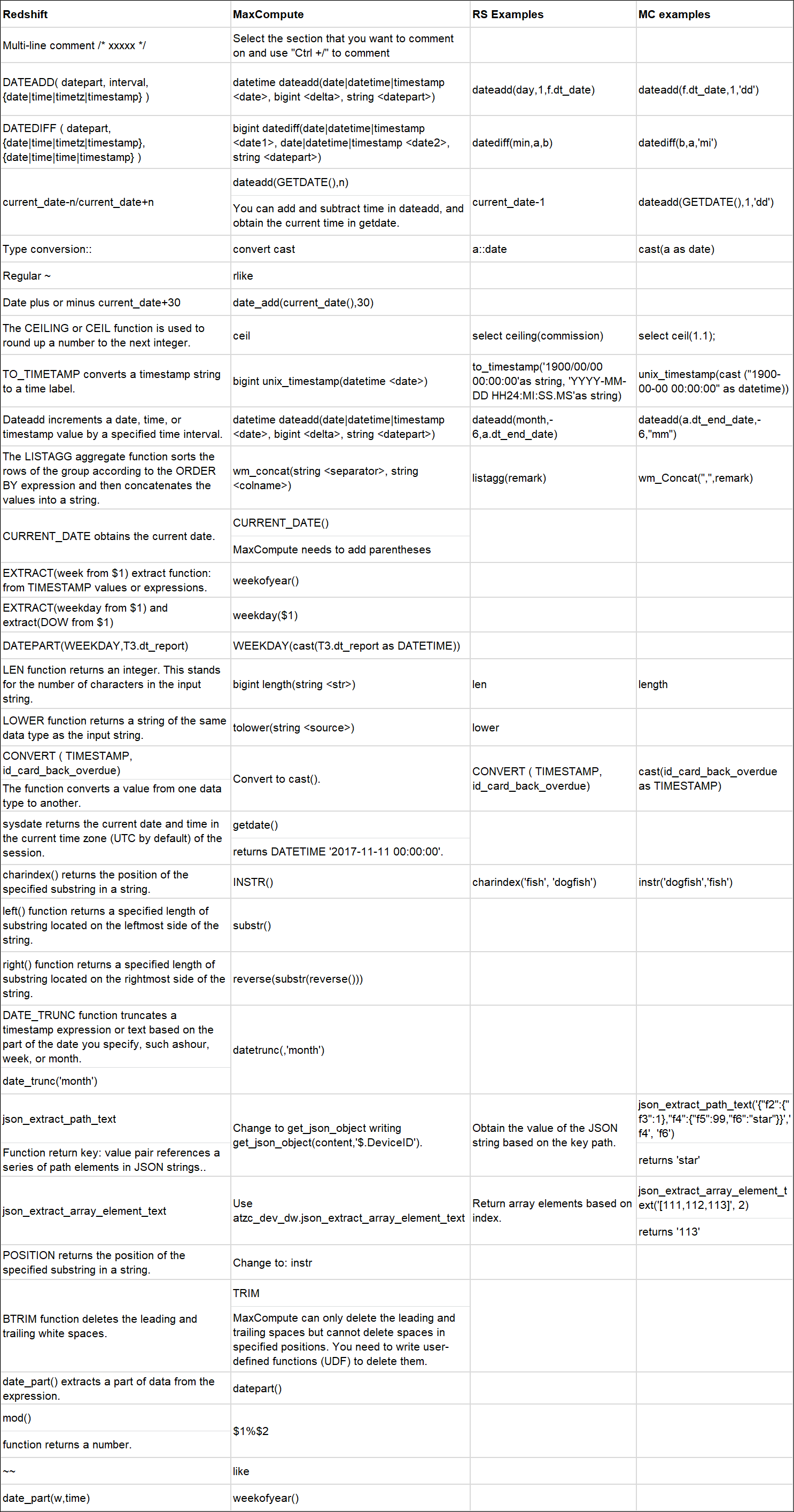

This article is a detailed introduction to the similarities and differences of SQL syntax between Redshift and MaxCompute. It is helpful to accelerate the migration from SQL tasks to MaxCompute. There are many syntax differences between Redshift and MaxCompute. Therefore, we need to modify the script written on Redshift before we use it in MaxCompute.

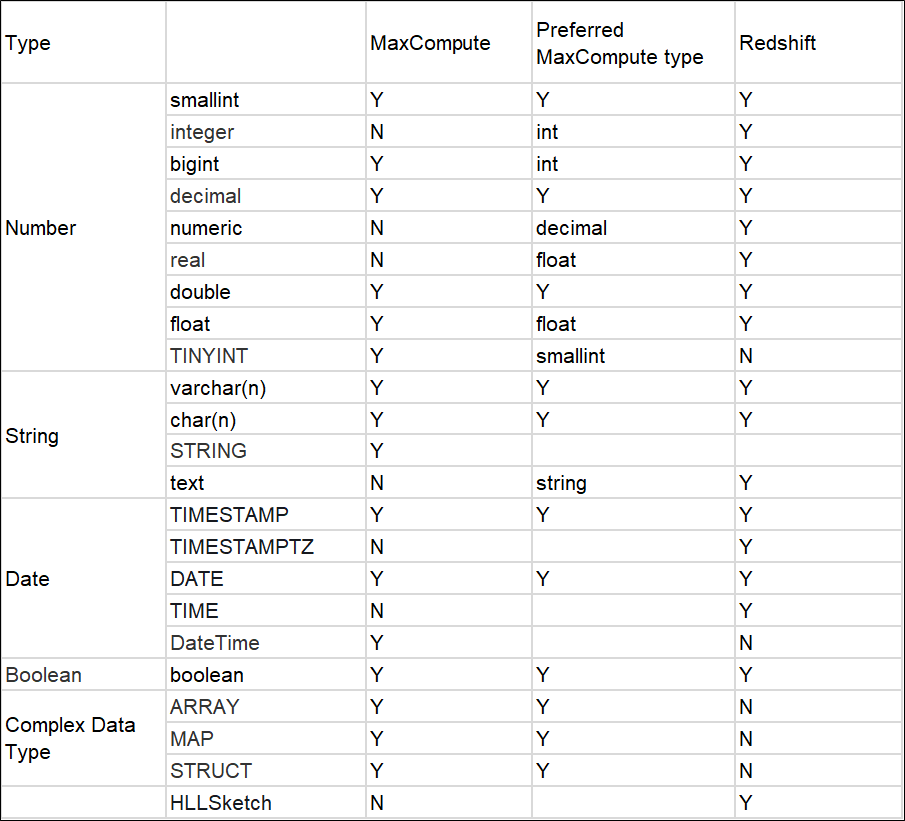

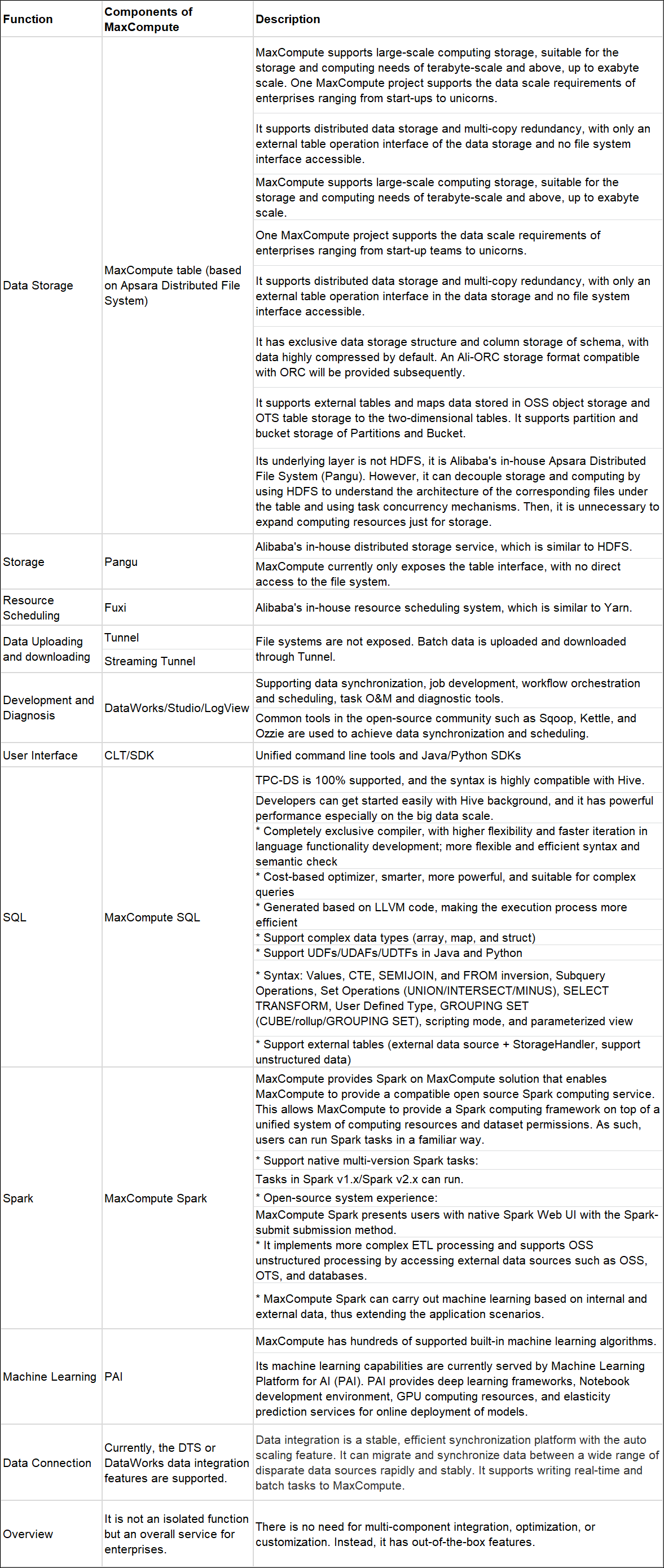

For more details about MaxCompoute data types, please see this link

MaxCompute does not have the concepts of schema, group, library, and stored procedure. There are only projects, tables, and partitions. MaxCompute does not have auto-incremental sequence and foreign keys when creating tables. It does not support choosing the encoding method, and its default encoding method is UTF-8. The internal tables do not support choosing the storage format, with Aliorc as the default storage format.

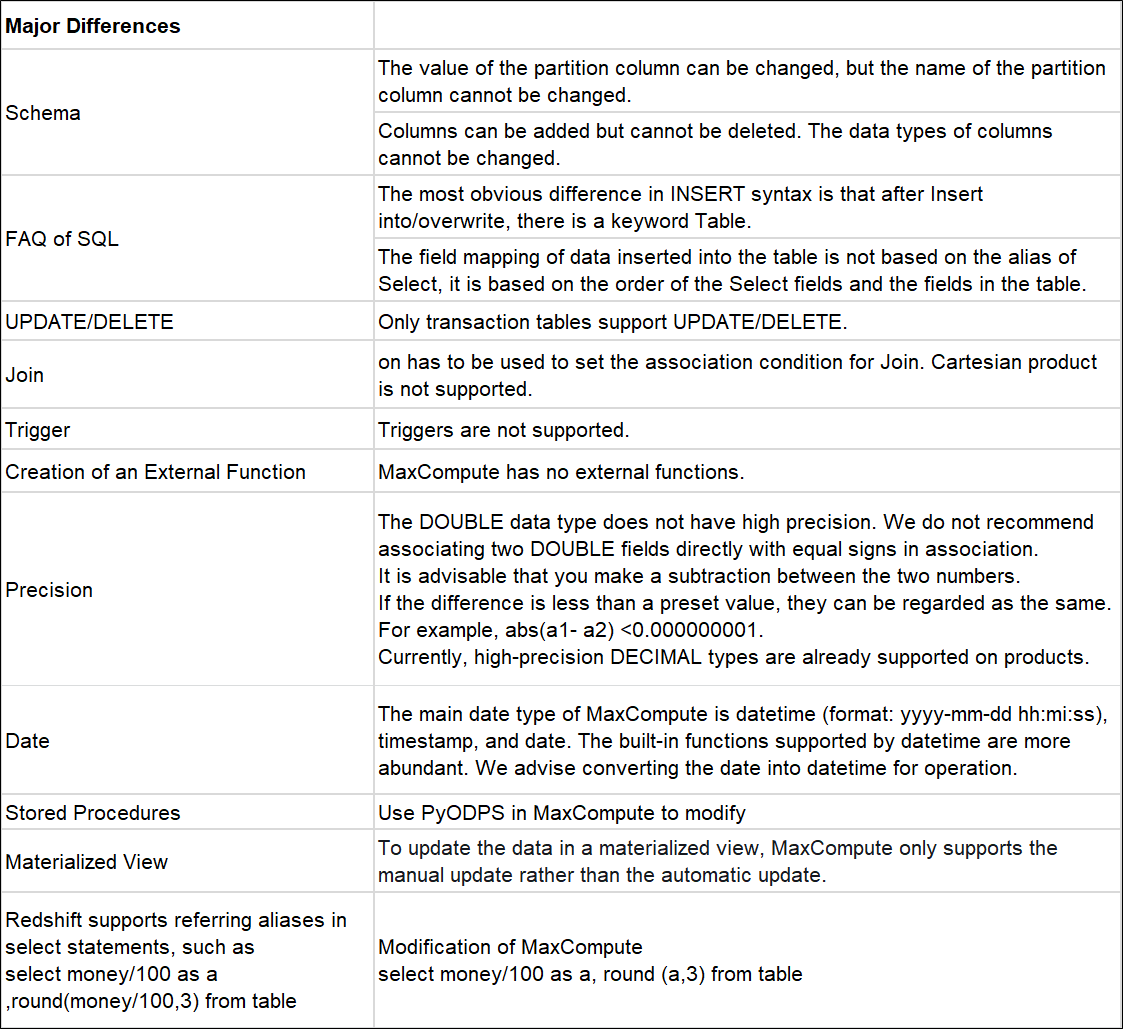

Main Differences:

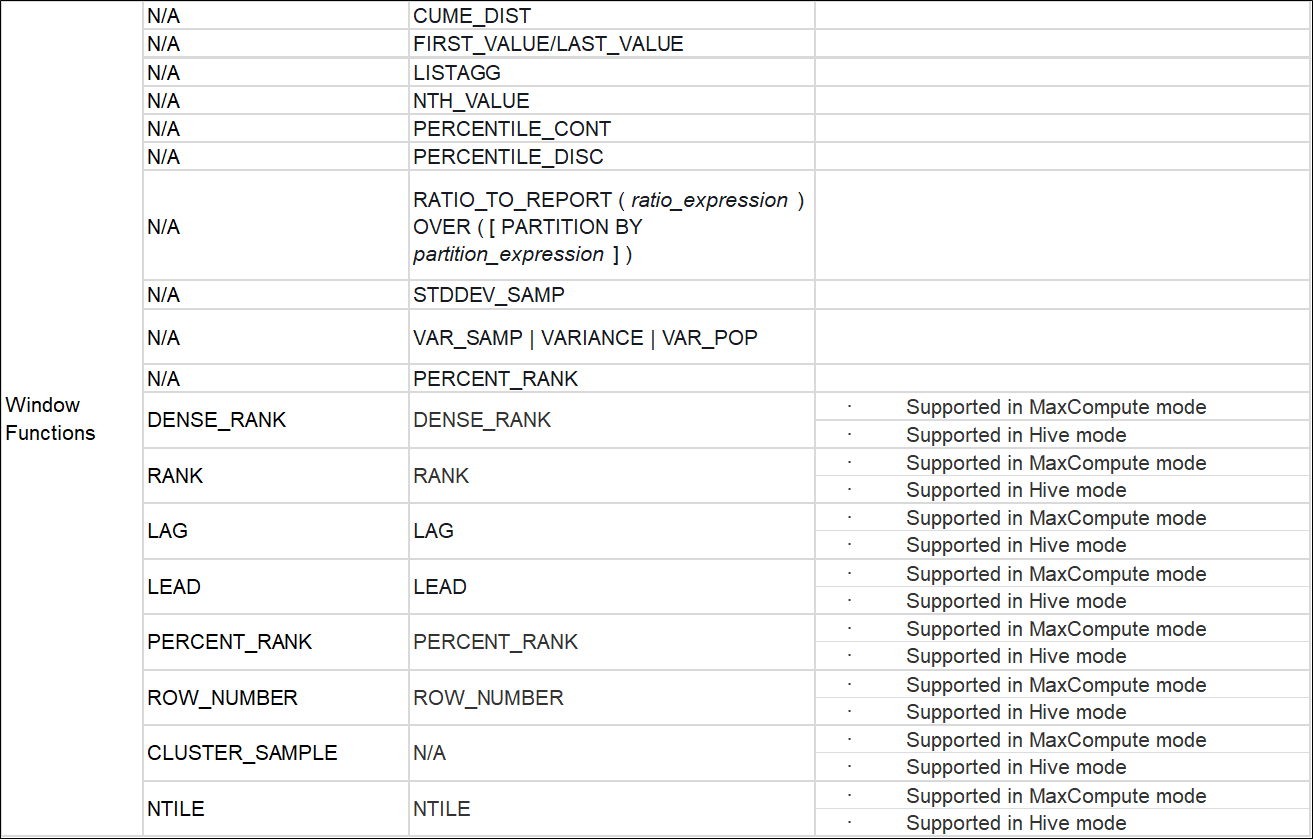

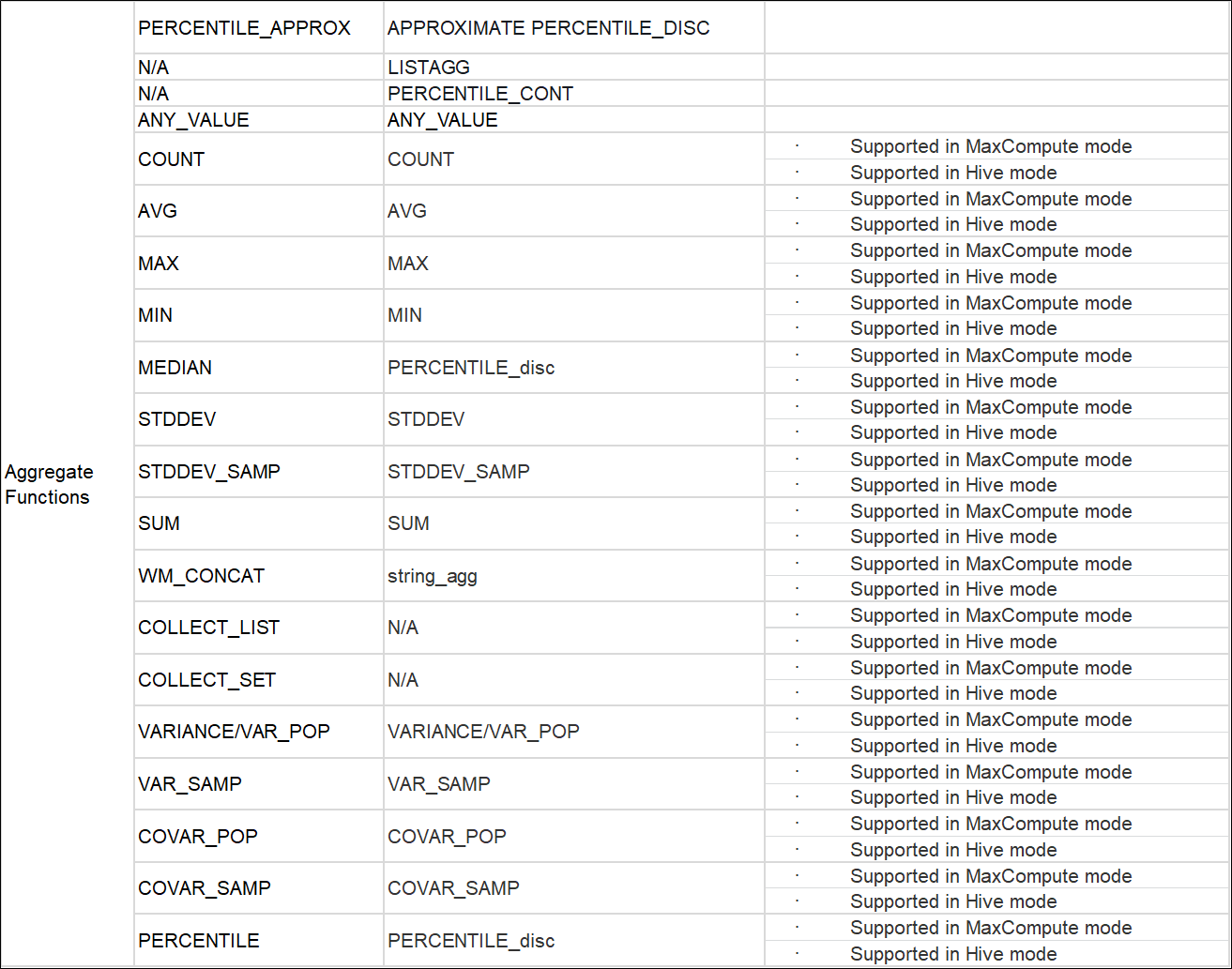

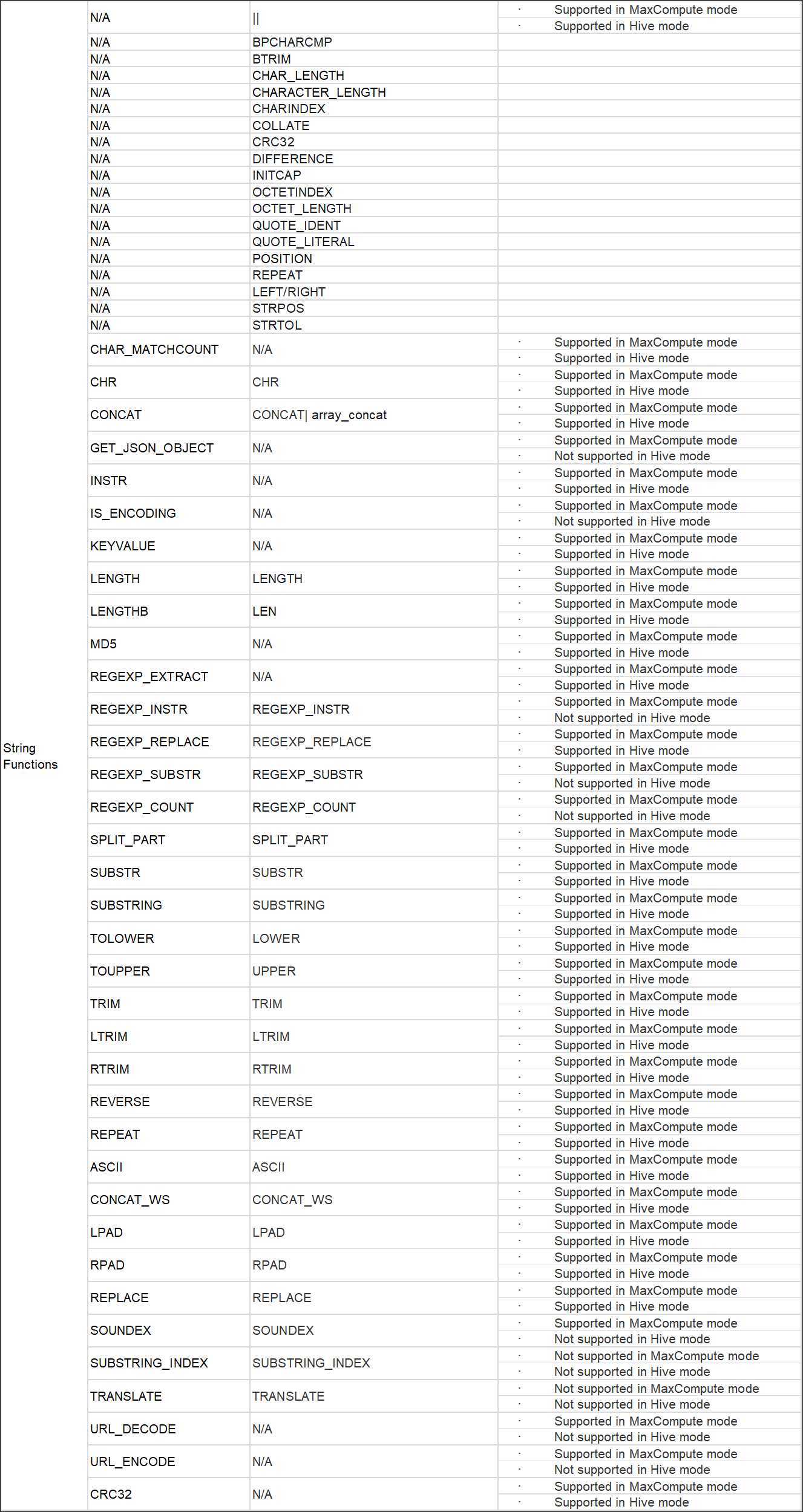

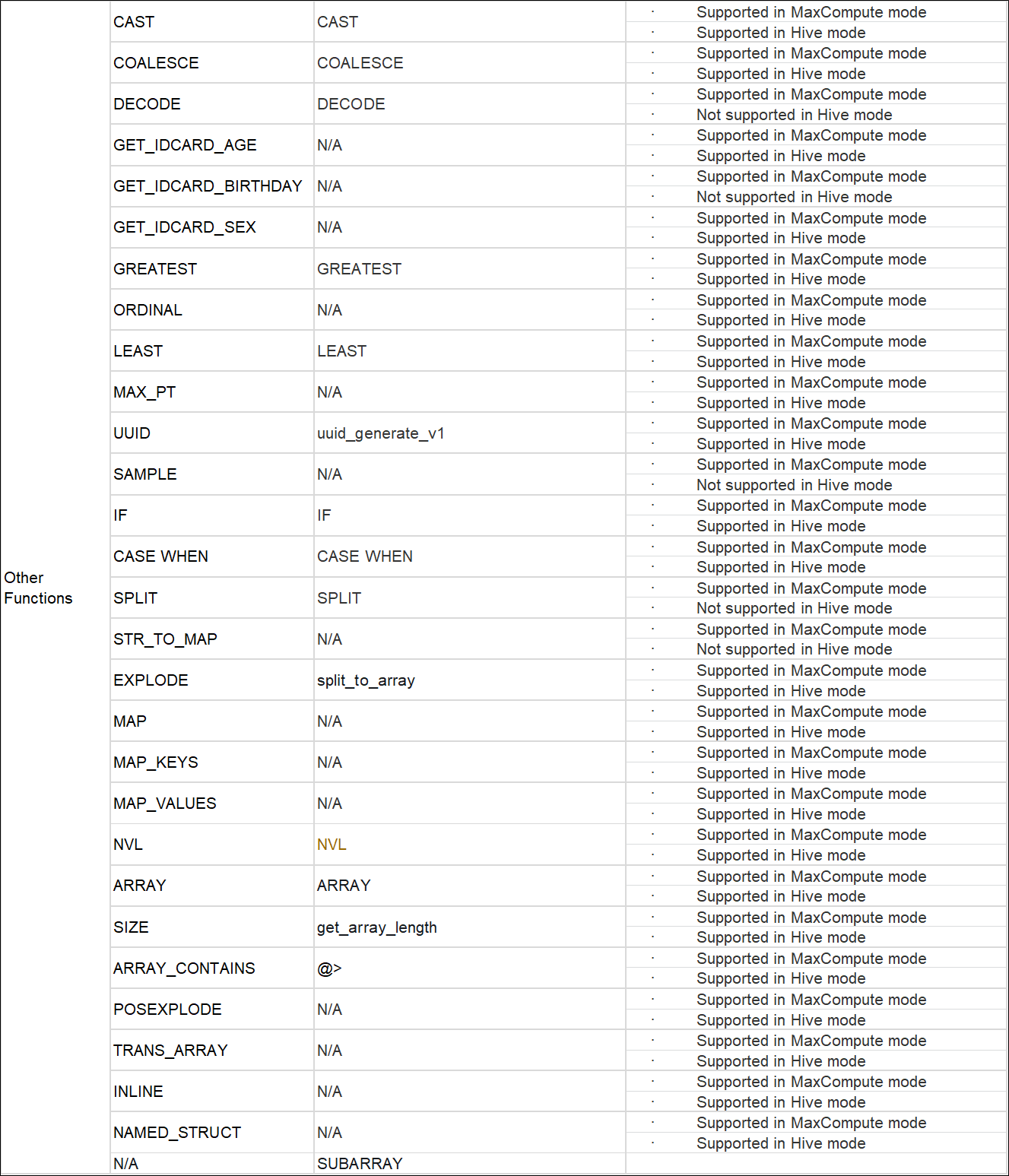

Other unlisted functions are not supported in Redshift.

For more details about MaxCompoute built-In functions, please see this link

Choose one syntax verification tool:

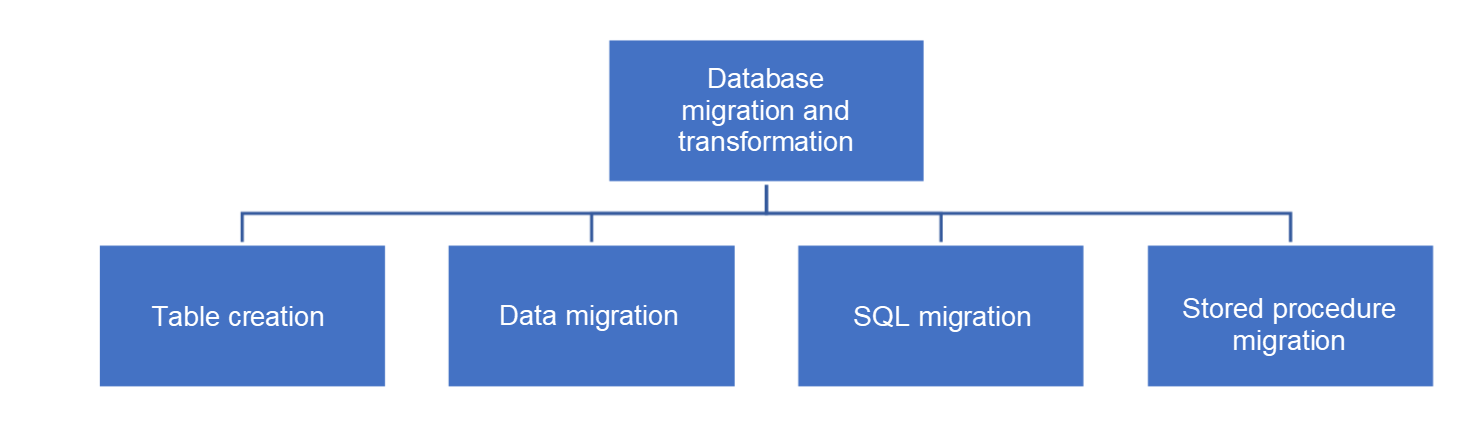

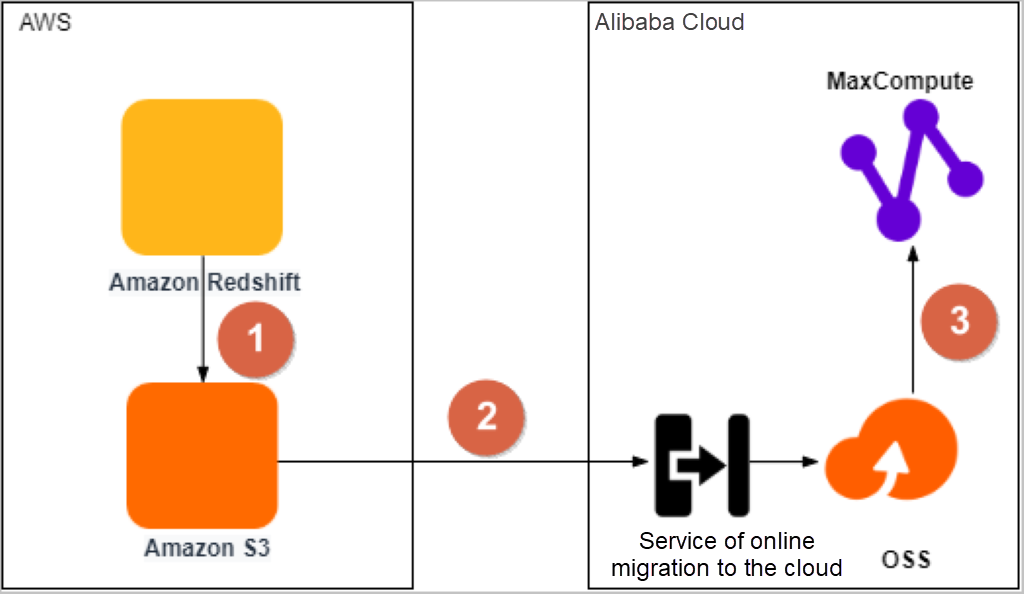

Database migration mainly includes the following steps:

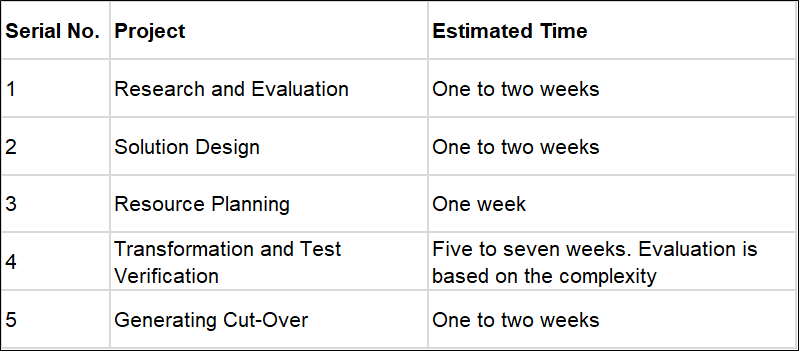

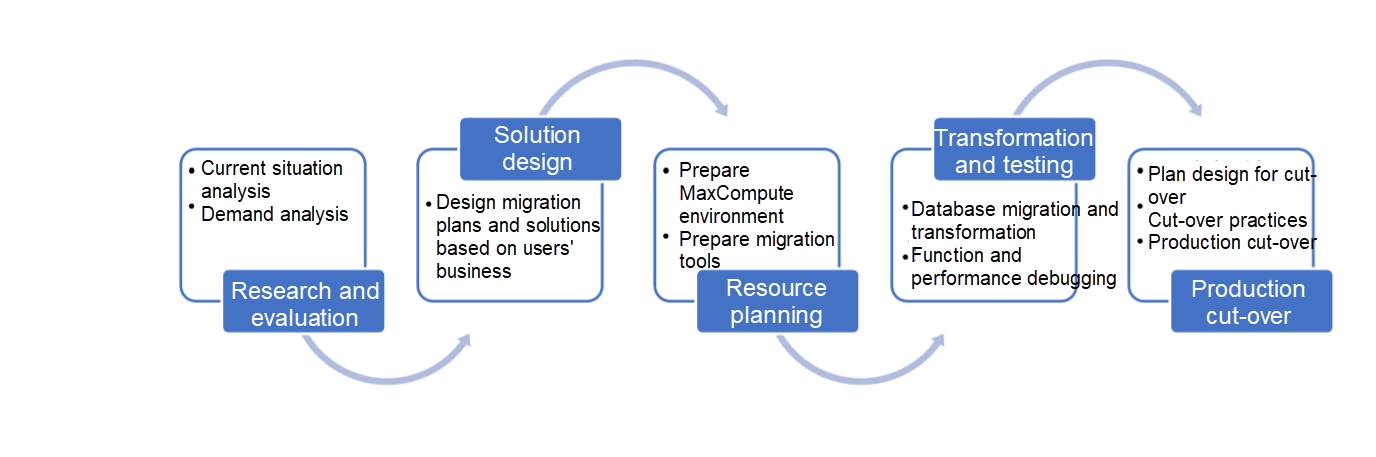

Migration Implementation Plan:

Users can make a reasonable migration plan based on their existing Redshift data volume, QPS, TPS, other performance indicators, high availability requirements, and future business growth requirements.

Users need to prepare the MaxCompute environment and obtain corresponding migration tools. For more information about migration tools, please see the section entitled An Introduction to Migration Tools for Redshift to MaxCompute.

The migration tool can use MaxCompute studio (or a new SQL node created by DataWorks) client syntax verification to create a new SQL file. Sections with unsupported syntax will turn red.

Obtain the definitions of table list and table field in Redshift and convert them based on the field values supported by MaxCompute. A transactional table is needed for tables with update and delete statements.

For information about type conversion, please refer to the section entitled Data Type and Type Conversion.

The syntax for creating a table:

create [external] table [if not exists]

[( [not null] [default ] [comment ], ...)]

[comment ]

[partitioned by ( [comment ], ...)][clustered by | range clustered by ( [, , ...]) [sorted by ( [asc | desc] [, [asc | desc] ...])] into buckets] [stored by StorageHandler][with serdeproperties (options)][location ][tblproperties("transactional"="true")]

[lifecycle ];create table [if not exists] [lifecycle ] as ;create table [if not exists] like [lifecycle ];Note:

The name cannot exceed 128 bytes in length.

create table like statement does not copy the lifecycle attribute of source table.create table ... as select ... statement, if a constant is used as the column's value in a select clause, we advise specifying the column's name.create table ... like statement to ensure that the destination table has the same schema as the source table:

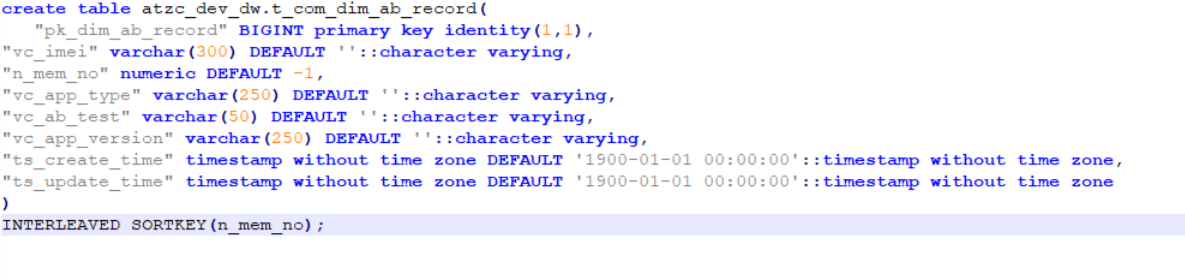

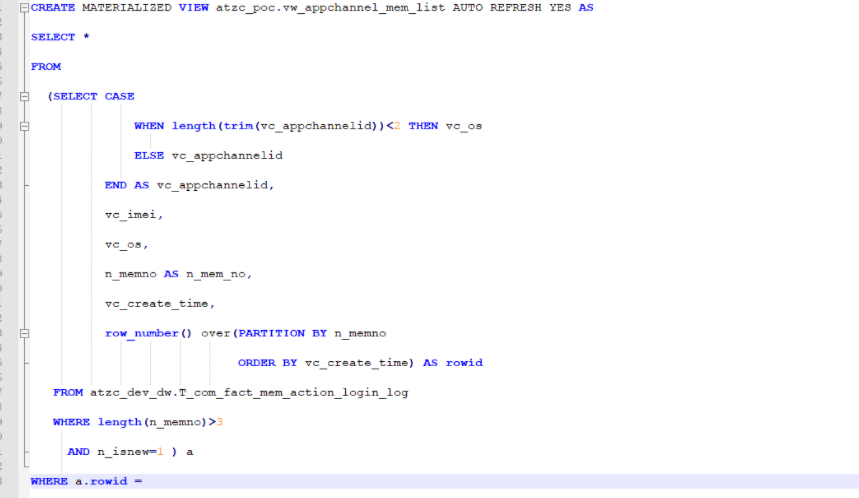

BIGINT primary key identity(1,1), have to be removed, with only the default value of default 1 retained.::character varying, '1900/01/01'::text, the two colons and their following contents need to be deleted because MaxCompute does not support them."n_car_no" numeric DEFAULT -1::numeric, -1 needs to be removed. MaxCompute does not support the default value of -1."ts_req_time" timestamp without time zone DEFAULT to_timestamp('1900/00/00 00:00:00'::text, 'YYYY-MM-DD HH24:MI:SS.MS'::text), you need to remove the time zone and change it to the timestamp DEFAULT timestamp "1900-01-01 00:00:00".INTERLEAVED SORTKEY(vc_trans_id), MaxCompute does not support the interlaced sorting column function, so consider replacing it with zorder.AUTO REFRESH YES needs to be removed when modifying materialized views, and the materialized view of MaxCompute does not support window functions.

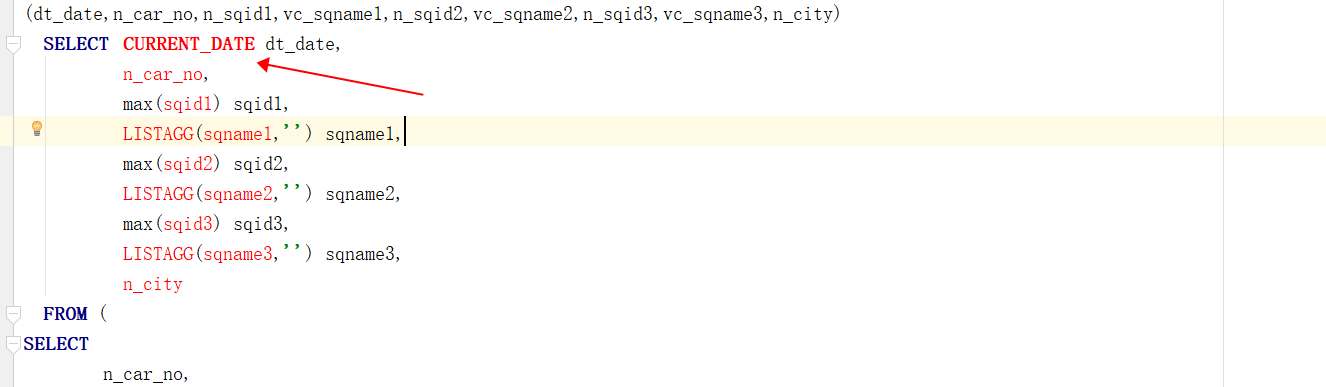

SQL migration converts Redshift SQL into MaxCompute SQL based on the differences to make SQL available. For the differences between SQL statements, please refer to the chapter entitled Comparison Between Redshift and MaxCompute Before Migration.

DML Statements

COMMENT ON column, atzc_dev_dw.t_com_fact_auto_pay_gw_trans_pay_gw."n_trans_amt" is 'dml';, you need to change it to the syntax supported by MaxCompute, using alter table change column comment '';.DQL Statement:

Built-In Functions:

We suggest changing to the temporary tables or PyODPS method.

| Serial No. | Description |

| 1 | Unload data from Amazon Redshift to a data lake on Amazon Simple Storage Service (S3). |

| 2 | Migrate data from Amazon S3 to an OSS bucket using the Data Online Migration service of OSS. |

| 3 | Migrate data from the OSS bucket to a MaxCompute project in the same region and then verify the integrity and accuracy of the migrated data. |

Click this link to see the reference document on data migration.

Currently, there is no tool to support the data test and verification of the migration from Redshift to MaxCompute. So, we have to write a script tool to complete this task. The common verification solutions are listed below:

Cloud-Native Upgrade Practices for Big Data Platforms in the Digital Marketing Industry

137 posts | 19 followers

FollowAlibaba Cloud MaxCompute - May 9, 2019

Alibaba Clouder - January 3, 2018

ApsaraDB - April 28, 2020

Alibaba Clouder - February 7, 2018

Alibaba Cloud MaxCompute - July 15, 2021

Alibaba Cloud MaxCompute - September 18, 2019

137 posts | 19 followers

Follow Cloud Migration Solution

Cloud Migration Solution

Secure and easy solutions for moving you workloads to the cloud

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn MoreMore Posts by Alibaba Cloud MaxCompute