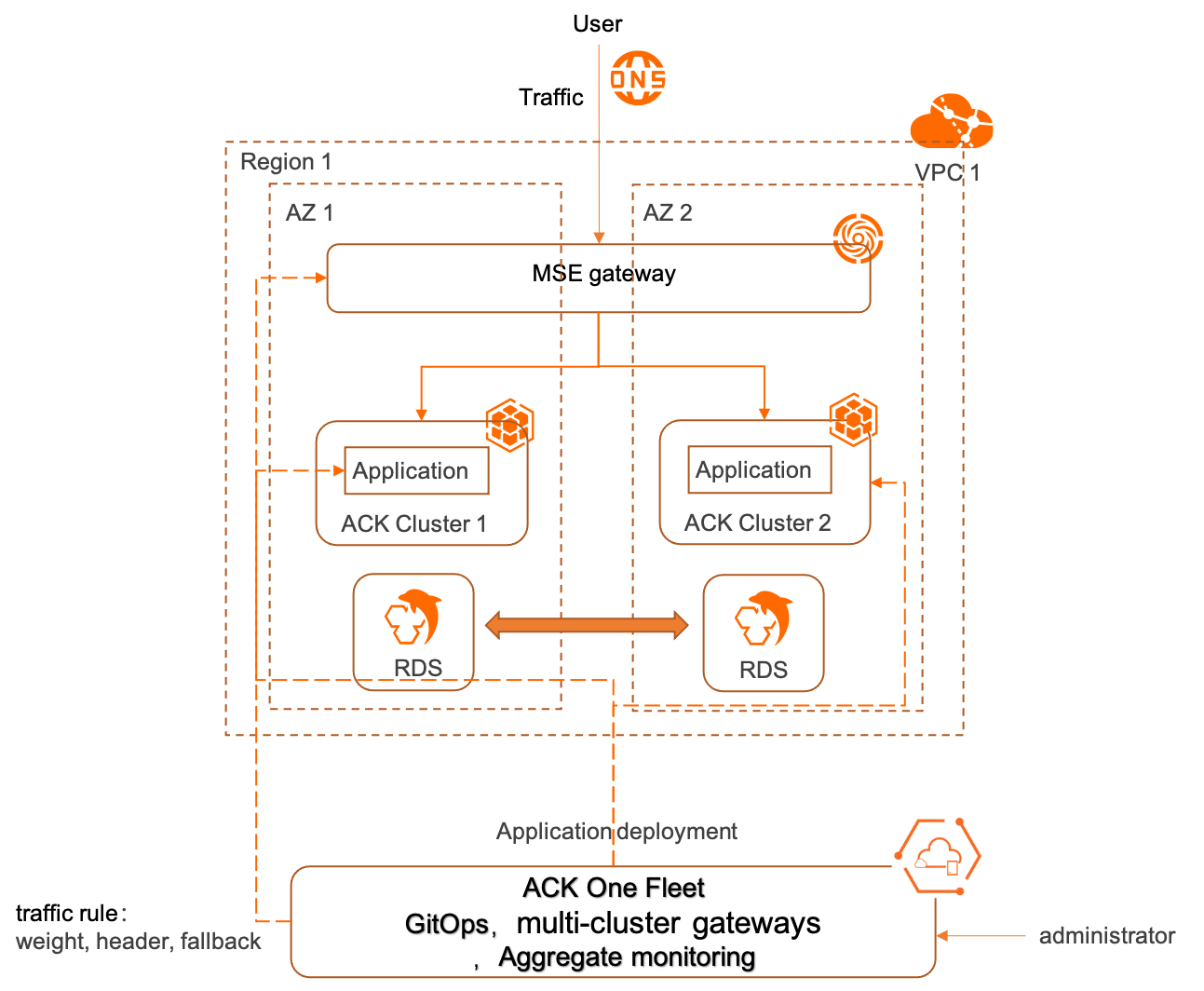

A multi-cluster gateway is a cloud-native gateway that ACK One provides for multi-cloud and multi-cluster environments. It uses managed Microservices Engine (MSE) Ingress and the Ingress API to manage Layer 7 north-south traffic. Multi-cluster gateways support automatic cross-zone disaster recovery and header-based canary releases, which simplifies operations and maintenance (O&M) of multi-cluster applications and reduces costs. Combined with ACK One GitOps, a multi-cluster gateway lets you quickly build an active zone-redundancy or primary/secondary disaster recovery system. This system does not cover data disaster recovery.

Disaster recovery overview

Disaster recovery solutions in the cloud include the following:

Cross-zone disaster recovery within a region

Cross-zone disaster recovery includes active zone-redundancy and primary/secondary disaster recovery. Network latency between data centers in the same region is low, so cross-zone disaster recovery is suitable for protecting data against zone-level disasters, such as fires, network interruptions, or power outages.

Cross-region active-active disaster recovery

Cross-region active-active disaster recovery has higher network latency between data centers, but it protects data against region-level disasters, such as earthquakes and floods.

Three data centers across two regions

This solution combines dual-center cross-zone disaster recovery with cross-region disaster recovery. It offers the benefits of both and suits scenarios that require high continuity and availability of applications and data.

In practice, cross-zone disaster recovery is easier to implement for data than cross-region disaster recovery, so it remains a very important solution.

Benefits

A disaster recovery plan that uses a multi-cluster gateway has the following advantages over a plan based on DNS traffic distribution:

A DNS-based plan requires multiple Server Load Balancer (SLB) IP addresses, one for each cluster. A plan based on a multi-cluster gateway requires only one SLB IP address at the region level and provides high availability across multiple zones in the same region by default.

A plan based on a multi-cluster gateway supports Layer 7 routing and forwarding. A DNS-based plan does not.

In a DNS-based plan, clients often cache IP addresses, which can cause brief service interruptions during an IP address switchover. A plan based on a multi-cluster gateway can fail traffic over smoothly to the backend Services of another cluster.

A multi-cluster gateway is a region-level resource. All operations, such as creating gateways and Ingress resources, can be performed on the Fleet instance. You do not need to install an Ingress controller or create Ingress resources in each ACK cluster. This enables global traffic management at the region level and reduces the cost of multi-cluster management.

Architecture

The ACK One multi-cluster gateway is implemented by using managed MSE Ingress. Combined with the multi-cluster application distribution capability of ACK One GitOps, it lets you quickly deploy a cross-zone disaster recovery system for your applications. This topic uses GitOps to deploy a sample application to two ACK clusters (Cluster 1 and Cluster 2) in different zones of the China (Hong Kong) region, and shows how to implement active zone-redundancy and primary/secondary disaster recovery.

The sample application in this topic is a web application that consists of a Deployment and a Service. The cross-zone disaster recovery plan that uses a multi-cluster gateway is built as follows:

Create an MSE gateway by using the MseIngressConfig resource in the ACK One Fleet.

Create two ACK clusters, Cluster 1 and Cluster 2, in two different availability zones (AZs), AZ 1 and AZ 2, of the same region.

Use ACK One GitOps to distribute the application to Cluster 1 and Cluster 2.

After the multi-cluster gateway is created, you can set traffic rules to route traffic by weight, or to a specific cluster based on a request header. If one of the clusters becomes abnormal, traffic is automatically routed to the other cluster.

Prerequisites

Two ACK clusters are associated with the ACK One Fleet. The clusters are in the same VPC as the Fleet instance. For more information, see Associate clusters.

The KubeConfig file of the Fleet instance is obtained from the ACK One console, and kubectl is connected to the Fleet instance.

The multi-cluster gateway feature is enabled.

Note: For more information about the billing of multi-cluster gateways, see Billing rules.

A namespace is created on the ACK One Fleet instance. This namespace must be the same as the namespace in which the application is deployed in the associated clusters. In this topic, the namespace is `gateway-demo`.

Step 1: Use GitOps to deploy the application to multiple clusters

Deploy by using the Argo CD UI

Log on to the ACK One console. In the left-side navigation pane, go to the GitOps page.

On the GitOps page, click GitOps Console.

Note: If GitOps is not enabled for the ACK One Fleet instance, click Enable GitOps Now to go to the GitOps Console.

To access GitOps over the Internet, see Enable public access to GitOps.

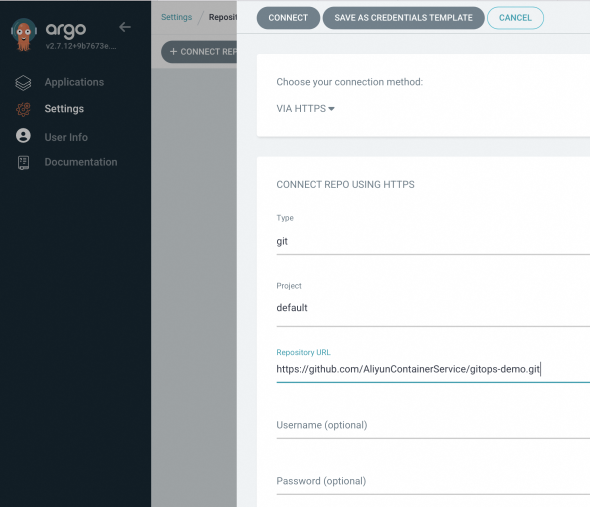

Add the application repository.

In the left-side navigation pane of the Argo CD UI, choose Settings, and then choose to connect a repository.

In the panel that appears, configure the following parameters and click CONNECT.

| Area | Parameter | Value |
| --- | --- | --- |
| Choose your connection method | - | VIA HTTP/HTTPS |
| CONNECT REPO USING HTTP/HTTPS | Type | git |
| | Project | default |
| | Repository URL | https://github.com/AliyunContainerService/gitops-demo.git |
| | Skip server verification | Select this check box. |

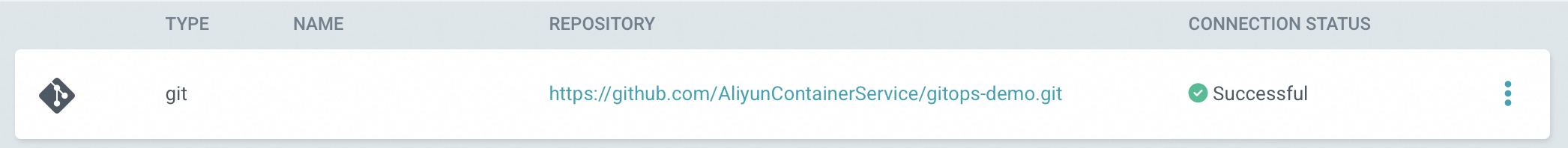

After the repository is connected, the CONNECTION STATUS of the Git repository changes to Successful.

Create an application.

On the Applications page of Argo CD, click + NEW APP and configure the following parameters.

| Section | Parameter | Description |
| --- | --- | --- |
| GENERAL | Application Name | Enter a custom application name. |
| | Project Name | default |
| | SYNC POLICY | Select a synchronization policy as needed. Valid values: Manual (when the Git repository changes, you must manually synchronize the changes to the destination cluster) and Automatic (the Argo CD server checks the Git repository for changes every 3 minutes and automatically applies them to the destination cluster). |
| | SYNC OPTIONS | Select AUTO-CREATE NAMESPACE. |
| SOURCE | Repository URL | Select the Git repository that you added in the previous step from the drop-down list. In this example, select https://github.com/AliyunContainerService/gitops-demo.git. |
| | Revision | Select Branches: `gateway-demo`. |
| | Path | manifests/helm/web-demo |
| DESTINATION | Cluster URL | Select the URL of Cluster 1 or Cluster 2. You can also click URL on the right and select the cluster by NAME. |
| | Namespace | This topic uses `gateway-demo`. Application resources, such as the Service and Deployment, are created in this namespace. |
| Helm | Parameters | Set `envCluster` to `cluster-demo-1` or `cluster-demo-2` to identify which backend cluster processes a request. |
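The form fields above correspond to flags of the `argocd app create` command. The following is a minimal sketch of that mapping, not a step from this topic. The function name, its three arguments, and the `ARGOCD` override variable are hypothetical, and the `--revision` value assumes the `gateway-demo` branch listed in the table.

```shell
# Sketch only: create one Argo CD application with the same settings as
# the UI form. Hypothetical helper; adjust the values to your environment.
#   $1 = application name
#   $2 = destination cluster URL (Cluster 1 or Cluster 2)
#   $3 = value for the envCluster Helm parameter
create_web_demo_app() {
  "${ARGOCD:-argocd}" app create "$1" \
    --project default \
    --repo https://github.com/AliyunContainerService/gitops-demo.git \
    --revision gateway-demo \
    --path manifests/helm/web-demo \
    --dest-server "$2" \
    --dest-namespace gateway-demo \
    --sync-policy automated \
    --sync-option CreateNamespace=true \
    --helm-set envCluster="$3"
}
```

For example, `create_web_demo_app web-demo-cluster1 https://1.1.XX.XX:6443 cluster-demo-1` would create the application for Cluster 1. Run it once per cluster with a different name, URL, and `envCluster` value.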

Deploy by using the Argo CD CLI

Enable GitOps on the ACK One Fleet instance. For more information, see Enable GitOps on an ACK One Fleet instance.

Access Argo CD. For more information, see Access Argo CD by using the Argo CD CLI.

Create and publish the application.

Run the following command to add the Git repository.

```shell
argocd repo add https://github.com/AliyunContainerService/gitops-demo.git --name ackone-gitops-demos
```

Expected output:

```
Repository 'https://github.com/AliyunContainerService/gitops-demo.git' added
```

Run the following command to view the list of added Git repositories.

```shell
argocd repo list
```

Expected output:

```
TYPE  NAME  REPO                                                       INSECURE  OCI    LFS    CREDS  STATUS      MESSAGE  PROJECT
git         https://github.com/AliyunContainerService/gitops-demo.git  false     false  false  false  Successful           default
```

Run the following command to view the list of clusters.

```shell
argocd cluster list
```

Expected output:

```
SERVER                          NAME                     VERSION  STATUS      MESSAGE                                                  PROJECT
https://1.1.XX.XX:6443          c83f3cbc90a****-temp01   1.22+    Successful
https://2.2.XX.XX:6443          c83f3cbc90a****-temp02   1.22+    Successful
https://kubernetes.default.svc  in-cluster                        Unknown     Cluster has no applications and is not being monitored.
```

Use an Application to create and publish the demo application to the destination clusters.

Create a file named apps-web-demo.yaml that defines the Application resources for the demo application. Replace `repoURL` with the address of your actual repository.

Run the following command to deploy the application.

```shell
kubectl apply -f apps-web-demo.yaml
```

Run the following command to view the list of applications.

```shell
argocd app list
```

Expected output:

```
# app list
NAME                      CLUSTER                  NAMESPACE  PROJECT  STATUS  HEALTH   SYNCPOLICY  CONDITIONS  REPO                                                       PATH                     TARGET
argocd/web-demo-cluster1  https://10.1.XX.XX:6443             default  Synced  Healthy  Auto        <none>      https://github.com/AliyunContainerService/gitops-demo.git  manifests/helm/web-demo  main
argocd/web-demo-cluster2  https://10.1.XX.XX:6443             default  Synced  Healthy  Auto        <none>      https://github.com/AliyunContainerService/gitops-demo.git  manifests/helm/web-demo  main
```
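This topic does not reproduce the contents of apps-web-demo.yaml. As a minimal sketch, assuming the standard Argo CD `Application` resource and the values used in the UI procedure, the entry for Cluster 1 could be generated as follows. The `CLUSTER1_URL` variable and its placeholder value are assumptions, and `targetRevision: main` is taken from the TARGET column of the expected output above rather than from a confirmed manifest.

```shell
# Sketch only: writes one Argo CD Application for Cluster 1.
# Assumptions: the CLUSTER1_URL placeholder and targetRevision "main".
# The name and namespace follow the "argocd/web-demo-cluster1" entry in
# the expected `argocd app list` output.
CLUSTER1_URL="${CLUSTER1_URL:-https://1.1.XX.XX:6443}"

cat > apps-web-demo.yaml <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: web-demo-cluster1
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/AliyunContainerService/gitops-demo.git
    path: manifests/helm/web-demo
    targetRevision: main
    helm:
      parameters:
      - name: envCluster
        value: cluster-demo-1
  destination:
    server: ${CLUSTER1_URL}
    namespace: gateway-demo
  syncPolicy:
    automated: {}
    syncOptions:
    - CreateNamespace=true
EOF
```

A second Application for Cluster 2 would differ only in its name, the destination `server`, and the `envCluster` value (`cluster-demo-2`).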

Step 2: Use kubectl on the Fleet instance to create a multi-cluster gateway

Create an MseIngressConfig object in the ACK One Fleet to create a multi-cluster gateway and add the associated clusters to it.

Obtain and record the vSwitch ID of the ACK One Fleet instance. For more information, see Obtain a vSwitch ID.

Create a file named gateway.yaml with the following content.

Note: Replace `${vsw-id}` with the vSwitch ID obtained in the previous step, and replace `${cluster1}` and `${cluster2}` with the IDs of the associated clusters that you want to add.

For the associated clusters `${cluster1}` and `${cluster2}`, you must configure the inbound rules of their security groups to allow access from all IP addresses and ports in the vSwitch CIDR block.

```yaml
apiVersion: mse.alibabacloud.com/v1alpha1
kind: MseIngressConfig
metadata:
  annotations:
    mse.alibabacloud.com/remote-clusters: ${cluster1},${cluster2}
  name: ackone-gateway-hongkong
spec:
  common:
    instance:
      replicas: 3
      spec: 2c4g
    network:
      vSwitches:
      - ${vsw-id}
  ingress:
    local:
      ingressClass: mse
  name: mse-ingress
```

| Parameter | Description |
| --- | --- |
| `mse.alibabacloud.com/remote-clusters` | The clusters to add to the multi-cluster gateway. Enter the IDs of clusters that are already associated with the Fleet instance. |
| `spec.name` | The name of the gateway instance. |
| `spec.common.instance.spec` | Optional. The instance type of the gateway. Default value: `4c8g`. |
| `spec.common.instance.replicas` | Optional. The number of gateway replicas. Default value: 3. |
| `spec.ingress.local.ingressClass` | Optional. The name of the ingressClass to listen on. With this setting, the gateway listens on all Ingresses on the Fleet instance whose `ingressClass` field is set to `mse`. |

Run the following command to deploy the multi-cluster gateway.

```shell
kubectl apply -f gateway.yaml
```

Run the following command to verify that the multi-cluster gateway is created and is in the Listening state.

```shell
kubectl get mseingressconfig ackone-gateway-hongkong
```

Expected output:

```
NAME                      STATUS      AGE
ackone-gateway-hongkong   Listening   3m15s
```

The gateway status in the output is `Listening`. This indicates that the cloud-native gateway is created, running, and automatically listening on Ingress resources whose IngressClass is `mse` in the cluster.

The status of a gateway created from an MseIngressConfig changes in the following order: Pending, Running, and Listening. Status descriptions:

Pending: The cloud-native gateway is being created. This process may take about 3 minutes.

Running: The cloud-native gateway is created and running.

Listening: The cloud-native gateway is running and listening on Ingresses.

Failed: The cloud-native gateway is in an abnormal state. You can check the message in the Status field to identify the cause.

Run the following command to confirm that the associated clusters are added.

```shell
kubectl get mseingressconfig ackone-gateway-hongkong -ojsonpath="{.status.remoteClusters}"
```

Expected output:

```
[{"clusterId":"c7fb82****"},{"clusterId":"cd3007****"}]
```

The output contains the specified cluster IDs and no Failed message. This indicates that the associated clusters are added to the multi-cluster gateway.
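Because the gateway passes through Pending and Running before it reaches Listening, it can be convenient to poll the status instead of rerunning the command by hand. The following is a minimal sketch that parses the NAME, STATUS, and AGE table shown in the expected output above. The function names, the `KUBECTL` override variable, the retry count, and the 10-second interval are illustrative assumptions.

```shell
# Sketch: extract the STATUS column for one gateway from the table that
# `kubectl get mseingressconfig` prints (NAME STATUS AGE).
#   $1 = gateway name; the table text is read from stdin
gateway_status() {
  awk -v name="$1" '$1 == name { print $2 }'
}

# Poll until the gateway reports Listening; stop early on Failed.
#   $1 = gateway name, $2 = maximum number of attempts (default 30)
wait_for_listening() {
  name="$1"
  tries="${2:-30}"
  while [ "$tries" -gt 0 ]; do
    status=$("${KUBECTL:-kubectl}" get mseingressconfig "$name" | gateway_status "$name")
    case "$status" in
      Listening) echo "Listening"; return 0 ;;
      Failed)    echo "Failed"; return 1 ;;
    esac
    tries=$((tries - 1))
    sleep 10
  done
  echo "timeout"
  return 1
}
```

For example, `wait_for_listening ackone-gateway-hongkong` returns as soon as the gateway reaches Listening, which matters because Ingresses created in Step 3 take effect only once the gateway is listening.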

Step 3: Use Ingresses to implement cross-zone disaster recovery

The multi-cluster gateway uses Ingresses to manage traffic across multiple clusters. You can create Ingress objects on the ACK One Fleet instance to implement active zone-redundancy and primary/secondary disaster recovery.

Make sure that the `gateway-demo` namespace exists on the Fleet instance. The Ingresses created here must be in the same namespace as the Service of the demo application deployed in the previous step, which is `gateway-demo`.

Pemulihan Bencana Zona Redundansi Aktif

Buat Ingress untuk menerapkan zona redundansi aktif

Buat objek Ingress berikut di instans armada ACK One untuk menerapkan zona redundansi aktif.

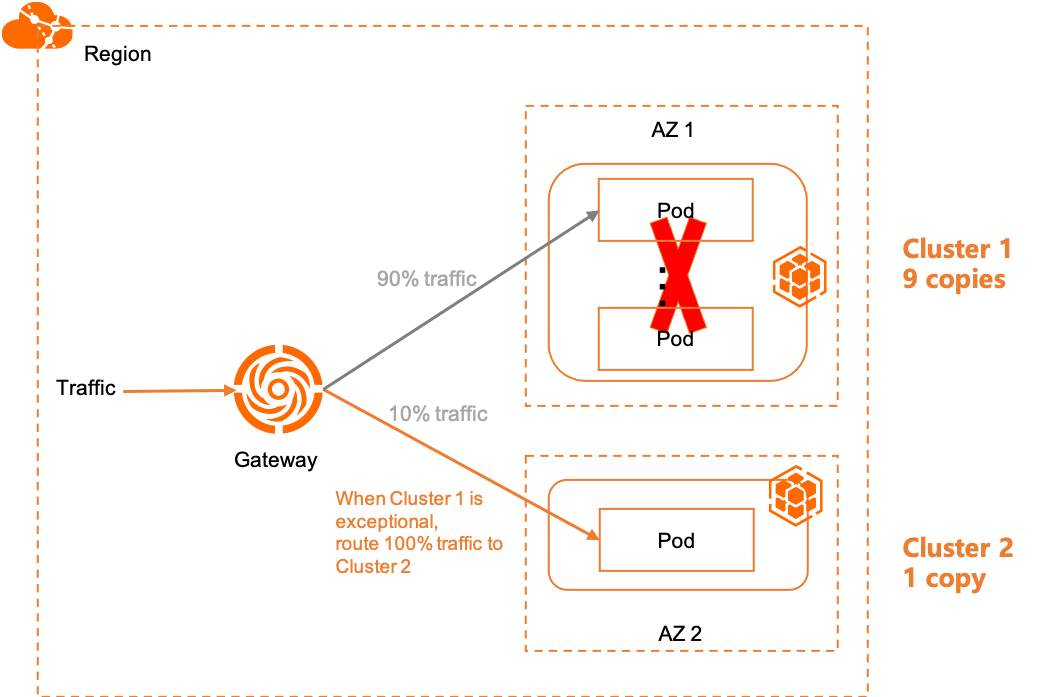

Secara default, trafik diseimbangkan ke setiap replika layanan backend dengan nama yang sama di kedua kluster yang ditambahkan ke gerbang multi-kluster. Jika backend Kluster 1 mengalami anomali, gerbang secara otomatis mengarahkan seluruh trafik ke Kluster 2. Jika rasio replika Kluster 1 terhadap Kluster 2 adalah 9:1, maka secara default 90% trafik diarahkan ke Kluster 1 dan 10% ke Kluster 2. Jika semua backend di Kluster 1 mengalami anomali, 100% trafik secara otomatis diarahkan ke Kluster 2. Gambar berikut menunjukkan topologinya.
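The replica-proportional split described above is simple arithmetic: each cluster's share is its healthy replica count divided by the total. The following sketch works through the 9:1 example and the failover case. The function name is hypothetical, and the calculation models the documented default behavior rather than the gateway's internal implementation. Percentages are truncated to whole numbers.

```shell
# Sketch: expected traffic share per cluster when the gateway balances
# across all replicas of a same-named Service.
#   $1 = healthy replicas in Cluster 1, $2 = healthy replicas in Cluster 2
expected_share() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    total = a + b
    if (total == 0) { print "no healthy backends"; exit }
    printf "cluster1=%d%% cluster2=%d%%\n", a * 100 / total, b * 100 / total
  }'
}

expected_share 9 1   # prints: cluster1=90% cluster2=10%
expected_share 0 1   # prints: cluster1=0% cluster2=100%
```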

Create a file named ingress-demo.yaml with the following content.

In the following code, the backend Service `service1` is exposed through the routing rule `/svc1` under the domain name `example.com`.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-demo
spec:
  ingressClassName: mse
  rules:
  - host: example.com
    http:
      paths:
      - path: /svc1
        pathType: Exact
        backend:
          service:
            name: service1
            port:
              number: 80
```

Run the following command to deploy the Ingress on the ACK One Fleet instance.

```shell
kubectl apply -f ingress-demo.yaml -n gateway-demo
```

Verify the canary release version

In the active zone-redundancy scenario, you can verify a canary release version of an application without affecting your services.

Create a new application in an existing cluster. Differentiate it from the original application only by the names and selectors of the Service and Deployment. Then use a request header to verify the new application.

Create a file named new-app.yaml in Cluster 1 with the following content. Only the Service and Deployment differ from the original application.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: service1-canary-1
  namespace: gateway-demo
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: web-demo-canary-1
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-demo-canary-1
  namespace: gateway-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-demo-canary-1
  template:
    metadata:
      labels:
        app: web-demo-canary-1
    spec:
      containers:
      - env:
        - name: ENV_NAME
          value: cluster-demo-1-canary
        image: 'registry-cn-hangzhou.ack.aliyuncs.com/acs/web-demo:0.6.0'
        imagePullPolicy: Always
        name: web-demo
```

Run the following command to deploy the new application in Cluster 1.

```shell
kubectl apply -f new-app.yaml
```

Create a file named new-ingress.yaml with the following content.

Create a header-based Ingress on the Fleet instance. Add annotations to enable the canary feature and specify the header. Use the header `canary-dest: cluster1` in requests to route traffic to the canary release version for verification.

`nginx.ingress.kubernetes.io/canary`: Set to `"true"` to enable the canary release feature.

`nginx.ingress.kubernetes.io/canary-by-header`: The header key for requests to this cluster.

`nginx.ingress.kubernetes.io/canary-by-header-value`: The header value for requests to this cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-demo-canary-1
  namespace: gateway-demo
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "canary-dest"
    nginx.ingress.kubernetes.io/canary-by-header-value: "cluster1"
spec:
  ingressClassName: mse
  rules:
  - host: example.com
    http:
      paths:
      - path: /svc1
        pathType: Exact
        backend:
          service:
            name: service1-canary-1
            port:
              number: 80
```

Run the following command to deploy the header-based Ingress on the Fleet instance.

```shell
kubectl apply -f new-ingress.yaml
```

Verify active zone-redundancy

Change the number of replicas deployed to Cluster 1 to 9 and the number of replicas deployed to Cluster 2 to 1. The expected result is that traffic is routed to Cluster 1 and Cluster 2 at a ratio of 9:1 by default. When Cluster 1 becomes abnormal, all traffic is automatically routed to Cluster 2.

Run the following command to obtain the public IP address of the multi-cluster gateway.

```shell
kubectl get ingress web-demo -n gateway-demo -ojsonpath="{.status.loadBalancer}"
```

Default traffic is routed to both clusters based on the ratio

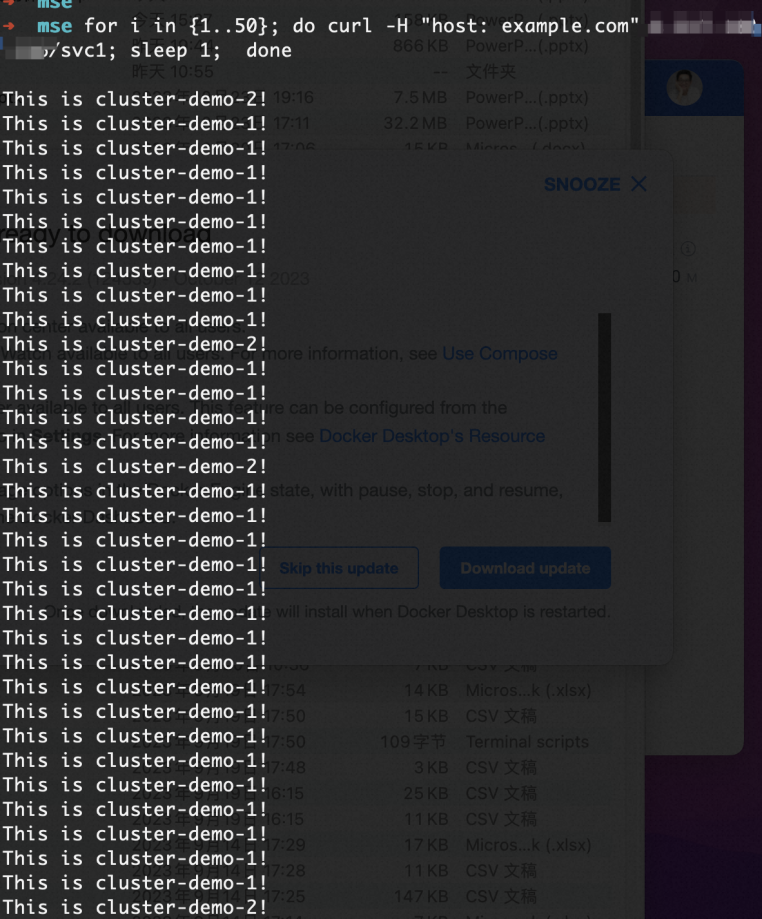

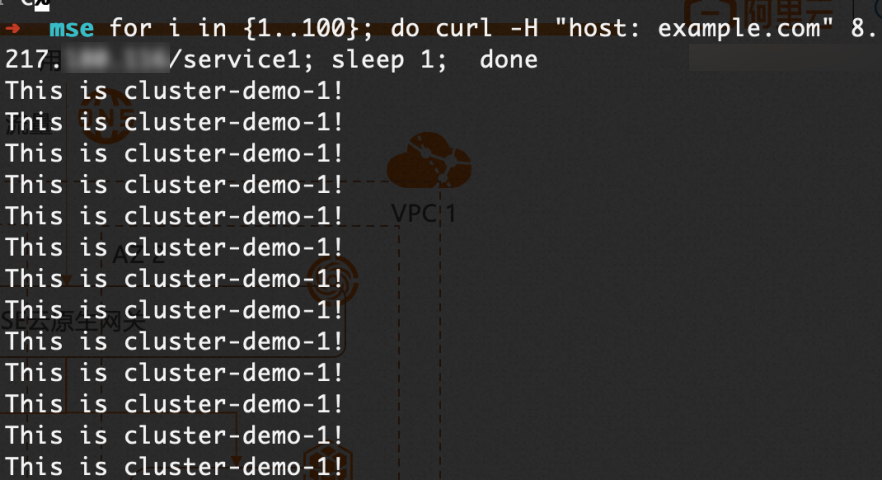

Run the following command to check traffic routing.

Replace `XX.XX.XX.XX` with the public IP address of the multi-cluster gateway that you obtained in the previous step.

```shell
for i in {1..100}; do curl -H "host: example.com" XX.XX.XX.XX/svc1; sleep 1; done
```

Expected output:

The output shows that traffic is balanced between Cluster 1 and Cluster 2. The traffic ratio between Cluster 1 and Cluster 2 is 9:1.

All traffic is switched to Cluster 2 when Cluster 1 becomes abnormal

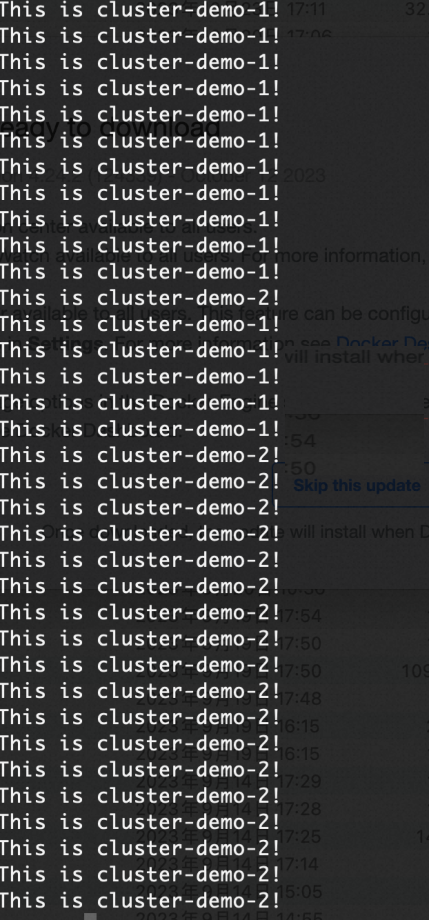

If you change the number of `replicas` of the Deployment in Cluster 1 to 0 and run the check again, every response comes from Cluster 2. This indicates that traffic is automatically switched to Cluster 2.
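To compare the observed split with the expected 9:1 ratio, or to confirm that every response comes from Cluster 2 after failover, the responses can be counted rather than read by eye. The following is a minimal sketch. The function names and the `CURL` and `GATEWAY_IP` variables are hypothetical, and no particular response format of the demo application is assumed: identical response lines are simply grouped and counted.

```shell
# Sketch: count identical response lines read from stdin and print
# "<count> <response>", most frequent first.
tally_responses() {
  sort | uniq -c | sort -rn |
    awk '{ c = $1; $1 = ""; sub(/^ /, ""); print c, $0 }'
}

# Send N requests to the /svc1 route and tally the response bodies.
# GATEWAY_IP must hold the public IP address of the gateway.
#   $1 = number of requests (default 100)
probe_gateway() {
  n="${1:-100}"
  i=0
  while [ "$i" -lt "$n" ]; do
    "${CURL:-curl}" -s -H "host: example.com" "${GATEWAY_IP}/svc1"
    echo    # make sure each response ends with a newline
    i=$((i + 1))
  done | grep -v '^$' | tally_responses
}
```

With `GATEWAY_IP` set, `probe_gateway 100` prints one line per distinct response. With a 9:1 replica ratio, the counts should come out at roughly 90 and 10. After Cluster 1 is scaled to 0, a single line should account for all 100 requests.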

Arahkan permintaan ke versi rilis canary menggunakan header

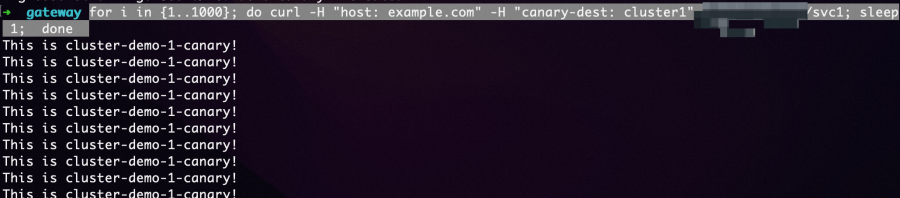

Jalankan perintah berikut untuk memeriksa routing trafik.

Ganti

XX.XX.XX.XXdengan alamat IP yang diperoleh setelah aplikasi dan Ingress dideploy seperti yang dijelaskan di bagian Verifikasi versi rilis canary.for i in {1..100}; do curl -H "host: example.com" -H "canary-dest: cluster1" xx.xx.xx.xx/svc1; sleep 1; doneOutput yang diharapkan:

Output menunjukkan bahwa permintaan dengan header

Output menunjukkan bahwa permintaan dengan header canary-dest: cluster1semuanya diarahkan ke versi rilis canary di Kluster 1.

Pemulihan bencana primer/sekunder

Buat objek Ingress berikut di instans armada ACK One untuk menerapkan pemulihan bencana primer/sekunder.

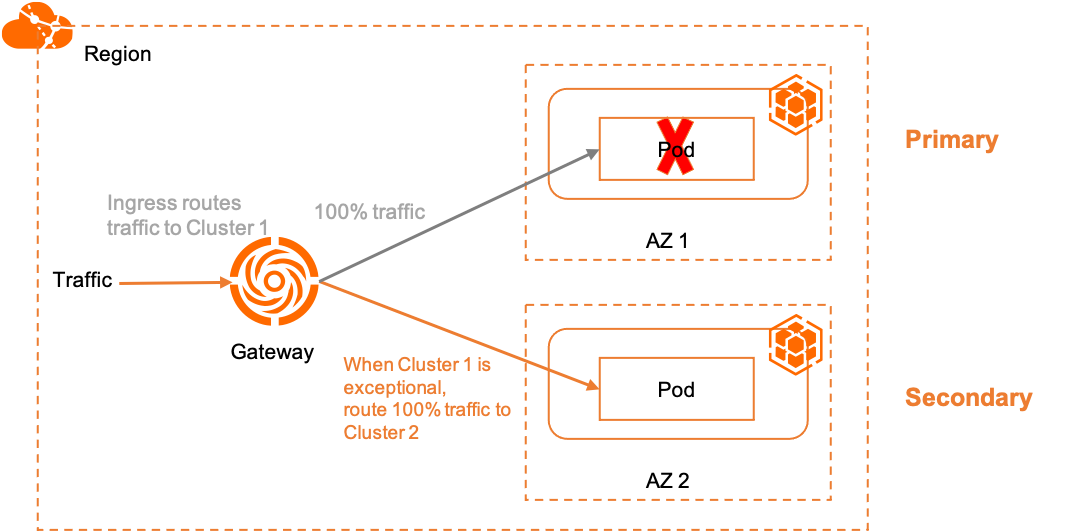

Ketika backend kedua kluster dalam kondisi normal, trafik hanya diarahkan ke backend layanan Kluster 1. Jika backend Kluster 1 mengalami anomali, gerbang secara otomatis mengarahkan trafik ke Kluster 2. Gambar berikut menunjukkan topologinya.

Create an Ingress to implement primary/secondary disaster recovery

Create a file named ingress-demo-cluster-one.yaml with the following content.

Add the annotations `mse.ingress.kubernetes.io/service-subset` and `mse.ingress.kubernetes.io/subset-labels` to the YAML file of the Ingress object to expose the backend Service `service1` through `/service1` under the domain name `example.com`. For more information about the annotations supported by MSE Ingress, see Annotations supported by MSE Ingress gateways.

`mse.ingress.kubernetes.io/service-subset`: The name of the Service subset. We recommend that you specify a readable value related to the destination cluster.

`mse.ingress.kubernetes.io/subset-labels`: Specifies the ID of the destination cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    mse.ingress.kubernetes.io/service-subset: cluster-demo-1
    mse.ingress.kubernetes.io/subset-labels: |
      topology.istio.io/cluster ${cluster1-id}
  name: web-demo-cluster-one
spec:
  ingressClassName: mse
  rules:
  - host: example.com
    http:
      paths:
      - path: /service1
        pathType: Exact
        backend:
          service:
            name: service1
            port:
              number: 80
```

Run the following command to deploy the Ingress on the ACK One Fleet instance.

```shell
kubectl apply -f ingress-demo-cluster-one.yaml -n gateway-demo
```
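The manifest above still contains the `${cluster1-id}` placeholder, which must be replaced with a real cluster ID before the Ingress is applied. One way to do this without editing the file by hand is a small filter, sketched below. The function name and its arguments are hypothetical, and the sketch assumes cluster IDs contain no `|` character.

```shell
# Sketch: replace a ${placeholder} in a manifest with a concrete value.
# Reads the manifest on stdin and writes the result to stdout.
#   $1 = placeholder name without the surrounding ${ }
#   $2 = replacement value, for example a cluster ID
fill_placeholder() {
  sed "s|[$]{$1}|$2|g"
}
```

For example, `fill_placeholder cluster1-id "$CLUSTER1_ID" < ingress-demo-cluster-one.yaml | kubectl apply -n gateway-demo -f -` substitutes the ID and applies the result in one step, leaving the template file unchanged for reuse.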

Implement a cluster-level canary release

The multi-cluster gateway supports header-based Ingresses that route requests to a specific cluster. You can use this capability, together with the full-replica load balancing capability of the Ingress object described in the Create an Ingress to implement primary/secondary disaster recovery section, to implement header-based canary releases.

Create an Ingress as described in Create an Ingress to implement primary/secondary disaster recovery.

Create an Ingress with header-related annotations on the ACK One Fleet instance to implement a cluster-level canary release. When a request header matches the Ingress configuration, the request is routed to the backend of the canary release version.

Create a file named ingress-demo-cluster-gray.yaml with the following content.

In the following YAML file for the Ingress object, replace `${cluster2-id}` with the ID of the target cluster. In addition to the annotations `mse.ingress.kubernetes.io/service-subset` and `mse.ingress.kubernetes.io/subset-labels`, add the following annotations to expose the backend Service `service1` for the routing rule `/service1` of the domain name `example.com`.

`nginx.ingress.kubernetes.io/canary`: Set to `"true"` to enable the canary release feature.

`nginx.ingress.kubernetes.io/canary-by-header`: The header key for requests to this cluster.

`nginx.ingress.kubernetes.io/canary-by-header-value`: The header value for requests to this cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    mse.ingress.kubernetes.io/service-subset: cluster-demo-2
    mse.ingress.kubernetes.io/subset-labels: |
      topology.istio.io/cluster ${cluster2-id}
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "app-web-demo-version"
    nginx.ingress.kubernetes.io/canary-by-header-value: "gray"
  name: web-demo-cluster-gray
spec:
  ingressClassName: mse
  rules:
  - host: example.com
    http:
      paths:
      - path: /service1
        pathType: Exact
        backend:
          service:
            name: service1
            port:
              number: 80
```

Run the following command to deploy the Ingress on the ACK One Fleet instance.

```shell
kubectl apply -f ingress-demo-cluster-gray.yaml -n gateway-demo
```

Verify primary/secondary disaster recovery

To simplify verification, change the number of replicas deployed to Cluster 1 and Cluster 2 to 1 each. The expected result is that default traffic is routed only to Cluster 1, traffic with the canary header is routed to Cluster 2, and when the application in Cluster 1 becomes abnormal, default traffic is also routed to Cluster 2.
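The three expected outcomes can be written down as a small decision function, which makes it easy to see what each verification below should return. This is only a model of the behavior described in this section, not the gateway's implementation. The function name and the `healthy`/`unhealthy` labels are hypothetical.

```shell
# Sketch: which cluster should serve a request in the primary/secondary
# setup with the cluster-level canary Ingress in place.
#   $1 = value of the app-web-demo-version request header ("" if absent)
#   $2 = state of the Cluster 1 backend: "healthy" or "unhealthy"
expected_cluster() {
  if [ "$1" = "gray" ]; then
    echo "cluster2"    # canary header always targets Cluster 2
  elif [ "$2" = "healthy" ]; then
    echo "cluster1"    # default traffic stays on the primary
  else
    echo "cluster2"    # failover when the primary is abnormal
  fi
}

expected_cluster ""     healthy     # prints: cluster1
expected_cluster "gray" healthy     # prints: cluster2
expected_cluster ""     unhealthy   # prints: cluster2
```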

Run the following command to obtain the public IP address of the multi-cluster gateway.

```shell
kubectl get ingress web-demo-cluster-one -n gateway-demo -ojsonpath="{.status.loadBalancer}"
```

Default traffic is routed to Cluster 1

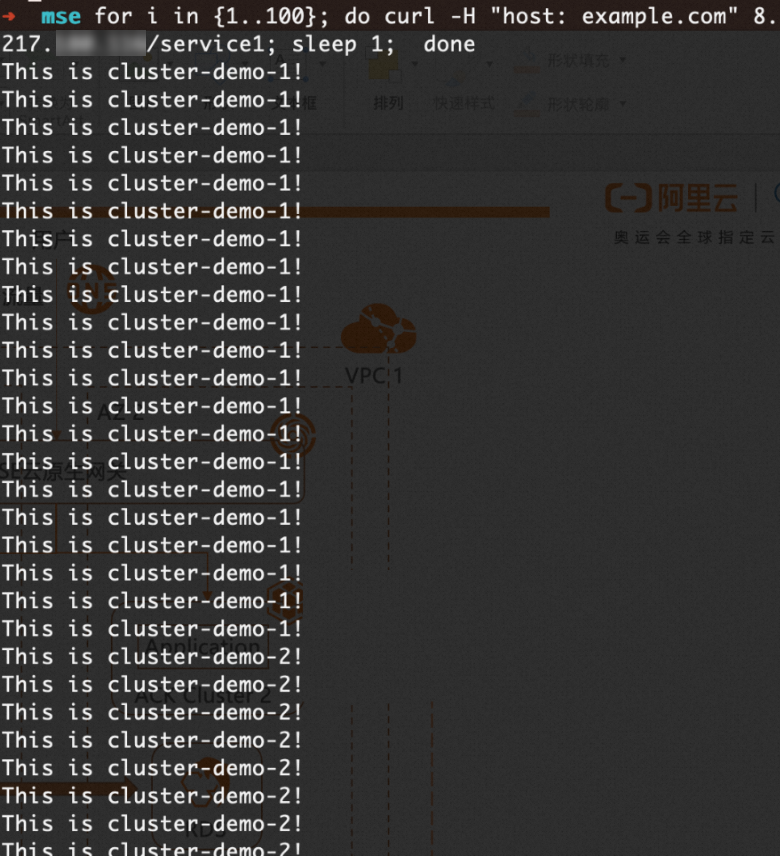

Run the following command to check whether default traffic is routed to Cluster 1.

Replace `XX.XX.XX.XX` with the public IP address of the multi-cluster gateway that you obtained in the previous step.

```shell
for i in {1..100}; do curl -H "host: example.com" XX.XX.XX.XX/service1; sleep 1; done
```

Expected output:

The output shows that all default traffic is routed to Cluster 1.

Route requests to the canary release version by using a header

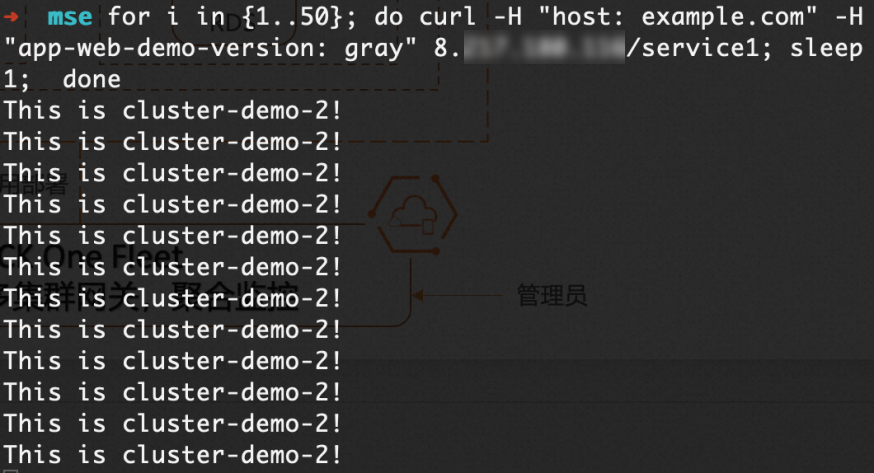

Run the following command to check whether requests with the header are routed to the canary release version.

Replace `XX.XX.XX.XX` with the public IP address of the multi-cluster gateway that you obtained in the previous step.

```shell
for i in {1..50}; do curl -H "host: example.com" -H "app-web-demo-version: gray" XX.XX.XX.XX/service1; sleep 1; done
```

Expected output:

The output shows that requests with the header `app-web-demo-version: gray` are all routed to Cluster 2 (the canary release version).

Traffic is switched to Cluster 2 when Cluster 1 becomes abnormal

If you change the number of `replicas` of the Deployment in Cluster 1 to 0 and run the default-traffic check again, every response comes from Cluster 2. This indicates that traffic is automatically switched to Cluster 2.

References

To learn about the north-south traffic management capabilities of ACK One multi-cluster gateways, see Manage north-south traffic.

For more information about application distribution with ACK One GitOps, see GitOps quick start.