Create an evaluation dataset based on the sample template. Each evaluation dataset must contain at least 50 question-and-answer pairs. You can then use the dataset in evaluation tasks to evaluate Q&A performance.

Procedure

Log on to the OpenSearch console.

Select the destination region and switch to OpenSearch LLM-Based Conversational Search Edition.

In the instance list, click Manage on the right side of the destination instance. In the left-side navigation pane, select Effect Comparison.

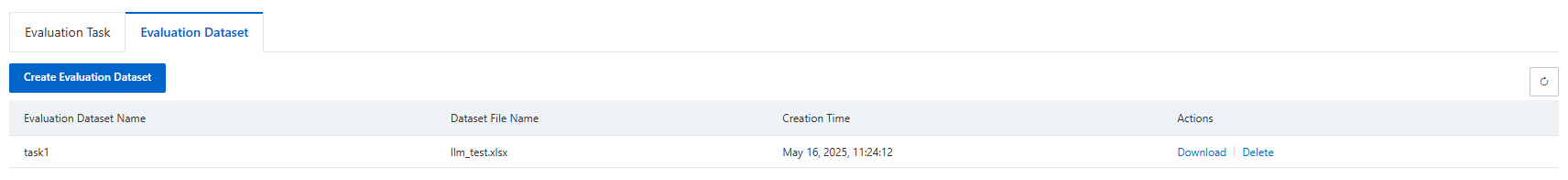

On the Evaluation Dataset tab, click Create Evaluation Dataset, enter the evaluation dataset name, and upload the evaluation dataset in Excel or JSON format according to the Data Sample.

NoteTo obtain accurate evaluation results, the evaluation dataset must contain at least 50 questions.

After the evaluation dataset is created, you can perform the following operations on it:

Download: Download the evaluation dataset.

Delete: Delete the evaluation dataset.
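Before uploading, it can help to check the 50-question minimum locally. The following Python script is a minimal sketch that builds a JSON evaluation dataset and validates it. The field names `question` and `answer` and the list-of-objects layout are assumptions for illustration only; match them to the Data Sample shown in the console. The script does not cover the Excel format.

```python
import json

# Minimum number of questions required for an evaluation dataset.
MIN_QUESTIONS = 50


def build_dataset(pairs):
    """Convert (question, answer) tuples into a list of records.

    The "question"/"answer" keys are hypothetical; use the field names
    from the console's Data Sample.
    """
    return [{"question": q, "answer": a} for q, a in pairs]


def validate_dataset(records, min_questions=MIN_QUESTIONS):
    """Return a list of problems; an empty list means no problems were found."""
    problems = []
    if len(records) < min_questions:
        problems.append(
            f"only {len(records)} questions; at least {min_questions} are required"
        )
    for i, rec in enumerate(records):
        if not str(rec.get("question", "")).strip():
            problems.append(f"record {i}: empty question")
        if not str(rec.get("answer", "")).strip():
            problems.append(f"record {i}: empty answer")
    return problems


def write_dataset(records, path):
    """Write the records as UTF-8 JSON, keeping non-ASCII (e.g. Chinese) text readable."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)


if __name__ == "__main__":
    pairs = [(f"Sample question {i}?", f"Sample answer {i}.") for i in range(50)]
    records = build_dataset(pairs)
    issues = validate_dataset(records)
    if issues:
        raise SystemExit("\n".join(issues))
    write_dataset(records, "eval_dataset.json")
    print(f"Wrote {len(records)} Q&A pairs")
```

Replace the generated sample pairs with your own questions and reference answers; the validation only checks counts and empty fields, not answer quality.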

Next step

Create an evaluation task that uses the evaluation dataset to evaluate Q&A performance.

Test datasets

1. University admissions website dataset

Overview: The source documents come from the admissions website of Renmin University of China and mostly cover admission policies and department introductions.

Source: https://arxiv.org/abs/2406.05654

Dataset: domainrag_xlsx_corpus.xlsx

Q&A set: basic_qa_anslen1.xlsx

2. Insurance clause Q&A dataset

Overview: The source documents describe various insurance products and their corresponding clauses.

Source: https://tianchi.aliyun.com/competition/entrance/532194/information

Dataset: tianchi_doc_with_title.json

Q&A set: dev_qa_sample_50_for_llm.xlsx

3. CRUD news dataset

Overview: The source documents come from Chinese news websites and cover news published after July 2023.

Source: https://arxiv.org/abs/2401.17043

Dataset (split into three parts because of the console's file size limit):

Q&A set: crud_1doc_qa_sample100_for_llm.xlsx
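If your own document corpus is also too large to upload as one file, you can split it into several parts. The following Python sketch greedily packs documents, in their original order, into JSON arrays whose encoded size stays under a byte limit. It assumes the corpus is a JSON array of document objects; the actual console size limit is not stated in this document, and the `<prefix>_partN.json` file naming is hypothetical.

```python
import json

# Compact separators so per-document sizes add up exactly to the file size.
SEPARATORS = (",", ":")


def encoded_size(doc):
    """Size in bytes of one document serialized as compact UTF-8 JSON."""
    return len(json.dumps(doc, ensure_ascii=False, separators=SEPARATORS).encode("utf-8"))


def split_by_size(docs, max_bytes):
    """Greedily pack documents into JSON arrays of at most max_bytes each.

    A serialized array costs 2 bytes for "[" and "]" plus 1 byte per comma
    between items, in addition to the documents themselves.
    """
    chunks, current, current_size = [], [], 2
    for doc in docs:
        size = encoded_size(doc)
        if 2 + size > max_bytes:
            raise ValueError("a single document is larger than max_bytes")
        extra = size + (1 if current else 0)  # comma before every item except the first
        if current and current_size + extra > max_bytes:
            chunks.append(current)  # close the current part and start a new one
            current, current_size, extra = [], 2, size
        current.append(doc)
        current_size += extra
    if current:
        chunks.append(current)
    return chunks


def write_parts(docs, max_bytes, prefix):
    """Write each part to <prefix>_part<N>.json (hypothetical naming); return the file names."""
    names = []
    for n, chunk in enumerate(split_by_size(docs, max_bytes), start=1):
        name = f"{prefix}_part{n}.json"
        with open(name, "w", encoding="utf-8") as f:
            json.dump(chunk, f, ensure_ascii=False, separators=SEPARATORS)
        names.append(name)
    return names
```

For example, `write_parts(docs, max_bytes=limit, prefix="my_corpus")` with `limit` set to the console's documented upload limit. Packing by encoded size rather than by document count keeps every part under the limit even when document lengths vary widely.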