A stored procedure named hg_insert_overwrite is added to Hologres V2.0. You can use the stored procedure to import MaxCompute or Hologres table data into a Hologres internal table in a convenient manner. This topic describes how to use the hg_insert_overwrite stored procedure to import MaxCompute or Hologres table data into a Hologres internal table.

Limits

In Hologres V2.0.15 and later, after you execute the

set hg_experimental_hg_insert_overwrite_enable_view=on;statement to enable the Grand Unified Configuration (GUC), you can use thehg_insert_overwritestored procedure to import data into a table on which a standard view depends. You cannot use thehg_insert_overwritestored procedure to import data into a table on which a physical view depends.If you want to import only specific fields, the fields that you want to import must be in the same sequence as and correspond to the fields in the source table.

For versions earlier than Hologres V2.0.11, you must manually clear temporary tables if the import fails. In Hologres V2.0.11 and later, the system automatically clears temporary tables.

The hg_insert_overwrite operation requires the table owner to create a temporary table. Therefore, only the superuser and the table owner have the permissions to perform the hg_insert_overwrite operation.

Usage notes

Syntax:

call hg_insert_overwrite('holo_table' regclass, ['partition_value' text|int|varchar|date], 'sql' text);Parameters:

holo_table: a Hologres internal table to which you want to import data. The table must already exist. If the Hologres internal table is a partitioned table, you must also configure the partition_value parameter.

partition_value: the value of a partition key column in a partitioned table. This parameter is required only if you set the holo_table parameter to a partitioned table. Data of the INT, TEXT, VARCHAR, or DATE data type can be used as the values of partition key columns.

sql: a standard

SELECTstatement that is used to query data in a MaxCompute or Hologres table. Make sure that the value of the partition field specified in theSELECTstatement is the same as the value of the partition_value parameter. If single quotation marks (') are contained in an SQL statement, you can use$$sql$$to rewrite the SQL statement. This way, the single quotation marks (') are escaped.

Sample statements:

Import data from the test1 table into the 20230421 partition of a partitioned table named parent_table:

call hg_insert_overwrite('parent_table','20230421',$$select * from test1 where partition_value='20230421'$$);Import data from the test1 table into a non-partitioned table named holo_table:

call hg_insert_overwrite('holo_table','select * from test1');

Examples

Example 1: Import data from a MaxCompute non-partitioned table into Hologres

Prepare a non-partitioned table in MaxCompute.

Create a non-partitioned table in MaxCompute. You can also use an existing non-partitioned table in MaxCompute. In this example, the

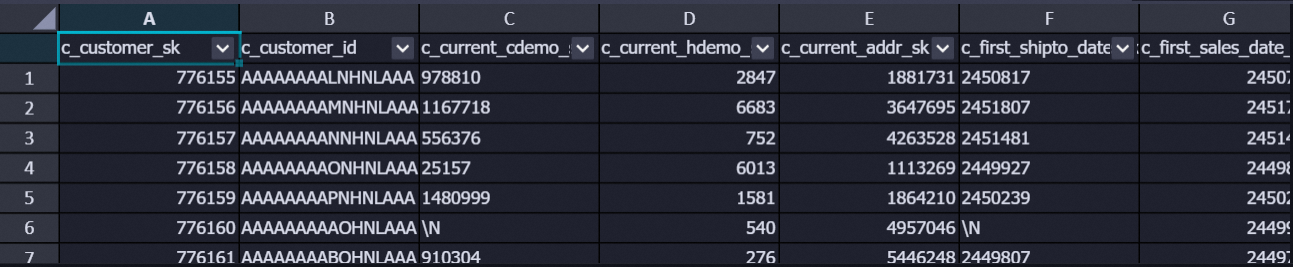

customertable in a MaxCompute public dataset project named public_data is used. The following sample code shows the DDL statement that is used to create the customer table and the SELECT statement that is used to query the customer table:-- The DDL statement that is used to create the customer table: CREATE TABLE IF NOT EXISTS public_data.customer( c_customer_sk BIGINT, c_customer_id STRING, c_current_cdemo_sk BIGINT, c_current_hdemo_sk BIGINT, c_current_addr_sk BIGINT, c_first_shipto_date_sk BIGINT, c_first_sales_date_sk BIGINT, c_salutation STRING, c_first_name STRING, c_last_name STRING, c_preferred_cust_flag STRING, c_birth_day BIGINT, c_birth_month BIGINT, c_birth_year BIGINT, c_birth_country STRING, c_login STRING, c_email_address STRING, c_last_review_date STRING, useless STRING); -- The SELECT statement that is used to query the customer table: SELECT * FROM public_data.customer;The following figure shows part of the data in the customer table.

Create a foreign table in Hologres.

Create a foreign table in Hologres to map the source table in MaxCompute. Sample statements:

CREATE FOREIGN TABLE customer ( "c_customer_sk" int8, "c_customer_id" text, "c_current_cdemo_sk" int8, "c_current_hdemo_sk" int8, "c_current_addr_sk" int8, "c_first_shipto_date_sk" int8, "c_first_sales_date_sk" int8, "c_salutation" text, "c_first_name" text, "c_last_name" text, "c_preferred_cust_flag" text, "c_birth_day" int8, "c_birth_month" int8, "c_birth_year" int8, "c_birth_country" text, "c_login" text, "c_email_address" text, "c_last_review_date" text, "useless" text ) SERVER odps_server OPTIONS (project_name 'public_data', table_name 'customer');The following table describes the parameters in the statement.

Parameter

Description

SERVER

The server in which the foreign table resides. You can use the odps_server server that is created at the underlying layer of Hologres. For more information, see Postgres FDW.

project_name

The name of the project where the MaxCompute table that you want to query resides.

table_name

The name of the MaxCompute table that you want to query.

Create an internal table in Hologres.

Create an internal table in Hologres to receive the data that is imported from the MaxCompute table. Sample statements:

NoteThe following sample statement is provided only for reference. When you create a table, configure the schema based on your business requirements and create appropriate indexes to achieve high query performance. For information about table properties, see Overview.

-- Create a column-oriented table to store imported data. BEGIN; CREATE TABLE public.holo_customer ( "c_customer_sk" int8, "c_customer_id" text, "c_current_cdemo_sk" int8, "c_current_hdemo_sk" int8, "c_current_addr_sk" int8, "c_first_shipto_date_sk" int8, "c_first_sales_date_sk" int8, "c_salutation" text, "c_first_name" text, "c_last_name" text, "c_preferred_cust_flag" text, "c_birth_day" int8, "c_birth_month" int8, "c_birth_year" int8, "c_birth_country" text, "c_login" text, "c_email_address" text, "c_last_review_date" text, "useless" text ); COMMIT;Import data into the Hologres internal table.

Execute statements to import data from the MaxCompute table into the Hologres internal table. Sample statements:

NoteIf your MaxCompute data is updated on a regular basis, we recommend that you execute the IMPORT FOREIGN SCHEMA statement to refresh the metadata of the foreign table that matches the MaxCompute table before data import to obtain the latest MaxCompute data.

IMPORT FOREIGN SCHEMA <project_name> LIMIT to (customer) FROM server odps_server INTO PUBLIC options(if_table_exist 'update');-- Update the metadata in the foreign table. select pg_sleep(30);-- Wait for a given number of seconds before you import data into Hologres. Otherwise, the import operation may fail due to the latency that is caused by the update of metadata. call hg_insert_overwrite('holo_customer', 'SELECT * FROM customer where c_birth_year > 1980');Query data.

Query the data of the MaxCompute table in Hologres. Sample statement:

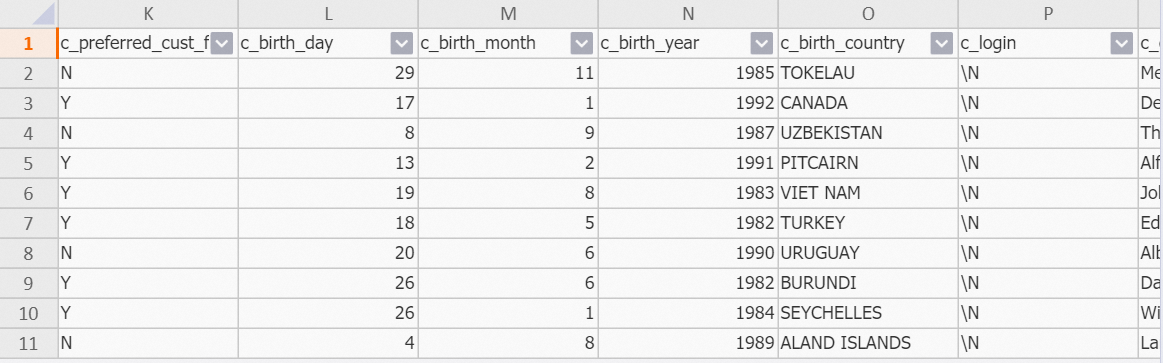

SELECT * FROM holo_customer limit 10;The following figure shows the returned result.

Example 2: Import data from a MaxCompute partitioned table into Hologres

Prepare a partitioned table in MaxCompute.

Create a partitioned table in MaxCompute. The following sample code shows the statements that are used to create the table, add a partition to the table, and insert data into the partition:

DROP TABLE IF EXISTS odps_sale_detail; CREATE TABLE IF NOT EXISTS odps_sale_detail ( shop_name STRING ,customer_id STRING ,total_price DOUBLE ) PARTITIONED BY ( sale_date STRING ) ; -- Add the 20210815 partition to the partitioned table. ALTER TABLE odps_sale_detail ADD IF NOT EXISTS PARTITION(sale_date='20210815') ; -- Insert data into the 20210815 partition. INSERT OVERWRITE TABLE odps_sale_detail PARTITION(sale_date='20210815') VALUES ('s1','c1',100.1), ('s2','c2',100.2), ('s3','c3',100.3) ;Create a foreign table in Hologres.

Create a foreign table in Hologres to map the source table in MaxCompute. Sample statements:

DROP FOREIGN TABLE IF EXISTS odps_sale_detail; -- Create a foreign table. IMPORT FOREIGN SCHEMA <maxcompute_project> LIMIT to ( odps_sale_detail ) FROM SERVER odps_server INTO public OPTIONS(if_table_exist 'error',if_unsupported_type 'error');maxcompute_project specifies the name of the project to which the MaxCompute table belongs.

Create an internal table in Hologres.

Create an internal table in Hologres to receive the data that is imported from the MaxCompute table. Sample statements:

DROP TABLE IF EXISTS holo_sale_detail; -- Create a partitioned table (an internal table) in Hologres. BEGIN ; CREATE TABLE IF NOT EXISTS holo_sale_detail ( shop_name TEXT ,customer_id TEXT ,total_price FLOAT8 ,sale_date TEXT ) PARTITION BY LIST(sale_date); COMMIT;Import data into the Hologres internal table.

Execute a statement to import data from the MaxCompute table into the Hologres internal table. Sample statements:

call hg_insert_overwrite('holo_sale_detail', '20210815', $$ SELECT * FROM public.odps_sale_detail WHERE sale_date='20210815' $$);Query data.

Query the data of the MaxCompute table in Hologres. Sample statement:

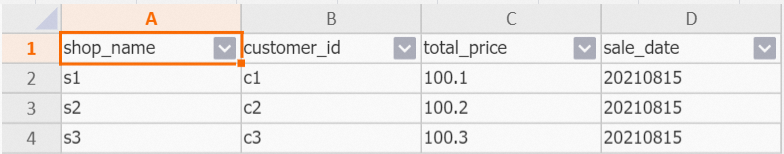

SELECT * FROM holo_sale_detail;The following figure shows a sample result.

Example 3: Import data from a Hologres non-partitioned table (internal table) into a Hologres partitioned table (internal table)

Create a Hologres non-partitioned table (internal table) and prepare data.

Create a non-partitioned table (internal table) in Hologres. Sample statements:

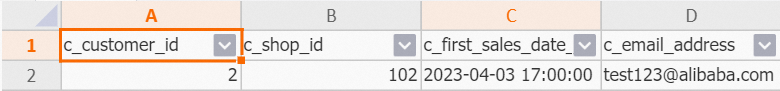

BEGIN; CREATE TABLE public.data( c_customer_id int, c_shop_id int, c_first_sales_date_sk timestamp, c_email_address text ); COMMIT; INSERT INTO public.data VALUES (1,101,'2023-04-03 10:00:00','test123@alibaba.com'), (2,102,'2023-04-03 11:00:00','test123@alibaba.com'), (2,102,'2023-04-03 17:00:00','test123@alibaba.com');Create a Hologres partitioned table (internal table).

Create a partitioned table (internal table) in Hologres to receive data. Sample statements:

BEGIN; CREATE TABLE public.hologres_parent( c_customer_id int, c_shop_id int, c_first_sales_date_sk timestamp, c_email_address text ) PARTITION BY LIST(c_customer_id); CREATE TABLE public.hologres_child1 PARTITION OF public.hologres_parent FOR VALUES IN(1); CREATE TABLE public.hologres_child2 PARTITION OF public.hologres_parent FOR VALUES IN(2); CREATE TABLE public.hologres_child3 PARTITION OF public.hologres_parent FOR VALUES IN(3); COMMIT;Import data from the Hologres non-partitioned table into the Hologres partitioned table.

call hg_insert_overwrite('public.hologres_parent','1', 'SELECT * FROM public.data where c_customer_id=1');Query data.

Query the data in the Hologres partitioned table. Sample statement:

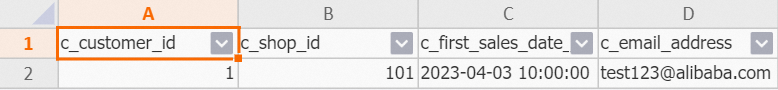

SELECT * FROM public.hologres_parent;The following figure shows a sample result.

Example 4: Import data from a Hologres non-partitioned table (internal table) into another Hologres non-partitioned table (internal table)

Create a Hologres non-partitioned table (internal table) and prepare data.

Create a non-partitioned table (internal table) in Hologres. Sample statements:

BEGIN; CREATE TABLE public.data( c_customer_id int, c_shop_id int, c_first_sales_date_sk timestamp, c_email_address text ); COMMIT; INSERT INTO public.data VALUES (1,101,'2023-04-03 10:00:00','test123@alibaba.com'), (2,102,'2023-04-03 11:00:00','test123@alibaba.com'), (2,102,'2023-04-03 17:00:00','test123@alibaba.com');Create another non-partitioned table (internal table) in Hologres.

Create another non-partitioned table (internal table) in Hologres to receive data. Sample statements:

BEGIN; CREATE TABLE public.re_data( c_customer_id int, c_shop_id int, c_first_sales_date_sk timestamp, c_email_address text ); COMMIT;Import data from the source Hologres internal table into the destination Hologres non-partitioned table.

call hg_insert_overwrite('public.re_data', $$SELECT * FROM public.data where c_first_sales_date_sk='2023-04-03 17:00:00' and c_email_address ='test123@alibaba.com'$$);Query data.

Query the data in the destination Hologres non-partitioned table. Sample statement:

SELECT * FROM public.re_data;The following figure shows a sample result.

FAQ

What do I do if data fails to be imported?

Problem description: An out of memory (OOM) error occurs when MaxCompute data is imported. Error message:

Query executor exceeded total memory limitation xxxxx: yyyy bytes used.Solution:

Step 1: Query the execution plan. In some cases, the CALL statement contains a SELECT statement, but no analysis operation is performed on some tables. In this case, the join order decision of the query optimizer is invalid, and excessive memory overheads are caused.

To fix the issue, execute the

analyze <tablename>statement on all involved internal and foreign tables to update the metadata of the tables. This helps the query optimizer generate better execution plans.Step 2: Limit the number of data entries imported at the same time. If the MaxCompute table contains a large number of columns and the amount of data in each row is large, the amount of data imported at the same time is large. This causes excessive memory overheads. To fix the issue, add a statement to configure the hg_experimental_query_batch_size parameter before the CALL statement.

set hg_experimental_query_batch_size = 1024;-- Default value: 8192. call hg_insert_overwrite('xx','select xx from xx');Step 3: Reduce memory overheads during data import by reducing the data import concurrency. The concurrency is specified by the

hg_experimental_foreign_table_executor_max_dopparameter. The default value of this parameter equals the number of CPU cores of the Hologres instance. You can specify a smaller value to reduce memory overheads during data import.set hg_experimental_foreign_table_executor_max_dop = 8; call hg_insert_overwrite('xx','select xx from xx');Step 4: Reduce the number of DML statements that can be executed at the same time. The number is specified by the

hg_foreign_table_executor_dml_max_dopparameter. The default value is 32. You can specify a smaller value during data import to prevent the import operation from occupying excessive resources.set hg_foreign_table_executor_dml_max_dop = 16; call hg_insert_overwrite('xx','select xx from xx');

Subsequent operation: drop temporary tables

When data is imported from a foreign table into an internal table, a temporary table is created to maintain good atomicity. Data is first written to the temporary table. Then, the temporary table is renamed as the destination table. This minimizes the impact on data queries. If data import fails and you do not want to have a retry, you need to drop the temporary table.

Statements:

Statement to drop a non-partitioned table:

drop table if exists system_insert_overwrite_${<holo_table>}_temp;Replace holo_table with the name of the destination internal table.

Statement if a destination partitioned table exists:

Use

partition_valuesto obtain the desired child partitioned table, such as holo_table_chile.drop table if exists system_insert_overwrite_${holo_table_chile}_temp;Replace holo_table_chile with the name of the child partitioned table.

Statement if a destination partitioned table does not exist:

drop table if exists ${holo_table}_${partition_values};Replace holo_table with the name of the parent partitioned table. Replace partition_values with the values of specific primary key columns.

Examples:

The destination table is a non-partitioned table.

Statement to import data:

call hg_insert_overwrite('ads_holo_table','select * from ads_holo_table_foreign');Statement to drop the temporary table:

drop table if exists system_insert_overwrite_ads_holo_table_temp;The destination table is an existing partitioned table.

Statement to import data:

call hg_insert_overwrite('ads_holo_table','20221207','select * from ads_holo_table_foreign');Statement to drop the temporary table:

drop table if exists system_insert_overwrite_ads_holo_table_20221207_temp;The destination table is a partitioned table that does not exist.

Statement to import data:

call hg_insert_overwrite('ads_holo_table','20221208','select * from ads_holo_table_foreign');Statement to drop the temporary table:

drop table if exists ads_holo_table_20221208;

FAQ

ERROR: can not InsertOverwrite table feedback_detail because materialized view feedback_day_top depends on it

Possible cause: A materialized view depends on the table to which you want to import data. Currently, you are allowed to import data into such a table.

ERROR: InsertOverwrite insert select table data failed : new row for relation "xxx" violates partition constraint

Possible cause: Invalid data is attempted to be imported to a partitioned table.