This topic describes how to use a Simple Log Service custom resource definition (CRD) in a Container Service for Kubernetes (ACK) Serverless cluster to configure log collection so that container logs are collected automatically.

Background information

Simple Log Service is an end-to-end data logging service. You can use Simple Log Service to collect, consume, deliver, query, and analyze log data without performing further development. For more information, see What is Simple Log Service?

Prerequisites

An ACK Serverless cluster is created. For more information, see Create an ACK Serverless cluster.

Simple Log Service is activated for the ACK Serverless cluster.

Log on to the Simple Log Service console. If Simple Log Service is not activated for the cluster, you are prompted to follow on-screen instructions to activate the service.

Precautions

The log collection feature that is enabled by using Simple Log Service CRDs is valid only for the Elastic Container Instance pods that are created after the CRDs are created. If you want to collect logs of existing pods, you must perform a rolling release for the existing pods.
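One way to perform such a rolling release is to change the pod template of the workload, which is what `kubectl rollout restart` does by stamping a `kubectl.kubernetes.io/restartedAt` annotation. The following Python sketch builds an equivalent patch body. The helper name `restart_patch` is a hypothetical name used only for illustration.

```python
import json
from datetime import datetime, timezone


def restart_patch(now=None):
    """Return a patch body that changes the pod template, so that the
    Deployment controller replaces every pod through a rolling update."""
    now = now or datetime.now(timezone.utc)
    stamp = now.strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "spec": {
            "template": {
                "metadata": {
                    # Same annotation key that `kubectl rollout restart` sets.
                    "annotations": {"kubectl.kubernetes.io/restartedAt": stamp}
                }
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(restart_patch()))
```

The printed JSON could be passed to `kubectl patch deployment <name> --type merge -p '<json>'`. Running `kubectl rollout restart deployment/<name>` should achieve the same result directly.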

Configure log collection

After you deploy the alibaba-log-controller component in a cluster, you can use an AliyunLogConfig CRD (CRD for log collection configurations) to configure log collection.

Log on to the Container Service for Kubernetes (ACK) console.

On the Clusters page, find the cluster that you want to manage and click the cluster name. The details page of the cluster appears.

Deploy the alibaba-log-controller component in the cluster.

In the left-side navigation pane of the details page, choose Operations > Add-ons.

Click the Logs and Monitoring tab, find the card of the alibaba-log-controller component, and then click Install.

In the message that appears, click OK.

After alibaba-log-controller is installed, Installed is displayed in the upper-right corner of the alibaba-log-controller card.

Create an AliyunLogConfig CRD.

Note: After you create an AliyunLogConfig CRD, you can view the generated Logstore and Logtail configuration in the Simple Log Service console. If you want to update the Logtail configuration, you can modify the CRD. The system automatically synchronizes the updated configurations to Simple Log Service.

Write a YAML configuration file for the AliyunLogConfig CRD by referring to the following examples.

The logs that are collected to Simple Log Service can be categorized into stdout logs (including stderr) and text logs. For more information about relevant parameters, see Logtail configurations.

Sample YAML configuration file for the CRD that is used to collect stdout logs.

Create a file named log-stdout.yaml and copy the following template into the file.

apiVersion: log.alibabacloud.com/v1alpha1
kind: AliyunLogConfig
metadata:
  name: test-stdout  # The name of the CRD, which must be unique in the current Kubernetes cluster.
spec:
  project: k8s-log-c326bc86****  # Optional. The name of the project. You can specify a name for the project. We recommend that you use the [k8s-log-<The cluster ID>] format.
  logstore: test-stdout  # Required. The name of the Logstore. If the specified Logstore does not exist, the system automatically creates a Logstore.
  shardCount: 2  # Optional. The number of shards. Default value: 2. Valid values: 1 to 10.
  lifeCycle: 90  # Optional. The period of time for which logs in the Logstore are retained. Unit: days. The parameter is valid only when you create a Logstore. Default value: 90. Valid values: 1 to 3650. The value of 3650 indicates that logs are permanently retained.
  logtailConfig:  # The Logtail configuration.
    inputType: plugin  # The type of the data source. A value of file indicates text logs, and a value of plugin indicates stdout logs.
    configName: test-stdout  # The name of the Logtail configuration. The name must be the same as the CRD name specified in the metadata.name parameter.
    inputDetail:  # The details of the Logtail configuration.
      plugin:
        inputs:
          - type: service_docker_stdout
            detail:
              Stdout: true
              Stderr: true
              # IncludeEnv:
              #   aliyun_logs_test-stdout: "stdout"

Sample YAML configuration file for the CRD that is used to collect text logs.

Create a file named log-file.yaml and copy the following template into the file.

apiVersion: log.alibabacloud.com/v1alpha1
kind: AliyunLogConfig
metadata:
  name: test-file  # The name of the CRD, which must be unique in the current Kubernetes cluster.
spec:
  project: k8s-log-c326bc86****  # Optional. The name of the project. You can specify a name for the project. We recommend that you use the [k8s-log-<The cluster ID>] format.
  logstore: test-file  # Required. The name of the Logstore. If the specified Logstore does not exist, the system automatically creates a Logstore.
  logtailConfig:  # The Logtail configuration.
    inputType: file  # The type of the data source. A value of file indicates text logs, and a value of plugin indicates stdout logs.
    configName: test-file  # The name of the Logtail configuration. The name must be the same as the CRD name specified in the metadata.name parameter.
    inputDetail:  # The details of the Logtail configuration.
      logType: common_reg_log  # The type of the logs that you want to collect. For JSON logs, set the logType parameter to json_log. For logs that are parsed by using delimiters, set it to delimiter_log.
      logPath: /log/  # The folder in which logs are stored.
      filePattern: "*.log"  # The pattern of the log file names. Wildcards are supported. Example: log_*.log.
      dockerFile: true  # To collect text logs in the container, set the dockerFile parameter to true.
      # The key that is used to parse time.
      #timeKey: 'time'
      # The format of time parsing.
      #timeFormat: '%Y-%m-%dT%H:%M:%S'
      # Prevents conflicts caused by the same collection directory that is specified in different collection configurations.
      #dockerIncludeEnv:
      #  aliyun_logs_test-file: "/log/*.log"

Run the following commands to create the AliyunLogConfig CRDs:

kubectl create -f log-stdout.yaml
kubectl create -f log-file.yaml
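Because `kubectl` also accepts JSON manifests, the stdout configuration can be generated programmatically instead of being written by hand. The following Python sketch builds such a manifest and checks the constraints stated in the sample comments (1 to 10 shards, retention of 1 to 3650 days, `configName` equal to `metadata.name`). The function name `stdout_log_config` and its defaults are illustrative assumptions and are not part of alibaba-log-controller.

```python
import json


def stdout_log_config(name, logstore, project=None, shard_count=2, life_cycle=90):
    """Build an AliyunLogConfig manifest that collects stdout and stderr."""
    if not 1 <= shard_count <= 10:
        raise ValueError("shardCount must be between 1 and 10")
    if not 1 <= life_cycle <= 3650:
        raise ValueError("lifeCycle must be between 1 and 3650 days")
    spec = {
        "logstore": logstore,
        "shardCount": shard_count,
        "lifeCycle": life_cycle,
        "logtailConfig": {
            "inputType": "plugin",  # plugin = stdout logs, file = text logs
            "configName": name,     # reuses the CRD name, as the sample requires
            "inputDetail": {
                "plugin": {
                    "inputs": [
                        {
                            "type": "service_docker_stdout",
                            "detail": {"Stdout": True, "Stderr": True},
                        }
                    ]
                }
            },
        },
    }
    if project is not None:
        spec["project"] = project  # optional, as in the sample template
    return {
        "apiVersion": "log.alibabacloud.com/v1alpha1",
        "kind": "AliyunLogConfig",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    print(json.dumps(stdout_log_config("test-stdout", "test-stdout"), indent=2))
```

Saving the output to a file such as `log-stdout.json` (a hypothetical file name) and running `kubectl create -f log-stdout.json` should be equivalent to applying the YAML template.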

Test log collection

After you create the AliyunLogConfig CRD, Simple Log Service automatically collects logs of pods that are created later. You can create the following application to test log collection.

Create an application.

The following sample YAML file creates a Deployment. After the container starts, it runs a command that continuously prints stdout logs and appends text logs to the /log/busy.log file.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: eci-sls-demo
  labels:
    app: sls
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sls
  template:
    metadata:
      name: sls-test
      labels:
        app: sls
        alibabacloud.com/eci: "true"
    spec:
      containers:
      - args:
        - -c
        - mkdir -p /log;while true; do echo hello world; date; echo hello sls >> /log/busy.log; sleep 1;done
        command:
        - /bin/sh
        image: registry-vpc.cn-beijing.aliyuncs.com/eci_open/busybox:1.30
        imagePullPolicy: Always
        name: busybox

Create a file named test-sls-crd.yaml and copy the preceding YAML file template into the file. Run the following command to create the application:

kubectl create -f test-sls-crd.yaml

Check the status of the application.

kubectl get pod

Expected output:

NAME                            READY   STATUS    RESTARTS   AGE
eci-sls-demo-7bf8849b9f-cgpbn   1/1     Running   0          2m14s

View logs.

Log on to the Simple Log Service console.

Click the name of the project.

Find the Logstore in which the logs of your containers are stored. Click the name of the Logstore to view the logs.

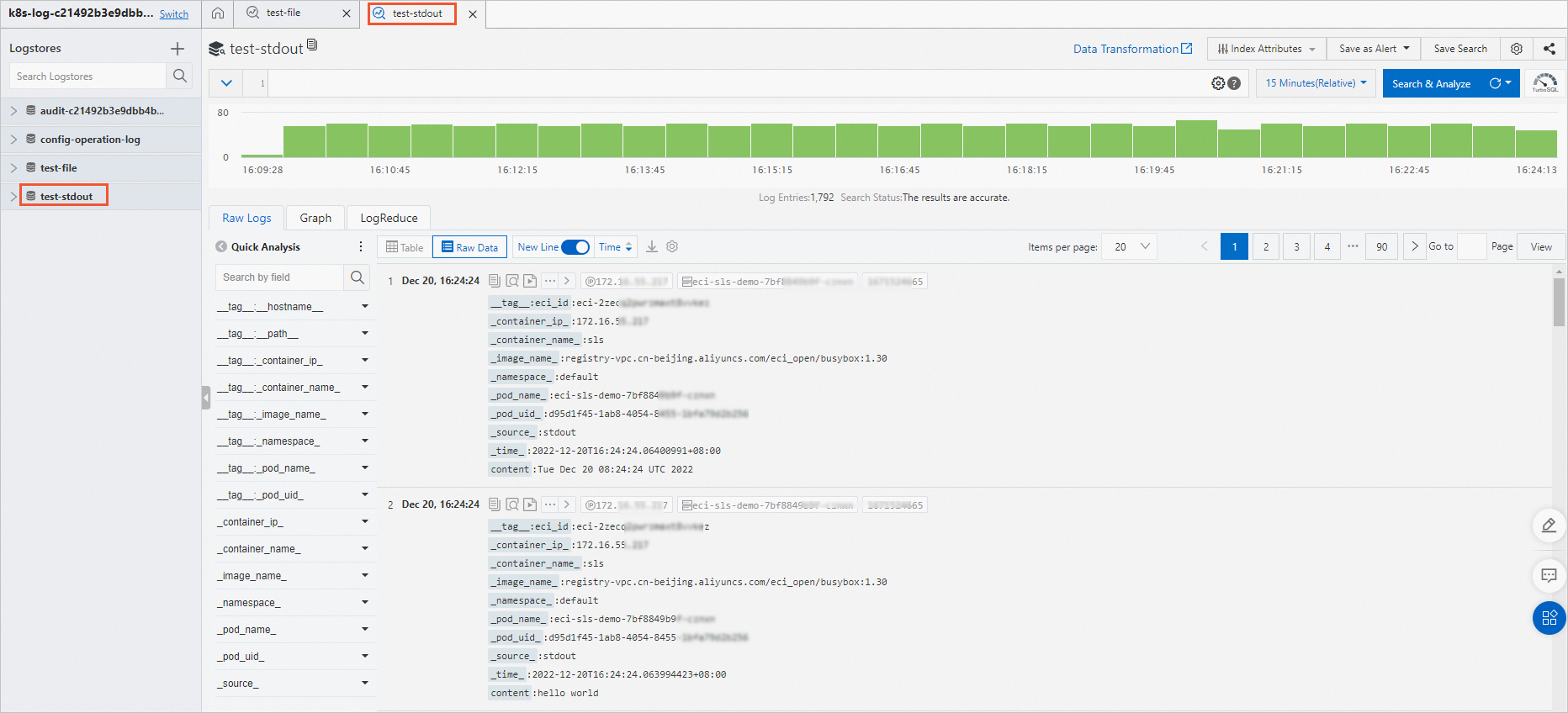

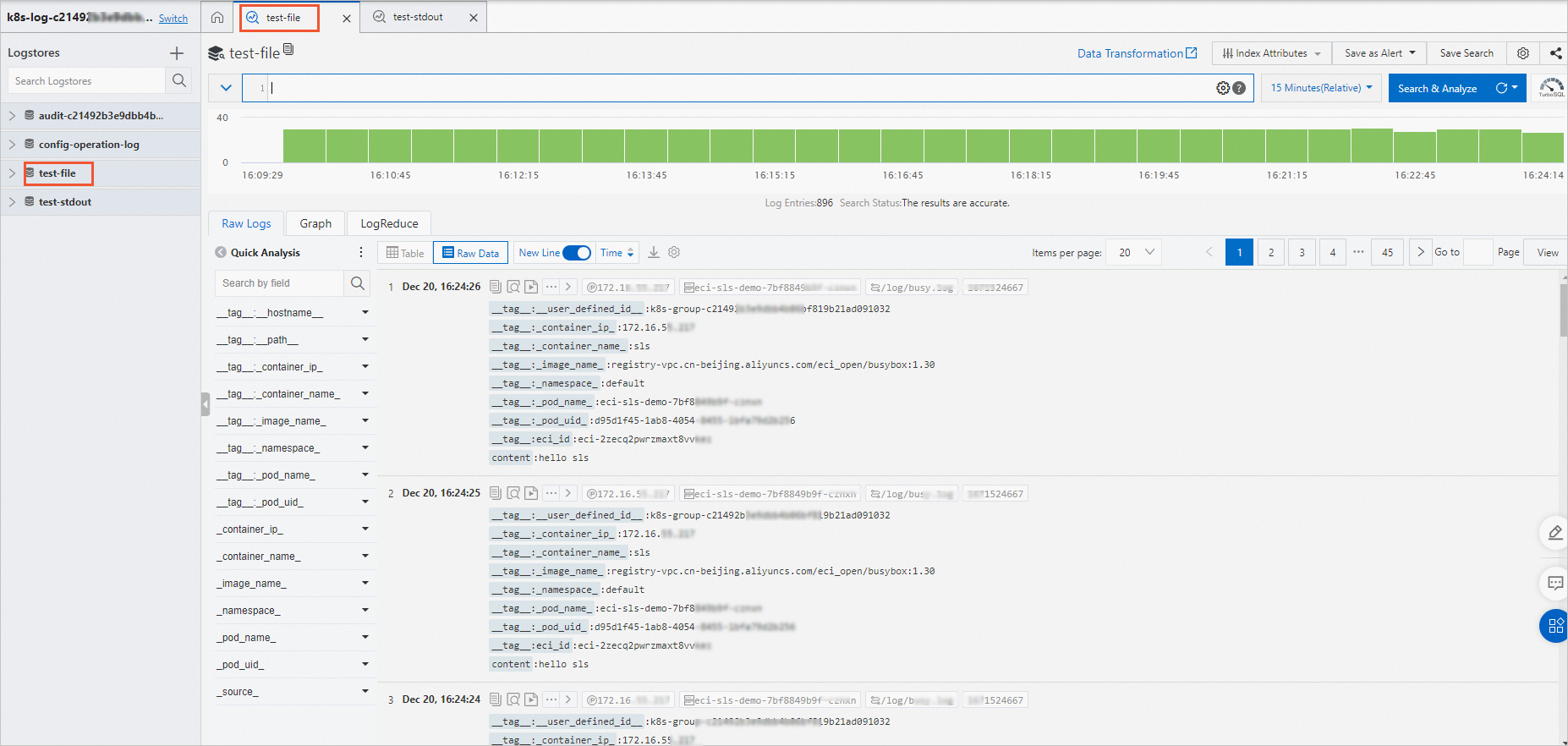

Collection of stdout logs

Collection of text logs
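The pod status check in this test can also be scripted, which may be convenient before you look for logs in the console. The following Python sketch parses the output of `kubectl get pod -o json` and lists the pods whose phase is not Running. The function names are hypothetical, and the `app=sls` selector is taken from the sample Deployment.

```python
import json
import subprocess


def pods_not_running(pod_list_json):
    """Return the names of pods whose phase is not Running, given the
    text printed by `kubectl get pod -o json`."""
    items = json.loads(pod_list_json).get("items", [])
    return [
        pod["metadata"]["name"]
        for pod in items
        if pod.get("status", {}).get("phase") != "Running"
    ]


def check_demo_pods(selector="app=sls"):
    """Query the cluster through kubectl and report pods that are not Running.
    Requires kubectl to be installed and configured for the cluster."""
    result = subprocess.run(
        ["kubectl", "get", "pod", "-l", selector, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return pods_not_running(result.stdout)
```

An empty list from `check_demo_pods()` suggests that all matching pods are in the Running phase, so logs should start to appear in the Logstores shortly afterward.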

Disable log collection

After you create an AliyunLogConfig CRD, the system automatically collects logs of all pods that meet the conditions. If you do not want to collect logs of specific pods, add the k8s.aliyun.com/eci-sls-enable: "false" annotation to the metadata section of the pods to disable log collection. This prevents the system from automatically creating Logtail for those pods and wasting resources.

Annotations must be added to the metadata in the configuration file of the pod. For example, when you create a Deployment, you must add annotations in the spec.template.metadata section.

Annotations that control Elastic Container Instance features take effect only if you add them when you create Elastic Container Instance-based pods. If you add or modify annotations when you update pods, these annotations do not take effect.

Example:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: eci-sls-demo2
  labels:
    app: sls
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sls
  template:
    metadata:
      name: sls-test
      labels:
        app: sls
        alibabacloud.com/eci: "true"
      annotations:
        k8s.aliyun.com/eci-sls-enable: "false"  # Disables log collection.
    spec:
      containers:
      - args:
        - -c
        - mkdir -p /log;while true; do echo hello world; date; echo hello sls >> /log/busy.log; sleep 1;done
        command:
        - /bin/sh
        image: registry.cn-shanghai.aliyuncs.com/eci_open/busybox:1.30
        imagePullPolicy: Always
        name: busybox
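If manifests are generated by a script, the annotation can be added in code before the Deployment is created. The following Python sketch works on a Deployment manifest that is already loaded as a dictionary and sets the annotation in the spec.template.metadata section, as described above. The function and constant names are hypothetical.

```python
import copy

SLS_ENABLE_ANNOTATION = "k8s.aliyun.com/eci-sls-enable"


def disable_log_collection(deployment):
    """Return a copy of a Deployment manifest whose pod template carries
    the annotation that disables log collection."""
    result = copy.deepcopy(deployment)  # leave the caller's manifest untouched
    template_meta = result["spec"]["template"].setdefault("metadata", {})
    annotations = template_meta.setdefault("annotations", {})
    # Annotation values are strings, so use "false" rather than a boolean.
    annotations[SLS_ENABLE_ANNOTATION] = "false"
    return result
```

Because the annotation takes effect only when pods are created, the helper is meant to be applied before the manifest is submitted, not to patch pods that are already running.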