AIACC-Inference can optimize models built with TensorFlow, and models from frameworks that can export to the Open Neural Network Exchange (ONNX) format, to significantly accelerate inference. This topic describes how to automatically install AIACC-Inference and run a demo.

Background information

Conda is an open source, cross-platform package and environment management system. Miniconda is a minimal installer for Conda that is used to deploy environments. When you create a GPU-accelerated instance, you can have AIACC-Inference installed automatically in a Conda environment. You can then use Miniconda to select that Conda environment and use AIACC-Inference to significantly accelerate inference.

ONNX is an open source format for storing trained models. Models trained in frameworks such as PyTorch and MXNet can be converted to the ONNX format, which makes it simpler to test models from different frameworks in the same environment.

Automatically install AIACC-Inference

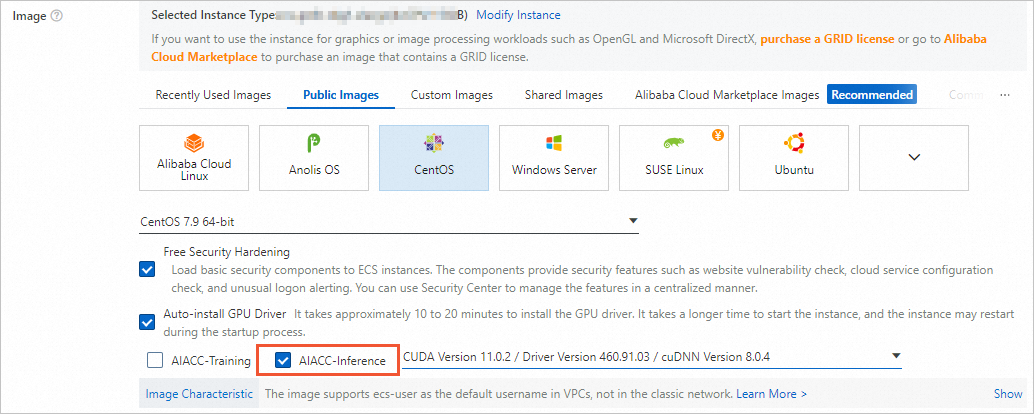

AIACC-Inference depends on the GPU driver, CUDA, and cuDNN. When you create a GPU-accelerated instance, select Auto-install GPU Driver and Auto-install AIACC-Inference. Then, select a CUDA version, a GPU driver version, and a cuDNN version. After the instance is created, you can quickly set up a Conda environment that contains AIACC-Inference for the selected CUDA version.
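After the instance is created, you may want to confirm that the three dependencies are present before you use AIACC-Inference. The following bash sketch is one way to check; `check_tool` is a hypothetical helper, and the script assumes that the CUDA toolkit is installed under /usr/local/cuda and that cuDNN 8 or later (which ships cudnn_version.h) is installed. Both paths are assumptions, not locations documented for AIACC-Inference.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print where a command lives, or report that it is missing.
check_tool() {
  local tool="$1"
  if command -v "$tool" >/dev/null 2>&1; then
    echo "found: $tool -> $(command -v "$tool")"
    return 0
  fi
  echo "missing: $tool"
  return 1
}

# nvidia-smi is installed together with the NVIDIA GPU driver.
if check_tool nvidia-smi; then
  nvidia-smi --query-gpu=name,driver_version --format=csv,noheader ||
    echo "nvidia-smi failed; check the GPU driver installation"
fi

# nvcc is installed with the CUDA toolkit; it may be absent from PATH even when CUDA is present.
if check_tool nvcc; then
  nvcc --version | grep release
fi

# Assumed header location for cuDNN 8 and later; adjust it if CUDA is installed elsewhere.
cudnn_header=/usr/local/cuda/include/cudnn_version.h
if [ -f "$cudnn_header" ]; then
  grep -E '#define CUDNN_(MAJOR|MINOR|PATCHLEVEL) ' "$cudnn_header"
else
  echo "cuDNN header not found at $cudnn_header"
fi
```

Compare the reported versions with the versions you selected when you created the instance.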

Test a demo

Connect to the instance. For more information, see Connect to a Linux instance by using a password.

Select a Conda environment.

Initialize Miniconda.

source /root/miniconda/etc/profile.d/conda.shView existing Conda environments.

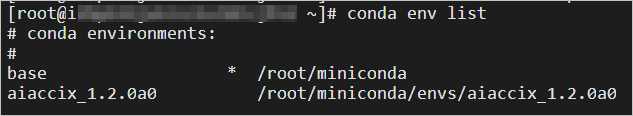

conda env listThe following figure shows an example of the command output.
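If you script this step, you may only need the environment names. The following bash sketch defines a hypothetical helper, `list_conda_env_names`, that filters `conda env list` output. It assumes the usual output layout: comment lines that start with `#`, then one line per environment with the name, an optional `*` that marks the active environment, and the path. Environments without a name (only a path) are skipped.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `conda env list` output on stdin and print only the names.
# Skips comment lines ("#...") and lines with fewer than two fields (unnamed environments).
list_conda_env_names() {
  awk '!/^#/ && NF >= 2 { print $1 }'
}
```

Usage: `conda env list | list_conda_env_names`.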

Select a Conda environment.

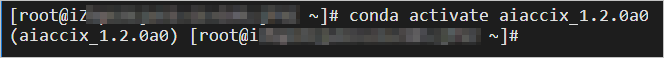

conda activate [environments_name]The following figure shows an example of the command output.
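The initialization and activation steps can be combined into one guarded function. The following bash sketch defines a hypothetical helper, `activate_conda_env`, that assumes Miniconda is installed in /root/miniconda (the path used above) unless you pass another root directory. Define it in your current shell, for example by pasting it or sourcing a file that contains it, because running it as a separate script would activate the environment only inside that script's process.

```bash
#!/usr/bin/env bash
# Hypothetical helper: initialize Miniconda and activate one environment,
# failing early with a message if the Conda init script is missing.
# Usage: activate_conda_env <environment_name> [conda_root]
activate_conda_env() {
  local env_name="$1"
  local conda_root="${2:-/root/miniconda}"
  local init_script="$conda_root/etc/profile.d/conda.sh"

  if [ -z "$env_name" ]; then
    echo "usage: activate_conda_env <environment_name> [conda_root]" >&2
    return 2
  fi
  if [ ! -f "$init_script" ]; then
    echo "Conda init script not found: $init_script" >&2
    return 1
  fi
  # Sourcing conda.sh defines the `conda` shell function in the current shell.
  # shellcheck source=/dev/null
  . "$init_script"
  conda activate "$env_name" || return 1
  # conda activate exports CONDA_DEFAULT_ENV for the active environment.
  echo "active environment: $CONDA_DEFAULT_ENV"
}
```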

Test a demo.

By default, the demo package aiacc_inference_demo.tgz is stored in the /root directory. This topic uses the ONNX demo as an example.

Decompress the demo test package.

tar -xvf aiacc_inference_demo.tgzGo to the directory of the ONNX demo.

cd /root/aiacc_inference_demo/aiacc_inference_onnx/resnet50v1Run the test script in the directory.

The following sample code provides an example on how you run the test script:

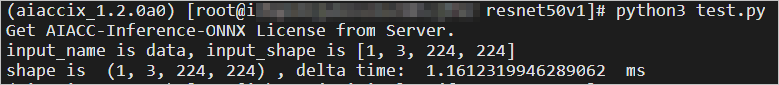

python3 test.pyThe test script executes inference tasks based on a ResNet50 model and randomly generates and classifies an image. This reduces the amount of time that is required for an inference task from 6.4 ms to less than 1.5 ms. The following figure shows an example of the inference result.
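The decompress, change-directory, and run steps can be wrapped in one function. The following bash sketch defines a hypothetical helper, `run_aiacc_demo`, whose defaults are the package and directory paths used in this topic. Run it after you activate the Conda environment, so that `python3` resolves to the environment's interpreter.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the demo package and run the ONNX ResNet50 test script.
# Usage: run_aiacc_demo [package_path] [destination_dir]
run_aiacc_demo() {
  local pkg="${1:-/root/aiacc_inference_demo.tgz}"
  local dest="${2:-/root}"
  local demo_dir="$dest/aiacc_inference_demo/aiacc_inference_onnx/resnet50v1"

  if [ ! -f "$pkg" ]; then
    echo "demo package not found: $pkg" >&2
    return 1
  fi
  # -z: gzip-compressed (.tgz) archive; -C: extract into the destination directory.
  tar -xzf "$pkg" -C "$dest" || return 1
  if [ ! -d "$demo_dir" ]; then
    echo "ONNX demo directory not found: $demo_dir" >&2
    return 1
  fi
  # Run in a subshell so that the caller's working directory does not change.
  (cd "$demo_dir" && python3 test.py)
}
```

Usage: `run_aiacc_demo /root/aiacc_inference_demo.tgz /root`.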

Delete Miniconda

If you no longer use AIACC-Inference, you can delete Miniconda. By default, Miniconda is installed for the root user. Before you delete Miniconda, delete the related environment variables as the root user. Then, delete the Miniconda folder.

Run the following command to switch to the root user.

NoteIf you logged on as the root user, skip this step.

su - rootDelete the environment variables and the command output.

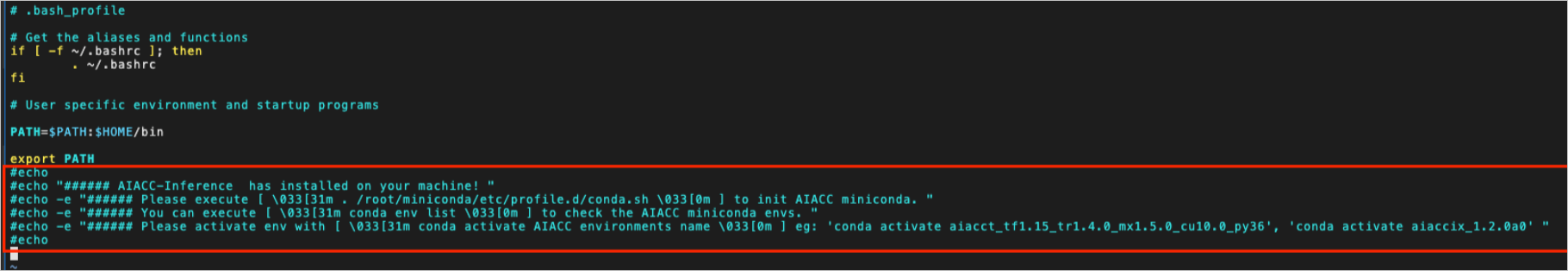

Modify the file /root/.bash_profile and comment out Miniconda-related messages.

The following figure shows an example of the modified environment variables and command output.
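The edit can also be scripted. The following bash sketch defines a hypothetical helper, `comment_out_miniconda`, which is not part of AIACC-Inference. It assumes that the Miniconda settings in /root/.bash_profile are either single lines that mention miniconda (for example, an `export PATH` line) or a block written by `conda init` between the `# >>> conda initialize >>>` and `# <<< conda initialize <<<` markers. The whole marked block is commented out so that its `if`/`else`/`fi` lines stay balanced, and a `.bak` backup is saved first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out Miniconda settings in a shell startup file.
# Lines inside a conda init block are all commented; outside the block, only
# uncommented lines that mention "miniconda" are. The original is kept as <file>.bak.
comment_out_miniconda() {
  local file="${1:-/root/.bash_profile}"
  if [ ! -f "$file" ]; then
    echo "file not found: $file" >&2
    return 1
  fi
  sed -i.bak \
    -e '/>>> conda initialize >>>/,/<<< conda initialize <<</ s/^/# /' \
    -e '/miniconda/ s/^[^#]/# &/' \
    "$file"
}
```

Usage: `comment_out_miniconda /root/.bash_profile`, then review the file before you continue.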

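Optional: If a Conda environment is active in the current shell, deactivate it and remove the Conda-related entries from the environment variables.

conda deactivate
unset -f conda
export PATH=$(echo "$PATH" | tr ':' '\n' | grep -v conda | paste -sd: -)
export LD_LIBRARY_PATH=$(echo "$LD_LIBRARY_PATH" | tr ':' '\n' | grep -v conda | paste -sd: -)

These commands remove every entry that contains "conda" and rejoin the remaining entries with paste, which does not leave a trailing colon. An empty PATH entry, such as the one that a trailing colon creates, makes the shell search the current directory.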
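To confirm that the cleanup worked, you can check the remaining entries. The following bash sketch defines a hypothetical helper, `has_conda_entry`, that prints the first entry of a colon-separated list (by default `$PATH`) that still contains "conda". You can also run `type conda`, which reports "not found" once the shell function is removed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first entry of a colon-separated list (default: $PATH)
# that contains "conda" and return 0; return 1 if no entry matches.
has_conda_entry() {
  local list="${1-$PATH}" entry
  local -a entries=()
  IFS=':' read -r -a entries <<< "$list"
  for entry in "${entries[@]}"; do
    case "$entry" in
      *conda*) echo "still present: $entry"; return 0 ;;
    esac
  done
  return 1
}
```

Usage: `has_conda_entry || echo "PATH is clean"` and `has_conda_entry "$LD_LIBRARY_PATH" || echo "LD_LIBRARY_PATH is clean"`.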

Run the following command to delete the Miniconda folder:

rm -rf /root/miniconda
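Because `rm -rf` deletes without confirmation, you may prefer a guarded version. The following bash sketch defines a hypothetical helper, `remove_miniconda`, that deletes the directory only if it contains an executable `bin/conda`, which guards against deleting a mistyped path.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete a Miniconda installation only if the directory
# looks like one (it contains an executable bin/conda).
remove_miniconda() {
  local dir="${1:-/root/miniconda}"
  if [ ! -x "$dir/bin/conda" ]; then
    echo "refusing to delete $dir: $dir/bin/conda not found" >&2
    return 1
  fi
  rm -rf -- "$dir"
}
```

Usage: `remove_miniconda /root/miniconda`.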