This topic provides answers to some frequently asked questions (FAQ) about Elastic High Performance Computing (E-HPC).

FAQ about clusters

Why am I unable to create clusters in some regions?

In some cases, you cannot create an E-HPC cluster in a region or zone where E-HPC is supported. A possible reason is that the resources required to create a cluster are not supported in the region or the resource inventory is insufficient. For example:

In the current region, File Storage NAS (NAS) file systems cannot be created or no NAS file system is available. In this case, you cannot mount a NAS file system on your cluster.

The region or zone does not have Elastic Compute Service (ECS) instance types that match the compute nodes in your E-HPC cluster, or the ECS instances are insufficient.

In this case, we recommend that you select another region to create an E-HPC cluster.

Can I manage nodes in the ECS console?

No, you cannot manage nodes in the ECS console.

Each node is an ECS instance. However, the E-HPC console provides additional deployment processes, including but not limited to the following processes:

E-HPC allows you to specify the number of cluster nodes for different instance types. In this case, E-HPC creates ECS instances in batches for various types of nodes.

After the ECS instance that corresponds to each node is created, E-HPC deploys the management system.

E-HPC pre-installs the selected software and dependencies on your ECS instances by using the management system.

E-HPC configures a job scheduler on a management node.

All the preceding processes depend on E-HPC. If you manage nodes in the ECS console, exceptions may occur in the clusters or nodes. In addition, the cluster resources may be unavailable. Therefore, you cannot manage nodes in the ECS console.

How do nodes communicate with ECS instances over the internal network?

The communication between a node and the ECS instance over the internal network depends on whether the node and the ECS instance reside in the same virtual private cloud (VPC).

If your node and the ECS instance that you purchase are in the same VPC, the node and the instance can communicate with each other in the VPC.

If your node and the ECS instance that you purchase are in different VPCs, you must connect the VPCs to allow the node and the ECS instance to communicate. You can use the Cloud Enterprise Network (CEN) or VPC to configure instance communication within or between private networks.

Why am I unable to log on to an E-HPC cluster by using SSH?

You may be unable to log on to an E-HPC cluster by using SSH due to various reasons. Troubleshoot the issue based on the actual scenario.

Check whether the username or password is valid.

Check whether the on-premises network of the client or carrier network is connected.

Check whether the security group rule of the logon node allows access to the required ports, such as the default port 22 of SSH.

Run the

iptables -nvL --line-numbercommand to check whether a firewall is enabled or firewall rules are configured for the logon node.

If the issue persists, you can use Virtual Network Computing (VNC) to connect to the cluster. For more information, see FAQ about connecting to ECS instances.

Why does it take so long to log on to an E-HPC cluster equipped with NIS by using SSH?

Problem description

It requires a long period of time to log on to a node by using SSH or switch between nodes. In some cases, the logon fails.

I cannot restart sshd and the error message

Failed to activate service 'org.freedesktop.systemd1': timed outis returned.

Cause

This is a bug of systemd that may occur when Network Information Service (NIS) is used.

Solution

Log on to the node as a root user.

Check the content of the /etc/nsswitch.conf file:

cat /etc/nsswitch.confIf [NOTFOUND=return] is not displayed in the passwd, shadow, and group parameters, perform the following steps. Sample code:

passwd: files sss nis shadow: files sss nis group: files sss nisOptional. Upgrade glibc:

yum update glibcUpdate the nsswitch configuration file.

Open the nsswitch.conf file:

vim /etc/nsswitch.confModify the following content in the nsswitch.conf file and save the file:

passwd: files sss nis [NOTFOUND=return] shadow: files sss nis [NOTFOUND=return] group: files sss nis [NOTFOUND=return]

Can I stop a management node during the auto scaling of the cluster?

The auto scaling service relies on the scheduler service and the domain account service to run as expected. Therefore, the management node must remain in the running state during the auto scaling of the cluster. If the management node is stopped after you enable auto scaling, exceptions may occur.

If you need to shut down or restart the management node, perform the operation after idle nodes are released and no jobs are running on the compute nodes. In this case, we recommend that you disable auto scaling before you shut down or restart the management node, and enable auto scaling after the management node is restarted.

Why am I unable to add nodes for an E-HPC cluster that uses Slurm when I configure the auto scaling policy?

By default, an E-HPC cluster that uses Slurm has eight dummy nodes. If the current cluster has five compute nodes, a job can use up to 13 nodes. If you need to use more nodes to run the job, you must add compute nodes or increase the number of dummy nodes. To increase the number of dummy nodes, perform the following steps:

Log on to the cluster as a root user. For more information, see Log on to an E-HPC cluster.

Add the dummyNodexxx file to the

/opt/slurm/<slurm_version>/nodesdirectory.For example, to perform a job that requires 18 nodes, you must add 10 dummy nodes. You can use dummyNode8 to dummyNode17 to specify the dummy nodes that you want to add.

Note<slurm_version> is the version of Slurm in your cluster.

In the /

opt/slurm/<slurm_version>/etc/slurm.conffile, findPartitionNameand specify information about the dummy nodes.The following sample code provides an example on how to specify information about the dummy nodes:

PartitionName=comp Nodes=dummynode0,dummynode1,dummynode2,dummynode3,dummynode4,dummynode5,dummynode6,dummynode7,dummynode8,dummynode9,dummynode10,dummynode11,dummynode12,dummynode13,dummynode14,dummynode15,dummynode16,dummynode17,compute000 Default=YES MaxTime=INFINITE State=UP

How do I select a Slurm version when I create a cluster that uses Slurm?

When you create an E-HPC cluster, you can select a scheduler type on the 2. Software Configurations page. E-HPC supports multiple versions of the Slurm scheduler. If your business does not require a specific scheduler version, we recommend that you select the latest Slurm version to create a cluster. The latest version of the Slurm scheduler supported by E-HPC is slurm22.

How do I perform account verification to purchase Alibaba Cloud services in the Chinese mainland?

If you want to purchase and use Alibaba Cloud services in the Chinese mainland, you must complete account verification. Then, you can use existing resources, purchase resources, and renew resources. If you select a region in the Chinese mainland when you purchase Alibaba Cloud services, the system checks whether you completed account verification. If you did not complete account verification, an error message appears on the buy page. For more information, see FAQ about account verification of Alibaba Cloud accounts.

FAQ about images

What types of images are supported?

E-HPC supports the following types of images:

Public images: images provided by Alibaba Cloud.

Custom images: images created from ECS instances or snapshots, or images imported from your computer.

Shared images: images shared by other Alibaba Cloud accounts.

Alibaba Cloud Marketplace images: images provided by independent software vendors (ISVs) that are licensed by Alibaba Cloud Marketplace.

Community images: images that are released on the image platform of Alibaba Cloud Community.

The image types that you can select vary based on the specified region, specified instance type for the node, and whether the current Alibaba Cloud account has available image resources. All available image types are displayed on the console.

Why am I unable to select a custom image?

When you create or scale out an E-HPC cluster, or configure an auto scaling policy, you may be unable to select a custom image due to the following reasons:

Your Alibaba Cloud account does not have a custom image in the current region. For more information, see Overview.

The operating system of the custom image is not supported by E-HPC.

The instance type of the node does not support the custom image.

When you configure the auto scaling policy, you must specify the same image in the global settings and the queue settings.

Why am I unable to scale out or create an E-HPC cluster by using a custom image?

When you scale out or create an E-HPC cluster by using a custom image, the cluster may fail to be scaled out or created due to the following limits:

You cannot modify the yum source configurations of the operating system in the custom image.

The

/homedirectory or/optdirectory cannot be the mount directory of the custom image or a symbolic link.If the

/etc/fstabfile of the custom image contains the mounting information about a file system, such as nfs, make sure that the cluster can access the file system or reside in the same VPC as the file system. Otherwise, you must delete the mounting information from the/etc/fstabfile before you scale out or create the cluster.You must keep the group whose account group ID is 1000 in the custom image.

The size of the system disk must be greater than or equal to that of the custom image.

Can I import custom images?

You can import only CentOS images to E-HPC. For information about how to import an image, see Import an image.

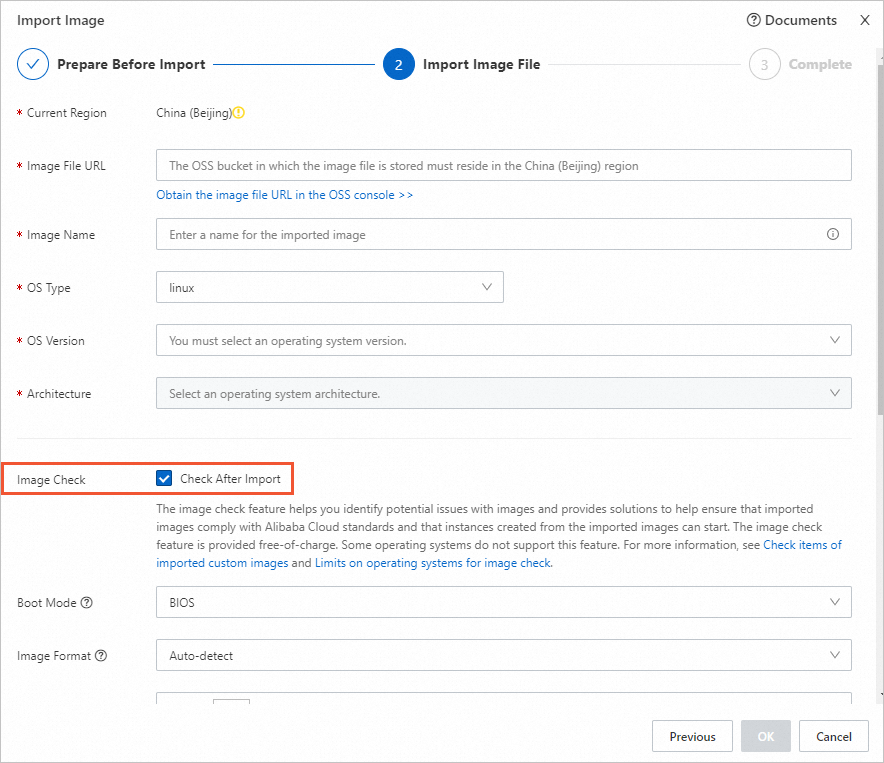

When you import an image, make sure that Check After Import is selected. Otherwise, the image may not be recognized in the E-HPC console.

FAQ about software

How do I manually install business software in an E-HPC cluster?

E-HPC clusters use File Storage NAS to share data between compute nodes. Therefore, you can manually install business software by using one of the following methods:

Install business software in the

/optdirectory. In this case, all cluster users can access and use the business software.Install business software in the home directory of a cluster user. Generally, only the cluster user can access and use the business software.

When you install some software, you must also install drivers or specific runtime environments, such as GPU drivers and YUM packages, on each compute node. After you install software on a compute node, you can use the custom image that is created based on the compute node to add more compute nodes. This way, the software can be automatically installed on all compute nodes.

FAQ about storage

How do I configure a remote mount directory for a NAS file system?

When you create an E-HPC cluster, you can specify a mount target and remote mount directory for a NAS file system. For example, your cluster has the following settings:

ClusterId=ehpc-mrZSoWf**** # The ID of the cluster.

VolumeMountpoint=045324****-m****. cn-hangzhou.nas.aliyuncs.com# The mount target of the NAS file system.

RemotePath=/ # # The remote directory of the NAS file system. To mount the NAS file system on the nodes when you create an E-HPC cluster, perform the following steps.

You can specify a remote mount directory based on your business requirements. Before you mount a NAS file system, you must create a mount target and a mount directory.

Create two subdirectories in the root directory:

/ehpc-mrZSoWf****/opt /ehpc-mrZSoWf****/homeWhen or after you create an E-HPC cluster, configure the remote directory based on your business requirements.

The following sample code provides an example on how to mount different directories. For more information, see Create a cluster by using the wizard and Manage storage resources.

/ # Mount the NAS file system on the /ehpcdata directory. /ehpc-mrZSoWf****/home # Mount the NAS file system on the /home directory. /ehpc-mrZSoWf****/opt # # Mount the NAS file system on the /opt directory.

FAQ about quota

What is the maximum number of clusters that I can create?

You can create up to three clusters in a region. To increase the quota, submit a ticket.

What is the maximum number of nodes that I can create in an E-HPC cluster?

You can create up to 500 nodes in an E-HPC cluster or add 500 compute nodes at the same time. To increase the quota, submit a ticket.

FAQ about permissions

What is role-based authorization?

Resource Access Management (RAM) provides a service-linked role named AliyunServiceRoleForEHPC for E-HPC. This role is used to authorize E-HPC to access associated cloud resources. E-HPC can assume the AliyunServiceRoleForEHPC role to access ECS, VPC, and NAS.

If the AliyunServiceRoleForEHPC role is not attached to your account, you must first complete role authorization. For more information, see Manage a service-linked role.

Why am I unable to log on to the console to view cluster information as a RAM user?

If the RAM user is not granted the AliyunEHPCReadOnlyAccess permission, the Switch to RAM for authorization message appears. You must grant the AliyunEHPCReadOnlyAccess permission to the RAM user. Then, you can view cluster information as a RAM user.

To create an E-HPC cluster, user, or job, you must grant the AliyunEHPCFullAccess and AliyunNASFullAccess permissions to the RAM user. For more information, see Grant permissions to a RAM user.