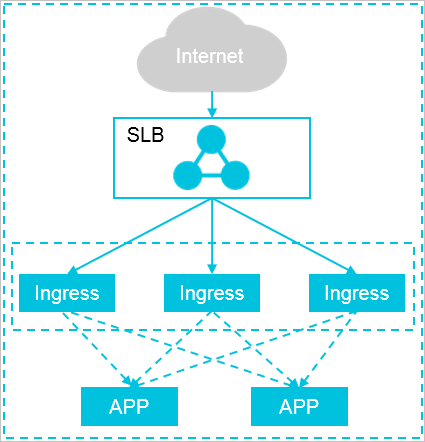

An Ingress is a set of rules that authorizes external access to Services within a Kubernetes cluster and provides Layer 7 load balancing. You can configure Ingresses to specify the URLs, Server Load Balancer (SLB) instances, Secure Sockets Layer (SSL) connections, and name-based virtual hosts that allow external access. Because Ingresses manage external access to Services within a cluster, their reliability is critical. This topic describes how to deploy Ingresses in a high-reliability architecture for a Container Service for Kubernetes (ACK) cluster.

Prerequisites

- An ACK cluster is created. For more information, see Create a managed Kubernetes cluster.

- You are connected to the master node of the ACK cluster through SSH. For more information, see Use SSH to connect to an ACK cluster.

High-reliability deployment architecture

The access layer in the preceding figure consists of multiple dedicated Ingress nodes. You can scale the number of Ingress nodes based on the traffic volume to the backend applications. If your cluster is small, you can deploy Ingresses and applications on the same nodes. In this case, we recommend that you isolate resources and restrict resource consumption.

Query the pods of the NGINX Ingress controller and the public IP address of the SLB instance

After an ACK cluster is created, the NGINX Ingress controller with two pods is automatically deployed. An Internet-facing SLB instance is also created as the frontend load balancing Service.
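The commands for this query can be sketched as two small shell functions. The pod query is the same one used later in this topic. The Service name `nginx-ingress-lb` is an assumption and does not come from this topic; run `kubectl -n kube-system get svc` to confirm the name in your cluster.

```shell
# Sketch: inspect the default Ingress access layer of an ACK cluster.

# ASSUMPTION: the frontend LoadBalancer Service is named nginx-ingress-lb.
# Override INGRESS_SVC if your cluster uses a different name.
INGRESS_SVC="${INGRESS_SVC:-nginx-ingress-lb}"

# List the pods that are provisioned for the NGINX Ingress controller.
list_controller_pods() {
  kubectl -n kube-system get pod | grep nginx-ingress-controller
}

# Print the public IP address of the SLB instance, read from the
# load balancer status of the frontend Service.
slb_public_ip() {
  kubectl -n kube-system get svc "$INGRESS_SVC" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

After you load the functions into your shell, run `list_controller_pods` to list the controller pods and `slb_public_ip` to print the address of the SLB instance.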

Deploy an Ingress access layer with high reliability

As the cluster grows, you must expand the access layer to keep it performant and highly available. You can use either of the following methods to expand the access layer:

- Method 1: Increase the number of pods

You can increase the number of pods that are provisioned for the Deployment of the NGINX Ingress controller to expand the access layer.

- Run the following command to scale the number of pods to 3:

      kubectl -n kube-system scale --replicas=3 deployment/nginx-ingress-controller

  Expected output:

      deployment.extensions/nginx-ingress-controller scaled

- After you scale the number of pods, run the following command to query the pods that are provisioned for the NGINX Ingress controller:

      kubectl -n kube-system get pod | grep nginx-ingress-controller

  Expected output:

      nginx-ingress-controller-8648ddc696-2bshk   1/1   Running   0   3h
      nginx-ingress-controller-8648ddc696-jvbs9   1/1   Running   0   3h
      nginx-ingress-controller-8648ddc696-xqmfn   1/1   Running   0   33s
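The scale-and-verify steps of Method 1 could be wrapped in a script along the following lines. This is a minimal sketch, not part of the original procedure: `kubectl rollout status` is used here to wait for the new pods, and the 120-second timeout is an arbitrary assumption that you should adjust for your cluster.

```shell
# Sketch: scale the NGINX Ingress controller and confirm the result.

# Scale the controller Deployment to the requested number of pods,
# then wait until the rollout reports that all pods are ready.
# ASSUMPTION: 120s is an illustrative timeout, not a recommended value.
scale_controller() {
  local replicas="$1"
  kubectl -n kube-system scale --replicas="$replicas" \
    deployment/nginx-ingress-controller &&
    kubectl -n kube-system rollout status \
      deployment/nginx-ingress-controller --timeout=120s
}

# Count the controller pods whose STATUS column is Running.
count_running_controller_pods() {
  kubectl -n kube-system get pod --no-headers |
    awk '$1 ~ /^nginx-ingress-controller/ && $3 == "Running" { n++ }
         END { print n + 0 }'
}
```

For example, `scale_controller 3` followed by `count_running_controller_pods` should report three pods once the new pod has started.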


- Method 2: Deploy Ingresses on nodes with higher specifications

You can add a label to nodes that have higher specifications and then add a nodeSelector to the Deployment of the NGINX Ingress controller so that its pods are scheduled to these nodes.

- To query information about the nodes in the cluster, run the following command:

      kubectl get node

  Expected output:

      NAME                                 STATUS   ROLES    AGE   VERSION
      cn-hangzhou.i-bp11bcmsna8d4bp****    Ready    master   21d   v1.11.5
      cn-hangzhou.i-bp12h6biv9bg24l****    Ready    <none>   21d   v1.11.5
      cn-hangzhou.i-bp12h6biv9bg24l****    Ready    <none>   21d   v1.11.5
      cn-hangzhou.i-bp12h6biv9bg24l****    Ready    <none>   21d   v1.11.5
      cn-hangzhou.i-bp181pofzyyksie****    Ready    master   21d   v1.11.5
      cn-hangzhou.i-bp1cbsg6rf3580z****    Ready    master   21d   v1.11.5

- Run the following commands to add the node-role.kubernetes.io/ingress="true" label to the cn-hangzhou.i-bp12h6biv9bg24lmdc2o and cn-hangzhou.i-bp12h6biv9bg24lmdc2p nodes.

      kubectl label nodes cn-hangzhou.i-bp12h6biv9bg24lmdc2o node-role.kubernetes.io/ingress="true"

  Expected output:

      node/cn-hangzhou.i-bp12h6biv9bg24lmdc2o labeled

      kubectl label nodes cn-hangzhou.i-bp12h6biv9bg24lmdc2p node-role.kubernetes.io/ingress="true"

  Expected output:

      node/cn-hangzhou.i-bp12h6biv9bg24lmdc2p labeled

  Note

  - The number of labeled nodes must be no less than the number of pods that are provisioned for the NGINX Ingress controller. This ensures that each pod runs on an exclusive node.

  - If the value of ROLES is <none> in the output, the node is a worker node.

  - We recommend that you add labels to and deploy Ingresses on worker nodes.

- Run the following command to update the related Deployment by adding the nodeSelector field:

      kubectl -n kube-system patch deployment nginx-ingress-controller -p '{"spec": {"template": {"spec": {"nodeSelector": {"node-role.kubernetes.io/ingress": "true"}}}}}'

  Expected output:

      deployment.extensions/nginx-ingress-controller patched

- Run the following command to query the pods that are provisioned for the NGINX Ingress controller. The result indicates that pods are scheduled to the nodes that have the node-role.kubernetes.io/ingress="true" label.

      kubectl -n kube-system get pod -o wide | grep nginx-ingress-controller

  Expected output:

      nginx-ingress-controller-8648ddc696-2bshk   1/1   Running   0   3h   172.16.2.15    cn-hangzhou.i-bp12h6biv9bg24lmdc2p   <none>
      nginx-ingress-controller-8648ddc696-jvbs9   1/1   Running   0   3h   172.16.2.145   cn-hangzhou.i-bp12h6biv9bg24lmdc2o   <none>
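The two checks that Method 2 relies on (enough labeled nodes for the pods, and every pod placed on a labeled node) can be scripted. The following is a hedged sketch rather than part of the original procedure. The function names are hypothetical, and the `custom-columns` output format is used instead of `-o wide` so that the node name is always in the second column.

```shell
# Sketch: verify the node labels and pod placement for Method 2.

INGRESS_LABEL="node-role.kubernetes.io/ingress=true"

# Number of nodes that carry the Ingress label.
count_labeled_nodes() {
  kubectl get node -l "$INGRESS_LABEL" --no-headers | wc -l | tr -d ' '
}

# Replica count declared on the controller Deployment.
controller_replicas() {
  kubectl -n kube-system get deployment nginx-ingress-controller \
    -o jsonpath='{.spec.replicas}'
}

# Succeed only if there are at least as many labeled nodes as pods.
check_node_capacity() {
  local nodes replicas
  nodes="$(count_labeled_nodes)"
  replicas="$(controller_replicas)"
  if [ "$nodes" -ge "$replicas" ]; then
    echo "ok: $nodes labeled nodes for $replicas pods"
  else
    echo "insufficient: $nodes labeled nodes for $replicas pods" >&2
    return 1
  fi
}

# Print each controller pod that runs on a node without the label.
# No output means that every pod is placed on a labeled node.
misplaced_controller_pods() {
  local labeled
  # Space-separated list of labeled node names (the node/ prefix removed).
  labeled="$(kubectl get node -l "$INGRESS_LABEL" -o name |
    sed 's|^node/||' | tr '\n' ' ')"
  kubectl -n kube-system get pod --no-headers \
    -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName |
    awk -v nodes="$labeled" '
      BEGIN { n = split(nodes, a, " "); for (i = 1; i <= n; i++) ok[a[i]] = 1 }
      $1 ~ /^nginx-ingress-controller/ && !($2 in ok) { print $1, $2 }'
}
```

Running `check_node_capacity` before you patch the Deployment, and `misplaced_controller_pods` afterward, gives a quick pass or fail signal without reading the node and pod tables by eye.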
